Abstract

Background

Pediatric asthma affects 7.1 million American children, incurring an annual total direct healthcare cost of around 9.3 billion dollars. Asthma control in children is suboptimal, leading to frequent asthma exacerbations, excess costs, and decreased quality of life. Successful prediction of risk for asthma control deterioration at the individual patient level would enhance self-management and enable early interventions to reduce asthma exacerbations. We developed and tested the first set of models for predicting a child’s asthma control deterioration one week prior to occurrence.

Methods

We previously reported validation of the Asthma Symptom Tracker, a weekly asthma self-monitoring tool. Over a period of two years, we used this tool to collect a total of 2912 weekly assessments of asthma control on 210 children. We combined the asthma control data set with patient attributes and environmental variables to develop machine learning models to predict a child’s asthma control deterioration one week ahead.

Results

Our best model achieved an accuracy of 71.8 %, a sensitivity of 73.8 %, a specificity of 71.4 %, and an area under the receiver operating characteristic curve of 0.757. We also identified potential improvements to our models to stimulate future research on this topic.

Conclusions

Our best model successfully predicted a child’s asthma control level one week ahead. With adequate accuracy, the model could be integrated into electronic asthma self-monitoring systems to provide real-time decision support and personalized early warnings of potential asthma control deteriorations.

Background

Asthma is the most common pediatric chronic disease [1, 2] and the most frequent reason for preventable pediatric hospitalization [3]. Asthma affects 7.1 million American children [4, 5], accounts for one third of pediatric emergency department (ED) visits [6], and incurs an annual total direct healthcare cost around 9.3 billion dollars [1]. In 2009, 640,000 ED visits, 157,000 hospitalizations, and 185 deaths [4] were due to pediatric asthma. Poor asthma control in children is associated with decreased quality of life [7], increased school absenteeism with work loss for parents [8], and a high hospital readmission rate [9]. Despite its impact, asthma remains a poorly controlled disease [10]. Effective interventions to improve and maintain asthma control are needed.

A patient’s asthma control fluctuates frequently over time due to multiple factors [11–13]. An asthma exacerbation is often preceded by a critical period of decreased asthma control [14]. The critical period often goes unrecognized by patients, caregivers, and physicians [10, 15–18], resulting in missed opportunities for preventive interventions such as education and medication prescription and adjustment [15, 17].

Using predictive models can facilitate recognition of impending loss of asthma control before significant symptoms emerge. While many predictive models for diagnosing and treating asthma exist [19], little has been done for predicting asthma control deterioration. Existing models focus on predicting asthma exacerbations, which often represent a late manifestation of persisting loss of asthma control, and have low sensitivities and low positive predictive values [20–24].

In the past, our group developed and validated an asthma control monitoring tool, the Asthma Symptom Tracker (AST) [25]. This self-monitoring tool was designed to assess a child’s asthma control level on a weekly basis. The objective of this study was to develop a model for predicting asthma control deterioration one week ahead, by using scores from previously completed AST assessments [25] in conjunction with patient attributes and environmental variables.

Methods

Study setting

The data collected in our AST validation study [25] included demographics and clinical status for patients living primarily in Utah as well as several patients living in Idaho, Nevada, and Wyoming. The patients were recruited during hospitalization for asthma exacerbation. Written informed consent was obtained from each study participant before data were collected on the participant. Environmental exposure data matched by time and location were obtained from multiple regional monitoring stations (federal data sources) [26, 27]. The Germ Watch program [28] of Intermountain Healthcare (Salt Lake City, Utah) provided data for time-matched prevalent viral activity in the Intermountain Region. Intermountain Allergy & Asthma (Salt Lake City, Utah) [29] provided time-matched pollen count and mold level data. Analysis took place at the School of Medicine, University of Utah. The study was reviewed and approved by the Institutional Review Boards of the University of Utah and Intermountain Healthcare.

Data collection

As shown in Fig. 1, the AST score is derived from responses to the five questions of a modified Asthma Control Test [18, 25] adapted for weekly assessment of asthma control status. The AST score is the total score of the responses to the five questions, ranges from 5 to 25, and reflects the patient’s asthma control level over the past week. Each patient’s AST assessments were collected for six months. For the current study, a patient was excluded if he/she did not have at least two consecutive AST assessments one week apart. The first AST assessment was completed in the hospital, was almost always categorized as “uncontrolled asthma” reflecting the patient’s status in the pre-hospitalization week, and was excluded from analysis.
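The scoring and pairing rules described above can be illustrated with a minimal sketch. It assumes each of the five responses is scored from 1 to 5 (inferred from the stated 5 to 25 range) and treats "one week apart" as exactly seven days; the function names and the sample history are hypothetical and are not taken from the study's code.

```python
from datetime import date, timedelta

def ast_score(responses):
    """Total AST score: the sum of the five responses (each assumed 1-5)."""
    if len(responses) != 5 or any(r not in range(1, 6) for r in responses):
        raise ValueError("expected five responses, each between 1 and 5")
    return sum(responses)

def week_ahead_pairs(assessments, gap_days=7):
    """Pair each assessment with the score recorded exactly gap_days later.

    assessments: list of (date, responses) for one patient, with the
    in-hospital baseline assessment already removed.
    """
    ordered = sorted(assessments, key=lambda a: a[0])
    pairs = []
    for (d0, r0), (d1, r1) in zip(ordered, ordered[1:]):
        if d1 - d0 == timedelta(days=gap_days):
            pairs.append((r0, ast_score(r1)))
    return pairs

# Hypothetical history for one patient.
history = [
    (date(2012, 1, 2), [4, 4, 5, 4, 4]),
    (date(2012, 1, 9), [3, 4, 3, 4, 3]),
    (date(2012, 1, 23), [5, 5, 5, 5, 5]),  # two-week gap: not paired with Jan 9
]
pairs = week_ahead_pairs(history)
print(pairs)
```

A patient whose history yields no pair would be excluded under the rule stated above.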

Patient demographics included age, race, sex, home address, and health insurance provider. Clinical status included chronic asthma severity level, secondhand smoke exposure, comorbidities, and healthcare use including ED visits and hospital admissions within the six months prior to the index hospital admission. Demographics and clinical status were obtained through extraction from Intermountain Healthcare’s Enterprise Data Warehouse [30] and manual chart review. Median household income and percentage of the population with a college degree based on zip code and health insurance category were obtained as surrogates for socioeconomic status. Low socioeconomic status and Medicaid insurance are known to be associated with poor asthma control in children [31, 32]. Patient home address was used in computing the patient’s environmental exposure via location matching.

Environmental variable data included particulate matter with a diameter of 2.5 micrometers or less (PM2.5), PM10, carbon monoxide, nitrogen dioxide, sulfur dioxide, ozone, temperature, relative humidity, wind speed, precipitation, dew point, tree pollen count, grass pollen count, weed pollen count, mold level, and activities of each of the following viruses: adenovirus, enterovirus, influenza A virus, influenza B virus, human metapneumovirus, parainfluenza virus types 1, 2, and 3, respiratory syncytial virus, and rhinovirus.
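One plausible way to match regional measurements to a patient by time and location is sketched below. The nearest-station rule, the averaging over the seven days before an assessment, and all station names, coordinates, and readings are assumptions made for illustration; the study's actual matching procedure is not detailed here.

```python
import math
from datetime import date, timedelta

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometers between two (lat, lon) points."""
    r = 6371.0  # mean Earth radius in km
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))

def nearest_station(home, stations):
    """Return the id of the station closest to the home (lat, lon)."""
    return min(stations, key=lambda sid: haversine_km(*home, *stations[sid]))

def weekly_mean(readings, station_id, assessment_day):
    """Average a station's daily readings over the seven days before assessment_day."""
    window = [assessment_day - timedelta(days=d) for d in range(1, 8)]
    values = [readings[(station_id, day)] for day in window
              if (station_id, day) in readings]
    return sum(values) / len(values) if values else None

# Hypothetical stations and PM2.5 readings, for illustration only.
stations = {"north": (41.22, -111.97), "central": (40.76, -111.89), "south": (40.23, -111.66)}
home = (40.70, -111.85)
station = nearest_station(home, stations)
readings = {("central", date(2012, 1, 9) - timedelta(days=d)): 10.0 + d
            for d in range(1, 8)}
pm25 = weekly_mean(readings, station, date(2012, 1, 9))
print(station, pm25)
```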

Data analysis

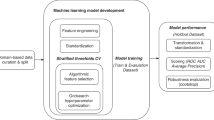

Data preparation

Our primary goal was to predict asthma control deterioration one week ahead. The dependent variable was the patient’s AST score one week following the prediction date, dichotomized to “controlled asthma” or “uncontrolled asthma” based on a cutoff score of >19 = “controlled asthma” [18, 33]. Uncontrolled asthma occurred much less frequently than controlled asthma, and such class imbalance can degrade a predictive model’s performance. To address this issue for the imbalanced dependent variable ([34], Chapter 16), we applied the standard Synthetic Minority Over-sampling TEchnique (SMOTE) [35] to the training set used for estimating a model’s parameters, but not to the test set used for evaluating the model’s performance. Basically, SMOTE oversamples the rarer class “uncontrolled asthma” to make the numbers of instances more balanced for the two classes “controlled asthma” and “uncontrolled asthma.” To remove distributional skewness ([34], Section 3.2), the standard Box-Cox transformation [36] was used to transform each numerical independent variable, which was then normalized by first subtracting its mean and then dividing by its standard deviation ([34], Section 3.2). The Box-Cox step makes the distribution of each variable closer to a normal distribution.
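The preparation steps above can be sketched in a few lines of plain Python. This is a simplified illustration rather than the Weka pipeline used in the study: the Box-Cox parameter is passed in instead of being estimated, the SMOTE routine is a bare-bones version of the interpolation idea in [35], and all names and sample rows are hypothetical.

```python
import math
import random

def label(ast_total):
    """Dichotomize: a score above 19 is controlled, otherwise uncontrolled."""
    return "controlled" if ast_total > 19 else "uncontrolled"

def box_cox(x, lam):
    """Box-Cox transform of a positive value for a given lambda (assumed known)."""
    return math.log(x) if lam == 0 else (x ** lam - 1) / lam

def standardize(values):
    """Subtract the mean, then divide by the sample standard deviation."""
    n = len(values)
    mean = sum(values) / n
    sd = math.sqrt(sum((v - mean) ** 2 for v in values) / (n - 1))
    return [(v - mean) / sd for v in values]

def smote(minority, n_new, k=5, seed=0):
    """Create n_new synthetic minority rows by interpolating toward near neighbors."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        others = [m for m in minority if m is not base]
        neighbors = sorted(others, key=lambda m: math.dist(base, m))[:k]
        mate = rng.choice(neighbors)
        gap = rng.random()  # position along the segment from base to mate
        synthetic.append([b + gap * (m - b) for b, m in zip(base, mate)])
    return synthetic

# Hypothetical "uncontrolled asthma" training rows (five component scores each).
uncontrolled = [[2, 3, 3, 2, 3], [3, 3, 2, 2, 2], [1, 2, 3, 3, 2]]
extra = smote(uncontrolled, n_new=4, k=2)
print(label(19), label(20), len(extra))
```

In this sketch the synthetic rows would be appended to the training partition only; the test partition is left untouched, as described above.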

Evaluation was performed using two approaches. The first approach used standard, stratified 10-fold cross validation ([37], Section 5.3). The data were split into 10 partitions of roughly the same size. In each partition, the proportion of uncontrolled asthma was about the same as that in the full data set. Ten iterations were completed rotating through all partitions, using one for testing and the other nine for training. The 10 performance estimates were averaged to yield an overall performance estimate of the model. In the second approach, the data for each patient’s last AST assessment was used for testing, with the remaining data used as the training set. The performance estimate reflected a model’s performance in making predictions when a patient was in his/her typical clinical asthma status.
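Both evaluation approaches amount to different ways of splitting the data, which the following sketch illustrates. It is a simplified stand-in for Weka's built-in procedures; the row layout (patient id, week number, features, label) and the function names are assumptions.

```python
import random
from collections import defaultdict

def stratified_folds(labels, n_folds=10, seed=0):
    """Assign instance indexes to folds so that class proportions stay similar."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)
    folds = [[] for _ in range(n_folds)]
    offset = 0
    for y in sorted(by_class):
        idxs = by_class[y]
        rng.shuffle(idxs)
        # Deal the shuffled indexes of this class round-robin across folds.
        for i, idx in enumerate(idxs):
            folds[(offset + i) % n_folds].append(idx)
        offset += len(idxs)
    return folds

def last_assessment_split(rows):
    """Hold out each patient's latest row for testing; train on the rest.

    rows: list of (patient_id, week_number, features, label).
    """
    latest = {}
    for i, (pid, week, _, _) in enumerate(rows):
        if pid not in latest or week > rows[latest[pid]][1]:
            latest[pid] = i
    test_idx = set(latest.values())
    train = [r for i, r in enumerate(rows) if i not in test_idx]
    test = [rows[i] for i in sorted(test_idx)]
    return train, test

# Hypothetical labels: 1 = uncontrolled asthma, 0 = controlled asthma.
labels = [1] * 20 + [0] * 80
folds = stratified_folds(labels)
rows = [("a", 1, [4] * 5, 0), ("a", 2, [3] * 5, 1),
        ("b", 1, [5] * 5, 0), ("b", 3, [4] * 5, 0), ("b", 2, [4] * 5, 0)]
train, test = last_assessment_split(rows)
print([len(f) for f in folds], len(train), len(test))
```

For cross validation, each fold serves once as the test set while the other nine are used for training, and the ten estimates are averaged.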

Performance metrics

As shown in Table 1 and the formulas below, six standard metrics were used to measure a model’s performance: accuracy, sensitivity, specificity, positive predictive value (PPV), negative predictive value (NPV), and Area Under the receiver operating characteristic Curve (AUC). For instance, false negative (FN) is the number of instances of uncontrolled asthma that the model incorrectly identifies as controlled asthma. Sensitivity measures the proportion of all instances of uncontrolled asthma that the model identifies. Specificity measures the proportion of all instances of controlled asthma that the model identifies.
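The five count-based metrics follow from the confusion-matrix counts TP, FP, TN, and FN, with uncontrolled asthma treated as the positive class, consistent with the FN definition above. The sketch below uses the standard definitions; the AUC function uses the rank-based interpretation (the probability that a randomly chosen positive instance receives a higher score than a randomly chosen negative one, with ties counted as one half). The counts and scores in the example are made up.

```python
def confusion_metrics(tp, fp, tn, fn):
    """Standard metrics from confusion-matrix counts (positive = uncontrolled asthma)."""
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total,
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
    }

def auc(scores, labels):
    """AUC as the fraction of positive-negative pairs ranked correctly (ties count half)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Made-up counts and scores, for illustration only.
m = confusion_metrics(tp=30, fp=20, tn=40, fn=10)
a = auc([0.9, 0.8, 0.3, 0.1], [1, 0, 1, 0])
print(m, a)
```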

Classification algorithms

Our basic predictive model was built using the decision stump classifier, which makes a prediction based on a single independent variable. Advanced predictive models were built using the top six classification algorithms recognized in the machine learning and data mining literature [38, 39]: support vector machine, random forest, multiboost with decision stumps, naive Bayes, k-nearest neighbor, and deep learning. Briefly, a support vector machine constructs a hyperplane in a high-dimensional space to separate instances of the two classes. A random forest is an ensemble of decision tree classifiers. Multiboost with decision stumps is an ensemble of decision stump classifiers trained through combining boosting with a variant of bagging. A naive Bayes classifier computes conditional probability by assuming that given the class variable, all independent variables are independent of each other. A k-nearest neighbor classifier classifies a new instance based on the classes of the k training instances closest to it. An example deep learning classifier is an artificial neural network with multiple hidden layers, i.e., a deep neural network.
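To make the simplest of these classifiers concrete, the sketch below fits a decision stump on a single variable. It is a simplification and not Weka's implementation: it searches only thresholds of the form "predict uncontrolled when the value is at or below t" and minimizes training errors, whereas a general stump would also consider the opposite direction and other split criteria. The data are hypothetical.

```python
def fit_stump(values, labels):
    """Find the threshold on one variable that minimizes training errors.

    labels: 1 = uncontrolled asthma one week later, 0 = controlled asthma.
    The fitted rule predicts 1 when value <= threshold.
    """
    best_threshold, best_errors = None, len(labels) + 1
    for t in sorted(set(values)):
        errors = sum((v <= t) != bool(y) for v, y in zip(values, labels))
        if errors < best_errors:
            best_threshold, best_errors = t, errors
    return best_threshold

def predict_stump(threshold, value):
    """Apply the fitted one-variable rule."""
    return 1 if value <= threshold else 0

# Hypothetical AST scores on the prediction date and outcomes one week later.
scores = [12, 15, 18, 19, 21, 22, 24, 25]
outcomes = [1, 1, 1, 0, 0, 0, 0, 0]
threshold = fit_stump(scores, outcomes)
print(threshold, predict_stump(threshold, 17), predict_stump(threshold, 20))
```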

Weka [37], the most widely used open-source machine learning and data mining toolkit, was used to build the models. Weka integrates a large set of commonly used machine learning algorithms and methods for handling the imbalanced class problem (i.e., the categories of the dependent variable are imbalanced). For deep learning, which is not part of the Weka toolkit, we used the deepnet package in R [40], which implements a deep neural network with weights initialized by a deep belief network [39, 41, 42].

The classification algorithms and SMOTE require parameter entry. For instance, SMOTE has a parameter controlling the amount of up-sampling [35]. For each predictive model, we chose the parameter values of the corresponding classification algorithm and SMOTE to maximize sensitivity without overly degrading accuracy. Among the six performance measures, sensitivity and accuracy are the primary targets because our main goal is to identify uncontrolled asthma beforehand.
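One way to operationalize "maximize sensitivity without overly degrading accuracy" is to set an accuracy floor and pick the most sensitive candidate above it. The floor, the candidate settings, and the performance numbers below are all hypothetical; the text does not state the exact rule that was applied.

```python
def pick_configuration(results, min_accuracy):
    """Return the candidate with the highest sensitivity among those whose
    accuracy is at least min_accuracy, or None when no candidate qualifies."""
    eligible = [r for r in results if r["accuracy"] >= min_accuracy]
    if not eligible:
        return None
    # Break sensitivity ties in favor of higher accuracy.
    return max(eligible, key=lambda r: (r["sensitivity"], r["accuracy"]))

# Hypothetical results for different amounts of SMOTE up-sampling.
candidates = [
    {"smote_percent": 0, "accuracy": 0.78, "sensitivity": 0.45},
    {"smote_percent": 100, "accuracy": 0.74, "sensitivity": 0.66},
    {"smote_percent": 200, "accuracy": 0.71, "sensitivity": 0.74},
    {"smote_percent": 400, "accuracy": 0.62, "sensitivity": 0.81},
]
chosen = pick_configuration(candidates, min_accuracy=0.70)
print(chosen)
```

In this made-up example, the most aggressive up-sampling setting has the highest sensitivity but falls below the accuracy floor, so the next most sensitive candidate is selected.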

Results

The original study [25] provided 2912 weekly assessments of asthma control on 210 asthmatic children 2 to 18 years old. After excluding baseline assessments and 30 patients with only one AST assessment, 2617 AST assessments from 180 patients were available for predictive modeling. Table 2 shows patient demographics and baseline clinical characteristics. The percentage of uncontrolled asthma in the AST assessments was 23.5 % overall. As shown in Fig. 2, this percentage was significantly higher in the first week after hospitalization (50 %), then stabilized near 19 % during the remaining follow-up assessments.

Our basic predictive model used the decision stump classifier with one independent variable, the patient’s AST score on the prediction date. As shown in Table 3, the model achieved an accuracy of 73.4–73.9 %, with a low sensitivity of 51.1 % when measured by the method of testing on each patient’s last assessment. Table 3 also lists the performance of the six advanced machine learning classifiers measured by the two evaluation approaches. To improve performance, each of the six advanced classifiers used the five individual component scores of the patient’s AST assessment on the prediction date as independent variables.

The multiboost with decision stumps classifier had the best performance, with a sensitivity of 73.8 % vs. 74.5 %, an accuracy of 71.8 % vs. 74.4 %, a specificity of 71.4 % vs. 74.4 %, an AUC of 0.761 vs. 0.757, a PPV of 37.1 % vs. 50.7 %, and a NPV of 92.4 % vs. 89.2 %, when measured by the two evaluation approaches, respectively. The support vector machine and deep learning classifiers performed similarly. The naive Bayes, random forest, and k-nearest neighbor classifiers performed less well, particularly with respect to sensitivity.

We also used additional independent variables, beyond the component scores of the patient’s AST assessment, collected for this study to improve the advanced predictive models’ performance. These variables included the AST assessment one week prior to the prediction date, the patient attributes, and the environmental variables described above. None of these variables improved the models’ performance (detailed results are not shown). Thus, our best models used only the five individual component scores of the patient’s AST assessment on the prediction date as independent variables, making these models easy to use in practice.

Discussion

The objective of our study was to develop and test new predictive models for asthma control deterioration using a combination of patient demographic information, clinical information, and environmental variables. Using the multiboost with decision stumps classifier, we were able to successfully predict asthma control deterioration one week ahead with reasonable accuracy, demonstrating the feasibility of predictive modeling. However, performance needs to achieve a higher level of accuracy (e.g., >80 %) and PPV, while maintaining high sensitivity and specificity, before such models can be used to support real-time clinical decision making. If accuracy goals can be met, such a model could be integrated into electronic asthma self-monitoring systems, such as the electronic-Asthma Tracker (e-AT) [43], to provide prediction-based decision support and personalized early warnings of potential asthma control deterioration for asthmatic children. In this case, all independent variables used in the model need to be collected by the electronic asthma self-monitoring system. After the user enters his/her current AST assessment into the system, the system will use the model to predict the user’s asthma control level one week later. If the user is predicted to experience asthma control deterioration, the system will display a personalized warning message to the user.
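The envisioned decision-support flow (enter the current assessment, run the model, warn if deterioration is predicted) might look like the sketch below. The function name, the warning text, and the stand-in predictor are hypothetical; a deployed system would call a trained and validated model instead of the placeholder rule used here.

```python
def weekly_check(responses, predict_uncontrolled):
    """Return a warning message, or None when no deterioration is predicted.

    responses: the five AST answers entered by the user (each assumed 1-5).
    predict_uncontrolled: any callable mapping the five answers to True/False.
    """
    if len(responses) != 5 or any(not 1 <= r <= 5 for r in responses):
        raise ValueError("expected five responses, each between 1 and 5")
    if predict_uncontrolled(responses):
        return ("Your asthma control may worsen over the next week. "
                "Please review your asthma action plan.")
    return None

# Placeholder predictor for illustration only; it is not the study's model.
def placeholder_model(responses):
    return sum(responses) <= 18

warning = weekly_check([3, 4, 3, 4, 3], placeholder_model)
no_warning = weekly_check([5, 5, 4, 5, 5], placeholder_model)
print(warning, no_warning)
```

Passing the predictor in as a callable keeps the data-entry logic independent of whichever classifier is eventually chosen.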

Although not perfect, our results are encouraging, particularly as the first work on predicting a child’s asthma control deterioration one week ahead. In comparison, despite years of work, existing models on predicting asthma exacerbations have low sensitivities (typically <60 %) and low PPVs (typically <27 %) [20–24], much below those achieved by our best model.

Despite bringing significant burden to patients and the healthcare system, asthma continues to be a poorly controlled disease [10]. Poor asthma control is associated with frequent asthma exacerbations [44]. However, an asthma exacerbation is usually preceded by a critical period of asthma control deterioration [14]. This provides opportunity for interventions if early evidence of deterioration can be identified. Physicians, caregivers, and patients all tend to overestimate the level of asthma control, particularly in children [10, 15–18], resulting in poor recognition of deterioration until an acute exacerbation occurs. One way to identify risk of asthma control deterioration is to develop predictive models. To date, predictive models for deteriorating asthma control have focused on asthma exacerbation, often a late manifestation of loss of asthma control [44].

Using environmental variables, patient attributes, and the patient’s daily peak expiratory flow rate (PEFR) in the previous few days, Lee et al. [45] built a model to predict an asthma exacerbation. Lee’s model, however, did not predict asthma control deteriorations preceding an exacerbation, and thus cannot be used to support early intervention to prevent clinical deterioration. In addition, although monitoring PEFR is commonly used to identify early signs of asthma control deterioration, it has several limitations including: (1) the measurement is labor intensive, impacting compliance [43]; (2) PEFR is effort dependent [46] with low reproducibility [47–49]; (3) PEFR primarily assesses large airway airflow and underestimates airflow limitations in medium and small airways [49, 50]; (4) PEFR goals are usually based on the patient’s best PEFR [49], which may differ from the predicted or desired goals [43]; and (5) PEFR goals increase with age and height [51] and must be re-calculated periodically, which is often overlooked [43].

In our AST, asthma control assessments are based on the Asthma Control Test questionnaire adapted for weekly assessment of asthma control status. Thus, we avoid using the more difficult and less accessible forced expiratory volume in 1 second (FEV1), forced vital capacity (FVC), and PEFR, and their limitations for use in children.

In our study, the multiboost with decision stumps, support vector machine, and deep learning classifiers performed similarly and achieved reasonable accuracy, sensitivity, specificity, AUC, and NPV. All of these three classifiers could predict a child’s asthma control deterioration one week ahead with reasonable accuracy. The naive Bayes, random forest, and k-nearest neighbor classifiers performed less well, particularly with respect to sensitivity.

The AST assessment reflects the patient’s asthma control level over the past week. Successive patient AST assessments are highly correlated with each other. Also, adding the AST assessment one week prior to the prediction date does not improve the models’ performance. We would expect that obtaining AST assessments on a patient more than once per week will not increase prediction accuracy, as information contained in additional AST assessments has already been included in the AST assessments on the prediction date and one week prior to the prediction date.

Our study has several limitations. First, the patients were recruited during hospitalization for asthma exacerbation. Each year, only ~1.6 % of asthmatic children are hospitalized [4]. As is typical with predictive modeling, our models’ performance is affected by the percentage of uncontrolled asthma in AST assessments. The percentage may be lower in patients not hospitalized than in patients hospitalized. A model’s performance usually degrades as the percentage of uncontrolled asthma in AST assessments decreases. It remains to be seen how our models will perform on patients not hospitalized. Second, we had a small sample size and were limited by the number of patient attributes and environmental variables. Collecting additional AST assessments and patient attributes can potentially improve the models’ performance. Such attributes might include information on allergies, parental asthma [20], healthcare access, the number of prescribing providers [21], viral infection severity [52], compliance with asthma controller medications, and other known predictors of asthma control such as pet exposure [53]. Third, our sample is relatively homogeneous. For instance, 66 % of the patients are white. The small sample size limits our capability to (a) detect the association between a variable that is relatively homogeneous and the asthma control level, and (b) conduct subgroup analysis to determine whether prediction accuracy differs among various patient subgroups (e.g., by race or by chronic asthma severity level). Fourth, our environmental variable data came from regional monitoring stations and may not reflect a patient’s actual exposures [45, 54, 55]. Accurate measurement of environmental exposures would benefit from using a personal exposure monitor [46, 55–57] and may help increase the models’ performance. We did find environmental variables correlated with an asthmatic child’s level of asthma control, but the correlation was relatively weak [58, 59]. By including the environmental variables, but not the patient’s AST score, in the predictive models, our best model achieved a low sensitivity of 41.7 % and a low AUC of 0.593.
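The dependence on the percentage of uncontrolled asthma can be made concrete for PPV and NPV through Bayes' rule. The sketch below holds sensitivity and specificity fixed at the values reported for our best model under cross validation (73.8 % and 71.4 %), which is itself an assumption, since both may also change in a non-hospitalized population. The prevalence values are illustrative.

```python
def ppv(sensitivity, specificity, prevalence):
    """Positive predictive value from sensitivity, specificity, and prevalence."""
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    return true_pos / (true_pos + false_pos)

def npv(sensitivity, specificity, prevalence):
    """Negative predictive value from sensitivity, specificity, and prevalence."""
    true_neg = specificity * (1 - prevalence)
    false_neg = (1 - sensitivity) * prevalence
    return true_neg / (true_neg + false_neg)

# Illustrative prevalences of uncontrolled asthma, from high to low.
for prevalence in (0.50, 0.235, 0.19, 0.05):
    print(f"prevalence={prevalence:.3f}  "
          f"PPV={ppv(0.738, 0.714, prevalence):.3f}  "
          f"NPV={npv(0.738, 0.714, prevalence):.3f}")
```

With sensitivity and specificity held fixed, PPV falls and NPV rises as uncontrolled asthma becomes rarer, so a larger share of warnings would be false alarms in a population with better baseline control.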

To better understand our predictive models’ performance, we used two evaluation methods simultaneously. These two methods address different situations. When a choice among multiple predictive models needs to be made, these two evaluation methods can provide insights into which model is most suitable for the clinical situation and desired outcome. The patient’s AST score on the prediction date reflects the patient’s asthma control level, and hence can be an approximate surrogate for the environmental variables in the previous seven days assuming they have a non-trivial influence on asthma control. We felt that this, and the high correlation between successive patient AST assessments, rendered our modeling attempts less successful than desired. It is likely that AST assessments have an overpowering influence on the prediction in comparison to environmental variables and other patient attributes, making their relative contributions insignificant. This effect was compounded by our small sample size.

As with any intervention relying on patient-reported data, our technology’s utility hinges on patient adherence to continuous reporting of their data. If patients cannot obtain benefits from efforts expended on reporting their data, adherence is likely to wane. The functionality of predicting asthma control deterioration, once done accurately and incorporated into the e-AT, will provide direct benefits to patients and may help improve patient adherence.

We have several goals in mind for future work. First, we would like to improve the models’ performance. This will be accomplished by: (1) increasing the sample size to improve the capability to detect the association between a variable and the asthma control level, (2) obtaining additional patient attributes among the known predictors of asthma control, (3) collecting additional environmental variables, such as pet exposure at the patient’s home, (4) collecting patient-specific environmental variables with portable monitors rather than estimating from regional monitoring stations, and (5) integrating patient pharmacogenomics information relating to medication metabolism.

Second, we would like to investigate how our models will perform in the ambulatory setting with non-hospitalized patients.

Third, we would like to probe the possibility of making an earlier prediction. In general, the earlier and the more accurate the prediction, the more useful the prediction will be for clinical decision making.

Fourth, we would like to extend our predictive models to incorporate intervention information, in a way similar to that in interrupted time series models or intervention models [60]. Our current models consider no intervention information. However, once prediction-based warnings start to be provided to an asthmatic child, the child may be given a preventive intervention. The intervention will impact the child’s asthma control level in the future and thus needs to be considered in the predictive model.

Conclusions

Our best models predicted with reasonable accuracy a child’s asthma control level one week ahead. With improvements in accuracy, the models can be integrated into electronic asthma self-monitoring systems to provide real-time decision support and personalized early warnings on potential asthma control deterioration for asthmatic children. This will allow implementing preventive actions to reduce asthma exacerbations, improve clinical outcomes, increase quality of life, and reduce healthcare cost.

Ethics approval

The study was reviewed and approved by the Institutional Review Boards of the University of Utah and Intermountain Healthcare.

Abbreviations

- AST: Asthma symptom tracker
- AUC: Area under the receiver operating characteristic curve
- e-AT: Electronic-asthma tracker
- ED: Emergency department
- FN: False negative
- FP: False positive
- NPV: Negative predictive value
- PEFR: Peak expiratory flow rate
- PPV: Positive predictive value
- SMOTE: Synthetic minority over-sampling technique
- TN: True negative
- TP: True positive

References

1. Roemer M. Health Care Expenditures for the Five Most Common Children’s Conditions, 2008: Estimates for U.S. Civilian Noninstitutionalized Children, Ages 0–17. MEPS Statistical Brief #349. AHRQ: Rockville, MD; 2011.

2. Malveaux FJ. The state of childhood asthma: introduction. Pediatrics. 2009;123 Suppl 3:S129–30.

3. Weissman JS, Gatsonis C, Epstein AM. Rates of avoidable hospitalization by insurance status in Massachusetts and Maryland. JAMA. 1992;268(17):2388–94.

4. Akinbami LJ, Moorman JE, Liu X. Asthma prevalence, health care use, and mortality: United States, 2005–2009. Natl Health Stat Report. 2011;32:1–14.

5. Akinbami LJ, Moorman JE, Bailey C, Zahran HS, King M, Johnson CA, et al. Trends in asthma prevalence, health care use, and mortality in the United States, 2001–2010. NCHS Data Brief. 2012;94:1–8.

6. Vargas PA, Simpson PM, Bushmiaer M, Goel R, Jones CA, Magee JS, et al. Symptom profile and asthma control in school-aged children. Ann Allergy Asthma Immunol. 2006;96(6):787–93.

7. Zeiger RS, Yegin A, Simons FE, Haselkorn T, Rasouliyan L, Szefler SJ, et al. Evaluation of the National Heart, Lung, and Blood Institute guidelines impairment domain for classifying asthma control and predicting asthma exacerbations. Ann Allergy Asthma Immunol. 2012;108(2):81–7.

8. Wang LY, Zhong Y, Wheeler L. Direct and indirect costs of asthma in school-age children. Prev Chronic Dis. 2005;2(1):A11.

9. Mitchell EA, Bland JM, Thompson JM. Risk factors for readmission to hospital for asthma in childhood. Thorax. 1994;49(1):33–6.

10. Chapman KR, Boulet LP, Rea RM, Franssen E. Suboptimal asthma control: prevalence, detection and consequences in general practice. Eur Respir J. 2008;31(2):320–5.

11. Stempel DA, McLaughin TP, Stanford RH, Fuhlbrigge AL. Patterns of asthma control: a 3-year analysis of patient claims. J Allergy Clin Immunol. 2005;115(5):935–9.

12. Calhoun WJ, Sutton LB, Emmett A, Dorinsky PM. Asthma variability in patients previously treated with β2-agonists alone. J Allergy Clin Immunol. 2003;112(6):1088–94.

13. Zhang J, Yu C, Holgate ST, Reiss TF. Variability and lack of predictive ability of asthma end-points in clinical trials. Eur Respir J. 2002;20(5):1102–9.

14. Robroeks CM, van Vliet D, Jöbsis Q, Braekers R, Rijkers GT, Wodzig WK, et al. Prediction of asthma exacerbations in children: results of a one-year prospective study. Clin Exp Allergy. 2012;42(5):792–8.

15. Rabe KF, Adachi M, Lai CK, Soriano JB, Vermeire PA, Weiss KB, et al. Worldwide severity and control of asthma in children and adults: the global asthma insights and reality surveys. J Allergy Clin Immunol. 2004;114(1):40–7.

16. Davis KJ, Disantostefano R, Peden DB. Is Johnny wheezing? Parent–child agreement in the childhood asthma in America survey. Pediatr Allergy Immunol. 2011;22(1 pt 1):31–5.

17. Halterman JS, Yoos HL, Kitzman H, Anson E, Sidora-Arcoleo K, McMullen A. Symptom reporting in childhood asthma: a comparison of assessment methods. Arch Dis Child. 2006;91(9):766–70.

18. Nathan RA, Sorkness CA, Kosinski M, Schatz M, Li JT, Marcus P, et al. Development of the asthma control test: a survey for assessing asthma control. J Allergy Clin Immunol. 2004;113(1):59–65.

19. Zolnoori M, Zarandi MH, Moin M. Application of intelligent systems in asthma disease: designing a fuzzy rule-based system for evaluating level of asthma exacerbation. J Med Syst. 2012;36(4):2071–83.

20. Forno E, Celedón JC. Predicting asthma exacerbations in children. Curr Opin Pulm Med. 2012;18(1):63–9.

21. Lieu TA, Quesenberry CP, Sorel ME, Mendoza GR, Leong AB. Computer-based models to identify high-risk children with asthma. Am J Respir Crit Care Med. 1998;157(4 Pt 1):1173–80.

22. Lieu TA, Capra AM, Quesenberry CP, Mendoza GR, Mazar M. Computer-based models to identify high-risk adults with asthma: is the glass half empty of half full? J Asthma. 1999;36(4):359–70.

23. Frey U. Predicting asthma control and exacerbations: chronic asthma as a complex dynamic model. Curr Opin Allergy Clin Immunol. 2007;7(3):223–30.

24. McCoy K, Shade DM, Irvin CG, Mastronarde JG, Hanania NA, Castro M, et al. Predicting episodes of poor asthma control in treated patients with asthma. J Allergy Clin Immunol. 2006;118(6):1226–33.

25. Nkoy FL, Stone BL, Fassl BA, Uchida DA, Koopmeiners K, Halbern S, et al. Longitudinal validation of a tool for asthma self-monitoring. Pediatrics. 2013;132(6):e1554–61.

26. Air quality data homepage of the United States Environmental Protection Agency. http://www.epa.gov/airdata/. Accessed Jan. 27, 2015.

27. MesoWest homepage. http://mesowest.utah.edu/. Accessed Jan. 27, 2015.

28. Respiratory virus surveillance homepage of GermWatch. https://intermountainphysician.org/gw/respiratoryviruses/Pages/default.aspx. Accessed Jan. 27, 2015.

29. Pollen count data homepage of Intermountain Allergy & Asthma. http://www.intermountainallergy.com/pollen.html. Accessed Jan. 27, 2015.

30. Evans RS, Lloyd JF, Pierce LA. Clinical use of an enterprise data warehouse. AMIA Annu Symp Proc. 2012;2012:189–98.

31. Bloomberg GR, Banister C, Sterkel R, Epstein J, Bruns J, Swerczek L, et al. Socioeconomic, family, and pediatric practice factors that affect level of asthma control. Pediatrics. 2009;123(3):829–35.

32. Cope SF, Ungar WJ, Glazier RH. Socioeconomic factors and asthma control in children. Pediatr Pulmonol. 2008;43(8):745–52.

33. Schatz M, Sorkness CA, Li JT, Marcus P, Murray JJ, Nathan RA, et al. Asthma control test: reliability, validity, and responsiveness in patients not previously followed by asthma specialists. J Allergy Clin Immunol. 2006;117(3):549–56.

34. Kuhn M, Johnson K. Applied Predictive Modeling. New York: Springer; 2013.

35. Chawla NV, Bowyer KW, Hall LO, Kegelmeyer WP. SMOTE: Synthetic Minority Over-sampling Technique. J Artif Intell Res. 2002;16:321–57.

36. Box GEP, Cox DR. An analysis of transformations. J R Stat Soc Ser B. 1964;26:211–52.

37. Witten IH, Frank E, Hall MA. Data Mining: Practical Machine Learning Tools and Techniques. 3rd ed. Burlington, MA: Morgan Kaufmann; 2011.

38. Wu X, Kumar V. The Top Ten Algorithms in Data Mining. Chapman & Hall/CRC: Boca Raton, FL; 2009.

39. Deng L, Yu D. Deep learning: methods and applications. Foundations and Trends in Signal Processing. 2014;7(3–4):197–387.

40. Deepnet package in R homepage. http://cran.r-project.org/web/packages/deepnet/index.html. Accessed Jan. 27, 2015.

41. Hinton GE, Osindero S, Teh YW. A fast learning algorithm for deep belief nets. Neural Comput. 2006;18(7):1527–54.

42. Hinton GE, Salakhutdinov RR. Reducing the dimensionality of data with neural networks. Science. 2006;313(5786):504–7.

43. Nkoy FL, Stone BL, Fassl BA, Koopmeiners K, Halbern S, Kim EH, et al. Development of a novel tool for engaging children and parents in asthma self-management. AMIA Annu Symp Proc. 2012;2012:663–72.

44. Haselkorn T, Fish JE, Zeiger RS, Szefler SJ, Miller DP, Chipps BE, et al. Consistently very poorly controlled asthma, as defined by the impairment domain of the Expert Panel Report 3 guidelines, increases risk for future severe asthma exacerbations in The Epidemiology and Natural History of Asthma: Outcomes and Treatment Regimens (TENOR) study. J Allergy Clin Immunol. 2009;124(5):895–902.

45. Lee CH, Chen JC, Tseng VS. A novel data mining mechanism considering bio-signal and environmental data with applications on asthma monitoring. Comput Methods Programs Biomed. 2011;101(1):44–61.

46. Delfino RJ, Staimer N, Tjoa T, Gillen D, Kleinman MT, Sioutas C, et al. Personal and ambient air pollution exposures and lung function decrements in children with asthma. Environ Health Perspect. 2008;116(4):550–8.

47. Gautrin D, D'Aquino LC, Gagnon G, Malo JL, Cartier A. Comparison between peak expiratory flow rates (PEFR) and FEV1 in the monitoring of asthmatic subjects at an outpatient clinic. Chest. 1994;106(5):1419–26.

48. Frischer T, Meinert R, Urbanek R, Kuehr J. Variability of peak expiratory flow rate in children: short and long term reproducibility. Thorax. 1995;50(1):35–9.

49. Adeniyi A, Erhabor G. The peak flow meter and its use in clinical practice. Afr J Respir Med. 2011;6(2):5–7.

50. Goldberg S, Springer C, Avital A, Godfrey S, Bar-Yishay E. Can peak expiratory flow measurements estimate small airway function in asthmatic children? Chest. 2001;120(2):482–8.

51. Carson JW, Hoey H, Taylor MR. Growth and other factors affecting peak expiratory flow rate. Arch Dis Child. 1989;64(1):96–102.

52. Walter MJ, Castro M, Kunselman SJ, Chinchilli VM, Reno M, Ramkumar TP, et al. Predicting worsening asthma control following the common cold. Eur Respir J. 2008;32(6):1548–54.

Schatz M. Predictors of asthma control: what can we modify? Curr Opin Allergy Clin Immunol. 2012;12(3):263–8.

Delfino RJ, Quintana PJ, Floro J, Gastañaga VM, Samimi BS, Kleinman MT, et al. Association of FEV1 in asthmatic children with personal and microenvironmental exposure to airborne particulate matter. Environ Health Perspect. 2004;112(8):932–41.

Keeler GJ, Dvonch T, Yip FY, Parker EA, Isreal BA, Marsik FJ, et al. Assessment of personal and community-level exposures to particulate matter among children with asthma in Detroit, Michigan, as part of Community Action Against Asthma (CAAA). Environ Health Perspect. 2002;110 Suppl 2:173–81.

Delfino RJ, Coate BD, Zeiger RS, Seltzer JM, Street DH, Koutrakis P. Daily asthma severity in relation to personal ozone exposure and outdoor fungal spores. Am J Respir Crit Care Med. 1996;154(3 Pt 1):633–41.

Maestrelli P, Canova C, Scapellato ML, Visentin A, Tessari R, Bartolucci GB, et al. Personal exposure to particulate matter is associated with worse health perception in adult asthma. J Investig Allergol Clin Immunol. 2011;21(2):120–8.

Dick S, Doust E, Cowie H, Ayres JG, Turner S. Associations between environmental exposures and asthma control and exacerbations in young children: a systematic review. BMJ Open. 2014;4(2):e003827.

Jackson DJ, Johnston SL. The role of viruses in acute exacerbations of asthma. J Allergy Clin Immunol. 2010;125(6):1178–87.

Velicer WF, Fava JL. Time series analysis for psychological research. In: Weiner IB, Schinka JA, Velicer WF, editors. Handbook of Psychology, Research Methods in Psychology (Volume 2). Hoboken, NJ: Wiley; 2012.

Acknowledgments

We thank Intermountain Allergy & Asthma for sharing their pollen count and mold level data, and Tom H. Greene and Xiaoming Sheng for helpful discussions. Drs. Nkoy, Stone, Fassl, and Maloney are supported by grants 1R18HS018166-01A1 and 1R18HS018678-01A1 from the Agency for Healthcare Research and Quality. Dr. Stone is also supported by award KM1CA156723 from the National Cancer Institute.

Additional information

Competing interests

Drs. Nkoy, Stone, Fassl, and Maloney have a patent application pending for the electronic-Asthma Tracker. None of the sponsors participated in the design and conduct of the study; the collection, management, analysis, and interpretation of the data; or the preparation, review, or approval of the manuscript. The contents of the manuscript are solely the responsibility of the authors and do not necessarily represent the official views of AHRQ or NCI.

Authors’ contributions

GL conceptualized and designed the study, conducted machine learning experiments, drafted the initial manuscript, and approved the final manuscript as submitted. BS participated in conceptualizing and designing the study, provided feedback on various issues, revised the manuscript, and approved the final manuscript as submitted. BF provided feedback on various issues, revised the manuscript, and approved the final manuscript as submitted. CM provided feedback on various issues, revised the manuscript, and approved the final manuscript as submitted. PG participated in conceptualizing and designing the study, provided feedback on various issues, revised the manuscript, and approved the final manuscript as submitted. SY participated in conceptualizing and designing the study, conducting machine learning experiments, and drafting the initial manuscript, and approved the final manuscript as submitted. FN conceptualized and designed the study, provided feedback on various issues, revised the manuscript, and approved the final manuscript as submitted.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Luo, G., Stone, B.L., Fassl, B. et al. Predicting asthma control deterioration in children. BMC Med Inform Decis Mak 15, 84 (2015). https://doi.org/10.1186/s12911-015-0208-9

DOI: https://doi.org/10.1186/s12911-015-0208-9