Abstract

Background

Guidelines for colorectal cancer screening recommend that patients be informed about options and be able to select preferred method of screening; however, there are no existing measures available to assess whether this happens.

Methods

Colorectal Cancer Screening Decision Quality Instrument (CRC-DQI) includes knowledge items and patients' goals and concerns. Items were generated through literature review and qualitative work with patients and providers. Hypotheses relating to the acceptability, feasibility, discriminant validity and retest reliability of the survey were examined using data from three studies: (1) 2X2 randomized study of participants recruited online, (2) cross-sectional sample of patients recruited in community health clinics, and (3) cross-sectional sample of providers recruited from American Medical Association Master file.

Results

338 participants were recruited online, 94 participants were recruited from community health centers, and 115 physicians were recruited. The CRC-DQI was feasible and acceptable with low missing data and high response rates for both online and paper-based administrations. The knowledge score was able to discriminate between those who had seen a decision aid or not (84% vs. 64%, p < 0.001) and between providers, online patients and clinic patients (89% vs. 74% vs. 41%, p < 0.001 for all comparisons). The knowledge score and most of the goals had adequate retest reliability. About half of the participants received a test that matched their goals (47% and 51% in online and clinic samples respectively). Many respondents who had never been screened had goals that indicated a preference for colonoscopy. A minority of respondents in the online (21%) and in clinic (2%) samples were both well informed and received a test that matched their goals.

Conclusions

The CRC-DQI demonstrated good psychometric properties in diverse samples, and across different modes of administration. Few respondents made high quality decisions about colon cancer screening.

Similar content being viewed by others

Background

Clinical guidelines strongly recommend that adults age 50–75 be screened for colorectal cancer (CRC); however, guidelines also recognize that there are multiple screening options available [1]. A high quality decision in situations where there is more than one reasonable option requires ensuring that patients are informed, and that the choice of test reflects patients’ goals and concerns [2, 3].

Several studies have examined the extent to which patients are informed and engaged in the decision about CRC screening. Patients have been shown to have significant knowledge gaps about screening, and lack understanding of common terms relating to colon cancer [4–6]. Studies have also identified several factors that are important to patients when considering what kind of test to have, such as reducing chance of dying of colon cancer, the invasiveness of the test, and test preparation [5, 7]. Each of these studies used different measures of knowledge and different methods of assessing patients’ goals. There is a need for a comprehensive measure of decision quality that can assess whether patients are informed and receive screening tests that match their goals.

Sepucha and colleagues have developed and tested decision quality instruments that assess patients’ knowledge, and the extent to which treatments match their goals for patients facing surgical decisions, including breast cancer surgery [8–10]. A similar process was used to develop and evaluate a survey instrument to measure the quality of CRC screening decisions. This manuscript describes the development process and results of three studies that evaluated the psychometric properties (e.g. reliability, validity, and precision) and clinical sensibility (e.g. interpretability, acceptability, and feasibility) of the new survey instrument [11].

Methods

Item development

The Colorectal Cancer Screening Decision Quality Instrument (CRC-DQI) development process followed principles of survey research methods [12, 13]. Nine candidate facts and thirteen goals were identified through a review of the clinical evidence and reports from focus groups of patients. The facts and goals were then rated on importance, accuracy and completeness by a convenience sample of patients (n = 27) and clinical experts (n = 16) including primary care physicians, nurses and gastroenterologists. Based on the ratings, new content was added (e.g. covering how often the tests need to be done, which tests require sedation) and some content was removed (e.g. quantitative estimate of disease free survival benefit from screening). Then, experts in survey research drafted questions to cover the content and conducted cognitive interviews with men and women (n = 6) to evaluate the items. Revisions to the items, responses and formatting of the survey were made to improve acceptability and comprehension. The resulting instrument was then tested in three samples.

Study sample and procedures

Study 1: online sample

Patients were recruited through Craig’s List Internet ads in 8 U.S. cities. All respondents were screened for eligibility by a member of the study staff. Eligible respondents were adults 35–70 years of age who did not have a prior diagnosis of colon cancer. Younger participants (aged 35–49) were included in order to ensure a range of knowledge scores and adequate sample size for comparisons for the knowledge items across the different modes of administration.

Eligible participants were randomized in 2×2 design to (1) receive a decision aid or not and (2) complete the survey online or by mail. The decision aid, Colon Cancer Screening: Deciding What’s Right For You, is an 31 minute DVD and booklet produced by Informed Medical Decisions Foundation and Health Dialog ©2010. The initial packet included a cover letter, decision aid (if appropriate), survey (if appropriate) and incentive. Participants randomized to complete the survey online received an email with a link to the survey about 1 week after the cover letter. Non-respondents received a reminder (via email or mail) two weeks later. A subset of responders received a retest packet four weeks later (delivered in the same mode, email or mail, as the initial survey). A small incentive was provided with each survey (valued at $30 US for decision aid group, $20 US for no-decision aid group, $20 US for retest).

The study protocol for the online patient survey was approved by the Partners Human Research Committee, UMass Boston Institutional Review Board and University of Virginia Insitutional Review Board for Health Services Research. Consent was implied by the voluntary completion of the survey instruments.

Study 2: clinic sample

The online patient sample had very high education levels and limited diversity, so we recruited a sample of patients aged 50 and older from community health centers in order to examine the generalizability of the results. Patients and family members in the clinics’ waiting rooms were approached by trained staff and asked if they wanted to participate in an interview about colorectal cancer screening. Subjects did not need to have any prior experience with screening. If participants consented, they were brought to a separate room to complete a 20 minute interview followed by the written questionnaire. Staff members were trained to administer the written survey orally if the participant asked for help. Subjects were compensated $20 for participating in the interview and completing the questionnaire. The protocol was approved by the Institutional Review Boards at all participating sites.

Study 3: provider survey

We identified primary care physicians and specialists through the American Medical Association Master File from 17 cities in the United States (including the 8 cities where patients were recruited for the online study). For the colon cancer screening sample, we selected 200 physicians across internal medicine (n = 100) and gastroenterology (n = 100). Each provider was mailed a study packet with a $20 US cash incentive. A phone reminder was made at two weeks and a mailed reminder was sent at four weeks. The study protocol for the providers was approved by the Institutional Review Board and provider consent was implied by the voluntary completion of the survey.

Measures

Patients completed demographics, screening history and the following measures.

CRC-DQI (Colorectal Cancer Screening Decision Quality Instrument). 16 multiple choice knowledge items and 10 goals and concerns rated on an importance scale from 0 (not at all important) to 10 (extremely important). The items used in the field test are available as Additional file 1.

Top Three Goals and Concerns: Patients indicated the top three goals and concerns that were most important to their decision.

Involvement in Decision Making: assessed with two items (1) who made the decision about testing and (2) how much they were involved in the decision.

Providers completed the full set of CRC-DQI knowledge items and were asked to rate the knowledge items overall and individually for how well they covered information that is essential for patients to know.

Statistical Analysis

Sample Size: 150 participants in each group would allow us to detect a clinically meaningful difference in knowledge between decision aid and control groups of 10% with 90% power (assuming common standard deviation of 20%) and a difference in mode (online vs. mail) on response rates and on missing data of 2% (assuming common standard deviation of 0.5%) with 80% power.

Item Retention and Deletion: A group of experts in survey research, decision sciences and clinical experts examined responses for issues such as difficulty (e.g., too easy or too hard), problematic format (e.g., multiple responses checked off when only one was expected), redundancy (high inter-item correlation), and floor or ceiling effects (responses bunched at bottom or top of the scale). Problematic items were deleted or recommended for revision.

DQI- Knowledge Score: Each correct response received one point. Missing responses were considered incorrect and a total knowledge score was calculated for those who completed at least half of the items. A total knowledge score was standardized by dividing the number of correct responses by the number of items, resulting in scores from 0% to 100%.

DQI-Concordance Score: Respondents aged 50 and older who had a screening test were included in these analyses and were categorized by their screening history into ‘colonoscopy’ and ‘other test’ groups. Patients who had never had a screening test were not included in the regression model and for the purposes of the concordance score, they were not considered to have had a test that matched their goals. A logistic regression model, with testing group (colonoscopy vs. other test) as the dependent variable and the goals as independent variables, generated a predicted probability of having a colonoscopy. Patients with a model predicted probability of colonoscopy >0.5 and who had a colonoscopy or those with a predicted probability of colonoscopy ≤0.5 and who had some other test, were classified as a “match.” This yielded a summary concordance score that indicated the percentage of patients whose screening decisions “matched” their goals. Higher concordance scores indicate that more participants received screening tests that matched their goals.

Decision Quality Indicator: A binary variable was created that was “1” for patients who were both well-informed (i.e. knowledge score at or above the mean knowledge score of the decision aid group) and had a test that matched their goals, indicating high decision quality, and “0” otherwise.

Acceptability and Feasibility: Acceptability was examined using the response rates. Feasibility was examined using rates of missing data.

Reliability: Test-retest reliability was assessed by calculating the intraclass correlation coefficient (ICC) for the total knowledge score and for the individual goals and concerns. The target was to exceed 0.7 [14]. Internal consistency for the knowledge score was not calculated as the set of knowledge items is not a measure of one underlying construct.

There is no gold standard for measuring knowledge or concordance so the following hypotheses were used to examine the validity of the scores:

Discriminant Validity: A key feature of a knowledge test is that it can discriminate among those with different levels of knowledge. Two hypotheses were examined (a) mean knowledge scores would be higher for providers than patients (two sample t-test) and (b) the decision aid group would have higher knowledge than the control group (two sample t-test).

Construct Validity: The hypothesis that patients who are more involved in decision making should be more likely to have high decision quality was examined using Fisher’s exact test to compare the percentages across groups.

Mode Effect: Differences in response rates and rates of missing items by mode (online versus mail) were compared.

Brief version

A short version of the instrument, with five knowledge items, was created and evaluated for reproducibility, retest reliability and discriminant validity.

Analyses were conducted using PASW Statistics 18.0.

Results

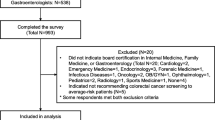

Response rates and sample

For the online sample, 338/367 (92%) participants responded to the initial survey and 71/84 (84.5%) responded to the retest survey. The decision aid and no decision aid arms were balanced on all demographic characteristics. For the clinic sample, 94 participants were enrolled (data regarding response rate was not available). Participant characteristics for these two samples are in Table 1. For the provider sample, 115/193 (59.6%) responded and their characteristics are summarized in Table 2. Provider non responders were slightly younger than responders (51 vs. 53 years old, p = 0.04), but did not differ by gender or specialty.

Item retention and deletion

Six knowledge items were identified as being too easy (i.e., patient scores were >85%). Four of them were removed because the provider importance scores were also low and two were revised to be included in the future version of the instrument. Across both patient samples, total knowledge scores ranged from 10-100% with no evidence of a floor or ceiling effect.

Responses for the goals ranged across the entire set of options, though three of the goals had evidence of a ceiling effect. Many respondents selected 10 out of 10 for “to know whether or not you have colon cancer” (64%), “to try to find colon cancer or polyps early” (57%), and “to avoid a test that can cause bleeding or a tear in the colon” (56%). These three items were kept in for analyses because they also had the highest percentage of patients who indicated that each goal was one of their top three issues. Two goals were eliminated as few patients included them as one of their top three goals: “avoid a test where you have to drink a liquid before the test to clean out your colon (6% selected this item), and “avoid a test that requires you to handle your stool” (only 5% selected this item).

The remaining analyses were conducted using a reduced set of 10 knowledge items and the 8 goals and concerns.

Acceptability and feasibility

The survey had a high response rate 338/367 (92%) and few missing items (1.3% for knowledge items and 0.7% for goals on average) for the online patient sample. Missing items did vary by mode of administration for knowledge (2.5% vs. 0.2%, p < 0.001 for paper and online versions respectively) but did not vary by mode for the goals. There were more missing items in the clinic sample, but overall the amount was still low, 3.2% (range 1.2-7.4%) for knowledge items and 4.0% (range 1.1 – 5.3%) for goal items. None of the online respondents and only one respondent in the clinic sample was missing more than half of the knowledge items and did not receive a total knowledge score.

Reliability

The knowledge score had retest reliability ICC = 0.67 (95% CI 0.47, 0.79). The retest reliability of three goals was strong “find colon cancer or polyps early” (ICC = 0.87), “to know whether or not you have colon cancer” (ICC = 0.85), and “avoid test where a tube is put into your rectum to look at the colon” (ICC = 0.74). Three others were modest “avoid test that is painful” (ICC = 0.68), “to avoid a test that can cause bleeding or a tear in the colon” (ICC = 0.68), and “choose test that doesn’t cost you a lot of money” (ICC = 0.61). Two had low retest reliability “chose a test that does not need to be done every year” (ICC = 0.47) and “chose a test where you take medicine before the test that makes you sleepy” (ICC = 0.55).

Knowledge score

Participants in the decision aid (DA) group had higher knowledge scores than those in the control, (84% vs. 64%, p < 0.001). This difference remained significant when the sample was restricted to the population aged 50 and older (e.g. estimated mean difference 17.5% (95%CI 12.1%, 22.9%), p = 0.001 in the DA and non DA groups). Providers also had significantly higher knowledge scores than the online sample (88.7% vs. 73.8%, p < 0.001). Table 3 shows scores on the individual knowledge items across the different samples. The clinic sample, none of whom had viewed a decision aid, had significantly lower mean knowledge score than the online sample (40.6% vs. 73.8%, p <0.001).

The majority of providers (81%) felt that the set of knowledge items covered the key facts extremely or very well, supporting content validity. The provider ratings of whether the individual items were “essential” varied from 17% for “Out of every 100 people, about how many will die from colon cancer?” to 69% for “At what age do doctors usually recommend people start getting regular tests for colon cancer.”

DQI-concordance score

Figure 1 contains the plots of the mean importance scores on selected goals for patients who reported having had a colonoscopy, some other screening test and never being screened. Three goals, desire to “find colon cancer or polyps early,” “know whether or not you have cancer,” and “avoid a test where a tube is put into your rectum to look at the colon,” discriminated significantly between those who had colonoscopy and those who did not in the online sample (see Figure 1). In multivariable analysis, two of these goals were associated with having colonoscopy versus some other test (see Table 4). Patients who felt it was important to “know whether or not you had colon cancer” were more likely to have a colonoscopy and patients who felt it was important to “avoid a test where a tube is put into rectum to look at the colon” were less likely to have a colonoscopy. The concordance score, or number of participants in the online sample who received a screening test that matched that predicted by their stated goals was 47.3% (71/150).

The fifty-two respondents who were 50 or older and who had never been screened in the online sample were not considered to have received a test that matched their goals. The majority of them (57%) had goals that suggested a preference for colonoscopy and 43% had goals that suggested some other screening test would be the best fit. In the clinic sample, (35/74) 47.3% had a screening test that matched their goals. About half the clinic participants who had never been screened (53.8%) had goals that suggested a preference for colonoscopy.

Decision quality indicator

In the online sample, 32/151 (21.2%) met the definition of high decision quality, (i.e. knowledge score at or above 84%, the mean of the decision aid group, and had a test that matched their goals). Participants with high decision quality were less likely to say that the doctor made the decision compared to those who did not meet criteria for high decision quality, but the difference was not significant (29.7% vs. 38.9%, p = 0.25 for the online sample). For the clinic sample, only two repondents met the threshold for being considered informed, and as a result, only 3% of respondents met our definition of high decision quality.

Brief version

A shorter, five item version of the knowledge test had retest reliability (ICC = 0.54) and reproducibility to the total knowledge score (Pearson R = 0.89). The knowledge scores using the brief version also discriminated between the DA group and the control (85.4% vs. 58.9%, p <0.001) and between providers and patients in the online sample (82.1% vs. 74.3%, p = 0.008).

Discussion

The CRC-DQI is a new survey that examines the extent to which patients are informed and receive tests that match their goals. High acceptability and feasibility of the instrument is evidenced by the high response rate and the low number of missing responses in diverse patient samples. The survey instrument is acceptable and feasible when administered online, by mail or in person.

The knowledge score was able to discriminate among those who were more or less informed, including providers and patients, and those who have seen a decision aid or not. The participants in the decision aid group and virtually all providers had a good understanding of the frequency of screening for the different tests and the likelihood of serious side effects with colonoscopy. Participants in the decision aid arm were also more likely to have realistic expectations for the incidence and lethality of colon cancer, significantly more accurate than providers (p < 0.001). The overall magnitude of the improvement in knowledge for the decision aid group compared to the control was more than that seen in the Cochrane Collaborative systematic review of decision aids [15].

Few patients from the clinic sample, which was a more diverse and underserved sample, were able to answer the knowledge items correctly. Other studies have found knowledge gaps about colon cancer screening, particularly in underserved populations [16, 17]. Decision aids, like the one used in the online sample, have been shown to increase knowledge and intention to screen, even in underserved samples [15, 18]. It may be more important to distribute these types of tools to underserved patients to ensure informed decisions.

There are several screening options available to patients. Three goals appeared to have strongest association with screening choice: the desire to find colon cancer early and to know whether you have cancer appeared to be traded off with the desire to avoid invasive tests. These three goals had good retest reliability and were able to discriminate among those who had colonoscopy versus some other test. To support shared decision making conversations around colon cancer testing, it would be important for providers to assess, at a minimum, how patients feel about these three issues.

In this study, those who did not get screened were categorized as not having made a “concordant” decision. Guidelines clearly support some sort of colon cancer screening for men and women age 50–75 [1]. It is certainly possibly that an individual may consider the potential benefits and harms of colon cancer screening and make an informed decision not to pursue screening. However, about half of the respondents who did not report any screening test for each sample, had goals that indicated a preference for colonoscopy. The other half were not significantly different from those who had some other test.

A small percentage in each group met both of the criteria for a high quality decision, suggesting considerable room for improvement across all samples. The level of decision quality did not vary by the type of test (colonoscopy vs. other test). The results from this study did not find an association between self reported participation in the decision and decision quality. Studies have suggested that engaging patients though the use of decision aids or offering them a choice of screening options may increase screening rates [19–21]. It would be important for future studies to examine whether higher decision quality leads to higher screening rates.

There are several limitations to the studies that should be noted. The retrospective surveys are subject to recall bias and the respondents’ knowledge and goals may be different if assessed closer to the time of the actual decision. The sample recruited online was well educated, had Internet access and may not generalize to the wider population. The clinic sample had much more diversity with respect to race, socioeconomic variables and education; however, it was limited to English speaking respondents and we did not have information on clinic non responders. Further, some of the differences between the online and clinic samples may be due to differences in mode of administration (e.g. those completing the survey online may have looked up information regarding the knowledge items whereas those completing it in clinic did not have that opportunity). Despite these limitations, the studies do provide considerable data to evaluate the properties of the survey instrument and its use in different populations, and with different modes of administration.

Conclusions

The CRC-DQI is a new survey instrument that has demonstrated strong content validity, discriminant validity and moderate retest reliability. As guidelines increasingly emphasize the importance of informing patients and offering them a choice of screening tests for colon cancer, the CRC-DQI provides a validated means of measuring whether that occurs. The survey may be administered online, by mail, or in person, to evaluate the extent to which colon cancer screening decisions are informed and match patients’ goals.

References

U.S. Preventive Services Task Force: Screening for Colorectal Cancer: U.S. Preventive Services Task Force Recommendation Statement. AHRQ Publication 08-05124-EF-3,http://www.uspreventiveservicestaskforce.org/uspstf08/colocancer/colors.htm,

Sepucha K, Fowler FJ, Mulley AG: Policy support for patient-centered care: the need for measurable improvements in decision quality. Health Aff (Millwood). 2004, Suppl Web Exclusive:VAR54-62. doi:10.1377/hlthaff.var.54

Sepucha K, Thomson R, Borkhoff C, Lally J, Levin CA, Matlock DD, Ng CJ, Ropka M, Stacey D, Williams NJ, Wills CE: Establishing The Effectiveness. 2012 Update of the International Patient Decision Aids Standards Collaboration’s Background Document. Chapter L. Edited by: Volk R, Llewellyn-Thomas H. 2012, http://ipdas.ohri.ca/IPDAS-Chapter-L.pdf, http://ipdas.ohri.ca/resources.html

Hoffman RM, Lewis CL, Pignone MP, Couper MP, Barry MJ, Elmore JG, Levin CA, Van Hoewyk J, Zikmund-Fisher BJ: Decision-making processes for breast, colorectal, and prostate cancer screening: the DECISIONS survey. Med Decis Making. 2010, 5 (Suppl): 53S-64S.

Dolan NC, Ferreira R, Davis TC, Fitzgibbon ML, Rademaker A, Liu D, Schmitt BP, Gorby N, Wolf M, Bennett C: Colorectal cancer screening knowledge, attitudes, and beliefs among veterans: does literacy make a difference. J Clin Oncol. 2004, 22 (13): 2617-2622.

Shokar NK, Carlson CA, Weller SC: Informed decision making changes test preferences for colorectal cancer screening in a diverse population. Ann Fam Med. 2010, 8 (2): 141-150. doi:10.1370/afm.1054

van Dam L, Hol L, de Bekker-Grob EW, Steyerberg EW, Kuipers EJ, Habbema JDF, Essink-Bot ML, van Leerdam ME: What determines individuals’ preferences for colorectal cancer screening programmes? A discrete choice experiment. Eur J Cancer. 2010, 46: 150-159.

Sepucha KR, Stacey D, Clay CF, Chang Y, Cosenza C, Dervin G, Dorrwachter J, Feibelmann S, Katz JN, Kearing SA, Malchau H, Taljaard M, Tomek I, Tugwell P, Levin CA: Decision quality instrument for treatment of hip and knee osteoarthritis: a psychometric evaluation. BMC Musculoskelet Disord. 2011, 12 (149): 1-12.

Sepucha KR, Feibelmann S, Abdu W, Clay CF, Cosenza C, Kearing S, Levin CA, Atlas SJ: Psychometric evaluation of a decision quality instrument for treatment of lumbar herniated disc. Spine. 2012, 37 (18): 1609-1616.

Sepucha KR, Belkora JK, Chang Y, Cosenza C, Levin CA, Moy B, Partridge A, Lee CN: Measuring decision quality: psychometric evaluation of a new instrument for breast cancer surgery. BMC Med Inform Decis Mak. 2012, 12: 51-

Fitzpatrick R, Davey C, Buxton MJ, Jones DR: Evaluating patient-based outcome measures for use in clinical trials. Health Technol Assess. 1998, 2 (14): i-iv. 1-74

Fowler FJ: Survey Research Methods. 1993, Thousand Oaks; CA: Sage Publications, Inc

DeVellis R: Scale Development Theory and Applications. 2003, Thousand Oaks: Sage Publications, 2

Nunnally JC, Bernstein IH: Psychometric Theory. 1967, New York: McGraw-Hill, 3

Stacey D, Bennett CL, Barry MJ, Col NF, Eden KB, Holmes-Rovner M, Llewellyn-Thomas H, Lyddiatt A, Légaré F, Thomson R: Decision aids for people facing health treatment or screening decisions. Cochrane Database Syst Rev. 2011, (10, Art. No.: CD001431): doi: 10.1002/14651858.CD001431.pub3

Shokar NK, Vernon SW, Weller SC: Cancer and colorectal cancer: Knowledge, beliefs, and screening preferences of a diverse patient population. Clin Res Meth. 2005, 37 (5): 341-347.

Miller DP, Brownlee CD, McCoy TP, Pignone MP: The effect of health literacy on knowledge and receipt of colorectal cancer screening: a survey study. BMC Fam Pract. 2007, 8: 16-

Miller DP, Spangler JG, Case LD, Goff DC, Singh S, Pignone M: Effectiveness of a web-based colorectal cancer screening patient decision aid: a randomized controlled trial in a mixed-literacy population. Am J Prev Med. 2011, 40 (6): 608-615. doi:10.1016/j.amepre.2011.02.019

Inadomi JM, Vijan S, Janz NK, Fagerlin A, Thomas JP, Lin YV, Muñoz R, Lau C, Somsouk M, El-Nachef N, Hayward RA: Adherence to colorectal cancer screening: a randomized clinical trial of competing strategies. Arch Intern Med. 2012, 172 (7): 575-582. doi:10.1001/archinternmed.2012.332

Pignone M, Harris R, Kinsinger L: Videotape-based decision aid for colon cancer screening: A randomized, controlled trial. Ann Intern Med. 2000, 133 (10): 761-769.

Schroy PC, Lal S, Glick JT, Robinson PA, Zamor P, Heeren TC: Patient preferences for colorectal cancer screening: how does stool DNA testing fare?. Am J Manag Care. 2007, 13 (7): 393-400.

Pre-publication history

The pre-publication history for this paper can be accessed here:http://www.biomedcentral.com/1472-6947/14/72/prepub

Acknowledgement

The authors would like to acknowledge the participation of the many patients and providers in these studies. The funding for the study was provided from the Informed Medical Decisions Foundation (IMDF). The decision aid used in the online sample was developed by the IMDF in collaboration with Health Dialog, Inc. The AMA is the source of the data used to identify the providers surveyed in this research study.

Author information

Authors and Affiliations

Corresponding author

Additional information

Competing interests

Dr. Sepucha receives salary and research support from the Informed Medical Decisions Foundation. Dr. Levin receives salary support as Research Director for the Informed Medical Decisions Foundation, a not-for-profit (501 (c) 3) private foundation (http://www.informedmedicaldecisions.org). The funding agreements ensured the authors’ independence in designing the study, interpreting the data, writing, and publishing the report.

The Foundation develops content for patient education programs. The Foundation has an arrangement with a for-profit company, Health Dialog, to co-produce these programs. The programs are used as part of the decision support and disease management services Health Dialog provides to consumers through health care organizations and employers.

Authors’ contributions

All authors contributed substantially to one or more of the studies including (1) the conception and design of patient study (KRS, CC, CL) and provider study (KRS, CC, CL), acquisition of data (KRS, SF, CC), or analysis and interpretation of data (all authors) (2) drafting the article or revising it critically for important intellectual content (all authors) (3) final approval of the version to be submitted (all authors). The corresponding author, KRS (ksepucha@partners.org), is responsible for the integrity of the work as a whole.

Electronic supplementary material

Authors’ original submitted files for images

Below are the links to the authors’ original submitted files for images.

Rights and permissions

This article is published under an open access license. Please check the 'Copyright Information' section either on this page or in the PDF for details of this license and what re-use is permitted. If your intended use exceeds what is permitted by the license or if you are unable to locate the licence and re-use information, please contact the Rights and Permissions team.

About this article

Cite this article

Sepucha, K.R., Feibelmann, S., Cosenza, C. et al. Development and evaluation of a new survey instrument to measure the quality of colorectal cancer screening decisions. BMC Med Inform Decis Mak 14, 72 (2014). https://doi.org/10.1186/1472-6947-14-72

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/1472-6947-14-72