Abstract

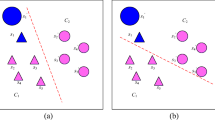

AdaBoost is a method for improving the classification accuracy of a given learning algorithm by combining the hypotheses that the learning algorithm produces. One drawback of AdaBoost is that its performance degrades when the training set contains noisy or exceptional examples, which are called hard examples. This degradation occurs because AdaBoost assigns excessively high weights to hard examples. In this research, we introduce thresholds into the weighting rule of AdaBoost to prevent weights from becoming too large. During the learning process, we compare the upper bound on the classification error of our method with that of AdaBoost, and we set the thresholds so that the upper bound for our method is better (smaller) than that of AdaBoost. Our method shows better performance than AdaBoost.
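The abstract does not spell out the exact thresholding rule or how the thresholds are derived from the error bound, so the following is only a minimal sketch under stated assumptions. It uses standard discrete AdaBoost (Freund and Schapire) with decision stumps, whose training error is bounded by $\prod_t 2\sqrt{\varepsilon_t(1-\varepsilon_t)}$, and adds a single hypothetical fixed cap `cap` on each normalized example weight, enforced by clipping and redistributing the excess mass to the uncapped examples. The names `cap_weights`, `best_stump`, the toy data, and the value 0.25 are illustrative choices, not the authors' method; in particular the paper's thresholds are tuned by comparing error bounds, which is not reproduced here.

```python
import math
from typing import List, Optional, Sequence, Tuple

# A decision stump: (feature index, threshold, polarity).
Stump = Tuple[int, float, int]


def stump_predict(stump: Stump, x: Sequence[float]) -> int:
    """Return `polarity` if x[j] <= threshold, otherwise -polarity."""
    j, thr, pol = stump
    return pol if x[j] <= thr else -pol


def best_stump(X, y, w) -> Tuple[Stump, float]:
    """Exhaustively pick the stump with the lowest weighted error."""
    best, best_err = None, float("inf")
    for j in range(len(X[0])):
        for thr in sorted({x[j] for x in X}):
            for pol in (1, -1):
                err = sum(wi for xi, yi, wi in zip(X, y, w)
                          if stump_predict((j, thr, pol), xi) != yi)
                if err < best_err:
                    best, best_err = (j, thr, pol), err
    return best, best_err


def cap_weights(w: List[float], cap: float) -> List[float]:
    """HYPOTHETICAL thresholding: clip every weight at `cap` and give the
    excess mass to the uncapped examples in proportion to their weights,
    repeating until no weight exceeds `cap`. Requires cap >= 1/len(w)."""
    m = len(w)
    if cap * m < 1:
        raise ValueError("cap must be at least 1/len(w)")
    capped = set()
    while True:
        free_mass = 1.0 - cap * len(capped)
        total_free = sum(w[i] for i in range(m) if i not in capped)
        new = [cap if i in capped else w[i] * free_mass / total_free
               for i in range(m)]
        over = {i for i in range(m) if i not in capped and new[i] > cap + 1e-12}
        if not over:
            return new
        capped |= over


def adaboost(X, y, rounds: int, cap: Optional[float] = None):
    """Discrete AdaBoost with stumps; labels must be -1/+1. If `cap` is given,
    weights are thresholded after each update (an illustrative stand-in)."""
    m = len(X)
    w = [1.0 / m] * m
    ensemble = []
    for _ in range(rounds):
        stump, eps = best_stump(X, y, w)
        if eps >= 0.5:
            break  # weak learner no better than chance
        eps = max(eps, 1e-10)  # avoid division by zero for a perfect stump
        alpha = 0.5 * math.log((1 - eps) / eps)
        ensemble.append((alpha, stump))
        # AdaBoost rule: multiply by exp(-alpha * y * h(x)), raising the weight
        # of misclassified examples and lowering it for correct ones.
        w = [wi * math.exp(-alpha * yi * stump_predict(stump, xi))
             for xi, yi, wi in zip(X, y, w)]
        z = sum(w)  # normalizer Z_t
        w = [wi / z for wi in w]
        if cap is not None:
            w = cap_weights(w, cap)
    return ensemble, w


def predict(ensemble, x) -> int:
    """Sign of the alpha-weighted vote of all stumps."""
    score = sum(alpha * stump_predict(h, x) for alpha, h in ensemble)
    return 1 if score >= 0 else -1


# Toy 1-D data; the label at x = 1.0 is deliberately flipped (a "hard" example).
X = [[float(v)] for v in range(8)]
y = [-1, 1, -1, -1, 1, 1, 1, 1]

ens_plain, w_plain = adaboost(X, y, rounds=5)
ens_cap, w_cap = adaboost(X, y, rounds=5, cap=0.25)
print("plain AdaBoost, max weight:", round(max(w_plain), 3))
print("capped variant, max weight:", round(max(w_cap), 3))
```

On this toy data, plain AdaBoost ends up putting its largest weight on the mislabeled example and, after five rounds, even classifies it as +1, while the capped run keeps every weight at or below 0.25. Redistributing the clipped mass proportionally (rather than simply renormalizing) is one way to keep the weights a probability distribution without pushing capped examples back above the cap.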

About this article

Cite this article

Nakamura, M., Nomiya, H. & Uehara, K. Improvement of Boosting Algorithm by Modifying the Weighting Rule. Annals of Mathematics and Artificial Intelligence 41, 95–109 (2004). https://doi.org/10.1023/B:AMAI.0000018577.32783.d2

DOI: https://doi.org/10.1023/B:AMAI.0000018577.32783.d2