Abstract

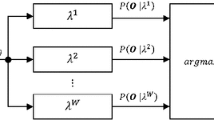

Handling variable, non-stationary ambient noise is a challenging task for automatic speech recognition (ASR) systems. To address this issue, one can use multi-style, noise condition independent (CI) model training on speech data collected in diverse noise environments, or uncertainty decoding techniques. An alternative approach is to explicitly approximate the continuous trajectory of Gaussian component mean and variance parameters against the varying noise level, for example, using variable parameter hidden Markov models (VP-HMMs). This paper investigates a more generalized form of variable parameter HMMs (GVP-HMMs). In addition to Gaussian component means and variances, it can also provide more compact trajectory modeling for tied linear transformations. An alternative noise condition dependent (CD) training algorithm is also proposed to handle the bias toward the noise condition distribution of the training data. Consistent error rate gains were obtained over conventional VP-HMM mean and variance only trajectory modeling on a medium vocabulary Mandarin Chinese in-car navigation command recognition task.
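The trajectory idea can be illustrated with a minimal sketch, assuming the trajectory of a single scalar Gaussian mean is a low-order polynomial in the signal-to-noise ratio (SNR). The function names, the polynomial order, and the training values below are all hypothetical, and the plain least-squares fit is only a stand-in: the estimation procedure actually used in the paper is not described in this abstract.

```python
import numpy as np
from numpy.polynomial import polynomial as P


def fit_mean_trajectory(snr_db, means, order=2):
    """Least-squares polynomial fit of a scalar Gaussian mean against SNR.

    Returns the polynomial coefficients, lowest order first.
    This is an illustrative stand-in, not the paper's training algorithm.
    """
    snr = np.asarray(snr_db, dtype=float)
    # Vandermonde matrix with columns [1, snr, snr^2, ...]
    V = np.vander(snr, N=order + 1, increasing=True)
    coeffs, *_ = np.linalg.lstsq(V, np.asarray(means, dtype=float), rcond=None)
    return coeffs


def mean_at(coeffs, snr_db):
    """Evaluate the fitted mean trajectory at a given SNR (in dB)."""
    return float(P.polyval(snr_db, coeffs))


# Hypothetical per-condition mean estimates at five training SNRs,
# generated from a known quadratic so the fit can be checked.
train_snr = [0.0, 5.0, 10.0, 15.0, 20.0]
train_means = [1.0 + 0.5 * s - 0.01 * s ** 2 for s in train_snr]

coeffs = fit_mean_trajectory(train_snr, train_means, order=2)
# Predict the mean at an SNR that was not seen in training.
predicted = mean_at(coeffs, 12.0)
```

At recognition time, a model of this kind would evaluate the polynomial at the measured noise level to obtain the Gaussian parameters for that condition, instead of storing a separate model per noise condition.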

References

Lippmann R, Martin E, Paul D. Multi-style training for robust isolated-word speech recognition. In: Proceedings of IEEE ICASSP, Dallas, Texas, USA, 1987. 705–708

Anastasakos T, McDonough J, Schwartz R, et al. A compact model for speaker-adaptive training. In: Proceedings of ICSLP, Philadelphia, PA, USA, 1996. 1137–1140

Gales M J F. Maximum likelihood linear transformations for HMM-based speech recognition. Comput Speech Lang, 1998, 12: 171–185

Leggetter C J, Woodland P C. Maximum likelihood linear regression for speaker adaptation of continuous density HMMs. Comput Speech Lang, 1995, 9: 171–186

Flego F, Gales M J F. Discriminative adaptive training with VTS and JUD. In: Proceedings of ASRU, Merano, Italy, 2009. 170–175

Yu K, Gales M J F. Bayesian adaptive inference and adaptive training. IEEE Trans Audio Speech Lang Process, 2007, 15: 1932–1943

Gales M J F. Adaptive training for robust ASR. In: Proceedings of ASRU, Madonna di Campiglio, Italy, 2001. 15–20

Yu K, Gales M J F. Bayesian adaptation and adaptively trained systems. In: Proceedings of ASRU, Cancun, Mexico, 2005. 209–214

Arrowood J A, Clements M A. Using observation uncertainty in HMM decoding. In: Proceedings of ICSLP, Denver, Colorado, USA, 2002. 1561–1564

Deng L, Droppo J, Acero A. Dynamic compensation of HMM variances using the feature enhancement uncertainty computed from a parametric model of speech distortion. IEEE Trans Speech Audio, 2005, 13: 412–421

Kristjansson T T, Frey B J. Accounting for uncertainty in observations: A new paradigm for robust speech recognition. In: Proceedings of ICASSP, Orlando, Florida, USA, 2002. 61–64

Droppo J, Acero A, Deng L. Uncertainty decoding with SPLICE for noise robust speech recognition. In: Proceedings of ICASSP, Orlando, Florida, USA, 2002. 57–60

Liao H, Gales M J F. Issues with uncertainty decoding for noise robust speech recognition. In: Proceedings of Interspeech, Pittsburgh, PA, USA, 2006

Benitez C, Segura J, de la Tore A, et al. Including uncertainty of speech observation in robust speech recognition. In: Proceedings of ICSLP, Jeju island, Korea, 2004. 137–140

Liao H, Gales M J F. Joint uncertainty decoding for noise robust speech recognition. In: Proceedings of Interspeech, Lisbon, Portugal, 2005

Liao H, Gales M J F. Adaptive training with joint uncertainty decoding for robust recognition of noisy data. In: Proceedings of ICASSP, Honolulu, Hawaii, USA, 2007. 389–392

Arrowood J A, Clements M A. Using observation uncertainty in HMM decoding. In: Proceedings of ICSLP, Denver, Colorado, USA, 2002. 1561–1564

Steouten V, van Hamme H, Wambacq P. Accounting for the uncertainty of speech estimates in the context of model-based feature enhancement. In: Proceedings of ICSLP, Jeju island, Korea, 2004. 105–108

Deng L, Droppo J, Acero A. Exploiting variances in robust feature extraction based on a parametric model of speech distortion. In: Proceedings of ICSLP, Jeju island, Korea, 2002. 806–809

Wolfel M, Faubel F. Considering uncertainty by particle filter enhanced speech feature in large vocabulary continuous speech recognition. In: Proceedings of ICASSP, Honolulu, Hawaii, USA, 2007. 1049–1052

Fujinaga K, Nakai M, Shimodaira H, et al. Multiple-regression hidden Markov model. In: Proceedings of IEEE ICASSP, Salt Lake City, Utah, USA, 2001. 1: 513–516

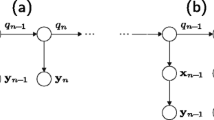

Cui X, Gong Y. A study of variable-parameter Gaussian mixture hidden Markov modeling for noisy speech recognition. IEEE Trans Audio Speech Lang Process, 2007, 15: 1366–1376

Yu D, Deng L, Gong Y, et al. Discriminative training of variable-parameter HMMs for noise robust speech recognition. In: Proceedings of Interspeech, Brisbane, Australia, 2008. 285–288

Yu D, Deng L, Gong Y, et al. Parameter clustering and sharing in variable-parameter HMMs for noise robust speech recognition. In: Proceedings of Interspeech, Brisbane, Australia, 2008. 1253–1256

Yu D, Deng L, Gong Y, et al. A novel framework and training algorithm for variable-parameter hidden Markov models. IEEE Trans Audio Speech Lang Process, 2009, 17: 1348–1360

Bjorck A, Pereyra V. Solution of Vandermonde systems of equations. Math Comput (Am Math Soc), 1970, 24: 893–903

Dempster A P, Laird N M, Rubin D B. Maximum likelihood from incomplete data via the EM algorithm. J Royal Stat Soc, 1977, 39: 1–39

Martin R. An efficient algorithm to estimate the instantaneous SNR speech signals. In: Proceedings of Eurospeech, Berlin, Germany, 1993. 1093–1096

Young S, Evermann G, Gales M, et al. The HTK Book. Version 3.4.1. Cambridge: Cambridge University Engineering Department, 2009

Author information

CHENG Ning was born in 1981. He received the Ph.D. degree in pattern recognition and intelligent systems from the Institute of Automation, Chinese Academy of Sciences, Beijing, China, in 2009. Currently, he is a postdoctoral researcher at the Shenzhen Institutes of Advanced Technology, Chinese Academy of Sciences. His research interests include robust speech recognition, speech enhancement and microphone arrays.

WANG Lan is a Professor at the Shenzhen Institutes of Advanced Technology, Chinese Academy of Sciences. She received her M.S. degree from the Center of Information Science, Peking University. She obtained her Ph.D. degree from the Machine Intelligence Laboratory of the Cambridge University Engineering Department (CUED) in 2006, and then worked as a research associate at CUED. Her research interests are large vocabulary continuous speech recognition, speech visualization and audio information indexing.

LIU XunYing was born in 1978. He received a bachelor's degree from Shanghai Jiao Tong University in 2000, followed by the MPhil degree in computer speech and language processing in 2001 and the Ph.D. degree in speech recognition in 2006, both from the University of Cambridge. He is currently a Senior Research Associate at the Machine Intelligence Laboratory of the Cambridge University Engineering Department. He is the lead researcher on the EPSRC funded Natural Speech Technology and the DARPA funded Broad Operational Language Translation Programs at Cambridge. He was the recipient of a best paper award at ISCA Interspeech 2010. His current research interests include large vocabulary continuous speech recognition, language modelling and adaptation, weighted finite state transducers, factored acoustic modelling, noise robust speech recognition and statistical machine translation. Dr. Liu Xunying is a member of IEEE and ISCA.

Cite this article

Cheng, N., Liu, X. & Wang, L. A flexible framework for HMM based noise robust speech recognition using generalized parametric space polynomial regression. Sci. China Inf. Sci. 54, 2481–2491 (2011). https://doi.org/10.1007/s11432-011-4490-6

DOI: https://doi.org/10.1007/s11432-011-4490-6