Abstract

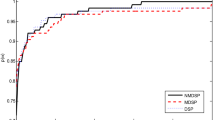

The hybrid steepest descent method (HSDM) can be used to minimize a differentiable function over a nonempty closed convex subset of a Hilbert space. In this work, we study perturbed algorithms in line with a generalized HSDM and discuss how certain choices of perturbations can increase the convergence speed. When these results are specialized to constrained minimization, the perturbations become the bounded perturbations used in the superiorization methodology (SM). We show the usefulness of the SM in studying the constrained convex minimization problem for subdifferentiable functions, and we then study the computational efficiency of the SM compared with the HSDM. In the specific computational experiment comparing the two, superiorization seems to be advantageous.
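To make the setting concrete, the following is a minimal sketch of the classical (unperturbed) HSDM iteration, x_{n+1} = T(x_n) - λ_{n+1} μ ∇f(T(x_n)), and not the generalized or perturbed algorithms studied in the paper. Everything specific here is an assumption chosen for illustration: the constraint set is the closed unit ball in R^2 (so T is its metric projection), the objective is f(x) = ½‖x − b‖², the step sizes are λ_n = 1/(n+1), μ = 1, and the names `project_unit_ball` and `hsdm` are hypothetical.

```python
import numpy as np

def project_unit_ball(x):
    """Metric projection onto the closed unit ball (a nonexpansive map T)."""
    norm = np.linalg.norm(x)
    return x if norm <= 1.0 else x / norm

def hsdm(grad_f, T, x0, mu=1.0, n_iter=5000):
    """Classical HSDM sketch: x_{n+1} = T(x_n) - lam_{n+1} * mu * grad_f(T(x_n)).

    Assumes grad_f is Lipschitz and strongly monotone, and uses the
    illustrative diminishing step size lam_n = 1 / (n + 1).
    """
    x = np.asarray(x0, dtype=float)
    for n in range(1, n_iter + 1):
        lam = 1.0 / (n + 1)          # lam_n -> 0 and sum of lam_n diverges
        y = T(x)                     # move toward the fixed point set of T
        x = y - lam * mu * grad_f(y) # small steepest descent correction
    return x

# Illustrative problem: minimize 0.5 * ||x - b||^2 over the unit ball.
b = np.array([3.0, 4.0])
grad_f = lambda x: x - b
x_approx = hsdm(grad_f, project_unit_ball, x0=np.zeros(2))
```

For this toy problem the minimizer over the unit ball is the projection of `b` onto the ball, b/‖b‖, so `x_approx` should approach it as `n_iter` grows. The iterates themselves need not be feasible; applying `project_unit_ball` to the final iterate gives a feasible point.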

Acknowledgments

The authors would like to thank the referee for giving valuable and constructive comments that greatly contributed to improving the final version of this paper.

About this article

Cite this article

Hoseini, M., Saeidi, S. & Kim, D.S. On perturbed hybrid steepest descent method with minimization or superiorization for subdifferentiable functions. Numer Algor 85, 353–374 (2020). https://doi.org/10.1007/s11075-019-00818-3

DOI: https://doi.org/10.1007/s11075-019-00818-3