Abstract

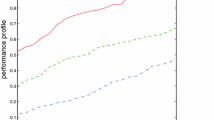

Nonmonotone line search methods for unconstrained minimization with the objective functions in the form of mathematical expectation are considered. The objective function is approximated by the sample average approximation (SAA) with a large sample of fixed size. The nonmonotone line search framework is embedded with a variable sample size strategy such that different sample size at each iteration allow us to reduce the cost of the sample average approximation. The variable sample scheme we consider takes into account the decrease in the approximate objective function and the quality of the approximation of the objective function at each iteration and thus the sample size may increase or decrease at each iteration. Nonmonotonicity of the line search combines well with the variable sample size scheme as it allows more freedom in choosing the search direction and the step size while the sample size is not the maximal one and increases the chances of finding a global solution. Eventually the maximal sample size is used so the variable sample size strategy generates the solution of the same quality as the SAA method but with significantly smaller number of function evaluations. Various nonmonotone strategies are compared on a set of test problems.

Similar content being viewed by others

References

Bastin, F.: Trust-Region Algorithms for Nonlinear Stochastic Programming and Mixed Logit Models, PhD thesis. University of Namur, Belgium, 2004

Bastin, F., Cirillo, C., Toint, P. L.: An adaptive Monte Carlo algorithm for computing mixed logit estimators. Comput. Manag. Sci. 3(1), 55–79 (2006)

Bastin, F., Cirillo, C., Toint, P. L.: Convergence theory for nonconvex stochastic programming with an application to mixed logit. Math. Program., Ser. B. 108, 207–234 (2006)

Birgin, E. G., Krejić, N., Martínez, J. M.: Globaly convergent inexact quasi-Newton methods for solving nonlinear systems. Numer. Algorithm 32, 249–260 (2003)

Byrd, R., Chin, G., Neveitt, W., Nocedal, J.: On the Use of Stochastic Hessian Information in Optimization Methods for Machine Learning. SIAM J. Optim. 21(3), 977–995 (2011)

Byrd, R., Chin, G., Nocedal, J., Wu, Y.: Sample Size Selection in Optimization Methods for Machine Learning. Math. Program. 134(1), 127–155 (2012)

Cheng, W., Li, D.H.: A derivative-free nonmonotone line search and its applications to the spectral residual method. IMA J. Numer. Anal. 29, 814–825 (2008)

Dai, Y.H.: On the nonmonotone line search. J. Optim. Theory Appl. 112, 315–330 (2002)

Deng, G., Ferris, M. C.: Variable-number sample path optimization. Math. Program. 117(1–2), 81–109 (2009)

Diniz-Ehrhardt, M.A., Martínez, J. M., Raydan, M.: A derivative-free nonmonotone line-search technique for unconstrained optimization. J. Comput. Appl. Math. 219(2), 383–397 (2008)

Dolan, E. D., Moré, J. J., Benchmarking optimization software with performance profiles. Math. Program. Ser. A. 91, 201–213 (2002)

Friedlander, M. P., Schmidt, M.: Hybrid deterministic-stochastic methods for data fitting, SIAM. J. Sci. Comput. 34(3), 1380–1405 (2012)

Fu, M. C.: Handbook in OR & MS. In: Henderson, S.G., Nelson, B.L. (eds.) Gradient Estimation, Vol. 13, pp 575–616 (2006)

Grippo, L., Lampariello, F., Lucidi, S.: A nononotone line search technique for Newton’s method, SIAM. J. Numer. Anal. 23(4), 707–716 (1986)

Grippo, L., Lampariello, F., Lucidi, S.: A class of nonmonotone stabilization methods in unconstrained optimization. Numer. Math. 59, 779–805 (1991)

Homem-de-Mello, T.: Variable-Sample Methods for Stochastic Optimization. ACM Trans. Model. Comput. Simul. 13(2), 108–133 (2003)

Krejić, N., Krklec, N.: Line search methods with variable sample size for unconstrained optimization. J. Comput. Appl. Math. 245, 213–231 (2013)

Krejić, N., Rapajić, S.: Globally convergent Jacobian smoothing inexact Newton methods for NCP. Comput. Optim. Appl. 41(2), 243–261 (2008)

La Cruz, W., Martínez, J. M., Raydan, M.: Spectral residual method without gradient information for solving large-scale nonlinear systems of equations. Math. Comput. 75, 1429–1448 (2006)

Li, D. H., Fukushima, M.: A derivative-free line search global convergence of Broyden-like method for nonlinear equations. Opt. Methods Softw. 13, 181–201 (2000)

Lizotte, D. J., Greiner, R., Schuurmans, D.: An experimental methodology for response surface optimization methods. J. Glob. Optim. 53(4), 699–736 (2012)

Nesterov, Y.: Introductory Lectures on Convex Optimization. Kluwer Academic Publishers (2004)

Nocedal, J., Wright, S. J.: Numerical Optimization. Springer (1999)

Pasupathy, R.: On Choosing Parameters in Retrospective-Approximation Algorithms for Stochastic Root Finding and Simulation Optimization. Oper. Res. 58(4), 889–901 (2010)

Polak, E., Royset, J. O.: Eficient sample sizes in stochastic nonlinear programing. J. Comput. Appl. Math. 217(2), 301–310 (2008)

Raydan, M.: The Barzilai and Borwein gradient method for the large scale unconstrained minimization problem. SIAM J. Optim. 7, 26–33 (1997)

Royset, J. O.: Optimality functions in stochastic programming. Math. Program. 135(1–2), 293–321 (2012)

Shapiro, A., Ruszczynski, A.: Stochastic Programming. In: Handbooks in Operational Research and Management Science, vol. 10, pp. 353–425. Elsevier (2003)

Spall, J. C.: Introduction to Stochastic Search and Optimization. In: Wiley-Interscience serises in discrete mathematics. New Jersey (2003)

Tavakoli, R., Zhang, H.: A nonmonotone spectral projected gradient method for large-scale topology optimization problems. Numer. Algebra Control. Optim. 2(2), 395–412 (2012)

Toint, P. L.: An assessment of nonmonotone line search techniques for unconstrained optimization. SIAM J. Sci. Comput. 17(3), 725–739 (1996)

Zhang, H., Hager, W. W.: A nonmonotone line search technique and its application to unconstrained optimization. SIAM J. Optim. 4, 1043–1056 (2004)

Author information

Authors and Affiliations

Corresponding author

Additional information

Research supported by Serbian Ministry of Education Science and Technological Development, grant no. 174030

Rights and permissions

About this article

Cite this article

Krejić, N., Krklec Jerinkić, N. Nonmonotone line search methods with variable sample size. Numer Algor 68, 711–739 (2015). https://doi.org/10.1007/s11075-014-9869-1

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11075-014-9869-1