Abstract

Neuronal adaptation is the intrinsic capacity of the brain to change, by various mechanisms, its dynamical responses as a function of the context. Such a phenomena, widely observed in vivo and in vitro, is known to be crucial in homeostatic regulation of the activity and gain control. The effects of adaptation have already been studied at the single-cell level, resulting from either voltage or calcium gated channels both activated by the spiking activity and modulating the dynamical responses of the neurons. In this study, by disentangling those effects into a linear (sub-threshold) and a non-linear (supra-threshold) part, we focus on the the functional role of those two distinct components of adaptation onto the neuronal activity at various scales, starting from single-cell responses up to recurrent networks dynamics, and under stationary or non-stationary stimulations. The effects of slow currents on collective dynamics, like modulation of population oscillation and reliability of spike patterns, is quantified for various types of adaptation in sparse recurrent networks.

Similar content being viewed by others

1 Introduction

Most neurons in primary sensory areas tend to change the strength of their dynamical responses over time for sustained and constant inputs, in a so-called adaptation process. The detailed mechanisms of this adaptation are still not clearly understood, and can result from various phenomena that might be combined: homeostasis or intracellular mechanisms (Turrigiano and Nelson 2004; Benda and Herz 2003), short term plasticity (Tsodyks et al. 2000), or even network-wide effect originating from lateral connections (Haider et al. 2010). In this paper, we propose to investigate computationally some effects of neuronal adaptation, from both a single-cell and a network point of view. By using a phenomenological model for neurons based on an integrate-and-fire model with intrinsic adaptation and its macroscopic counterparts, we studied the effects of slow adaptation currents on neuronal dynamics at the network level.

Detailed models of intrinsic plasticity as a source of homeostasis and neuronal adaptation have already been investigated. Such biological models are used to explore the biomechanistic effects of slow ionic channels on the microscopic cortical activity of single cells (Benda and Herz 2003; Gigante et al. 2007). On a more phenomenological level, they can be used to study the firing rates dynamics (Treves 1993) or constrain their distribution (Benucci et al. 2013) in macroscopic models of cortical networks. All those models, however, are mostly based on two types of current involved in slow adaptation and related to the flow of potassium and calcium ions through the membrane. The interaction of the adaptation currents with the neuronal dynamics is complex, impacting both the firing threshold and the behaviour of the cell (Benda et al. 2010). On one hand, voltage-gated potassium currents, like Kv1, are activated by a mild depolarization of the membrane potential and control the propagation of spikes by modulating the spike threshold (Higgs and Spain 2011), a phenomena also referred to as accommodation. On the other hand, calcium-gated potassium currents are activated only at high levels of depolarization and result in after-hyper-polarization (AHP), a drop of the membrane potential and long lasting decrease of excitability after a spike is emitted (Andrade 2011). The time scale of these adaptation mechanisms can expand over a wide range up to minutes (Pozzorini et al. 2013) and are very heterogeneously distributed among cells but especially pronounced in pyramidal cells (Nowak et al. 2003).

Combined all together, those two distinct adaptation mechanisms can prevent the saturation in the spike generation process of a neuron or modulate its synchronization properties, both acting in a different manner as shown in previous experimental (Deemyad et al. 2012) or theoretical works (Ermentrout 1998; Ermentrout et al. 2001; Prescott and Sejnowski 2008; La Camera et al. 2004). The voltage-gated sub threshold current shifts the input threshold for triggering spike depending on the basal activity: it can thus be seen as a good mechanism to explain that some response properties of a neuron are independent of the background inputs. It has also been shown to play a role in the homeostasis after sensory deprivation (Nataraj et al. 2010). Conversely, the calcium-gated current triggered by a spike does not only affect the threshold but also increase the dynamic range of the neuronal response and thus avoids saturation. Based on those observations, we will refer to those two different mechanisms of adaptation as a linear or sub-threshold one (voltage-gated channels) versus a non-linear or supra-threshold one (calcium-gated channels). The role of adaptation in enhancing reliability of spike-timing of neurons stimulated with periodic inputs was also studied in Schreiber et al. (2004). Adaptation could also act functionally as a decorrelation machine (Wang 1998).

The dynamics of cortical networks are considerably enriched with adaptation currents, inducing bursting activity, slow oscillations, and homeostasis (Tabak et al. 2000; Giugliano et al. 2004; Gigante et al. 2007). Rhythmic activity of central pattern generators involved in locomotor behaviour (Grillner 1997), or multi-stable dynamics of cortical networks when ambiguous stimulus is presented (Wilson 2003) were related to slow potassium currents. More recently it has been shown that such currents should be included to explain the rich repertoire of ongoing activity observed in vivo (Mattia and Sanchez-Vives 2012) and which could be the microscopic substrate of the resting state activity recorded in fMRI (Gusnard and Raichle 2001). It is therefore crucial to have a better understanding of the effect of this adaptation at a single-cell or at a population level.

In this study, we investigated those physiological observations on adaptation in a model of cortical dynamics simple enough, so that large scale simulations can be performed. To do so, we used an adaptive exponential integrate-and-fire neuron model suited for large-scale simulations of cortical networks (Brette and Gerstner 2005) and known to be complex enough to reproduce all the dynamical repertoire recorded in vitro in various cell types (Izhikevich 2001; Brette and Gerstner 2005). Indeed, the dynamics of networks of such units have been recently investigated in Ladenbauer et al. (2014) and Farkhooi et al. (2011) and this model have been successful in capturing more diverse dynamics by generating a slow inhibitory feedback, reflecting the fact that neurons tends to adapt when stimulated with a constant inputs. While a classical model would provide a sustained response, models with adaptation will have response closer to what is observed in biological recordings. By studying the sub-threshold (linear) and the supra-threshold (non-linear) effects of the adaptation on single-cell response or in a neuronal network, we were able to disentangle the functional role of those two components on aspects of the neuronal dynamics, like oscillations or the reliability of spike patterns.

2 Materials and methods

Neuron model

Simulations of the spiking neurons were performed using a custom version of the NEST simulator (Gewaltig and Diesmann 2007) and the PyNN interface (Davison et al. 2008), with a fixed time step of 0.1ms. In all simulations, we use a planar integrate and fire neuron with exponential non-linearity as introduced in Brette and Gerstner (2005). The dynamics of the membrane potential is composed of a capacitive current \(C_{\mathrm {m}} \frac {dV_{\mathrm {m}}(t)}{dt}\) and a leak current −g L(V m(t)−E L ), with leak conductance g L and leak reversal potential E L . The ratio τ m = C m/g L gives the relaxation time constant for the membrane voltage. Spikes are generated quasi-instantaneously by active conductances rendered by an exponential non-linearity for the current \(\psi (V_{\mathrm {m}}(t))={\Delta }_{T} e^{\frac {V_{\mathrm {m}}(t)-V_{T}}{{\Delta }_{T}}}\). Thus, a spike is initiated when the membrane potential V m goes over V T (with V T > E L ). The spike is cut when the voltage reaches V spike, the membrane potential is then reset to V reset.

An additional slow variable u, with timescale τ u, accounts for the effects of adaptation currents resulting from channels with slow dynamics. The coupling parameter a between V m and u is a linear approximation of hyper-polarizing (a>0) ionic conductances such as those associated with voltage gated potassium channels. Finally, u is increased by an amount b after each spike, which models the effects of highly non-linear conductances such as those associated with calcium gated potassium channels. This results in the following system for (V m, u):

with the spike condition:

The details of all numerical values for cell properties can be found in Table 1. These values were chosen according to those found in the literature for cortical neurons (Pospischil et al. 2011; Rossant et al. 2011; Hertäg et al. 2014). Parameters are identical for excitatory and inhibitory neurons except when specified, for example adaptation parameters are set to 0 for inhibitory cells. For every simulation, initial values of V m are drawn from a distribution uniform in [V rest, V T].

Synapses

Changes in synaptic conductances triggered by incoming spikes from excitatory and inhibitory neurons are modeled such that the total synaptic current to a neuron can be written as

The times \({t_{k}^{s}}\) (s∈{exc, inh}) are the times of the incoming spikes, respectively at excitatory and inhibitory synapses. The dynamics g s∈{exc, inh}(t) after a spike is described by an alpha-function, from Rall synapse model (Bard Ermentrout and Terman 2010), so that the synaptic inputs may be rewritten as the convolution of the spike trains with kernels K s with \(K^{s}(t)=[t]_{+} e^{-\frac {t}{\tau _{s}}}/\tau _{s}\), [.]+ representing the Heaviside function. If the maximal conductance for a synapse type s is written \({g_{s}^{m}}\), we have \(g_{s}(t)={\sum }_{k}{g_{s}^{m}} K^{s}(t-{t_{k}^{s}})\), with k running over incoming spikes and s∈{exc, inh}. Figure 1 is illustrating all those concepts, in a condensed form. In all the paper, we took τ exc = 2ms and τ inh = 3ms for the synaptic time constants.

Diffusion approximation

The spiking activity for a neuron receiving only excitatory inputs at a rate ν exc is controlled by the total effective conductance input \(\mu =g^{m}_{exc}\nu _{\text {exc}}\tau _{\text {exc}}\). The minimum effective conductance input for spike to be triggered, related to the rheobase current, is the one that brings the membrane potential just above its threshold value and it is defined in the following by \(g^{\text {rheo}}=\frac {g_{L}(V_{T}-E_{L})}{V_{T}}\). The deviation of the membrane potential from the diffusion approximation is measured by the Kullback-Leibler divergence between the simulated membrane potential P mc and a theoretical Gaussian distribution P th having mean and variance as predicted from the diffusion approximation:

Adaptation

In all the manuscript, we consider the sub-threshold or linear part of the adaptation as the one controlled by the a parameter in the equation of the adaptive exponential neuron, and the supra-threshold or non-linear part of the adaptation as the one controlled by the b parameter. Therefore, a neuron with only linear adaptation is one with b = 0, and one with only non-linear adaptation has a = 0. Physiological interpretation of these parameters is discussed in the neuron model description.

Cortical column

A column is composed of two populations of excitatory and inhibitory neurons connected in a random manner (Erdös-Renyi wiring) with excitatory and inhibitory weights g exc and g inh, and receiving external input ν ext from spike source generated through Poisson processes with weights g ext. This is equivalent to a so-called sparse balanced network (Brunel 2000). Neuron parameters are the same as those described in Table 1. A schematic drawing of the column can be found on Fig. 4a. More precisely, the sizes of the populations are N exc = 800, N inh = 200 and N ext = 200 (a classical 4:1 ratio between excitatory and inhibitory cells). Connections among neurons are drawn randomly with probabilities p AB , A (and B) being populations from which input (and output) neurons are selected (E for excitatory, I for inhibitory, ext for external input). In and out degrees of neurons are thus distributed according to a Poisson law with parameter p AB N A N B . In all the simulations, we use a value of p EE = p EI = 0.05, p II = p IE = 0.05 and p ext = 0.01. Excitatory weights are fixed to g exc = 6nS and inhibitory weights are modified through the control parameter g defined by g inh = g.g exc. For external connections, we have g ext = g exc/6. Delays of the connection are all equal to the simulation time step, i.e. 0.1ms.

Classification of dynamical regimes

The column is considered to be in a Synchronous regime if the pairwise spike correlations 〈CC(0)〉 are over a 0.026 threshold value. Pairwise spike correlations are computed as the mean Pearson correlation coefficient averaged over N = 10000 pairs of randomly chosen cells. The area for saturated regime with Synchronous regular dynamics correspond to an average firing rate over 75 Hz and a mean coefficient of variation for the interspike intervals (CV ISI) less than 0.2. Silent regimes correspond to firing rates lower than 0.2 Hz. Regions of the diagram not detected by these criteria are denoted as the Asynchronous Irregular regime.

Reliability of responses

The reliability of the response is assessed by considering repeated input spike trains from a population of 2000 neurons connected with probability p ext = 0.01 to the excitatory cells of the column. For inputs as homogeneous Poisson process, we consider spike trains with firing rate, r cst = 85Hz and for inputs as inhomogeneous Poisson processes, the firing rate is modulated by a sine function, r var = f 0+f 1 cos(2πωt) with f 0 = 100Hz, f 1 = 40Hz and ω = 5Hz. The spiking response and the average membrane voltage is recorded for 40 times and measures are averaged on these 40 repetitions of the same input spike trains.

The reliability of the spiking response is monitored by the pairwise spike correlation averaged over pairs of repetitions. To assess the reliability of membrane potentials, we monitor the Coefficient of Variation (CV) of the mean voltage V c of excitatory neurons, that is the standard deviation divided by the mean over the 40 repeated trials, and is unitless. The spike and voltage reliability measures are averaged over 10 different inputs and reported with standard deviations for various values of a and b in Fig. 9.

Mean field

The coarse-grained dynamics of a column can be captured by the firing rates (r E , r I ) averaged over excitatory and inhibitory populations. The evolution equation for the firing rate dynamics is then reduced to the Wilson-Cowan system:

with w AB standing for the effective coupling from the sub-population A to the sub-population B (\(w_{AB}=p_{AB}N_{A} N_{B} {g_{A}^{m}}\), p AB probability of connection from A to B, N A number of neurons in population A, \({g_{A}^{m}}\) conductance level at a synaptic contact), τ A the synaptic time constant of the sub-population A mentionned in the neuron model and f A the firing rate response of sub-population A. The function f A (I) is the response curve, that is the mean firing rate of neurons in sub-population A when stimulated by incoming inputs I. These response curves and their approximations are discussed in the results Section 3.1. We are using standard numerical methods of bifurcation theory as implemented in the AUTO library with the python interface PyDSTools (Clewley 2012). The local stability analysis for the fixed points of the dynamical system consists in the study of the set of parameters where some eigenvalues of the Jacobian are zero. Further study of the Jacobian are indicative of the type of instability at these points (Kuznetsov 1998). A fold (or saddle-node) bifurcation curve separates regions with one stable fixed point from bistable regions with two stable fixed points separated by an unstable fixed point. Along those curves, the Jacobian has a zero eigenvalue and a stable fixed point and an unstable fixed point annihilate. When an eigenvalue is non-zero but has a zero real part, there is a Hopf bifurcation curve separating a region with one stable fixed point from a region with a limit cycle. On those curves, eigenvalues of the Jacobian are a pair of pure conjugated numbers. If 2 saddle-node branches collide in the parameter plane, the resulting bifurcation point is a so-called cusp. Other bifurcations points are related to Hopf bifurcations when the linear field cancels (Bogdanov-Takens point) or when the quadratic contribution to the vector field cancels (Bautin point). Note that Bautin points separate the part of the Hopf curve where the limit cycle arising has infinitesimal amplitude from the part where it has finite amplitude. Simulations of the stochastic dynamics where implemented in C++ using the Milstein scheme.

3 Results

3.1 Dynamics of a single neuron without adaptation

First, we consider the case of a single adaptive exponential integrate-and-fire neuron neuron simply bombarded with excitatory and inhibitory homogeneous Poisson inputs. Both types of inputs are triggering conductances changes at the soma level as illustrated on Fig. 1a, and the spiking activity of the neuron is modified by the adaptation mechanisms regulating the activity. In all the following, we assume that “linear” (or sub-threshold) adaptation is mediated by the parameter a of the model (see Section 2), while “non-linear” (or supra-threshold) adaptation is related to the b parameter. To gain a better understanding of the responses, we consider the spiking activity of the neuron without any adaptation (a = 0, b = 0, see Section 2). Without a loss of generality, we can restrict the analysis to the case where only excitatory inputs are impinging the cell (ν inh = 0). In this case, the average synaptic input onto the cell for filtered Poisson spike train of rate ν exc with ν exc τ exc>>1 can simply be approximated by a Gaussian process of distribution G exc = N(μ, σ 2) with a mean μ and a variance σ 2 (Papoulis 1965; Ricciardi and Sacerdote 1979; Lánský and Lánská 1987; Richardson and Gerstner 2005; Hertäg et al. 2014) (see Section 2 for the synapse model):

Illustrationof synaptic current and diffusion approximation. a. Schematic view of a neuron with the various components included in the model. Excitatory and Inhibitory inputs are modelled as α-shaped conductances (inset), and examples of the V m dynamics can be seen in different cases: without adaptation or with non-linear adaptation. b.Plain line: KL-divergence between input simulated conductance distribution and theoretical estimate \(\mathcal {N}(\mu , \sigma ^{2})\) in the diffusion limit (ν exc> > τ exc) for various synaptic time constants, assuming independent Poisson inputs at excitatory synapses. Dashed line KL-divergence between the recorded membrane potential distribution and its closest Gaussian distribution with same mean and variance. Insets are example of simulated conductance traces and recorded V m distribution (in mV), compared to theoretical predictions

The validity of such a diffusion approximation can be checked by computing the Kullback-Leibler divergence (KL, see Section 2) between the theoretical distribution of the synaptic input conductances (G exc) and numerical simulations for various values of the maximal conductance \(g^{m}_{\text {exc}}\) and input firing rate ν exc, keeping the total mean effective input \(\mu =g^{m}_{\text {exc}} \tau _{\text {exc}} \nu _{\text {exc}}\) constant. In all the numerical simulations, we use the planar adaptive integrate and fire neuron described in Brette and Gerstner (2005) and further studied in Touboul and Brette (2008).

As we can see from Fig. 1b, in absence of adaptation and for our particular model, this diffusion approximation on G exc becomes valid for a synaptic time constant of τ exc = 2 ms., and for input rates of approximately 1000Hz (KL ≃ 0). This can be seen in the insets by the convergence of the conductance distribution toward a Gaussian distribution, while incoming synaptic conductances are shown as black traces. Similarly, the KL-divergence between the empirically measured distribution of the V m and a Gaussian distribution having mean μ V and variance \({\sigma _{V}^{2}}\) of the real V m distribution (dashed line in Fig. 1b) is converging toward zero. The voltage being filtered with a slower timescale (in the order of τ m≈10 ms, see Section 2), converges faster than for the conductance distribution. Therefore, this diffusion approximation is valid for a neuron receiving input spikes at realistic rate (1–10Hz) from a population as small a 1000 neurons. A reasonable size of a population from which a mean field model can be used is needed to describe its dynamics and to justify the size of the cortical columns we will use in the following.

Then, it is known that for a stationary Poisson input at a firing rate ν exc in the diffusion limit, i.e for low values of \(g^{m}_{\text {exc}}\) and approximated by a Gaussian process \(\mathcal {N}(\mu , \sigma ^{2})\), individual fluctuations in V m are small compared to V T . Therefore, the synaptic current \(I_{\text {exc}}(t)=G_{\text {exc}}(t)(V_{\mathrm {m}}(t)-E^{exc}_{\text {rev}})\) can also be approximated by a Gaussian process with mean \(\hat {\mu } = \mu (V_{T}-E^{exc}_{\text {rev}})\) and variance \(\hat {\sigma }^{2}=\sigma ^{2} (V_{T}-E^{exc}_{\text {rev}})^{2}\) (Destexhe et al. 2001; Richardson and Gerstner 2005). The dynamics for the exponential integrate-and-fire neuron can be summarized by the adaptive integrate-and-fire Ornstein-Uhlenbeck process defined as follows

where η(t) is drawn from a Gaussian distribution \(\mathcal {N}(0, 1)\).

For this model, as already studied in La Camera et al. (2004) the rheobase effective input g rheo, i.e. the minimum conductance input triggering a spike, is a fixed value that can be compared with estimations obtained from numerical simulations. As we can see on Fig. 2a, for low input firing rate ν exc, the neuron tends to fire spikes at lower values h rheo of input threshold than the theoretical one but as the input firing rate is increased, the fluctuations in synaptic inputs vanish and the neuron tends to behave as its deterministic limit g rheo. From Fig. 2a, we conclude that this deterministic limit, reached at around 5000Hz, is more restrictive than the one given by the diffusion approximation alone, but remains valid for the networks considered in the following.

a. Difference between the real rheobase conductance g rheo and its estimated value h rheo from diffusion approximation as a function of the rate of excitatory Poissonian input. b. Firing rate of the neuron without adaptation subject to excitatory inputs from a Poisson source as a function of the input μ, for several maximal conductances \(g^{max}_{exc}\). Red curve is for low membrane conductances values and high firing rates, while green curve is obtained for high conductances and low firing rates. Plain lines are theoretical approximations obtained for the situations (low rate, high conductance) (green) and (high rate, low conductance) (red), dots are obtained from numerical simulations. Inset shows simulations of couples (rate, conductance) for several values of g m ., color-coded

The two limiting cases of large and small conductance g exc can be studied while maintaining the mean effective input μ = g exc ν exc τ exc constant. When the maximal conductance is close to \(g^{max}_{\text {exc}}\), response is linear at low inputs rates and saturates to the maximal firing rate imposed by the refractory period, with \(\nu _{\text {out}}=\frac {\nu _{\text {exc}}}{1 + \tau _{\text {ref}} \nu _{\text {exc}}}\) (see green curve on Fig. 2b). On the opposite, in the limit of high firing rates, ν exc≫1/τ exc, the sub-threshold dynamics is well approximated by the Ornstein-Uhlenbeck process shown above, and it is known Ricciardi and Sacerdote (1979) and Sacerdote and Giraudo (2013) that the mean first passage time is given by

where Erf is the error function and other parameters are described in the Methods (see Richardson and Gerstner 2005, Ostojic and Brunel 2011 for a fast method to compute this quantity). The firing rate for the adaptive exponential neuron without adaptation is then simply ν out = 1/(τ ref +T OU ) (see red curve on Fig. 2b). Note that in the inset of Fig. 2b firing rate response are plotted as a function of g exc ranging from 0.05 to 50 nS, color-coded. Response behaves linearly for \(g_{exc} \simeq g^{max}_{\text {exc}}\). Interestingly, for values of g exc in between, response curves cross almost all in the same region around which the response firing rate is not dependent on the size of post-synaptic potential for a given effective input. This could be interesting for networks including heterogeneities of synaptic weights as their output rate would be similar for identical effective input when input rates are scaled appropriately.

3.2 Dynamics of a single neuron with adaptation

Now if we consider adaptation, its dynamics (τ u = 100ms) is slower than timescales involved in spike generation or membrane relaxation (τ m = 10ms) so for the analysis of response properties it is possible to assume timescale separation (van Vreeswijk and Hansel 2001; Benda and Herz 2003; Ermentrout 1998). Let us first study the effect of linear (sub-threshold) adaptation current on the response to Poisson input spike train. At slow time scale, the fast membrane dynamics can be averaged so that \(\langle (V_{\mathrm {m}}(t)-E_{L})\rangle =\frac {\hat {\mu }}{g_{L}}\) and injecting this value in the dynamics for the adaptation current gives the stationary current \(u=a \frac {\hat {\mu }}{g_{L}}\) resulting in the effective dynamics for V m(t)

The effect of linear adaptation is thus to reduce the effective input and the firing rate response for all inputs, shifting the response curve. This negative feedback could be useful to tune the threshold depending on the basal level of computation, and a similar mechanism has been shown to enable contrast invariant computations in the visual system (Carandini and Ferster 1997).

If we now consider the non-linear effect of adaptation, we can notice that between two consecutive spikes t k+1−t k = T, a discrete map describes the dynamics of the adaptation current. At each spike time, we have \(u(t) \rightarrow u(t)e^{-T/\tau _{u}} + b\), so that between 0 and T we have \(u(t)=b(1-e^{-t/\tau _{u}})/(1-e^{-T/\tau _{u}})\). For a high firing rate ν exc compared to the adaptation time scale τ u , the average adaptation current can therefore be estimated as 〈u〉=τ u bν exc . Figure 2b (red curve) showed that for ν exc far below saturation, the response curves of the neuron without adaptation behave linearly. In this linear regime of the response, the firing rate can therefore be described with a linear relationship \(\nu _{\text {out}}=k(\hat {\mu }-\hat {\mu }_{c})\), with \(k, \hat {\mu }_{c}\) being constants and \(\hat {\mu }\) the total current to the membrane \(\hat {\mu }=I_{syn}-u\). By replacing u(t) by its mean value estimated above, we have \(\nu _{\text {out}}= k (I_{syn}-\hat {\mu }_{c})/(1+kb\tau _{u})\), such that the net effect of non-linear adaptation is to change the slope of the response to slow input variations, as also found in Ermentrout (1998) and Benda and Herz (2003).

Figure 3a shows examples of the dynamics of the exact same neuron, in different conditions of adaptation. We compare single-cell responses to a particular input without any adaptation (a = 0, b = 0) to a regime with full adaptation (a, b with standard values as defined in Section 2), one with only linear adaptation (b = 0), and one with only non-linear spike frequency adaptation (a = 0). In Fig. 3b, the firing rate responses as functions of the input μ are plotted for those different adaptation conditions. The effect of linear adaptation is a shift in the spike threshold (see Fig. 3b, the fact that when b = 0 (green curve), the curve is rising for higher values of μ compared to the response curve with no adaptation (in red)). Similarly, the effect of non-linear adaptation (a = 0) is equivalent to a change in the slope of the response curve to slow input variations (see blue curve). Schematically, we can fit with a least square fitting procedure those response curves to sigmoidal functions \(f(\nu _{\text {ext}})=1/(1+e^{-\alpha (\nu _{\text {ext}} -\beta )})\) (see Fig. 3c, top) and quantify this behavior.

Influence of linear and non linear adaptation a. Comparison of the response to the same excitatory input from a Poisson spiking process for a neuron without adaptation and neuron with (up) linear adaptation (middle) non linear adaptation (bottom) combined linear and non linear adaptation conditions (see Section 2). b. Firing rate response as a function of the input rate in the 4 conditions previously listed. c.Top: Sigmoidal rate function used to estimate the effect of adaptation. Middle: Effect of linear adaptation on threshold (β, unfilled square markers) and gain (α, filled square markers) of the sigmoid. Bottom: same as middle, but when the non-linear adaptation is varied

Using the sigmoidal fits, we study the variation of the thresholds β and the gains α, as functions of the adaptation parameters of the neuron, a and b. We can see on Fig. 3c, middle, that the linear adaptation (controlled by a) affects mostly the threshold β shifting the response curve to the right with higher values of a resulting in higher thresholds β. This dependence is linear as expected from the analysis via time scales separation. On the other hand, the non-linear adaptation (controlled by the parameter b) affects mostly the gain α of the sigmoidal response curve by decreasing the slope of the response function, with higher values of b resulting in lower α (see Fig. 3c, bottom). Again, as expected from former considerations, this dependence is non-linear. The decrease in gain also results in a wider dynamic range. Note that the change in α is necessary to keep the rheobase constant so that non-linear adaptation changes both α and β. We note that having lower gain, the input range for which linear approximation of the response is valid is increased and this can be summarized by stating that the net effect of non-linear adaptation is to linearize the response curve.

3.3 Dynamics of a cortical column with adaptation

In the neocortex, neurons are arranged in complex microcircuits affecting their response properties and giving rise to internal dynamics. The cortical column (Horton and Adams 2005) is a good example of such canonical circuits encountered in the brain and has been shown to give rise to oscillatory rhythms and self-sustained irregular activity (Brunel and Hakim 1999; Vogels et al. 2005). If the fine connectivity details of such a column are still poorly understood (Binzegger et al. 2007), we can still understand the dynamical properties of a homogeneous balanced network of adapting neurons, arranged in a columnar fashion. To this aim, we simulated what we called a cortical column composed of two populations of 800 excitatory and 200 inhibitory neurons connected in a random manner with an averaged probability of connection of 5 % (Renart et al. 2010; Brunel 2000), and receiving external input from spike source generated through Poisson processes. Such a generic and classical network is often referred to in the literature as a random balanced network (Brunel 2000; Vreeswijk et al. 1996), and has been well used as a good model of in vivo activity in sensory cortices. More details could be found in the corresponding section of the Section 2. Schematically, the structure of the network is represented in Fig. 4a. Excitatory weights are fixed to g exc = 6nS and inhibitory weights are modified through the control parameter g such that g inh = g.g exc. Note that on Fig. 4a, insets shows the distribution of the indegree for all the different connections.

Structure and dynamics of the cortical column model a. Schematics of the wiring connections in the cortical column. Two populations of 800 excitatory (red) and 200 inhibitory (blue) neurons are reciprocally connected with a probability of 5 %. Excitatory cells are also stimulated by external inputs at a rate ν ext. Insets are showing the distribution of the indegree for all the different connections between populations involved in the column. b. Phase diagram as a function of external firing rate ν ext and inhibitory maximal conductance (when g is varied), without any adaptation. We can see four distinct regimes of activity. c. Typical spike rasters for the three non-silent regions of the phase diagram

In Fig. 4b, we show the phase diagram of such a cortical column without any adaptation when the external rate ν ext and the balance g between excitation and inhibition are varied. We can see that the dynamics of the cortical column can be maintained in an Asynchronous Irregular regime (AI, Section 2 for details on the classification) with low firing rate when external input is small enough, as already found (Brunel 2000). This large region is termed Asynchronous Irregular because neurons are firing irregularly (high coefficient of variation of their inter spikes intervals), and the network’s firing rate displays no clear oscillations. Similarly, we can see in the diagram a Synchronous Regular (SR) or a Synchronous Irregular (SI) regime. It is commonly assumed that the AI regime is a good candidate for describing cortical dynamics observed in vivo and it has been identified as the operating point of the brain with transient perturbation leading to amplified response with fast recovery to the resting operating point (Renart et al. 2010; Stimberg et al. 2009). Canonical raster plots of the three main dynamical regimes identified in the phase diagram are shown in Fig. 4c. In such AI regime, when input is increased, the dynamics of the network depends on the dominant polarity of the synaptic current. For an excitation dominated column, increasing input destabilizes the asynchronous irregular state so activity is amplified to a persistent saturated state with all neurons firing at their maximal frequency. For an inhibition dominated network, the destabilization of the irregular state through increased stimulation leads to oscillatory dynamics with neurons firing synchronously.

We can see what is the direct effect of the two previously discussed components of adaptation (linear v.s non-linear) in Fig. 5. As we can notice in Fig. 5a, when linear adaptation is turned on, the Synchronous Irregular region of the phase diagram tends to disappear, while the overall structure of the diagram is preserved in case of non-linear adaptation. In both cases, because the average firing rates in those regions is similar (data not shown), it is really the influence of single-cell response curves that is affecting the phase diagram. Linear adaptation, responsible for a shift in the response threshold is decreasing the synchronous activity, thus reducing the amount of correlations in the network.

3.4 Mean field description of the cortical column

To get a better understanding on how adaptation is impacting the phase diagram, we used the fact that the coarse-grained dynamics of the cortical column can be captured, at a macroscopic level, by describing the firing rates (r E , r I ) averaged over homogeneous excitatory and inhibitory neurons. Using a mean field approach, as in Tabak et al. (2000) and Giugliano et al. (2004), the evolution equation for the firing rate dynamics can be reduced to the Wilson-Cowan system:

For more details, see Section 2. To describe the dynamics, we keep the sigmoidal approximation discussed in the previous section for the response curve \(f_{A}(x)=(1+e^{-\alpha (\nu _{\mathrm {A}} -\beta )})^{-1}\). Beyond a fixed point attractor, this system is known to exhibit multistability and limit cycle. The transition among these regimes can be studied through a bifurcation analysis (see Section 2 for details about the related numerical tools). For this matter, as linear and non-linear adaptation were shown to affect the threshold β and the gain α of the response curves, these parameters are taken as bifurcation parameters in Fig. 6 to study the various possible instabilities.

Mean Field dynamic of the cortical column with adaptation a. Diagram showing bifurcations of codimension 1 and 2 as a function of threshold β and gain α of the excitatory response function. The stationary distribution for the activity of the excitatory population under weak stochastic perturbation is also depicted at various points close to the instability curve. b. Dynamics, as a function of time, of the excitatory population at various points in the phase space

Figure 6 depicts the distributions and the temporal traces of the activity for the excitatory sub population in the system under stochastic perturbation close to various bifurcation points (see Methods for a description of the numerical tools used for bifurcation analysis). Close to saddle-node points, the distribution is bimodal with a bias toward up or down state depending on which side of the bistable region is considered (low or high α). Similar bistability is observed between a limit cycle and a fixed point of high, low or moderate level of activity close to the Hopf curve. A prediction of the model is that if sufficient linear adaptation, affecting the threshold parameter β in the coarse-grained model, is at work in the excitatory cell dynamics, a column which would be initially oscillatory or bistable would be silenced to a low activity level in an abrupt fashion. The effect of non-linear adaptation, affecting the gain parameter α, is more subtle with possible transitions along the vertical axis from bistable regime in the upper cusp to various oscillatory regimes, changes in the oscillatory pattern or transition from oscillation to fixed point.

From Fig. 6 we see that the crossing of the Hopf curve is reached with a reasonable change of the threshold value β (related to linear adaptation) whereas a large change in gain α (related to nonlinear adaptation) is necessary to escape from the oscillatory region. This is consistent with previous simulations of spiking neurons where we observed that linear adaptation reduces greatly the synchronous irregular region of the diagram (see Fig. 5).

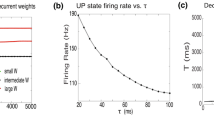

3.5 Dynamical response to external stimuli with or without adaptation

3.5.1 Oscillatory dynamics

To study the effect of adaptation on columnar dynamics modeled with spiking neurons (see Section 2), the network conductances were set so that the mean firing rate of the network is oscillatory when no adaptation is considered (a = 0, b = 0) and adaptation parameters were then varied from this setting. The measures of the activity of the excitatory sub population (firing rate, coefficient of variation of interspike) depending on those parameters are shown on the phase diagrams of Fig. 7a, b, c when the two components a and b of the adaptation are varied. As expected, when linear or non-linear adaptation is increased the mean firing rate activity is decreased but the effects of adaptation is different on the second order statistics for the dynamics at network level. The pairwise spike correlations in the network drop abruptly when linear adaptation is varied (see panel c) whereas much smoother transition is observed when non-linear adaptation is varied. Furthermore, when b is increased, the average firing rate in the column is oscillating at half the period of the original network. We can see on Fig. 7d, e, f examples of the activity in the three canonical regimes: without adaptation, with only linear adaptation (b = 0), or with only non-linear adaptation (a = 0).

Influence of adaptation on the columnar dynamics. a. Measures of the network activity depending on linear and non linear adaptation parameters (a, b). Lower point in the network is set to the parameters of purple sample from Fig. 4 d, e, f. Samples of network activity (spike raster and mean rate) in no adaptation, linear adaptation and non linear adaptation conditions

For a better grasp at the effects of adaptation on the dynamics of a column, we consider the time course of the activity when a stimulus is presented starting from quiescent state. The resulting responses are reported on Fig. 8. As expected from time averaged measures, after the stimulus was presented, the mean firing rate of the column oscillates when no adaptation is considered. Strong adaptation currents result in relaxation to a stationary firing rate. This relaxation occurs at the slow time scale imposed by the adaptation current for linear adaptation whereas it occurs much faster, within an oscillation cycle, for non-linear adaptation currents. To summarize, with subthreshold adaptation a, oscillatory fluctuations first decrease in amplitudes and then for higher values of a the column firing rate also decreases. In contrary, with suprathreshold adaptation b, both oscillatory fluctuations, oscillation frequency and mean firing rate of the columns are decreasing simultaneously.

Columnar dynamics under sustained Poissonian input at constant rate. a. Time course of the mean firing rate of of cortical column for various levels of adaptation currents coded in colors when linear adaptation only is varied (b = 0). The shaded areas represent two standard deviation around the mean. b. Firing rate for various levels of adaptation currents: solid line is the stationary mean and shaded areas represent two standard deviations. The dotted line is the peak response right after the stimulus is presented. c., d. Same as a., b. but when only non-linear adaptation is varied (a = 0)

We showed through theoretical arguments that effects of adaptation on the neuronal dynamics may be related to α and β parameters in the macroscopic model under the diffusion approximation and slow adaptation. The study of the 2 variables macroscopic model is then useful to interpret some aspects of the dynamics like oscillations. There are aspects of changes induced by adaptation which are not discussed in this way because they would go beyond the 2 variables mean field model we consider to discuss qualitative dynamics. For instance chaotic dynamics where found in a macroscopic model including short term plasticity in Cortes et al. (2013).

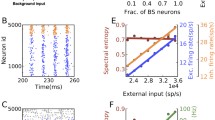

3.5.2 Reliability of spike patterns

In previous sections, we analyzed the stationary responses of a neuronal network with adaptation, and we distinguished the linear v.s the non-linear part of that mechanism, either with simulations of spiking neurons or with a macroscopic approximation. Now we consider the dynamical responses of the same cortical column, but when stimulated with a Poisson process at constant rate, or with one with rate modulated in a sinusoidal manner (see Methods). For both scenarios, we observed the effect of adaptation on the reproducibility of the responses, when the exact same realization of the input spike patterns were replayed to the exact same network. At each trial, initial conditions of the network were different (see Methods), and to visualize the mean response of the column, we computed and plotted the mean voltage over all N neurons within the column, l(t)=<V m (t)> N .

On Fig. 9a, b, we can see the temporal dynamics of the mean membrane potential, averaged over 40 repetitions, and when stimulated with constant Poisson input (Fig. 9a) or time-varying inhomogeneous Poisson input (Fig. 9b) (see Methods for a description of the input stimulus). In both constant and varying rate cases, the variance over trials of the responses are reduced by the adaptation currents. This can be viewed more clearly in the raster plots below Fig. 9a and b, showing the trial-to-trial spiking responses of 3 sampled neurons taken in the excitatory population of the column for the 40 repetitions of the same input.

Reliability of columnar dynamics under repeated stimulus. We consider neurons with no adaptation current in black, with only linear (a = 500nS) in red and with only nonlinear adaptation current (b = 1500nA). a Mean membrane potential ± std averaged over repetitions to a constant Poisson input at 850Hz, without any adaptation (black), with linear only (red), or non-linear only (green). Below are the trial-to-trial raster plots of three representative cells in the column each spiking over 40 repetitions, in those three conditions. b. Same as in a. but in response to an Poisson process with rate varying as a sinus during a period of 200 ms (see Methods). c. Measures of the Coefficient of Variation (CV) of the mean membrane potential in the period for the 40 repetitions of the stimulus averaged across 10 realizations of the network. The measures was computed for constant (solid) or varying (dashed) input rate as a (red) or b (green) were varied. d. Normalized pairwise cross-correlations between repeated response of a neuron, averaged across 100 non-silent cells and over 10 realizations. Line and color codes are same as in c

To quantify this increase in reliability between trials, we used two measures. The first one is the CV of the mean voltage averaged over all N e neurons within the column. We can see on Fig. 9c that this measure is affected similarly by both adaptation currents in the case of the stimulation at constant rate (dashed lines), while it has a minimum at a finite value of nonlinear adaptation current b in the case of a temporally varying stimulation (solid green line). For high values of a, the CV is almost 0, meaning that the reliability is very high. To rule out the fact that this will depend on the average activity, we also computed the normalized averaged cross-correlation coefficient within trials and among neurons spike trains as described in Methods (see Fig. 9d). On Fig. 9d, we can see similar trends as for the average membrane potential: the normalized correlation coefficient is close to 1 for high values of linear adaptation (red curves) and there is an optimal value of b at which maximal reliability is achieved with time-varying input rate.

4 Discussion

In this paper, we analyzed the effects of adaptation in the adaptive exponential integrate-and-fire neuron model, distinguishing linear and non-linear mechanisms, related biologically to voltage-gated and calcium-gated channels. Using mathematical observations and comparisons with simulations, we showed how those two distinct components could lead to different changes of the firing rate response curve for this particular neuron model. In single-cell simulations, we found that linear adaptation affects mostly the threshold at which a neuron starts to fire, while non-linear adaptation tends to lower the slope of the response curve. Extending those observations to cortical networks, we studied the role of adaptation onto the dynamics of a cortical column (a so-called random balanced network) with an activity similar to what can be observed in vivo. We found that linear adaptation introduces a switch from cortical oscillations to a fixed point of stationary low firing rate while non-linear adaptation preserves cortical oscillations but shifts their frequency to lower values. These are thus two possible ways to modulate synchrony in a neuronal network.

From a functional point of view, we showed that the dynamic range of the response of a cortical column is increased when non linear adaptation currents are included and that there is a shift of the threshold and response to lower values when linear adaptation current is included. These aspects may be important to understand the contrast invariant computations at the neuronal level and predictive aspects of the neuronal response (Deneve 2008; Boerlin et al. 2013). We also demonstrate that when oscillatory dynamics arise in a cortical column, both adaptation currents attenuate these oscillations but in different manners. Our study thus sheds lights on the possible ways to modulate oscillatory dynamics with slow currents which is of crucial interest for cognitive neuroscience (Buzsáki 2010). Moreover, we found that adaptation was also able to greatly increase the reliability of the neuronal responses and as observed in vitro (Mainen and Sejnowski 1995) and in vivo (Haider et al. 2010), responses are reliable on a trial-to-trial basis. The linear part of the adaptation mechanism used in the integrate-and-fire model considered here was the one mostly involved in this process. Because this linear adaptation is related to voltage-gated channels, we can establish a link between the temporal precision of the responses and the distribution of those channels in neurons. Interestingly, it is known that the repartition of such channels can also be activity-dependent (Lu et al. 2004), so neurons may have mechanisms to adapt their precision as a function of their inputs.

Adaptation is an ubiquitous phenomena in the brain that can spawn multiple time scales: from time constants of several minutes or even hours, it has been shown to be crucial for homeostasis and stability in neuronal network. In this work, we investigated only adaptation mechanisms in the order of hundreds of milliseconds, so relatively close to the membrane time constant. This form of adaptation is known to rely on the kinetics of the voltage or calcium gated channels, and for a more in depth knowledge, see Marder (2012). Therefore, our study focused only on dynamical responses and equilibrium that could be reached within those time scales. Results are observed for random balanced networks with sparse connectivity (Vogels et al. 2005), and it is likely that differences may be found in denser networks, or when the balance between excitatory and inhibitory conductances is reduced (Vreeswijk et al. 1996; Ostojic 2014). All together, unravelling the effect of adaptation in spiking networks is crucial to understand the computation that can be performed by such dynamical systems. Functionally, one could hypothesize that linear (sub-threshold) adaptation, responsible for a shift in the threshold of the response curve is useful to implement contrast invariant responses, while the change in the slope of the response curve induced by non-linear (supra-threshold) adaptation increases the dynamic range of the neuron and softly modulates the oscillatory dynamics. This findings remains to be tested in vivo.

References

Andrade, R. (2011). Serotonergic regulation of neuronal excitability in the prefrontal cortex. Neuropharmacology, 61(3), 382–6.

Benda, J., & Herz, A.V.M. (2003). A universal model for spike-frequency adaptation. Neural Computation, 15(11), 2523–2564.

Benda, J., Maler, L., & Longtin, A. (2010). Linear versus nonlinear signal transmission in neuron models with adaptation currents or dynamic thresholds. Journal of Neurophysiology, 104, 2806–2820.

Benucci, A., Saleem, A.B., & Carandini, M. (2013). Adaptation maintains population homeostasis in primary visual cortex. Nature Neuroscience, 16(6), 724–9.

Binzegger, T., Douglas, R.J., & Martin, K.A.C. (2007). Stereotypical bouton clustering of individual neurons in cat primary visual cortex. Journal of Neuroscience, 27(45), 12242–54.

Boerlin, M., Machens, C.K., & Denève, S. (2013). Predictive coding of dynamical variables in balanced spiking networks. PLoS Computational Biology, 9.

Brette, R., & Gerstner, W. (2005). Adaptive exponential integrate-and-fire model as an effective description of neuronal activity. Journal of Neurophysiology, 94(5), 3637–42.

Brunel, N, & Hakim, V (1999). Fast global oscillations in networks of integrate-and-fire neurons with low firing rates. Neural Computation, 11(7), 1621–1671.

Brunel, N. (2000). Dynamics of sparsely connected networks of excitatory and inhibitory spiking neurons. Journal of Computational Neuroscience, 8(8), 183–208.

Buzsáki, G. (2010). Neural syntax: cell assemblies, synapsembles, and readers. Neuron, 4;68(3), 362–85.

Carandini, M., & Ferster, D. (1997). A tonic hyperpolarization underlying contrast adaptation in cat visual cortex. Science, 276(5314), 949–52.

Clewley, R. (2012). Hybrid models and biological model reduction with PyDSTool. PLoS Computational Biology, 8.

Cortes, J.M., Desroches, M., Rodrigues, S., Veltz, R., a Muñoz, M., & Sejnowski, T.J. (2013). Short-term synaptic plasticity in the deterministic Tsodyks-Markram model leads to unpredictable network dynamics. Proceedings of the National Academy of Sciences of the United States of America, 110, 16610–5.

Davison, A., Brüderle, D., Eppler, J., Kremkow, J., Muller, E., Pecevski, D., Perrinet, L., & Yger, P. (2008). PyNN: a common interface for neuronal network simulators. Frontiers in Neuroinformatics, 2, 11.

Deemyad, T., Kroeger, J., & Chacron, M.J. (2012). Sub- and suprathreshold adaptation currents have opposite effects on frequency tuning. The Journal of Physiology, 590, 4839–4858.

Deneve, S. (2008). Bayesian spiking neurons I: inference. Neural Computation, 20, 91–117.

Destexhe, A., Rudolph, M., Fellous, J.M., & Sejnowski, T.J. (2001). Fluctuating synaptic conductances recreate in vivo-like activity in neocortical neurons. Neuroscience, 107(1), 13–24.

Ermentrout, B. (1998). Linearization of F-I curves by adaptation. Neural Computation, 10, 1721–1729.

Ermentrout, B., Pascal, M., & Gutkin, B. (2001). The effects of spike frequency adaptation and negative feedback on the synchronization of neural oscillators. Neural Computation, 13, 1285–1310.

Bard Ermentrout, G., & Terman, D.H. (2010). Mathematical foundations of neuroscience. Springer.

Farkhooi, F., Muller, E., & Nawrot, M.P. (2011). Adaptation reduces variability of the neuronal population code. Physical Review E, 83.

Gewaltig, M.-O., & Diesmann, M. (2007). NEST (NEural Simulation Tool).

Gigante, G., Del Giudice, P., & Mattia, M. (2007). Frequency-dependent response properties of adapting spiking neurons. Mathematical Biosciences, 207, 336–351.

Gigante, G., Mattia, M., & Del Giudice, P. (2007). Diverse population-bursting modes of adapting spiking neurons. Physical Review Letters, 98.

Giugliano, M., Darbon, P., Arsiero, M., Lüscher, H.-R., & Streit, J. (2004). Single-neuron discharge properties and network activity in dissociated cultures of neocortex. Journal of Neurophysiology, 92, 977–996.

Grillner, S. (1997). Ion channels and locomotion. Science, 278(5340), 1087–1088.

Gusnard, D.A., & Raichle, M.E. (2001). Searching for a baseline: functional imaging and the resting human brain. Nature Reviews Neuroscience, 2(10), 685–94.

Haider, B., Krause, M.R., Duque, A., Yu, Y., Touryan, J., Mazer, J.A., & McCormick, D.A. (2010). Synaptic and network mechanisms of sparse and reliable visual cortical activity during nonclassical receptive field stimulation. Neuron, 65(1), 107–21.

Hertäg, L., Durstewitz, D., & Brunel, N. (2014). Analytical approximations of the firing rate of an adaptive exponential integrate-and-fire neuron in the presence of synaptic noise. Frontiers in Computational Neuroscience, 8, 116.

Higgs, M.H., & Spain, W.J. (2011). Kv1 channels control spike threshold dynamics and spike timing in cortical pyramidal neurones. Journal of Physiology, 589(21), 5125–5142.

Horton, J.C., & Adams, D.L. (2005). The cortical column: a structure without a function. Philosophical Transactions Of The Royal Society Of London. Series B, Biological Sciences, 360(1456), 837–62.

Izhikevich, E.M. (2001). Resonate-and-fire neurons. Neural networks: The Official Journal of the International Neural Network Society, 14(6–7), 883–94.

Kuznetsov, Y.A. (1998). Elements of applied bifurcation theory(p. 591). Springer.

La Camera, G., Rauch, A., Lüscher, H.-R., Senn, W., & Fusi, S. (2004). Minimal models of adapted neuronal response to in vivo-like input currents. Neural Computation, 16, 2101–2124.

Ladenbauer, J., Augustin, M., & Obermayer, K. (2014). How adaptation currents change threshold, gain and variability of neuronal spiking. Journal of Neurophysiology, 111, 939–953.

Lánský, P., & Lánská, V. (1987). Diffusion approximation of the neuronal model with synaptic reversal potentials. Biological Cybernetics, 56, 19–26.

Lu, Y., Monsivais, P., Tempel, B.L., & Rubel, E.W. (2004). Activity-Dependent regulation of the potassium channel subunits Kv1.1 and Kv3.1. Journal of Comparative Neurology, 470, 93–106.

Mainen, Z.F., & Sejnowski, T.J. (1995). Reliability of spike timing in neocortical neurons. Science, 268, 1503–1506.

Marder, E. (2012). Neuromodulation of neuronal circuits: back to the future. Neuron, 76(1), 1–11.

Mattia, M., & Sanchez-Vives, M.V. (2012). Exploring the spectrum of dynamical regimes and timescales in spontaneous cortical activity. Cognitive Neurodynamics, 6, 239–250.

Nataraj, K., Le Roux, N., Nahmani, M., Lefort, S., & Turrigiano, G. (2010). Visual deprivation suppresses L5 pyramidal neuron excitability by preventing the induction of intrinsic plasticity. Neuron, 68(4), 750–62.

Nowak, L.G., Azouz, R., Sanchez-Vives, M.V., Gray, C.M., & McCormick, D.A. (2003). Electrophysiological classes of cat primary visual cortical neurons in vivo as revealed by quantitative analyses. Journal of Neurophysiology, 89(3), 1541–66.

Ostojic, S. (2014). Two types of asynchronous activity in networks of excitatory and inhibitory spiking neurons. Nature Neuroscience, (October 2013).

Ostojic, S., & Brunel, N. (2011). From spiking neuron models to linear-nonlinear models. PLoS Computational Biology, 7(1), e1001056.

Papoulis, A. (1965). Probability, random variables, and stochastic processes. McGraw-Hill Kogakusha.

Pospischil, M., Piwkowska, Z., Bal, T., & Alain, D. (2011). Comparison of different neuron models to conductance-based post-stimulus time histograms obtained in cortical pyramidal cells using dynamic-clamp in vitro. Biological Cybernetics, 105(2), 167–80.

Pozzorini, C., Naud, R., Mensi, S., & Gerstner, W. (2013). Temporal whitening by power-law adaptation in neocortical neurons. Nature Neuroscience, 16(7), 942–8.

Prescott, S.A., & Sejnowski, T.J. (2008). Spike-rate coding and spike-time coding are affected oppositely by different adaptation mechanisms. Journal of Neuroscience, 28, 13649–13661.

Renart, A., de la Rocha, J., Bartho, P., Hollender, L., Parga, N., Reyes, A., & Harris, K.D. (2010). The asynchronous state in cortical circuits. Science, 327(5965), 587–90.

Ricciardi, L.M., & Sacerdote, L. (1979). The Ornstein-Uhlenbeck process as a model for neuronal activity. I. Mean and variance of the firing time. Biological Cybernetics, 35(1), 1–9.

Richardson, M.J.E., & Gerstner, W. (2005). Synaptic shot noise and conductance fluctuations affect the membrane voltage with equal significance. Neural Computation, 17(4), 923–947.

Rossant, C., Goodman, D.F.M., Fontaine, B., Platkiewicz, J., Magnusson, A.K., & Brette, R. (2011). Fitting neuron models to spike trains. Frontiers in Neuroscience, 5, 9.

Sacerdote, L., & Giraudo, M.T. (2013). Stochastic integrate and fire models: a review on mathematical methods and their applications. Stochastic Biomathematical Models,Lecture Notes in Mathematics (Vol. 2058).

Schreiber, S., Fellous, J.-M., Tiesinga, P., & Sejnowski, T.J. (2004). Influence of ionic conductances on spike timing reliability of cortical neurons for suprathreshold rhythmic inputs. Journal of Neurophysiology, 91, 194–205.

Stimberg, M., Wimmer, K., Martin, R., Schwabe, L., Mariño, J., Schummers, J., Lyon, D.C., Sur, M., & Obermayer, K. (2009). The operating regime of local computations in primary visual cortex. Cerebral Cortex, 19(9), 2166–2180.

Tabak, J., Senn, W., O’Donovan, M.J., & Rinzel, J. (2000). Modeling of spontaneous activity in developing spinal cord using activity-dependent depression in an excitatory network. Journal of Neuroscience, 20, 3041–3056.

Touboul, J., & Brette, R. (2008). Dynamics and bifurcations of the adaptive exponential integrate-and-fire model. Biological Cybernetics, 99(4–5), 319–334.

Treves, A. (1993). Mean-field analysis of neuronal spike dynamics. Network, 4, 259–284.

Tsodyks, M., Uziel, A., & Markram, H. (2000). Synchrony generation in recurrent networks with frequency-dependent synapses. Journal of Neuroscience, 20, RC50.

Turrigiano, G.G., & Nelson, S.B. (2004). Homeostatic plasticity in the developing nervous system. Nature Reviews. Neuroscience, 5(2), 97–107.

van Vreeswijk, C., & Hansel, D. (2001). Patterns of synchrony in neural networks with spike adaptation. Neural Computation, 13(5), 959–992.

Vogels, T.P., Rajan, K., & Abbott, L.F. (2005). Neural network dynamics. Annual Review of Neuroscience, 28, 357–376.

Vreeswijk, C.v., Sompolinsky, H., & van Vreeswijk, C. (1996). Chaos in neuronal networks with balanced excitatory and inhibitory activity. Science, 274(5293), 1724–1726.

Wang, X.J. (1998). Calcium coding and adaptive temporal computation in cortical pyramidal neurons. Journal of Neurophysiology, 79, 1549–1566.

Wilson, H.R. (2003). Computational evidence for a rivalry hierarchy in vision. Proceedings of the National Academy of Sciences of the United States of America, 100, 14499–14503.

Acknowledgments

This work is supported by the Japan Society for the Promotion of Science. This work is also supported in part by the Platform for Dynamic Approaches to Living System from the Ministry of Education, Culture, Sports, Science, and Technology of Japan. P.Y was supported by the Fondation pour la Recherche Médicale (FRM).

Conflict of interests

The authors declare that they have no conflict of interest.

Author information

Authors and Affiliations

Corresponding author

Additional information

Action Editor: Tim Gollisch

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Colliaux, D., Yger, P. & Kaneko, K. Impact of sub and supra-threshold adaptation currents in networks of spiking neurons. J Comput Neurosci 39, 255–270 (2015). https://doi.org/10.1007/s10827-015-0575-3

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10827-015-0575-3