Abstract

Context

Hackathons have become popular events for teams to collaborate on projects and develop software prototypes. Most existing research focuses on activities during an event with limited attention to the evolution of the hackathon code.

Objective

We aim to understand the evolution of code used in and created during hackathon events, with a particular focus on code blobs: how frequently hackathon teams reuse pre-existing code, how much new code they develop, whether that code gets reused afterwards, and what factors affect reuse.

Method

We collected information about 22,183 hackathon projects from Devpost and obtained related code blobs, authors, project characteristics, original author, code creation time, language, and size information from World of Code. We tracked the reuse of code blobs by identifying all commits containing blobs created during hackathons and identifying all projects that contain those commits. To gain a deeper understanding of hackathon code evolution, we also conducted a series of surveys, which we sent to hackathon participants whose code was reused, participants whose code was not reused, and developers who reused some hackathon code.

Result

9.14% of the code blobs in hackathon repositories and 8% of the lines of code (LOC) are created during hackathons and around a third of the hackathon code gets reused in other projects by both blob count and LOC. The number of associated technologies and the number of participants in hackathons increase reuse probability.

Conclusion

Our results demonstrate that hackathons are not always the “one-off” events that common knowledge suggests, and our study can serve as a starting point for further research in this area.


1 Introduction

Hackathons are time-bounded events during which individuals form – often ad-hoc – teams and engage in intensive collaboration to complete a project that is of interest to them (Pe-Than et al. 2019). They have become a popular form of intense collaboration with the largest collegiate hackathon league alone reporting that their events attract more than 65,000 participants each year.Footnote 1 The success of hackathons can at least partially be attributed to them being perceived to foster learning (Porras et al. 2019; Fowler 2016; Nandi and Mandernach 2016) and community engagement (Nolte et al. 2020b; Huppenkothen et al. 2018; Taylor et al. 2018; Möller et al. 2014) and tackle civic, environmental and public health issues (Hope et al. 2019; Taylor et al. 2018; Baccarne et al. 2014) which led to them consequently being adopted in various domains including (higher) education (Porras et al. 2019; Gama et al. 2018; Kienzler and Fontanesi 2017), (online) communities (Huppenkothen et al. 2018; Taylor and Clarke 2018; Busby et al. 2016; Craddock et al. 2016), entrepreneurship (Cobham et al. 2017; Nolte 2019), corporations (Pe-Than et al. 2019; Nolte et al. 2018; Komssi et al. 2015; Rosell et al. 2014), and others.

Most hackathon projects focus on creating a prototype that can be presented at the end of an event (Medina Angarita and Nolte 2020). This prototype often takes the form of a piece of software. The creation of software code can, in fact, be considered as one of the main motivations for organizers to run a hackathon event. Scientific and open source communities, in particular, organize such events with the aim of expanding their code base (Pe-Than and Herbsleb 2019; Stoltzfus et al. 2017). It thus appears surprising that the evolution of the code used and developed during a hackathon has not been studied yet, as revealed by a review of existing literature.

In this work, which is an extension of our previous work (Imam et al. 2021), we study the evolution of the code used and created by the hackathon team members by focusing on its origin and subsequent reuse. Similar to the previous work, we focus exclusively on the code blobsFootnote 2 in this study, which are taken as an abstraction for the code itself. It is also worth mentioning that when we talk about code reuse in the context of our study, we are referring to the exact copying of a code blob so that the SHA1 value remains the same, i.e. identical code segments with no changes in comments, layout, or whitespace.
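To make this blob-level definition of reuse concrete, the following minimal sketch computes the SHA1 that Git assigns to a blob (the hash of a `blob <size>\0` header followed by the file content). The function name and the sample contents are illustrative assumptions and are not taken from the study's tooling.

```python
import hashlib


def git_blob_sha1(content: bytes) -> str:
    """Return the SHA1 that Git assigns to a blob with this exact content."""
    # Git hashes a short header ("blob", the byte length, a NUL) plus the content.
    header = b"blob " + str(len(content)).encode("ascii") + b"\0"
    return hashlib.sha1(header + content).hexdigest()


# Illustrative contents (hypothetical, not from the dataset).
original = b"print('hello')\n"
copied = b"print('hello')\n"          # byte-for-byte copy
reformatted = b"print( 'hello' )\n"   # only whitespace differs

same = git_blob_sha1(original) == git_blob_sha1(copied)
changed = git_blob_sha1(original) == git_blob_sha1(reformatted)
print(same, changed)
```

Under this definition, the byte-for-byte copy counts as reuse, while the version that differs only in whitespace is treated as a different blob.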

First, we study from where the code blobs originate: While teams will certainly develop original code during a hackathon, it can be expected that they will also utilize existing (open source) code as well as code that they might have created themselves prior to the event.

Second, to understand the impact of hackathon code, i.e. code created during a hackathon event by the hackathon team in the hackathon project repository, we aim to study whether and how the code blobs propagate after the event has ended. There are studies on project continuation after an event has ended (Nolte et al. 2018, 2020a). These studies, however, mainly focus on the continuation of a hackathon project in a corporate context (Nolte et al. 2018) and on antecedents of continuous development activity in the same repository that was utilized during the hackathon (Nolte et al. 2020a). The question of where code blobs that have been developed during a hackathon potentially get reused outside of the context of the original hackathon project has not been sufficiently addressed.

Moreover, we aim to understand what factors might influence the reuse of hackathon code blobs, which can be useful for hackathon organizers and participants to foster the impact of the hackathon projects they organize/participate in. These factors would also be of interest to the open source community in general in order to effectively tap into the potential of hackathons as a source of new software code creation.

To address the above-mentioned goals, we conducted an archival analysis of the source code utilized and developed in the context of 22,183 hackathon projects that were listed in the hackathon database Devpost.Footnote 3 To track the origin of the code blobs that were used and developed by each hackathon project and study their reuse after an event has ended, we used the open source database World of Code (Ma et al. 2019, 2021), which allows us to track code (blob) usage between repositories. Overall, we looked at over 8.5M blobs, over 3M of which were code blobs, as identified with the help of the GitHub linguistFootnote 4 tool. In order to further test the validity of the findings of the data-driven study, we conducted surveys of 178 hackathon participants whose code was reused, 120 hackathon participants whose code was not reused, and 118 OSS developers who reused some code first used in a hackathon.

Our findings indicate that around 9.14% of the code blobs in hackathon projects are created during an event (8% if we consider the lines of code), which is notable considering the time and team member constraints. Teams tend to reuse a lot of existing code blobs, primarily in the form of packages/frameworks. Many of the projects we studied focus on front-end technologies – JavaScript in particular – which appears reasonable because teams often have to present prototypes at the end of an event, which lends itself to UI design. The largest share (45.6% overall) of the hackathon participants indicated (through surveys) that they wrote the code themselves during the hackathon, and a number of them also indicated that they found the code on the web or wrote it together with another participant. Approximately a third of code blobs created during events get reused in other projects, both by the number of blobs and the lines of code, with most being reused in small projects. Most developers who reused some hackathon code (blobs) rated (through the survey) the code to be easy to use. The characteristics of the blobs that were reused, in terms of size (lines of code) and whether the code is template code (see Section 2 for definition) or not, were found to be significantly different from those of the code blobs that were not reused. In most cases, the hackathon code creators did little to foster the reuse of their code and were not aware whether it was being reused. Moreover, though they expressed satisfaction with the project, they had little intention of continuing to work on it. The number of associated technologies and the number of participants in a project were found to increase the code blobs’ reuse probability, and so did including an Open Source license in the project repository.

In summary, we make the following contributions in the paper: we present an account of code (blob) reuse both by hackathon projects and of the code (blobs) generated during hackathons based on a large-scale study of 22,183 hackathon projects and 1,368,419 projects that reused the hackathon code blobs. We tracked the origins of the code blobs used in hackathon projects, in terms of when they were created and by whom, and also its reuse after an event. We gained further insight into the evolution of the code (blobs) by conducting a series of surveys and also identified a number of project characteristics that can affect hackathon code blob reuse.

The replication package for our study is available at Imam and Dey (2021a), and the processed dataset containing the SHA1 hash values for the final list of blobs under consideration, the ID of the hackathon (in Devpost) the blob is associated with, the GitHub project/repository name (in owner/repository format), and the various attributes of the blobs we identified, viz., when the blob was first created and by whom, whether the blob was reused afterwards, and if so, in what type of project, and if the blob is a “template code” blob or not, is available at https://doi.org/10.5281/zenodo.6578707 (Mahmoud et al. 2022).

2 Research Questions

As mentioned in Section 1, the goal of this study is to understand the evolution of hackathon code blobs and identify factors that affect code blob reuse for these projects.

Our first research question thus addresses the origin of hackathon code blobs:

- RQ1. Where do the code blobs used in hackathon projects originate from?

Delving deeper into this question, we aim to understand how many of the code blobs used in a hackathon project were actually created before the event and reused in the project, how many of the code blobs were developed during the hackathon, and, since the projects sometimes continue even after the official end date of the hackathon, how many of the code blobs were created after the event. This leads us to the sub-question:

- RQ1a. When were the code blobs created?

We also aim to understand how many of the code blobs in a hackathon project repository are created by one of the participants, how frequently they reused code blobs created by someone they worked with earlier, and how much of the code was created by someone else, leading us to the sub-question:

- RQ1b. Who were the original creators of the code blobs?

A related question that can lead to a deeper understanding of the hackathon projects and the code is related to the source of the code blobs - was it written by the project participants during the hackathon, did they reuse code blobs from some other projects/from online communities like StackOverflow, or did the code blobs have a different origin? This leads us to the following sub-question:

- RQ1c. Where did the code blobs originate from?

We also wanted to examine which programming languages are predominantly used in various hackathon projects and the distribution of languages in the code created before, during, and after the projects:

- RQ1d. What are the languages of hackathon code created before, during, and after the event?

In addition, to quantify “how much” code in various hackathon projects was created before, during, or after the hackathon, we also want to measure the sizes of the code blobs, leading us to the sub-question:

- RQ1e. What are the sizes of code blobs created before, during, and after the hackathons?

Finally, we focused on the fact that many developers use boilerplate code to initialize their repositories, and a good portion of the code in many repositories is created by automated tools or scripts, or is part of libraries or frameworks. The code blobs related to such code are generally different from code written/reused by developers because these code blobs are meant to be used “as-is”, in contrast to code blobs that a developer in a project might consciously decide to reuse “as-is”. We call such code (blobs) “template code”, which we define as: “ready-to-use” code that is generally intended to be used “as-is”, e.g. code (blobs) that is part of a library/framework, or commonly used boilerplate code, such as boilerplate code (blobs) used while initializing a project or blobs representing the contents of placeholder files. We decided to differentiate such “template code” from code written/reused by human developers because “template code” is developed with the intention of being used “as-is” while non-template code is not, leading us to the sub-question:

- RQ1f. What fraction of code blobs created before, during, or after the hackathons can be categorized as template code?

Our second research question focuses on the aspect of hackathon code (blob) reuse. As noted in Section 1, existing studies do not address the question of whether and where hackathon code blobs get reused after an event has ended. However, knowing the answer to this question would be crucial for understanding the impact of hackathons on the larger open source community. Some might perceive hackathons as one-off events where people gather and create some code that is never used again, while in fact they might have an impact on the wider scene of software development and create something of value that transcends individual events. This leads us to also ask the following second research question:

- RQ2. What happens to hackathon code (blobs) after the event?

To address this question, we started by looking at what fraction of the hackathon code blobs get reused and the characteristics of the reused code blobs in terms of size, purpose (whether it is a template code or not), programming language the code was written in, and ease-of-use (based on responses from developers who reused the code blobs). We focused on these factors because previous studies (see Section 3.2) suggested that these are important characteristics of the code blobs that get reused. So our first sub-question is:

- RQ2a. How much hackathon code (blobs) gets reused and what are the distributions for size, purpose, language, and ease-of-use of the reused code blobs?

We also wanted to know whether the characteristics of the reused code blobs, as mentioned in the last sub-question, differ significantly from those of the code blobs that did not get reused, leading to the second sub-question:

- RQ2b. Are the characteristics of the reused hackathon code blobs significantly different from those of the blobs that do not get reused?

In terms of assessing the impact of the code blobs that were reused, arguably, some code blob that is reused in a large project reaches and ultimately impacts more people, and thus can be perceived to have more impact on and be more useful to the software development community overall, than code blobs reused in a small project, so we wanted to explore how frequently the hackathon code blobs get reused in small/medium/large OSS projects:

- RQ2c. What are the sizes of the projects that reuse hackathon code blobs?

Next, we wanted to explore if the hackathon project members who created the code somehow foster code reuse, examining if they themselves reused the code (blobs) or shared the code afterwards:

- RQ2d. How commonly do the hackathon code owners foster code (blob) reuse by actively reusing/sharing the code/using a license in the repository that allows code reuse?

We also wanted to examine if the hackathon code owners are aware that some of the code they created during a hackathon might be getting reused and if they feel that their code is useful for others, which ties to the aspects of transparency and perception of the participants:

- RQ2e. Are the hackathon code authors aware of code reuse/feel their code might be useful to others?

Finally, though not a form of direct code reuse, we wanted to know if the ideas developed in the hackathon projects are ever reused because this is another form of continuation of the hackathon project which is often hard to capture from purely data-driven studies:

- RQ2f. Do the ideas that arose from a hackathon project get reused?

Our third research question focuses on understanding how different characteristics of a hackathon project can influence the probability of hackathon code (blob) reuse.Footnote 5 While code reuse in Open Source Software is a topic of much interest, there are only a few studies covering this topic. Moreover, existing studies, e.g. Haefliger et al. (2008), Kawamitsu et al. (2014), Feitosa et al. (2020), and von Krogh et al. (2005) only focus on between 10 and a few hundred projects. For this study, we examined 22,183 hackathon projects, which makes it reasonable to assume that insights from this study – despite them being drawn from hackathon projects only and being focused on reuse at the blob level (i.e. exact copy-paste of code) – would add to the existing knowledge about code reuse in general. Thus, we present this question as:

- RQ3. How do certain project characteristics influence hackathon code (blob) reuse?

Related to this research question, we formed the following hypotheses that focus on aspects that can reasonably be expected to foster code (blob) reuse:

H1 Familiarity

Projects that are attempted by larger teams will have a higher chance of their code being reused, simply because more people are familiar with the code. Moreover, hackathon events that are co-located offer participants more possibilities for interaction which can contribute to a better understanding of each other’s code, higher code quality, and consequently foster code reuse.

H2 Prolificness

Code from projects involving many different technologies is more likely to be reused, since: (a) they tend to have more general-purpose code than more focused projects, which affects code reuse as discussed by Mockus (2007), and (b) they have a cross-language appeal, opening more possibilities for reuse. Similarly, projects with a larger amount of code created before and during the event (we cannot use the code created after the event, to prevent data leakage) should have a higher chance of code reuse by virtue of simply having more code.

H3 Composition

The project composition, i.e. how many blobs in a project are actually code blobs, and how many are non-code blobs, e.g., data, documentation, or others could be another factor that might influence code (blob) reuse. This relationship is likely to be non-linear though, e.g., since we are considering code reuse, a higher percentage of code in the project should increase the probability of reuse, but only up to a certain point, since code from a repository containing only code and no documentation is not very likely to be reused.

H4 Permission

Last but not least, we also focus on whether the code (blob) is actually shared with an Open Source License that allows it to be reused because, if the hackathon project repository is not licensed to be reused, the chance of that code (blob) being used elsewhere should be very low.

3 Background

In this section we will situate our work in the context of prior research on hackathon code (Section 3.1) before discussing existing studies on code reuse (Section 3.2).

3.1 Research on Hackathon Code

The rise in popularity of hackathon events has led to an increased interest in studying them (Falk Olesen and Halskov 2020). Current research, however, mainly focuses on the event itself, studying how to attract participants (Taylor and Clarke 2018; Hou and Wang 2017), how to engage diverse audiences (Paganini and Gama 2020; Hope et al. 2019; Filippova et al. 2017), how to integrate newcomers (Nolte et al. 2020b), how teams self-organize (Trainer et al. 2016) and how to run hackathons in specific contexts (Möller et al. 2014; Pe-Than et al. 2019; Porras et al. 2019). These studies acknowledge the project that teams work on as an important aspect. The question of where the software code that teams utilize for their project comes from and where it potentially gets reused after an event has not been a strong focus though.

There are also studies that focus on the continuation of software projects after an event has ended (Lapp et al. 2007; Cobham et al. 2017; Nolte et al. 2018; Ciaghi et al. 2016). These studies however mainly discuss how activities of a team during, before, and after a hackathon can foster project continuation (Nolte et al. 2018), how hackathon projects fit to existing projects (Lapp et al. 2007), and the influence of involving stakeholders when planning a hackathon project on its continuation (Cobham et al. 2017; Ciaghi et al. 2016). They do not specifically focus on the code that is being developed as part of a hackathon project.

A few studies have also considered the code that teams develop during a hackathon (Nolte et al. 2020a; Busby et al. 2016). These studies, however, mainly focus on code availability after an event (Busby et al. 2016) or on how activity before and after an event within the same repository that a team utilized during the hackathon can affect reuse (Nolte et al. 2020a). The question of whether and to what extent teams utilize existing code and whether and where the code that they develop during a hackathon gets reused aside from this specific repository has not been addressed.

3.2 Code Reuse

Code reuse has been a topic of interest and is generally perceived to foster developer effectiveness and efficiency and to reduce development costs (Sojer and Henkel 2010; Haefliger et al. 2008; Feitosa et al. 2020). Existing work so far mainly focuses on the relationship between code reuse and certain developer traits (Haefliger et al. 2008; Sojer and Henkel 2010; von Krogh et al. 2005) or team and project characteristics such as team size, developer experience, and project size (Abdalkareem et al. 2017). Moreover, the aforementioned findings are mainly based on surveys among developers, thus covering their perception rather than actual reuse behavior. In contrast, we aim to study actual code reuse behavior.

There is also existing work that focuses on studying the reuse of the code itself. These, however, are often small scale studies of a few projects (Kawamitsu et al. 2014; Xu et al. 2020) focusing on aspects such as automatically tracking reuse between two projects (Kawamitsu et al. 2014) and identifying reasons why developers might choose reuse over re-implementation (Xu et al. 2020). In contrast, our aim is to study how the code created during a hackathon evolves i.e. where it comes from and whether and where it gets reused.

Large scale studies on code reuse have been scarce. The few existing studies often focus on code dependencies (German 2007) or on technical debt induced by reuse (Feitosa et al. 2020), neither of which is a strong focus for us because our aim is rather to study where hackathon code gets reused. There are studies that discuss the reuse of code on a larger scale (Mockus 2007) and show that it is mainly code from large established open source projects that gets reused, while we aim to study reuse of code that has been developed by a small group of people during a short-term intensive coding event.

This work is an extension of our previous work (Imam et al. 2021; Imam and Dey 2021b) where we conducted a study on the evolution of hackathon-related code, specifically, how much the hackathon teams rely on pre-existing code and how much new code they develop during a hackathon. Moreover, we also focused on understanding if and where that code (blob) gets reused and which project-related aspects predict code (blob) reuse. However, that work did not differentiate between template code that is intended to be reused “as-is” and code that was written by developers with no such intention. Moreover, it only looked at the number of blobs when considering “how much” code was reused, not accounting for the sizes of the code blobs, and also did not investigate the effect of having an Open Source license in the hackathon repository on code (blob) reuse. It did not consider the perception of individuals that created and reused hackathon code either. This study, therefore, makes a novel contribution by providing a more in-depth examination of the creation and reuse of hackathon-related code blobs through additional quantitative and qualitative analysis.

4 Methodology

In this section, we describe the methodology followed in this study. As mentioned in Section 1, we conducted a data-driven study using the Devpost and the World of Code platforms and also a series of three surveys for addressing our research questions.

4.1 Data-Driven Study

The data-driven study we conducted involved studying actual hackathon projects using the Devpost and World of Code platforms. This study was used for answering RQs 1a, 1b, 1d, 1e, 2e, and 3 completely and also for partially answering RQs 2a and 2b.

4.1.1 Data Sources

While hackathon events have risen in popularity in the recent past, many of them remain ad-hoc events, and thus data about those events is not stored in an organized fashion. However, Devpost is a popular hackathon database that is used by corporations, universities, civic engagement groups and others to advertise events and attract participants. It contains data about hackathons including hackathon locations, dates, prizes and information about teams and their projects including the project’s GitHub repositories. Organizers curate the information about hackathons and participants indicate which hackathons they participated in, which teams they were part of and which projects they worked on. Devpost does not conduct accuracy checks.

However, Devpost does not contain all the information required for answering our research questions. We thus leveraged the World of Code dataset for gathering additional information about projects, authors, and code blobs. World of Code is a prototype of an updatable and expandable infrastructure to support research and tools that rely on version control data from the entirety of open source projects that use Git. It contains information about OSS projects, developers (authors), commits, code blobs, file names, and more. World of Code provides maps of the relationships between these entities, which is useful in gathering all relevant information required for this study. We used version S of the dataset for the analysis described in this paper which contains repositories identified until Aug 28, 2020.Footnote 6

4.1.2 Data Collection and Cleaning

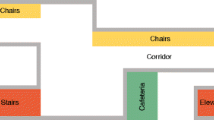

Here we describe how we collected the data required for answering our research questions, along with the details of all the filtering we introduced. An overview of the approach is shown in Fig. 1, which also highlights the different data sources and what data was used for answering each research question.

Selecting Appropriate Hackathon Projects for the Study

We started by collecting information about 60,479 hackathon projects from Devpost. Since the project ID used in Devpost is different from the project names in World of Code, in order to link these hackathon projects to the corresponding projects in World of Code, we looked at the corresponding GitHub URLs, which could be easily mapped to the project names used in World of Code, where the project names are stored as GitHubUserName_RepoName. After filtering out the projects without a GitHub URL, we ended up with 23,522 projects. While trying to match these projects with the corresponding ones in World of Code, we were not able to match 1,339 projects, which might have been deleted or had their names changed afterward. Thus, we ended up with 22,183 projects for further analysis.
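The URL-to-name mapping described above can be sketched as follows. This is a minimal illustration that assumes well-formed `https://github.com/<owner>/<repo>` URLs; the function name and the handling of edge cases (a trailing `.git`, extra path segments, non-GitHub hosts) are our assumptions rather than the exact procedure used in the study.

```python
from typing import Optional
from urllib.parse import urlparse


def woc_project_name(github_url: str) -> Optional[str]:
    """Map a GitHub repository URL to the GitHubUserName_RepoName form."""
    parsed = urlparse(github_url.strip())
    if parsed.netloc.lower() not in {"github.com", "www.github.com"}:
        return None  # not a GitHub URL (assumed to be filtered out)
    parts = [p for p in parsed.path.split("/") if p]
    if len(parts) < 2:
        return None  # a user page or a malformed URL, not a repository
    owner, repo = parts[0], parts[1]
    if repo.endswith(".git"):
        repo = repo[: -len(".git")]
    return f"{owner}_{repo}"


# Hypothetical example URL, not taken from the dataset.
print(woc_project_name("https://github.com/alice/demo-app"))
```

The filtering steps are also consistent arithmetically: 23,522 projects with a GitHub URL minus the 1,339 that could not be matched leaves the 22,183 projects analyzed.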

Characteristics of the Chosen Hackathon Projects

To understand the characteristics of the chosen hackathon projects, we decided to look at the various technologies associated with the hackathons, the distributions of team sizes for the hackathon teams, and when the hackathons took place, which are shown in Figs. 2, 3, and 4 respectively. We can see that JavaScript, Python, and Java are among the more popular technologies used in the hackathons under consideration. Most of the hackathon teams tend to have up to 4 members, and the majority of the hackathons under consideration took place in 2016 and 2017.

Gathering the Contents (Blobs) of the Project

Our first step was to identify all code blobs used in the hackathon projects. World of Code does not have a direct map between projects and blobs, so we started by collecting commits for all hackathon projects (repositories) using the project-to-commit (p2c) map in World of Code (in the World of Code terminology, projects essentially refer to repositories), which covers all commits for each hackathon project. For the 22,183 hackathon projects, we collected 1,659,435 commits generated in the hackathon repository. Then, we gathered all the blobs associated with these commits using the commit-to-blob (c2b) map in World of Code, which yielded 8,501,735 blobs, which are all the blobs associated with the hackathon projects under consideration.
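The two-step lookup described above (projects to commits, then commits to blobs) can be sketched as a join over two mappings. The in-memory dictionaries below are hypothetical stand-ins for the World of Code p2c and c2b maps, whose actual access interface is not shown here.

```python
from typing import Dict, Iterable, List, Set


def blobs_for_projects(
    projects: Iterable[str],
    p2c: Dict[str, List[str]],
    c2b: Dict[str, List[str]],
) -> Set[str]:
    """Collect every blob reachable from the given projects via their commits."""
    blobs: Set[str] = set()
    for project in projects:
        for commit in p2c.get(project, ()):
            blobs.update(c2b.get(commit, ()))
    return blobs


# Toy data (hypothetical identifiers, not real hashes).
p2c = {"alice_demo": ["c1", "c2"], "bob_tool": ["c3"]}
c2b = {"c1": ["b1", "b2"], "c2": ["b2", "b3"], "c3": ["b4"]}

print(sorted(blobs_for_projects(["alice_demo"], p2c, c2b)))
```

Using a set means that a blob touched by several commits of the same project is counted only once.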

Filtering to only Select Code Blobs

The hackathon project repositories, like most other OSS project repositories, have more than just code in them: they also contain images, data, documentation, etc. Since our aim in this project is the identification of the reuse of “code”, we decided to filter the blobs to only keep the ones related to “code”. In order to achieve that, we looked at the filenames for each of the blobs in the project (since blobs only store the contents of a file, not the file name) using the blob-to-filename (b2f) map in World of Code. After that, we used the linguist tool from GitHub to find out the file types. The linguist tool classifies files into the types “data”, “programming”, “markup”, and “prose”, with files of type “programming” being what we are focusing on in this study. Additionally, we marked all the files that are not classified by the tool as files of type “Other”, with the presumption that they do not contain any code. Therefore, we focused only on the blobs whose corresponding files are classified as “programming” files by the linguist tool, which reduced the number of blobs under consideration to 3,079,487.
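The filtering step can be illustrated with the sketch below. It does not call the linguist tool itself (a Ruby library); instead it uses a small hand-written extension table as a stand-in for linguist's classification. The table, the function names, and the rule that a blob is kept if any of its filenames is classified as “programming” are all assumptions made for illustration.

```python
import os
from typing import Dict, List

# Hand-written stand-in for linguist's classification (assumption, not its real API).
EXTENSION_TYPE = {
    ".py": "programming",
    ".js": "programming",
    ".java": "programming",
    ".json": "data",
    ".html": "markup",
    ".md": "prose",
}


def file_type(filename: str) -> str:
    """Classify a filename; anything unrecognized is marked as 'Other'."""
    extension = os.path.splitext(filename)[1].lower()
    return EXTENSION_TYPE.get(extension, "Other")


def code_blobs(b2f: Dict[str, List[str]]) -> List[str]:
    """Keep blobs that have at least one filename of type 'programming'."""
    return [
        blob
        for blob, filenames in b2f.items()
        if any(file_type(name) == "programming" for name in filenames)
    ]


# Toy blob-to-filename map (hypothetical identifiers).
b2f = {"b1": ["app.py"], "b2": ["README.md"], "b3": ["logo.png"], "b4": ["data.json", "main.js"]}
print(code_blobs(b2f))
```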

Gathering Data Required to Identify the Origins of Hackathon Code (Blobs)

To address our first research question, we needed information about the first commits associated with each of the 3,079,487 blobs under consideration. Fortunately, it is possible to get information about the first commit that introduced each blob using World of Code. We extracted the author of that first commit, along with the timestamp, which would be useful in identifying when the blob was first created. Devpost provides the end date for each of the hackathon events but does not include any information about the start date. We therefore consider a hackathon to start 72 hours before its end date. This assumption appears reasonable since hackathons are commonly hosted over a period of 48 hours, which are often distributed over three days (Nolte et al. 2020c; Cobham et al. 2017). We also conducted a manual investigation of 73 randomly selected hackathons and found that only 2 (2.7%) were longer than 3 days, which empirically suggested that our assumption would be valid for most of the hackathons under consideration. Under this assumption, we have the start and end dates of each of the hackathon events, and we used that information to identify if a blob used in a hackathon project was created before, during, or after the hackathon event.
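The before/during/after classification under the 72-hour assumption can be sketched as follows. The function name and the choice to treat both window boundaries as part of the event are our assumptions; the study does not state how timestamps exactly on the boundary were handled.

```python
from datetime import datetime, timedelta

# The hackathon is assumed to start 72 hours before its recorded end date.
ASSUMED_DURATION = timedelta(hours=72)


def blob_phase(first_commit_time: datetime, hackathon_end: datetime) -> str:
    """Label a blob by when its first commit falls relative to the event."""
    hackathon_start = hackathon_end - ASSUMED_DURATION
    if first_commit_time < hackathon_start:
        return "before"
    if first_commit_time <= hackathon_end:
        return "during"
    return "after"


# Hypothetical event end date and commit timestamps.
end = datetime(2017, 3, 12, 18, 0)
print(blob_phase(datetime(2017, 3, 1, 9, 0), end))    # well before the window
print(blob_phase(datetime(2017, 3, 11, 9, 0), end))   # inside the 72-hour window
print(blob_phase(datetime(2017, 4, 2, 9, 0), end))    # after the event ended
```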

We can identify the first commit that introduced the hackathon blobs under consideration, the author of that commit, and all of the developers who have been a part of the hackathon project (Footnote 7) using World of Code. With this data, we can determine if the blob was first created by a member of the hackathon team or someone else. In order to dig further and understand if the blob was created in another project a member of the hackathon project participated in, we used World of Code to identify the project associated with the first commit for each blob under consideration, identified all developers of that project, and checked if any of them are members of the team that created the hackathon project under consideration. This lets us identify if the blob was created by (a) a developer who is a participant of the hackathon project (thus, they are creating the code/reusing what they had created earlier), (b) a developer who was part of a project one of the participants of the hackathon project also contributed to (which might suggest that they are familiar with the code, which might have influenced the reuse of that code (blob) in the hackathon project), or (c) someone else who has not contributed to any of the projects the hackathon project developers previously contributed to (which would suggest a lack of direct familiarity with the code from the hackathon participants’ perspective).
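The three-way author classification can be expressed compactly with set operations. The sketch below is illustrative only: the function name and the developer identifiers are hypothetical, and it assumes the team and the developers of the blob's originating project have already been retrieved.

```python
def classify_author(first_author: str, team: set, origin_project_devs: set) -> str:
    """Classify the first author of a blob relative to a hackathon team.

    team: developers of the hackathon project.
    origin_project_devs: developers of the project holding the blob's first commit.
    """
    if first_author in team:
        return "Project Member"   # case (a)
    if team & origin_project_devs:
        return "Co-Contributor"   # case (b): a team member also worked on that project
    return "Other Author"         # case (c)


# Hypothetical developer identifiers.
team = {"alice", "bob"}
print(classify_author("alice", team, {"alice"}))
print(classify_author("carol", team, {"carol", "bob"}))
print(classify_author("dave", team, {"dave", "erin"}))
```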

Code Size Calculation

In order to quantify “how much” code in various hackathon projects was created before, during, or after the hackathon, we focused on calculating the file size. Measuring file size is straightforward and, arguably, reflects the amount of effort that went into creating a file. One of the most common ways to measure file size is to calculate the number of lines of code (LOC) in the file. However, simply counting the lines is often not enough since code files often contain blank lines and comments along with the actual code, and, arguably, they do not represent the same amount of coding effort. Therefore, in order to get a more accurate estimate of the file sizes, which would, in turn, represent the effort that went into creating the code, we decided to only measure the actual lines of “code” and exclude the blanks and commented lines from consideration.

While there are several tools that can be used to get such information, one of the most common is cloc (Footnote 8), an open-source command-line tool that can measure the lines of code for several programming languages. By default, cloc uses the file name to determine the target programming language and then uses that language to calculate the file statistics (code, comment, and blank lines). The language can also be specified directly instead of being inferred from the file name. The tool reads the blob content and calculates how many code lines, commented lines, and blank lines are in the file.

We started by identifying the file names for each blob hash using b2f map in World of Code. We noticed that the cloc tool was not able to identify the language directly from file names for all of the collected blobs, thus we decided to use the GitHub Linguist tool to get the language of each blob based on the collected file names. We also collected the content of each blob using World of Code and using the collected data, we were able to run the cloc tool with the language and file content as input to identify code size for each blob.
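To make the distinction between code, comment, and blank lines concrete, here is a deliberately simplified counter. It is not cloc and does not reproduce its behavior: it assumes a single line-comment prefix, ignores block comments and string literals, and counts a line with a trailing comment as code.

```python
def count_lines(source: str, comment_prefix: str = "#") -> dict:
    """Split the lines of a file into code, comment, and blank counts."""
    counts = {"code": 0, "comment": 0, "blank": 0}
    for line in source.splitlines():
        stripped = line.strip()
        if not stripped:
            counts["blank"] += 1
        elif stripped.startswith(comment_prefix):
            counts["comment"] += 1
        else:
            counts["code"] += 1  # includes lines that end with a trailing comment
    return counts


# Hypothetical blob content.
sample = "# setup\nimport os\n\nprint(os.getcwd())  # trailing comment\n"
print(count_lines(sample))
```

Under this scheme a file that consists only of comments and blank lines has a code count of 0, even though it is classified as a programming file.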

Template Code Detection

We decided to differentiate between code that is provided as a part of a library/framework/boilerplate template/generated by a tool with the intention of being reused “as-is” and code that is written by a developer without such intention because we expect them to have very different characteristics in terms of where they originate from, where they are reused, and also how much actual “effort” goes into adding this code into a project. However, since we are not limiting our analysis to any one specific language, there is no general way to differentiate between the two with absolute certainty. Therefore, we decided to use a set of three heuristics, formulated by trial-and-error, for detecting template code blobs, described below:

Rule 1: Depth - The filename associated with the blob has a depth of 3 or more relative to the parent directory of the project.

Example: A file located at work/opt/tmp.py is located at a depth of 3 (the file is located in the opt directory which is inside the work directory relative to the parent directory of the project).

Rationale: Based on manual empirical observation of 100 different template files selected randomly from various hackathon projects in different languages, only 3 were found to be located at a depth of 2 and 0 at a depth of 1 (the parent directory of the project).

Rule 2: Similar filename - If a blob is associated with n filenames in total and u unique filenames, then we expect the ratio u/n to be less than or equal to 0.33 (if an example blob has these file names associated with it: [A,A,A,B,B], we would have n = 5 and u = 2).

Example: Using the b2f (blob to filename) map of World of Code, an example blob was found to be associated with 15 filenames (relative to different projects where the blob was used), and only 2 unique filenames. The ratio of u/n in this case was 0.1333, so this rule would mark that blob as template code.

Rationale: The template files are often reused in various projects as-is with the same filename (since they are often a part of some standard library/framework), so we would expect the value of n to be relatively large (because of reuse) and the value of u to be relatively small (because code is reused as-is). Based on a manual empirical observation of 100 different template files selected randomly from various hackathon projects in different languages, all of them satisfied the condition u/n <= 0.33.

Rule 3: Keywords - The filename path contains one of these keywords: plugins, frameworks, site-packages, node_modules, vendor.

Example: One of the filenames associated with the blob 1ff93087f56aeeacece3a3ae4ccf0ba7dff6b8a9 is venv/lib/python2.7/site-packages/django/contrib/auth/__init__.pyc. Since the filename contains the keyword site-packages, it would be marked as a template file according to this rule.

Rationale: Based on a manual empirical observation of 100 different template files selected randomly from various hackathon projects in different languages, 83 were found to contain the above-mentioned keywords in at least one of the filenames associated with the blob. This rule addresses the reuse of blobs related to modules/libraries/package files, which fits our definition of “template code”.

The three heuristic rules above were formulated by trial-and-error, with a different set of 100 randomly selected code blobs used in each case. Finally, all code blobs that satisfied at least 2 of the 3 rules were marked as template code for our analysis.
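The 2-out-of-3 vote over the depth, similar-filename, and keyword rules can be sketched as below. This is one possible reading of the rules, not the study's implementation: it assumes the depth and keyword rules fire if any filename associated with the blob satisfies them, and it matches keywords as substrings of the path. The function names are hypothetical.

```python
from typing import Sequence

TEMPLATE_KEYWORDS = ("plugins", "frameworks", "site-packages", "node_modules", "vendor")


def path_depth(path: str) -> int:
    """Number of path components, so work/opt/tmp.py has depth 3."""
    return len([part for part in path.split("/") if part])


def is_template(filenames: Sequence[str]) -> bool:
    """Apply the three rules to all filenames of one blob; need at least 2 to hold."""
    if not filenames:
        return False
    deep = any(path_depth(name) >= 3 for name in filenames)             # Rule 1
    similar = len(set(filenames)) / len(filenames) <= 0.33              # Rule 2: u/n
    keyword = any(k in name for name in filenames for k in TEMPLATE_KEYWORDS)  # Rule 3
    return (deep + similar + keyword) >= 2


# The same package file seen in four projects: deep path, u/n = 0.25, keyword present.
package_file = ["venv/lib/python2.7/site-packages/django/contrib/auth/__init__.pyc"] * 4
print(is_template(package_file))
print(is_template(["main.py"]))
```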

In order to check the effectiveness of our proposed heuristics in detecting template code, we manually checked 100 randomly selected blobs marked as “template code” by our overall heuristics (different from the template code blobs used for formulating the three heuristic rules) and 100 more randomly selected blobs marked as non-template code. For each blob, we examined the filenames, the blob contents, and the first commit that introduced it to discern whether it actually is template code or not. The confusion matrix related to the evaluation is presented in Table 1. The associated values of precision, recall (sensitivity), specificity, accuracy, and F1-score are 0.96, 0.79, 0.95, 0.85, and 0.86 respectively. We found that our heuristics effectively tag blobs associated with widely used package files. However, they wrongly tag some non-template code blobs as template code, particularly in large projects, because the filenames might not be that uncommon and such projects tend to have a sufficiently deep directory structure. We also tested a few other ideas, e.g., identifying “common modules” by the number of occurrences under the assumption that “template code” blobs are more common than the rest, but they did not noticeably change the overall accuracy of our heuristics.
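For reference, the five reported metrics follow from the four cells of a confusion matrix as sketched below. The counts in the usage example are toy values chosen for easy arithmetic; they are not the values in Table 1.

```python
def classification_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Standard metrics from true/false positive and negative counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)        # also called sensitivity
    specificity = tn / (tn + fp)
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    f1 = 2 * precision * recall / (precision + recall)
    return {
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
        "accuracy": accuracy,
        "f1": f1,
    }


# Toy counts for illustration only (NOT the study's confusion matrix).
metrics = classification_metrics(tp=8, fp=2, fn=2, tn=8)
print(metrics)
```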

Gathering Data to Identify Hackathon Code Blob Reuse

Our second research question focuses on the reuse of hackathon code, which, per our definition (see Section 1), refers to the blobs created during the hackathon event by one of the members of the hackathon team. Therefore, to address this question, we utilized the results of our earlier analysis in order to focus only on the code blobs which satisfy the following two conditions: (a) the blob was first introduced during the hackathon event and (b) the blob was created by one of the hackathon project developers. After identifying 581,579 blobs that met these conditions, we collected all commits containing these blobs from World of Code using the blob-to-commit (b2c) map, and we collected the projects where these commits are used using the commit-to-project (c2p) map. World of Code has the option of returning only the most central repositories associated with each commit, excluding the forked ones (based on the work published in Mockus et al. (2020)). We used that feature to focus only on the repositories that first introduced these blobs and excluded the ones that were later forked off of those repositories, since most forks are created just to submit a pull request and counting such forks would lead to double-counting of code blob reuse.

In addition to understanding how the blobs get reused, we also wanted to understand if they are reused in very small projects, or if larger projects also reuse these blobs. So, we needed a way to classify the projects into different categories. We focused on two different project characteristics for the purpose of such classification: the number of developers who contributed to that project, and the number of stars it has on GitHub, a measure available from a database (MongoDB) associated with World of Code.

Both the number of developers and the number of stars are quintessential measures of project size and popularity and were found to have a low correlation (Spearman Correlation: 0.26), so we decided to use both measures. Instead of classifying the projects manually with arbitrary thresholds on these variables, we used Hartemink’s pairwise mutual information based discretization method (Hartemink 2001), applied to a dataset with log-transformed values of the number of stars and developers for projects, to classify them into three categories: Small, Medium, and Large. We found different thresholds for the number of developers and stars (for no. of developers, \(>2 \rightarrow \) Medium projects and \(>6 \rightarrow \) Large; for stars, \(>1 \rightarrow \) Medium and \(>14 \rightarrow \) Large), and classified a project as “Large” if it is classified as such by either the number of developers or the number of stars, and used a similar approach for classifying them as “Medium”. The remaining projects were classified as “Small”. Overall, we identified 1,368,419 projects that reused at least one of the 581,579 blobs, and using our classification, 1,220,114 (89.2%) projects were classified as “Small”, 116,177 (8.5%) as “Medium”, and 32,128 (2.3%) as “Large”.
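Once the thresholds are fixed, the “either measure” rule amounts to taking the larger of the two per-measure levels. The following sketch uses the thresholds reported above; the function names are hypothetical and the discretization step that produced the thresholds is not reproduced.

```python
def level(value: int, medium_above: int, large_above: int) -> int:
    """0 = Small, 1 = Medium, 2 = Large for a single measure."""
    if value > large_above:
        return 2
    if value > medium_above:
        return 1
    return 0


def classify_project(developers: int, stars: int) -> str:
    """A project takes the larger category suggested by either measure."""
    rank = max(
        level(developers, medium_above=2, large_above=6),
        level(stars, medium_above=1, large_above=14),
    )
    return ("Small", "Medium", "Large")[rank]


print(classify_project(developers=1, stars=0))
print(classify_project(developers=3, stars=0))
print(classify_project(developers=2, stars=20))
```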

Discovering the Licenses Associated with the Hackathon Projects

One of the main factors that could affect code blob reuse is the license associated with the hackathon project (H4 for RQ3 as presented in Section 2). In order to assess the validity of that claim, we had to collect and identify the type of license associated with the hackathon projects.

Although World of Code provides a tremendous amount of information about open source projects, it does not include the license details for projects. In order to collect the license details, we used the GitHub REST API. We used the list of hackathon projects as input and called the license endpoint of the GitHub REST API for each of them. We found that, unsurprisingly, some projects do not have a license at all and some other projects had been deleted, merged, or renamed. We handled such cases by marking projects with no license as ‘NOLICENSE’ and projects which we could not find on GitHub as ‘NOTFOUND’.

We ran the license collection on 27,419 projects. There are more projects to consider here because this step was done in the second phase of the study, by which time the number of projects associated with the hackathon blobs had increased. Of these, 5,910 projects were not found on GitHub, and 17,742 projects were found to not have a license. The license type used most frequently is the “MIT License” with 2,301 projects, followed by the “Apache License 2.0” with 441 projects.
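The labeling of API responses can be sketched as follows. This is an assumption-laden illustration rather than the collection script used in the study: it assumes the repository endpoint of the GitHub REST API (`GET /repos/{owner}/{repo}`), whose JSON body carries a `license` field that is null when GitHub detects no license, and it takes the HTTP status code and parsed body as inputs so that no network call is needed. The function name is hypothetical.

```python
from typing import Optional


def license_label(status_code: int, repo_json: Optional[dict]) -> str:
    """Map one repository response to a license label."""
    if status_code == 404 or repo_json is None:
        return "NOTFOUND"            # deleted, private, or otherwise unreachable
    license_info = repo_json.get("license")
    if not license_info:
        return "NOLICENSE"           # repository exists but no license was detected
    return license_info["name"]      # e.g. "MIT License"


# Hand-written stand-ins for API responses (illustrative only).
print(license_label(404, None))
print(license_label(200, {"full_name": "someone/project", "license": None}))
print(license_label(200, {"license": {"key": "mit", "name": "MIT License"}}))
```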

Collecting Data for Identifying the Factors that Affect Hackathon Code Blob Reuse

In addition to tracking hackathon code blob reuse (RQ2), we also aimed to study factors that can affect this phenomenon. For this purpose, we collected various characteristics of the hackathon projects, both from Devpost and World of Code, and extracted the variables of interest, per the hypotheses presented in Section 2.

The data we collected for RQ2 was at the blob level. To find out whether code blobs from a project were reused, we counted how many blobs from each project were reused and calculated the ratio of the number of reused code blobs to the total number of code blobs in the project. This revealed that almost 60% of projects had none of their code blobs reused. We therefore framed the task as a binary classification problem, predicting whether a project has at least one code blob reused or not, instead of doing a regression analysis.
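The per-project reuse ratio and the derived binary outcome can be computed as in this small sketch. The function name and the input representation (one boolean per code blob) are assumptions made for illustration.

```python
from typing import Sequence, Tuple


def reuse_summary(blob_reused: Sequence[bool]) -> Tuple[float, bool]:
    """Return (share of reused blobs, whether at least one blob was reused)."""
    total = len(blob_reused)
    reused = sum(blob_reused)
    ratio = reused / total if total else 0.0
    return ratio, reused > 0


# Hypothetical projects: one with 1 of 4 blobs reused, one with none.
print(reuse_summary([True, False, False, False]))
print(reuse_summary([False, False, False]))
```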

For the purpose of our analysis, we excluded hackathon projects with a single member, since a hackathon project “team” with a single participant is not really a team. We also excluded projects that were not related to any existing technology, since these likely belonged to non-technical events. By looking for code blob reuse, we also automatically filtered out any project that had no code blobs in its repository. Moreover, projects with missing values for any of the variables of interest (as shown in Table 2) were removed. After applying these filters, we were left with 13,008 hackathon projects.

For the variables related to H3, the composition of the repository, we have 5 categories, 4 of which are dictated by the GitHub linguist tool: Code (programming), Markup, Data, and Prose, plus a category Other for all file types not classified by the tool. For each project, we calculated the percentage of blobs belonging to each type. Since the percentages sum to 100%, any 4 of these variables determine the fifth. To remove the resulting redundancy, we dropped the variable for type Other, since its effect is fully described by the remaining variables.

As for the license variable associated with H4, not all licenses used by the hackathon projects are Open Source licenses that allow the code to be shared and used by others, and projects without a license are, by default, assumed to be not licensed for reuse, i.e. under exclusive copyright. To capture that, we grouped the values of the license variable into three categories: projects without a license were put into the “NOLICENSE” category, projects with any of the standard OSS licenses that allow code-sharing (with some restrictions in some cases, e.g. the “copyleft” clause for GNU GPL) were put in the “OSSLICENSE” category, and projects with non-standard licenses that allow code sharing under very specific conditions or with explicit copyright notices were put in the “Other” category.

The descriptions of all the variables, along with their sources and values, are presented in Table 2.

Analysis Method for Identifying Project Characteristics that Affect Code Blob Reuse

As we noted in the hypotheses presented in Section 2, we expect some of the project characteristics to have a linear effect on hackathon code blob reuse, while others should have a more complex non-linear effect. The goal of our analysis is not to build the predictive model with the best possible accuracy. Instead, we aim to find out which of the predictors have a significant effect by creating an explanatory model. As noted by Shmueli et al. (2010), these two are very different tasks.

In order to achieve our goal of having linear and non-linear predictors in the same model and be able to infer the significance of each of them, we decided to use Generalized Additive Models (GAM). Specifically, we used the implementation of GAM from the mgcv package in R.
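For a binary outcome such as whether a project has at least one reused code blob, a GAM that mixes linear and non-linear predictors is commonly written with a logit link as shown below. This is the generic textbook form, not the exact model specification of the study; the symbols \(x_{ij}\) (predictors assumed to act linearly), \(z_{ik}\) (predictors assumed to act non-linearly), \(\beta_j\), and the smooth functions \(f_k\) are introduced here for illustration only.

```latex
\[
\operatorname{logit}\big(P(y_i = 1)\big)
  = \beta_0 + \sum_{j} \beta_j \, x_{ij} + \sum_{k} f_k(z_{ik})
\]
```

Here \(y_i = 1\) indicates that project \(i\) had at least one code blob reused. The significance of a linear predictor is assessed through its coefficient \(\beta_j\), and that of a non-linear predictor through its smooth term \(f_k\).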

4.2 Surveys

In addition to the data-driven study described above, we also conducted a survey study to validate our findings and further investigate where the code blobs used in a hackathon originate from (RQ 1.c) and aspects of what happens to the code blobs that were created during an event after it had ended (RQ 2). In order to answer these research questions we focused on three separate scenarios:

1. The perception of individuals who had created a code blob during a hackathon that was reused after the event,

2. The perception of individuals who had created at least one code blob during a hackathon, and none of the blobs they created in that hackathon was reused after the event,

3. The perception of individuals who reused a code blob that was created during a hackathon.

These scenarios are useful because they allow us to compare the perceptions of individuals who created code that got reused with those whose code did not, and also to assess the perceptions of individuals about code blobs they reused that were originally created during a hackathon. The survey instrument focuses on commits that contain specific blobs that fit the three aforementioned scenarios.

4.2.1 Survey Design

We developed two separate survey instruments to cover the three scenarios. The first focuses on code blobs that were created during a hackathon, thus covering the first two scenarios. The second focuses on code blobs that were originally developed during a hackathon and subsequently reused, thus covering the third scenario. In the following, we will outline the content of both survey instruments (Tables 12 and 13 in Appendix A provide an overview).

Although the same survey instrument was used for potential participants from scenarios 1 and 2, for ease of reference, we refer to them as survey 1 and survey 2, and the survey sent out to potential participants in scenario 3 as survey 3. All of the surveys started by asking participants about whether they authored the commit we had attributed to them or not. The aim of this question was to ensure that the respective participant was knowledgeable about the code contained in the blob the survey focused on.

The second question in the surveys focused on the source of the code contained in a blob (RQ 1.c). We provided a list of options that participants could choose from which included options like “From other GitHub repositories”, “From the web (e.g. on Stackoverflow or forums)” and “It was generated by a tool”. Participants could select multiple sources since it is possible that the code contained in a blob is based on different sources. Participants could also provide an alternative source using a text field. The first two surveys also included options related to the hackathon the code was created in including “I wrote it during the hackathon”, “I reused code that I had written before the hackathon” and “My team members wrote it during the hackathon”.

The first two surveys continued with questions examining whether and where participants use their own code after an event and how they foster code reuse (RQ 2.d). Possible answer options included common reuse settings such as “another hackathon”, “school as part of a class, project or thesis” or “In another open source project in my free time”. In addition, participants could state that they did not reuse the code after an event or mention other possible scenarios. Regarding the question of how participants fostered reuse, we included typical approaches such as “sending it to friends or colleagues”, “sharing it online (e.g. Stackoverflow, Medium, Social media)” as well as the option to state a different approach.

We also asked participants in the first two surveys for whom they thought the code they created would be useful and whether they are aware if anyone used their code after the event (RQ 2.d). Possible answer options regarding their perception of the usefulness of the code included “No one”, “For our hackathon project or team” and “For many people outside of the hackathon project or team”. Possible options related to their awareness about who might have reused their code included “my hackathon team members”, “my friends or colleagues” and “someone else used it in an open-source project”. For both questions, participants could again state other possible options using a text field.

In addition to asking about a specific blob, the first two surveys also included questions regarding the participant’s perception of the hackathon project during which they developed the code in the blob. We specifically focused on their perception of the usefulness of the project and their intentions to continue working on it (RQ 2.a and RQ 2.b). For both aspects, we relied on established scales (Reinig 2003; Bhattacherjee 2001) which have been successfully applied in research related to hackathons in the past (Filippova et al. 2017; Nolte et al. 2020b).

In addition to the code that was developed, participants might also have reused the idea their project was based on in a different context (RQ 2.f). The first two surveys thus also included a simple yes/no/not sure question related to this aspect.

Regarding the third survey, we also included a question that focused on how easy participants found using the code contained in the blob we identified (RQ 2.a). For this, we adapted an established scale by Davis (1989).

Finally, all surveys also included common demographic questions, including the age of the participant, their gender, their perception about their minority status, and their experience contributing to open-source projects. We utilized this simple way of assessing perceived minority since minority perception of an individual can be based on many different aspects like race, gender, expertise, background, and others. Table 3 shows a summary of the participants’ demographics.

4.2.2 Procedure

We selected 5,000 individuals to contact for each of the previously discussed scenarios. For each scenario, we used the following procedure. We first selected blobs that were appropriate for the respective scenario and contained more than 15 lines of code. We then identified the commits that contained them and removed all blobs which could not be reached on GitHub. We proceeded to remove duplicates, sorted the commits by date, and selected the 5,000 most recent ones per scenario. Finally, we ensured that no individual would receive an invitation for more than one survey.

Before sending the surveys we piloted them with 3 individuals to ensure that they are understandable and to remove potential misspellings and other mistakes. We then sent individual invitations to the selected participants. Out of the 15,000 invitations 3,254 could not be delivered (1,036 for survey 1, 1,083 for survey 2, and 1,135 for survey 3). We received a total of 1,479 responses (558 for survey 1, 335 for survey 2, and 586 for survey 3) which amounts to a total response rate of 9.86%. We subsequently removed incomplete responses and responses that lacked appropriateness (e.g. if someone stated that they did not contribute the commit we had attributed to them). We arrived at a total of 416 complete and useful responses (178 for survey 1, 120 for survey 2, and 118 for survey 3) thus having an effective response rate of 2.77%.
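The two rates quoted above are computed relative to all 15,000 invitations, including those that could not be delivered. The short check below reproduces that arithmetic from the per-survey counts given in the text; the variable names are ours.

```python
INVITED_PER_SURVEY = 5000

# Per-survey counts as reported in the text.
responses = {"survey 1": 558, "survey 2": 335, "survey 3": 586}
useful = {"survey 1": 178, "survey 2": 120, "survey 3": 118}

total_invited = INVITED_PER_SURVEY * len(responses)
response_rate = 100 * sum(responses.values()) / total_invited
effective_rate = 100 * sum(useful.values()) / total_invited

print(f"responses: {sum(responses.values())}, rate: {response_rate:.2f}%")
print(f"useful responses: {sum(useful.values())}, rate: {effective_rate:.2f}%")
```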

In addition to this cleaning process, we also assessed the internal consistency of the three scales we utilized using Cronbach’s alpha (Tavakol and Dennick 2011). The respective values were at 0.865 for perceived usefulness, 0.841 for continuation intentions, and 0.934 for perceived ease of use which makes all scales suitable for further analysis.
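Cronbach’s alpha for a scale with \(k\) items is \(\alpha = \frac{k}{k-1}\left(1 - \frac{\sum_i s_i^2}{s_T^2}\right)\), where \(s_i^2\) is the variance of item \(i\) and \(s_T^2\) the variance of the respondents’ total scores. A minimal sketch using sample variances is shown below; the function name and the ratings are made up for illustration and are not survey data.

```python
from statistics import variance


def cronbach_alpha(rows):
    """rows: one list of k item ratings per respondent."""
    k = len(rows[0])
    item_columns = list(zip(*rows))                      # transpose to per-item ratings
    item_variance_sum = sum(variance(col) for col in item_columns)
    total_variance = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


# Made-up ratings on a 3-item scale from four respondents.
ratings = [[4, 5, 4], [2, 2, 3], [5, 5, 5], [3, 4, 3]]
print(round(cronbach_alpha(ratings), 3))
```

When all items move together perfectly, the formula yields 1; values closer to 0 indicate that the items do not measure a common construct.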

The analysis was mainly based on comparing findings from the surveys related to code blobs that were reused after a hackathon (survey 1) and code blobs that were not (survey 2). We particularly compared code origins (RQ 1.c), reuse behavior by code creators (RQ 2.c), code sharing behavior (RQ 2.d), awareness about code reuse, and perceptions about the usefulness of the code (RQ 2.e). In addition, we also compared satisfaction perceptions and continuation intentions on a project level (RQ 2.a and RQ 2.b) as well as reuse of the ideas that arose from the hackathon projects (RQ 2.f). All comparisons were done on a descriptive level. Finally, contributing to RQ 2.a, we also analyzed the perception of participants related to how easy they found using the code based on survey 3.

5 Results

Here we will discuss our findings in relation to our research questions.

5.1 Origins of Hackathon Code Blobs (RQ1)

As mentioned in Section 2, our first research question focuses on several aspects related to the origin of the hackathon code. We draw insights from both the data-driven study on hackathon projects as well as the surveys to address this research question.

5.1.1 When are Code Blobs Used in Hackathon Projects Created (RQ 1.a) and Who are the Original Authors (RQ 1.b) of the Code Blob?

In terms of “when”, we examined if the first creation of the code blob under consideration was Before, During, or After the corresponding hackathon event. In terms of “who”, we checked if the first creator of the code blob was one of the members of the hackathon project (Project Member) or someone who was a contributor to a project in which one of the members of the hackathon project contributed to as well (Co-Contributor), or someone else (Other Author).

The result of the analysis is presented in Fig. 5, showing that, overall, 85.56% of the code blobs used in the hackathon projects were created before an event. Most of these reused blobs were part of a framework/library/package used in the hackathon project, which aligns with the findings of Mockus (2007). Only 9.14% of the blobs were created during events. This is plausible because participants need to be efficient during an event owing to the time limit (Nolte et al. 2018), which fosters reuse, as previously discussed in the context of OSS (Haefliger et al. 2008). We also found that 5.3% of the blobs were created after an event, suggesting that most teams do not add a lot of new content to their hackathon project repositories after the event. This finding is in line with prior work on hackathon project continuation (Nolte et al. 2020a).

Figure 5 shows that, overall, the original creators of most of the code blobs (69.54%) in the hackathon project repositories are not part of the team. They are mostly the original creators of some project/package/framework used by the hackathon team. Just under one-third (29.47%) of the code blobs were created by the project members, and the reuse of code blobs from co-contributors in other projects is very limited (0.99%). This aspect has not been extensively studied in the context of work on hackathons yet.

5.1.2 Where did the Code Come from? (RQ 1.c)

Our research question RQ 1.c focuses on the origin of code contained in the blobs under consideration in order to understand how the hackathon teams generated the code written during the hackathon. This question can only be answered by directly asking the participants, so one of the survey questions asked about the source of the code committed to the hackathon repository during the hackathon. By analyzing the 178 and 120 responses to that question from survey scenarios 1 and 2 respectively (since these were the hackathon participants who created the code), we found that most participants stated that they wrote the code during the hackathon, that team members wrote the code during the hackathon, and/or that they got the code from the web. Other options, like “I reused code that I had written before the hackathon”, “My team members reused code they had written before the hackathon”, “It was generated by a tool”, and “From other GitHub repositories”, were chosen rarely. Table 4 contains a summary of code origin responses from surveys of hackathon participants whose code was reused (Survey 1) and whose code was not reused (Survey 2). Since only 3 options were chosen frequently, we combined all other responses in one group called “Others”, which includes all rarely chosen options plus the free text option where participants could write something not listed in the options.

The most frequently chosen option was that they wrote the code themselves (76 (42.7%) and 60 (50%) exclusively for Survey 1 and 2 respectively). A number of participants combined 2 options and chose that they wrote the code during the hackathon along with getting the code from the web (20 and 12 for Survey 1 and 2 respectively), which is a common practice during hackathons. Similarly, some participants chose that they wrote the code during the hackathon along with the option that team members wrote it during the hackathon, which indicates teamwork and collaboration during such hackathon events. Some participants provided extra information in the free text option, specifically, 9 and 5 participants utilized the free text field in survey 1 and survey 2 respectively. Participants indicated that some code files were generated by a bot (Microsoft Bot Framework Workshop), or were setup files for a Flask project, a dataset for a fake news competition, or code files provided by the hackathon organizers, or that they used/adapted examples they discovered through Google search.

5.1.3 Programming Languages Distribution for Hackathon Code with Different Origin (RQ 1.d)

Looking at top languages for the blobs created at different times (Fig. 6), identified by the GitHub linguist tool, we found that most of the code reused by hackathon projects (created before) is JavaScript, and other top languages together indicate that most of the reused code by hackathon projects are related to web development frameworks. JavaScript is also the most common language worked on during the hackathons we studied, followed by Java, Python, Swift, and C#/Smalltalk, indicating that most hackathon projects work on developing web/mobile apps. C++ was the most common language for code developed after the event, followed by Swift and JavaScript, showing a slight shift in the type of work done after the event, favoring Machine Learning applications.

Looking at the top languages for the code created by different authors, as shown in Fig. 7, we can see that, once again, most of the code created by developers not part of the hackathon team is JavaScript, which is similar to the code created before the event (Fig. 6). This is not surprising, since they have a great deal of overlap (Fig. 5). Most of the code created by project members indicates a leaning towards web/mobile app development, and most of the C++ and Python code was found to be related to Machine Learning frameworks.

We noticed that JavaScript is, by far, the most widely used language in terms of blob count. Most of the JavaScript blobs were, in fact, related to some framework and were developed by people not part of the hackathon team. One possible reason for that could be that developing web applications or designing front-end UIs is a common hackathon activity and hackathon participants often reuse standard frameworks for developing their UIs.

5.1.4 Size Distribution of Hackathon Code Blobs (RQ 1.e)

As mentioned in Section 4.1.2, we analyzed the sizes of the hackathon code blobs by measuring the LOC (lines of code). The distributions of code sizes are shown in Fig. 8, with Fig. 8a showing the code size distributions for blobs created before, during, and after a hackathon event and Fig. 8b showing the distributions for blobs created by project members, co-contributors, and others.

The first thing we checked was whether the distributions are significantly different from one another. To that end, we conducted pairwise Mann-Whitney U tests on blobs created before, during, and after the hackathon and also on code created by project members, co-contributors, and others. The results indicated that the sizes of blobs created at different times and by different authors are indeed significantly different, with the p-value for each test being < 1e-100.
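To make the pairwise testing step concrete, the following is a minimal sketch of a two-sided Mann-Whitney U test applied to every pair of groups. It is not the analysis code used in the study: it uses the normal approximation with a tie correction and no continuity correction, and the function names and the `toy_loc` values are hypothetical, invented purely for illustration.

```python
import math
from itertools import combinations


def mann_whitney_u(x, y):
    """Two-sided Mann-Whitney U test (normal approximation, tie-corrected).

    Returns (U statistic for x, approximate p-value).
    """
    n1, n2 = len(x), len(y)
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y])
    ranks = [0.0] * len(pooled)
    tie_term = 0.0
    i = 0
    while i < len(pooled):
        # Find the run of equal values starting at position i.
        j = i
        while j < len(pooled) and pooled[j][0] == pooled[i][0]:
            j += 1
        midrank = (i + 1 + j) / 2.0  # average of the ranks i+1 .. j
        for k in range(i, j):
            ranks[k] = midrank
        t = j - i
        tie_term += t ** 3 - t
        i = j
    rank_sum_x = sum(r for r, (_, g) in zip(ranks, pooled) if g == 0)
    u_x = rank_sum_x - n1 * (n1 + 1) / 2.0
    n = n1 + n2
    mean_u = n1 * n2 / 2.0
    var_u = n1 * n2 / 12.0 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_u == 0:
        return u_x, 1.0  # all values identical: no evidence of a difference
    z = (u_x - mean_u) / math.sqrt(var_u)
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability
    return u_x, p_value


def pairwise_tests(groups):
    """Run the test on every unordered pair of named groups."""
    return {
        (a, b): mann_whitney_u(groups[a], groups[b])
        for a, b in combinations(groups, 2)
    }


# Hypothetical LOC values per blob, NOT data from the study.
toy_loc = {
    "before": [12, 40, 40, 95, 130, 210],
    "during": [5, 18, 33, 60, 75, 300],
    "after": [8, 22, 51, 88, 140, 160],
}

for pair, (u, p) in pairwise_tests(toy_loc).items():
    print(pair, u, p)
```

With samples as large as those in the study, even modest differences between distributions produce extremely small p-values, so the test result is best read alongside the descriptive statistics.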

The descriptive statistics for the sizes of blobs created at different times and by different authors are presented in Table 5. They show that project members tend to create more lines of code on average than the rest. Although we saw a number of large outliers in the code created during the hackathons, on average, the code blobs added after the hackathons also seem to be larger. It is worth noting, however, that we only looked at the lines of code and not, for example, the complexity of the code. For example, it could very well be the case that the code created by project members is larger because it is not optimized very well due to the time constraints of the hackathons.

One curious oddity we noticed is that the minimum LOC for blobs in every category is 0, so we investigated those blobs further. Upon examination, we found 1,150 unique blobs among the ones under study that were identified as code blobs but had 0 lines of code. However, almost all of these blobs were found to contain some commented code, which led us to believe that these blobs might have been used at some point during development, e.g. for testing a feature or debugging, and were later commented out, e.g. because the team didn’t implement the feature or finished the debugging task.

Overall, we found the total size of code blobs created before, during, and after the hackathon to be 1,125,735,653, 110,921,111, and 203,786,274 lines of code respectively. Therefore, considering the total lines of code, 78% of the code used in the hackathon projects was created before the hackathons, 8% during the hackathons, and 14% after the hackathons. Similarly, a total of 871,575,852 (60.5%) lines of code were created by the hackathon project members, 19,147,025 (1.3%) lines of code were created by the co-contributors, and 549,720,161 (38.2%) lines of code were created by other developers.
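The percentages above follow directly from the reported totals. As a small check, the sketch below recomputes the shares from the figures quoted in this paragraph; the variable and function names are ours and are only illustrative.

```python
# LOC totals as reported in the text above.
loc_by_time = {
    "before": 1_125_735_653,
    "during": 110_921_111,
    "after": 203_786_274,
}
loc_by_author = {
    "project members": 871_575_852,
    "co-contributors": 19_147_025,
    "others": 549_720_161,
}


def shares(counts):
    """Return each category's share of the total, in percent."""
    total = sum(counts.values())
    return {name: 100 * value / total for name, value in counts.items()}


for label, counts in (("timing", loc_by_time), ("author", loc_by_author)):
    for name, pct in shares(counts).items():
        print(f"{label:7s} {name:16s} {pct:5.1f}%")
```

Both breakdowns sum to the same overall total, since they partition the same set of lines of code along two different dimensions.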

5.1.5 Identifying Template Code (RQ 1.f)

Using the method described in Section 4.1.2, we analyzed 2,684,870 code blobs used in hackathon projects to check whether they were template code blobs. Our heuristics rely on a blob-to-filename (b2f) map to determine this. The mapping was missing for a number of blobs, which led us to exclude them from our analysis. Overall, we found that 800,294 (29.8%) blobs were identified as template code by our method.

The distribution of template code used in the hackathon projects, depending on who created the code blob (a project member, a co-contributor, or other developers) and when the code blob was first added to the hackathon repository (before, during, or after the hackathon event), is shown in Fig. 9. The three subplots refer to the timing and the X-axis values in each subplot refer to the author of the code. The percentage numbers show how much of the code is template code (or not) for each author-timing combination.

We notice that most of the code (in terms of blob count) that hackathon project members write before, during, and after the event is non-template code and, conversely, most of the code created by the “other” developers and used in the hackathon projects is actually template code. Overall, most of the code blobs added to the hackathon projects before the events are template code, while the code blobs added during or after the event are mostly non-template code. This shift is even more drastic if we look at the code created by the co-contributors - while the code created by them is almost evenly divided between template and non-template code before the event, most of the code created by them that is added to the hackathon projects during or after the hackathon event is non-template code. This goes to show that hackathon participants quite often use templates created by other developers in their projects but create very little template code themselves, which aligns with our experience of how hackathon events unfold. It is also interesting that hackathon teams do not reuse a lot of non-template code - one might assume that during the hackathons teams would try to use existing code written by other developers working on a similar problem, but that doesn’t seem to be true in most cases.

5.2 Hackathon Code Blob Reuse (RQ2)

As discussed in Sections 2 and 4.1.2, our goal while looking for hackathon code blob reuse is to understand the reuse of code blobs that were first created during a hackathon event.

5.2.1 Comparing the Characteristics of Code Blobs that were Reused (RQ 2.a) and Code Blobs that weren’t (RQ 2.b)

By following the procedure outlined in Section 4.1.2, we found that 167,781 (28.8%) of the 581,579 hackathon code blobs got reused in other projects.

A quick analysis of whether the shared blob is a template code blob or not revealed that, in fact, 159,286 (95.5%) of the blobs that were reused later on were not “template” code. In contrast, around 29.8% of the blobs used in hackathon projects were template code. A reason for this apparent discrepancy could be that hackathon projects do not typically create template code. As also shown in Fig. 9, only around 2.1% of the code blobs we consider for RQ2 are template code. Given that figure, a template share of around 4.5% among the reused blobs does seem to indicate that template blobs have a higher propensity of being reused. Moreover, only 7,842 (1.9%) blobs among the 413,798 that were not reused later on were found to be template code blobs, which seems to further support the assertion.

We decided to test this assertion using a logistic regression model in which the binary response variable is whether a code blob was reused and the binary predictor variable is whether a code blob is template code. The result, shown in Table 6, confirms that template code has a higher probability of being reused.
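To illustrate how such a model is read, note that a logistic regression with a single binary predictor has a closed-form maximum-likelihood fit: the intercept is the log-odds of reuse for non-template blobs and the coefficient is the log odds ratio between template and non-template blobs. The sketch below uses that shortcut rather than an iterative fitting routine, so it is not the procedure behind Table 6. The counts are hypothetical and chosen only to make the arithmetic easy to follow.

```python
import math


def fit_binary_logit(reused_tpl, not_reused_tpl, reused_non, not_reused_non):
    """Closed-form logistic regression fit for one binary predictor.

    Returns (intercept, coefficient) on the log-odds scale.
    """
    odds_non = reused_non / not_reused_non  # odds of reuse, non-template
    odds_tpl = reused_tpl / not_reused_tpl  # odds of reuse, template
    intercept = math.log(odds_non)
    coefficient = math.log(odds_tpl / odds_non)  # log odds ratio
    return intercept, coefficient


def predict_reuse_probability(intercept, coefficient, is_template):
    """Apply the inverse logit to obtain a reuse probability."""
    z = intercept + coefficient * (1 if is_template else 0)
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical 2x2 counts, NOT the counts from the study.
intercept, coefficient = fit_binary_logit(
    reused_tpl=60, not_reused_tpl=40, reused_non=300, not_reused_non=700
)
print("odds ratio:", math.exp(coefficient))
print("P(reuse | template):", predict_reuse_probability(intercept, coefficient, True))
print("P(reuse | non-template):", predict_reuse_probability(intercept, coefficient, False))
```

A positive coefficient, equivalently an odds ratio above 1, corresponds to template blobs having higher odds of being reused than non-template blobs.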

Considering the total size of the blobs, the reused blobs contained a total of 31,662,234 lines of code, which is around 29% of the total 108,975,835 lines of code contained in the 581,579 blobs under consideration. The size distributions for the relevant blobs that were later reused and for the ones that were not are shown in Fig. 10, and their descriptive statistics are available in Table 7. The blobs that were not reused were found to be, on average, larger than the ones that were reused. A Mann-Whitney U test revealed that the code size distributions of blobs that were reused and blobs that were not are also significantly different (p-value < 1e-100).

Another aspect we wanted to understand is whether the hackathon participants’ satisfaction with the hackathon event and their intention to continue the project in the future differ depending on whether the code got reused. We investigated these two aspects because we thought they might affect the quality of the code participants developed and, consequently, the probability of the code being reused later on. These aspects were investigated through the surveys we sent out. We had the participants rate five questions related to their satisfaction with the hackathon event and four questions related to their intentions to continue the project work on a 5-point Likert scale (the full list of questions is available in Table 12 in the Appendix). As discussed in Section 4.2.2, we calculated Cronbach’s alpha for the responses and found them to be consistent. Finally, we converted the Likert scale-based responses to numerical values and, for each participant, calculated the mean response for the questions related to satisfaction and for the questions related to continuation intention.
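As an illustration of the two computations described above, the sketch below calculates Cronbach’s alpha with the standard formula, alpha = k/(k-1) * (1 - sum of item variances / variance of total scores), and the per-participant mean score. It assumes the Likert responses have already been converted to numbers from 1 to 5. The function names and the `toy_satisfaction` responses are hypothetical and are not survey data from the study.

```python
from statistics import mean, variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of per-participant item scores.

    `responses` holds one list per participant, with one numeric
    score per question (all lists must have the same length).
    """
    k = len(responses[0])
    # Sample variance of each question across participants.
    item_vars = [variance([r[i] for r in responses]) for i in range(k)]
    # Sample variance of each participant's total score.
    total_var = variance([sum(r) for r in responses])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def participant_means(responses):
    """Mean score per participant across all questions in the scale."""
    return [mean(r) for r in responses]


# Hypothetical answers of four participants to five satisfaction
# questions, NOT responses from the study.
toy_satisfaction = [
    [4, 5, 4, 4, 5],
    [3, 3, 4, 3, 3],
    [5, 5, 5, 4, 5],
    [2, 3, 2, 2, 3],
]

print("alpha:", cronbach_alpha(toy_satisfaction))
print("means:", participant_means(toy_satisfaction))
```

Averaging the items of a scale into one score per participant is only meaningful when the items are internally consistent, which is what the alpha check is meant to establish.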

The resultant distribution of responses from the participants of surveys 1 and 2 is shown in Fig. 11, with the values in the Y-axis indicating the participants’ satisfaction with the hackathon (5: Very Satisfied, 1: Not at all Satisfied) and if they planned on continuing the project (5: Very likely to continue, 1: Very unlikely to continue). We noticed from Fig. 11 that while most participants were satisfied with the hackathon events they were also unlikely to continue working on the projects. Although it looked like participants from survey 1 were, overall, more satisfied with the event and were more likely to continue working on the project compared to the participants of survey 2, the difference was found to be not statistically significant using a Mann-Whitney U test.

Finally, we also wanted to get a sense of how easy it is to reuse these hackathon code blobs. This can only be done credibly if we ask someone who was not part of the original team and later reused the code. For obvious reasons, we can’t test this for code that wasn’t reused later on. We had a series of questions in the survey that we sent out to developers who reused some hackathon code asking them to rate its ease-of-use on a 5-point Likert scale. The result, as shown in Fig. 12, shows that most of the participants rated the code to be easy to use across different dimensions.

5.2.2 Projects that Reuse Hackathon Code (RQ 2.c)

We further classified the projects that reused these code blobs into Small, Medium, and Large, as discussed in Section 4.1.2. To recap, 89.2% of the projects that reused the hackathon code blobs were classified as Small, 8.5% as Medium, and 2.3% as Large projects. By investigating the blobs reused by these projects we found that, unsurprisingly, there are a number of instances where a blob was reused in projects of different categories. However, such cases were found to be quite rare: only 8.85% of reused blobs got reused in more than one project. By looking at the size of the projects a blob was reused in, we found that over half (57.73%) of the blobs are only reused in other Small projects, around one-third (32.85%) are reused in Medium projects, and less than a tenth (9.42%) are reused in Large projects.

The top-5 languages for the blobs reused by various projects are shown in Fig. 13. As we can see, JavaScript remains the most common language, and Python, C/C++, C#/Smalltalk, and Java are among the top ones as well. While most reused blobs are related to web/mobile apps/frameworks, we also found the relatively uncommon Gherkin to be the second most common language for Medium projects, and the Small projects reused a lot of blobs related to D/DTrace/Makefile.

We were interested in exploring the temporal dynamics of code blob reuse as well. Therefore, we looked at the reuse of hackathon code blobs over a period of two years (104.3 weeks) after the corresponding hackathon event ended. The result of that analysis is shown in Fig. 14, which plots the weekly hackathon code blob reuse over those two years, with the fraction of the total number of hackathon code blobs (581,579) reused per week on the Y-axis. As we can see from this plot, while overall 28.8% of the hackathon code blobs were reused, no more than 0.8% of the blobs got reused in any single week. This finding is in line with prior work on hackathon project continuation within the same repository that the team used during the hackathon (e.g. Nolte et al. (2020a) found that continuation activity drops quickly within one week after a hackathon before reaching a stable state). A clear trend of the code blob reuse dropping and then saturating after some time is visible, which is significant because it indicates that the code created in the hackathon events continues to bear some value even 2 years after the event. For code blob reuse in Small projects, the knee point comes after around 10–15 weeks, while for the Medium and Large projects it comes much earlier, at around a month. It is also a bit surprising to see code blob reuse peaking so soon after the event, but this could be due to the participants of the event placing, or influencing people they know to place, the code blobs they consider valuable in other projects where they think they might be of use. It might also be because the chance of a code file being modified increases with time, and any modification changes the SHA1 value, effectively giving rise to a new blob. This distinction has not been studied in prior work on hackathon code.
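The point about modified files becoming new blobs can be made concrete. Assuming blobs are identified by Git’s object hash, the ID of a blob is the SHA1 of a short header (`blob `, the content length in bytes, and a NUL byte) followed by the file content, so even a one-character edit yields a different ID. The sketch below reproduces that computation; the example file contents are made up.

```python
import hashlib


def git_blob_sha1(content: bytes) -> str:
    """Compute the SHA1 that Git assigns to a blob with this content."""
    header = b"blob " + str(len(content)).encode("ascii") + b"\0"
    return hashlib.sha1(header + content).hexdigest()


# Made-up file contents: the second differs by a single character.
original = b"def greet():\n    print('hello')\n"
modified = b"def greet():\n    print('Hello')\n"

print(git_blob_sha1(original))
print(git_blob_sha1(modified))
print("same blob:", git_blob_sha1(original) == git_blob_sha1(modified))
```

Under exact blob matching, a file copied into another project and then edited no longer counts as the same blob, so reuse of modified code goes undetected and the measured reuse is likely a lower bound.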

5.2.3 Do the Hackathon Code Creators Foster Reuse? (RQ 2.d)

In order to investigate whether the hackathon project members who created a piece of code took any steps to foster its reuse, we focused on two specific aspects - if they themselves reused the code (blob) elsewhere and if they took any steps for sharing the code.Footnote 9 We gathered the responses from survey scenarios 1 and 2 where these two questions were asked to the participants.

Looking at whether the code creators reused the code (blob) themselves (Fig. 15), we notice that most of the participants either did not reuse the code or do not recall whether they reused it, though more participants from survey 2 (whose code was not reused as per our collected data) chose the former option. We did notice that a few participants said they reused the code at a different hackathon/at work/at school/in another project, and more participants from survey 1 (whose code was reused as per our analysis) indicated that they reused the code. It is worth noting, however, that some survey participants indicated that they reused the code even though our analysis indicated that their code wasn’t reused. The reason for this discrepancy could be that the code was modified before being reused or was not shared openly on GitHub, neither of which our analysis covers. This aspect is discussed in further detail in Section 7.

In terms of whether the participants shared the code (blob) with others or not (Fig. 16), a clear majority said they did not share the code, though the number is a bit higher for participants of survey 2. Some participants, however, indicated that they shared it with friends/colleagues or online, most of whom were participants from survey 1.