Abstract

Cloned code often complicates code maintenance and evolution and must therefore be detected effectively. One of the biggest challenges for clone detectors is to reduce the number of irrelevant clones they report, called false positives. Several benchmarks of true and false positive clones have been introduced, enabling tool developers to compare, assess and fine-tune their tools. In these benchmarks, clone candidates are inspected manually by raters who have no expertise in the underlying code. This way of building benchmarks might be unreliable for context-dependent clones, i.e., clones that are valid only for a specific purpose. Our goal is to investigate the reliability of rater judgments about context-dependent clones. We randomly select about 600 clones from two projects and ask several raters, including experts of the projects, to classify these clones manually. We observe that the judgments of non-expert raters are not always repeatable. We also observe that non-experts seldom agree with each other or with the expert. Finally, we find that the project, and whether a clone is a true or a false positive, might influence the agreement between the expert and the non-experts. Therefore, using non-experts to produce clone benchmarks could be unreliable.
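The abstract speaks of agreement and repeatability between raters without naming a statistic. One common way to quantify agreement between two raters who assign categorical labels (here, true or false positive) is Cohen's kappa, often read against the verbal scale of Landis and Koch (1977). The sketch below is an illustration under that assumption, not the authors' exact procedure; the functions `cohen_kappa` and `landis_koch` and the rater labels are hypothetical.

```python
from collections import Counter
from typing import Sequence


def cohen_kappa(rater_a: Sequence[str], rater_b: Sequence[str]) -> float:
    """Cohen's kappa for two raters who labelled the same clone candidates."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must label the same non-empty list of candidates")
    n = len(rater_a)
    # Observed agreement: share of candidates given the same label by both raters.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement, estimated from each rater's own label frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_e == 1.0:
        # Both raters used one identical label for every candidate.
        return 1.0
    return (p_o - p_e) / (1.0 - p_e)


def landis_koch(kappa: float) -> str:
    """Map a kappa value to the verbal scale of Landis and Koch (1977)."""
    if kappa < 0:
        return "poor"
    bands = [(0.20, "slight"), (0.40, "fair"), (0.60, "moderate"), (0.80, "substantial")]
    for upper, label in bands:
        if kappa <= upper:
            return label
    return "almost perfect"


# Hypothetical labels (TP = true positive, FP = false positive), not study data.
expert = ["TP", "TP", "FP", "FP", "TP", "FP"]
non_expert = ["TP", "FP", "FP", "FP", "TP", "TP"]

k = cohen_kappa(expert, non_expert)
print(f"kappa = {k:.2f} ({landis_koch(k)})")  # kappa = 0.33 (fair)
```

Applying the same function to one rater's first and second pass over the same candidates gives a rough measure of repeatability. With more than two raters judging the same candidates, a multi-rater statistic such as Fleiss' kappa would be needed instead.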

Notes

These studies give no information about the raters' expertise in the analyzed code.

References

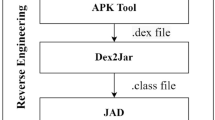

Baker BS (1995) On finding duplication and near-duplication in large software systems. In: Proceedings of the Second Working Conference on Reverse Engineering, WCRE ’95, pp 86–. IEEE Computer Society, Washington, DC, USA

Baxter I, Yahin A, Moura L, Sant’Anna M, Bier L (1998) Clone detection using abstract syntax trees. In: International conference on software maintenance, 1998. Proceedings, pp 368–377. doi:10.1109/ICSM.1998.738528

Bellon S, Koschke R, Antoniol G, Krinke J, Merlo E (2007) Comparison and evaluation of clone detection tools. IEEE Trans Softw Eng 33 (9):577–591. doi:10.1109/TSE.2007.70725

Bissyandé TF, Thung F, Wang S, Lo D, Jiang L, Réveillère L (2013) Empirical Evaluation of Bug Linking. In: Proceedings of the 17th european conference on software maintenance and reengineering (CSMR 2013), pp 1–10, Genova, Italy

Charpentier A, Falleri JR, Lo D, Réveillère L (2015) An empirical assessment of bellon’s clone benchmark. In: Proceedings of the 19th international conference on evaluation and assessment in software engineering, EASE ’15, pp 20:1–20:10. ACM, New York, NY, USA. doi:10.1145/2745802.2745821

Ducasse S, Rieger M, Demeyer S (1999) A language independent approach for detecting duplicated code. In: IEEE international conference on software maintenance, 1999. (ICSM ’99) Proceedings, pp 109–118. doi:10.1109/ICSM.1999.792593

Falleri JR, Morandat F, Blanc X, Martinez M, Monperrus M (2014) Fine-grained and accurate source code differencing. In: Proceedings of the international conference on automated software engineering, pp –. Sweden

Faust D, Verhoef C (2003) Software product line migration and deployment. Software Practice and Experience, vol 33. Wiley, pp 933–955

Gode N, Koschke R (2009) Incremental clone detection. In: 13th european conference on software maintenance and reengineering, 2009. CSMR ’09, pp 219–228. doi:10.1109/CSMR.2009.20

Ihaka R, Gentleman R (1996) R: a language for data analysis and graphics. J Comput Graph Stat 5(3):299–314

Jiang L, Misherghi G, Su Z (2007) Deckard: Scalable and accurate tree-based detection of code clones. In: ICSE, pp 96–105

Kalibera T, Maj P, Morandat F, Vitek J (2014) A fast abstract syntax tree interpreter for R. In: 10th ACM SIGPLAN/SIGOPS International Conference on Virtual Execution Environments, VEE ’14, Salt Lake City, UT, USA, March 01 - 02, 2014, pp 89–102. doi:10.1145/2576195.2576205

Kamiya T, Kusumoto S, Inoue K (2002) Ccfinder: a multilinguistic token-based code clone detection system for large scale source code. IEEE Trans Softw Eng 28 (7):654–670. doi:10.1109/TSE.2002.1019480

Kapser C, Anderson P, Godfrey M, Koschke R, Rieger M, van Rysselberghe F, Weis̈gerber P (2006) Subjectivity in clone judgment: Can we ever agree?. In: Duplication, Redundancy, and Similarity in Software, no. 06301 in Dagstuhl Seminar Proceedings. IBFI, Schloss Dagstuhl, Germany

Kapser C, Godfrey M (2006) cloning considered harmful considered harmful. In: 13th working conference on reverse engineering, 2006. WCRE ’06, pp 19–28. doi:10.1109/WCRE.2006.1

Koschke R, Falke R, Frenzel P (2006) Clone detection using abstract syntax suffix trees. In: Proceedings of the 13th working conference on reverse engineering, WCRE ’06, pp 253–262. IEEE computer society, Washington, DC, USA. doi:10.1109/WCRE.2006.18

Krutz DE, Le W (2014) A code clone oracle. In: Proceedings of the 11th working conference on mining software repositories, MSR 2014, pp 388–391. ACM, New York, NY, USA. doi:10.1145/2597073.2597127

Lague B, Proulx D, Mayrand J, Merlo EM, Hudepohl J (1997) Assessing the benefits of incorporating function clone detection in a development process. In: Proceedings of the international conference on software maintenance, ICSM ’97, pp 314–. IEEE computer society, Washington, DC, USA. http://dl.acm.org/citation.cfm?id=645545.853273

Landis JR, Koch GG (1977) The measurement of observer agreement for categorical data. biometrics:159–174

Li Z, Lu S, Myagmar S, Zhou Y (2006) Cp-miner: finding copy-paste and related bugs in large-scale software code. IEEE Trans Softw Eng 32(3):176–192. doi:10.1109/TSE.2006.28

Martinetz T, Schulten K (1991) A Neural-Gas Network Learns Topologies. In: Artificial Neural Networks, vol. I, pp 397–402

Mende T, Koschke R, Beckwermert F (2009) An evaluation of code similarity identification for the grow-and-prune model. J Softw Maint Evol 21(2):143–169

Nguyen HA, Nguyen TT, Pham N, Al-Kofahi J, Nguyen T (2012) Clone management for evolving software. IEEE Trans Softw Eng 38(5):1008–1026. doi:10.1109/TSE.2011.90

Roy C, Cordy J (2008) Nicad: Accurate detection of near-miss intentional clones using flexible pretty-printing and code normalization. In: The 16th IEEE international conference on program comprehension, 2008. ICPC 2008, pp 172–181. doi:10.1109/ICPC.2008.41

Roy C, Cordy J (2009) A mutation/injection-based automatic framework for evaluating code clone detection tools. In: International conference on software testing, verification and validation workshops, 2009. ICSTW ’09, pp 157–166. doi:10.1109/ICSTW.2009.18

Selim G, Foo K, Zou Y (2010) Enhancing source-based clone detection using intermediate representation. In: 2010 17th Working Conference on Reverse Engineering (WCRE), pp 227–236. doi:10.1109/WCRE.2010.33

Svajlenko J, Islam JF, Keivanloo I, Roy CK, Mia MM (2014) Towards a big data curated benchmark of inter-project code clones. ICSME:5

Walenstein A, Jyoti N, Li J, Yang Y, Lakhotia A (2003) Problems creating task-relevant clone detection reference data. In: 10th working conference on reverse engineering, 2003. WCRE 2003. Proceedings, pp 285–294. doi:10.1109/WCRE.2003.1287259

Wang T, Harman M, Jia Y, Krinke J (2013) Searching for better configurations: A rigorous approach to clone evaluation. In: Proceedings of the 2013 9th joint meeting on foundations of software engineering, ESEC/FSE 2013, pp 455–465. ACM, New York, NY, USA. doi:10.1145/2491411.2491420

Yang J, Hotta K, Higo Y, Igaki H, Kusumoto S (2014) Classification model for code clones based on machine learning. Empir Softw Eng:1–31

Additional information

Communicated by: Audris Mockus

Cite this article

Charpentier, A., Falleri, JR., Morandat, F. et al. Raters’ reliability in clone benchmarks construction. Empir Software Eng 22, 235–258 (2017). https://doi.org/10.1007/s10664-015-9419-z

DOI: https://doi.org/10.1007/s10664-015-9419-z