Abstract

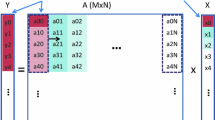

Recent integrated circuit technologies have made it possible to design parallel architectures with hundreds of cores on a single chip. The design space of these parallel architectures is huge, with many architectural options. Exploring it becomes even more difficult if, beyond performance and area, we also consider metrics such as performance efficiency and area efficiency, where the designer seeks the architecture with the best sustainable performance and the best performance per chip area. In this paper we present an algorithm-oriented approach to the design of a many-core architecture. Instead of exploring the design space of the many-core architecture based on experimental execution results for a particular set of benchmark algorithms, our approach is to analyze the algorithms formally with respect to the main architectural aspects and to determine how each aspect relates to the performance of the architecture when running an algorithm or set of algorithms. The architectural aspects considered include the number of cores, the local memory available in each core, the communication bandwidth between the many-core architecture and the external memory, and the memory hierarchy. To illustrate the approach, we carried out a theoretical analysis of a dense matrix multiplication algorithm and derived an equation that relates the number of execution cycles to the architectural parameters. Based on this equation, a many-core architecture was designed. The results indicate that a 100 mm² integrated circuit design of the proposed architecture, using a 65 nm technology, is able to achieve 464 GFLOPs (double-precision floating-point) for a memory bandwidth of 16 GB/s. This corresponds to a performance efficiency of 71 %. Considering a 45 nm technology, a 100 mm² chip attains 833 GFLOPs, which corresponds to 84 % of peak performance. These figures are better than those obtained by previous many-core architectures, except for the area efficiency, which is limited by the lower memory bandwidth considered. The results are also better than those of previous state-of-the-art many-core architectures designed specifically to achieve high performance for matrix multiplication.
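The figures quoted above can be cross-checked with a few lines of arithmetic. The sketch below is not the equation derived in the paper, which the abstract does not state. It takes only the reported GFLOPs, efficiencies, bandwidth, and chip area from the text; the function names are hypothetical, and the block-size estimate rests on a generic textbook model of blocked matrix multiplication (a b-by-b block of C updated from panels of A and B performs about b floating-point operations per word loaded), which is an assumption added here for illustration.

```python
import math

# Values reported in the abstract.
AREA_MM2 = 100.0          # chip area
BANDWIDTH_GB_S = 16.0     # external memory bandwidth (stated for the 65 nm design)
REPORTED = {
    "65 nm": {"gflops": 464.0, "efficiency": 0.71},
    "45 nm": {"gflops": 833.0, "efficiency": 0.84},
}

def implied_peak_gflops(sustained_gflops, efficiency):
    """Peak performance implied by a sustained figure and its efficiency."""
    return sustained_gflops / efficiency

def gflops_per_mm2(sustained_gflops, area_mm2):
    """Area efficiency: sustained performance per unit of chip area."""
    return sustained_gflops / area_mm2

def flops_per_byte(sustained_gflops, bandwidth_gb_s):
    """Operations that must be done per byte fetched from external memory."""
    return sustained_gflops / bandwidth_gb_s

def min_block_size(required_flops_per_byte, bytes_per_word=8):
    """Smallest block edge b under the ASSUMED textbook model in which a
    b-by-b block update performs b flops per word loaded (b / 8 flops per
    byte in double precision). Not taken from the paper."""
    return math.ceil(required_flops_per_byte * bytes_per_word)

if __name__ == "__main__":
    for node, r in REPORTED.items():
        peak = implied_peak_gflops(r["gflops"], r["efficiency"])
        density = gflops_per_mm2(r["gflops"], AREA_MM2)
        print(f"{node}: implied peak {peak:.1f} GFLOPs, {density:.2f} GFLOPs/mm2")

    # The 16 GB/s bandwidth is given for the 65 nm result only.
    intensity = flops_per_byte(REPORTED["65 nm"]["gflops"], BANDWIDTH_GB_S)
    print(f"65 nm: {intensity:.1f} flops per byte, "
          f"block edge of at least {min_block_size(intensity)} under the assumed model")
```

Under these assumptions, sustaining 464 GFLOPs from 16 GB/s requires heavy reuse of every word fetched, which is why the number of cores, the local memory per core, and the external bandwidth have to be sized together.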

Acknowledgments

This work was supported by national funds through FCT, Fundação para a Ciência e Tecnologia, under projects PEst-OE/EEI/LA0021/2013 and PTDC/EEA-ELC/122098/2010.

Cite this article

José, W.M., Silva, A.R., Véstias, M.P. et al. Algorithm-oriented design of efficient many-core architectures applied to dense matrix multiplication. Analog Integr Circ Sig Process 82, 147–158 (2015). https://doi.org/10.1007/s10470-014-0441-7

DOI: https://doi.org/10.1007/s10470-014-0441-7