Abstract

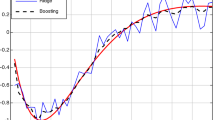

For likelihood-based regression, including generalized linear models, this paper presents a boosting algorithm for local constant quasi-likelihood estimators. Its advantages are as follows: (a) the one-boosted estimator reduces the bias of the local constant quasi-likelihood estimator without increasing the order of its variance; (b) each boosting step requires only a one-dimensional maximization; and (c) in some practical cases the resulting estimators can be written explicitly and simply.
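The abstract does not spell out the update rule, so what follows is only a minimal sketch under one plausible reading of it, not the authors' algorithm. It assumes a Poisson quasi-likelihood with log link, a Gaussian kernel `K` with bandwidth `h`, and a boosting step that adds a local constant correction `c` to the linear predictor `eta(x) = log mu(x)`, using the previous fit `eta_k(X_i)` as an offset. Under these assumptions step 0 maximizes `sum_i K_h(X_i - x) [Y_i a - exp(a)]` over the constant `a`, and each later step maximizes `sum_i K_h(X_i - x) [Y_i (eta_k(X_i) + c) - exp(eta_k(X_i) + c)]` over the single scalar `c`. Both maximizers have closed forms, which illustrates the kind of explicit estimator that point (c) refers to. The names `gaussian_kernel` and `boosted_poisson_fit` are hypothetical.

```python
import math
from typing import List, Sequence


def gaussian_kernel(u: float) -> float:
    """Standard normal density, used here as the smoothing kernel K (an assumption)."""
    return math.exp(-0.5 * u * u) / math.sqrt(2.0 * math.pi)


def boosted_poisson_fit(
    xs: Sequence[float],
    ys: Sequence[float],
    grid: Sequence[float],
    h: float,
    steps: int,
) -> List[float]:
    """Hypothetical sketch: estimate E[Y | X = z] for each z in `grid` under an
    assumed Poisson quasi-likelihood with log link. steps=0 returns the plain
    local constant fit; each further step adds one local constant correction
    to the linear predictor eta = log(mu).

    Assumes non-negative ys with at least one positive value near every point.
    """
    n = len(xs)
    # Design points come first: every boosting step needs eta_k at the X_i.
    points = list(xs) + list(grid)
    # weights[j][i] = K((X_i - z_j) / h) for evaluation point z_j
    weights = [[gaussian_kernel((xi - z) / h) for xi in xs] for z in points]
    # The numerators sum_i w_i Y_i do not change across steps, so compute them once.
    wy = [sum(w * y for w, y in zip(row, ys)) for row in weights]

    # Step 0: maximizing sum_i w_i [Y_i a - exp(a)] over a gives
    # a = log(kernel-weighted mean of Y), i.e. the log of Nadaraya-Watson.
    eta = [math.log(num / sum(row)) for num, row in zip(wy, weights)]

    for _ in range(steps):
        # Current fitted means at the design points, used as the offset.
        mu_design = [math.exp(e) for e in eta[:n]]
        # One-dimensional problem in c:
        #   maximize sum_i w_i [Y_i (eta_k(X_i) + c) - exp(eta_k(X_i) + c)],
        # whose maximizer is c = log(sum_i w_i Y_i / sum_i w_i mu_k(X_i)).
        eta = [
            e + math.log(num / sum(w * m for w, m in zip(row, mu_design)))
            for e, num, row in zip(eta, wy, weights)
        ]

    # Return fitted means on the requested grid only.
    return [math.exp(e) for e in eta[n:]]
```

For example, with `xs = [i / 200 for i in range(201)]`, noise-free responses `ys = [math.exp(x) for x in xs]` and `h = 0.1`, comparing `steps=0` with `steps=1` at `x = 0.5` shows the plain local constant fit overshooting `exp(0.5)` because of smoothing bias, while the one-step boosted fit lands much closer. Under the same assumed update with a Gaussian quasi-likelihood and identity link, `c` reduces to a kernel-weighted mean of the residuals `Y_i - mu_k(X_i)`, so the sketch collapses to repeated residual smoothing.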

About this article

Cite this article

Ueki, M., Fueda, K. Boosting local quasi-likelihood estimators. Ann Inst Stat Math 62, 235–248 (2010). https://doi.org/10.1007/s10463-008-0173-5
