Abstract

Interventions to support children with autism often include the use of visual supports, which are cognitive tools to enable learning and the production of language. Although visual supports are effective in helping to diminish many of the challenges of autism, they are difficult and time-consuming to create, distribute, and use. In this paper, we present the results of a qualitative study focused on uncovering design guidelines for interactive visual supports that would address the many challenges inherent to current tools and practices. We present three prototype systems that address these design challenges with the use of large group displays, mobile personal devices, and personal recording technologies. We also describe the interventions associated with these prototypes along with the results from two focus group discussions around the interventions. We present further design guidance for visual supports and discuss tensions inherent to their design.

1 Background and introduction

Kanner [28] first described autism after noticing the shared symptom of a general lack of interest in other people in a group of children who had previously been given various other labels, including simply mental retardation. Since Kanner’s recognition of “Early Infantile Autism,” the scientific and medical communities’ views of autism have changed dramatically, broadening to include other related disorders. Autism spectrum disorders (ASD) are a set of five conditions that begin early in life and often affect daily functioning throughout the lifetime. These disorders appear to affect different ethnic and socioeconomic groups similarly, though boys are nearly five times as likely as girls to be diagnosed with one of these disorders.

The diagnostic criteria for the five autism spectrum disorders (ASD), also known as Pervasive Developmental Disorders (PDD), are vast and complex and have evolved since they were first created in 1980 [2, 3]. They include impairments in social interaction, communication (both verbal and non-verbal), and stereotypical or repeated behavior, interests, and activities [3]. Autism is one of the five disorders that fall under this umbrella. The Autism Society of America defines autism as “a complex developmental disability that typically appears during the first 3 years of life and is the result of a neurological disorder that affects the normal functioning of the brain, impacting development in the areas of social interaction and communication skills” (Footnote 1). In the common vernacular, autism is also a term used to describe the entire group of complex developmental disorders included in ASD. For the sake of simplicity in this article, we will primarily use the term autism and note that the population for whom we have been designing interventions and technological tools primarily have autism diagnoses, but in some cases, they have other ASD diagnoses. Furthermore, we believe that many of the interventions and tools described here would apply well to individuals on the autism spectrum who do not necessarily have an autism diagnosis.

In recent years, the Centers for Disease Control (CDC) in the United States responded to the growing rates of individuals with ASD diagnoses through a variety of initiatives including the creation of an Autism and Developmental Disabilities Monitoring (ADDM) Network. In 2007, the ADDM Network issued its first reports, describing studies from 2000 to 2002. These reports indicated an average of 1 in 150 children affected by an ASD [5, 6]. A recent report by this body using data from 2006, however, indicated a rise in prevalence to 1 in 110 children: 1 in 70 boys and 1 in 310 girls [7]. Although the rise in prevalence of diagnosis is likely due in part to a variety of factors that are not related to an actual rise in prevalence of the disorders (e.g., change in diagnostic criteria, increased vigilance, political pressures), most experts, parents, advocates, and other stakeholders argue that the rise also has significant epidemiological meaning and that we may in fact be in the middle of an epidemic.

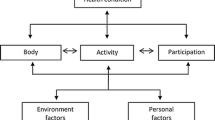

Interventions to support individuals with autism typically begin very early in life—immediately after diagnosis—and often include the use of a wide variety of visual tools. These artifacts draw on words, images, and tangible objects to represent both concrete and abstract real-world concepts. Use of these visual artifacts has been shown to reduce the symptoms associated with cognitive, communication, and social disabilities, in particular for individuals with autism spectrum disorder (ASD) [9]. These visual supports are used frequently to encourage communication and learning in children.

The inherent communicative nature of educational settings makes learning challenging with limited verbal communication. Thus, interventions to support education and learning for individuals with cognitive disabilities often include visual artifacts that demonstrate language. Visual supports are “those things we see that enhance the communication process” [22] and can be an incredible aid for children learning about the world around them.

Visual supports can be the kinds of things that we see in everyday life to support communication, such as body language or natural cues within the environment [22]. They can also be tools explicitly created to support individuals who may have trouble interpreting naturally occurring visual cues (Fig. 1). These constructed artifacts sometimes use images or tangible objects to represent simple everyday needs and elements of basic communication [9]. In these cases, visual supports are used to augment communication, in much the same way that sign language can be a visual representation of language for someone with a hearing impairment. High-tech devices for augmentative and alternative communication can also help children with special needs build language skills over time [22]. These tools typically include speech-generation functionality, eye tracking, and other advanced features, such as those shown in the DynaVox suite of devices (Footnote 2). In other cases, these artifacts represent activities that will take place (or have taken place) arranged in temporal order to augment understanding of time, events, and places, a tool known as a visual schedule [34]. Visual supports have been shown to reduce the symptoms associated with ASD [22].

Paper-based visual supports. (left, counterclockwise from far left) Rewards charts are used to help students visually track their progress and successes; books of small images can be used to provide a mobile form of visual communication; notebooks with Velcro strips on the outside often serve as a platform on which to visually represent a choice. (right) Some example images used in visual communication

Despite their impressive benefits, use of visual supports continues to be difficult for many teachers, parents, and other caregivers. There are significant challenges to the use of these analog, and largely paper-based, tools. First, these tools must provide support for children with ASD to improve their communication skills and social skills. Second, they must be flexible enough to support each unique child now and as the child develops. Finally, caregivers often struggle to create, use, and monitor the effectiveness of these tools. Thus, these tools must support the children for whom they are designed while placing minimal burden on caregivers and helping those caregivers accomplish their own goals.

Further, compounding the challenges of implementing an augmentative communication intervention is the extra burden these interventions can place on a family. Chronic illness and disabilities in children typically require the family to play a more significant role than in other situations [14]. Family members jointly suffer from time spent away from school and work, loss of sleep, and time spent in transit to or at physicians’ offices and hospitals [40]. Thus, as opposed to a more traditional assistive technology model that focuses solely on the primary user, we draw on Dawe’s notion that caregiver engagement and ease are fundamental to the adoption of assistive technologies [10]. The long-lasting nature of autism and other developmental disabilities along with the relatively untested nature of the myriad of interventions available means that caregivers must often document diagnostic and evaluative measures over decades. Not only must symptoms, interventions, and progress be documented over very long periods of time, but also they must often be recorded in the middle of everyday life, complete with the challenges of documenting while doing a wide variety of other activities.

Ubicomp technologies are particularly promising for the development of advanced visual supports that address these myriad challenges. Automated capture and access applications [1, 42] can enable monitoring of effectiveness of interventions without significant caregiver effort. Health and behavioral data can be captured, analyzed, and mined over time providing valuable evidence for tracking the progress of interventions [18]. Likewise, large group displays—particularly when integrated with smaller mobile displays—can be leveraged to augment and enhance current practices for displaying educational materials and engaging with students in classrooms. These devices can be used as augmentative communication tools for improving communication and social skills.

In this paper, we present a qualitative study focused on the needs of caregivers. Based on these results, we describe the design of three interventions surrounding novel interactive visual supports that address the needs of the various stakeholders, particularly in terms of communication, record-keeping, visualization, and assessment of interventions. Finally, we present results from focus group discussions with experts in autism, education, and neurodevelopment centered on our novel technological interventions. During these discussions, experts acted as proxies for children with autism using their own experiences and training, to enable a user-centered design process without requiring the children themselves to engage with the prototypes, which could be particularly taxing for this population. This paper advances the state of the art in ubiquitous computing for health care and education, particularly in relation to the need for and design of visual supports for children with autism and other developmental disabilities.

2 Related work

Children with special needs are increasingly using computers for a variety of tasks and activities. However, designing for children, even those who are neurotypical, can be extremely challenging. Children develop and change mentally, emotionally, and physically at a rapid pace. They are particularly vulnerable in terms of safety and ethical considerations. At the same time, computational tools can be significant enablers, particularly for children with special needs. Hourcade [24] provides a thorough overview of the issues and theories surrounding design for children, a scope too large for this paper. In this section, however, we review some projects that are most closely related to this work.

In our past work, we described social and technical considerations in the design of three capture and access technologies for children with autism (2004). The social issues included the cyclical nature of caring for a child with a chronic condition, the need for rich data to document progress, the requirement to collect these data through minimal effort on the part of caregivers, and concerns about privacy and the financial cost of new systems. In the work we present here, we considered these issues and ensured that all of the tools we developed could adapt over time and provide feedback in a cyclical manner for iterative care and education. Also, all of the prototype systems we explored collect rich data automatically with minimal user intervention and an explicit focus on the safety and privacy of both the children and caregivers who might be engaged with our systems. Finally, for the systems we designed and prototyped, we used primarily off the shelf, low-cost components that could be available to schools within their educational and assistive technologies budgets.

Three years later, building on this work, Kientz et al. [30] described four design challenges for creating ubicomp technologies for children with autism. They noted the importance of understanding the domain, making system installation and changes invisible, keeping the technology simple and straightforward, and enabling customization and personalization of interfaces. In our work, we were also concerned with these issues, spending substantial time in classrooms to understand the domain uses of visual supports. Furthermore, the concept of integrating data already in existence figured tightly into our participatory design process, in which we used the images and activities already in use in classrooms to seed the design of the technological artifacts. Finally, we considered issues of customizability and personalization, as described further both in the descriptions of the prototypes and in Sect. 5, in which we describe the substantial feedback we received from participants about those issues.

Our designs incorporate lessons from other technologies that have been targeted toward children with ASD for building communications and social skills. For example, Sam is a virtual peer that uses story-authoring features to help develop these skills [41]. SIDES is a tool for helping adolescents with Asperger’s Syndrome practice effective group work skills using a cooperative game on a tabletop computer [37]. In these works, the researchers found that computational agents can serve educational roles in the development of social skills for this population.

3 Methods

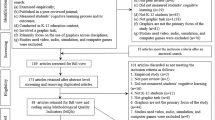

We have taken a mixed-methods approach to understanding visual supports for children with ASD. We made use of previous research led by the first author, including a multi-year ethnographic study of caregivers of children with autism [18], focus groups centered on children with autism spectrum disorder and their caregivers [17], and an in situ study of the deployment of a new ubiquitous computing technology for classroom behavior management of children with special needs [19]. Building on these results, we then undertook a qualitative field study to understand the needs of students and teachers in special education classrooms with a specific focus on visual communications and education tools. We worked with three schools in the Orange County, California area: an Interagency Assessment Center for children from 18 months to 3 years old from across the county, a special education preschool, and an integrated elementary school that hosts regular education classrooms, general special education classrooms, and autism-specific classrooms.

First, we interviewed three experts in assistive technology and classroom management. We then observed use of visual supports in nine special education classrooms across these schools, collecting sample artifacts from each classroom. We interviewed ten educators at those sites for approximately one hour each. Copious field notes were taken of the interviews and observations and analyzed collectively by the research team for emergent themes and design considerations.

Following this initial fieldwork, we assembled a participatory design [39] team that included a teacher, an autism specialist, designers, and ubicomp researchers. We conducted iterative design sessions both at our university and on site at one school. Although including children is appropriate in many situations in which people are designing for and with children [11], we did not include children themselves in these sessions. Rather, we relied on the input of experts who work with the children regularly and on artifact analysis of current visual supports, due to the challenges inherent to obtaining input from children with ASD, many of whom have substantial difficulty in verbal communication [30]. Throughout this process, we designed two new prototype visual supports and developed a visual support intervention around an existing technology. We describe these interventions in Sect. 4.

We then presented these three technological interventions during two focus group demonstration sessions (n = 13 and n = 8). Participants in these focus group discussions included neuroscientists, special educators, assistive technology specialists, and private therapists but again not the children themselves. During these sessions, participants first joined in a general group discussion about the technologies and their accompanying interventions. They then moved freely as individuals or in small groups through a series of “stations” at which each intervention was described in detail, and participants could interact with the technologies directly. Finally, participants joined together again for a group discussion of the specific interventions as well as considerations for the future. The two sessions lasted 120 and 150 min, respectively. During the sessions, each member of the research team took copious notes, which were merged and analyzed by the group after the sessions.

The themes that emerged from the focus group discussions were merged with data from the previous studies and analyzed collectively. The focus group discussions, which centered on the prototype interventions we had designed, often echoed data that had been collected in earlier phases but could not be fully understood or integrated into the design process without the presence of, and interaction with, the systems. In this way, the systems themselves became tools for the empirical work (technology probes), in much the same way that other instruments (interview questions, surveys, sketches) are [25].

4 Prototype technological interventions

Based on our fieldwork, interviews, and participatory design sessions, we determined three particular areas of focus: mobile communication support, visual schedules, and child-generated media. These particular focus areas were chosen for a variety of reasons, but three in particular led our decisions. First, these domain needs represented some of the most commonly used or requested assistive technologies for the teachers and caregivers with whom we were working. Second, they were particularly amenable to the possibilities enabled by ubiquitous computing technologies. Third, these application areas represented some of the most flexible and adaptable potential technological solutions, a requirement for our secondary goal of using these prototype tools in focus group discussions and eventually in field trials as technology probes to garner further empirical data [25].

To support these three focus areas, we iteratively created two new prototype visual supports: Mocotos, a mobile visual augmentative communication aid, and vSked, a multi-device interactive visual schedule system. These tools are based in part on analog tools already in place, and thus the interventions and curriculum surrounding these tools were modified to include the new features available for the tools. Furthermore, we designed a new communication intervention that makes use of a ubicomp technology already in existence, the Microsoft SenseCam [23]. In this section, we describe the particular challenges inherent to these domain areas as well as the design for the tools and interventions to address these challenges.

4.1 Mocotos: mobile communication tools

Current analog visual communications tools vary greatly from classroom to classroom, and even from child to child. Each teacher we observed customized the tools in use in her classroom—including the shape, size, type of materials, organization, configuration, and so on (see Fig. 2). Thus, when we were considering the design of new mobile communication tools, we recognized they must provide the added benefits afforded by digital technologies (e.g., automatic data logging, remote collaboration) and support the flexibility and customization teachers have already with their analog tools in classrooms. Further constraining the design space are the physical abilities and disabilities of the children who will use these tools. Many of the children we observed, in particular preschool aged, had only crude motor skills. Thus, some child users may not be able to accurately point to a small object on a display, while others lack the ability to press down rigid buttons. New technologies, such as the capacitive screens on many small touch-screen devices, provide new avenues for interaction and thus became a central focus of our technological design considerations.

(top left) A visual prompt to be shown to a child upon requesting that child to “clean up;” (top center) Options to answer a question about today’s weather; (top-right) An oversized button that plays a recorded sound when pressed for mediated speech functionality; (bottom) A “communication wallet” carried by a child containing a subset of frequently used cards

We also explored the current state of the art in digital assistive technologies in classrooms. A consistent theme in our interviews, however, was the relative bulk and difficulty in handling these devices, in particular for a mobile child-centric model. Furthermore, the configuration and customization—or end-user programming—of these devices was hugely taxing. One expert in assistive technology reported that the programming for a single child for a few months of use could require 8–10 h of work. These findings indicate a huge need for flexible and intuitive interfaces that are much simpler to use and adapt.

Visual communication tools take a variety of forms, from small single picture low-tech cards to advanced computational systems that perform text to speech functionality. The most widely used augmentative visual communication tools in the classrooms we observed are simple laminated pictures with Velcro backs depicting various objects or activities or concepts (Fig. 2). Based on our observations, we defined four categories of use for these tools (see Fig. 2):

- Prompting: During a specific task, the teacher may use a card as a supplementary visual prompt.
- Selection: Options are presented to the child as cards when they must answer a question.
- Mediated Speech: A visual card can be placed on an electronic audio device, allowing the child to choose the image and play a recording.
- Basic Communication: A child may carry a device or collection of cards to communicate needs. A method for this type of interaction is described in [4].

A massive array of materials, devices, and methods surrounds these analog methods for visual communication. Unfortunately, there are many problems inherent to the cards themselves. Teachers and caregivers struggle to manage the large number (typically in the hundreds) of cards being used. Likewise, they must invest significant effort to create the cards. Commercial vendors, such as BoardMaker™, sell sets of prefabricated cards, but these are not flexible enough to meet the needs of many of the caregivers with whom we worked, who instead often opted to create custom cards from physical artifacts or digital imagery. Finally, these paper-based visual tools often have to be used in conjunction with particular devices and for particular (and varied) activities. Each device often serves a different purpose, operates differently, and can require custom configuration. Thus, although these tools are incredibly useful for caregivers, a more flexible single system was desired if it could be used as successfully as these single-purpose tools.

There are several advanced digital technologies for augmentative communication (e.g., GoTalk, Tango, Dynavox, Activity Pad). The teachers and experts we interviewed listed a variety of concerns with these technologies, from usability to lack of flexibility. Furthermore, these devices typically require professional training and expertise, making it difficult for many parents to use them at home. They also carry price tags that most lower and middle-class families cannot afford. In our designs, we were focused on reducing the barrier to entry for these technologies by using familiar platforms, like the mobile phone, and simple end-user programming to create flexible but customized interfaces.

Mocotos are augmentative communication devices that support visual communication strategies, such as the Picture Exchange Communication System (PECS) [4]. These communication strategies typically involve children either initiating communication by choosing particular images or responding to a communicative prompt of images presented as choices. Our prototype system includes a portable device not much larger than popular cell phones, the Nokia N800 (see Fig. 3). Both children and adults can use the touch screen on the device for interactions. Adults can also use a computer-based interface for organizing images, uploading new images, and generally managing the library on the device.

The primary interface metaphor consists of virtual picture cards. Mocotos come with a preinstalled comprehensive library of cards. These cards include the standard iconography used throughout PECS and other visual communication strategies. Despite the nearly universal use of this standard iconography in special education classrooms, our fieldwork also revealed the typical practice of photographing common objects or people, uploading the photographs to the computer, printing and laminating them, and eventually making use of these custom real-world cards. Thus, caregivers frequently wind up with massive binders of cards to use with different children for different activities (see Fig. 4, left). Using Mocotos, caregivers can add custom cards to the interface by taking pictures using the built-in camera (see Fig. 4, right), importing digital images from a standard memory card, or tethering the device to a computer. Cards can have multiple audio cues assigned to them; these cues may be either recorded through the on-board microphone or synthesized using the built-in text-to-speech function. Each card includes both a name and other customizable metadata, which enable categorization, searching, and management, providing rapid access to the library of virtual cards and real-time, ad hoc setup of new activities (see Fig. 5).
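To make the card metaphor concrete, the following is a minimal sketch of how such a library might be modeled. It is not the Mocotos implementation: the class names, field names, and file paths are hypothetical, and the limit of one to eight choices is taken from the choice layouts described for caregiver-initiated communication.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Card:
    """One virtual picture card. All field names are hypothetical."""
    name: str
    image_path: str
    tags: set[str] = field(default_factory=set)          # customizable metadata
    audio_cues: list[str] = field(default_factory=list)  # recorded or synthesized cues


class CardLibrary:
    """In-memory card library supporting search and ad hoc choice boards."""

    def __init__(self) -> None:
        self._cards: dict[str, Card] = {}

    def add(self, card: Card) -> None:
        self._cards[card.name] = card

    def search(self, query: str) -> list[Card]:
        """Return cards whose name or tags contain the query, sorted by name."""
        q = query.lower()
        hits = [
            c for c in self._cards.values()
            if q in c.name.lower() or any(q in t.lower() for t in c.tags)
        ]
        return sorted(hits, key=lambda c: c.name)

    def choice_board(self, names: list[str], max_choices: int = 8) -> list[Card]:
        """Assemble a board of 1 to max_choices cards for a single prompt."""
        if not 1 <= len(names) <= max_choices:
            raise ValueError(f"a board needs between 1 and {max_choices} cards")
        return [self._cards[n] for n in names]


# Hypothetical usage: a caregiver adds custom cards and sets up a snack choice.
library = CardLibrary()
library.add(Card("apple", "img/apple.png", {"food", "snack"}, ["apple.wav"]))
library.add(Card("juice", "img/juice.png", {"drink", "snack"}))
library.add(Card("clean up", "img/cleanup.png", {"prompt"}))
snack_cards = library.search("snack")
board = library.choice_board([c.name for c in snack_cards])
```

Keeping metadata as free-form tags is one way to let a caregiver categorize the same card under several activities without duplicating it.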

During caregiver-initiated communication, caregivers set up communication choices using the library of “cards” and can offer as few as one choice for directed instruction or as many as eight choices for advanced children (left). The students then make their choices by pushing the appropriate card, which then invokes sound output and optional visual output (center with four choices and right with six)

The prototype system is designed to have the flexibility to handle multiple functions currently supported by different devices inside the classroom. Use of custom audio cues for the cards, flexible layout of the cards (in size and number) on the screen, and custom feedback assignment to input are designed to enable the use of Mocotos to support a variety of communication types, from highly structured communication during an educational activity to unstructured spontaneous utterances.

4.2 vSked: interactive and intelligent visual schedules

The structure needed to reduce anxiety and support better self-organization around time and activities for individuals with autism spectrum disorder (ASD) and other special needs is often provided through visual schedules. “Visual schedules display planned activities in symbols (words, pictures, photograph, icons, actual objects) that are understood in the order in which they will occur” [27]. They present the abstract concepts of activities and time in concrete forms by using pictures, words, and other visual elements to describe what will happen, in what order, and where. They have been used successfully in classrooms, homes, and private practices to address difficulties with sequential memory, organization of time, and language comprehension and to lessen anxiety [22, 35, 38]. In schools, visual schedules can assist students with transitioning independently between activities and environments by telling them where to go and helping them to know what they will do when they get there. By providing structure, visual schedules reduce anxiety and support behavior intervention plans focused on students with severe behavior problems. They can also support individuals with less severe disabilities in entering the workplace by providing external direction for common workplace phenomena.

Visual schedules can be used at the micro level, supporting tasks broken down into sub-elements. For this type of activity, many experts advocate a “First this… Then this…” structure. This structure serves to augment the understanding of time and activities by showing both the sequence of events that compose a larger activity and demonstrating visually a reward or enjoyable event at the end of the task. For example, “Handwashing” can be represented by “First turn on the water and then place your hands under the water.” A caregiver might end the “handwashing” sequence with a picture of dinner, indicating that once “handwashing” is completed, the enjoyable activity of eating dinner will take place. The First/Then structure can also be used at a more macro level as in “First work, then play.” Similar structures are present in other interventions for persons with memory impairments, such as those suggested by Labelle and Mihailidis [31]. An important distinction between visual schedules and those projects, however, is the ultimate goal of using visual schedules. This intervention technique serves not only to augment the ability of individuals to manage the situations to which it is applied, but ultimately it is also intended to teach persons affected by these disabilities to self-manage and understand sequencing of events and time in a more generalized sense.

Because the information must be kept up to date—an extremely onerous task—and the schedules themselves tend to be more effective when they are engaging to the individuals using them, the traditional pen and paper “low-tech” assistive technology approach can be improved. In this work, then, we were focused on making these schedules even more useful and successful with the addition of interactive and intelligent computing technologies.

vSked is an interactive system that augments and enhances visual schedules. Visual schedules present the abstract concepts of activities and time in concrete forms by using pictures, words, and other visual elements to describe what will happen, in what order, and where (see Fig. 6). They have been used successfully in classrooms, homes, and private practices to address difficulties with sequential memory, organization of time, and language comprehension and to lessen anxiety [9]. By providing structure, visual schedules reduce anxiety and support behavior intervention plans focused on students with severe behavior problems (ibid).

Analog visual schedules. (left) A shared classroom calendar is used to show the activities of the day. An individual student helper for each activity is represented by a spider with the student’s name. Spiders were in use at that time because it was October, and spiders are associated with Halloween in American folklore. (center and right) Individual student schedules include representations for each activity of the day attached via Velcro. Students remove each item as an activity is beginning or ending. Students not present are represented by a schedule with no activities, such as the one in the center here

The vSked system assists teachers in managing their classrooms by providing interfaces for creating, facilitating, and viewing progress of classroom activities based around an interactive visual schedule. vSked includes three different interfaces: a large touch-screen display viewable by the entire classroom, a teacher-centric personal display for administrative control, and a student-centric handheld device for each student (see Fig. 7). The large touch screen, placed at the front of the classroom, acts as a master timetable containing visual schedules for all students. The current activity, denoted by being at the top position, can be activated by the teacher, which in turn starts the activity on the networked students’ handheld devices in the form of choice boards. The choice boards communicate with the large screen, enabling rewards to be delivered locally to students with the correct answers. Likewise, students responding incorrectly or not responding at all receive a prompt to help them identify the correct response, potentially freeing up the teachers to provide help to the students who need more attention. Upon successful completion of a task, each student is presented with a reward chosen specifically for that student, such as an animation of a train traveling across the screen. Prompting students and providing rewards are used in combination in every special education classroom we have visited and are common instructional techniques both in schools and in private therapies for children with autism.
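The reward and prompt behavior described above can be sketched roughly as follows. This is an illustration under stated assumptions, not the vSked code: the `Student` and `Activity` types, the `handle_response` function, and the shape of the returned event are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class Student:
    name: str
    reward: str  # reward chosen specifically for this student (hypothetical field)


@dataclass
class Activity:
    title: str
    choices: list[str]
    correct: str


def handle_response(student: Student, activity: Activity, answer: Optional[str]) -> dict:
    """Decide what the handheld shows and what is echoed to the shared display."""
    if answer is not None and answer not in activity.choices:
        raise ValueError(f"{answer!r} is not on the choice board")
    if answer == activity.correct:
        action, detail = "reward", student.reward
    else:
        # An incorrect answer or no answer at all leads to a prompt, not a reward.
        action, detail = "prompt", activity.correct
    return {
        "student": student.name,
        "activity": activity.title,
        "action": action,
        "detail": detail,
    }


# Hypothetical usage: one student answers a weather question three times.
weather = Activity("Today's weather", ["sunny", "rainy", "cloudy"], correct="sunny")
student_a = Student("Student A", reward="train animation")
events = [
    handle_response(student_a, weather, "rainy"),  # incorrect answer
    handle_response(student_a, weather, None),     # no response
    handle_response(student_a, weather, "sunny"),  # correct answer
]
```

Returning a plain event record, rather than drawing directly, is one way the same outcome could be shown on the handheld and echoed on the large display.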

(left) A student sits at his desk during individual work time, while the large display indicates that everyone is working. (top-right) The large classroom display showing multiple children’s schedules at once. In this case, the schedules are all the same, but that is not necessarily true in all cases. (bottom right) An individual student’s vSked device showing the first activity of the day, picking a reward toward which the child will work

Once all students have completed a task, the schedule automatically advances. If the students have not all completed the task, but its scheduled completion time is drawing near, the teacher can configure the system to provide a prompt to her either on the large shared display or on her own private display.
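The advancement behavior described above can be illustrated with a minimal sketch. It is not the vSked implementation: the `ActivityState` fields, the `next_step` function, and the five-minute warning threshold are all hypothetical names and values chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class ActivityState:
    """Hypothetical snapshot of the current activity on the schedule."""
    students_done: int        # students who have completed the task
    students_total: int       # students taking part in the activity
    minutes_remaining: float  # time left before the scheduled end


def next_step(state: ActivityState, warn_minutes: float = 5.0) -> str:
    """Decide what the schedule should do next.

    Returns 'advance' when every student has finished, 'prompt_teacher'
    when the scheduled end is near and someone is still working, and
    'wait' otherwise. The threshold is an assumed, teacher-set value.
    """
    if state.students_total > 0 and state.students_done >= state.students_total:
        return "advance"
    if state.minutes_remaining <= warn_minutes:
        return "prompt_teacher"
    return "wait"
```

In such a sketch, where the teacher prompt appears (the shared display or the private display) would be a separate configuration setting and is left out here.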

Using a combination of shared large displays for the whole class and smaller networked displays for individual children, new interaction models are enabled in classrooms, including social and peer learning as well as more efficient and rapid feedback for students and staff about individual progress and abilities. For example, student progress and rewards are echoed on the shared display, thereby alerting students and teaching staff alike to students who may be struggling so that they can be proactive in their help.

Finally, a significant need expressed throughout the years of previous ethnographic work as well as during this more recent focused study is that of documenting and reporting progress. With current analog scheduling systems, it is extremely difficult if not impossible for teachers to document all of the activities and progress in the classroom. In vSked, every interaction is logged and mineable. Thus, teachers can generate reports on individual student progress or that of the entire class across individual activities or many. These reports can be generated daily, weekly, monthly, or yearly, and different templates are supplied for each of these potential lengths of time (Fig. 8).

vSked includes a “Teacher View” to enable custom configuration of the interface, reporting, and so on. (left) The students tab allows for the addition of student information, shown here with characters rather than students due to the sensitivity of the information on this tab. (right) Reports can show how many independent actions were taken, the prompts that are most successful, reward choice over time, and so on
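To make the reporting idea concrete, the following sketch shows one way that logged interactions could be grouped into daily, weekly, monthly, or yearly counts of independent (unprompted) actions. The log schema, the function names, and the period labels are assumptions made for illustration; the actual vSked log format is not described here.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date
from typing import Dict, Iterable, Optional


@dataclass(frozen=True)
class LogEntry:
    """Hypothetical record of one logged student interaction."""
    day: date
    student: str
    activity: str
    prompted: bool  # True if the response followed a prompt


def period_key(day: date, period: str) -> str:
    """Map a date to the label of the reporting period that contains it."""
    if period == "daily":
        return day.isoformat()
    if period == "weekly":
        year, week, _ = day.isocalendar()
        return f"{year}-W{week:02d}"
    if period == "monthly":
        return f"{day.year}-{day.month:02d}"
    if period == "yearly":
        return str(day.year)
    raise ValueError(f"unknown period: {period}")


def independent_actions(
    log: Iterable[LogEntry], period: str, student: Optional[str] = None
) -> Dict[str, int]:
    """Count unprompted actions per period, for one student or the class."""
    counts: Counter = Counter()
    for entry in log:
        if student is not None and entry.student != student:
            continue
        if not entry.prompted:
            counts[period_key(entry.day, period)] += 1
    return dict(counts)
```

Passing `student=None` summarizes the entire class, whereas passing a name restricts the report to one child. Other measures mentioned in the caption above, such as reward choice over time, could be tallied in the same way.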

4.3 SenseCam: automatic recording of everyday images

Many children with autism are unable to speak and communicate with parents and teachers verbally; hence they are referenced as being “non-verbal.” As mentioned in the introduction, for non-verbal children with autism, early and consistent intervention is a key component to improving their abilities to communicate and to learn. In turn, for all children with special needs, educational interventions that work toward skill development and independence can improve quality of life. In conducting these interventions, and in fact just in caring for children with special needs in general, a major struggle for caregiver networks is keeping in touch with one another about progress, alterations to treatment, and so on [18]. We have seen in previous work that video can be a powerful tool for enabling caregiver communication and collaboration [17, 19]. Additionally, pictures can support and enable new forms of communication between non-verbal children and their caregivers directly, based on evidence that non-verbal children can and do communicate via pictures already [8]. In these interventions, picture-based communication is enabled through heavyweight manual processes that often do not emulate “real life” images but instead use cartoons and other abstracted images, such as those supported by the Mocotos and vSked prototypes. Thus, we also wanted to explore the use of a child-led, automatic media generation model in which the imagery in use is actually taken from the child’s point of view but without manual burden to the child. In this way, we were able to explore the use of photo-realistic visual supports that most closely mimic what the child him or herself sees.

The Microsoft SenseCam provides an ideal platform for exploring the potential for automatically generated, situated, and contextualized picture-based communication and therapy. SenseCam is a wearable digital camera designed to take photographs of everyday life without user intervention, while it is being worn [23]. Images of everyday activities from the perspective of the individual wearing the camera can be useful visual supports. SenseCam is unlike typical cameras, such as digital cameras and camera phones. It does not have a viewfinder or a display. Therefore, to ensure that interesting images are captured, it is fitted with a wide-angle (fish-eye) lens that maximizes its field-of-view. This lens allows the camera to capture nearly everything in the wearer’s view. It has multiple electronic sensors, including a light sensor, temperature sensor, and accelerometer, which enable SenseCam to automatically capture interesting images at certain changes in sensor readings (Fig. 9).

In this work, in cooperation with the autism experts on our design team, we developed an intervention involving the use of SenseCam at school and in the home. Our intervention builds on past work examining the privacy considerations of SenseCam use for children [26] as well as considerations for secondary stakeholders—those who might be recorded by SenseCam [36]. As such, in the design of our intervention, we considered such issues as control and misuse of images, appropriateness of environments for recording, and perceptions and understanding of recording. Caregivers are encouraged to view and delete any images from the photostream they do not wish to share as well as to develop a routine around when the device will and will not be used. In addition, we integrated the feedback and considerations of the parents and caregivers involved in the participatory design and focus group phases of this work. Echoing Nguyen et al.’s findings, people reported being generally willing to incur any risks to their own privacy and any loss of control of their own data to help a child with a severe disability. In contrast to Nguyen et al.’s findings and in concert with Iachello and Abowd’s results, however, the particular constraints of SenseCam use in schools, in large part due to the FERPA regulations present in the United States [13], meant that the intervention had to be redesigned to include a step in which teachers remove any images showing the faces of other children in the classroom before they can be sent home to the parents unless permission to share images is already on file for those children. This additional requirement seems to indicate that de-identifying children’s faces would be a promising solution. However, as Hayes and Abowd [17] found with regard to video images, caregivers in this study largely reported that such images would not be useful. Thus, the intervention on which we finally settled is focused more on the home and private clinics than on use in the public school system.

The intervention requires that the child wear SenseCam for all or part of a typical day. Parents and caregivers at home can then review photographs captured during private therapies or in their own or other people’s homes, and teachers and school staff can review photographs captured outside of school. Additionally, children and caregivers review images together to aid in creating visual social stories that are a part of communication and speech therapy [16]. Caregivers make use of the SenseCam viewing interface to pause the picture stream, ask questions, and so on. In this way, the recorded pictures both serve as a type of log, enabling improved communication between home and school, and as a platform through which to conduct communication therapy with the child.

Certainly, it might also be interesting to create an intervention that focuses on someone other than the child with autism wearing SenseCam. We explicitly avoided such an intervention, however, because it is actually very similar to what teachers and parents can and often already do accomplish using cameraphones and other small, mobile digital cameras. Instead, here, we were interested in how child-generated media might be of use in helping these students to find their own “voice” and perspective on the world.

4.4 Relation of our designs to relevant literature

Other researchers have been developing tools to support children with autism that are related to the three interventions we describe here. Particularly related to the Mocotos prototype is Leo and Leroy’s implementation of the Picture Exchange Communication System (PECS) on a Windows SmartPhone [32]. The design of that software is tightly bound to a specific realization of PECS and has been successfully used by children already accustomed to PECS. One of the challenges of use of that tool, however, is the somewhat restrictive nature of PECS. Many of the experts we interviewed expressed the need for real-time updating of the picture library, the use of audio cues and other media (even video), and a generally more flexible communications standard that does not necessarily require the training in a particular technique advocated by therapists teaching the PECS method. Thus, with Mocotos, we explicitly targeted a more flexible interaction. For example, the incorporation of audio feedback expands its use to mediated speech applications as opposed to solely visual communication.

SenseCam is not the only wearable camera that has been used to help those with ASD. el Kaliouby and Teeters [12] used a wearable camera to process spontaneous facial expressions of the wearer. The system used facial images to help individuals with ASD understand social and emotional cues. In contrast, instead of capturing images of the wearer, SenseCam captures images of the wearer’s surroundings.

5 Results

Through fieldwork, interviews, participatory design sessions, and focus group discussions, we designed, developed, and evaluated three novel ubicomp visual supports. In this section, we describe the results of these efforts, both in terms of their evaluation of our interventions and in terms of design implications for the creation of ubicomp technologies in support of children with ASD. We place particular emphasis here on the results of the focus group evaluation. However, as noted in Sect. 3, it is impossible in such an interactive and iterative design process to completely tease out results that originated in these sessions from those that came about in our discussions with design partners and through our early interviews and fieldwork.

5.1 Flexibility

Challenges, needs, and skills vary by age of a child and severity of diagnosis, and each case of autism is unique. Goals may be set for each child individually, and they change at varying and flexible intervals, depending on individual progress. Therefore, visual supports must be flexible enough to be personalized for each child and to offer the ability to change and adapt over time.

5.1.1 Customizing for each child

Teachers in autism-focused classrooms typically create custom tools for each child based on individual skill level, goals, and physical capabilities. These low-tech tools afford a level of customization teachers need to support each individual child in their classrooms. However, the majority of high-tech assistive technologies [e.g., 15, 20] have extremely limited flexibility, restricting their use to only specific purposes. Thus, a primary goal in this work was to merge the advanced computational functionality inherent to assistive technologies (e.g., playing audio recordings) and the radical customization available through analog tools.

A challenge with individual communication support is finding the appropriate image to adequately express a concept. Teachers and parents often maintain large binders of small cards with images. Having this collection of images on hand allows them to offer children choices on activities, food, and other frequent decisions. The size and weight of these binders typically render them too unwieldy to be mobile, and therefore multiple copies must be maintained at school, home, and other typical locations. Furthermore, with the introduction of new concepts, new cards must be created and added to the collection. By contrast, Mocotos enables real-time customization through the creation of new picture cards with the camera and voice recorder. The use of search functionality through metadata labels as well as browsing through categories supports rapid retrieval of images.
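As a rough illustration of label-based search and category browsing over a picture card library, consider the sketch below. It assumes a simple in-memory collection; the `PictureCard` and `CardLibrary` names, their fields, and the file paths are hypothetical and do not describe the Mocotos data model.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class PictureCard:
    """Hypothetical picture card with optional recorded audio."""
    image_path: str
    category: str
    labels: Set[str] = field(default_factory=set)
    audio_path: Optional[str] = None


class CardLibrary:
    """In-memory card collection supporting search and browsing."""

    def __init__(self) -> None:
        self._cards: List[PictureCard] = []

    def add(self, card: PictureCard) -> None:
        # New cards (for example, from the camera) are available at once.
        self._cards.append(card)

    def search(self, term: str) -> List[PictureCard]:
        # Case-insensitive exact match against each card's metadata labels.
        wanted = term.lower()
        return [c for c in self._cards
                if wanted in {label.lower() for label in c.labels}]

    def browse(self, category: str) -> List[PictureCard]:
        # Return every card filed under the given category.
        return [c for c in self._cards if c.category == category]
```

A caregiver-facing interface could call `add` immediately after taking a photograph, so that a new concept becomes searchable without printing or laminating a card.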

Similarly, vSked enables addition of new content captured through digital cameras or downloaded from online resources. Activity customization is essential to visual scheduling so that students are able to recognize the actual activities and items in their classroom that are being represented in the system. Furthermore, personalization in a classroom system can help a student learn how the personalized content prepared for him or her relates to a larger whole. For example, vSked uses avatars, colors, themes, and other elements to represent each student individually by drawing on their own personal interests and motivators. These visual elements are mirrored on the classroom display, enabling each child to associate him or herself within the larger classroom activities.

Likewise, a shared activity conducted simultaneously by all students in a class may be presented differently to each individual based on abilities. For instance, in the traditional model, children with limited verbal communication skills may be asked a question (e.g., “What is today’s weather?”). If the students in the classroom have varying capabilities for responding, classroom staff must ensure that the appropriate paper-based choices are distributed to each child for an interaction. vSked supports this kind of customized learning plan through simple rules (e.g., always deliver two choices to David and four choices to Michael).
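A rule of this kind can be expressed very compactly. The sketch below assumes a per-student table of choice counts and builds a choice board that always contains the correct answer; the function name, its parameters, and the weather options are illustrative assumptions rather than the vSked rule format.

```python
import random
from typing import Dict, List, Optional, Sequence


def build_choice_board(
    student: str,
    correct: str,
    distractors: Sequence[str],
    choices_per_student: Dict[str, int],
    default_choices: int = 2,
    rng: Optional[random.Random] = None,
) -> List[str]:
    """Build one student's choice board for a shared question.

    The board holds the correct answer plus enough distractors to reach
    the number of choices configured for that student. If fewer
    distractors are supplied than needed, the board is simply shorter.
    """
    count = choices_per_student.get(student, default_choices)
    if count < 1:
        raise ValueError("a choice board needs at least one choice")
    board = [correct] + list(distractors)[: count - 1]
    if rng is not None:
        # Shuffle so the correct answer is not always shown first.
        rng.shuffle(board)
    return board
```

With the rule table `{"David": 2, "Michael": 4}`, the same classroom question yields a two-item board for David and a four-item board for Michael.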

Currently, classroom staff must observe students closely as they answer questions so as to provide reinforcement for correct answers or prompting for incorrect ones. Remembering the appropriate escalation of prompting for a particular student who might be struggling, or the best reinforcement for a student who has achieved the correct answer, can be extremely taxing. Again, through the application of simple logic and the storage of individual preferences in the system, vSked enables customized prompting and reinforcement.
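The "simple logic" involved might look something like the following sketch, which stores a reward and an ordered list of prompts for each student and escalates one step per missed attempt. The profile fields, the prompt descriptions, and the fallback of notifying the teacher are assumptions for illustration and are not taken from the vSked implementation.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class StudentProfile:
    """Hypothetical stored preferences for one student."""
    name: str
    reward: str                       # e.g., a favorite animation
    prompt_ladder: List[str] = field(default_factory=list)  # mildest first


def feedback(profile: StudentProfile, correct: bool,
             failed_attempts: int) -> Tuple[str, str]:
    """Choose the feedback to show after a response (or a non-response).

    `failed_attempts` counts earlier misses on this task, so the first
    miss receives the mildest prompt and later misses escalate until the
    strongest prompt on the student's ladder is reached.
    """
    if correct:
        return ("reward", profile.reward)
    if not profile.prompt_ladder:
        # No stored prompts: fall back to alerting classroom staff.
        return ("notify_teacher", profile.name)
    level = min(failed_attempts, len(profile.prompt_ladder) - 1)
    return ("prompt", profile.prompt_ladder[level])
```

Because the ladder and reward are stored per student, staff would not need to remember them during the activity.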

Finally, SenseCam is an interesting case in that the capture technology itself is not easily customized, but the access and use of images can be. Teachers and parents described not only liking the idea of our social-story and communication-centric intervention but also the desire to create new and different interventions using the device. They even described wanting to use images recorded through SenseCam in an integrated way with Mocotos and vSked to get student-generated media into the communication interventions supported by those tools.

5.1.2 Enabling growth and change over time

In addition to the flexibility required in tools designed to support a variety of children with different needs and capabilities, another important element of flexibility emerged in our work: adaptability over time. As children grow into adolescence and adulthood, they may outgrow their visual supports. At times, this growth is quite literal in terms of physical progression that changes, for example, the size of keyboard that is appropriate. At other times, the development may be cognitive or emotional. For example, a child who has learned to use PECS for image-based communication and eventually learns to recognize and produce text instead is unlikely to continue use of a device that only supports images. Finally, the obvious appearance of these devices that denotes them as assistive technology may create challenges as students make the transition into mainstream environments.

To provide this adaptability over time, augmentative communication systems should no longer be constrained to dedicated devices. Rather, they should utilize commodity devices like the iPhone or Nokia Tablets, as in this work, which are less expensive, more adaptable, and simply “cooler” looking. Instead of being a prominent indicator of a disability, these devices may actually indicate a kind of worldliness and technical savvy often off limits for individuals with autism. Similarly, by using tablets and digital systems for visual scheduling in classrooms, teachers can apply (and remove) scaffolding of particular activities as student abilities change [33].

In response to our particular interventions and tools, the focus group participants commented that Mocotos and vSked embodied the attractive qualities of these high-tech commodity devices. SenseCam, however, was not considered to be as stylish or attractive, and participants were concerned that the children might not be motivated to wear it. In response, they suggested being able to modify the external appearance of the device by adding child-friendly stickers and coloring or by embedding SenseCam inside a small stuffed animal or other casing.

All three technological tools, however, were perceived by focus group participants as providing the ability to adapt and change over time, supporting growing and maturing users. For example, the soft keyboard option available on Mocotos was seen as enabling individuals with autism who can type but cannot speak to move from familiarity with picture-based communication to text-based and eventually generated speech. Similarly, teachers responded that even the name vSked (for visual schedules) might be “too limiting” and that “an entire curriculum could be built around [vSked]” for a variety of ages—much more than just visual scheduling. Finally, throughout our various design and evaluation discussions, SenseCam was repeatedly noted to be particularly flexible in that it simply collects and generates visual records; what is to be done with those images is entirely up to the caregiver and the individual with autism. Thus, parents and teachers alike began to consider ways in which SenseCam could be used to teach about and monitor the learning of wayfinding, job skills, and more—all skills necessary for independent adult living.

5.2 Computer-supported cooperative visual support

Communication is integral to the ways in which we teach and learn. A student who cannot easily communicate simply cannot learn [43, 44]. Thus, it is no surprise that helping children with autism learn to communicate is central to many special education curricula. Furthermore, communication among the myriad of different caregivers can be essential to ensuring proper care and monitoring of interventions over time [18]. In this section, we describe how ubicomp technologies can be used to enhance both a child’s ability to communicate and caregiver collaboration.

5.2.1 Child–caregiver communication

Augmentative communication technologies can facilitate communication directly in a variety of ways. Caregivers may prompt a child to make choices through visual supports or reinforce behaviors by allowing the playing of sounds or the viewing of motivating images through these tools. Access to these tools may also enable student-led independent speech acts. Finally, speech and language pathologists often utilize assistive technologies in their formal speech training.

The three technologies queried in this work all support the traditional goal of enabling communication between children and adult caregivers. Mocotos is explicitly designed to support the kind of visual communication common to current augmentative communication interventions. vSked enables traditional “call and response” teaching for children who cannot verbally respond by delivering visual prompts to personal devices, synchronized with the lessons being presented in the classroom. Finally, SenseCam, by capturing images from a child’s point of view, can give that child a voice to describe to a parent what happened at school or to a teacher what happened at home.

5.2.2 Child–child communication

Equally important, and much more difficult to support, however, is the notion of child-to-child communication. Repeatedly, in our interviews and fieldwork, caregivers would describe the challenges of teaching and supporting peer group social interactions for children with ASD.

In classrooms, teachers described using interactive and collaborative games to teach children to take turns, interact with one another, and communicate socially. Again, the current tools available to them had limitations, including the lack of an ability to enforce turn taking—such as explored by Piper et al. [37]—minimal personalization for a particular set of game players, and the lack of capabilities for capture and playback of activities for further lessons. When commenting on vSked, however, teachers began to imagine how this flexible multi-display system could be used for these types of games even more so than the simple communication enabled by Mocotos. For example, children could assemble a story by inputting their own suggestions on their personal devices and having those merge on the large display. These kinds of activities are common in regular education classrooms in which a student might wait his or her turn to speak a portion of a collaboratively developing story. For children with ASD, however, this kind of rapid prompted articulation coupled with waiting for an individual turn to participate may be infeasible. Assistive technologies can provide the visual support needed to recognize and understand turn-taking and other social rules as well as to communicate.

Likewise, many private therapists employ intensive social skills training for children poised to transition into a new environment. For example, one therapist who focuses on high-functioning adolescents with autism described her development and use of a video-based intervention to support peer communication. The youths in a support group were each video-recorded for a few minutes answering specific questions about themselves (e.g., their favorite foods or favorite colors). They then watched these videos in a group to learn to focus on another person, attend to what they are saying, and remember details from the “conversation.” Although this intervention is helpful, the therapist expressed frustration at the amount of overhead required to develop this type of visual support for learning social skills. Furthermore, she described being limited in the types of discussions the group could have and wished they could talk more about what the members do outside of their group sessions. Technologies like SenseCam, which are able to record images automatically and potentially from the perspective of the individual with autism, may support these rich visual interventions for developing social skills using situated, individualized content.

5.2.3 Caregiver–caregiver communication

Caregiver collaboration has been an important theme in other work around technologies for autism [e.g., 18, 29]. In our work, this theme also arose repeatedly as parents and teachers both described the need to increase awareness across an entire team of caregivers, including private therapists, family members, school staff, and more. This need arises from the inherently cooperative approach that special education requires with parents, teachers, and specialists working together to choose goals and implement interventions for each unique child.

In our discussions with caregivers, SenseCam—initially envisioned as a device to help a child find his or her own voice by documenting daily life—emerged from the caregiver’s perspective as a communications device. For example, a divorced mother described wanting to use it in cooperation with her ex-husband as a means for them to view what has happened while their daughter was in the other’s care without having to send extensive notes or engage in a lengthy conversation. In this model, the child is no longer an active participant in the viewing of images, but rather simply the deliverer of the media between interested parties.

Similarly, part of the appeal of switching from traditional assistive technologies to systems like Mocotos and vSked is their automated capture and access functionality. These systems log data about activities—including duration and performance data—as well as data focused on communication initiation and reception. Using these data, caregivers can generate and distribute summarized reports of progress (see Fig. 6). These records can be effective not only for augmenting communication between caregivers, but also for easing the burden on teachers of completing extensive required documentation, as described in the next section.

5.3 Supporting caregivers

The push for assistive technologies, such as visual supports, is often thought of primarily from the perspective of the individual with a disability, a position that tends to overlook the needs of the caregiver. Thus, in service of a better communication experience for the child, a teacher or parent may have to engage in substantial end-user programming activities to make a device work. Likewise, these devices are often created with only a particular type of intervention in mind. Thus, caregivers must often be trained to use a particular visual support within the protocol of the intervention it was designed to support. Taking not only a child-centric but also a caregiver-centric approach to visual supports, we reveal how current methods and systems sometimes fail and how the application of novel ubicomp technologies can overcome these challenges.

5.3.1 Setting goals and monitoring progress

A fundamental concern for teachers of children with ASD is making progress on their Individual Education Plans (IEP). These documents are legally binding agreements between the school and the parents about what a child’s goals are for any given year and how these goals will be measured. Much like any other curriculum measure since the implementation of No Child Left Behind in the United States, teachers and schools know that their performance will be judged based on their ability to make and document progress. Classroom activities, including those that make use of assistive technologies, must often adhere to specific curricular goals. Thus, teachers described wanting to integrate Mocotos into specific IEP goals surrounding communication. Likewise, they described the need to integrate curriculum libraries into vSked and to create online communities in which they could exchange ideas and activities for use with the system. These concepts echo the current practices of teachers in creating, sharing, and using other educational materials in online environments but with the interesting twist of being able to download and configure these activities directly on the system rather than having to produce them in analog form through substantial effort (printing, coloring, laminating, etc.).

Often, learning to use a new visual support is a significant IEP goal for individual children. In some cases, teachers even described being conflicted between the desire to help children in their classes better communicate and the inherent documentation burden that obtaining a device incurs. Thus, teachers, parents, and autism specialists alike also appreciated that use of digital tools for visual supports simplifies the tracking of their use. With paper-based tools, caregivers must manually document instances in which a child uses a device, whether the usage was prompted by a caregiver or undertaken independently, any struggles, etc. With tools like Mocotos and vSked, however, this kind of documentation occurs automatically through the inherent capture and access capabilities of the systems. Not only do these activities get logged automatically, but also simple visualizations of their results are available for export into IEP reports, greatly reducing the burden that using these devices places on caregivers.

Another significant challenge to documentation of progress can be seen when considering IEP goals focused on physical or behavioral goals for which the metrics can be difficult to quantify and track. For example, one teacher described teaching a child to brush his teeth. This was an important goal set by the parents, and the teachers and staff at this school struggled both with how to teach him (by breaking down the task into very small subtasks) and with how to monitor progress. Over the course of 18 months, they were able to teach him this skill by focusing first on just holding the toothbrush, progressing to holding it in his mouth and tolerating the noise of the electric motors, and eventually to using it properly. This kind of progress is both slow and difficult to demonstrate to others. In this case, the lead teacher recorded a short video of him with a handheld camera completing this task one day each month and prepared a presentation of the clips, a task she estimated took her several hours over that time as well as the additional organizational overhead to remember to record at regular intervals. In less than 15 min, the parents and school staff were able to observe the incredible progress this child had made over more than a year. Although she reported the effort was worth the burden in this case, the teacher noted that she could not come close to doing this level of monitoring of every goal for every child. This example demonstrates the power of the visual image as well as the struggles teachers have with gathering these kinds of data.

Technologies like SenseCam, thus, were seen not only as working in an assistive capacity for children directly but also in a documentary capacity, as with the manual camera use described previously. This documentary functionality enables the generation of progress reports with powerful visual images. In particular, these kinds of automated capture and access technologies can assist in the documentation of behaviors not easily observed by any individual caregiver. For example, an autism specialist described wanting to use SenseCam to gather data on how much eye contact a child displays within a particular time span. Teachers also described wanting to use SenseCam to record images of a child performing a learned task out of the sight of the teacher—either under the care of another staff member or at home with family. In this way, teachers could document even those activities they were unable to observe directly. This result mirrors findings that indicate that while teachers may not trust another individual to tell them about a child’s behavior, they will make use of images selected or recorded by another caregiver [19] or by the child him or herself. As noted previously, we had explicitly wanted to engage the idea of media capture from the child’s perspective and thus presented only an intervention involving the child wearing the device to the experts involved in the focus group discussions. These individuals supported this need and echoed our interest in the child’s perspective. They were also interested in rapid, automated documentation of the child’s activities themselves as described here. Thus, the solution they most often suggested was one that would involve both a child and a caregiver wearing SenseCam and being able to view synchronized images from these two separate feeds—a technological challenge we have not yet addressed but find compelling for future inquiry.

5.3.2 Diagnosing and understanding behaviors

In addition to monitoring behaviors and skills in terms of goals, caregivers and behavioral experts also described using these technologies for evaluative activities. Recorded images can provide additional information to allow careful scrutiny of a child’s behavior patterns and developmental needs. For example, SenseCam could be used to discover triggers for severe problem behavior, such as in one case described in our interviews in which it took years for caregivers to recognize a pattern in the behavior of one child. In that case, the child would physically assault any female of a certain height with brown hair. Similar situations have been reported previously as reasons for using recording technologies to monitor the behavior of children with ASD [19]. Likewise, the ability to provide rewards for appropriate behavior (and to remove them for inappropriate behavior) directly through vSked supports hypothesis testing about which activities are enjoyable or frustrating to a student. Finally, by examining automatically collected records of communication from Mocotos, caregivers may be able to ascertain patterns in when a child needs help, becomes aggravated, etc.

6 Discussion

Development of language is often a fundamental step in learning. In particular, language allows an individual from within a particular cultural group to identify and internalize their cultural beliefs, values, and knowledge, that is, to learn [44]. For children with ASD, however, many of whom are unable to communicate via traditional verbal language, visual supports offer them a way to become a part of their own culture and to learn. To use Vygotsky’s notions of cultural tools, visual supports are symbolic and technological tools that aid in communication [43]. Through visual supports, children with ASD may begin to be able to communicate directly with their teachers, parents, and friends. Visual supports can support educational activities by enabling communication directly or by providing scaffolding by which a student may learn other more advanced means of communicating. Scaffolding provides hints or clues to help a student better approach communication acts or educational challenges in the future. Scaffolding can also provide physical or intellectual aids to solve problems that a child is simply not yet developmentally advanced enough to handle.

Like its construction namesake, the traditional view of scaffolding in education involves the removal of these tools at a later point when the student is ready for a more advanced challenge. Until recently, analog visual supports were typically made of paper and required somewhat regular replacement. Their inherently fragile and therefore transient nature encouraged teachers and parents to update these tools frequently, and often to make them more challenging. The advent of new technologies (first lamination and then assistive technologies) changed this dynamic. Laminated cards are difficult to destroy, and thus, teachers and other caregivers are not forced to create new cards to replace those that were destroyed, thereby reducing how frequently new cards are created. The additional effort required to make a laminated image even further reduces the likelihood of their update. Thus, this somewhat new technology, which does in many ways reduce effort and overhead for teaching staff, can at times limit the ability of students to stretch and to grow in their communication. It was not until after the caregivers we interviewed had been using laminated cards for many years that some of them began to see the benefits of the rapidly decaying, non-laminated tools in terms of forcing regular changes and updates to out-of-date materials; in other words, of acting as scaffolding that by itself naturally decays and leaves only the building (independent communication) behind. Commercially produced augmentative communication devices are even more robust, expensive, challenging to customize (program), and difficult to obtain. Thus, the tide has shifted, and these tools are often no longer seen as temporary scaffolding. They instead are often treated as semipermanent supports.
For many, goals have shifted from teaching a child to communicate independently by using a series of progressive tools to teaching a child to communicate through tools: advanced, expensive, and often-customized devices. There are certainly benefits to permanent communication supports, in particular for students who may never have the ability to communicate independently. However, most experts in both disability studies and special education argue that independent communication is the ultimate goal and that such a goal may only be reached by “fading” or removing prompts and other communication supports over time.

During this same time period, visual supports have experienced a similar shift in the tangibility of their elements. Initially, caregivers often had to make use of tangible objects as visual supports. Over time, the ability to take digital photographs and to download images from the Internet has meant that teachers and parents can now find or create a two-dimensional laminated card for nearly anything. Use of technologies like SenseCam for capturing media and vSked and Mocotos for using it would likely only increase this trend. Of course, with these new tools, a wider variety of images become available for use. At the same time, these image-focused supports may lose something the tangible artifacts can deliver. We leave open then for future work the design and development of tangible visual supports enabled with ubicomp sensing and capture technologies to integrate them into systems like ours.

Development of novel ubicomp technologies to serve as visual supports provides the opportunity to develop cultural tools that serve supporting and scaffolding roles simultaneously. Furthermore, animation, multi-modal and tangible interaction, and personalization available through small mobile devices and large displays can enable more engaging interventions. The rapid adaptation enabled by access to flexible software on an inexpensive mobile platform means that teachers, parents, and individuals with autism alike can radically remix their experiences within a safe, predictable framework. This flexibility must be engaged not only in terms of the pedagogical goals of enabling and developing communication, but also in the types of interactions (static, dynamic, and even tangible) as well as by whom and how those interactions are initiated and completed.

Two tensions inherent to any ubicomp system are even more profound when considering children with developmental disabilities and their caregivers. First, these systems must create a rich experience without overwhelming the users. Second, they must ensure enough user autonomy and control to be customizable for the unique needs of each individual without creating too much burden for caregivers doing the configuration work. Furthermore, an additional educational tension arises in these particular applications of ubicomp: helping a child to communicate in the easiest way possible versus helping a child to push the boundaries of communication skills in the hope of needing fewer and fewer supports in the future. However, as we saw in the design and evaluation of our prototype interventions, ubicomp applications can enable new forms of interaction for children with ASD and their caregivers. Caregivers and technology designers then must actively engage these tensions in the creation and use of applications that enable the production and use of rich media for communication and learning, the visualization of activities and progress, and the automated recording of diagnostic and evaluative measures.

7 Conclusions and future work

Visual supports can enable children with ASD to communicate and to learn more easily. Traditional tools, however, are challenging to create, use, and maintain. Furthermore, they provide little or no ability to document and monitor use and progress over time. Our goal in this work was to understand the design space surrounding visual interventions for children with autism so as to develop new tools that combine the strengths of the analog tools with the potential for new ubicomp solutions.

Through fieldwork, design activities, and focus group discussions surrounding these interventions, we have uncovered the ways in which advanced interactive visual supports can engage students and support caregivers simultaneously. This focus brought to the forefront specific design requirements for new assistive technologies in this space: flexibility, communication and collaboration capabilities for both children and caregivers, and caregiver support for programming and documentation of use. In an iterative process, we developed three prototype visual interventions that support these goals. Through focus group discussions with autism experts and educators, we then evaluated the prototypes and redesigned them based on this feedback.

There are still a multitude of technical challenges to be considered in this work. A substantial theme during the focus group discussions centered on the need for an end-user programming environment—though the educators and autism experts did not use that particular phrasing—for caregivers to create and to share materials with one another. As these materials are developed either collectively or within individual schools and greater and greater numbers of images and lesson plans are included in the systems, another substantial challenge arises: how to catalog, search, and browse large quantities of media. We leave these challenges open and hope that in the future these tools can incorporate the best practices and algorithms from the search and collective intelligence research communities.

Next steps for this work, of course, include end-user evaluations with more robust systems. Specifically, these systems must be deployed in real-world situations. Asking children with autism to respond in controlled environments is incredibly difficult and often does not yield a realistic approximation of actual behavior in everyday life [19]. Preliminary pilot testing with vSked in summer 2009 indicated the technology can be successfully adopted by teachers, students, and other classroom staff [21]. This study involved a single autism-specific classroom in a public school that made use of vSked over 3 weeks during summer school. Regular observations, interviews, and surveys were used to assess the adoption, usability, and feasibility of vSked use. We are currently designing a larger study of vSked’s use in three classrooms for an entire year.

Similarly, three families used SenseCam for children with autism for 3–5 weeks each in a pilot evaluation. Our preliminary results indicate that families find SenseCam easy to use and are able to creatively devise a wide variety of uses for it on a daily basis. We are currently exploring options for a larger study that might include children with other developmental disabilities or communication difficulties.

Our results also offer the potential for other future work, however. In particular, these results indicate the potential for future examinations focused on how assistive technologies can support and challenge students simultaneously. They also indicate opportunities for the design of new applications that use ubicomp technologies like large displays, mobile devices, and tangible interfaces to merge some of the highly engaging features of physical interventions with the simplified configuration and record-keeping inherent to technological tools.

Finally, although we have in this work explicitly engaged the design space of visual supports for children with autism, one trip to a classroom will easily reveal to any interested reader the quantity of visual supports in use for neurotypical children as well. It is in fact often the case that tools developed for special education can be of use in regular educational settings and traditional classrooms. Thus, although the population in focus here includes children with extremely limited verbal communication, these results may be of use and interest to individuals designing for children and classrooms more generally.

Notes

http://www.autism-society.org/site/PageServer?pagename=about_home, retrieved March 2010.

http://www.dynavoxtech.com/Products/default.aspx, accessed June 2009.

References

Abowd GD, Mynatt ED (2000) Charting past, present and future research in ubiquitous computing. ACM Trans Comput Hum Int 7(1):29–58

American Psychiatric Association (APA) (1980) Diagnostic and statistical manual of mental disorders, 3rd edn. APA, Washington (DC)

American Psychiatric Association (APA) (2000) Diagnostic and statistical manual of mental disorders, 4th edn, text revision. APA, Washington (DC)

Bondy A, Frost L (2001) A picture’s worth: PECS and other visual communication strategies in autism. Woodbine House, Bethesda

Centers for Disease Control and Prevention (CDC) (2007) Prevalence of autism spectrum disorders—Autism and Developmental Disabilities Monitoring Network, Six Sites, United States, 2000. MMWR Surveill Summ 2007, 56(No.SS-1)

Centers for Disease Control and Prevention (CDC) (2007) Prevalence of autism spectrum disorders—Autism and Developmental Disabilities Monitoring Network, 14 Sites, United States, 2002. MMWR Surveill Summ 2007, 56(No.SS-1)

Centers for Disease Control and Prevention (CDC) (2009) Prevalence of autism spectrum disorders―Autism and Developmental Disabilities Monitoring Network, United States, 2006. MMWR Surveill Summ 2009, 58(SS-10)

Charlop-Christy MH, Carpenter M, Le L, LeBlanc LA, Kellet K (2002) Using the picture exchange communication system (PECS) with children with autism: assessment of PECS acquisition, speech, social-communicative behavior, and problem behavior. J Appl Behav Anal 35(3):213–231

Cohen MJ, Sloan DL (2007) Visual supports for people with autism: a guide for parents and professionals. Woodbine House, USA

Dawe M (2006) Desperately seeking simplicity: how young adults with cognitive disabilities and their families adopt assistive technologies. In: Proceedings of the CHI 2006, pp 1143–1152