Abstract

The somatic cell count (SCC) of milk is one of the main indicators of the udder health status of lactating mammals and is a hygiene criterion of raw milk used to manufacture dairy products. An increase in SCC is regarded as one of the primary indicators of inflammation of the mammary gland. Therefore, SCC is relevant in food legislation as well as in the payment of ex-farm raw milk and it has a major impact on farm management and breeding programs. Its determination is one of the most frequently performed analytical tests worldwide. Routine measurements of SCC are almost exclusively done using automated fluoro-opto-electronic counting. However, certified reference materials for SCC are lacking, and the microscopic reference method is not reliable because of serious inherent weaknesses. A reference system approach may help to largely overcome these deficiencies and help to assure equivalence in SCC worldwide. The approach is characterised as a positioning system fed by different types of information from various sources. A statistical approach for comparing proficiency tests (PTs) by assessing them using a quality index P Q and assessing participating laboratories using a quality index P L, both deriving from probabilities, is proposed. The basic assumption is that PT schemes are conducted according to recognised guidelines in order to compute performance characteristics, such as z-scores, repeatability and reproducibility standard deviations. Standard deviations are compared with the method validation data from the ISO method. Input quantities close to or smaller than the reference data of the method validation or the assigned value of the PT result in values for P Q and P L close to the maximum value. Evaluation examples of well-known PTs show the practicability of the proposed approach.

Introduction

The somatic cell count (SCC) of milk is one of the main indicators of the udder health status of lactating mammals and one of the hygiene criteria of raw milk used to manufacture dairy products. Somatic cells excreted through milk include various types of white blood cells and some epithelial cells. Their composition and concentration change dramatically during periods of inflammation. An increase in SCC is therefore regarded as one of the primary indicators of inflammation of the mammary gland [1]. Accordingly, SCC is relevant in food legislation [2–4] and in the payment of ex-farm raw milk, where it serves as a price-setting quality parameter; when measured in individual animals, it also has a major impact on farm management and breeding programs. Consequently, somatic cell count determination is one of the most frequently performed analytical tests in dairy laboratories worldwide, with an estimated more than 500 000 000 tests per year [5].

SCC data for routine measurements are nowadays almost exclusively obtained through automated fluoro-opto-electronic counting. Guidance on this application is available through ISO 13366-2 | IDF 148-2 [6]. Part of this guidance focuses on calibration and calibration control; however, certified reference materials (CRM) for SCC are lacking. Laboratories therefore calibrate with ‘secondary’ reference materials, which are types of milk, more or less well defined in their properties, with assigned ‘reference values’ for counting. These reference values may derive from the application of the reference method, which is a direct microscopic SCC according to ISO 13366-1 | IDF 148-1 [7], often in combination with the results of automated counting. Routine testing laboratories usually rely on these secondary reference materials and their assigned values. Others base their calibration on their performance in proficiency tests (PTs), and some rely on the standard settings of the instrument manufacturer. The reasons for the lack of full reliance on the microscopic reference method are an insufficient definition of the measurand and poor precision [5]. To overcome the large uncertainty of the microscopic reference method, reference material providers can additionally rely on a set of routine measurement data, often coming from a selected group of laboratories. However, such reliance bears the risk of circular calibration [8, 9]. If at least some of the participating laboratories do not also rely on other PTs, they may start correcting their instruments to the assigned value, and an undefined drift within the large uncertainty of the reference method begins. The existing PTs therefore need to be interlinked based on a quantitative scale. At present, there is no ‘true’ value against which to assess the competence of a laboratory.

A reference system approach may help to largely overcome these deficiencies and help to assure equivalence in somatic cell counting worldwide. A reference system is characterised as a positioning system fed by different types of information from various sources—that is, from reference materials, reference method analysis, routine method results and PT results of laboratories operating in a laboratory network structure [10].

The purpose of this work is to propose a statistical approach for comparing PTs by assessing them using a quality index P Q and assessing participating laboratories using a quality index P L, both deriving from probabilities. The approach was developed in the framework of the SCC Reference System Working Group (International Dairy Federation [IDF] and the International Committee on Animal Recording [ICAR] [5, 10]) by the participating organisations. The basic assumption is that the PT schemes are conducted according to recognised guidelines such as the Harmonized Protocol [11] and ISO 13528 [12] or ISO 5725 [13] in order to compute performance characteristics such as z-scores, repeatability and reproducibility standard deviations. The existence of a CRM (as an estimate of a ‘true value’) is not required in the following considerations. The situation is comparable to the summarising assessment of medical and similar studies, where meta-analysis is a well-proven tool that uses variances and frequencies for weighting and as objective criteria. However, given that reliable estimates of the population variances are available (see below), we preferred to develop a probabilistic approach.

Method

Assessing PTs by a quality index P Q derived from probabilities

This approach makes use of the precision parameters repeatability standard deviation σ r and reproducibility standard deviation σ R of automated fluoro-opto-electronic SCC measurement as reported in the international standard ISO 13366-2 | IDF 148-2 [6].

Assume that in a given PT the estimates s r and s R (or the standard deviation between laboratories, s L) of the repeatability and reproducibility standard deviations, σ r and σ R, respectively, are computed (for one level) using the results from p laboratories. Each laboratory measures the test material n times. Then, a quality index P Q based on the probabilities derived from Chi-square distributions can be constructed.

From standard statistical results, the following equation relating the estimated and the population repeatability variances with the Chi-square distribution with ν degrees of freedom holds for normally distributed measurements (see also ISO 5725-4 [13]):

and similarly

which by \(s_{\text{L}}^{2} = s_{\text{R}}^{2} - s_{\text{r}}^{2}\) is the same as

Therefore, we can estimate the probabilities P (r) and P (L,r):

The known variances \(\sigma_{\text{r}}^{2}\) and \(\sigma_{\text{L}}^{2}\) are derived from the values of σ r and σ R, as published in standard ISO 13366-2 | IDF 148-2 [6].
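Written out under the usual normal-theory assumptions, the relations referred to above can be sketched as follows. This is a reconstruction from the surrounding definitions, not a quotation: it assumes ν = p(n − 1) degrees of freedom for the pooled repeatability variance and p − 1 for the between-laboratory term, and it assumes that the probabilities are upper-tail probabilities, so that small estimated variances give values close to 1.

\[
\hat{\chi}_{(\text{r})}^{2} = \frac{p(n-1)\,s_{\text{r}}^{2}}{\sigma_{\text{r}}^{2}} \sim \chi_{p(n-1)}^{2},
\qquad
\hat{\chi}_{(\text{L,r})}^{2} = \frac{(p-1)\left(n s_{\text{L}}^{2} + s_{\text{r}}^{2}\right)}{n\sigma_{\text{L}}^{2} + \sigma_{\text{r}}^{2}}
= \frac{(p-1)\left[n s_{\text{R}}^{2} - (n-1) s_{\text{r}}^{2}\right]}{n\sigma_{\text{R}}^{2} - (n-1)\sigma_{\text{r}}^{2}} \sim \chi_{p-1}^{2}
\]

\[
P_{(\text{r})} = P\left(\chi_{p(n-1)}^{2} > \hat{\chi}_{(\text{r})}^{2}\right),
\qquad
P_{(\text{L,r})} = P\left(\chi_{p-1}^{2} > \hat{\chi}_{(\text{L,r})}^{2}\right)
\]

The second form of \(\hat{\chi}_{(\text{L,r})}^{2}\) follows from the first by substituting \(s_{\text{L}}^{2} = s_{\text{R}}^{2} - s_{\text{r}}^{2}\) and \(\sigma_{\text{L}}^{2} = \sigma_{\text{R}}^{2} - \sigma_{\text{r}}^{2}\).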

P (r) and P (L,r) may then be combined to define the PT quality index P Q as the product of these probabilities:

P Q can be (approximately) interpreted as an estimate of the probability that the set of p laboratories within the PT can achieve a repeatability standard deviation as small as σ r and simultaneously a standard deviation between laboratories as small as σ L.
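The calculation of P Q from one PT level may be illustrated with a minimal Python sketch. It is not the authors' Excel® implementation. It assumes upper-tail Chi-square probabilities with p(n − 1) and p − 1 degrees of freedom; the function names `chi2_sf` and `pt_quality_index` and the values of s r, s R, p and n are hypothetical, while σ r and σ R are the interpolated values quoted later in the text for 162 000 SCC/mL.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability P(chi2_df > x) for integer df >= 1."""
    if x <= 0:
        return 1.0
    y = x / 2.0
    # Closed-form start values: Q(1, y) for even df, Q(1/2, y) for odd df.
    if df % 2 == 0:
        a, q = 1.0, math.exp(-y)
    else:
        a, q = 0.5, math.erfc(math.sqrt(y))
    # Recurrence Q(a + 1, y) = Q(a, y) + y**a * exp(-y) / Gamma(a + 1).
    for _ in range((df - 1) // 2):
        q += math.exp(a * math.log(y) - y - math.lgamma(a + 1.0))
        a += 1.0
    return min(q, 1.0)


def pt_quality_index(s_r, s_R, sigma_r, sigma_R, p, n):
    """Return (P_r, P_Lr, P_Q) for one PT level (assumed upper-tail form)."""
    chi2_r = p * (n - 1) * s_r**2 / sigma_r**2
    # Between-laboratory term, written with s_R and s_r; clamp at zero in
    # case the estimated between-laboratory variance is negative.
    numerator = max(n * s_R**2 - (n - 1) * s_r**2, 0.0)
    chi2_Lr = (p - 1) * numerator / (n * sigma_R**2 - (n - 1) * sigma_r**2)
    P_r = chi2_sf(chi2_r, p * (n - 1))
    P_Lr = chi2_sf(chi2_Lr, p - 1)
    return P_r, P_Lr, P_r * P_Lr


# Hypothetical PT level: 10 laboratories, duplicate measurements.
P_r, P_Lr, P_Q = pt_quality_index(
    s_r=9000.0, s_R=15000.0, sigma_r=9590.0, sigma_R=14450.0, p=10, n=2
)
print(f"P(r) = {P_r:.3f}, P(L,r) = {P_Lr:.3f}, P_Q = {P_Q:.3f}")
```

The Chi-square tail is computed with the standard library only, using the recurrence for the regularised incomplete gamma function, which is exact for integer degrees of freedom.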

If the reference value θ of the test material is known, or the assigned value θ is accepted as reliable, then the z-scores (based on an accepted standard deviation for proficiency assessment, σ p [11]) of the p laboratories can be combined. To reduce the influence of extreme z-score values, a robust mean estimator \(\bar{z}_{(\text{rob})}\) according to Huber is needed. It is known as A15 (without an iterative update of the robust estimate of the standard deviation) or, with an iterative update of that estimate, as H15 or ‘Huber proposal 2’ (Algorithm A, described in Annex C of ISO 13528 [12]; see also [14, 15]). The robust sum of z-scores is therefore

and a probability P(Z p ) for Z·\(\sqrt{p}\) larger than |Z p | may be derived on the basis of the realisation \(\hat{Z}\) of the standard normal random variable Z, i.e. \(\hat{Z} = Z_{p} / \sqrt{p} \sim N(0,1)\):

where P(·) stands for probability and Φ(·) indicates the distribution function of the standard normal distribution.
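The combination of z-scores described above can be sketched in Python as follows. The sketch assumes that the robust sum is Z p = p · \(\bar{z}_{(\text{rob})}\) and that P(Z p ) = 2[1 − Φ(|Z p |/√p)]; both forms are inferred from the surrounding text rather than quoted. The robust mean follows Algorithm A of ISO 13528 (constants 1.483, 1.5 and 1.134), with `update_scale=False` corresponding to the variant without an iterative update of the standard deviation. The function names and the z-scores are hypothetical.

```python
import math
import statistics


def huber_mean(values, update_scale=True, tol=1e-9, max_iter=200):
    """Robust mean by iterated winsorisation (ISO 13528, Algorithm A)."""
    x = statistics.median(values)
    s = 1.483 * statistics.median([abs(v - x) for v in values])
    for _ in range(max_iter):
        if s == 0.0:
            break  # no spread left to winsorise against
        lo, hi = x - 1.5 * s, x + 1.5 * s
        w = [min(max(v, lo), hi) for v in values]
        new_x = statistics.fmean(w)
        new_s = 1.134 * statistics.stdev(w) if update_scale else s
        done = abs(new_x - x) < tol and abs(new_s - s) < tol
        x, s = new_x, new_s
        if done:
            break
    return x


def z_sum_probability(z_scores):
    """Return (Z_p, P(Z_p)) under the assumptions stated in the lead-in."""
    p = len(z_scores)
    Z_p = p * huber_mean(z_scores)
    P_Z = 2.0 * (1.0 - statistics.NormalDist().cdf(abs(Z_p) / math.sqrt(p)))
    return Z_p, P_Z


# Hypothetical z-scores of six laboratories, one of them extreme.
z = [0.3, -0.2, 0.1, -0.4, 0.2, 6.0]
Z_p, P_Z = z_sum_probability(z)
print(f"robust mean = {huber_mean(z):.3f}, Z_p = {Z_p:.3f}, P(Z_p) = {P_Z:.3f}")
```

In this example the plain mean of the z-scores is 1.0, driven by the single extreme value, whereas the robust mean stays close to the bulk of the data.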

An alternative combination of z-scores is possible because the sum S p of the squared z-scores is Chi-square distributed with p degrees of freedom [11]: \(S_{p} = \sum_{i=1}^{p} z_{i}^{2} \sim \chi_{p}^{2}\).

The quality index P Q has three components in this case: two are related to precision measures and one is related to the trueness of the p mean values.

It is still possible to modify this quality measure by multiplication with a further expression (factor) q = f(q 1, q 2, q 3, …, q m ) made up of the PT-specific quality indices q 1, q 2, q 3, …, q m to obtain

The m quality indices q i1, q i2, q i3, …, q im may be used to model m criteria characterising PT i. The components of q i = f(q i1, q i2, q i3, …, q im ) could be defined in such a way that higher values of the resulting q i indicate higher quality.

To compare up to k PTs in such a way, it may be better to compute normalised values, especially if the P Q values were calculated according to Eq. (10):

Comparing PT schemes over time based on the quality index PQ or its elements

There are various possibilities to construct quality control charts for a given PT scheme.

The following quality or performance characteristics may be plotted versus the number of rounds, 1, 2, …, t:

- s r or \(s_{\text{r}}^{2}\) or \(\hat{\chi }_{{({\text{r}})}}^{2}\) or P (r)
- s L or \(s_{\text{L}}^{2}\) (or s R or \(s_{\text{R}}^{2}\)) or \(\hat{\chi }_{{({\text{L,r}})}}^{2}\) or P (L,r)
- Z p or P(Z p )
- P Q
- the fraction of ‘satisfactory’ z-scores, i.e. |z| ≤ 2, as proposed by Gaunt and Whetton [16].

The sums or cumulative averages of these characteristics over t rounds may be used as numerical indices to compare PT schemes quantitatively over time.
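A cumulative average over rounds can be computed as in the following minimal Python sketch. The function name `cumulative_averages` and the P Q values per round are hypothetical.

```python
from itertools import accumulate


def cumulative_averages(values):
    """Average of the first t values, for t = 1, 2, ..., len(values)."""
    return [total / t for t, total in enumerate(accumulate(values), start=1)]


# Hypothetical P_Q values of one PT scheme over three rounds.
p_q_by_round = [0.20, 0.40, 0.90]
print(cumulative_averages(p_q_by_round))
```

Plotting these values against the round number gives a smoothed control chart in which a single poor round has less weight than in a chart of the raw values.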

Assessing laboratories by a quality index P L derived from probabilities

Again, this approach makes use of the precision parameters repeatability standard deviation σ r and reproducibility standard deviation σ R of automated SCC measurements, as reported in the international standard ISO 13366-2 | IDF 148-2 [6].

Assume that the values of σ r and σ R, as published in standard ISO 13366-2 | IDF 148-2 [6], are known and that an accepted reference value θ has been established.

A single laboratory within a PT can be rated similar to the rating shown above if it provides a repeatability standard deviation s r and a mean value \(\bar{y}\) of n replicates at a given level (estimates of s r and \(\bar{y}\) for σ r and θ, respectively).

With

we can estimate the probability P (r)

The difference \(\bar{y} - \theta\), standardised by \(\left[ {\sigma_{\text{R}}^{2} - \left( {1 - \frac{1}{n}} \right)\sigma_{\text{r}}^{2} } \right]^{{\frac{1}{2}}}\), is a standard normal variate:

which is used to compute the probability

P (r) and \(P(\tilde{z}_{n} )\) may be combined to define the laboratory quality index P L as the product of these probabilities:

P L can be (approximately) interpreted as an estimate of the probability that a certain laboratory having participated in a PT can achieve a repeatability standard deviation as small as σ r and simultaneously a difference between the assigned value of the PT θ and its own mean value \(\bar{y}\) as small as the standard deviation between laboratories σ L.
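For a single laboratory, the calculation can be sketched in Python as follows. The sketch assumes n − 1 degrees of freedom for the laboratory's repeatability variance, an upper-tail Chi-square probability for P (r) and a two-sided normal probability \(P(\tilde{z}_{n}) = 2[1 - \Phi(|\tilde{z}_{n}|)]\); these forms are inferred from the text, not quoted. The function names and the laboratory's s r, \(\bar{y}\) and n are hypothetical, while θ, σ r and σ R are the values quoted later in the text for 162 000 SCC/mL.

```python
import math
from statistics import NormalDist


def chi2_sf(x, df):
    """Upper-tail probability P(chi2_df > x) for integer df >= 1."""
    if x <= 0:
        return 1.0
    y = x / 2.0
    if df % 2 == 0:
        a, q = 1.0, math.exp(-y)
    else:
        a, q = 0.5, math.erfc(math.sqrt(y))
    # Recurrence Q(a + 1, y) = Q(a, y) + y**a * exp(-y) / Gamma(a + 1).
    for _ in range((df - 1) // 2):
        q += math.exp(a * math.log(y) - y - math.lgamma(a + 1.0))
        a += 1.0
    return min(q, 1.0)


def lab_quality_index(s_r, y_bar, theta, sigma_r, sigma_R, n):
    """Return (P_r, P_z, P_L) for one laboratory with n >= 2 replicates."""
    chi2_r = (n - 1) * s_r**2 / sigma_r**2
    P_r = chi2_sf(chi2_r, n - 1)
    # Standard deviation of a laboratory mean of n replicates around theta.
    sd_mean = math.sqrt(sigma_R**2 - (1.0 - 1.0 / n) * sigma_r**2)
    z_n = (y_bar - theta) / sd_mean
    P_z = 2.0 * (1.0 - NormalDist().cdf(abs(z_n)))
    return P_r, P_z, P_r * P_z


# Hypothetical laboratory with three replicates at theta = 162 000 SCC/mL.
P_r, P_z, P_L = lab_quality_index(
    s_r=8000.0, y_bar=165000.0, theta=162000.0,
    sigma_r=9590.0, sigma_R=14450.0, n=3,
)
print(f"P(r) = {P_r:.3f}, P(z_n) = {P_z:.3f}, P_L = {P_L:.3f}")
```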

Again, it is possible to modify this quality measure by multiplication with a further expression (factor) q = f(q 1, q 2, q 3, …, q m ) made up of the laboratory-specific quality indices q 1, q 2, q 3, …, q m to obtain

The components q i1, q i2, q i3, …, q im of q i should be defined in such a way that higher values in the resulting q i indicate higher quality.

A normalised quality index \(\tilde{P}_{{{\text{L}},i}}\) may be preferred to compare a set of p laboratories, especially if the P Ls were calculated according to Eq. (17):

Comparing laboratories over time based on the quality index P L or its elements

There are various possibilities to construct quality control charts for a given laboratory (see also ISO 13528 [12]). The following quality or performance characteristics may be plotted versus the number of rounds, 1, 2, …, t:

- s r or \(s_{\text{r}}^{2}\) or \(\hat{\chi }_{{({\text{r}})}}^{2}\) or P (r)
- \(\tilde{z}_{n}\) or \(P(\tilde{z}_{n} )\) (or z-scores as reported by the PT provider)
- P L
- the fraction of ‘satisfactory’ z-scores, i.e. |z| ≤ 2, as proposed by Gaunt and Whetton [16].

The sums or cumulative averages of these characteristics over t rounds may be used as numerical indices to compare laboratories quantitatively.

Data

For the testing of the assessment schemes for PTs and laboratories using the probabilistic approach, the data from five national and international PTs were chosen (see Table 1). The PTs took place between September 2010 and October 2011. The data sets were well known, meaning that the evaluation had been finished and feedback had been received.

Each level of a PT was handled as an individual comparison. PTs and laboratories were anonymised, and, where known, the multiple participations of a certain laboratory were each handled as an individual participant.

An Excel® spreadsheet was used for the evaluation. Firstly, the data of the different PTs and levels were arranged according to the necessary information, which included laboratory labels/codes (and the instrument type, if known), number of replicates n, mean values \(\bar{y}\) as reported by the laboratories, repeatability and reproducibility standard deviations s r and s R of the laboratories and reference values (consensus or ‘true’ values) θ as well as the s r of the PT or PT level. Additionally, the robust sum of the z-scores was calculated according to Eq. (7).

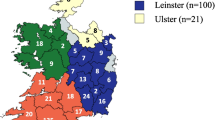

Secondly, the quality indices P Q (assessing PTs) were calculated by inserting the data into the specific Excel® spreadsheets. Additionally, the population repeatability standard deviations σ r and the population reproducibility standard deviations σ R from ISO 13366-2 | IDF 148-2:2006 [6] had to be implemented. As the reference values θ mostly lie between the levels published in the ISO | IDF standard, an interpolation table was used to calculate the relevant σ r and σ R. For example, ISO 13366-2 | IDF 148-2:2006 [6] gives, for the levels of 150 000 SCC/mL and 300 000 SCC/mL, repeatability values of 6 % and 5 % and reproducibility values of 9 % and 8 %, respectively. For a reference value of 162 000 SCC/mL, a σ r of 5.92 % or 9 590 SCC/mL and a σ R of 8.92 % or 14 450 SCC/mL were interpolated. Quality indices q 1 … q m, as proposed in Eq. (10), were not used because thus far the nature and values of these factors have not been considered. Therefore, the weight w for the difference 1 − q has no relevance here. The upper part of Fig. 1 shows a calculation example (with p being the number of laboratories participating in the PT).
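The interpolation example can be reproduced with a short Python sketch. It assumes simple linear interpolation between the two neighbouring levels of the standard, which matches the values quoted in the text; the function name `interpolate_percent` is hypothetical.

```python
def interpolate_percent(theta, level_lo, pct_lo, level_hi, pct_hi):
    """Linear interpolation of a relative standard deviation (in %)."""
    frac = (theta - level_lo) / (level_hi - level_lo)
    return pct_lo + frac * (pct_hi - pct_lo)


theta = 162_000  # reference value in SCC/mL

# Levels and percentages as quoted from ISO 13366-2 | IDF 148-2:2006.
sigma_r_pct = interpolate_percent(theta, 150_000, 6.0, 300_000, 5.0)
sigma_R_pct = interpolate_percent(theta, 150_000, 9.0, 300_000, 8.0)

sigma_r = sigma_r_pct / 100.0 * theta  # absolute value in SCC/mL
sigma_R = sigma_R_pct / 100.0 * theta

print(f"sigma_r: {sigma_r_pct:.2f} % = {sigma_r:.1f} SCC/mL")
print(f"sigma_R: {sigma_R_pct:.2f} % = {sigma_R:.1f} SCC/mL")
```

The results, 9 590.4 SCC/mL and 14 450.4 SCC/mL, agree with the rounded values of 9 590 and 14 450 SCC/mL given in the text.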

Thirdly, the quality indices P L (assessing the laboratories) were calculated by inserting the data into the specific Excel® spreadsheets. Additionally, the population repeatability standard deviations σ r and the population reproducibility standard deviations σ R from ISO 13366-2 | IDF 148-2:2006 [6] had to be implemented. As in the calculation of the quality indices P Q for the PTs, an interpolation table is needed to calculate the relevant σ r and σ R. Again, a weight of w ∈ [0,1] for the difference 1 − q could be chosen, but, as mentioned above, the nature and values of these factors have not yet been considered. Figures 2 and 3 show graphical evaluation and calculation examples.

Fig. 2 Graphical evaluation and calculation example of quality indices P L (assessing laboratories) and the parameters influencing them from PT 197 (Cornell, October 2011). Values for parameters s r, \(\bar{y}\), θ, σ r, σ R in somatic cells/µl. The mean of \(\tilde{z}_{n}\) was calculated using the robust estimator A15. If s r is larger than σ r, the probability related to the repeatability standard deviation P (r) and the probability related to the inter-laboratory standard deviation P (L,r) become small, as does the corresponding quality index P L (dashed circles, laboratory no. 1). In cases where s r is close to or smaller than σ r, the opposite is true, and the quality index P L becomes larger or even close to the maximum value of 1 (solid circles, laboratory no. 6). Calculation is accessible in the ESM

Fig. 3 Graphical evaluation and calculation example of quality indices P L (assessing laboratories) and the parameters influencing them from PT 113 (ICAR, September 2011). Values for parameters s r, \(\bar{y}\), θ, σ r, σ R in somatic cells/µl. The mean of \(\tilde{z}_{n}\) was calculated using the robust estimator A15. If \(\bar{y}\), the mean value of the laboratory, is larger or smaller than the reference value (consensus value, ‘true’ value) θ, then \(\left| {\tilde{z}_{n} } \right|\) becomes larger, and the related probability \(P\left( {\tilde{z}_{n} } \right)\) as well as the corresponding quality index P L becomes small (dashed circles, laboratory no. 3). In cases where the mean value \(\bar{y}\) is close or equal to the reference value θ, \(\left| {\tilde{z}_{n} } \right|\) becomes small, and the related probability \(P\left( {\tilde{z}_{n} } \right)\) as well as the corresponding quality index P L becomes large or close to the maximum value of 1 (solid circles, laboratory no. 7)

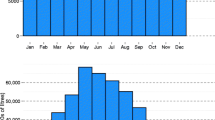

In addition to the evaluation of the participating laboratories in a specific PT by calculating the individual quality indices P L, it is also possible to calculate, for example, the median quality indices from different PTs in order to have an indicator regarding the comparability of a certain laboratory or instrument over time and in different PTs (see Fig. 4).
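Such a summary per laboratory can be sketched in Python as follows. The laboratory codes, PT level labels and P L values are hypothetical, and the function name `summarise_by_lab` is an assumption for illustration.

```python
from collections import defaultdict
from statistics import median, stdev


def summarise_by_lab(results):
    """Map each laboratory to (median P_L, standard deviation, count)."""
    by_lab = defaultdict(list)
    for lab, _pt_level, p_l in results:
        by_lab[lab].append(p_l)
    return {
        lab: (median(v), stdev(v) if len(v) > 1 else None, len(v))
        for lab, v in by_lab.items()
    }


# Hypothetical P_L values: (laboratory code, PT level, P_L).
results = [
    ("lab_A", "PT1-L1", 0.20),
    ("lab_A", "PT1-L2", 0.60),
    ("lab_A", "PT2-L1", 0.40),
    ("lab_B", "PT1-L1", 0.90),
]
for lab, (med, sd, count) in summarise_by_lab(results).items():
    print(lab, med, sd, count)
```

A laboratory with a single participation has no standard deviation, which is why the sketch returns `None` in that case.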

Discussion

P Q and P L are influenced by their input variables. The three variables and performance characteristics z-score, repeatability and reproducibility standard deviations are calculated according to recognised standards, and they are compared with the specific method validation data from the ISO standard. It follows that input quantities close to or smaller than the reference data of the method validation or the assigned value of the PT result in values for P Q and P L close to the maximum value of 1.

The outcome of a PT is influenced by the competence of the participating laboratories. If the laboratories perform well and the overall repeatability s r of p laboratories is close to or even smaller than σ r of the standard, then the probability P (r) and the quality index P Q of the concerned PT or PT level become larger or close to the maximum value of 1 (solid circle in Fig. 1, PT no. 6). Otherwise, if a larger part or most of the laboratories show a poor performance and s r therefore is larger than σ r, the probability P (r) and the index P Q become smaller (dashed circle, PT no. 16). The same is true for P Q and the probability related to the inter-laboratory standard deviation P (L,r), calculated from the PT’s reproducibility s R (solid and dashed circles in Fig. 1, PTs nos. 4 and 28). If the mean values of the laboratories in the PT are close to the assigned value, then the robust absolute sum of p z-scores |Z p | according to Eq. (7) becomes small, and the related probability \(P\left( {Z_{p} } \right)\) and the index P Q become large or close to the maximum value of 1 (solid circle, PT no. 26). For large values of |Z p |, the probability P(Z p ) and the index P Q become small (dashed circle, PT no. 1). The summarising quality index P Q is almost equally influenced by the probabilities P (r), P (L,r) and P(Z p ) and therefore allows no conclusion on the PT’s performance concerning the repeatability, inter-laboratory standard deviation and z-scores achieved by the participating laboratories.

Regarding the assessment of a laboratory, the influence of its repeatability s r and the mean value of a laboratory \(\bar{y}\) is shown in Figs. 2 and 3. If s r is larger than σ r, the probability related to the repeatability standard deviation P (r) becomes small as well as the corresponding quality index P L. In cases where s r is close to or smaller than σ r, the opposite is true, and the probability P (r) as well as the quality index P L become larger or close to the maximum value of 1. If the mean value \(\bar{y}\) is larger or smaller than the reference value (consensus value, ‘true’ value) θ, then the absolute z-score \(\left| {\tilde{z}_{n} } \right|\) becomes larger, and the related probability \(P\left( {\tilde{z}_{n} } \right)\) as well as the corresponding quality index P L become small. In cases where the mean value \(\bar{y}\) is close or equal to the reference value θ, the absolute z-score \(\left| {\tilde{z}_{n} } \right|\) becomes small, and the related probability \(P\left( {\tilde{z}_{n} } \right)\) as well as the corresponding quality index P L become large or close to the maximum value of 1. The summarising quality index P L is almost equally influenced by the probabilities P (r) and \(P\left( {\tilde{z}_{n} } \right)\) and therefore allows no conclusions on the laboratory’s performance concerning repeatability and comparability to the assigned value (this differentiation is provided by the results of the PTs reported to the participants).

Quality indices P L of laboratories or even of different instruments of a laboratory may be evaluated using, for example, control charts (value vs time) or statistical measures such as mean or median. Applied to the test data, the median values discriminate well between the laboratories (Fig. 4). The reasons for the discrimination may differ, but they include differences in analytical performance. Figure 5 shows the quality indices P L (median) and their corresponding standard deviations of the laboratories having participated two or more times in a PT or PT level. The data show that some laboratories performed consistently at the same level and that others had greatly varying quality indices. However, frequency of participation seems not to be a determining factor [17]. As stated above, the outcome of a PT is influenced by the competence of all of the participating laboratories. It follows that the outcome of each laboratory in a PT is also influenced by the others, and a situation is conceivable where only one laboratory measured the correct value while all others show a bias. In that case, the well-performing laboratory or instrument will show a mean value \(\bar{y}\) that differs from the ‘biased’ reference value θ, so the related probability \(P\left( {\tilde{z}_{n} } \right)\) and the corresponding quality index P L will become small and influence the laboratory’s median. Such influences are difficult to control. With some experience, a laboratory will participate preferably in well-known and broadly supported PTs. If the PT also has an acceptable quality index P Q, as proposed in this paper, this could be a driver for a laboratory to participate in such a PT. But, as mentioned above, the quality indices P Q and P L are influenced by different factors and therefore do not allow detailed conclusions on the performance details of PTs and laboratories.

The approach described in this paper allows an easy general and long-term comparison of PTs and laboratories participating in PTs. It is limited to this; for a detailed assessment of an individual PT, PT scheme or laboratory, further information will be necessary, for example the quantities used to calculate the indices described in this paper or an analysis of the individual results.

Graphical representation of the median quality indices P L of all participating laboratories and instruments in the test data sets (61 laboratories or instruments, 5 PTs and 28 PT levels, none of the laboratories participated in all PTs). Brackets mark groups of laboratories and instruments and their number of times of participation

In Eqs. (10) and (17), the possibility to modify the quality measure by multiplication with further expressions is mentioned. Such expressions (factors) q = f(q 1, q 2, q 3, …, q m ), made up of m PT-specific and laboratory-specific quality indices q i1, q i2, q i3, …, q im, may be used to model m criteria characterising PT i (e.g. frequency of the PT, number of participants, number of test levels, inter-linkage to other PTs, [summarised] competence index of participating laboratories and of the PT provider, frequency of laboratories’ PT participation, competence of the laboratory and laboratory bias [by considering the z-score, e.g. q i (z i ) = 2(1 − Φ(|z i |))]). Further criteria are mentioned by Golze [18]. The components of q i need to be defined in such a way that higher values of the resulting q i indicate higher quality. As yet, no experts in the field of automated somatic cell counting have established such indices, and experience in this regard is lacking. The need for such indices might appear as soon as a system like that described in this paper is set up and more data than are presented here are integrated. The brackets in the graphical evaluation of the median quality indices in Fig. 5 mark groups of laboratories and instruments and their numbers of times of participation. The median quality indices show a tendency to decline with higher numbers of times of participation. If such a tendency were to become obvious with more data sets, the use of specific quality indices might be necessary.

A model such as that described here can be used for all types of PTs where measurands are quantified. To set up a system as described here, a neutral and trustworthy body is needed to collect the sensitive data from PT organisers. Participating laboratories need to give authorisation for the evaluation of their data. Results must be anonymised, and it would be the responsibility of PT providers and laboratories to communicate their codes to their customers in order to demonstrate their competence.

References

[1] Pyörälä S (2003) Indicators of inflammation in the diagnosis of mastitis. Vet Res 34:565–578

[2] Regulation (EC) (2004) No 853/2004 of the European Parliament and of the Council of 29 April 2004 laying down specific hygiene rules for food of animal origin. Off J Eur Union. 139/55 Annex II, Section IX, Brussels

[3] Grade “A” (2009) Pasteurized Milk Ordinance, 2009 Revision. US Department of Health and Human Services, Public Health Service, Food and Drug Administration, Silver Spring

[4] Beal R, Eden M, Gunn I, Hook I, Lacy-Hulbert J, Morris G, Mylrea G, Woolford M (2001) Managing mastitis: a practical guide for New Zealand dairy farmers. Livestock Improvement, Hamilton

[5] Baumgartner C (2008) Architecture of reference systems, status quo of somatic cell counting and concept for the implementation of a reference system for somatic cell counting. Bull IDF 427

[6] ISO 13366-2 | IDF 148-2:2006. Milk – enumeration of somatic cells, Part 2 – guidance on the operation of fluoro-opto-electronic counters. International Organization for Standardization, Geneva and International Dairy Federation, Brussels

[7] ISO 13366-1 | IDF 148-1:2008. Milk – enumeration of somatic cells, Part 1 – microscope method (Reference method). International Organization for Standardization, Geneva and International Dairy Federation, Brussels

[8] Petley BW (1985) Fundamental physical constants and the frontier of measurement. Adam Hilger, Bristol

[9] Pendrill LR (2005) Meeting future needs for metrological traceability – a physicist’s view. Accred Qual Assur 10:133–139

[10] Orlandini S, van den Bijgaart H (2011) Reference system for somatic cell counting in milk. Accred Qual Assur 16:415–420

[11] Thompson M, Ellison SLR, Wood R (2006) The international harmonized protocol for the proficiency testing of analytical chemistry laboratories. Pure Appl Chem 78:145–196

[12] ISO 13528:2005. Statistical methods for use in proficiency testing by interlaboratory comparisons. International Organization for Standardization, Geneva

[13] ISO 5725:1994. Accuracy (trueness and precision) of measurement methods and results. Parts 2, 4, 6. International Organization for Standardization, Geneva

[14] Analytical Methods Committee of the Royal Society of Chemistry (1989) Robust statistics: How not to reject outliers. Part 1. Basic concepts. Analyst 114:1693–1697

[15] Analytical Methods Committee of the Royal Society of Chemistry (2001) MS EXCEL add-in for robust statistics. (http://www.rsc.org/Membership/Networking/InterestGroups/Analytical/AMC/Software/RobustStatistics.asp)

[16] Gaunt W, Whetton M (2009) Regular participation in proficiency testing provides long term improvements in laboratory performance: an assessment of data over time. Accred Qual Assur 14:449–454

[17] Thompson M, Lowthian PJ (1998) The frequency of rounds in a proficiency test: Does it affect the performance of participants? Analyst 123:2809–2812

[18] Golze M (2001) Information system and qualifying criteria for proficiency testing schemes. Accred Qual Assur 6:199–202

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Berger, T.F.H., Luginbühl, W. Probabilistic comparison and assessment of proficiency testing schemes and laboratories in the somatic cell count of raw milk. Accred Qual Assur 21, 175–183 (2016). https://doi.org/10.1007/s00769-016-1207-y
