Abstract

Building on the inequalities for homogeneous tetrahedral polynomials in independent Gaussian variables due to R. Latała, we provide a concentration inequality for not necessarily Lipschitz functions \(f:\mathbb {R}^n \rightarrow \mathbb {R}\) with bounded derivatives of higher orders, which holds when the underlying measure satisfies a family of Sobolev type inequalities \(\Vert g - \mathbb E g\Vert _p \le C(p)\Vert \nabla g\Vert _p\).

Such Sobolev type inequalities hold, e.g., if the underlying measure satisfies the log-Sobolev inequality (in which case \(C(p) \le C\sqrt{p}\)) or the Poincaré inequality (then \(C(p) \le Cp\)). Our concentration estimates are expressed in terms of tensor-product norms of the derivatives of \(f\). When the underlying measure is Gaussian and \(f\) is a polynomial (not necessarily tetrahedral or homogeneous), our estimates can be reversed (up to a constant depending only on the degree of the polynomial). We also show that for polynomial functions, analogous estimates hold for arbitrary random vectors with independent sub-Gaussian coordinates. We apply our inequalities to general additive functionals of random vectors (in particular linear eigenvalue statistics of random matrices) and the problem of counting cycles of fixed length in Erdős–Rényi random graphs, obtaining new estimates, optimal in a certain range of parameters.

1 Introduction

Concentration of measure inequalities are one of the basic tools in modern probability theory (see the monograph [46]). The prototypic result for all concentration theorems is arguably the Gaussian concentration inequality [14, 62], which asserts that if \(G\) is a standard Gaussian vector in \(\mathbb {R}^n\) and \(f:\mathbb {R}^n \rightarrow \mathbb {R}\) is a 1-Lipschitz function, then for all \(t > 0\),

\[\mathbb P\big (|f(G) - \mathbb Ef(G)| \ge t\big ) \le 2\exp (-t^2/2).\]

Over the years the above inequality has found numerous applications in the analysis of Gaussian processes, as well as in asymptotic geometric analysis (e.g. in modern proofs of Dvoretzky type theorems). Its applicability in geometric situations comes from the fact that it is dimension free and all norms in \(\mathbb {R}^n\) are Lipschitz with respect to one another. However, there are some probabilistic or combinatorial situations, when one is concerned with functions that are not Lipschitz. The most basic case is the probabilistic analysis of polynomials in independent random variables, which arise naturally, e.g., in the study of multiple stochastic integrals, in discrete harmonic analysis as elements of the Fourier expansions on the discrete cube or in numerous problems of random graph theory, to mention just the famous subgraph counting problem [22, 23, 26, 27, 35, 36, 49].

The concentration of measure or more generally integrability properties for polynomials have attracted a lot of attention in the last forty years. In particular Bonami [13] and Nelson [55] provided hypercontractive estimates (Khintchine type inequalities) for polynomials on the discrete cube and in the Gauss space, which have been later extended to other random variables by Kwapień and Szulga [41] (see also [42]). Khintchine type inequalities have been also obtained in the absence of independence for polynomials under log-concave measures by Bourgain [19], Bobkov [10], Nazarov-Sodin-Volberg [54] and Carbery-Wright [21].

Another line of research is to provide two-sided estimates for moments of polynomials in terms of deterministic functions of the coefficients. Borell [15] and Arcones-Giné [5] provided such two-sided bounds for homogeneous polynomials in Gaussian variables. They were expressed in terms of expectations of suprema of certain empirical processes. Talagrand [64] and Bousquet-Boucheron-Lugosi-Massart [17, 18] obtained counterparts of these results for homogeneous tetrahedral polynomials (that is, polynomials of degree at most one in each variable) in Rademacher variables and Łochowski [48] and Adamczak [1] for random variables with log-concave tails. Inequalities of this type, while implying (up to constants) hypercontractive bounds, have a serious downside as the analysis of the empirical processes involved is in general difficult. It is therefore important to obtain two-sided bounds in terms of purely deterministic quantities. Such bounds for random quadratic forms in independent symmetric random variables with log-concave tails have been obtained by Latała [43] (the case of linear forms was solved earlier by Gluskin and Kwapień [29], whereas bounds for quadratic forms in Gaussian variables were obtained by Hanson-Wright [32], Borell [15] and Arcones-Giné [5]). Their counterparts for multilinear forms of arbitrary degree in nonnegative random variables with log-concave tails have been derived by Latała and Łochowski [45]. As for the symmetric case, the general problem is still open. An important breakthrough has been obtained by Latała [44], who proved two-sided estimates for Gaussian chaos of arbitrary order, that is for homogeneous tetrahedral polynomials of arbitrary degree in independent Gaussian variables (we recall his bounds below as they are the starting point for our investigations). For general symmetric random variables with log-concave tails similar bounds are known only for chaos of order at most three [2].

Polynomials in independent random variables have been also investigated in relation with combinatorial problems, e.g. with subgraph counting [22, 23, 26, 27, 35, 36, 49]. The best known result for general polynomials in this area has been obtained by Kim and Vu [37, 65], who presented a family of powerful inequalities for \([0,1]\)-valued random variables. Over the last decade they have been applied successfully to handle many problems in probabilistic combinatorics. Some recent inequalities for polynomials in subexponential random variables have been also obtained by Schudy and Sviridenko [59, 60]. They are a generalization of the special case of exponential random variables in [45] and are expressed in terms of quantities similar to those considered by Kim-Vu.

Since it is beyond the scope of this paper to give a precise account of all the concentration inequalities for polynomials, we refer the Reader to the aforementioned sources and recommend also the monographs [24, 42], where some parts of the theory are presented in a uniform way. As already mentioned we will present in detail only the results from [44], which are our main tool as well as motivation.

As for concentration results for general non-Lipschitz functions, the only reference we are aware of, which addresses this question is [30], where the Authors obtain interesting inequalities for stationary measures of certain Markov processes and functions satisfying a Lyapunov type condition. Their bounds are not comparable to the ones which we present in this paper. On the one hand they work in a more general Markov process setting, on the other hand, it seems that their results are restricted to the Gaussian-exponential concentration and do not apply to functionals with heavier tails (such as polynomials of degree higher than two). Since the language of [30] is very different from ours, we will not describe the inequalities obtained therein and refer the interested Reader to the original paper.

Let us now proceed to the presentation of our results. To do this we will first formulate a two-sided tail and moment inequality for homogeneous tetrahedral polynomials in i.i.d. standard Gaussian variables due to Latała [44]. To present it in a concise way we need to introduce some notation which we will use throughout the article. For a positive integer \(n\) we will denote \([n] = \{1,\ldots ,n\}\). The cardinality of a set \(I\) will be denoted by \(\# I\). For \(\mathbf{i}= (i_1,\ldots ,i_d) \in [n]^d\) and \(I\subseteq [d]\) we write \(\mathbf{i}_{I}=(i_{k})_{k\in I}\). We will also denote \(|\mathbf{i}| = \max _{j\le d} {i_j}\) and \(|\mathbf{i}_I| = \max _{j \in I} i_j\).

Consider thus a \(d\)-indexed matrix \(A = (a_{i_1,\ldots ,i_d})_{i_1,\ldots ,i_d = 1}^n\), such that \(a_{i_1,\ldots ,i_d} = 0\) whenever \(i_j = i_k\) for some \(j\ne k\), a sequence \(g_1,\ldots ,g_n\) of i.i.d. \(\mathcal {N}(0,1)\) random variables and define

\[Z = \sum _{\mathbf{i}\in [n]^d} a_{\mathbf{i}}\, g_{i_1}\cdots g_{i_d}. \tag{1}\]

Without loss of generality we can assume that the matrix \(A\) is symmetric, i.e., for all permutations \(\sigma :[d]\rightarrow [d], a_{i_1,\ldots ,i_d} = a_{i_{\sigma (1)},\ldots ,i_{\sigma (d)}}\).

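Let now \(P_d\) be the set of partitions of \([d]\) into nonempty, pairwise disjoint sets. For a partition \(\mathcal {J} =\{J_1,\ldots ,J_k\}\), and a \(d\)-indexed matrix \(A = (a_\mathbf{i})_{\mathbf{i}\in [n]^d}\) (not necessarily symmetric or with zeros on the diagonal), define

\[\Vert A\Vert _{\mathcal {J}} = \sup \Big \{ \sum _{\mathbf{i}\in [n]^d} a_{\mathbf{i}} \prod _{l=1}^k x^{(l)}_{\mathbf{i}_{J_l}} :\left\| (x^{(l)}_{\mathbf{i}_{J_l}})\right\| _2 \le 1,\ 1\le l \le k\Big \},\]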

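where \(\left\| (x_{\mathbf{i}_{J_l}})\right\| _2 = \sqrt{\sum _{|\mathbf{i}_{J_l}|\le n} x_{\mathbf{i}_{J_l}}^2}\). Thus, e.g.,

\[\Vert (a_{ij})_{i,j\le n}\Vert _{\{1,2\}} = \sup \Big \{ \sum _{i,j\le n} a_{ij}x_{ij} :\sum _{i,j\le n} x_{ij}^2 \le 1\Big \} = \sqrt{\sum _{i,j\le n} a_{ij}^2},\]

\[\Vert (a_{ij})_{i,j\le n}\Vert _{\{1\}\{2\}} = \sup \Big \{ \sum _{i,j\le n} a_{ij}x_iy_j :\sum _{i\le n} x_i^2 \le 1,\ \sum _{j\le n} y_j^2\le 1\Big \},\]

\[\Vert (a_{ijk})_{i,j,k\le n}\Vert _{\{1,2\}\{3\}} = \sup \Big \{ \sum _{i,j,k\le n} a_{ijk}x_{ij}y_k :\sum _{i,j\le n} x_{ij}^2 \le 1,\ \sum _{k\le n} y_k^2\le 1\Big \}.\]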

From the functional analytic perspective the above norms are injective tensor product norms of \(A\) seen as a multilinear form on \( (\mathbb {R}^{n})^d\) with the standard Euclidean structure.
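For \(d = 2\) the two norms \(\Vert A\Vert _{\{1,2\}}\) and \(\Vert A\Vert _{\{1\}\{2\}}\) can be computed numerically. The following is a minimal sketch, assuming that \(\Vert A\Vert _{\mathcal {J}}\) is the supremum of the multilinear form over products of Euclidean unit balls, so that \(\Vert A\Vert _{\{1,2\}}\) is the Hilbert-Schmidt norm and \(\Vert A\Vert _{\{1\}\{2\}}\) is the operator norm. The function names and the use of power iteration are illustrative choices, not part of the original text.

```python
import math


def frobenius_norm(a):
    """||A||_{{1,2}}: supremum of sum a_ij x_ij over arrays x with sum x_ij^2 <= 1."""
    return math.sqrt(sum(v * v for row in a for v in row))


def operator_norm(a, iterations=500):
    """||A||_{{1}{2}}: supremum of sum a_ij x_i y_j over unit vectors x, y.

    Computed by power iteration on A^T A (an illustrative method).
    """
    rows, cols = len(a), len(a[0])
    y = [1.0 + 0.1 * j for j in range(cols)]  # generic starting vector
    for _ in range(iterations):
        x = [sum(a[i][j] * y[j] for j in range(cols)) for i in range(rows)]  # x = A y
        z = [sum(a[i][j] * x[i] for i in range(rows)) for j in range(cols)]  # z = A^T A y
        norm_z = math.sqrt(sum(v * v for v in z))
        if norm_z == 0.0:
            return 0.0
        y = [v / norm_z for v in z]
    x = [sum(a[i][j] * y[j] for j in range(cols)) for i in range(rows)]
    return math.sqrt(sum(v * v for v in x))  # |A y| for the (approximate) top singular vector


example = [[3.0, 0.0], [0.0, 1.0]]
hs = frobenius_norm(example)
op = operator_norm(example)
```

In this example the operator norm is strictly smaller than the Hilbert-Schmidt norm, in line with the general inequality \(\Vert A\Vert _{\mathcal {J}} \le \Vert A\Vert _{\{1,\ldots ,d\}}\).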

We are now ready to present the inequalities by Latała. Below, as in the whole article by \(C_d\) we denote a constant, which depends only on \(d\). The values of \(C_d\) may differ between occurrences.

Theorem 1.1

(Latała [44]) For any \(d\)-indexed symmetric matrix \(A = (a_{\mathbf{i}})_{\mathbf{i}\in [n]^d}\) such that \(a_\mathbf{i}= 0\) if \(i_j = i_k\) for some \(j\ne k\), the random variable \(Z\), defined by (1) satisfies for all \(p \ge 2\),

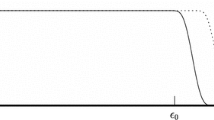

As a consequence, for all \(t > 0\),

It is worthwhile noting that for \(\#\mathcal {J} > 1\), the norms \(\Vert A\Vert _{\mathcal {J}}\) are not unconditional in the standard basis (decreasing coefficients of the matrix may not result in decreasing the norm). Moreover, for specific matrices they may not be easy to compute. On the other hand, for any \(d\)-indexed matrix \(A\) and any \(\mathcal {J} \in P_d\), we have \(\Vert A\Vert _\mathcal {J} \le \Vert A\Vert _{\{1,\ldots ,d\}} = \sqrt{\sum _{\mathbf{i}} a_\mathbf{i}^2}\). Using this fact in the upper estimates above allows one to recover (up to constants depending on \(d\)) hypercontractive estimates for homogeneous tetrahedral polynomials due to Nelson.

Our main result is an extension of the upper bound given in the above theorem to more general random functions and measures. Below we present the most basic setting we will work with and state the corresponding theorems. Some additional extensions are deferred to the main body of the article.

We will consider a random vector \(X\) in \(\mathbb {R}^n\), which satisfies the following family of Sobolev inequalities. For any \(p \ge 2\) and any smooth integrable function \(f:\mathbb {R}^n \rightarrow \mathbb {R}\),

\[\Vert f(X) - \mathbb Ef(X)\Vert _p \le L\sqrt{p}\,\Big \Vert |\nabla f(X)|\Big \Vert _p \tag{3}\]

for some constant \(L\) (independent of \(p\) and \(f\)), where \(|\cdot |\) is the standard Euclidean norm on \(\mathbb {R}^n\). It is known (see [3] and Theorem 3.4 below) that if \(X\) satisfies the logarithmic Sobolev inequality (15) with constant \(D_{LS}\), then it satisfies (3) with \(L = \sqrt{D_{LS}}\). We remark that there are many criteria for a random vector to satisfy the logarithmic Sobolev inequality (see e.g. [7, 8, 11, 39, 46]), so in particular our assumption (3) can be verified for many random vectors of interest.

Our first result is the following theorem, which provides moment estimates and concentration for \(D\)-times differentiable functions. The estimates are expressed by \(\Vert \cdot \Vert _{\mathcal {J}}\) norms of derivatives of the function (which we will identify with multi-indexed matrices). We will denote the \(d\)-th derivative of \(f\) by \(\mathbf {D}^d f\).

Theorem 1.2

Assume that a random vector \(X\) in \(\mathbb {R}^n\) satisfies the inequality (3) with constant \(L\). Let \(f:\mathbb {R}^n \rightarrow \mathbb {R}\) be a function of the class \(\mathcal {C}^D\). For all \(p \ge 2\) if \(\mathbf {D}^Df(X) \in L^p\), then

In particular if \(\mathbf {D}^Df (x)\) is uniformly bounded on \(\mathbb {R}^n\), then setting

we obtain for \(t > 0\),

The above theorem is quite technical, so we will now provide a few comments, comparing it to known results.

1. It is easy to see that if \(D = 1\), Theorem 1.2 reduces (up to absolute constants) to the Gaussian-like concentration inequality, which can be obtained from (3) by Chebyshev’s inequality (applied to general \(p\) and optimized).

2. If \(f\) is a homogeneous tetrahedral polynomial of degree \(D\), then the tail and moment estimates of Theorem 1.2 coincide with those from Latała’s Theorem. Thus Theorem 1.2 provides an extension of the upper bound from Latała’s result to a larger class of measures and functions (however we would like to stress that our proof relies heavily on Latała’s work).

3. If \(f\) is a general polynomial of degree \(D\), then \(\mathbf {D}^D f(x)\) is constant on \(\mathbb {R}^n\) (and thus equal to \(\mathbb E\mathbf {D}^D f(X)\)). Therefore in this case the function \(\eta _f\) appearing in Theorem 1.2 can be written in a simplified form

4. For polynomials in Gaussian variables, the estimates given in Theorem 1.2 can be reversed, like in Theorem 1.1. More precisely we have the following theorem, which provides an extension of Theorem 1.1 to general polynomials.

Theorem 1.3

If \(G\) is a standard Gaussian vector in \(\mathbb {R}^n\) and \(f:\mathbb {R}^n \rightarrow \mathbb {R}\) is a polynomial of degree \(D\), then for all \(p \ge 2\),

Moreover for all \(t > 0\),

where

5. It is well known that concentration of measure for general Lipschitz functions fails e.g. on the discrete cube and one has to impose some additional convexity assumptions to get sub-Gaussian concentration [63]. It turns out that if we restrict to polynomials, estimates in the spirit of Theorems 1.1 and 1.2 still hold. To formulate our result in full generality recall the definition of the \(\psi _2\) Orlicz norm of a random variable \(Y\),

\[\Vert Y\Vert _{\psi _2} = \inf \Big \{ t > 0 :\mathbb E\exp \Big (\frac{Y^2}{t^2}\Big ) \le 2\Big \}.\]

By integration by parts and Chebyshev’s inequality \(\Vert Y\Vert _{\psi _2} < \infty \) is equivalent to a sub-Gaussian tail decay for \(Y\). We have the following result for polynomials in sub-Gaussian random vectors with independent components.

Theorem 1.4

Let \(X = (X_1,\ldots ,X_n)\) be a random vector with independent components, such that for all \(i \le n, \Vert X_i\Vert _{\psi _2} \le L\). Then for every polynomial \(f:\mathbb {R}^n \rightarrow \mathbb {R}\) of degree \(D\) and every \(p \ge 2\),

As a consequence, for any \(t > 0\),

where

6. To give the Reader a flavour of possible applications let us mention the Hanson-Wright inequality [32]. Namely, for a random vector \(X = (X_1,\ldots ,X_n)\) in \(\mathbb {R}^n\) with square integrable and mean-zero components and a real symmetric matrix \(A = (a_{ij})_{i,j \le n}\), consider the random variable

\[Z = \sum _{i,j=1}^n a_{ij}X_iX_j.\]

If the components of \(X\) are independent and \(\Vert X_i\Vert _{\psi _2} \le L\) for \(i=1,\ldots ,n\), then it follows immediately from Theorem 1.4 that for all \(t>0\),

\[\mathbb P\big (|Z - \mathbb EZ| \ge t\big ) \le 2\exp \Big (-\frac{1}{C}\min \Big (\frac{t^2}{L^4\Vert A\Vert _{\{1,2\}}^2},\frac{t}{L^2\Vert A\Vert _{\{1\}\{2\}}}\Big )\Big ). \tag{5}\]

Similarly, Theorem 1.2 implies (5) under the assumption that \(X\) satisfies the log-Sobolev inequality (15) with constant \(L^2\) (no independence of components of \(X\) is assumed). Moreover, if \(X\) is a standard Gaussian vector in \(\mathbb {R}^n\), then by Theorem 1.3 the tail estimate (5) can be reversed up to numerical constants. We postpone further applications of our theorems to subsequent sections of the article and here we announce only that apart from polynomials we apply Theorem 1.2 to additive functionals and \(U\)-statistics of random vectors, in particular to linear eigenvalue statistics of random matrices, obtaining bounds which complement known estimates by Guionnet and Zeitouni [31]. Theorem 1.4 is applied to the problem of subgraph counting in large random graphs. In the special case when one counts copies of a given cycle in a random graph \(G(n,p)\), our result allows one to obtain a tail inequality which is optimal whenever \(p \ge n^{-\frac{k-2}{2(k-1)}} \log ^{-\frac{1}{2}} n\), where \(k\) is the length of the cycle. To the best of our knowledge this is the sharpest currently known result for this range of \(p\).

7. Let us now briefly discuss optimality of our inequalities. The lower bound in Theorem 1.3 clearly shows that Theorem 1.2 is optimal in the class of measures and functions it covers up to constants depending only on \(D\). As for Theorem 1.4, it is similarly optimal in the class of random vectors with independent sub-Gaussian coordinates. In concrete combinatorial applications, for \(0\)–\(1\) random variables this theorem may be however suboptimal. This can be seen already for \(D = 1\), for a linear combination of independent Bernoulli variables \(X_1,\ldots ,X_n\) with \(\mathbb P(X_i = 1) = 1 - \mathbb P(X_i=0) = p\). When \(p\) becomes small, the tail bound for such variables given e.g. by the Chernoff inequality is more subtle than what can be obtained from general inequalities for sums of sub-Gaussian random variables and the fact that \(\Vert X_i\Vert _{\psi _2}\) is of order \((\log (2/p))^{-1/2}\). Roughly speaking, this is the reason why in our estimates for random graphs we have the restriction on how small \(p\) can be. At the same time our inequalities still give results comparable to what can be obtained from other general inequalities for polynomials. As already noted in the survey [36], bounds obtained from various general inequalities for the subgraph-counting problem may not be directly comparable, i.e. those performing well in one case may exhibit worse performance in some other cases. Similarly, our inequalities cannot be in general compared e.g. to the estimates by Kim and Vu [37, 38]. For this reason and since it would require introducing new notation, we will not discuss their estimates and just indicate, when presenting applications of Theorem 1.4, several situations when our inequalities perform in a better or worse way than those by Kim and Vu. Let us only mention that the Kim-Vu inequalities similarly as ours are expressed in terms of higher order derivatives of the polynomials. 
However, Kim and Vu (as well as Schudy and Sviridenko) look at maxima of absolute values of partial derivatives, which does not lead to tensor-product norms which we consider. While in the general sub-Gaussian case we consider, such tensor product norms cannot be avoided (in view of Theorem 1.3), it is not necessarily the case for \(0\)–\(1\) random variables.

8. A version of Theorem 1.2 for vectors of independent random variables satisfying the modified logarithmic Sobolev inequality (see e.g. [28]) instead of the classical log-Sobolev inequality is also discussed. In particular, in Theorem 3.4 we relate the modified log-Sobolev inequality to a certain Sobolev-type inequality with a non-Euclidean norm of the gradient and with the constant independent of the dimension.
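The order of \(\Vert X_i\Vert _{\psi _2}\) for Bernoulli variables quoted in comment 7 can be checked directly. The sketch below assumes the usual normalisation \(\Vert Y\Vert _{\psi _2} = \inf \{t>0 :\mathbb E\exp (Y^2/t^2)\le 2\}\); under this assumption, for \(\mathbb P(X=1) = p\) the condition \(1 - p + p e^{1/t^2} \le 2\) gives the closed form \((\log (1+1/p))^{-1/2}\), which is comparable to \((\log (2/p))^{-1/2}\). The function names are hypothetical and the bisection is only a numerical cross-check.

```python
import math


def psi2_bernoulli_closed_form(p):
    """Closed form: 1 - p + p*exp(1/t^2) <= 2  iff  t >= log(1 + 1/p)^(-1/2)."""
    return 1.0 / math.sqrt(math.log(1.0 + 1.0 / p))


def psi2_bernoulli_bisection(p, lo=1e-3, hi=10.0, steps=200):
    """Numerical infimum of {t > 0 : E exp(X^2 / t^2) <= 2} for X ~ Bernoulli(p)."""

    def feasible(t):
        exponent = 1.0 / (t * t)
        if exponent > 700.0:  # exp would overflow; the constraint clearly fails
            return False
        return 1.0 - p + p * math.exp(exponent) <= 2.0

    for _ in range(steps):
        mid = 0.5 * (lo + hi)
        if feasible(mid):
            hi = mid
        else:
            lo = mid
    return hi


def reference_order(p):
    """The order (log(2/p))^(-1/2) quoted in the text."""
    return 1.0 / math.sqrt(math.log(2.0 / p))
```

For small \(p\) the norm decays only logarithmically, which is why sub-Gaussian bounds lose information compared with Chernoff-type estimates in the sparse regime.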

The organization of the paper is as follows. First, in Sect. 2, we introduce the notation used in the paper, next in Sect. 3 we give the proof of Theorem 1.2 together with some generalizations and examples of applications. In Sect. 4 we prove Theorem 1.3, whereas in Sect. 5 we present the proof of Theorem 1.4 and applications to the subgraph counting problems. In Sect. 6 we provide further refinements of estimates from Sect. 3 in the case of independent random variables satisfying modified log-Sobolev inequalities (they are deferred to the end of the article as they are more technical than those of Sect. 3). In the Appendix we collect some additional facts used in the proofs.

2 Notation

Sets and indices For a positive integer \(n\) we will denote \([n] = \{1,\ldots ,n\}\). The cardinality of a set \(I\) will be denoted by \(\# I\).

For \(\mathbf{i}= (i_1,\ldots ,i_d)\in [n]^d\) and \(I\subseteq [d]\) we write \(\mathbf{i}_{I}=(i_{k})_{k\in I}\). We will also denote \(|\mathbf{i}| = \max _{j\le d} {i_j}\) and \(|\mathbf{i}_I| = \max _{k\in I} i_k\).

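For a finite set \(A\) and an integer \(d \ge 0\) we set

\[A^{\underline{d}} = \{\mathbf{i}= (i_1,\ldots ,i_d) \in A^d :i_j \ne i_k \;\text {for all}\; j \ne k\}\]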

(i.e. \(A^{\underline{d}}\) is the set of \(d\)-indices with pairwise distinct coordinates). Accordingly we will denote \(n^{\underline{d}} = n(n-1)\cdots (n-d+1)\).
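As a quick illustration of this notation, the set \(A^{\underline{d}}\) can be enumerated and its cardinality compared with \(n^{\underline{d}}\). This is only a sketch; the helper names are hypothetical.

```python
from itertools import permutations


def distinct_indices(a, d):
    """All d-indices over the finite set `a` with pairwise distinct coordinates."""
    return list(permutations(sorted(a), d))


def falling_factorial(n, d):
    """n(n-1)...(n-d+1), with the empty product equal to 1 for d = 0."""
    result = 1
    for k in range(d):
        result *= n - k
    return result


indices = distinct_indices({1, 2, 3, 4}, 2)
```

Here `indices` contains pairs such as `(1, 2)` and `(2, 1)` but no pair with repeated coordinates.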

For a finite set \(I\), by \(P_I\) we will denote the family of partitions of \(I\) into nonempty, pairwise disjoint sets. For simplicity we will write \(P_d\) instead of \(P_{[d]}\).

For a finite set \(I\) by \(\ell _2(I)\) we will denote the finite dimensional Euclidean space \(\mathbb {R}^I\) endowed with the standard Euclidean norm \(|x|_2 = \sqrt{\sum _{i\in I} x_i^2}\). Whenever there is no risk of confusion we will denote the standard Euclidean norm simply by \(|\cdot |\).

Multi-indexed matrices For a function \(f:\mathbb {R}^n \rightarrow \mathbb {R}\) by \(\mathbf {D}^d f(x)\) we will denote the (\(d\)-indexed) matrix of its derivatives of order \(d\), which we will identify with the corresponding symmetric \(d\)-linear form. If \(M = (M_\mathbf{i})_{\mathbf{i}\in [n]^d}, N = (N_\mathbf{i})_{\mathbf{i}\in [n]^d}\) are \(d\)-indexed matrices, we define \(\langle M,N\rangle =\sum _{\mathbf{i}\in [n]^d} M_\mathbf{i}N_\mathbf{i}\). Thus for all vectors \(y^{1},\ldots ,y^{d} \in \mathbb {R}^n\) we have \(\mathbf {D}^d f(x) (y^{1},\ldots ,y^{d}) = \langle \mathbf {D}^d f(x),y^{1}\otimes \cdots \otimes y^{d}\rangle \), where \(y^{1}\otimes \cdots \otimes y^{d} = (y^{1}_{i_1}y^{2}_{i_2}\ldots y^{d}_{i_d})_{\mathbf{i}\in [n]^d}\).

We will also define the Hadamard product of two such matrices \(M\circ N\) as a \(d\)-indexed matrix with entries \(m_{\mathbf{i}} = M_\mathbf{i}N_\mathbf{i}\) (pointwise multiplication of entries).
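The pairing \(\langle M,N\rangle \), the tensor product \(y^{1}\otimes \cdots \otimes y^{d}\) and the Hadamard product can be illustrated on a small example. The sketch below stores \(d\)-indexed matrices as dictionaries keyed by (0-based) multi-indices, which is an illustrative choice, and uses the hypothetical test function \(f(x) = x_1^2 + x_1x_2\), whose second derivative is the constant matrix with rows \((2,1)\) and \((1,0)\).

```python
from itertools import product


def tensor(*vectors):
    """Tensor product of d vectors in R^n, as a dict indexed by d-tuples."""
    n = len(vectors[0])
    out = {}
    for idx in product(range(n), repeat=len(vectors)):
        value = 1.0
        for vec, i in zip(vectors, idx):
            value *= vec[i]
        out[idx] = value
    return out


def inner(m, other):
    """<M, N> = sum over all multi-indices of M_i * N_i."""
    return sum(m[idx] * other[idx] for idx in m)


def hadamard(m, other):
    """Entrywise (Hadamard) product of two d-indexed matrices."""
    return {idx: m[idx] * other[idx] for idx in m}


# Second derivative of f(x) = x_1^2 + x_1 x_2 (constant in x).
hessian = {(0, 0): 2.0, (0, 1): 1.0, (1, 0): 1.0, (1, 1): 0.0}
y1, y2 = [1.0, 2.0], [3.0, 4.0]
value = inner(hessian, tensor(y1, y2))  # D^2 f(x)(y1, y2)
```

The value agrees with the bilinear form \((y^1)^T H y^2\) computed by hand.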

Let us also define the notion of “generalized diagonals” of a \(d\)-indexed matrix \(A = (a_\mathbf{i})_{\mathbf{i}\in [n]^d}\). For a fixed set \(K \subseteq [d]\), with \(\#K > 1\), the “generalized diagonal” corresponding to \(K\) is the set of indices \(\{\mathbf{i}\in [n]^d:i_k = i_l\;\text {for all}\; k,l \in K\}\).

Constants We will use the letter \(C\) to denote absolute constants and \(C_a\) for constants depending only on some parameter \(a\). In both cases the values of such constants may differ between occurrences.

3 A concentration inequality for non-Lipschitz functions

In this Section we prove Theorem 1.2. Let us first state our main tool, which is an inequality by Latała in a decoupled version.

Theorem 3.1

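(Latała [44]) Let \(A = (a_\mathbf{i})_{\mathbf{i}\in [n]^d}\) be a \(d\)-indexed matrix with real entries and let \(G_1,G_2,\ldots ,G_d\) be i.i.d. standard Gaussian vectors in \(\mathbb {R}^n\). Let \(Z = \langle A, G_1\otimes \cdots \otimes G_d\rangle \). Then for every \(p\ge 2\),

\[C_d^{-1}\sum _{\mathcal {J} \in P_d} p^{\#\mathcal {J}/2}\Vert A\Vert _{\mathcal {J}} \le \Vert Z\Vert _p \le C_d\sum _{\mathcal {J} \in P_d} p^{\#\mathcal {J}/2}\Vert A\Vert _{\mathcal {J}}.\]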

Thanks to general decoupling inequalities for \(U\)-statistics [25], which we recall in the “Appendix” (Theorem 7.1), the above theorem is formally equivalent to Theorem 1.1. In fact in [44] Latała first proves the above version. In the proof of Theorem 3.3, which is a slight generalization of Theorem 1.2, we will need just Theorem 3.1 (in particular in this part of the article we do not need any decoupling inequalities).

From now on we will work in a more general setting than in Theorem 1.2 and assume that \(X\) is a random vector in \(\mathbb {R}^n\), such that for all \(p\ge 2\) there exists a constant \(L_X(p)\) such that for all bounded \(\mathcal {C}^1\) functions \(f :\mathbb {R}^n \rightarrow \mathbb {R}\),

\[\Vert f(X) - \mathbb Ef(X)\Vert _p \le L_X(p)\Big \Vert |\nabla f(X)|\Big \Vert _p. \tag{6}\]

Clearly in this situation the above inequality generalizes to all \(\mathcal {C}^1\) functions (if the right-hand side is finite then the left-hand side is well defined and the inequality holds).

Let now \(G\) be a standard \(n\)-dimensional Gaussian vector, independent of \(X\). Using the Fubini theorem together with the fact that for some absolute constant \(C\), all \(x \in \mathbb {R}^n\) and \(p \ge 2, C^{-1}\sqrt{p}|x| \le \Vert \langle x, G\rangle \Vert _p \le C\sqrt{p}|x|\), we can linearise the right-hand side above and write (6) equivalently (up to absolute constants) as

\[\Vert f(X) - \mathbb Ef(X)\Vert _p \le \frac{L_X(p)}{\sqrt{p}}\Big \Vert \langle \nabla f(X),G\rangle \Big \Vert _p. \tag{7}\]

We remark that similar linearisation has been used by Maurey and Pisier to provide a simple proof of the Gaussian concentration inequality [57, 58] (see the remark following Theorem 3.3 below). Inequality (7) has an advantage over (6) as it allows for iteration leading to the following simple proposition.
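The two-sided bound \(C^{-1}\sqrt{p}|x| \le \Vert \langle x, G\rangle \Vert _p \le C\sqrt{p}|x|\) used in the linearisation can be checked numerically. Since \(\langle x,G\rangle \) has the law of \(|x|g\) with \(g\) standard Gaussian, it is enough to look at \(\Vert g\Vert _p/\sqrt{p}\). The sketch below relies on the classical formula \(\mathbb E|g|^p = 2^{p/2}\Gamma ((p+1)/2)/\sqrt{\pi }\); the function name and the sampled values of \(p\) are illustrative.

```python
import math


def gaussian_lp_norm(p):
    """||g||_p for g ~ N(0, 1), via E|g|^p = 2^(p/2) * Gamma((p+1)/2) / sqrt(pi)."""
    log_moment = (
        0.5 * p * math.log(2.0)
        + math.lgamma(0.5 * (p + 1.0))
        - 0.5 * math.log(math.pi)
    )
    return math.exp(log_moment / p)


# Ratio ||g||_p / sqrt(p) for a few exponents p >= 2.
ratios = {p: gaussian_lp_norm(p) / math.sqrt(p) for p in (2, 4, 8, 16, 64, 256)}
```

For these exponents the ratio stays between \(0.6\) and \(1/\sqrt{2}\), consistent with the existence of an absolute constant \(C\).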

Proposition 3.2

Consider \(p \ge 2\) and let \(X\) be an \(n\)-dimensional random vector satisfying (6). Let \(f :\mathbb {R}^n \rightarrow \mathbb {R}\) be a \(\mathcal {C}^D\) function. Let moreover \(G_1,\ldots ,G_D\) be independent standard Gaussian vectors in \(\mathbb {R}^n\), independent of \(X\). Then for all \(p \ge 2\), if \(\mathbf {D}^D f(X) \in L^p\), then

Proof

Induction on \(D\). For \(D = 1\) the assertion of the proposition coincides with (7), which (as already noted) is equivalent to (6). Let us assume that the proposition holds for \(D-1\). Applying thus (8) with \(D-1\) instead of \(D\), we obtain

Applying now the triangle inequality in \(L^p\), we get

Let us now apply (7) conditionally on \(G_1,\ldots ,G_{D-1}\) to the function \(f_1(x) = \Big \langle \mathbf {D}^{D-1} f(x),G_1\otimes \cdots \otimes G_{D-1}\Big \rangle \). Since \(\Big \langle \mathbf {D}^{D-1} f(X) - \mathbb E_X \mathbf {D}^{D-1}f(X),G_1\otimes \cdots \otimes G_{D-1}\Big \rangle = f_1(X) - \mathbb E_X f_1(X)\) and \(\langle \nabla f_1(X),G_D\rangle = \langle \mathbf {D}^D f(X),G_1\otimes \cdots \otimes G_D\rangle \), we obtain

To finish the proof it is now enough to integrate this inequality with respect to the remaining Gaussian vectors and combine the obtained estimate with (9) and (10).\(\square \)

Let us now specialize to the case when \(L_X(p) = Lp^\gamma \) for some \(L>0,\gamma \ge 1/2\). Combining the above proposition with Latała’s Theorem 3.1, we obtain immediately the following theorem, a special case of which is Theorem 1.2.

Theorem 3.3

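Assume that \(X\) is a random vector in \(\mathbb {R}^n\), such that for some constants \(L>0,\gamma \ge 1/2\), all smooth bounded functions \(f\) and all \(p \ge 2\),

\[\Vert f(X) - \mathbb Ef(X)\Vert _p \le Lp^{\gamma }\Big \Vert |\nabla f(X)|\Big \Vert _p. \tag{11}\]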

For any smooth function \(f:\mathbb {R}^n \rightarrow \mathbb {R}\) of class \(\mathcal {C}^D\) and \(p \ge 2\) if \(\mathbf {D}^D f(X) \in L^p\), then

Moreover, if \(\mathbf {D}^D f \) is bounded uniformly on \(\mathbb {R}^n\), then for all \(t > 0\),

where

Proof

The first part is a straightforward combination of Proposition 3.2 and Theorem 3.1. The second part follows from the first one by Chebyshev’s inequality \(\mathbb P\Big (|Y|\ge e\Vert Y\Vert _p\Big ) \le \exp (-p)\) applied with \(p = \eta _f(t)/C_D\) (note that if \(\eta _f(t)/C_D\le 2\) then one can make the tail bound asserted in the theorem trivial by adjusting the constants).\(\square \)
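For completeness, the form of Chebyshev's inequality used in the proof above can be spelled out as follows (a short derivation, assuming \(0< \Vert Y\Vert _p < \infty \)):

```latex
\[
\mathbb P\big(|Y| \ge e\Vert Y\Vert_p\big)
  = \mathbb P\big(|Y|^p \ge e^p \Vert Y\Vert_p^p\big)
  \le \frac{\mathbb E|Y|^p}{e^p \Vert Y\Vert_p^p}
  = e^{-p}.
\]
```

In particular, whenever \(p \ge 2\) is such that \(e\Vert Y\Vert _p \le t\), one gets \(\mathbb P(|Y| \ge t) \le e^{-p}\), which is how a moment bound is converted into a tail bound.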

Remark

In [57, 58] Pisier presents a stronger inequality than (11) with \(\gamma = 1/2\). More specifically, he proves that if \(X,G\) are independent standard centered Gaussian vectors in \(\mathbb {R}^n\), \(E\) is a Banach space and \(f :\mathbb {R}^n \rightarrow E\) is a \(\mathcal {C}^1\) function, then for every convex function \(\Phi :E \rightarrow \mathbb {R}\),

where \(L = \frac{\pi }{2}\). As noted in [47], Caffarelli’s contraction principle [20] implies that, e.g., a random vector \(X\) with density \(e^{-V}\), where \(V:\mathbb {R}^n \rightarrow \mathbb {R}\) satisfies \(D^2 V \ge \lambda \mathrm {Id}, \lambda > 0\) satisfies the above inequality with \(L = \frac{\pi }{2\sqrt{\lambda }}\) (where \(G\) is still a standard Gaussian vector independent of \(X\)). Therefore in this situation a similar approach as in the proof of Proposition 3.2 can be used for functions \(f\) with values in a general Banach space. Moreover, a counterpart of Latała’s results is known for chaos with values in a Hilbert space (to the best of our knowledge this observation has not been published, in fact it can be quite easily obtained from the version for real valued chaos). Thus in this case we can obtain a counterpart of Theorem 3.3 (with \(\gamma = 1/2\)) for Hilbert space valued-functions. In the case of a general Banach space two-sided estimates with deterministic quantities for Gaussian chaos are not known. Still, one can use some known inequalities (like hypercontraction or Borell-Arcones-Giné inequality) instead of Theorem 3.1 and thus obtain new concentration bounds. We remark that if one uses hypercontraction, one can obtain explicit dependence of the constants on the degree of the polynomial, since explicit constants are known for hypercontractive estimates of (Banach space-valued) Gaussian chaos and one can keep track of them during the proof. We skip the details.

In view of Theorem 3.3 a natural question arises: for what measures is the inequality (11) satisfied? Before we provide examples, for technical reasons let us recall the definition of the length of the gradient of a locally Lipschitz function. For a metric space \((\mathcal {X},d)\), a locally Lipschitz function \(f:\mathcal {X} \rightarrow \mathbb {R}\) and \(x \in \mathcal {X}\), we define

\[|\nabla f|(x) = \limsup _{d(x,y)\rightarrow 0^+} \frac{|f(y) - f(x)|}{d(x,y)}.\]

If \(\mathcal {X} = \mathbb {R}^n\) with a Euclidean metric and \(f\) is differentiable at \(x\), then clearly \(|\nabla f|(x)\) coincides with the Euclidean length of the usual gradient \(\nabla f(x)\). For this reason, with a slight abuse of notation, we will write \(|\nabla f(x)|\) instead of \(|\nabla f|(x)\). We will consider only measures on \(\mathbb {R}^n\), however since we allow measures which are not necessarily absolutely continuous with respect to the Lebesgue measure, at some points in the proofs we will work with the above abstract definition.

Going back to the question of measures satisfying (11), it is well known (see e.g. [52]) that if \(X\) satisfies the Poincaré inequality

\[\mathrm {Var}\, f(X) \le D_{Poin}\,\mathbb E|\nabla f(X)|^2\]

for all locally Lipschitz bounded functions, then \(X\) satisfies (11) with \(\gamma = 1\) and \(L = C\sqrt{D_{Poin}}\) (recall that \(C\) always denotes a universal constant). Assume now that \(X\) satisfies the logarithmic Sobolev inequality

for locally Lipschitz bounded functions, where for a nonnegative random variable \(Y\),

\[\mathrm {Ent}\, Y = \mathbb EY\log Y - \mathbb EY \log \mathbb EY.\]

Then, by the results from [3], it follows that \(X\) satisfies (11) with \(\gamma = 1/2\) and \(L = \sqrt{D_{LS}}\).

We will now generalize this observation to measures satisfying the modified logarithmic Sobolev inequality (introduced in [28]). We will present it in greater generality than needed for proving (11), since we will use it later (in Sect. 6) to prove refined concentration results for random vectors with independent Weibull coordinates.

Let \(\beta \in [2,\infty )\). We will say that a random vector \(Y \in \mathbb {R}^k\) satisfies a \(\beta \)-modified logarithmic Sobolev inequality if for every locally Lipschitz bounded positive function \(f :\mathbb {R}^k \rightarrow (0,\infty )\),

Let us also introduce two quantities, measuring the length of the gradient in product spaces. Consider a locally Lipschitz function \(f :\mathbb {R}^{mk} \rightarrow \mathbb {R}\), where we identify \(\mathbb {R}^{mk}\) with the \(m\)-fold Cartesian product of \(\mathbb {R}^k\). Let \(x = (x_1,\ldots ,x_m)\), where \(x_i \in \mathbb {R}^k\). For each \(i=1,\ldots ,m\), let \(|\nabla _i f(x)|\) be the length of the gradient of \(f\), treated as a function of \(x_i\) only, with the other coordinates fixed. Now for \(r \ge 1\), set

\[|\nabla f(x)|_r = \Big (\sum _{i=1}^m |\nabla _i f(x)|^r\Big )^{1/r}.\]

Note that if \(f\) is differentiable at \(x\), then \(|\nabla f(x)|_2 = |\nabla f(x)|\) (the Euclidean length of the “true” gradient), whereas for \(k = 1\) (and \(f\) differentiable), \(|\nabla f(x)|_r\) is the \(\ell _r^m\) norm of \(\nabla f(x)\).

Theorem 3.4

Let \(\beta \in [2,\infty )\) and \(Y\) be a random vector in \(\mathbb {R}^k\), satisfying (16). Consider a random vector \(X = (X_1,\ldots ,X_m)\) in \(\mathbb {R}^{mk}\), where \(X_1,\ldots ,X_m\) are independent copies of \(Y\). Then for any locally Lipschitz \(f :\mathbb {R}^{mk} \rightarrow \mathbb {R}\) such that \(f(X)\) is integrable, and \(p \ge 2\),

where \(\alpha = \frac{\beta }{\beta -1}\) is the Hölder conjugate of \(\beta \).

In particular using the above theorem with \(m = 1\) and \(k = n\), we obtain the following

Corollary 3.5

If \(X\) is a random vector in \(\mathbb {R}^n\) which satisfies the \(\beta \)-modified log-Sobolev inequality (16), then it satisfies (11) with \(\gamma = \frac{\beta -1}{\beta } \ge \frac{1}{2}\) and \(L = C_\beta \max (D_{LS_\beta }^{1/2},D_{LS_\beta }^{1/\beta })\).

We remark that in the class of logarithmically concave random vectors, the \(\beta \)-modified log-Sobolev inequality is known to be equivalent to concentration for 1-Lipschitz functions of the form \(\mathbb P\Big (|f(X) - \mathbb Ef(X)| \ge t \Big ) \le 2\exp \Big (-c t^{\beta /(\beta -1)}\Big )\) [53].

Proof of Theorem 3.4

By the tensorization property of entropy (see e.g. [46], Proposition 5.6) we get for all positive locally Lipschitz bounded functions \(f :\mathbb {R}^{mk} \rightarrow (0,\infty )\),

Following [3], consider now any locally Lipschitz bounded \(f > 0\) and denote \(F(t) = \mathbb Ef(X)^t\). For \(t > 2\),

and

By (18) applied to the function \(g = f^{t/2} = \varphi \circ f \) where \(\varphi (u) = |u|^{t/2}\),

By the chain rule and the Hölder inequality for the pair of conjugate exponents \(t/2, t/(t-2)\),

Similarly, for \(t \ge \beta \),

Thus we get for \(\beta \le t \le p\),

Denote \( a = \frac{D_{LS_\beta }}{2}\Big \Vert |\nabla f(X)|_2\Big \Vert _p^2, b = \frac{D_{LS_\beta }}{2^{\beta -1}}\Big \Vert |\nabla f(X)|_\beta \Big \Vert _p^\beta , g(t) = \left( \mathbb Ef(X)^t \right) ^{2/t}\). The above inequality can be written as

for \(t \in [\beta ,p]\) or, denoting \(G = g^{\beta /2}\),

For \(\varepsilon > 0\) consider now the function \(H_\varepsilon (t) = (g(\beta ) + a (t-\beta ) + b^{2/\beta } t^{2 - 2/\beta }+\varepsilon )^{\beta /2}\). We have

and

where we used the assumption \(\beta \ge 2\). Using the last three inequalities together with the fact that for \(t \ge 0\) the function \(x \mapsto x^{(\beta -2)/2}a + t^{\beta -2}b\) is increasing on \([0,\infty )\) we obtain that \(G(t) \le H_\varepsilon (t)\) for all \(t \in [\beta ,p]\), which by taking \(\varepsilon \rightarrow 0^+\) implies that for \(p \ge \beta \),

i.e.,

The above inequality has been proved so far for strictly positive, locally Lipschitz functions (the boundedness assumption can be easily removed by truncation and passage to the limit). For the case of a general locally Lipschitz function \(f\), take any \(\varepsilon >0\) and consider \(\tilde{f} = |f| + \varepsilon \). Since \(\tilde{f}\) is strictly positive and locally Lipschitz, the above inequality holds also for \(\tilde{f}\). Taking \(\varepsilon \rightarrow 0^+\), we can now extend (19) to arbitrary locally Lipschitz \(f\).

Finally, assume \(f :\mathbb {R}^{mk} \rightarrow \mathbb {R}\) is locally Lipschitz and \(f(X)\) is integrable. Applying (19) to \(f - \mathbb Ef(X)\) instead of \(f\) and taking the square root, we obtain

for \(p \ge \beta \). For \(p \in [2, \beta ]\), since (16) implies the Poincaré inequality with constant \(D_{LS_\beta }/2\) (see Proposition 2.3. in [28]), we get

(see the remark following (14)). These two estimates yield (17) with \(C_\beta = C \sqrt{\beta }\).\(\square \)

3.1 Applications of Theorem 1.2

Let us now present certain applications of estimates established in the previous section. For simplicity we will restrict to the basic setting presented in Theorem 1.2.

3.1.1 Polynomials

A typical application of Theorem 1.2 would be to obtain tail inequalities for multivariate polynomials in the random vector \(X\). The constants involved in such estimates do not depend on the dimension, but only on the degree of the polynomial. As already mentioned in the introduction, our results in this setting can be considered as a transference of inequalities by Latała from the tetrahedral Gaussian case to the case of not necessarily product random vectors and general polynomials.

3.1.2 Additive functionals and related statistics

We will now consider three classes of additive statistics of a random vector, often arising in various problems.

Additive functionals Let \(X\) be a random vector in \(\mathbb {R}^n\) satisfying (3). For a function \(f:\mathbb {R}\rightarrow \mathbb {R}\) define the random variable

\[ Z_f = f(X_1) + \cdots + f(X_n). \qquad (20) \]

It is classical and follows from (3) by a simple application of the Chebyshev inequality that if \(f\) is smooth with \(\Vert f'\Vert _\infty \le \alpha \), then for all \(t > 0\),

Using Theorem 1.2 we can easily obtain inequalities which hold if \(f\) is a polynomial-like function, i.e., if \(\Vert f^{(D)}\Vert _\infty < \infty \) for some \(D\). Note that the derivatives of the function \(F(x_1,\ldots ,x_n) = f(x_1)+\cdots +f(x_n)\) have a very simple diagonal form. In consequence, calculating their \(\Vert \cdot \Vert _\mathcal {J}\) norms is simple. More precisely, we have

where \(\mathrm{diag}_d(x_1,\ldots ,x_n)\) stands for the \(d\)-indexed matrix \((a_{\mathbf{i}})_{\mathbf{i}\in [n]^d}\) such that \(a_\mathbf{i}= x_i\) if \(i_1 = \cdots = i_d = i\) and \(0\) otherwise. It is easy to see that if \(\mathcal {J} = \{[d]\}\), then \(\Vert \mathrm{diag}_d(x_1,\ldots ,x_n)\Vert _\mathcal {J} = \sqrt{x_1^2+\cdots +x_n^2}\) and if \(\# \mathcal {J} \ge 2\), then \(\Vert \mathrm{diag}_d(x_1,\ldots ,x_n)\Vert _\mathcal {J} = \max _{i\le n}|x_i|\). Therefore we obtain the following corollary to Theorem 1.2. We will apply it in the next section to linear eigenvalue statistics of random matrices.
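For \(d = 2\) the two cases above can be checked numerically. The sketch below assumes (in line with the definition of the norms \(\Vert \cdot \Vert _\mathcal {J}\) in Sect. 2) that \(\mathcal {J} = \{\{1,2\}\}\) gives the Hilbert-Schmidt norm and \(\mathcal {J} = \{\{1\},\{2\}\}\) gives the operator norm; the helper name `diag_norms` and the test vector are ours.

```python
import numpy as np

def diag_norms(x):
    """Return (Hilbert-Schmidt norm, operator norm) of the matrix diag(x)."""
    a = np.diag(np.asarray(x, dtype=float))
    hs = np.linalg.norm(a, "fro")  # J = {{1, 2}}: Euclidean norm of all entries
    op = np.linalg.norm(a, 2)      # J = {{1}, {2}}: largest singular value
    return hs, op

x = np.array([3.0, -4.0, 1.0])
hs, op = diag_norms(x)
euclidean = np.sqrt(np.sum(x**2))  # sqrt(x_1^2 + ... + x_n^2)
largest = np.max(np.abs(x))        # max_i |x_i|
```

Here `hs` agrees with `euclidean` and `op` with `largest`, as the two formulas for \(\Vert \mathrm{diag}_d(x_1,\ldots ,x_n)\Vert _\mathcal {J}\) predict.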

Corollary 3.6

Let \(X\) be a random vector in \(\mathbb {R}^n\) satisfying (3), \(f :\mathbb {R}\rightarrow \mathbb {R}\) a \(\mathcal {C}^D\) function, such that \(\Vert f^{(D)}\Vert _\infty < \infty \) and let \(Z_f\) be defined by (20). Then for all \(t > 0\),

Clearly the case \(D=1\) of the above corollary recovers (21) up to constants. Moreover using the (yet unproven) Theorem 1.3 one can see that for \(f(x) = x^D\) and \(X\) being a standard Gaussian vector in \(\mathbb {R}^n\), the estimate of the corollary is optimal up to absolute constants (in this case, since \(Z_f\) is a sum of independent random variables, one can also use estimates from [33]).

Additive functionals of partial sums Let us now consider a slightly more involved additive functional of the form

Such random variables arise e.g., in the study of additive functionals of random walks (see e.g. [16, 61]). For simplicity we will only discuss what can be obtained directly for Lipschitz functions \(f\) and what Theorem 1.2 gives for \(f\) with bounded second derivative. Let thus \(F(x) = \sum _{i=1}^n f\Big (\sum _{j=1}^i x_j\Big )\). We have \(\frac{\partial }{\partial x_i} F(x) = \sum _{l\ge i} f^{\prime }\Big (\sum _{j\le l} x_j\Big )\). Therefore

which, when combined with (3) and Chebyshev’s inequality yields

Now, let us assume that \(f \in \mathcal {C}^2\) and \(f''\) is bounded. We have

Moreover

and thus

Since \(\mathbf {D}^2 F\) is a symmetric bilinear form, we have

Using the above estimates and Theorem 1.2 we obtain

To effectively bound the sub-Gaussian coefficient in the above inequality one should use some additional information about the structure of the vector \(X\). For a given function \(f\) it is of order at most \(n^5\), but if, e.g., the function \(f\) is even and \(X\) is symmetric, it clearly vanishes. In this case we get

One can check that if for instance \(X\) is a standard Gaussian vector in \(\mathbb {R}^n\) and \(f(x) = x^2\) then this estimate is tight up to the value of the constant \(C\).
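The formula \(\frac{\partial }{\partial x_i} F(x) = \sum _{l\ge i} f^{\prime }\big (\sum _{j\le l} x_j\big )\) used above can be sanity-checked against finite differences. The following is only an illustrative sketch: the choice \(f = \sin \), the dimension, the seed and the function names are ours.

```python
import numpy as np

def F(x, f):
    """F(x) = sum_i f(x_1 + ... + x_i)."""
    return np.sum(f(np.cumsum(x)))

def grad_F(x, f_prime):
    """dF/dx_i = sum_{l >= i} f'(x_1 + ... + x_l), via a reversed cumulative sum."""
    d = f_prime(np.cumsum(x))
    return np.cumsum(d[::-1])[::-1]

rng = np.random.default_rng(0)
x = rng.standard_normal(6)
analytic = grad_F(x, np.cos)  # f = sin, so f' = cos

# Central finite differences as an independent check.
eps = 1e-6
numeric = np.array([
    (F(x + eps * e, np.sin) - F(x - eps * e, np.sin)) / (2 * eps)
    for e in np.eye(len(x))
])
```

The reversed cumulative sum reflects the fact that \(x_i\) enters every partial sum with index \(l \ge i\).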

\(U\)-statistics Our last application in this section will concern \(U\)-statistics (for simplicity of order 2) of the random vector \(X\), i.e., random variables of the form

where \(h_{ij}:\mathbb {R}^2 \rightarrow \mathbb {R}\) are smooth functions. Without loss of generality let us assume that \(h_{ij}(x,y) = h_{ji}(y,x)\).

A simple application of Chebyshev’s inequality and (3) gives that if partial derivatives of \(h_{ij}\) are uniformly bounded on \(\mathbb {R}^2\) then for all \(t > 0\),

For \(h_{ij}\) of class \(\mathcal {C}^2\) with bounded derivatives of second order, a direct application of Theorem 1.2 gives

where

In particular, if \(h_{ij} = h\), a function with bounded derivatives of second order, we get \(\alpha ^2 = \mathcal {O}(n^3), \beta ^2 = \mathcal {O}(n^3), \gamma = \mathcal {O}(n)\), which shows that the oscillations of \(U\) are of order \(\mathcal {O}(n^{3/2})\). In the case of \(U\)-statistics of independent random variables, generated by bounded \(h\), this is a well known fact, corresponding to the CLT and classical Hoeffding inequalities for \(U\)-statistics. We remark that in the non-degenerate case, i.e. when \(\mathrm{Var}(\mathbb E_X h(X,Y)) > 0\), \(n^{3/2}\) is indeed the right normalization in the CLT for \(U\)-statistics (see e.g. [24]).

3.1.3 Linear statistics of eigenvalues of random matrices

We will now use Corollary 3.6 to obtain tail inequalities for linear eigenvalue statistics of Wigner random matrices. We remark that one could also apply to the random matrix case the other inequalities considered in the previous section, obtaining in particular estimates on \(U\)-statistics of eigenvalues (which have been recently investigated by Lytova and Pastur [50]). We will focus on linear eigenvalue statistics (additive functionals in the language of the previous section) and obtain inequalities involving a Sobolev norm of the function \(f\) with respect to the semicircle law (the limiting spectral distribution for Wigner ensembles) as a sub-Gaussian term. We refer the Reader to the monographs [4, 6, 51, 56] for basic facts concerning random matrices.

Consider thus a real symmetric \(n\times n\) random matrix \(A\) (\(n \ge 2\)) and let \(\lambda _1\le \cdots \le \lambda _n\) be its eigenvalues. We will be interested in concentration inequalities for functionals of the form

In [31] Guionnet and Zeitouni obtained concentration inequalities for \(Z\) with Lipschitz \(f\) assuming that the entries of \(A\) are independent and satisfy the log-Sobolev inequality with some constant \(L\). More specifically, they prove that for all \(t > 0\),

(In fact they treat a more general case of banded matrices, but for simplicity we will focus on the basic case.)

As a corollary to Theorem 1.2 we present below an inequality which complements the above result. Our aim is to replace the strong parameter \(\Vert f'\Vert _\infty \) controlling the sub-Gaussian tail by a weaker Sobolev norm with respect to the semicircular law

(recall that this is the limiting spectral distribution for Wigner matrices). Under additional smoothness assumptions on the function \(f\), this can be done in a window \(|t| \le c_f n\), where \(c_f\) depends on \(f\).

Proposition 3.7

Assume the entries of the matrix \(A\) are independent (modulo symmetry conditions) real valued, mean zero and variance one random variables, satisfying the logarithmic Sobolev inequality (15) with constant \(L^2\). If \(f\) is \(\mathcal {C}^2\) with bounded second derivative, then for all \(t > 0\),

Remark

The case \(f(x) = x^2\) shows that under the assumptions of Proposition 3.7 one cannot expect a tail behaviour better than exponential for large \(t\). Indeed, since \(Z = \frac{1}{n}(\lambda _1^2 + \cdots + \lambda _n^2) = \frac{1}{n}\sum _{i,j \le n} A_{ij}^2\), even if \(A\) is a matrix with standard Gaussian entries, we have \(\mathbb P(|Z-\mathbb EZ| \ge t) > \frac{1}{C} \exp ( -C (t^2 \wedge nt))\) for all \(t > 0\).
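The identity \(\lambda _1^2 + \cdots + \lambda _n^2 = \sum _{i,j \le n} A_{ij}^2\) behind this remark is simply \(\mathrm{tr}(A^2)\) computed in two ways. A small numerical illustration follows; the matrix size and seed are arbitrary choices of ours.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 5
g = rng.standard_normal((n, n))
# Real symmetric matrix with independent Gaussian entries on and above the diagonal.
a = np.triu(g) + np.triu(g, 1).T

eig = np.linalg.eigvalsh(a)
z_from_eigenvalues = np.sum(eig**2) / n  # (1/n) * sum_i lambda_i^2
z_from_entries = np.sum(a**2) / n        # (1/n) * sum_{i,j} A_ij^2
```

Both quantities coincide up to rounding, so for \(f(x) = x^2\) the statistic \(Z\) is a normalized sum of squares of the entries, which is the source of the exponential tail.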

Remark

An inequality similar to (23) holds in the case of Hermitian matrices with independent entries as well. In the proof given below one should then invoke an appropriate result concerning the speed of convergence of the spectral distribution of Wigner matrices to the semicircular law.

Proof

Let us identify the random matrix \(A\) with a random vector \(\tilde{A} = (A_{ij})_{1\le i\le j\le n}\) having values in \(\mathbb {R}^{n(n+1)/2}\) endowed with the standard Euclidean norm \(|\tilde{A}| = \left( \sum _{1 \le i \le j \le n} A_{ij}^2\right) ^{1/2}\). Note that \(\Vert A\Vert _{\mathrm{HS}} \le \sqrt{2} |\tilde{A}|\). By independence of coordinates of \(\tilde{A}\) and the tensorization property of the logarithmic Sobolev inequality (see, e.g., [46, Corollary 5.7]), \(\tilde{A}\) also satisfies (15) with constant \(L^2\). Furthermore, by the Hoffman-Wielandt inequality (see, e.g., [4, Lemma 2.1.19]), which asserts that if \(B, C\) are two \(n \times n\) real symmetric (or Hermitian) matrices and \(\lambda _i(B), \lambda _i(C)\) are their respective eigenvalues arranged in nondecreasing order, then

\[ \sum _{i=1}^n |\lambda _i(B) - \lambda _i(C)|^2 \le \Vert B - C\Vert _{\mathrm{HS}}^2, \]

we get that the map \(\tilde{A} \mapsto (\lambda _1/\sqrt{n}, \ldots , \lambda _n/\sqrt{n}) \in \mathbb {R}^n\) is \(\sqrt{2/n}\)-Lipschitz. Therefore, the random vector \((\lambda _1/\sqrt{n}, \ldots , \lambda _n/\sqrt{n})\) satisfies (15) with constant \(2L^2/n\). In consequence, by the results from [3] (see also Theorem 3.4), \((\lambda _1/\sqrt{n}, \ldots , \lambda _n/\sqrt{n})\) also satisfies (3) with constant \(\sqrt{2}L/\sqrt{n}\). Applying Corollary 3.6 with \(D=2\) we obtain

In what follows we shall estimate from above the term \(n^{-1}\sum _{i=1}^n (\mathbb Ef'(\lambda _i/\sqrt{n}))^2\) from (24). First, by Jensen’s inequality

where \(\mu \) is the expected spectral measure of the matrix \(n^{-1/2}A\). According to Wigner’s theorem, for a fixed \(f\), \(\mu \) converges to the semicircular law as \(n \rightarrow \infty \) and thus \(\int _\mathbb {R}(f')^2 \, d\mu \rightarrow \int _{-2}^2 (f')^2 \, d\rho \). A non-asymptotic bound on the term \(\int _\mathbb {R}f'^2 \, d\mu \) can be obtained using the result of Bobkov, Götze and Tikhomirov [12] on the speed of convergence of the expected spectral distribution of real Wigner matrices to the semicircular law. Since each entry of \(A\) satisfies the logarithmic Sobolev inequality with constant \(L^2\), it also satisfies the Poincaré inequality with the same constant (see e.g. [46, Chapter 5]). Therefore Theorem 1.1 from [12] gives

where \(F_\mu \) and \(F_\rho \) are the distribution functions of \(\mu \) and \(\rho \), respectively.

The decay of \(1-F_\mu (x)\) and \(F_\mu (x)\) as \(x \rightarrow \infty \) and \(x \rightarrow -\infty \) (resp.) can be obtained using the sub-Gaussian concentration of \(\lambda _n/\sqrt{n}\) and \(\lambda _1/\sqrt{n}\), which is, e.g., a consequence of (3) for the vector of eigenvalues of \(n^{-1/2} A\). For example, for any \(t \ge 0\),

Using the classical technique of \(\delta \)-nets for estimating the operator norm of a matrix (see e.g. [58]) and the fact that the entries of \(A\) are sub-Gaussian (as they satisfy the logarithmic Sobolev inequality) one gets \(\mathbb E\lambda _n \le \mathbb E\Vert A\Vert _{\text {op}} \le C L \sqrt{n}\), which together with (27) yields

for all \(t \ge 0\). Clearly, the same inequality holds for \(F_\mu (-CL - t)\). Integrating by parts, we get

Combining the uniform estimate (26) with (28) and using an elementary inequality \(2 x y \le x^2 + y^2\), we estimate the last integral in (29) as follows:

where

We proceed to estimate the two last terms from (30). Take \(r > 0\) such that

or put \(r=0\) if no such \(r\) exists. Note that if we assume \(C_L \ge 1\), as we obviously can, then

We shall need the following estimates, which are easy consequences of the standard estimate for a Gaussian tail:

and

Now, (31), (32) and (33) yield

We shall also need the estimate for \(\int _\mathbb {R}x^2 \, d\nu (x)\) which follows from (31), (32) and (34):

In order to estimate \(\int _\mathbb {R}f'^2 \, d\nu \), take any \(x_0 \in [-2,2]\) such that \(|f'(x_0)|^2 \le \int _{-2}^2 f'^2 \, d\rho \), and use \(|f'(x)| \le |f'(x_0)| + |x-x_0| \left\| f'' \right\| _\infty \) to obtain

Plugging (35) and (36) into the above yields

In turn, plugging (35) and (37) into (30) and then combining with (29) we finally get

which combined with (24) and (25) completes the proof.\(\square \)
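The Lipschitz property of the eigenvalue map used at the beginning of the proof rests on the Hoffman-Wielandt inequality, \(\sum _{i} |\lambda _i(B) - \lambda _i(C)|^2 \le \Vert B - C\Vert _{\mathrm{HS}}^2\). As a quick numerical sanity check (an illustration only; the matrix size, seed and helper name are ours):

```python
import numpy as np

def random_symmetric(n, rng):
    """Random real symmetric n x n matrix."""
    g = rng.standard_normal((n, n))
    return (g + g.T) / 2

rng = np.random.default_rng(2)
n = 6
b = random_symmetric(n, rng)
c = random_symmetric(n, rng)

# eigvalsh returns the eigenvalues in ascending order, as the inequality requires.
lhs = np.sum((np.linalg.eigvalsh(b) - np.linalg.eigvalsh(c)) ** 2)
rhs = np.linalg.norm(b - c, "fro") ** 2
```

In every such draw `lhs` does not exceed `rhs`; together with \(\Vert A\Vert _{\mathrm{HS}} \le \sqrt{2} |\tilde{A}|\) this gives the constant \(\sqrt{2/n}\) for the rescaled eigenvalue map.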

Remarks

1. The factor \(n^{-2/3}\) in (23) comes only from (26) and in some situations can be improved, provided one can obtain better speed of convergence to the semicircle law.

2. With some more work (using truncations or working directly on moments) one can extend the above proposition to the case \(|f''(x)| \le a(1+|x|^k)\) for some non-negative integer \(k\) and \(a \in \mathbb {R}\). In this case we obtain

We also remark that to obtain the inequality (24) one does not have to use independence of the entries of \(A\); it is enough to assume that the vector \(\tilde{A}\) satisfies the inequality (3).

4 Two-sided estimates of moments for Gaussian polynomials

We will now prove Theorem 1.3, showing that in the case of general polynomials in Gaussian variables, the estimates of Theorem 1.2 are optimal (up to constants depending only on the degree of the polynomial). In the special case of tetrahedral polynomials this follows from Latała’s Theorem 1.1 and the following result by Kwapień.

Theorem 4.1

(Kwapień, Lemma 2 in [40]) If \(X = (X_1,\ldots ,X_n)\) where \(X_i\) are independent symmetric random variables, \(Q\) is a multivariate tetrahedral polynomial of degree \(D\) with coefficients in a Banach space \(E\) and \(Q_d\) is its homogeneous part of degree \(d\), then for any symmetric convex function \(\Phi :E \rightarrow \mathbb {R}_+\) and any \(d \in \{0,1, \ldots , D\}\),

Indeed, when combined with Theorem 1.1 and the triangle inequality, the above theorem gives the following

Corollary 4.2

Let

where \(A_d = (a^{(d)}_\mathbf{i})_{\mathbf{i}\in [n]^d}\) is a \(d\)-indexed symmetric matrix of real numbers such that \(a_\mathbf{i}= 0\) if \(i_k = i_l\) for some \(k\ne l\) (we adopt the convention that for \(d=0\) we have a single number \(a^{(0)}_\emptyset \)). Then for any \(p\ge 2\),

The strategy of proof of Theorem 1.3 is very simple and relies on infinite divisibility of Gaussian random vectors, which will help us approximate the law of a general polynomial in Gaussian variables by the law of a tetrahedral polynomial, for which we will use Corollary 4.2.

It will be convenient to have the polynomial \(f\) represented as a combination of multivariate Hermite polynomials:

where

and \(h_m(x) = (-1)^m e^{x^2/2} \frac{d^m}{dx^m} e^{-x^2/2}\) is the \(m\)-th Hermite polynomial.

Let \((W_t)_{t \in [0,1]}\) be a standard Brownian motion. Consider standard Gaussian random variables \(g = W_1\) and, for any positive integer \(N\),

For any \(d \ge 0\), we have the following representation of \(h_d(g) = h_d(W_1)\) as a multiple stochastic integral (see [34, Example 7.12 and Theorem 3.21]),

Approximating the multiple stochastic integral leads to

where the limit is in \(L^2(\Omega )\) (see [34, Theorem 7.3 and formula (7.9)]) and actually the convergence holds in any \(L^p\) (see [34, Theorem 3.50]). We remark that instead of multiple stochastic integrals with respect to the Wiener process we could use the CLT for canonical \(U\)-statistics (see [24, Chapter 4.2]); however, the stochastic integral framework seems more convenient as it allows one to put all the auxiliary variables on the same probability space as the original Gaussian sequence.
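For \(d = 2\) the approximation can be made explicit. The sketch below assumes, as our reading of the construction, that the variables \(g_{j,N}\) are i.i.d. standard Gaussian with \(g = N^{-1/2}\sum _{j} g_{j,N}\), and that the tetrahedral sum runs over ordered pairs of distinct indices with the normalization \(N^{-1}\). Then \(N^{-1}\sum _{j \ne k} g_{j,N} g_{k,N} = g^2 - N^{-1}\sum _j g_{j,N}^2\), which is close to \(h_2(g) = g^2 - 1\) by the law of large numbers. The sample size, seed and function name are ours.

```python
import numpy as np

def tetrahedral_h2(gs):
    """N^{-1} * sum over ordered pairs j != k of g_j * g_k.

    Uses sum_{j != k} g_j g_k = (sum_j g_j)^2 - sum_j g_j^2 to avoid an N x N array.
    """
    n = len(gs)
    return (gs.sum() ** 2 - np.sum(gs**2)) / n

rng = np.random.default_rng(3)
N = 100_000
gs = rng.standard_normal(N)  # stand-ins for g_{1,N}, ..., g_{N,N}
g = gs.sum() / np.sqrt(N)    # again a standard Gaussian variable
approx = tetrahedral_h2(gs)  # tetrahedral polynomial in the g_{j,N}
exact = g**2 - 1             # h_2(g)
```

The difference `approx - exact` equals \(1 - N^{-1}\sum _j g_{j,N}^2\), so it is of order \(N^{-1/2}\).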

Now, consider \(n\) independent copies \((W_t^{(i)})_{t \in [0,1]}\) of the Brownian motion (\(i=1, \ldots , n\)) together with the corresponding Gaussian random variables: \(g^{(i)} = W_1^{(i)}\) and, for \(N \ge 1\),

In the lemma below we state the representation of a multivariate Hermite polynomial in the variables \(g^{(1)}, \ldots , g^{(n)}\) as a limit of tetrahedral polynomials in the variables \(g_{j, N}^{(i)}\). To this end let us introduce some more notation. Let

be a Gaussian vector with \(n \times N\) coordinates. We identify here the set \([nN]\) with \([n]\times [N]\) via the bijection \((i,j) \leftrightarrow (i-1)N+j\). We will also identify the sets \(([n]\times [N])^d\) and \([n]^d\times [N]^d\) in a natural way. For \(d \ge 0\) and \(\mathbf{d}\in \Delta _d^n\), let

and define a \(d\)-indexed matrix \(B_{\mathbf{d}}^{(N)}\) of \(n^d\) blocks each of size \(N^d\) as follows: for \(\mathbf{i}\in [n]^d\) and \(\mathbf{j}\in [N]^d\),

Lemma 4.3

With the above notation, for any \(p > 0\),

Proof

Using (39) for each \(h_{d_i}(g^{(i)})\),

For each \(N\), the right-hand side equals

since \(\# I_{\mathbf{d}} = \frac{d!}{d_1! \cdots d_n!}\).\(\square \)

Note that \(B_\mathbf{d}^{(N)}\) is symmetric, i.e., for any \(\mathbf{i}\in [n]^d, \mathbf{j}\in [N]^d\) if \(\pi :[d] \rightarrow [d]\) is a permutation and \(\mathbf{i}' \in [n]^d, \mathbf{j}' \in [N]^d\) are such that \(\forall _{k \in [d]} \; i'_k = i_{\pi (k)}\) and \(j'_k = j_{\pi (k)}\), then

Moreover, \(B_\mathbf{d}^{(N)}\) has zeros on “generalized diagonals”, i.e., \(\big ( B_\mathbf{d}^{(N)} \big )_{(\mathbf{i},\mathbf{j})} = 0\) if \((i_k, j_k) = (i_l, j_l)\) for some \(k \ne l\).

Proof of Theorem 1.3

Let us first note that it is enough to prove the moment estimates; the tail bound follows from them by the Paley-Zygmund inequality (see e.g. the proof of Corollary 1 in [44]). Moreover, the upper bound on moments follows directly from Theorem 1.2. For the lower bound we use Lemma 4.3 to approximate the \(L^p\) norm of \(f(G)-\mathbb Ef(G)\) with that of a tetrahedral polynomial, for which we can use the lower bound from Corollary 4.2.

Assuming \(f\) is of the form (38), Lemma 4.3 together with the triangle inequality implies

for any \(p > 0\), where \(G = (g^{(1)}, \ldots , g^{(n)} )\). It therefore remains to relate \(\big \Vert \sum _{\mathbf{d}\in \Delta _d^n} a_\mathbf{d}B_\mathbf{d}^{(N)}\big \Vert _{\mathcal {J}}\) to \(\left\| \mathbb E\mathbf {D}^d f(G) \right\| _{\mathcal {J}}\) for any \(d \ge 1\) and \(\mathcal {J} \in P_d\). In fact we shall prove that

which will end the proof.

Fix \(d \ge 1\) and \(\mathcal {J} \in P_d\). For any \(\mathbf{d}\in \Delta _d^n\) define a symmetric \(d\)-indexed matrix \((b_\mathbf{d})_{\mathbf{i}\in [n]^d}\) as

and a symmetric \(d\)-indexed matrix \((\tilde{B}_\mathbf{d}^{(N)})_{(\mathbf{i}, \mathbf{j}) \in ([n] \times [N])^d}\) as

It is a simple observation that

On the other hand, for any \(\mathbf{d}\in \Delta _d^n\), the matrices \(\tilde{B}_\mathbf{d}^{(N)}\) and \(B_\mathbf{d}^{(N)}\) differ at no more than \(\# I_\mathbf{d}\cdot \#([N]^d{\setminus }[N]^{\underline{d}})\) entries. More precisely, if \(\mathcal {J}_0 = \{ [d] \}\) (the trivial partition of \([d]\) into one set), then

Thus the triangle inequality for the \(\Vert \cdot \Vert _\mathcal {J}\) norm together with (41) yields

Finally, note that

Indeed, using the identity on Hermite polynomials, \(\frac{d}{dx} h_k(x) = k h_{k-1}(x)\) (\(k \ge 1\)), we obtain \(\mathbb E\frac{d^l}{dx^l} h_k(g) = k! \mathbf {1}_{\{k=l\}}\) for \(k,l\ge 0\), and thus, for any \(d, l \le D\) and \(\mathbf{d}\in \Delta _l^n\),

Now, (43) follows by linearity. Combining it with (42) proves (40).\(\square \)
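The identity \(\mathbb E\frac{d^l}{dx^l} h_k(g) = k! \mathbf {1}_{\{k=l\}}\) used in the last step can be verified numerically. The sketch below relies on NumPy's `numpy.polynomial.hermite_e` module, whose basis polynomials are the probabilists' Hermite polynomials and thus agree with \(h_m\) as defined above; the function name and the quadrature degree are our choices.

```python
import math
import numpy as np
from numpy.polynomial import hermite_e as He

def expected_derivative(k, l, quad_deg=40):
    """E[(d^l/dx^l) h_k(g)] for g ~ N(0,1), computed by Gauss-Hermite quadrature."""
    coef = np.zeros(k + 1)
    coef[k] = 1.0                    # h_k in the Hermite basis
    dcoef = He.hermeder(coef, l)     # coefficients of the l-th derivative
    x, w = He.hermegauss(quad_deg)   # nodes and weights for the weight exp(-x^2 / 2)
    return np.sum(w * He.hermeval(x, dcoef)) / math.sqrt(2 * math.pi)

# Table of E h_k^{(l)}(g) for small k and l.
table = np.array([[expected_derivative(k, l) for l in range(5)] for k in range(5)])
```

Up to rounding, `table` is the diagonal matrix with entries \(0!, 1!, \ldots , 4!\), reflecting \(\frac{d}{dx} h_k = k h_{k-1}\) and \(\mathbb Eh_j(g) = 0\) for \(j \ge 1\).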

Remark

Note that the above infinite-divisibility argument can be also used to prove the upper bound on moments in Theorem 1.3 (giving a proof independent of the one relying on Theorem 1.2).

5 Polynomials in independent sub-Gaussian random variables

In this section we prove Theorem 1.4. Before we proceed with the core of the proof we will need to introduce some auxiliary inequalities for the norms \(\Vert \cdot \Vert _\mathcal {J}\) as well as some additional notation.

5.1 Properties of \(\Vert \cdot \Vert _\mathcal {J}\) norms

The first inequality we will need is pretty standard and given in the following lemma (it is a direct consequence of the definition of the norms \(\Vert \cdot \Vert _{\mathcal {J}}\)). Below \(\circ \) denotes the Hadamard product of \(d\)-indexed matrices, as defined in Sect. 2.

Lemma 5.1

For any \(d\)-indexed matrix \(A = (a_\mathbf{i})_{\mathbf{i}\in [n]^d}\) and any vectors \(v_1,\ldots ,v_d \in \mathbb {R}^n\) we have for all \(\mathcal {J} \in P_d\),

To formulate subsequent inequalities we need some auxiliary notation concerning \(d\)-indexed matrices. We will treat matrices as functions from \([n]^d\) into the real line, which in particular allows us to use the notation of indicator functions and for a set \(C \subseteq [n]^d\) write \(\mathbf {1}_{C}\) for the matrix \((a_\mathbf{i})\) such that \(a_\mathbf{i}= 1\) if \(\mathbf{i}\in C\) and \(a_\mathbf{i}= 0\) otherwise.

Note that for \(\#\mathcal {J} > 1\), the norm \(\Vert \cdot \Vert _\mathcal {J}\) is not unconditional in the standard basis, i.e., in general it is not true that \(\Vert A\circ \mathbf {1}_{C}\Vert _\mathcal {J} \le \Vert A\Vert _\mathcal {J}\). One situation in which this inequality holds is when \(C\) is of the form \(C = \{\mathbf{i}:i_{k_1} = j_1,\ldots ,i_{k_l} = j_l\}\) for some \(1 \le k_1<\ldots <k_l \le d\) and \(j_1,\ldots ,j_l \in [n]\) (which follows from Lemma 5.1). This corresponds to setting to zero all coefficients which are outside a “generalized row” of a matrix and leaving the coefficients in this row intact.

Later we will need another inequality of this type, which will allow us to select a “generalized diagonal” of a matrix. The corresponding estimate is given in the following

Lemma 5.2

Let \(A = (a_\mathbf{i})_{\mathbf{i}\in [n]^d}\) be a \(d\)-indexed matrix, \(K \subseteq [d]\) and let \(C \subseteq [n]^d\) be of the form \(C = \{\mathbf{i}:i_k = i_l \;\text {for all} \; k,l \in K\}\). Then for every \(\mathcal {J} \in P_d\), \(\Vert A\circ \mathbf {1}_{C}\Vert _\mathcal {J} \le \Vert A\Vert _\mathcal {J}\).

Proof

Since \(\mathbf {1}_{C_1\cap C_2} = \mathbf {1}_{C_1} \circ \mathbf {1}_{C_2}\), it is enough to consider the case \(\#K = 2\), i.e. \(C = \{\mathbf{i}:i_k = i_l\}\) for some \(1\le k<l\le d\). Let \(\mathcal {J} = \{J_1,\ldots ,J_m\}\). We will consider two cases.

1. The numbers \(k\) and \(l\) are separated by the partition \(\mathcal {J}\). Without loss of generality we can assume that \(k\in J_1, l\in J_2\). Then

For any \(x^{(3)}_{\mathbf{i}_{J_3}},\ldots ,x^{(m)}_{\mathbf{i}_{J_m}}\), consider the matrix

acting from \(\ell _2([n]^{J_1})\) to \(\ell _2([n]^{J_2})\).

For fixed \(x^{(3)}_{\mathbf{i}_{J_3}},\ldots ,x^{(m)}_{\mathbf{i}_{J_m}}\) the inner supremum on the right hand side of (44) is the operator norm of the block-diagonal matrix obtained from \(B\) by setting to zero entries in off-diagonal blocks. Therefore it is not greater than the operator norm of \(B\), which allows us to write

2. There exists \(j\) such that \(k,l \in J_j\). Without loss of generality we can assume that \(j = 1\). We have

\(\square \)
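In the simplest instance \(d = 2\), \(C = \{\mathbf{i}:i_1 = i_2\}\), the matrix \(A\circ \mathbf {1}_{C}\) is the diagonal part of \(A\), and the two cases of the proof reduce to familiar facts about the operator norm (\(\mathcal {J} = \{\{1\},\{2\}\}\)) and the Hilbert-Schmidt norm (\(\mathcal {J} = \{\{1,2\}\}\)). A numerical illustration, with an arbitrary random matrix and seed of our choosing:

```python
import numpy as np

rng = np.random.default_rng(4)
n = 7
a = rng.standard_normal((n, n))
diag_part = a * np.eye(n)  # A o 1_C for C = {(i_1, i_2) : i_1 = i_2}

# Case 1: indices separated by the partition, operator norm.
op_full = np.linalg.norm(a, 2)
op_diag = np.linalg.norm(diag_part, 2)

# Case 2: both indices in the same block, Hilbert-Schmidt norm.
hs_full = np.linalg.norm(a, "fro")
hs_diag = np.linalg.norm(diag_part, "fro")
```

In both cases the norm of the diagonal part does not exceed the norm of the full matrix, in agreement with Lemma 5.2.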

For a partition \(\mathcal {K} = \{K_1,\ldots ,K_m\} \in P_d\) define

Thus \(L(\mathcal {K})\) is the set of all indices for which the partition into level sets is equal to \(\mathcal {K}\).
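To make the description of \(L(\mathcal {K})\) concrete, the following sketch computes the partition of \(\{1,\ldots ,d\}\) into level sets of a multi-index and tests membership in \(L(\mathcal {K})\). The function names are ours and serve only as an illustration of the verbal description above.

```python
def level_set_partition(r):
    """Partition of {1, ..., d} into the level sets of the multi-index r."""
    blocks = {}
    for position, value in enumerate(r, start=1):
        blocks.setdefault(value, set()).add(position)
    return {frozenset(block) for block in blocks.values()}

def in_L(r, partition):
    """True if the level-set partition of r equals the given partition."""
    return level_set_partition(r) == {frozenset(block) for block in partition}

K = [{1, 3}, {2}, {4}]
inside = in_L((5, 2, 5, 7), K)   # positions 1 and 3 share a value; 2 and 4 are distinct
outside = in_L((5, 5, 5, 7), K)  # level sets are {1, 2, 3} and {4}, a different partition
```

Note that membership requires both the equalities within the blocks of \(\mathcal {K}\) and the inequalities between different blocks.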

Corollary 5.3

For any \(\mathcal {J,K} \in P_d\) and any \(d\)-indexed matrix \(A\),

Proof

By Lemma 5.2 and the triangle inequality for any \(k< l\),

Now it is enough to note that \(L(\mathcal {K})\) can be expressed as an intersection of \(\#\mathcal {K}\) “generalized diagonals” and \(\#\mathcal {K}(\#\mathcal {K}-1)/2\) sets of the form \(\{\mathbf{i}:i_k\ne i_l\}\) where \(k < l\) and use again Lemma 5.2 together with (46).\(\square \)

5.2 Proof of Theorem 1.4

Let us first note that the tail bound of Theorem 1.4 follows from the moment estimate and Chebyshev’s inequality in the same way as in Theorems 1.2 or 3.3. We will therefore focus on the moment bound.

The method of proof will rely on the reduction to the Gaussian case via decoupling inequalities, symmetrization and the contraction principle. To carry out this strategy we will need the following representation of \(f\):

where the coefficients \(c_{(i_1,k_1),\ldots ,(i_m,k_m)}^{(d)}\) satisfy

for all permutations \(\pi :[m] \rightarrow [m]\). At this point we would like to explain the convention regarding indices which we will use throughout this section. It is rather standard, but we prefer to draw the Reader’s attention to it, as we will use it extensively in what follows. Namely, we will treat the sequence \(\mathbf{k}= (k_1,\ldots ,k_m)\) as a function acting on \([m]\) and taking values in positive integers. In particular if \(m=0\), then \([m] = \emptyset \) and there exists exactly one function \(\mathbf{k}:[m]\rightarrow \mathbb {N}{\setminus }\{0\}\) (the empty function). Moreover by convention this function satisfies \(\sum _{i=1}^m k_i = 0\) (as the summation runs over an empty set). Therefore, for \(d=0\) and \(m=0\) the subsum over \(k_1,\ldots ,k_m\) and \(\mathbf{i}\) above is equal to the free coefficient of the polynomial (which can be denoted by \(c_\emptyset ^{(0)}\)), since the summation over \(k_1,\ldots ,k_m\) runs over a one-element set containing the empty index/function and for this index there is exactly one index \(\mathbf{i}:[m]\rightarrow \{1,\ldots ,n\}\), which belongs to \([n]^{\underline{m}}\) (again the empty-index). Here we also use the convention that a product over an empty set is equal to one. On the other hand, for \(d>0\), the contribution from \(m=0\) is equal to zero (as the empty index \(\mathbf{k}\) does not satisfy the constraint \(k_1+\cdots +k_m = d\) and so the summation over \(k_1,\ldots ,k_m\) runs over the empty set).

Using (47) together with independence of \(X_1,\ldots ,X_n\), one may write

Rearranging the terms and using (48) together with the triangle inequality, we obtain

where

Note that (48) implies that for every permutation \(\pi :[a]\rightarrow [a]\),

Let now \(X^{(1)},\ldots ,X^{(D)}\) be independent copies of the random vector \(X\) and \((\varepsilon _i^{(j)})_{i\le n,j\le D}\) an array of i.i.d. Rademacher variables independent of \((X^{(j)})_j\). For each \(k_1,\ldots ,k_a\), by decoupling inequalities (Theorem 7.1 in the “Appendix”) applied to the functions

and standard symmetrization inequalities (applied conditionally \(a\) times) we obtain,

(note that in the first part of Theorem 7.1 one does not impose any symmetry assumptions on the functions \(h_\mathbf{i}\)).

We will now use the following standard comparison lemma (for Reader’s convenience its proof is presented in the “Appendix”).

Lemma 5.4

For any positive integer \(k\), if \(Y_1,\ldots ,Y_n\) are independent symmetric variables with \(\Vert Y_i\Vert _{\psi _{2/k}} \le M\), then

where \(g_{ij}\) are i.i.d. \(\mathcal {N}(0,1)\) variables.

Note that for any positive integer \(k\) we have \(\Vert X_i^k\Vert _{\psi _{2/k}} = \Vert X_i\Vert _{\psi _2}^k \le L^k\), so (50) together with the above lemma (used repeatedly and conditionally) yield

where \((g_{i,k}^{(j)})\) is an array of i.i.d. standard Gaussian variables. Consider now multi-indexed matrices \(B_1,\ldots ,B_D\) defined as follows. For \(1\le d\le D\), and a multi-index \(\mathbf{r}= (r_1,\ldots ,r_d)\in [n]^d\), let \(\mathcal {I} = \{I_1,\ldots ,I_a\}\) be the partition of \(\{1,\ldots ,d\}\) into the level sets of \(\mathbf{r}\) and \(i_1,\ldots ,i_a\) be the values corresponding to the level sets \(I_1,\ldots ,I_a\). Define moreover

(note that thanks to (49) this definition does not depend on the order of \(I_1,\ldots ,I_a\)). Finally, define the \(d\)-indexed matrix \(B_d = (b^{(d)}_{\mathbf{r}})_{\mathbf{r}\in [n]^d}\).

Let us also define, for \(k_1,\ldots ,k_a > 0\) with \(\sum _{i=1}^a k_i = d\), the partition \(\mathcal {K}(k_1,\ldots ,k_a) \in P_d\) by splitting the set \(\{1,\ldots ,d\}\) into consecutive intervals of length \(k_1,\ldots ,k_a\), i.e., \(\mathcal {K} = \{K_1,\ldots ,K_a\}\), where for \(l = 1,\ldots ,a\), \(K_l = \{1+\sum _{i=1}^{l-1} k_i,2+\sum _{i=1}^{l-1} k_i, \ldots ,\sum _{i=1}^{l} k_i\}\).
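The partition \(\mathcal {K}(k_1,\ldots ,k_a)\) is easy to generate mechanically. A minimal sketch (the function name is ours):

```python
def consecutive_partition(ks):
    """Split {1, ..., k_1 + ... + k_a} into consecutive intervals of lengths k_1, ..., k_a."""
    blocks = []
    start = 1
    for k in ks:
        if k <= 0:
            raise ValueError("all lengths must be positive")
        blocks.append(list(range(start, start + k)))
        start += k
    return blocks

blocks = consecutive_partition([2, 1, 3])
```

For \((k_1,k_2,k_3) = (2,1,3)\) this yields \(K_1 = \{1,2\}\), \(K_2 = \{3\}\), \(K_3 = \{4,5,6\}\), a partition of \(\{1,\ldots ,6\}\).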

Applying Theorem 3.1 to the right hand side of (51), we obtain

Note that for all \(k_1,\ldots ,k_a\) by Corollary 5.3 we have \(\Vert B_d\circ \mathbf {1}_{L(\mathcal {K}(k_1,\cdots ,k_a))}\Vert _\mathcal {J} \le C_d \Vert B_d\Vert _\mathcal {J}\). Thus we obtain

Our next goal is to replace \(B_d\) in the above inequality by \(\mathbb E\mathbf {D}^d f(X)\). To this end we will analyse the structure of the coefficients of \(B_d\) and compare them with the integrated partial derivatives of \(f\).

Let us first calculate \(\mathbb E\mathbf {D}^d f(X)\). Consider \(\mathbf{r}\in [n]^d\), such that \(i_1,\ldots ,i_a\) are its distinct values, taken \(l_1,\ldots ,l_a\) times respectively. We have

where we have used (48).

By comparing this with the definition of \(b^{(d)}_{r_1,\cdots ,r_d}\) and \(d^{(k_1,\ldots ,k_a)}_{i_1,\ldots ,i_a}\) one can see that the sub-sum of the right hand side above corresponding to the choice \(k_1 = l_1,\ldots ,k_a = l_a\) is equal to \(a!l_1!\cdots l_a! b^{(d)}_{r_1,\ldots ,r_d}\).

In particular for \(d=D\), since \(l_1+\cdots +l_a = D\), we have

and so

where in the last inequality we used Corollary 5.3. Therefore if we prove that for all \(d < D\) and all partitions \(\mathcal {I} = \{I_1,\ldots ,I_a\},\mathcal {J} = \{J_1,\ldots ,J_b\} \in P_d\),

then by simple reverse induction (using again Corollary 5.3) we will obtain

which will end the proof of the theorem.

Fix any \(d < D\) and partitions \(\mathcal {I} = \{I_1,\ldots ,I_a\}, \mathcal {J} = \{J_1,\ldots ,J_b\} \in P_d\). Denote \(l_i = \# I_i\). For every sequence \(k_1,\ldots ,k_a\) such that \(k_i \ge l_i\) for \(i\le a\) and there exists \(i \le a\) such that \(k_i > l_i\), let us define a \(d\)-indexed matrix \(E^{(d,k_1,\ldots ,k_a)}_\mathcal {I} = (e^{(d,k_1,\ldots ,k_a)}_\mathbf{r})_{\mathbf{r}\in [n]^d}\), such that \(e^{(d,k_1,\ldots ,k_a)}_\mathbf{r}= 0\) if \(\mathbf{r}\notin L(\mathcal {I})\) and for \(\mathbf{r}\in L(\mathcal {I})\),

where \(i_1,\ldots ,i_a\) are the values of \(\mathbf{r}\) corresponding to the level sets \(I_1,\ldots ,I_a\). We then have

Since we do not pay attention to constants depending on \(D\) only, by the above formula and the triangle inequality, to prove (52) it is enough to show that for all sequences \(k_1,\ldots ,k_a\) such that \(k_1+\cdots +k_a \le D, k_i \ge l_i\) for \(i\le a\) and there exists \(i \le a\) such that \(k_i > l_i\), one has

for some partition \(\mathcal {K}\in P_{k_1+\cdots +k_a}\) with \(\# \mathcal {K} = \#\mathcal {J}\) (note that \(\sum _{j\le a} l_j = d\)). Therefore in what follows we will fix \(k_1,\ldots ,k_a\) as above and to simplify the notation we will write \(E^{(d)}\) instead of \(E^{(d,k_1,\ldots ,k_a)}_\mathcal {I}\) and \(e^{(d)}_\mathbf{r}\) instead of \(e^{(d,k_1,\ldots ,k_a)}_\mathbf{r}\).

Fix therefore any partition \(\tilde{\mathcal {I}} = \{\tilde{I}_1,\ldots ,\tilde{I}_a\} \in P_{k_1+\cdots +k_a}\) such that \(\#\tilde{I}_i = k_i\) and \(I_i \subseteq \tilde{I}_i\) for all \(i \le a\) (the specific choice of \(\tilde{\mathcal {I}}\) is irrelevant). Finally define a \((k_1+\cdots +k_a)\)-indexed matrix \(\tilde{E}^{(k_1+\cdots +k_a)} = (\tilde{e}^{(k_1+\cdots +k_a)}_\mathbf{r})_{\mathbf{r}\in [n]^{k_1+\cdots +k_a}}\) by setting

In other words, the new matrix is created by embedding the \(d\)-indexed matrix into a “generalized diagonal” of a \((k_1+\cdots +k_a)\)-indexed matrix by adding \(\sum _{j\le a} (k_j-l_j)\) new indices and assigning to them the values of old indices (for each \(j \le a\) we add \(k_j-l_j\) times the common value attained by \(\mathbf{r}_{[d]}\) on \(I_j\)).

Recall now the definition of the coefficients \(b^{(d)}_\mathbf{r}\) and note that for any \(\mathbf{r}\in L(\mathcal {\tilde{I}})\subseteq [n]^{k_1+\cdots +k_a}\) we have \(\tilde{e}^{(k_1+\cdots +k_a)}_\mathbf{r}= b^{(k_1+\cdots +k_a)}_\mathbf{r}\prod _{j=1}^a\mathbb EX_{i_j}^{k_j-l_j}\), where for \(j\le a, i_j\) is the value of \(\mathbf{r}\) on its level set \(\tilde{I}_j\). This means that \(\tilde{E}^{(k_1+\cdots +k_a)} = (B_{k_1+\cdots +k_a}\circ \mathbf {1}_{L(\mathcal {\tilde{I}})})\circ (\otimes _{s=1}^{k_1+\cdots +k_a} v_s)\), where \(v_s = (\mathbb EX_i^{k_j-l_j})_{i\le n}\) if \(s = \min I_j\) for some \(j \le a\) and \(v_s = (1,\ldots ,1)\) otherwise. Since \(\Vert v_s\Vert _\infty \le (C_DL)^{k_j-l_j}\) if \(s = \min I_j\) for some \(j\le a\) and \(\Vert v_s\Vert _\infty = 1\) otherwise, by Lemma 5.1 this implies that for any \(\mathcal {K} \in P_{k_1+\cdots +k_a}\),

where in the last inequality we used Corollary 5.3.

We will now use the above inequality to prove (53). Consider the unique partition \(\mathcal {K} = \{K_1,\ldots ,K_b\}\) satisfying the following two conditions:

- for each \(j\le b, J_j \subseteq K_j\),

- for each \(s \in \{d+1,\ldots ,k_1+\cdots +k_a\}\) if \(s \in \tilde{I}_j\) and \(\pi (s) := \min \tilde{I}_j\in J_k\), then \(s \in K_k\).

In other words, all indices \(s\), which in the construction of \(\tilde{\mathcal {I}}\) were added to \(I_j\) (i.e., elements of \(\tilde{I}_j{\setminus } I_j\)) are now added to the unique element of \(\mathcal {J}\) containing \(\pi (s) = \min \tilde{I}_j = \min I_j\).

Now, it is easy to see that \(\Vert E^{(d)}\Vert _\mathcal {J}\le \Vert \tilde{E}^{(k_1+\cdots +k_a)}\Vert _\mathcal {K}\). Indeed, consider an arbitrary \(x^{(j)} = (x_{\mathbf{r}_{J_j}}^{(j)})_{|\mathbf{r}_{J_j}|\le n}, j=1,\ldots ,b\), satisfying \(\Vert x^{(j)}\Vert _2\le 1\). Define \(y^{(j)} = (y_{\mathbf{r}_{K_j}}^{(j)})_{|\mathbf{r}_{K_j}|\le n}, j =1,\ldots ,b\) with the formula

We have \(\Vert y^{(j)}\Vert _2 = \Vert x^{(j)}\Vert _2\le 1\). Moreover, by the construction of the matrix \(\tilde{E}^{(k_1+\cdots +k_a)}\) (recall (54)), we have

(in the last equality we used the fact that if \(\mathbf{r}\in L(\tilde{\mathcal {I}})\), then for \(s > d, r_{\pi (s)} = r_s\) and so \(y^{(j)}_{\mathbf{r}_{K_j}} = x^{(j)}_{\mathbf{r}_{K_j\cap [d]}} = x^{(j)}_{\mathbf{r}_{J_j}}\)). By taking the supremum over \(x^{(j)}\) one thus obtains \(\Vert E^{(d)}\Vert _\mathcal {J}\le \Vert \tilde{E}^{(k_1+\cdots +k_a)}\Vert _\mathcal {K}\). Combining this inequality with (55) proves (53) and thus (52). This ends the proof of Theorem 1.4.

5.3 Application: subgraph counting in random graphs

We will now apply results from Sect. 5 to some special cases of the problem of subgraph counting in Erdős-Rényi random graphs \(G(n,p)\), which is often used as a test model for deviation inequalities for polynomials in independent random variables. More specifically we will investigate the problem of counting cycles of a fixed length.

It turns out that Theorem 1.4 gives optimal inequalities in some range of parameters (leading to improvements of known results), whereas in some other regimes the estimates it gives are suboptimal.

Let us first describe the setting (we will do it in a slightly more general form than needed for our example). We will consider undirected graphs \(G = (V,E)\), where \(V\) is a finite set of vertices and \(E\) is the set of edges (i.e., two-element subsets of \(V\)). By \(V_G = V(G)\) and \(E_G = E(G)\) we mean the set of vertices and edges (respectively) of a graph \(G\). Also, \(v_G = v(G)\) and \(e_G = e(G)\) denote the number of vertices and edges in \(G\). We say that a graph \(H\) is a subgraph of a graph \(G\) (which we denote by \(H \subseteq G\)) if \(V_H \subseteq V_G\) and \(E_H \subseteq E_G\) (thus a subgraph is not necessarily induced). Graphs \(H\) and \(G\) are isomorphic if there is a bijection \(\pi :V_H \rightarrow V_G\) such that for all distinct \(v,w \in V_H, \{\pi (v),\pi (w)\} \in E_G\) iff \(\{v,w\} \in E_H\).

For \(p \in [0,1]\) consider now the Erdős-Rényi random graph \(G = G(n,p)\), i.e., a graph with \(n\) vertices (we will assume that \(V_G = [n]\)) whose edges are selected independently at random with probability \(p\). In what follows we will be concerned with the number of copies of a given graph \(H = ([k],E_H)\) in the graph \(G\), i.e., the number of subgraphs of \(G\) which are isomorphic to \(H\). We will denote this random variable by \(Y_H(n,p)\). To relate \(Y_H(n,p)\) to polynomials, let us consider the family \(C(n,2)\) of two-element subsets of \([n]\) and the family of independent random variables \(X = (X_{e})_{e \in C(n,2)}\), such that \(\mathbb P(X_{e} = 1) = 1 - \mathbb P(X_{e} = 0) = p\) (i.e., \(X_{e}\) indicates whether the edge \(e\) has been selected or not). Denote moreover by \(\mathrm{Aut}(H)\) the group of isomorphisms of \(H\) into itself and note that

The right-hand side above is a homogeneous tetrahedral polynomial of degree \(e_H\). Moreover the variables \(X_{\{v,w\}}\) satisfy
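To make the polynomial representation concrete, here is a brute-force sketch that counts copies of \(H\) by summing products of edge indicators over injective maps \([k] \rightarrow [n]\) and dividing by \(\#\mathrm{Aut}(H)\). We assume this standard form of the formula; the function names and the 0-based vertex labels are ours, and the code is meant only for very small \(n\).

```python
from itertools import combinations, permutations

def automorphism_count(k, edges_h):
    """Number of permutations of {0,...,k-1} mapping every edge of H to an edge of H."""
    eh = {frozenset(e) for e in edges_h}
    return sum(
        all(frozenset((s[v], s[w])) in eh for v, w in edges_h)
        for s in permutations(range(k))
    )

def count_copies(n, x, k, edges_h):
    """Evaluate (1/#Aut(H)) * sum over injective i:[k]->[n] of prod_{{v,w} in E_H} x[{i_v,i_w}]."""
    total = 0
    for i in permutations(range(n), k):          # all injective maps [k] -> [n]
        term = 1
        for v, w in edges_h:
            term *= x[frozenset((i[v], i[w]))]   # tetrahedral: each variable appears at most once
        total += term
    return total / automorphism_count(k, edges_h)

# Example: triangles in the graph on 4 vertices with edges 01, 12, 02, 23.
triangle = [(0, 1), (1, 2), (0, 2)]
present = {frozenset(e) for e in [(0, 1), (1, 2), (0, 2), (2, 3)]}
x = {frozenset(e): int(frozenset(e) in present) for e in combinations(range(4), 2)}
print(count_copies(4, x, 3, triangle))  # 1.0
```

Each monomial has degree \(e_H\) and contains every variable at most once, which is the homogeneous tetrahedral structure referred to above.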

and

which implies that \(\Vert X_{\{v,w\}}\Vert _{\psi _2} \le (\log (1/p))^{-1/2}\wedge (\log (2))^{-1/2} \!\le \! \sqrt{2} (\log (2/p))^{-1/2}\).
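The chain of inequalities above can be checked numerically. The sketch below assumes the usual normalization \(\Vert X\Vert _{\psi _2} = \inf \{t > 0 :\mathbb E\exp (X^2/t^2) \le 2\}\), for which the norm of a Bernoulli variable has a closed form. This normalization and the function names are our assumptions.

```python
import math

def bernoulli_psi2(p):
    """Smallest t with E exp(X^2/t^2) <= 2 for X ~ Bernoulli(p), 0 < p <= 1."""
    # E exp(X^2/t^2) = 1 - p + p*exp(1/t^2) <= 2  iff  exp(1/t^2) <= 1 + 1/p
    return math.log(1.0 + 1.0 / p) ** -0.5

def bound_min(p):
    """(log(1/p))^{-1/2} wedge (log 2)^{-1/2}; the first term is +infinity at p = 1."""
    second = math.log(2.0) ** -0.5
    return second if p == 1.0 else min(math.log(1.0 / p) ** -0.5, second)

def bound_final(p):
    """sqrt(2) * (log(2/p))^{-1/2}, i.e. the quantity L_p used in the sequel."""
    return math.sqrt(2.0) * math.log(2.0 / p) ** -0.5

for p in (1e-6, 0.01, 0.5, 0.99):
    print(p, bernoulli_psi2(p), bound_min(p), bound_final(p))
```

Under this normalization the three quantities are nondecreasing from left to right for every \(p \in (0,1]\), with equality in the last step at \(p = 1/2\).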

We can thus apply Theorem 1.4 to \(Y_H(n,p)\) and obtain

where \(L_p = \sqrt{2} \big (\log (2/p)\big )^{-1/2}\) and \(f :\mathbb {R}^{C(n,2)} \rightarrow \mathbb {R}\) is given by

Deviation inequalities for subgraph counts have been studied by many authors; see, e.g., [22, 26, 27, 35–38, 65]. As it turns out, the lower tail \(\mathbb P(Y_H(n,p) \le \mathbb EY_H(n,p) - t)\) is easier to analyse than the upper tail \(\mathbb P(Y_H(n,p) \ge \mathbb EY_H(n,p) +t)\), and it is also lighter. Since our inequalities concern \(|Y_H(n,p) - \mathbb EY_H(n,p)|\), we cannot hope to recover optimal lower tail estimates; however, we can still hope to get bounds which in some range of the parameters \(n,p\) agree with optimal upper tail estimates.

Of particular importance in the literature is the law of large numbers regime, i.e., the case when \(t = \varepsilon \mathbb EY_H(n,p)\). In [35] the authors prove that for every \(\varepsilon >0\) such that \(\mathbb P\big (Y_H(n,p) \ge (1+\varepsilon )\mathbb EY_H(n,p)\big ) > 0\),

for certain constants \(c(H,\varepsilon ), C(H,\varepsilon )\) and a certain function \(M_H^*(n,p)\). Since the general definition of \(M_H^*\) is rather involved we will skip the details (in the examples considered in the sequel we will provide specific formulas). Note that if one disregards the constants depending on \(H\) and \(\varepsilon \) only, the lower and upper estimates above differ by the factor \(\log (1/p)\) in the exponent. To the best of our knowledge, providing lower and upper bounds for general \(H\) which agree up to multiplicative constants in the exponent (depending on \(H\) and \(\varepsilon \) only) is an open problem (see the remark below).

We will now specialize to the case when \(H\) is a cycle. For simplicity we will first present the case of the triangle \(K_3\) (the clique with three vertices). For this graph the upper bound from [35] has been recently strengthened to match the lower one (up to a constant depending only on \(\varepsilon \)) by Chatterjee [22] and DeMarco and Kahn [27] (who also obtained a similar result for general cliques [26]). In the next section we show that if \(p\) is not too small, the inequality (56) also allows one to recover the optimal upper bound. In Section 5.3.2 we provide an upper bound for cycles of arbitrary (fixed) length \(k\), which is optimal for \(p \ge n^{-\frac{k-2}{2(k-1)}} \log ^{-\frac{1}{2}} n\).

Remark (Added in revision) Very recently, after the first version of this article was submitted, a major breakthrough was obtained by Chatterjee-Dembo and Lubetzky-Zhao [23, 49], who strengthened the upper bound to \(\exp (-C(H,\varepsilon )M_H^*(n,p)\log \frac{1}{p})\) for general graphs and \(p \ge n^{-c(H)}\). In the case of cycles, which we consider in the sequel, our bounds are valid in a larger range of \(p \rightarrow 0\) than those which can be obtained from the present versions of the aforementioned papers. We would also like to point out that the methods of [23, 49] rely on large deviation principles and not on inequalities for general polynomials in independent random variables. Obtaining general inequalities for polynomials which would yield optimal bounds for general graphs is an interesting and apparently still open research problem.

5.3.1 Counting triangles

Assume that \(H = K_3\) and let us analyse the behaviour of \(\Vert \mathbb E\mathbf {D}^d f(X)\Vert _\mathcal {J}\) for \(d = 1,2,3\). Of course in this case \(\#\mathrm{Aut}(H) = 6\).

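We have for any \(e = \{v,w\}, v,w \in [n]\),

\[ \frac{\partial }{\partial x_e} f(x) = \sum _{i \in [n]{\setminus } \{v,w\}} x_{\{i,v\}}x_{\{i,w\}}, \quad \text {so that}\quad \mathbb E\frac{\partial }{\partial x_e} f(X) = (n-2)p^2, \]

and so \(\Vert \mathbb E\mathbf {D}f(X)\Vert _{\{1\}}= (n-2)p^2 \sqrt{n(n-1)/2} \le n^2p^2\).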

For \(e_1 = e_2\) or when \(e_1\) and \(e_2\) do not have a common vertex, we have \(\frac{\partial ^2}{\partial x_{e_1}\partial x_{e_2}} f = 0\), whereas for \(e_1,e_2\) sharing exactly one vertex, we have

\[ \frac{\partial ^2}{\partial x_{e_1}\partial x_{e_2}} f(x) = x_{\{v,w\}}, \]

where \(v,w\) are the vertices of \(e_1,e_2\) distinct from the common one. Therefore

Using the fact that \(\mathbb E\mathbf {D}^2 f(X)\) is symmetric and for each \(e_1\) the sum of entries of \(\mathbb E\mathbf {D}^2 f(X)\) in the row corresponding to \(e_1\) equals \(2p(n-2)\), we obtain \(\Vert \mathbb E\mathbf {D}^2 f(X)\Vert _{\{1\}\{2\}} = 2p(n-2) \le 2pn\). One can also easily see that \(\Vert \mathbb E\mathbf {D}^2 f(X)\Vert _{\{1,2\}} = p\sqrt{n(n-1)(n-2)} \le pn^{3/2}\).
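The three norms computed so far are easy to confirm numerically for a small \(n\). The sketch below builds \(\mathbb E\mathbf {D}f(X)\) and \(\mathbb E\mathbf {D}^2 f(X)\) entrywise from the description above and compares the Euclidean, operator and Hilbert-Schmidt norms with the stated values. The helper names, the choice \(n = 7, p = 0.3\), and the use of power iteration for the operator norm are ours.

```python
import math
from itertools import combinations

def expected_derivatives(n, p):
    """Entries of E Df(X) and E D^2 f(X) for the triangle-count polynomial on K_n."""
    edges = list(combinations(range(n), 2))
    d1 = [(n - 2) * p**2 for _ in edges]           # each edge lies in n-2 potential triangles
    d2 = [[p if len(set(e1) & set(e2)) == 1 else 0.0 for e2 in edges] for e1 in edges]
    return d1, d2

def euclidean_norm(entries):
    return math.sqrt(sum(v * v for v in entries))

def operator_norm_symmetric(mat, iters=200):
    """Power iteration; the ratio |Mv|/|v| tends to the operator norm of a symmetric matrix."""
    v = [1.0 + i for i in range(len(mat))]         # generic positive starting vector
    lam = 0.0
    for _ in range(iters):
        w = [sum(r * x for r, x in zip(row, v)) for row in mat]
        nw = euclidean_norm(w)
        if nw == 0.0:
            return 0.0
        lam = nw / euclidean_norm(v)
        v = [x / nw for x in w]
    return lam

n, p = 7, 0.3
d1, d2 = expected_derivatives(n, p)
norm_1 = euclidean_norm(d1)                                    # norm indexed by {1}
norm_op = operator_norm_symmetric(d2)                          # norm indexed by {1}{2}
norm_hs = euclidean_norm([v for row in d2 for v in row])       # norm indexed by {1,2}
print(norm_1, norm_op, norm_hs)
```

The computed values should agree with \((n-2)p^2 \sqrt{n(n-1)/2}\), \(2p(n-2)\) and \(p\sqrt{n(n-1)(n-2)}\) respectively, up to floating-point error.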

Finally

and thus \(\Vert \mathbb E\mathbf {D}^3f(X)\Vert _{\{1,2,3\}} = \sqrt{n(n-1)(n-2)} \le n^{3/2}\). Moreover, due to symmetry we have

Consider arbitrary \((x_{e_1})_{e_1 \in C(n,2)}\) and \((y_{e_2,e_3})_{e_2,e_3 \in C(n,2)}\) of norm one. We have

where the first two inequalities follow by the Cauchy-Schwarz inequality and the last one from the fact that for each \(e_2,e_3\) there is at most one \(e_1\) such that \(e_1,e_2,e_3\) form a triangle. We have thus obtained \(\Vert \mathbb E\mathbf {D}^3 f(X)\Vert _{\{1,2\}\{3\}} = \Vert \mathbb E\mathbf {D}^3 f(X)\Vert _{\{1,3\}\{2\}} = \Vert \mathbb E\mathbf {D}^3 f(X)\Vert _{\{2,3\}\{1\}} \le \sqrt{2n}\).
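The argument above can also be phrased in matrix terms: after flattening \(\mathbb E\mathbf {D}^3 f(X)\) into a matrix with rows indexed by \(e_1\) and columns indexed by pairs \((e_2,e_3)\), each column contains at most one nonzero entry, so the rows are orthogonal and the operator norm is the largest row norm. The following sketch checks this for small \(n\); the helper names are ours, and the exact value \(\sqrt{2(n-2)}\) is what this computation suggests rather than a statement taken from the text.

```python
import math
from itertools import combinations, permutations

def third_derivative_support(n):
    """Ordered triples (e1, e2, e3) of edges of K_n forming a triangle (entries equal to 1)."""
    triples = []
    for tri in combinations(range(n), 3):
        tri_edges = [frozenset(e) for e in combinations(tri, 2)]
        triples.extend(permutations(tri_edges))
    return triples

def norms_of_third_derivative(n):
    """Return (Hilbert-Schmidt norm, operator norm of the (e1) x (e2,e3) flattening)."""
    triples = third_derivative_support(n)
    hs = math.sqrt(len(triples))                   # all nonzero entries equal 1
    by_pair = {}
    for e1, e2, e3 in triples:
        by_pair.setdefault((e2, e3), []).append(e1)
    # Gram matrix of the rows: entry (a, b) counts pairs (e2, e3) shared by rows a and b.
    gram = {}
    for firsts in by_pair.values():
        for a in firsts:
            for b in firsts:
                gram[(a, b)] = gram.get((a, b), 0) + 1
    is_diagonal = all(a == b for a, b in gram)
    flat = math.sqrt(max(gram.values())) if is_diagonal else None
    return hs, flat

hs, flat = norms_of_third_derivative(6)
print(hs, flat)
```

For \(n = 6\) the Gram matrix is diagonal with entries \(2(n-2) = 8\), which is consistent with the bound \(\sqrt{2n}\) obtained above.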

It remains to estimate \(\Vert \mathbb E\mathbf {D}^3 f(X)\Vert _{\{1\}\{2\}\{3\}}\). For all \((x_e)_{e\in C(n,2)}, (y_e)_{e\in C(n,2)}, (z_e)_{e\in C(n,2)}\) of norm one we have by the Cauchy-Schwarz inequality

which gives \(\Vert \mathbb E\mathbf {D}^3 f(X)\Vert _{\{1\}\{2\}\{3\}} \le 2^{3/2}\).
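As a sanity check on the constant \(2^{3/2}\), one can evaluate the trilinear form at the normalized constant vectors \(x = y = z = (m^{-1/2},\ldots ,m^{-1/2})\), where \(m = n(n-1)/2\) is the number of edges. This choice of test vectors is ours and is not taken from the text. Since \(\mathbb E\mathbf {D}^3 f(X)\) has exactly \(n(n-1)(n-2)\) entries equal to one, the value of the form is \(n(n-1)(n-2)/m^{3/2}\).

```python
import math

def trilinear_form_at_uniform(n):
    """Value of sum_{e1,e2,e3} D^3f[e1,e2,e3] x_{e1} y_{e2} z_{e3} at x = y = z = m^{-1/2} (1,...,1)."""
    m = n * (n - 1) // 2                      # number of edges of K_n
    ordered_triangles = n * (n - 1) * (n - 2) # nonzero entries of the third derivative
    return ordered_triangles / m**1.5

for n in (3, 10, 100, 10**6):
    print(n, trilinear_form_at_uniform(n), 2**1.5)
```

The values stay below \(2^{3/2}\) and approach it as \(n \rightarrow \infty \), which suggests that the bound above cannot be improved by more than a factor tending to one.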

Using (56) together with the above estimates, we obtain

Proposition 5.5

For any \(t >0\),

where \(L_p = \big (\log (2/p)\big )^{-1/2}\).

In particular for \(t = \varepsilon \mathbb EY_{K_3}(n,p) = \varepsilon \left( {\begin{array}{c}n\\ 3\end{array}}\right) p^3\),

Thus for \(p \ge n^{-\frac{1}{4}}\log ^{-\frac{1}{2}} n\) we obtain