Abstract

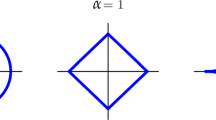

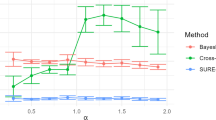

We consider bridge regression models, which can produce either a sparse or a non-sparse model depending on the value of a tuning parameter in the penalty term. A crucial part of the model-building strategy is selecting values for the adjusted parameters, such as the regularization and tuning parameters; this can be viewed as a problem of model selection and evaluation. We propose a Bayesian selection criterion for evaluating bridge regression models, which enables the values of the adjusted parameters to be selected objectively. We investigate the effectiveness of the proposed modeling strategy through numerical examples.
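The following Python sketch shows what "selecting the adjusted parameters" involves in practice. For each pair \((\lambda, q)\) on a grid it fits a bridge estimator, which minimises \(\frac{1}{2}\|y - X\beta\|^2 + \lambda \sum_j |\beta_j|^q\), and it keeps the pair with the smallest criterion value. This is an illustration under stated assumptions, not the method of this article. The estimator is computed by a local quadratic approximation (LQA) of the penalty. The criterion is a generic Schwarz-type BIC, \(n \log(\mathrm{RSS}/n) + \log(n)\,\mathrm{df}\), with df taken as the trace of the LQA hat matrix on the nonzero coefficients. It is not the Bayesian criterion derived in the article. The function names `bridge_lqa`, `bic_bridge` and `select_bridge`, the grids, and the simulated data are all hypothetical.

```python
import numpy as np


def bridge_lqa(X, y, lam, q, n_iter=200, tol=1e-8, zero_tol=1e-6):
    """Approximately minimise 0.5*||y - X b||^2 + lam * sum_j |b_j|^q.

    Uses a local quadratic approximation (LQA) of the penalty: around the
    current b0, lam*|b|^q is replaced by 0.5*lam*q*|b0|^(q-2)*b^2, giving a
    sequence of weighted ridge problems. Coefficients whose magnitude falls
    below zero_tol are fixed at zero and dropped from later iterations.
    """
    p = X.shape[1]
    # Start from a ridge fit so that every coefficient is initially nonzero.
    beta = np.linalg.solve(X.T @ X + lam * np.eye(p), X.T @ y)
    beta[np.abs(beta) <= zero_tol] = 0.0
    for _ in range(n_iter):
        active = beta != 0.0
        if not active.any():
            break
        XA = X[:, active]
        w = lam * q * np.abs(beta[active]) ** (q - 2)  # LQA ridge weights
        new = np.zeros(p)
        new[active] = np.linalg.solve(XA.T @ XA + np.diag(w), XA.T @ y)
        new[np.abs(new) <= zero_tol] = 0.0
        converged = np.max(np.abs(new - beta)) < tol
        beta = new
        if converged:
            break
    return beta


def bic_bridge(X, y, beta, lam, q):
    """Generic Schwarz-type BIC: n*log(RSS/n) + log(n)*df, with df the trace
    of the LQA hat matrix restricted to the nonzero coefficients."""
    n = len(y)
    rss = float(np.sum((y - X @ beta) ** 2))
    active = beta != 0.0
    df = 0.0
    if active.any():
        XA = X[:, active]
        w = lam * q * np.abs(beta[active]) ** (q - 2)
        hat = XA @ np.linalg.solve(XA.T @ XA + np.diag(w), XA.T)
        df = float(np.trace(hat))
    return n * np.log(rss / n) + np.log(n) * df


def select_bridge(X, y, lams, qs):
    """Grid search over (lam, q); returns (score, lam, q, beta) with the smallest BIC."""
    best = None
    for q in qs:
        for lam in lams:
            beta = bridge_lqa(X, y, lam, q)
            score = bic_bridge(X, y, beta, lam, q)
            if best is None or score < best[0]:
                best = (score, lam, q, beta)
    return best


# Usage on simulated data (hypothetical example, not from the article).
rng = np.random.default_rng(0)
n, p = 100, 8
X = rng.standard_normal((n, p))
beta_true = np.array([3.0, 1.5, 0.0, 0.0, 2.0, 0.0, 0.0, 0.0])
y = X @ beta_true + rng.standard_normal(n)

score, lam, q, beta = select_bridge(X, y, lams=[0.1, 1.0, 10.0], qs=[0.5, 1.0, 2.0])
print(f"selected lambda={lam}, q={q}")
print("estimated coefficients:", np.round(beta, 2))
```

Including \(q\) in the grid lets the criterion choose between sparse and non-sparse fits. Values \(q \le 1\) can shrink coefficients exactly to zero. With \(q = 2\), the penalty is the ridge penalty and the LQA step reduces to an ordinary ridge solve.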

Acknowledgments

The author would like to thank the anonymous reviewers for their constructive and helpful comments. This work was supported by the Ministry of Education, Science, Sports and Culture, Grant-in-Aid for Young Scientists (B), #24700280, 2012–2015.

About this article

Cite this article

Kawano, S. Selection of tuning parameters in bridge regression models via Bayesian information criterion. Stat Papers 55, 1207–1223 (2014). https://doi.org/10.1007/s00362-013-0561-7

DOI: https://doi.org/10.1007/s00362-013-0561-7