Abstract

Population managers will often have to deal with problems of meeting multiple goals, for example, keeping at specific levels both the total population and population abundances in given stage-classes of a stratified population. In control engineering, such set-point regulation problems are commonly tackled using multi-input, multi-output proportional and integral (PI) feedback controllers. Building on our recent results for population management with single goals, we develop a PI control approach in a context of multi-objective population management. We show that robust set-point regulation is achieved by using a modified PI controller with saturation and anti-windup elements, both described in the paper, and illustrate the theory with examples. Our results apply more generally to linear control systems with positive state variables, including a class of infinite-dimensional systems, and thus have broader appeal.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Regulation by feedback arises in numerous areas of science and engineering; such as acoustics, electrical circuits, aviation and biological systems. According to the report of Murray et al. (2003): “Feedback is an enabling technology in a variety of application areas and has been reinvented and patented many times in different contexts”. Ubiquitous to the design and synthesis of modern feedback control systems are (P)roportional, (I)ntegral, (D)erivative controllers. These dynamical models incorporate current (P part), past (I part) and predictive (D part) information about a measured variable or variables and create from this information a signal, termed an input or control, which is then fed back into the to-be-controlled system to achieve some desired dynamic behaviour. PID controllers are widely used in industrial processes (Lunze 1989; Åström and Hägglund 1995) and have been described as one of the “Success Stories in Control” (Samad and Annaswamy 2011, p. 103). The special case of integral control was developed in the 1970s as a technique for regulating the measured variables of a stable, but controlled, linear system to a fixed and chosen set-point. Early contributions to the theory of proportional and integral (PI) control are found in the control engineering literature and include Davison (1975, 1976), Lunze (1985), Morari (1985) and Grosdidier et al. (1985). Whilst grounded in the field of process engineering, applications of PI control are multiple and varied. Indeed, established examples in engineering are complemented by emerging examples in biology, such as the regulation of blood sugar by insulin (Saunders et al. 1998), bacterial chemotaxis in living cells (Yi et al. 2000), calcium homeostasis (El-Samad et al. 2002) and, recently in Guiver et al. (2015), ecological management—the continued focus of the present work.

In ecological management, PI control provides a suite of techniques for management by the addition or removal of individuals from an ecological process, such as a population. In applied contexts, addition may correspond to captive-release schemes, translocation or replanting and removal may correspond to harvesting, culling or coppicing. Consequently, applications of PI control are broad in scope and importance, including pest or resource management, agriculture, horticulture and conservation. Its scope potentially extends to key and immensely timely societal challenges of the twenty-first century, such as food security (Godfray and Garnett 2014). Indeed, UNESCO’s Mathematics of Planet Earth’s 2013 programmeFootnote 1 was “born from the will of the world mathematical community to learn more about the challenges faced by our planet and the underlying mathematical problems, and to increase the research effort on these issues” including “[a] growing population competing for the same global resources”. In addition to the potential applications, our motivation for exploring the utility of PI control in ecological management is twofold: (a) their ease of computation and implementation, with very little knowledge required of the to-be-controlled system, and; (b) their inherent robustness to various forms of uncertainty. We further elaborate on (a) and (b) in the manuscript and contend that these facets make PI control ideally suited for ecological management where processes are subject to unknown disturbances and dynamic models are (possibly) highly uncertain. The PI controllers that we propose here do not seek to use measured data to update the underlying ecological model over time, by inferring parameters for instance, but the control does change in response to a measured variable. In this sense and context, feedback control has parallels to adaptive management, an approach well known in the resource and ecological management literature (Holling 1978; Walters 1986; Williams 2011). Other authors have noted this connection as well: Heinimann (2010) proposes principles from control theory as a concept for scholars and practitioners in adaptive ecosystem management.

Our earlier paper, Guiver et al. (2015), introduces integral control and PI control, in a context of single management goals, for structured population models. These deterministic population or meta-population models stratify individuals according to some discrete or continuous age-, size- or stage-structure and include matrix (P)opulation (P)rojection (M)odels (Caswell 2001; Cushing 1998) and (I)ntegral (P)rojection (M)odels (Easterling et al. 2000; Ellner and Rees 2006; Briggs et al. 2010). Guiver et al. (2015) considers regulation of single (scalar) observations or measurements to a prescribed set-point, or, in ecological modelling parlance, achieves a single management goal or objective. It is reasonable to request, however, that more than one measurement is regulated, and that more than one per time-step management action is permitted. For example, when designing a replanting programme to conserve a declining plant population, regulating total abundance may not be as beneficial as thought when the composition of the resulting stratified population is dominated by the seed stage-class. It may be more desirable to control both total abundance and abundance of a given stage-class, for instance, flowering plants. Alternatively, in sustainable harvesting, it may be desirable to harvest (that is, remove from) certain stage-classes whilst replenishing others, and still maintain a desired abundance of certain stages.

The application of PI control to the above multi-objective management problem is novel itself and, we believe, a useful and timely contribution to the suite of tools available to population managers, conservation biologists and other end users. To present such a solution requires new mathematical results in control theory for two reasons. First, population level models, such as matrix PPMs and IPMs, are examples of positive dynamical systems and existing “off-the-shelf” PI control need not respect the necessary nonnegativity constraints. In an applied context the controller could instruct management actions that are counter-intuitive or, worse, meaningless, such as removing more individuals than are currently present. Second, when the measured-variables are naturally constrained to be nonnegative, it is clear that not every nonnegative vector with more than one component is a feasible set-point. For example, if one measurement is always required to be larger than another, then this ordering must be preserved in the candidate set-point, as is the case in the plant example alluded to above.

Therefore, in the present contribution we apply low-gain PI control with multiple management goals (so-called multi-input, multi-output systems) to examples in ecological management and develop low-gain PI control for discrete-time, positive state linear systems. The models and terminology is further explained throughout the manuscript. The material we present is an extension of Guiver et al. (2015), where an existing suite of results in control theory was drawn upon and further developed to address the nuanced situation of positive state variables and input constraints. Analogously, in regulating multiple outputs with multiple saturating inputs, we need to develop a different set of tools, described in the manuscript, and in particular draw upon recent positive state results in Guiver et al. (2014). Our results apply to situations outside of ecology, adding to their appeal, and are novel, although there are similarities to the results of Nersesov et al. (2004). We compare and contrast their approach with ours in Remark 4.8.

Owing to its dual focus, the manuscript has the following deliberate structure. Section 2 seeks to further motivate PI control as a tool for ecological management, informally states our main result and illustrates its application through an example. The subsequent Sects. 3 and 4 form the technical heart of the manuscript and develop the mathematics summarised in Sect. 2. In order to extend the appeal of this contribution, including to a possibly non-mathematical audience, we have deliberately placed proofs of all novel results in “Appendix C”. A second example is presented in Sect. 5 and the manuscript is concluded by Sect. 6 with a discussion. “Appendices A and B” contain model parameters used in the examples that are not given in the main text for ease of presentation and preliminary material required for the proofs of our results, respectively.

2 Motivation, main result and illustrative example

This section contains an informal overview of our main results and demonstrates their possible application. We seek as well to further motivate the present contribution by briefly discussing the distinction between robust and optimal control, particularly in the context of ecological management. A larger, more comprehensive, introduction to PI control in the same context is contained in our earlier manuscript (Guiver et al. 2015, Section 2) which, to avoid repetition, we have not reproduced fully here. We mention that Guiver et al. (2015, Section 2.1) compares and contrasts PI control with other theoretical approaches to ecological management available in the literature.

For the situation considered here, the key ingredients are:

-

a managed population or resource that is changing over time (referred to as the to-be-controlled system or just system);

-

the possibly disturbed observations or measurements (referred to as outputs);

-

a management strategy that permits the addition or removal of individuals (referred to as control actions).

The outputs provide information about aspects of the population, say abundance of a strata, and the present PI control problem is to choose a series of control actions to subsequently manage these outputs, that is, to regulate them to prescribed quantities. A PI controller is, in essence, a mathematical model that uses functions of the measurements to determine present and future control actions.

To describe PI control, a model of the to-be-controlled system is required. We shall assume that the population is modelled by a deterministic, linear, stratified population model, typically a matrix PPM (Caswell 2001). PPMs are structured population models, meaning that the modelled population is partitioned into discrete age-, size- or developmental stage-classes (the latter may include larval, pupal, adult, etc.). A linear, time-invariant matrix PPM is given by

where x(t) denotes the structured population, in integer n stage-classes, with initial population distribution \(x^0\) and A is an \(n \times n\) componentwise nonnegative matrix. The time-steps t in (2.1) are assumed fixed: a week, month, or breeding cycle, for instance. The matrix A in (2.1) is often called the projection matrix, and contains life-history parameters of the population, such as recruitment, survival and transitions between stage-classes.

The inclusion of measurements y(t) and control actions u(t) in (2.1) leads to the model

where the input vector u(t) with integer m components is to-be-determined by the modeller. The terms B and C in (2.2) are \(n \times m\) and \(p \times m\) matrices, respectively, where p denotes the number of per time-step measurements taken. We note that, at any given time-step t, the entire population distribution (the state) x(t) may not be known (or known precisely), and consequently may not be used to help determine u(t). This is not necessarily a problem for feedback control—as we explain in Sect. 4.4.1, knowledge of x(t) is not required for PI control to succeed (knowledge of the matrix A is not required either) and PI control provides so-called global results in that they hold for any initial population distribution \(x^0\). What is crucial to the efficacy of feedback control is access to the measured variable y(t). The key difference between the present contribution and Guiver et al. (2015) is that in the latter we restricted attention to \(m=p=1\) but here the situation \(m,p>1\) is permitted, implying that numerous measurements are recorded and management actions taken—so-called management with multiple goals in ecological terminology or the multi-input, multi-output case in control theoretic terminology.

Matrix PPMs (2.1) are examples of discrete-time, positive dynamical systems—“positivity” refers to the property that the state-variables take only nonnegative values, typically denoting abundances, densities or concentrations. Positive dynamical systems form the appropriate framework for a variety of physically meaningful mathematical models and arise as models in a diverse range of fields from biology, chemistry, ecology and economics to genetics, medicine and engineering (Haddad et al. 2010, p. xv). Owing to their importance in mathematical modelling positive dynamical systems are well-studied with textbooks by, for example, Berman et al. (1989), Krasnosel’skij et al. (1989) and Berman and Plemmons (1994). The theory of linear positive dynamical systems is rooted in the seminal works of Perron (1907) and Frobenius (1912) on nonnegative matrices (for a recent treatment see, for example, Berman and Plemmons 1994, Chapter 2). Control of positive dynamical systems leads to positive input control systems (Farina and Rinaldi 2000), where the input variables are also assumed to be positive. Presently, only the state x(t) and output y(t) in (2.2) need take componentwise nonnegative values, so called so-called positive state systems (Guiver et al. 2014). Accordingly, u(t) may take negative values, provided that a nonnegative number or distribution remains. Such a framework allows the modelling of control actions (or disturbances) such as harvesting, culling, pest management or predation; actions which, importantly, fall outside the existing positive systems theory.

As a concrete and illustrative example, we explore the potential utility of low-gain PI control by applying it to the management of a pronghorn (Antilocapra americana) population based on matrix models from Berger and Conner (2008). Pronghorn are native to Canada, Mexico and the US, and currently occur in western North America from Canada through to northern Mexico. Managed populations are found in Yellowstone National Park and across the continent numbers are generally stable, having recovered from near extinction in the 1920s. The species is susceptible, however, to habitat loss from urban and agricultural expansion and restriction of seasonal movements from fencing (Hoffmann et al. 2008). Pronghorn is legally hunted with permits, although the subspecies Sonoran pronghorn is endangered and populations in Arizona and Mexico are protected under the US Endangered Species Act.

The example also seeks to highlight the drawbacks of “off-the-shelf” PI control in this particular applied context and to motivate additional novel features we develop in Sect. 4. The pronghorn projection matrix model is an age-structured model, with time-steps denoting years, and is based on Berger and Conner (2008, Table 4, wolf-free site). The models provided there are for female pronghorn, although presently we have included males in the population as well. Consequently there are six stage-classes denoting female and male, neonates, yearlings and prime adults. The model parameters used may be found in “Appendix A”. The spectral radius of the projection matrix A in (2.1) or (2.2) is \(\lambda = 0.9222 <1\) so that the uncontrolled population x(t) specified by (2.1) [or (2.2) with \(u(t) =0\) for every t] is declining asymptotically. Suppose, therefore, that the hypothetical management objectives are to raise abundance of female and male prime adults to 120 and 100, respectively, from their initial abundances of c. 95 and c. 30, assuming a total initial population abundance of 300. In order to be regulated to the chosen set-point

the female and male prime adults stage-classes must be observed each time-step, determining the matrix C in (2.2). To affect these changes at least two per-time step management actions (the same number as observations) are required, and we assume that we may replenish female and male neonates, determining B in (2.2). The first and second component of the vector-valued input variable u(t) in (2.2) now denote how many female and male individuals are released per time-step, respectively. The first difficulty to overcome is to determine, given the particular A, B and C specified by the pronghorn model, whether it is possible to choose an input u(t) such that the output y(t) does indeed converge to r in (2.3)? Note that the state and output variables must remain nonnegative for a meaningful model and this nonnegativity requirement in turn imposes geometric constraints on the set of possible inputs u(t). For the sequel we record this problem as:

- (P1):

-

which nonnegative set-points can be tracked asymptotically whilst preserving nonnegative state and output variables?

Informally, we say that set-points that may be asymptotically tracked with nonnegative state and output variables are feasible and we demonstrate in the “Appendix A” that the set-point r in (2.3) is indeed feasible.

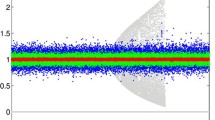

Figure 1 shows simulation results obtained by applying low-gain integral control to the pronghorn model. Although the output, here denoting measured abundance of each stage-class, converges to the chosen set-point r over time, four deficiencies of the “off-the-shelf” integral controller are demonstrated:

-

(i)

during time-steps 50–150, substantially more than 200 female neonates must be added to the population per time-step (Fig. 1a), which may be too large to be practical;

-

(ii)

during the same time-steps, the integral controller is instructing the removal of male neonates, that is, \(u_2(t) <0\) (Fig. 1a), which seems unnecessary and wasteful;

-

(iii)

most crucially, the resulting measurements \(y_2(t)\) of male prime adults are negative for some t (Fig. 1b), which is absurd for this model, and;

-

(iv)

the performance is very slow, predicting at least 500 years(!) to converge to the desired set-point.

Whilst we acknowledge that the model parameters have been chosen somewhat pathologically to emphasise these deficiencies, they do help motivate the present contribution quite markedly.

Simulations of the low-gain integral control model (I) (see Sect. 3.2) applied to the pronghorn matrix model. a, b Contain the inputs and outputs, respectively. The dotted lines denote the components of the limiting input (a) and the chosen set-point (b)

Deficiencies (i)–(iii) above are addressed by considering a modified low-gain PI control model that includes input saturation. In words, negative inputs u(t) (that is, when the management strategy suggests removal of individuals) are replaced by zero and a per time-step maximum bound is imposed for u(t), reflecting limited per time-step resources or management capability. As we explain in Sect. 4, doing so introduces a nonlinearity into the feedback model and establishing convergence of the output to the set-point is more challenging. For the sequel, we record:

- (P2):

-

how can input saturation be included in low-gain PI control and still ensure that the desired set-point is tracked asymptotically by the output?

Deficiency (iv) of rate of convergence of the feedback model may be adjusted by the use of a (P)roportional component as well as an (I) component, as we describe in the manuscript. Our main results are low-gain PI control models for positive state linear systems that address issues (P1) and (P2), stated as Theorem 4.6 and Corollary 4.7. We establish several robustness results in Sect. 4.4, that capture how the low-gain PI control systems can handle uncertainty. Figure 2 contains simulation results obtained by applying low-gain integral control with input saturation to the pronghorn model. From the simulations we see that each of issues (i)–(iv) present in Fig. 1 do not appear and a robust solution to the stated management problem is provided.

Simulations of the low-gain integral control model (Iaw) (see Sect. 4.2) applied to the pronghorn matrix model. a, b Contain the inputs and outputs, respectively. The dotted lines denote the components of the limiting input (a) and the chosen set-point (b). The dotted–crossed lines in a denote per time-step input saturation limits

Having outlined a low-gain PI control solution to the above management problem, we comment on how the solution may be additionally combined with other management approaches present in the literature and, moreover, how feedback control differs from optimal control. These latter observations are intended to further motivate the present exploration of the utility of PI controllers in ecological management.

Remark 2.1

-

(i)

An existing suite of management strategies proposed in ecological matrix modelling are based on tools from perturbation theory. Typically, modelled vital rates are altered with a view to obtaining some asymptotically desired dynamic behaviour (such as stasis or growth in conservation), which is described by replacing A in (2.1) with \(A + {\Delta }\), for some perturbation matrix \({\Delta }\). Sensitivity (Demetrius 1969) or elasticity (Kroon et al. 1986) analyses are often employed and use methods from calculus to determine the effect of small changes in particular vital rates on the resulting asymptotic behaviour. These calculations are used to inform where potential management or conservation strategies should invest their efforts. Numerous examples are present in the literature and we highlight, for example, shark conservation (Otway et al. 2004) and the effects of Brazil nut tree seed extraction on its demography (Zuidema and Boot 2002). Biologically, perturbation analysis denotes improving or degrading vital rates through environmental or demographic changes, the former for instance, through improved quality or access to food or decreased mortality rates by protecting habitats. These methods are not directly comparable to PI control, as they do not denote the addition or removal of individuals, but may be combined with PI control approaches. We revisit the above pronghorn example in Sect. 5 and combine low-gain PI control proposed here with a second management strategy.

-

(ii)

Low-gain PI control is an example of feedback control. Complementary to feedback control is optimal control which, to some audiences, may be synonymous with control theory itself. Here an input is chosen to achieve some desired dynamic behaviour as well as to minimise a prescribed functional; typically denoting the cost or effort of the management strategy in ecological applications. Optimal control has proven very popular in mathematical biology (Lenhart and Workman 2007). Pontryagin’s celebrated maximum principle (see, for example, Liberzon 2011, Chapter 4) has been employed in models for the optimal control of HIV (Kirschner et al. 1997), epidemics (Hansen and Day 2011) and vector-borne diseases (Blayneh et al. 2009). Techniques from optimal control have appeared extensively in the mathematical ecology, conservation and resource management literature where an input to a control system denotes a management strategy that is applied to a ecological process, such as a modelled population. To name but a few examples, research by Hastings and collaborators has tackled optimal management of deterministic models for the invasive perennial deciduous grass Spartina by applying linear programming (Hastings et al. 2006), so-called linear quadratic optimal control (Blackwood et al. 2010) or dynamic programming (Lampert et al. 2014). Elsewhere applications of Pontryagin’s maximum principle have appeared in the fisheries management literature (Kellner et al. 2011; Moeller and Neubert 2013). Solutions to population management problems have also been proposed by optimising prescribed cost-functionals in the situation when the underlying dynamics are assumed stochastic, such as those given by (P)artially (O)bservable (M)arkov (D)ecision (P)rocesses (Monahan 1982). Stochastic dynamic programming techniques are then used to numerically compute optimal strategies. Substantial research has been undertaken by Possingham and collaborators, including Shea and Possingham (2000), Chadès et al. (2011) and Regan et al. (2011).

Whilst the design of management strategies via optimal control have an appeal in that they would minimise some specified cost, there are downsides. First, computing optimal controls is often analytically intractable or computationally highly expensive [suffering from, for example, the “curse of dimensionality”, coined in Bellman (1957), see more recently Powell (2007)] and so optimal controls can be impractical to implement. Second, and often overlooked, it is not always clear that “off-the-shelf” optimal control approaches will respect positivity of the system states (although one exception we are aware of appearing in the control literature is Nersesov et al. (2004)). Third, and a more serious and pressing obstacle, population-level ecological models are typically highly uncertain. Uncertainty is a broad term in ecology and ecological modelling, although in this context both Regan et al. (2002) and Williams (2001) contain helpful and interesting codices of the term. Presently, uncertainty encompasses choice of model structure (for example, type of model, number of stage-classes or any modelled density-dependence), parametric uncertainty (for instance, how to accurately fit vital rates for a chosen model) and unknown disturbances of the dynamics (such as unmodelled immigration or sampling error). Therefore, we argue that it is essential that ecological management strategies, be it for sustainable harvesting, pest management or conservation, are designed to be robust. Informally, a control scheme is robust with respect to a source of uncertainty if it performs as intended in spite of that uncertainty. Another facet of robust control is quantifying the extent to which a control objective fails when operating in uncertain or unknown operating conditions. The study of robust control [with textbooks by, for example, Green and Limebeer (1995) or Zhou and Doyle (1998)] was in part born out of the hugely important observation by control engineers in the 1970s that optimal control techniques need not be robust and, moreover, over-optimisation leads to fragility (Doyle 1978). Indeed, as we sought to emphasise in Guiver et al. (2015), so-thought optimal controls can have disastrous performance when applied to an uncertain model and hence our current continued exploration of robust feedback control in ecological management.

3 Problem formulation: multi-input, multi-output low-gain PI control

Sections 3 and 4 contain the technical heart of the manuscript where we formulate both the problem exposited in Sect. 2 and its solution. Specifically, in this section we recap so-called multi-input, multi-output low-gain PI control and in the next we extend known results to address the issues (P1) and (P2). Recall that proofs of all novel stated results are contained in “Appendix C”.

3.1 Notation

We introduce some notation, although most notation we use is standard, or is defined as it is introduced. Briefly, we let \(\mathbb {N}_0\), \(\mathbb {N}\), \(\mathbb {R}\) and \(\mathbb {C}\) denote the sets of nonnegative integers, positive integers, real and complex numbers, respectively. For positive integer n, denoted \(n \in \mathbb {N}\), we let \(\mathbb {R}^n\) and \(\mathbb {C}^n\) denote real and complex n-dimensional Euclidean space, respectively, equipped with the usual two-norm, always denoted by \(\Vert \cdot \Vert \). As usual, we let \(\mathbb {R}^1 = \mathbb {R}\) and \(\mathbb {C}^1 = \mathbb {C}\). For \(m \in \mathbb {N}\), \(\mathbb {R}^{n \times m}\) and \(\mathbb {C}^{n \times m}\) denote the sets of \(n \times m\) matrices with real and complex entries, respectively. We shall denote by I the identity matrix, used consistently without specifying its dimensions. The notation \(\Vert \cdot \Vert \) also denotes the operator two-norm induced from \(\Vert \cdot \Vert \) on \(\mathbb {C}^n\) or \(\mathbb {R}^n\). We denote by r(A) the spectral radius of \(A \in \mathbb {C}^{n \times n}\) which, recall, is given by

where \(\sigma (A)\) denotes the spectrum of A—its set of eigenvalues when A is a matrix. The state x of the uncontrolled linear model (2.1) converges to zero or diverges to infinity when \(r(A) <1\) or \(r(A) >1\), respectively (the latter at least for some nonzero initial states \(x^0\)).

The symbols \(\mathbb {R}_+^n\) and \(\mathbb {R}^{n \times m}_+\) denote the sets of componentwise nonnegative vectors and matrices, respectively. A vector z in \(\mathbb {R}^n\) belongs to \(\mathbb {R}^n_+\) if \(z_k \ge 0\) for every k, where \(z_k\) denotes the \(k{\mathrm{th}}\) component of z. We call vectors \(z \in \mathbb {R}^n_+\) nonnegative and say that \(z \in \mathbb {R}^n_+\) is positive if \(z_k >0\) for every k. For vector \(z \in \mathbb {R}^n\), the term \(\Vert z \Vert _1\) denotes the vector one-norm of z, and is defined as

The superscript \({}^T\) denotes matrix or vector transposition, so that if \(z \in \mathbb {R}^n\) then \(z^T\) is a row vector.

3.2 Multi-input, multi-output low-gain PI control

For the most part in the present manuscript we consider the discrete-time linear model (2.2) where

for \(n,m,p \in \mathbb {N}\) and given \(x^0 \in \mathbb {R}^n\). The variables u, x and y denote the input, state and output of (2.2), respectively. Although our motivating applications are the management of ecological models where the input, state and output typically have clear biological interpretations, here we are describing the more general situation. In particular, PI control does not require nonnegativity assumptions on A, B or C. We shall impose additional structure on (2.2) and (3.1) in Sect. 4.

The transfer function G of the linear system (2.2) [also of the triple (A, B, C)] is the function of a complex variable, defined as

where recall that I in (3.2) is the (here \(n \times n\)) identity matrix. The function G is certainly well-defined for every complex z that is not an eigenvalue of A and, moreover, provides a relationship between an input u and the resulting output y related by (2.2). More information about G is contained in “Appendix B” but, it suffices here to note that if \(r(A) <1\) then G(1) is well-defined and has the property that if u has a limit \(u^\infty \) then, for any initial state \(x^0\), y in (2.2) has the limit

From the Neumann series definition

and the limit relationship (3.3) it follows that the \((i,j){\mathrm{th}}\) entry of G(1) is the eventual \(i{\mathrm{th}}\) measurement when the \(j{\mathrm{th}}\) input variable is one for all times. The interpretation is somewhat similar to that of the fundamental matrix in matrix population modelling (Caswell 2001, p. 112). By conducting controlled experiments, such as in applications in electrical circuits, it is sometimes possible to obtain an estimate of G(1) (Penttinen and Koivo 1980; Lunze 1985), although this is possibly inappropriate in ecological management.

Integral control has been developed in the situation \(r(A)<1\) to solve the so-called set-point regulation problem or objective, namely, to generate an input u such that the resulting outputs y of (2.2) converge to a prescribed set-point \(r \in \mathbb {R}^p\). The objective should be achieved independently of the initial state \(x^0\) and with only knowledge of y and G(1). The internal model principle (Francis and Wonham 1976) dictates that in order to achieve the set-point regulation objective via feedback control, the control strategy must contain an integrator, or synonymously an integral controller which, when connected via feedback to (2.2), leads to:

Equation (Ia) is model (2.2)—the to-be-controlled system with the measured output y(t). Equation (Ib) is the integral controller model, with \(x_c(t) \in \mathbb {R}^m\) for each \(t \in \mathbb {N}_0\) denoting its state with initial state \(x_c^0\). Equation (Ic) is a feedback connection from (Ib) to (Ia) via the input u(t). The terms \(K \in \mathbb {R}^{m \times p}\), \(g>0\) and \(x_c^0 \in \mathbb {R}^m\) in (Ib) are design parameters and r is the desired set-point.

The following “low-gain” result for integral control is well-known and based on, for example, Logemann and Townley (1997, Theorem 2.5, Remark 2.7). The term “low-gain” refers to the fact that the positive parameter g in (I) (often called a “gain”) is required to be sufficiently small.

Theorem 3.1

(Low-gain integral control) Suppose that the integral control system (I) with \(m=p\) satisfies

-

(A1)

\(r(A) <1\), and;

-

(A2)

K and G(1) are such that every eigenvalue of the product KG(1) has positive real part.

Then, there exists \(g^*>0\) such that for all \(g \in (0,g^*)\), all \(r \in \mathbb {R}^p\) and all \((x^0, x^0_c) \in \mathbb {R}^n \times \mathbb {R}^m\) the solution \((x,x_c)\) of (I) has the properties:

-

(a)

\(\displaystyle {\lim \nolimits _{t\rightarrow \infty }}x_c(t)= x_c^\infty : = G(1)^{-1}r\);

-

(b)

\(\displaystyle {\lim \nolimits _{t\rightarrow \infty }}x(t)= x^\infty := (I-A)^{-1}BG(1)^{-1}r\);

-

(c)

\(\displaystyle {\lim \nolimits _{t\rightarrow \infty }}y(t)= \displaystyle {\lim \nolimits _{t\rightarrow \infty }}Cx(t)= r\).

When \(r(A) \ge 1\) then the conclusions of Theorem 3.1 do not apply to (I). However, in this situation (I) can be modified by including a (P)roportional feedback component. Specifically, the feedback connection (Ic) is replaced by

if the state x is known and available to the modeller, or by

when only the output y is available. The matrices \(F_1 \in \mathbb {R}^{m \times n}\) and \(F_2 \in \mathbb {R}^{m \times m}\) are additional design parameters. We denote by (PI1) and (PI2) the combinations of (Ia), (Ib) and (3.4) or (Ia), (Ib) and (3.5), respectively. For completeness, we record that (PI1) is given by

while in (PI2) the third line of (PI1) is replaced by (3.5). Inserting the expression for u in (PI1) into the dynamic equation for x also in (PI1) and introducing the new input variable \(v := x_c\) yields

demonstrating that (PI1) is an instance of (I), only with A replaced by \(A-BF_1\). The same argument shows that (PI2) simplifies to (I) as well, now with A replaced by \(A-BF_2C\). We do not give the details. The upshot is that Theorem 3.1 is applicable to (PI1) provided that \(F_1\) can be chosen such that \(A-BF_1\) satisfies (A1) and K can be chosen such that K and the transfer function of \((A_1,B,C)\) together satisfy (A2). In usual situations the crucial requirements is the choice of \(F_1\) such that \(r(A-BF_1) <1\), as here a suitable K in (PI1) is given by \(K = (C(I -A_1)^{-1}B)^{-1}\) (see Remark 3.2 below). The analogous statements are true for (PI2).

Theorem 3.1 is the basis for the robust feedback control solution to the multiple management goals problem, motivated in Sect. 2. Additional features need to be included in the model (I) to cope with the demands of ecological management, and are done so in the next section. We conclude the current section by making some remarks on the roles of the dimensions of the input and output spaces, m and p, respectively, and also assumption (A2) that appears in the above theorem.

Remark 3.2

-

(i)

In the case that \(r(A)<1\), we see from (3.3) that the range of possible limiting outputs is equal to the image of G(1), which is at most m-dimensional. For every \(r \in \mathbb {R}^p\) to belong to this image then necessarily we require that \(m \ge p\) and that G(1) is surjective. In words, as many control actions are needed as observations are to be regulated. When \(m > p\) then there is some redundancy, or non-uniqueness, in the choice of inputs.

-

(ii)

For any \(m, p \in \mathbb {N}\), assumption (A2) implies that KG(1) is invertible, as zero is not an eigenvalue of KG(1). In this case G(1) must be injective as if \(G(1)v = 0\) for some \(v \in \mathbb {R}^m\) then \(KG(1)v = 0\) and thus \(v=0\). Therefore, by the rank-nullity theorem, \(m \le p\). In order for every reference \(r \in \mathbb {R}^p\) to be a candidate limit of the output, we require that G(1) is surjective hence \(m \ge p\) (as noted in (i)). Combined we see that necessarily \(m=p\). Therefore, \(m =p \) and (A2) together imply that G(1) is invertible, and hence the inverses in parts (a) and (b) of Theorem 3.1 make sense. Consequently, the spectrum condition (A2) implies that \( K = KG(1)\cdot G(1)^{-1}\) is invertible as well.

-

(iii)

Conversely, if (as usual) \(m = p\) and G(1) is invertible, then assumption (A2) is not restrictive. A candidate K is \(G(1)^{-1}\) which clearly satisfies \(\sigma (KG(1)) = \sigma (I) = \{1\}\) with positive real part. We note that \(K = G(1)^{-1}\) requires knowledge of G(1). If G(1) is not known exactly then K can be based on an estimate of (the inverse of) G(1) which we investigate further in Sect. 4.4.3.

4 Multi-input, multi-output low-gain PI control for positive systems with input saturation

Having recapped low-gain PI control for linear systems in Sect. 3 we now introduce additional structure that arises from considering positive state linear systems, our primary focus, and present a low-gain, multi-input, multi-output PI controller. Specifically, we additionally assume that (A, B, C) in (2.2) satisfy

and all initial states \(x^0\) are componentwise nonnegative, so that \(x^0 \in \mathbb {R}^n_+\). As in Theorem 3.1, in our subsequent low-gain PI control results, we shall assume that \(m=p\) (see Remark 3.2 for motivation of this choice).

The framework (2.2) and (4.1) includes matrix PPMs where the input, state and output of (2.2) denote the control action, the stage- or age-structured population abundances, and some measurement or observation of the population, respectively. In this applied context the assumption that \(m=p\) means that as many per time-step measurements of the population are made as are available per-time step management actions.

We seek a version of Theorem 3.1 for asymptotic tracking of a chosen nonnegative set-point \(r \in \mathbb {R}^m_+\). As motivated in Sect. 2, the two issues recorded there as (P1) and (P2) must be overcome. To that end, in Sect. 4.1 we describe the set of feasible set-points—these are candidate limits of the output of a positive state linear system where nonnegativity of the state and output variables is preserved (P1). Then, in Sect. 4.2, we establish stability of a low-gain integral control system with the additional feature that the input to the state equation is saturated (P2). Saturating the input introduces a nonlinearity into the feedback system, and that the conclusions of Theorem 3.1 still hold must be derived. Recall that the motivation for saturating the input is to avoid removing individuals when conservation is the ultimate goal and to reflect the realistic constraint of per-time step resource or capacity limits.

4.1 Feasible set-points for positive state control systems

In this section we answer the question (P1): to which nonnegative set-points can the output y of (2.2) and (4.1) converge? Although we shall apply these results to inputs u generated by a PI controller, for now it suffices to consider convergent inputs. For that reason we do not need to impose the restriction \(m=p\) in this section. We introduce some terminology and notation.

Definition 4.1

For (A, B, C) as in (3.1) we say that \(r \in \mathbb {R}^p\) is trackable if there exists a convergent input u such that the output y of (2.2) converges to r as t tends to infinity. Supposing further that (A, B, C) satisfy (4.1) we say that \(r \in \mathbb {R}^p_+\) is trackable with positive state if r is trackable and moreover the state x(t) of (2.2) is componentwise nonnegative for every \(t \in \mathbb {N}_0\). We call the set of such r the set of trackable outputs of (A, B, C) with positive state.

We seek to characterise the set of trackable outputs of (A, B, C) with positive state. For \(X \in \mathbb {R}^{s \times t}_+\), where \(s,t \in \mathbb {N}\), the set \(\langle X \rangle _+\) denotes all nonnegative linear combinations of the columns of X, which is a subset of \(\mathbb {R}^{s}_+\). We also denote componentwise nonnegativity of a matrix X or vector v by \(X \ge 0\) or \(v \ge 0\) (respectively, also \(0\le X\) and \(0 \le v \)).

We remind the reader that the subsequent claims are proved in “Appendix C”.

Lemma 4.2

Suppose that (A, B, C) is given by (4.1) and that \(r(A) <1\). Then

-

(a)

\(G_{CAB}(1) := C(I-A)^{-1}B \ge 0\);

-

(b)

for each \(F \in \mathbb {R}^{n \times m}, F \ge 0\) such that \(A_1 := A-BF \ge 0\), the set of trackable outputs of (A, B, C) with positive state contains \(\langle G_{C A_1B}(1) \rangle _+\).

Next we recall an assumption from Guiver et al. (2014),Footnote 2 which pertains to a nonnegative pair \((A,B) \in \mathbb {R}^{n \times n}_+ \times \mathbb {R}^{n \times m}_+\):

- (H):

-

There exists \(F \in \mathbb {R}^{n \times m}, F \ge 0\) such that \(A_1 := A-BF \ge 0\) and for any \(v \in \mathbb {R}^n_+\) and \(w \in \mathbb {R}^m\), if \(A_1 v + Bw \ge 0\), then \(w \ge 0\).

Assumption (H) for the pair (A, B) captures the situation whereby for any nonnegative x it is possible to choose negative u such that \(Ax + Bu\) is “as small as possible”, yet still nonnegative. Indeed, the choice of u that achieves this is \(u = -Fx\). Assumption (H) always holds if \(B = b = e_i\), the \(i{\mathrm{th}}\) standard basis vector, as then the required \(F = f^T\) is the \(i{\mathrm{th}}\) row of A. For instance, with

then \(F = f^T = \begin{bmatrix}f_1&f_2&f_3 \end{bmatrix} \ge 0\) gives

and so if \(A_1v + bw \ge 0 \) then by inspection of the first component, necessarily \(w \ge 0\). Assumption (H) always holds for any \(A \in \mathbb {R}^{n \times n}_+\) when \(B =[ c_{i_1} e_{i_1}, \ldots ,c_{i_k} e_{i_k}]\) for some distinct \(i_j \in \{1,2,\dots , n\}\) with \(c_{i_j} >0\) for each j. Furthermore, Guiver et al. (2014, Lemma 2.1) contains a constructive algorithm for checking whether assumption (H) holds for any pair (A, B), and determines the required F (which is unique) when it exists.

We have recalled assumption (H) because if the (A, B) component of (2.2) satisfies (H) then there exists a characterisation of the set of trackable outputs of (A, B, C) with positive state.

Proposition 4.3

Suppose that (A, B, C) is given by (4.1), \(r(A) <1\) and additionally that the pair (A, B) satisfies assumption (H). Then the set of trackable outputs of (A, B, C) with positive state is precisely equal to

where \(A_1\) is as in (H).

The next result provides a recipe for enlarging the guaranteed set of possible trackable outputs with positive state, particularly in the case that (H) is not satisfied.

Lemma 4.4

Suppose that (A, B, C) is given by (4.1) and that \(r(A) < 1\). If \(F \in \mathbb {R}^{n \times m}_+\) is such that \(A_1 := A-BF \ge 0\) then

-

(a)

\(I - G_{F A_1 B}(1)\) is invertible, and;

-

(b)

\(\langle G_{CAB}(1) \rangle _+ \subseteq \langle G_{C A_1B}(1) \rangle _+.\)

Remark 4.5

A straightforward adjustment to the proof of Lemma 4.4 demonstrates that the sets \(\langle G_{C AB}(1) \rangle _+\) have a monotonically decreasing nested structure with respect to the partial ordering of componentwise nonnegativity on A, in that

where \(A \le {\bar{A}}\) means that \(0\le {\bar{A}} - A\). The largest possible set that can be achieved by this process is \(\langle CB \rangle _+\) and occurs when \(F \ge 0\) can be chosen such that \(A - BF = 0\). In this case the set of trackable outputs of (A, B, C) with positive state must contain \(\langle CB \rangle _+\). Proposition 4.3 demonstrates that, when assumption (H) holds, \(\langle G_{C A_1B}(1) \rangle _+\) is the largest possible set for tracking with positive state.

4.2 Low-gain integral control with input saturation

In this section we address question (P2) by demonstrating that suitable adjustments to the integral control model (I), incorporating saturation on the input, achieve set-point regulation, as well as bounding the per time-step input and preserving nonnegativity of the state and output variables. Recall that the three-faceted motivation for saturating the input is to: (i) allow for the inclusion of per time-step bounds representing resource or capacity constraints associated with the implementation of a management strategy; (ii) prevent negative control signals particularly problematic when conservation is the desired outcome, and; (iii) prevent (meaningless) negative state and output variables. These three issues are all exhibited in Fig. 1 yet are resolved in Fig. 2.

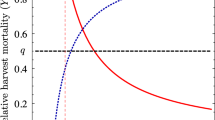

Graph of the saturation function \(\mathrm{sat}_{U_i}\) defined in (4.2)

We next introduce the input saturation function which is incorporated into a low-gain integral control model in (Iaw). For given \(U >0\) define the function \(\mathrm{sat}\,_{U}\) by

an example of which is graphed in Fig. 3. The diagonal saturation function \(\mathrm{sat}\,\) is defined as the componentwise combination of \(\mathrm{sat}_{U_i}\) functions as follows:

Here the constants \(U_i>0\) for \(i \in \{1,2,\dots ,m\}\) are chosen and in applications denote the per time-step bound on the \(i{\mathrm{th}}\) component of the input. To incorporate input saturation into a low-gain integral control model we consider:

where \(E \in \mathbb {R}^{m \times m}\) is a design parameter additional to those appearing in (I) and is discussed in more detail in Sect. 4.3. Our main result of the manuscript is Theorem 4.6 below, which mirrors Theorem 3.1, and guarantees that the low-gain integral control model with input saturation (Iaw) achieves asymptotic tracking of the output of (Iaw) to a prescribed set-point under the (same, previously employed) assumptions (A1) and (A2) and a known choice of E. The theorem provides solutions to problems (P1) and (P2).

Theorem 4.6

Suppose that (Iaw) satisfies (A1) and (A2) and choose

where \(g >0\) is as in (Iaw). Then, there exists \(g^*>0\) such that for all \(g \in (0,g^*)\), all \(r \in \langle G(1)\rangle _+\) such that

and all \((x^0, x^0_c) \in \mathbb {R}^n_+ \times \mathbb {R}^m_+\), the solution \((x,x_c)\) of (Iaw) satisfies \(x(t) \ge 0\) for each \(t \in \mathbb {N}_0\) and has the properties:

-

(a)

\(\displaystyle {\lim \nolimits _{t\rightarrow \infty }}x_c(t)= x_c^\infty : = G(1)^{-1}r\);

-

(b)

\(\displaystyle {\lim \nolimits _{t\rightarrow \infty }}x(t)= x^\infty := (I-A)^{-1}BG(1)^{-1}r\);

-

(c)

\(\displaystyle {\lim \nolimits _{t\rightarrow \infty }}y(t)= \displaystyle {\lim \nolimits _{t\rightarrow \infty }}Cx(t)= r\).

By appealing to the results of Sect. 4.1, including a proportional component in the feedback law in (Iaw) gives rise to a larger set of candidate set-points. Let (PI1aw) denote the feedback system

which differs from (Iaw) only by the inclusion of an additional proportional state-feedback \(-F_1x(t)\) to the updated input \(u = -F_1 x +\mathrm{sat}\,(x_c)\). As before, \(F_1 \in \mathbb {R}^{m \times n}\) is another design parameter. We let (PI2aw) denote the feedback system (PI1aw) with \(-F_1x(t)\) instead replaced by \(-F_2y(t) = -F_2Cx(t)\), that is, an output-feedback which replaces u in (PI1aw) \(u = -F_2 C x +\mathrm{sat}\,(x_c)\). Again, \(F_2 \in \mathbb {R}^{m \times m}\) is a design parameter. We present the following corollary for the low-gain PI control systems (PI1aw) and (PI2aw).

Corollary 4.7

The low-gain PI systems (PI1aw) and (PI2aw) specified by (A, B, C) satisfying (4.1) are equal to (Iaw) specified by \((A_1,B,C)\) and \((A_2,B,C)\), where \( A_1 := A - BF_1\) and \( A_2 := A - BF_2C\), respectively. If \(F_1\) is such that \(A_1 \ge 0\) and \((A_1,B,C)\) satisfy (A1) and (A2) then the conclusions of Theorem 4.6 apply to (Iaw) specified by \((A_1,B,C)\), and similarly for \(F_2\).

4.3 Comparing and contrasting low-gain feedback systems (I) and (Iaw)

In this section we record some observations on the low-gain integral control system (Iaw), the above theorem and corollary, and their relation to other published results. The integral control scheme (Iaw) differs from (I) by the saturation function in the definition of u, and by the term involving E appended to controller state dynamics as well. The term involving E is crucial and, intuitively, acts as a correction term, activating at time-steps t when the integral control state \(x_c(t)\) saturates meaning that \(x_c(t) \ne \mathrm{sat}\,(x_c(t))\). The input is not saturated when \(\mathrm{sat}\,(x_c(t)) = x_c(t)\) and for these time-steps the term in (Iaw) involving E is zero and plays no role. Loosely speaking, at these times (Iaw) is behaving as though there is no saturation, the resulting model is linear and Theorem 3.1 applies. Theorem 4.6 makes the previous assertion rigorous.

The feedback system (Iaw) with \(E =0\) was considered in Guiver et al. (2015) in the specific so-called single-input, single-output case (meaning \(m = p = 1\)), so that \(B = b\) and \(C = c^T\) are vectors. Here assumption (A1) is as before, and assumption (A2) reduces to \(G(1) > 0\) (it suffices to take \(K = 1\)). However, in contrast to the situation in Guiver et al. (2015), saturating a multi-input (\(m > 1\)) control signal can be inherently destabilising, resulting in the desired set-point regulation objective not being achieved. Roughly, if \(E=0\) then the control signal may get ‘stuck’ in the saturating region, and the resulting failure is attributed to what is known as “actuator saturation” or “integrator windup” in control engineering literature (Johanastrom and Rundqwist 1989). Anti-windup control refers to the study of mechanisms to alleviate or remove windup in PI controllers and, owing to its importance in applications, is a hugely well-studied topic. The already 20-year-old chronological bibliography of Bernstein and Michel (1995) contains 250 references. We refer the reader to Tarbouriech and Turner (2009) for a recent overview of anti-windup control. There are many possible such mechanisms, for example in how to choose the matrix E that appears in (Iaw), also known as a static anti-windup component. The advantages of our choice of E in Theorem 4.6 and elsewhere are that it:

-

(i)

is straightforward to compute and thus implement;

-

(ii)

possesses demonstrable robustness to model uncertainty, and;

-

(iii)

can be extended to a class of infinite-dimensional systems.

For readers less familiar with (or indeed interested in) anti-windup control, the key feature of the present discussion is that the term involving E in (Iaw) is a crucial feature and should not be omitted. We reiterate that although our results are aimed at ecological models, they apply to any positive state linear system described by (2.2). As far as we know, the anti-windup method we propose and its proof is novel in a control theory context as well.

One approach to anti-windup control present in the literature determines the anti-windup component E via the solution of a set of certain linear matrix inequalities (LMIs) see, for example, Mulder et al. (2001) or Silva and Tarbouriech (2006). Although these LMIs can often be solved numerically and can result in other performance criteria being met (such as so-called “bumpless transfer”), they introduce another level of complexity for the modeller. Moreover, since they use Lyapunov based arguments, they seemingly do not extend across to systems that have infinite-dimensional Banach spaces as state-spaces (thus precluding IPMs, for instance).

Remark 4.8

-

(i)

Although when \(r(A) <1\) no \(F_1\) or \(F_2\) component is required to apply Theorem 4.6, the use of (PI1aw) or (PI2aw) often results in faster convergence than that of (Iaw), highlighted as issue (iv) in Sect. 2. Moreover, if \(F_1 \in \mathbb {R}^{n \times n}\) is such that

$$\begin{aligned} 0 \le A- BF_1 \le A, \end{aligned}$$then Lemma 4.4 (b) implies that there is a larger choice of possible references achievable by (PI1aw) than by (Iaw), which thus encourages the use of PI control even in the case that \(r(A) <1\). Similar comments apply to \(F_2\) for the (PI2aw) system.

-

(ii)

If K and G(1) are such that KG(1) is positive semi-definite then it can be shown that the conclusions of Theorem 4.6 hold for (Iaw) with \(E = 0\), that is, with no anti-windup component. Although the choice \(K = G(1)^{-1}\) guarantees this condition, such a choice requires exact knowledge of G(1) and the requirement that KG(1) is positive semi-definite is very non-robust to parameter uncertainty. For this reason we have, therefore, insisted on including the anti-windup component \(E(x_c - \mathrm{sat}\,(x_c))\) in (Iaw).

-

(iii)

As mentioned in the introduction, feedback control that preserves nonnegativity of state and solves a non-zero state-regulation problem has been considered in Nersesov et al. (2004). The goals of that paper and ours here are similar, but there the authors work in continuous-time, and use a feedback derived from a constrained optimal control problem (as opposed to a low-gain integral controller) to steer the state to a prescribed non-zero equilibrium. They do not consider input saturation to avoid negative states but instead constrain the structure of the inputs. Their work builds on that of Leenheer and Aeyels (2001). Roszak and Davison (2009) solve the continuous-time, nonnegative output regulation problem (also called the servomechanism problem, hence their title) using low-gain integral control. There, the authors determine the model parameter K in (I) using optimal control results—a different approach to ours. Another key difference between that work and ours is that the input is not saturated (that is, bounded) from above and thus, as we understand, “integrator windup” is not an issue.

4.4 Robustness of low-gain PI control

The efficacy of the low-gain PI control systems considered so far is predicated on several modelling assumptions:

-

(U1)

that the system of interest is accurately modelled by (2.2) and (4.1);

-

(U2)

there are no external signals or noises affecting the dynamics of the state x or the input u;

-

(U3)

there is no measurement or sampling error in y;

-

(U4)

the steady-state gain G(1) is known.

In practice, all four of these assumptions are likely to be violated and thus here we quantify to what extent low-gain PI control is robust to failures of (U1)–(U4). By doing so we seek to describe how concepts from robust feedback control apply to sources of uncertainty that arise in ecological modelling. A more detailed discussion may be found in Guiver et al. (2015, Section 3.1), but briefly, Table 1 [based on Guiver et al. (2015, Table 1)] connects the list above with sources of uncertainty described in the ecology literature.

4.4.1 Robustness to choice of model structure and model parameters

When modelling ecological processes, such as managed populations, there are often a plethora of models to choose from that all attempt to capture the same underlying dynamics. Within structured population models of the form (2.1) there are age- or size- based models, that partition the life cycle (perhaps a continuum of stages) into predetermined discrete stage-classes. The above choices imply that there is choice, or indeed, uncertainty in A, B and C in (2.1), challenging (U1). The state dimension n may even be uncertain. Low-gain PI control is robust to this source of uncertainty in the sense that knowledge of A, B and C is not required to implement it. The measured variable y(t) is required, and it is assumed that \(y(t) = C x(t)\), for some choice of C, but C itself is not needed. Rather, A, B and C are required to satisfy the assumptions (A1) and (A2). Assumption (A1) does not need A to be known, and simply means that the population of interest is in decline. Recall that when seeking to use PI control to reduce a growing population, then assumption (A1) amounts to the requirement that the population can be stabilised (that is, made to decline) by state- or output-feedback—see (PI1) and the discussion below. Assumption (A2) does require knowledge of G(1) to determine a suitable K (and E for (Iaw)), which may be determined from A, B and C, but may also be known by experiment or experience. As we explain in Sect. 4.4.3, an estimate of G(1) maybe sufficient to determine a K that together satisfy (A2). Finally, we comment that since assumptions (A1) and (A2) are necessary for low-gain integral control (as well as sufficient), we cannot allow any greater model uncertainty.

4.4.2 Robustness to external disturbances

External dynamics affecting the state, input and output may be included in the original model (2.2) by writing:

where \(d_1(t) \in \mathbb {R}^n\) and \(d_2(t) \in \mathbb {R}^p\) are typically unknown. In a population model \(d_1\) may denote either a disturbance to the population such as (unmodelled) immigration, emigration or predation or an input error, meaning that the intended input u(t) is disturbed. Similarly, \(d_2\) denotes some form of measurement or sampling error. The inclusion of \(d_1\) and \(d_2\) seeks to address the assumptions (U2) and (U3). A reasonably general framework is to assume that \(d_1\) and \(d_2\) in (4.6) are bounded, and of course are such that x and y remain nonnegative. We refer the reader to Eager et al. (2014) and the references therein for more information on the impacts of nonnegative disturbances on populations modelled by matrix PPMs.

When only boundedness of \(d_1\) and \(d_2\) is assumed then we cannot in general expect the same convergence of the output y of the feedback system (4.6) connected with a low-gain PI controller as that exhibited by (Iaw), (PI1aw) or (PI2aw). The next result provides upper bounds on the difference of the state and output from their respective asymptotic limits in terms of the initial error and the maximum values of \(d_1\) and \(d_2\). The result is an (I)nput-to-(S)tate-(S)tability estimate and we refer the reader to Sontag (2008) for more background on ISS.

Proposition 4.9

Suppose that the low-gain integral control system with disturbances

with bounded disturbances \(d_1\) and \(d_2\) satisfies (A1) and (A2), and choose \(E := gKG(1)\) where \(g >0\) is as in (Iaw). Then, there exists \(g^*>0\) such that for all \(g \in (0,g^*)\), all r as in (4.5) and all \((x^0, x^0_c) \in \mathbb {R}^n_+ \times \mathbb {R}^m_+\), the solution \((x,x_c)\) of (4.7) satisfies

for some constant \(\gamma \in (0,1)\) and \(M_0,M_1,M_2>0\) and where \(x^\infty _c\) and \(x^\infty \) are as in (a) and (b) of Theorem 3.1, respectively. The constants \(\gamma , M_0,M_1\) and \(M_2\) depend on A, B, C, g and K, but not on r, \(x^0\), \(x_c^0\), \(d_1\) or \(d_2\). Furthermore, \(g^*\) is independent of the disturbances \(d_1\) and \(d_2\).

Low-gain PI control without saturation or positivity constraints is known to have the desirable property that convergent input disturbances \(d_1 = B f_1\), for some disturbance \(f_1\), are rejected by the integral controller, meaning that the output still converges to the desired set-point. Meanwhile, convergent output disturbances \(d_2\) result in asymptotic tracking of the output to the set-point offset by the limit of the disturbance. A convergent output disturbance includes constant disturbances which may, for example, correspond to a systematic or persistent measurement error. The next corollary demonstrates that, broadly speaking, the same disturbance rejection and offset in the set-point properties hold for the low-gain integral control model (Iaw) with input saturation.

Corollary 4.10

Suppose that the low-gain integral control system with disturbances (4.7) satisfies (A1) and (A2), and choose \(E := gKG(1)\) where \(g >0\) is as in (Iaw). Suppose that \(f_1\) and \(d_2\) are convergent with respective limits \(f_1^\infty \) and \(d_2^\infty \). Then, there exists \(g^*>0\) such that for all \(g \in (0,g^*)\), all \(r \in \mathbb {R}^m_+\) such that

and all \((x^0, x^0_c) \in \mathbb {R}^n_+ \times \mathbb {R}^m_+\), the solution \((x,x_c)\) of (4.7) satisfies:

-

(a)

\(\displaystyle {\lim \nolimits _{t\rightarrow \infty }}x_c(t) = G(1)^{-1}(r-d_2^\infty ) = u_+ + f_1^\infty \),

-

(b)

\(\displaystyle {\lim \nolimits _{t\rightarrow \infty }}x(t) = (I-A)^{-1}BG(1)^{-1}(r-d_2^\infty ) = (I-A)^{-1}B(u_+ + f_1^\infty )\),

-

(c)

\(\displaystyle {\lim \nolimits _{t\rightarrow \infty }}y(t) = \displaystyle {\lim \nolimits _{t\rightarrow \infty }}Cx(t)= r-d_2^\infty \).

The constant \(g^*\) is independent of \(f_1\) and \(d_2\).

4.4.3 Robustness to uncertainty in the steady-state gain G(1)

In the final part of our material on robustness with respect to various forms of uncertainty, here we consider the situation where the steady-state gain matrix G(1) is not known precisely, meaning that (U1) is violated. Knowledge of G(1) is used in low-gain integral control and PI control in three separate situations: first, in determining K to satisfy (A2) that appears in the original integral control model (I), the PI models (PI1), (PI2) and, the focus of the present study, (Iaw). Second, G(1) is used in determining E that appears in (Iaw). Both of the components K and E are required to ensure that low-gain PI control as presented is effective as described in Sect. 4.3. Recall that the choice \(K = G(1)^{-1}\) satisfies (A2) and \(E = gKG(1)\) is sufficient to ensure that the conclusions of Theorem 4.6 (and Corollaries 4.7, 4.10, Proposition 4.9) hold. Third, knowledge of G(1) is used in Lemma 4.2 to help determine the set of trackable outputs with positive state, which in turn provides feasible set-points.

Uncertainty in G(1) typically arises from uncertainty in the parameters or even the dimensions of A, B or C. Throughout this section we shall assume that the unknown transfer function G in (3.2) can be decomposed as

where \({\hat{G}}\) is known and \({\Delta } G\) is expected to be “small”. More generally, in this section variables with hats shall always denote known quantities and capital deltas denote uncertain terms.

Lemmas 4.11–4.13 below are technical preliminary results gathering sufficient conditions for the main result of the section, Corollary 4.15. This latter result states that if a known nominal estimate \({\hat{G}}\) is close to the unknown G, meaning that \(\Vert G - {\hat{G}}\Vert _\infty \) is smallFootnote 3, then basing the design of K and E on the nominal estimate \({\hat{G}}(1)\) of G(1) is sufficient for low-gain PI control to succeed.

We first demonstrate how the decomposition (4.10) arises from parametric uncertainty in A, B and C. We let \(\rho (A) = \mathbb {C}{\setminus } \sigma (A)\) denote the resolvent set of A, (when A is a matrix then \(\rho (A)\) is the set of all complex numbers that are not eigenvalues of A).

Lemma 4.11

Suppose that \((A,B,C) \in \mathbb {R}^{n \times n} \times \mathbb {R}^{n \times m} \times \mathbb {R}^{m \times n}\) for \(m,n \in \mathbb {N}\) admit the decompositions

then

which is defined for all \(z \in \mathbb {C}\cap \rho (A) \cap \rho ({\hat{A}})\) and is in the form (4.10) with \({\hat{G}} = G_{{\hat{C}} {\hat{A}} {\hat{B}}} \) and \({\Delta } G\) the sum of the remaining three terms on the right hand side of (4.11).

Lemma 4.12

Suppose that G admits the decomposition (4.10) and that \({\hat{G}}(1)\) is invertible. Choose \(K = Q \hat{G}(1)^{-1}\), where \(Q \in \mathbb {C}^{m \times m}\) is such that \(\sigma (Q) \subseteq \mathbb {C}_0^+\). Then assumption (A2) is satisfied for K and G(1) if

If \(Q =I\), the \(m \times m\) identity matrix, then K and G(1) satisfy (A2) if

A sufficient condition for (4.13) is that

Lemma 4.13

Let X denote a bounded operator on a Hilbert space (such as a square matrix with real or complex entries), with \(-1 \in \rho (X)\), so that \(I+X\) is invertible. Then the conditions

-

(a)

\( \Vert X \Vert < \tfrac{1}{2}\), or;

-

(b)

\(\Vert X \Vert \le 1\) and \(\Vert (I-X)(I+X)^{-1} \Vert \le 1\);

are sufficient for

Remark 4.14

An estimate of the form (4.15) appears as a condition on X in Corollary 4.15 below, hence the inclusion of sufficient conditions here. We comment that (a) and (b) do not imply one another as \(X = -\tfrac{1}{4}I\) satisfies (a) but not (b) and \(X = I\) satisfies (b) but not (a).

Corollary 4.15

Suppose that (A, B, C) as in (4.1) satisfy (A1) and the associated transfer function G admits the decomposition (4.10), where \({\hat{G}}\) is known and K and \({\hat{G}}\) together satisfy (A2) and choose

where \(g >0\) is as in (Iaw). Then, there exists \(M^*>0\) and \(g^*>0\) (which in general depends on \(M^*\)) such that for all \({\Delta } G\) in (4.10) with

and

all \(g \in (0,g^*)\), all \(r \in \langle G(1)\rangle _+\) as in (4.5) and all \((x^0, x^0_c) \in \mathbb {R}^n_+ \times \mathbb {R}^m_+\), the solution \((x,x_c)\) of (Iaw) satisfies \(x(t) \ge 0\) for each \(t\in \mathbb {N}_0\) and has the properties (a), ( b) and (c) of Theorem 3.1.

Remark 4.16

Corollary 4.15 can easily be extended to the PI systems (PI1aw) or (PI2aw), considered in Corollary 4.7, by replacing A by \(A_1\) and \(A_2\) as appropriate.

4.5 Low-gain PI control with input saturation for a class of infinite-dimensional systems

We have so far focussed on solving the robust set-point regulation problem with multiple management goals by applying low-gain PI control in the situation where the underlying (ecological) model is assumed finite-dimensional. Abstractly, we have developed low-gain PI control with input saturation for discrete-time positive-state linear systems. In this section we demonstrate that many of the results presented extend to a class of discrete-time, infinite-dimensional linear systems which includes the class of IPMs. IPMs were introduced by Easterling et al. (2000) (see also Ellner and Rees 2006; Rees and Ellner 2009 or Briggs et al. 2010) as a tool for population modelling where the n discrete age-, size- or stage-classes of a PPM are replaced by a continuous variable. As a concrete example, a shrub or tree population model may partition individuals according to a continuous variable denoting height or stem diameter. An IPM is a discrete-time linear system on the function space \(L^1({\varOmega })\) specified by integral operator:

for some nonnegative-valued kernel

where, for simplicity say, \({\varOmega }\) is the closure of some bounded set in \(\mathbb {R}^n\), \(n \in \mathbb {N}\). At each time-step \(t \in \mathbb {N}_0\), the state of an IPM is a function of the continuous variable \(\xi \in {\varOmega }\).

To formulate integral control in a possibly infinite-dimensional setting let \(\mathcal {X}\) denote an ordered real Banach space, so that \(\mathcal {X}\) is equipped with a partial order \(\le \) (also \(\ge \)) that respects vector space addition and multiplication by nonnegative scalars. The positive cone \(\mathcal {C}\) induced by \((\mathcal {B},\ge )\) is the set of \(x \in \mathcal {X}\) such that \(x \ge 0\) and is a closed, convex set (so that if \(x, y \in \mathcal {C}\) and \(\alpha \ge 0\) then \(x+y, \alpha x \in \mathcal {C}\)) with the property that \(x, -x \in \mathcal {C}\) implies that \(x =0\). For real Banach spaces \(\mathcal {X}_1, \mathcal {X}_2\) with respective positive cones \(\mathcal {C}_1, \mathcal {C}_2\) a bounded linear operator \(T:\mathcal {X}_1 \rightarrow \mathcal {X}_2\) is called positive if \(T \mathcal {C}_1 \subseteq \mathcal {C}_2\). In words, T is positive if every positive element of \(\mathcal {X}_1\) is mapped to a positive element of \(\mathcal {X}_2\).

Example 4.17

-

(i)

The situation considered throughout the manuscript thus far has taken \(\mathcal {X}= \mathbb {R}^n\) for \(n \in \mathbb {N}\) with partial order \(\ge \) denoting usual componentwise nonnegativity, so that \(x \in \mathbb {R}^n\), \(x \ge 0\) if \(x_k \ge 0\) for every \(k \in \{1,2,\dots ,n\}\). As such, the positive cone of \(\mathbb {R}^n\) and this partial ordering is the nonnegative orthant \(\mathcal {C}=\mathbb {R}^n_+\).

-

(ii)

To model IPMs we choose \(\mathcal {X}= L^1({\varOmega })\) with the partial ordering \(\ge \) of almost everywhere pointwise inequality, that is \(f \in L^1({\varOmega })\), \(f \ge 0\) if \(f(\xi ) \ge 0\) for almost all \(\xi \in {\varOmega }\). With this choice of partial ordering the nonnegativity assumption in (4.20) implies that A in (4.19) is a positive operator. Moreover, by Krasnosel’skij et al. (1989, Theorem 2.1), Eq. (4.20) is sufficient to infer that A in (4.19) is a bounded operator. \(\square \)

Consider the linear system (2.2) where now

are bounded, positive, linear operators and \(\mathcal {X}\) is as above. The state-space \(\mathcal {X}\) may now be infinite-dimensional but, for simplicity, the input and output spaces are still assumed to be \(\mathbb {R}^m\). Since B and C are bounded and finite-rank, then necessarily they can be written

for some \(b_i \in \mathcal {X}\) and \(c_j : \mathcal {X}\rightarrow \mathbb {R}\), linear functionals on \(\mathcal {X}\). Using the expression (4.22), B is positive if, and only if, \(b_i \in \mathcal {C}\) for every \(i \in \{1,2,\dots , m\}\). Similarly, C is positive if, and only if, \(c_j\) is positive for every \(j \in \{1,2,\dots , m\}\), here meaning that \(c_j(\mathcal {C}) \subseteq \mathbb {R}_+\).

The low-gain integral control system with input saturation is still defined by (Iaw), with design parameters \(E,K \in \mathbb {R}^{m \times m}\), \(g >0\) and \(x_c^0 \in \mathbb {R}^m\). Note that for each time-step \(t \in \mathbb {N}_0\), the integral controller state \(x_c(t) \in \mathbb {R}^m\) is still finite-dimensional and thus readily computable. The expression (3.2) for the transfer function G is well-defined when A, B and C are as in (4.21), and consequently assumptions (A1) and (A2) are as before.

To include a (P)roportional feedback in the control law as in (PI1aw) or (PI2aw) requires bounded linear operators

respectively. When \(F_1\) and \(F_2\) are bounded then so are

as the composition and difference of bounded operators.

The main result of this section demonstrates that the low-gain integral controller (Iaw) still achieves the robust set-point regulation problem in the more general case when (A, B, C) are as in (4.21). By noting that the PI system (PI1aw) with \(F_1\) or \(F_2\) as in (4.24) reduces to (Iaw) with A replaced by \(A_1\) or \(A_2\) given by (4.25), the next result includes both the state- and output-feedback cases.

Theorem 4.18

Assume that the low-gain integral control feedback system (Iaw) specified by positive operators (A, B, C) in (4.21) satisfies assumptions (A1) and (A2) with \(E = gKG(1)\) in (Iaw). Then, there exists \(g^*>0\) such that for all \(g \in (0,g^*)\), all r as in (4.5) and all \((x^0, x^0_c) \in \mathcal {C}\times \mathbb {R}^m_+\), the solution \((x,x_c)\) of (Iaw) has the properties (a), (b) and (c) of Theorem 3.1 and furthermore \(x(t) \in \mathcal {C}\) for every \(t \in \mathbb {N}_0\).

The robustness results Proposition 4.9, Corollary 4.10 and Corollary 4.15 also apply when (Iaw) is specified by positive operators (A, B, C) in (4.21).

The proofs of the above results are exactly the same as the earlier named results; none of the arguments used there required that \(\mathcal {X}\) is finite-dimensional.

Remark 4.19

The results of Sect. 4.1 on feasible nonnegative set-points translate to the situation when \(\mathcal {X}\) is a real, partially ordered Banach space. Again, none of the proofs explicitly use that \(\mathcal {X}\) is finite-dimensional. However, assumption (H) should be replaced by:

- \(({\mathrm{H}}^{\prime })\) :

-

Let \(\mathcal {X}\) denote a real, partially ordered Banach space with positive cone \(\mathcal {C}\). Given the pair of bounded, linear, positive operators \(A:\mathcal {X}\rightarrow \mathcal {X}\), \(B:\mathbb {R}^m \rightarrow \mathcal {X}\) there exists a bounded, positive operator \(F :\mathcal {X}\rightarrow \mathbb {R}^m\) such that defining \({\hat{A}} := A-BF\) it follows that \({\hat{A}}\) is positive and for any \(v \in \mathcal {C}\) and \(w \in \mathbb {R}^m\), if \({\hat{A}} v + Bw \in \mathcal {C}\) then \(w \in \mathbb {R}_+^m\).

Importantly, the constructive characterisation Guiver et al. (2014, Lemma 2.1) does not hold in the general Banach space case, however, as it is truly a finite-dimensional result.

5 Examples

Example 5.1

Matrix projection models for the sustainable harvesting of two species of palm trees in Mexico are considered in Olmsted and Alvarez-Buylla (1995). We use a matrix PPM from there of the palm species Coccothrinax readii to demonstrate how a potential harvesting and conservation strategy could be based on a low-gain PI control law. The projection matrix A is given by:

The nine stages denote seedlings, saplings I and II, juveniles I–V, and adult trees and the time-steps correspond to years. We refer the reader to Olmsted and Alvarez-Buylla (1995) for details on the phenology of C. readii.

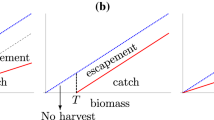

The spectral radius of A in (5.1) is \(1.0549 >1\), so that the uncontrolled population (2.1) is predicted to grow asymptotically. As with the pronghorn example in Sect. 2, we assume that we do not know the entire population distribution at each time-step exactly and again only have access to some part of the state. For simplicity, we consider the case where just two per time-step measurements are made and correspondingly have access to two stages for replenishment. We assume that the seedlings and adult tree stage classes may be restocked and harvested, respectively, leading to the B matrix:

Furthermore, we assume that we are able to measure the abundances of the final two stages; the largest juvenile trees and adult trees so that:

The set-point regulation objective is to determine a feedback \(F_2\) in (PI2aw) and reference r such that the low-gain PI control system (PI2aw) drives the population to some non-zero level, and to determine the resulting adult tree harvest. The input u(t) is given by:

where \(u_1(t)\) denotes the number of seedlings planted at time-step \(t \in \mathbb {N}_0\), and is desired to be nonnegative. Similarly, \(u_2(t)\) denotes the number of adult trees harvested at time-step \(t \in \mathbb {N}_0\), and should be negative. Indeed, we do not want to harvest seedlings or plant adult trees. Roughly speaking, the negative term \(-F_2Cx\) on the right hand side of (5.2) determines the harvesting yield and the positive term \(\mathrm{sat}\,(x_c)\) from the integral control law determines the replanting scheme.

We require \(F_2 \in \mathbb {R}^{2 \times 2}\) such that

Then, for each \(r = \begin{bmatrix}r_1&r_2 \end{bmatrix}^T = G_{C A_0 B}(1) v \in \mathbb {R}^2_+\) for \(v \in \mathbb {R}^2_+\), the following asymptotic yields are obtained

First, we construct \(F_2 \in \mathbb {R}^{2 \times 2}\) to satisfy (5.3). By considering the product \(B F_2 C\), we seek to replace the ninth row of A by zero, which necessitates

and yields

Choosing \(F_2\) as in (5.7) satisfies (5.3) from which we compute

It remains to determine r, or equivalently v. In terms of components the reference \(r = G(1)v\) is given by

Of the four quantities \(r_1, r_2\), \(v_1\) and \(v_2\), two are free to be chosen, provided that \(0 \le v_1 \le U_1\) and \(0 \le v_2 \le U_2\), and the remaining two are determined by (5.9). Rewriting (5.5) in components gives

Therefore, from (5.10) we see that \(v_1 \ge 0\) is the asymptotic replanting level, and from (5.9) that \(v_2 = r_2\) is the desired asymptotic adult tree abundance. For given \(v_1, v_2\), the expression \(u_2^\infty \le 0\) in (5.10) determines the asymptotic number of adult trees harvested per time-step. The asymptotic abundance of the penultimate stage-class is \(r_1\). These relations are summarised in Table 2.

Projections of the replanting and harvesting scheme for the matrix PPM of C. readii from Sect. 5. See the main text for more description. In each plot the solid, dashed, dashed–dotted and solid-crossed correspond to g values of 0.01, 0.005, 0.0025 and 0.001, respectively. The dotted lines are the references

For the following numerical simulation we suppose an initial population distribution with no adult trees, so that

As an example, we take

meaning that, asymptotically, per time-step 10 seedlings are planted, almost 40 adult trees are harvested with eight adult trees remaining in the population. Of course, in practice only an integer number of trees can be harvested per year and we believe that the \(39.8 = -u^\infty _2\) is an artefact of the model considered. The asymptotic harvest yield can be altered by tuning \(v_1\) and \(v_2\) as explained above. To see the role of input saturation, we take

meaning particularly that at most 50 seedlings may be planted per time-step. We repeat the projections for different g values, \(g \in \{0.01, 0.005,0.0025, 0.001 \}\) and always take