Abstract

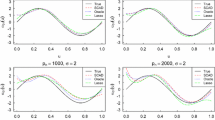

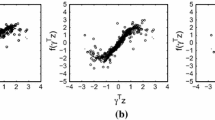

In this paper, we consider the problem of simultaneous variable selection and estimation for varying-coefficient partially linear models in a “small \(n\), large \(p\)” setting, where the number of coefficients in the linear part diverges with the sample size while the number of varying coefficients is fixed. A similar problem was considered by Lam and Fan (Ann Stat 36(5):2232–2260, 2008) based on kernel estimates for the nonparametric part; however, variable selection was not investigated there, and \(p\) was assumed to be smaller than \(n\). Here we use polynomial splines to approximate the nonparametric coefficients, which is computationally more expedient. We establish the convergence rates as well as the asymptotic normality of the linear coefficients, and further establish the oracle property of the SCAD-penalized estimator, which holds for \(p\) almost as large as \(\exp \{n^{1/2}\}\) under mild assumptions. Monte Carlo studies and a real data analysis are presented to demonstrate the finite-sample behavior of the proposed estimator. Our theoretical and empirical investigations are in fact carried out for generalized varying-coefficient partially linear models, which include both Gaussian and binary data as special cases.


References

Cai Z, Fan J, Li R (2000) Efficient estimation and inferences for varying-coefficient models. J Am Stat Assoc 95(451):941–956

Chiang CT, Rice JA, Wu C (2001) Smoothing spline estimation for varying coefficient models with repeatedly measured dependent variables. J Am Stat Assoc 96(454):605–619

Chiaretti S, Li X, Gentleman R, Vitale A, Vignetti M, Mandelli F, Ritz J, Foa R (2004) Gene expression profile of adult T-cell acute lymphocytic leukemia identifies distinct subsets of patients with different response to therapy and survival. Blood 103(7):2771–2778

De Boor C (2001) A practical guide to splines, rev edn. Springer, New York

Eubank RL, Huang C, Maldonado YM, Wang N, Wang S, Buchanan RJ (2004) Smoothing spline estimation in varying-coefficient models. J R Stat Soc Ser B Stat Methodol 66:653–667

Fan J, Li R (2001) Variable selection via nonconcave penalized likelihood and its oracle properties. J Am Stat Assoc 96(456):1348–1360

Fan J, Lv J (2011) Nonconcave penalized likelihood with NP-dimensionality. IEEE Trans Inf Theory 57:5467–5484

Fan J, Peng H (2004) Nonconcave penalized likelihood with a diverging number of parameters. Ann Stat 32(3):928–961

Fan J, Zhang W (1999) Statistical estimation in varying coefficient models. Ann Stat 27(5):1491–1518

Fan J, Zhang J (2000) Two-step estimation of functional linear models with applications to longitudinal data. J R Stat Soc Ser B Stat Methodol 62:303–322

Fan J, Feng Y, Song R (2011) Nonparametric independence screening in sparse ultra-high-dimensional additive models. J Am Stat Assoc 106:544–557

Frank I, Friedman J (1993) A statistical view of some chemometrics regression tools. Technometrics 35:109–135

Hastie T, Tibshirani R (1993) Varying-coefficient models. J R Stat Soc Ser B Methodol 55(4):757–796

Huang JZ, Wu C, Zhou L (2002) Varying-coefficient models and basis function approximations for the analysis of repeated measurements. Biometrika 89(1):111–128

Huang JZ, Wu C, Zhou L (2004) Polynomial spline estimation and inference for varying coefficient models with longitudinal data. Stat Sin 14(3):763–788

Huang J, Horowitz J, Ma S (2008) Asymptotic properties of bridge estimators in sparse high-dimensional regression models. Ann Stat 36(2):587–613

Huang J, Horowitz J, Wei F (2010) Variable selection in nonparametric additive models. Ann Stat 38(4):2282–2313

Kim Y, Choi H, Oh H (2008) Smoothly clipped absolute deviation on high dimensions. J Am Stat Assoc 103(484):1665–1673

Lam C, Fan J (2008) Profile-kernel likelihood inference with diverging number of parameters. Ann Stat 36(5):2232–2260

Li R, Liang H (2008) Variable selection in semiparametric regression modeling. Ann Stat 36(1):261–286

McCullagh P, Nelder JA (1989) Generalized linear models, 2nd edn. Chapman and Hall, London, New York

Tibshirani R (1996) Regression shrinkage and selection via the Lasso. J R Stat Soc Ser B Methodol 58(1):267–288

van de Geer SA (2000) Applications of empirical process theory. Cambridge University Press, Cambridge

Wang H, Xia Y (2009) Shrinkage estimation of the varying coefficient model. J Am Stat Assoc 104(486):747–757

Wang L, Li H, Huang JZ (2008) Variable selection in nonparametric varying-coefficient models for analysis of repeated measurements. J Am Stat Assoc 103(484):1556–1569

Wang L, Wu Y, Li R (2012) Quantile regression for analyzing heterogeneity in ultra-high dimension. J Am Stat Assoc 107(497):214–222

Wang L, Liu X, Liang H, Carroll R (2011) Estimation and variable selection for generalized additive partially linear models. Ann Stat 39:1827–1851

Wei F, Huang J, Li H (2011) Variable selection in high-dimensional varying-coefficient models. Stat Sin 21:1515–1540

Xie H, Huang J (2009) SCAD-penalized regression in high-dimensional partially linear models. Ann Stat 37(2):673–696

Yuan M, Lin Y (2007) On the non-negative garrotte estimator. J R Stat Soc Ser B Stat Methodol 69:143–161

Zhang C (2010) Nearly unbiased variable selection under minimax concave penalty. Ann Stat 38(2):894–942

Zou H (2006) The adaptive lasso and its oracle properties. J Am Stat Assoc 101(476):1418–1429

Zou H, Li R (2008) One-step sparse estimates in nonconcave penalized likelihood models. Ann Stat 36(4):1509–1533

Acknowledgments

The authors sincerely thank the two referees for their insightful comments and suggestions, which have led to improvements on the original manuscript. The research of Heng Lian is supported by a Singapore MOE Tier 1 Grant.


Appendix

Proof of Theorem 1

Let \(X_i^{(1)}=(X_{i1},\ldots ,X_{is})^T\) be the subvector of \(X_i\) associated with the nonzero coefficients, and correspondingly let \(\beta _0^{(1)}=(\beta _{01},\ldots ,\beta _{0s})^T\). Since Theorem 1 only concerns the oracle estimator, we omit the superscript \((\cdot )^{(1)}\) in what follows. Let \(a_0^*=(a_0^T,\beta _0^T)^T\) and \(\hat{a}^*=(\hat{a}^{oT},\hat{\beta }^{oT})^T\), and note that \(U_i=(Z_i^T,X_i^T)^T\). Since \(\hat{a}^*\) minimizes

with respect to \(a^*\), \(\hat{a}^*\) satisfies the first-order condition

\[\sum _{i=1}^n q_1(U_i^T\hat{a}^*,Y_i)\,U_i=0. \tag{9}\]

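Using a Taylor expansion of the left-hand side of (9) around \(U_i^Ta_0^*\), we get

\[\sum _{i=1}^n q_1(U_i^Ta_0^*,Y_i)\,U_i+\sum _{i=1}^n q_2(z_i,Y_i)\,U_iU_i^T(\hat{a}^*-a_0^*)=0, \tag{10}\]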

where \(z_i\) lies between \(U_i^Ta_0^*\) and \(U_i^T\hat{a}^*\).
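The linearization (10) is the same one that underlies a Newton–Raphson step for solving the first-order condition (9). The sketch below assumes, for illustration only, that the criterion is the negative Bernoulli log-likelihood with the logit link, so that \(q_1(x,y)=\mu (x)-y\) and \(q_2(x,y)=\mu (x)\{1-\mu (x)\}\) with \(\mu (x)=1/(1+e^{-x})\); the paper's general quasi-likelihood and its exact sign convention are not reproduced here. Unlike (10), which evaluates \(q_2\) at a mean-value point \(z_i\), the Newton iteration evaluates it at the current iterate. The toy design (intercept plus one covariate), the true coefficients, and all function names are hypothetical.

```python
import math
import random


def expit(x):
    # numerically stable logistic function mu(x) = 1 / (1 + exp(-x))
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def q1(x, y):
    # derivative of the (assumed) negative Bernoulli log-likelihood in the linear predictor x
    return expit(x) - y


def q2(x, y):
    # second derivative; positive, so each term is convex in x
    mu = expit(x)
    return mu * (1.0 - mu)


def solve(A, b):
    # Gaussian elimination with partial pivoting for a small dense system A z = b
    n = len(b)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        z[r] = (M[r][n] - sum(M[r][c] * z[c] for c in range(r + 1, n))) / M[r][r]
    return z


def linpred(u, a):
    return sum(uj * aj for uj, aj in zip(u, a))


def score(U, Y, a):
    # sum_i q1(U_i^T a, Y_i) U_i: the left-hand side of the first-order condition
    g = [0.0] * len(a)
    for u, y in zip(U, Y):
        w = q1(linpred(u, a), y)
        for j in range(len(a)):
            g[j] += w * u[j]
    return g


def hessian(U, Y, a):
    # sum_i q2(U_i^T a, Y_i) U_i U_i^T
    k = len(a)
    H = [[0.0] * k for _ in range(k)]
    for u, y in zip(U, Y):
        w = q2(linpred(u, a), y)
        for j in range(k):
            for l in range(k):
                H[j][l] += w * u[j] * u[l]
    return H


def newton(U, Y, iters=25):
    # each step solves the linearized equation score(a) + H(a) (a_new - a) = 0
    a = [0.0] * len(U[0])
    for _ in range(iters):
        step = solve(hessian(U, Y, a), score(U, Y, a))
        a = [aj - sj for aj, sj in zip(a, step)]
    return a


# hypothetical toy data: intercept plus one standard-normal covariate
random.seed(0)
a_true = [-0.5, 1.0]
U = [[1.0, random.gauss(0.0, 1.0)] for _ in range(500)]
Y = [1.0 if random.random() < expit(linpred(u, a_true)) else 0.0 for u in U]
a_hat = newton(U, Y)
```

At convergence the score (the left-hand side of (9)) is numerically zero, and the estimate is close to the hypothetical truth for this sample size.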

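First, note that the eigenvalues of \(\sum _i q_2(z_i,Y_i)U_iU_i^T\) are of order \(n\). Furthermore, we will show that

\[\Big \Vert \sum _{i=1}^n q_1(U_i^Ta_0^*,Y_i)\,U_i\Big \Vert =O_P\big (\sqrt{n(K+s)}+n/K^{d}\big ), \tag{11}\]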

and thus (10) implies \(\Vert \hat{a}^*-a_0^*\Vert =O_P(\sqrt{(K+s)/n}+1/K^{d})\), which in turn immediately implies \(\sum _j\Vert \hat{\alpha }_j-a_{0j}^TB\Vert +\Vert \hat{\beta }^o-\beta _0\Vert =O_P(\sqrt{(K+s)/n}+1/K^d)\).
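To see what the rate \(O_P(\sqrt{(K+s)/n}+1/K^{d})\) amounts to, one can balance its two terms in the choice of \(K\). Assuming, for illustration only, that \(s=O(K)\), so that \(\sqrt{(K+s)/n}\) is of order \(\sqrt{K/n}\), the following standard calculation (not a result stated in the paper) gives the familiar nonparametric rate for smoothness \(d\):

```latex
\sqrt{K/n} \asymp K^{-d}
\iff K^{d+1/2} \asymp n^{1/2}
\iff K \asymp n^{1/(2d+1)},
\qquad \text{which gives} \qquad
\Vert \hat{a}^* - a_0^* \Vert = O_P\big(n^{-d/(2d+1)}\big).
```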

It remains to establish (11). Using the notation \(U=(U_1,\ldots ,U_n)^T\in R^{n\times (qK+s)}\) and \(\mathbf q _1(U^Ta^*,Y)=(q_1(U_1^Ta^*,Y_1),\ldots ,q_1(U_n^Ta^*,Y_n))^T\), the quantity \(\Vert \sum _iq_1(U_i^Ta_0^*,Y_i)U_i\Vert \) can be written as \(\Vert \mathbf q _1(U^Ta_0^*,Y)^TU\Vert \). For an arbitrary \(v\in R^{qK+s}\), since \(Uv=P_UUv\), the Cauchy–Schwarz inequality gives \(|\mathbf q _1(U^Ta_0^*,Y)^TUv|^2\le \Vert P_U\mathbf q _1(U^Ta_0^*,Y)\Vert ^2\cdot \Vert Uv\Vert ^2\), where \(P_U=U(U^TU)^{-1}U^T\) is a projection matrix.

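Clearly, \(\Vert Uv\Vert ^2=O_P(n\Vert v\Vert ^2)\). Moreover, we have

\[\Vert P_U\mathbf q _1(U^Ta_0^*,Y)\Vert ^2\le 2\Vert P_U\mathbf q _1(\mathbf m ,Y)\Vert ^2+2\Vert P_U\{\mathbf q _1(U^Ta_0^*,Y)-\mathbf q _1(\mathbf m ,Y)\}\Vert ^2,\]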

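where \(\mathbf m =(m_1,\ldots ,m_n)^T\) with \(m_i=W_i^T\alpha _0(T_i)+X_i^T\beta _0\). The first term is of order \(O_P(\mathrm{tr}(P_U))=O_P(K+s)\), since \(\mathbf q _1(\mathbf m ,Y)\) has mean zero conditional on the predictors. Using a Taylor expansion and (C2), the second term is bounded by \(O_P(n/K^{2d})\). Combining these bounds with \(\Vert Uv\Vert ^2=O_P(n\Vert v\Vert ^2)\) gives \(|\mathbf q _1(U^Ta_0^*,Y)^TUv|=O_P(\sqrt{n(K+s)}+n/K^{d})\Vert v\Vert \) uniformly in \(v\), which yields (11).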
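A small numerical check of the two ingredients used above may help: the Cauchy–Schwarz step \(|\mathbf q^TUv|^2\le \Vert P_U\mathbf q\Vert ^2\Vert Uv\Vert ^2\), and the identity \(E\Vert P_U\mathbf q\Vert ^2=\mathrm{tr}(P_U)\), equal to the number of columns of \(U\), for a mean-zero noise vector with identity covariance. The sketch uses a random Gaussian design and i.i.d. standard normal noise as stand-ins for \(U\) and \(\mathbf q _1(\mathbf m ,Y)\); these choices, the sizes `n` and `k`, and the helper names are hypothetical. With bounded but unequal conditional variances \(\sigma _i^2\), the expectation is at most \(\max _i\sigma _i^2\,\mathrm{tr}(P_U)\), which is what the \(O_P(K+s)\) bound needs.

```python
import random


def solve(A, b):
    # Gaussian elimination with partial pivoting for a small dense system A z = b
    n = len(b)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        z[r] = (M[r][n] - sum(M[r][c] * z[c] for c in range(r + 1, n))) / M[r][r]
    return z


def project(U, q, G):
    # P_U q = U (U^T U)^{-1} U^T q, with G = U^T U precomputed
    n, k = len(U), len(U[0])
    Utq = [sum(U[i][j] * q[i] for i in range(n)) for j in range(k)]
    c = solve(G, Utq)
    return [sum(U[i][j] * c[j] for j in range(k)) for i in range(n)]


random.seed(1)
n, k = 50, 4
U = [[random.gauss(0.0, 1.0) for _ in range(k)] for _ in range(n)]
G = [[sum(U[i][a] * U[i][b] for i in range(n)) for b in range(k)] for a in range(k)]

# Cauchy-Schwarz step: q^T U v = (P_U q)^T (U v) because U v lies in the column space of U
q = [random.gauss(0.0, 1.0) for _ in range(n)]
v = [random.gauss(0.0, 1.0) for _ in range(k)]
Uv = [sum(U[i][j] * v[j] for j in range(k)) for i in range(n)]
Pq = project(U, q, G)
lhs = sum(qi * ui for qi, ui in zip(q, Uv)) ** 2
rhs = sum(x * x for x in Pq) * sum(x * x for x in Uv)

# Monte Carlo estimate of E||P_U eps||^2 for eps ~ N(0, I_n); the exact value is tr(P_U) = k
reps = 2000
mc = sum(
    sum(x * x for x in project(U, [random.gauss(0.0, 1.0) for _ in range(n)], G))
    for _ in range(reps)
) / reps
```

The Monte Carlo average should sit near `k`, independently of `n`, which mirrors why the first term is \(O_P(K+s)\) rather than \(O_P(n)\).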

\(\square \)

Proof of Theorem 2

As in the previous proof, we again omit the superscript \((1)\). Let \(\tilde{\mathcal{G }}\) be the subset of \(\mathcal G \) in which the \(h_j\)’s are constrained to be polynomial splines. Each \(\Gamma _{j}\in \mathcal G \) can be approximated by some \(\hat{\Gamma }_{j}\in \tilde{\mathcal{G }}\) with \(\Vert \hat{\Gamma }_{j}-\Gamma _{j}\Vert _\infty =O(K^{-d})\). Let \(\hat{\Gamma }=(\hat{\Gamma }_1,\ldots ,\hat{\Gamma }_s)^T\). Consider the following functional

where \(\hat{m}_i=Z_i^T\hat{a}^o+X_i^T\hat{\beta }^o\) and \(\nu =(\nu _1,\ldots ,\nu _s)^T\). Clearly, the above functional is minimized at \(\nu =0\), which leads to the first-order condition

Since

where \(q_2(.,Y_i)\) is evaluated at some point between \(\hat{m}_i\) and \(m_i\), we can replace \(\hat{\Gamma }\) in (12) by \(\Gamma \) to get

Now we have

Using Theorem 1, we have \(\sum _iq_2(m_{i},Y_i)(Z_i^T\hat{a}^o-W_i^T\alpha (T_i))(X_i-\Gamma (W_i,T_i))=o_P(\sqrt{n})\) and \(\sum _iq_2^{\prime }(.,Y_i)(\hat{m}_i-m_{i})^2(X_i-\Gamma (W_i,T_i))=o_P(\sqrt{n})\). Also, it is easy to see that

by the central limit theorem, and that

and asymptotic normality of \(\hat{\beta }\) follows. \(\square \)
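The approximation step at the start of the proof of Theorem 2 uses \(\Vert \hat{\Gamma }_{j}-\Gamma _{j}\Vert _\infty =O(K^{-d})\) for spline approximations. As a hedged illustration of such a rate (not of the paper's specific function class), the sketch below interpolates the arbitrarily chosen smooth function \(\sin (2\pi x)\) by a piecewise-linear spline on \(K\) equal subintervals of \([0,1]\). For a degree-1 spline the classical bound is \(h^2\max |f''|/8\) with \(h=1/K\), i.e. \(d=2\), so doubling \(K\) should cut the sup error by roughly a factor of 4; higher-degree splines attain larger \(d\) for smoother functions. The helper names are hypothetical.

```python
import math


def linear_spline(f, K):
    """Piecewise-linear (degree-1) spline interpolating f at the knots 0, 1/K, ..., 1."""
    vals = [f(j / K) for j in range(K + 1)]

    def s(x):
        j = min(int(x * K), K - 1)  # subinterval [j/K, (j+1)/K] containing x
        t = x * K - j               # local coordinate in [0, 1]
        return (1.0 - t) * vals[j] + t * vals[j + 1]

    return s


def sup_error(f, K, grid=20000):
    # sup-norm error, approximated on a fine uniform grid of [0, 1]
    s = linear_spline(f, K)
    return max(abs(f(i / grid) - s(i / grid)) for i in range(grid + 1))


def f(x):
    # arbitrary smooth test function; max |f''| = 4 pi^2
    return math.sin(2.0 * math.pi * x)


errors = {K: sup_error(f, K) for K in (5, 10, 20, 40)}
ratios = [errors[K] / errors[2 * K] for K in (5, 10, 20)]
```

The ratios in `ratios` should be close to \(2^d=4\), and each error should respect the bound \(\pi ^2/(2K^2)\).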

The proof of Theorem 3 relies on the following proposition, which is a direct extension of Theorem 1 in Fan and Lv (2011) to the quasi-likelihood case (specialized to the SCAD penalty). A similar second-order sufficient condition was also used by Kim et al. (2008) for linear models (see the proof of their Theorem 1). We therefore omit the proof of the proposition.

Proposition 1

\((a^T,\beta ^T)\in R^{qK+p}\) is a local minimizer of the SCAD-penalized quasi-likelihood (3) if

where \(Z_{ij}=(W_{ij}B_{1}(T_{i}),\ldots ,W_{ij}B_{K}(T_i))^T\in R^K\).
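The vectors \(Z_{ij}\) are built from a B-spline basis \(B_1,\ldots ,B_K\) evaluated at \(T_i\). One way to compute such a basis is the Cox–de Boor recursion (see De Boor 2001). The sketch below assumes a clamped cubic knot vector on \([0,1]\) with three interior knots, an illustrative choice, and stacks \(Z_i=(Z_{i1}^T,\ldots ,Z_{iq}^T)^T\in R^{qK}\), an ordering we infer from the dimension \(qK+p\) rather than one stated here. The function names and the example \(W_i\), \(T_i\) are hypothetical.

```python
def bspline_basis(t, knots, degree):
    """Values of all B-spline basis functions of the given degree at t (Cox-de Boor recursion)."""
    m = len(knots)
    # degree-0 indicators on half-open spans [k_i, k_{i+1}); the right endpoint of the
    # domain is assigned to the last nonempty span so that the basis sums to one there too
    last = max(i for i in range(m - 1) if knots[i] < knots[i + 1])
    B = [
        1.0 if (knots[i] <= t < knots[i + 1]) or (t == knots[-1] and i == last) else 0.0
        for i in range(m - 1)
    ]
    for p in range(1, degree + 1):
        nxt = []
        for i in range(m - p - 1):
            val = 0.0
            d1 = knots[i + p] - knots[i]
            if d1 > 0:  # convention 0/0 = 0 for repeated knots
                val += (t - knots[i]) / d1 * B[i]
            d2 = knots[i + p + 1] - knots[i + 1]
            if d2 > 0:
                val += (knots[i + p + 1] - t) / d2 * B[i + 1]
            nxt.append(val)
        B = nxt
    return B  # length m - degree - 1, i.e. K


def design_row(W_i, T_i, knots, degree):
    """Stack Z_ij = W_ij * (B_1(T_i), ..., B_K(T_i)) over j = 1, ..., q."""
    basis = bspline_basis(T_i, knots, degree)
    return [w * b for w in W_i for b in basis]


# clamped cubic knots on [0, 1] with interior knots 0.25, 0.5, 0.75, giving K = 7
knots = [0.0] * 4 + [0.25, 0.5, 0.75] + [1.0] * 4
K = len(knots) - 3 - 1
Z_i = design_row([1.0, 2.5], 0.3, knots, 3)  # hypothetical W_i with q = 2 and T_i = 0.3
```

Because clamped B-splines form a partition of unity on \([0,1]\), each block of `Z_i` sums to the corresponding entry of \(W_i\), so an intercept entry \(W_{i1}=1\) is reproduced exactly by the spline part.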

Proof of Theorem 3

We will show that \((\hat{a}^T,\hat{\beta }^T)=(\hat{a}^o, \hat{\beta }^{(1)}=\hat{\beta }^o,\hat{\beta }^{(2)}=0)\) satisfies (14)–(16), which immediately implies all the results stated in Theorem 3.

Denote \(\hat{a}^*=(\hat{a}^o, \hat{\beta }^{(1)})\) and \(a_0^*=(a_0,\beta _0^{(1)})\). By the definition of \(\hat{a}^o\) and \(\hat{\beta }^o\), it holds trivially that \(\sum _iq_1(Z_i^T\hat{a}^o+X_i^T\hat{\beta }^o,Y_i)Z_{ij}=0\), \(j=1,\ldots ,q\), and \(\sum _iq_1(Z_i^T\hat{a}^o+X_i^T\hat{\beta }^o,Y_i)X_{ij}=0\), \(j=1,\ldots ,s\). Furthermore, note that \(|\hat{\beta }_j|\ge a\lambda \), \(j=1,\ldots ,s\), is implied by

and both displays above follow from (C7) together with Theorem 1.
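The role of the threshold \(a\lambda \) can be seen from the SCAD penalty of Fan and Li (2001): its derivative equals \(\lambda \) for \(|\theta |\le \lambda \) and vanishes for \(|\theta |\ge a\lambda \). On our reading, this is why the stationarity equations for \(j\le s\) reduce to the unpenalized ones once \(|\hat{\beta }_j|\ge a\lambda \). The sketch below codes the standard SCAD penalty and its derivative with the conventional default \(a=3.7\) suggested by Fan and Li; the paper's own choice of \(a\) is not shown here, and the function names are hypothetical.

```python
def scad_penalty(theta, lam, a=3.7):
    """SCAD penalty p_lam(|theta|) of Fan and Li (2001); requires a > 2."""
    t = abs(theta)
    if t <= lam:
        return lam * t
    if t <= a * lam:
        return -(t * t - 2.0 * a * lam * t + lam * lam) / (2.0 * (a - 1.0))
    return (a + 1.0) * lam * lam / 2.0  # constant: no further shrinkage of large coefficients


def scad_derivative(theta, lam, a=3.7):
    """p'_lam(|theta|): lam on [0, lam], linear decay on (lam, a*lam], zero beyond a*lam."""
    t = abs(theta)
    if t <= lam:
        return lam
    return max(a * lam - t, 0.0) / (a - 1.0)
```

For example, with \(\lambda =1\) the derivative is 1 at \(\theta =0.5\), \(1.7/2.7\) at \(\theta =2\), and 0 at \(\theta =3.7\) or beyond, while the penalty is continuous at both \(\lambda \) and \(a\lambda \).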

For \(j=s+1,\ldots ,p\), \(|\hat{\beta }_j|< \lambda \) is trivial since \(\hat{\beta }_j=0\). Furthermore, we have

where in the last step above we used (10).

Denote \(e=(1,\ldots ,1)^T\), \(\delta _j=(X_{1j}q_1(U_1^Ta_0^*,Y_1),\ldots ,X_{nj}q_1(U_n^Ta_0^*,Y_n))^T\), and \(P=(p_{ii^{\prime }})_{n\times n}\) with \(p_{ii^{\prime }}=q_2(z_i,Y_i)U_i^T(\sum _{l}q_2(z_{l},Y_{l})U_{l}U_{l}^T)^{-1}U_{i^{\prime }}\). By a Taylor expansion, we can write \(\delta _j=\epsilon _j+\gamma _j\) with \(\epsilon _j=(X_{1j}q_1(m_{1},Y_1),\ldots ,X_{nj}q_1(m_{n},Y_n))^T\) and \(\gamma _j=(X_{1j}q_2(.,Y_1)(U_1^Ta_0^*-m_{1}),\ldots ,X_{nj}q_2(.,Y_n)(U_n^Ta_0^*-m_{n}))^T\), where \(m_{i}=W_i^T\alpha _0(T_i)+X_i^T\beta _0\) as in the proof of Theorem 1, and \(q_2(.,Y_i)\) is evaluated at some point between \(U_i^Ta_0^*\) and \(m_{i}\).
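The matrix \(P\) has the form \(P=DU(U^TDU)^{-1}U^T\), with \(D=\mathrm{diag}\{q_2(z_1,Y_1),\ldots ,q_2(z_n,Y_n)\}\) and \(U=(U_1,\ldots ,U_n)^T\). Hence \(P^2=P\), so its eigenvalues are 0 or 1, and \(\mathrm{tr}(P)\) equals the number of columns of \(U\); in general \(P\) is an oblique rather than an orthogonal projection, since it need not be symmetric. The sketch below checks these facts numerically; the random design, the positive weights standing in for \(q_2\), and the sizes are hypothetical.

```python
import random


def solve(A, b):
    # Gaussian elimination with partial pivoting for a small dense system A z = b
    n = len(b)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        z[r] = (M[r][n] - sum(M[r][c] * z[c] for c in range(r + 1, n))) / M[r][r]
    return z


random.seed(2)
n, k = 8, 3
U = [[random.gauss(0.0, 1.0) for _ in range(k)] for _ in range(n)]
# positive weights standing in for q_2(z_i, Y_i); for a logit link these lie in (0, 1/4]
w = [random.uniform(0.1, 0.25) for _ in range(n)]

# M = sum_i w_i U_i U_i^T = U^T D U, and its inverse column by column
M = [[sum(w[i] * U[i][a] * U[i][b] for i in range(n)) for b in range(k)] for a in range(k)]
cols = [solve(M, [1.0 if r == c else 0.0 for r in range(k)]) for c in range(k)]
Minv = [[cols[c][r] for c in range(k)] for r in range(k)]  # column c of M^{-1} is cols[c]

# p_{ii'} = w_i U_i^T M^{-1} U_{i'}, i.e. P = D U (U^T D U)^{-1} U^T
P = [
    [w[i] * sum(U[i][a] * Minv[a][b] * U[j][b] for a in range(k) for b in range(k)) for j in range(n)]
    for i in range(n)
]
P2 = [[sum(P[i][l] * P[l][j] for l in range(n)) for j in range(n)] for i in range(n)]
idem_err = max(abs(P2[i][j] - P[i][j]) for i in range(n) for j in range(n))  # ~0: P is idempotent
trace = sum(P[i][i] for i in range(n))                                       # equals k
asym = max(abs(P[i][j] - P[j][i]) for i in range(n) for j in range(n))       # > 0 when weights differ
```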

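Using this notation, (17) can be written as \(e^T(I-P)\delta _j=e^T(I-P)\epsilon _j+e^T(I-P)\gamma _j\). In Lemma 1 below we show that

\[\max _{j\ge s+1}|e^T(I-P)\epsilon _j|=O_P\big (\sqrt{n}\log (p\vee n)\big ) \tag{18}\]

and

\[\max _{j\ge s+1}|e^T(I-P)\gamma _j|=O_P(nK^{-d}). \tag{19}\]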

Thus, by (C5), \(\max _{j\ge s+1}|\sum _iq_1(U_i^T\hat{a}^*,Y_i)X_{ij}|=o_P(n\lambda )\), which completes the proof. \(\square \)

Lemma 1

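Here we show that

\[\max _{j\ge s+1}|e^T(I-P)\epsilon _j|=O_P\big (\sqrt{n}\log (p\vee n)\big )\]

and

\[\max _{j\ge s+1}|e^T(I-P)\gamma _j|=O_P(nK^{-d}).\]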

Proof of Lemma 1

First, since \(P\) is idempotent, each of its eigenvalues is either 0 or 1; thus all eigenvalues of \(P\) are bounded by \(1\), and \(\Vert e^T(I-P)\Vert \le \sqrt{n}\). Write the row vector \(e^T(I-P)\) as \(b^T\) with \(b=(b_1,\ldots ,b_n)^T\); then \(e^T(I-P)\epsilon _j=\sum _ib_i\epsilon _{ij}\) with \(\epsilon _{ij}=X_{ij}q_1(m_{i},Y_i)\). By assumption (C6), we have

and thus

using \(\Vert b\Vert ^2\le n\). Thus, by Theorem 8.9 (Bernstein’s inequality) in van de Geer (2000) together with a simple union bound, we get

Thus, if \(c=C\sqrt{n}\log (p\vee n)\) for a sufficiently large \(C>0\), the above probability converges to zero, which proves (18).
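To see why a factor \(\log (p\vee n)\) in \(c\) can suffice even when \(\log p\) is as large as \(n^{1/2}\), the sketch below evaluates a union bound built on one common form of Bernstein's inequality, \(P(|S_j|\ge c)\le 2\exp \{-c^2/(2(v+Lc))\}\), where \(v\) is a variance proxy and \(L\) a scale constant. These constants are assumptions for illustration and are not the exact ones in Theorem 8.9 of van de Geer (2000): we take \(v=n\) (from \(\Vert b\Vert ^2\le n\) with unit variances), the conservative scale \(L=\sqrt{n}\) (from \(|b_i|\le \Vert b\Vert \le \sqrt{n}\)), \(\log p=\sqrt{n}\), and \(C=4\). The function name is hypothetical.

```python
import math


def log_union_bound(n, logp, C, sigma2=1.0, scale=None):
    """log of 2 p exp(-c^2 / (2 (v + L c))) with c = C sqrt(n) log(max(p, n)).

    Assumed Bernstein form: P(|S_j| >= c) <= 2 exp(-c^2 / (2 (v + L c))) for each j,
    with variance proxy v = sigma2 * n and scale L (default sqrt(n)); the union over
    at most p coordinates contributes the factor p.
    """
    L = math.sqrt(n) if scale is None else scale
    ell = max(logp, math.log(n))  # log(max(p, n))
    c = C * math.sqrt(n) * ell
    v = sigma2 * n
    return math.log(2.0) + logp - c * c / (2.0 * (v + L * c))


ns = [100, 400, 1600, 6400]
bounds = [min(1.0, math.exp(log_union_bound(n, math.sqrt(n), C=4.0))) for n in ns]
```

Under these assumptions the exponent grows like \((C/2)\log p\) while the union bound costs only \(\log p\), so any \(C>2\) drives the probability to zero as \(n\) and \(p\) grow together.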

For the proof of (19), we only need to note that \(|e^T(I-P)\gamma _j|\le \Vert b\Vert \cdot \Vert \gamma _j\Vert =O_P(\sqrt{n}\cdot \sqrt{n}K^{-d})\) by (C2).

Cite this article

Hong, Z., Hu, Y. & Lian, H. Variable selection for high-dimensional varying coefficient partially linear models via nonconcave penalty. Metrika 76, 887–908 (2013). https://doi.org/10.1007/s00184-012-0422-8

DOI: https://doi.org/10.1007/s00184-012-0422-8