Abstract

We define a distribution on the unit sphere \(\mathbb {S}^{d-1}\) called the elliptically symmetric angular Gaussian distribution. This distribution, which to our knowledge has not been studied before, is a subfamily of the angular Gaussian distribution closely analogous to the Kent subfamily of the general Fisher–Bingham distribution. Like the Kent distribution, it has ellipse-like contours, enabling modelling of rotational asymmetry about the mean direction, but it has the additional advantages of being simple and fast to simulate from, and having a density and hence likelihood that is easy and very quick to compute exactly. These advantages are especially beneficial for computationally intensive statistical methods, one example of which is a parametric bootstrap procedure for inference for the directional mean that we describe.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

A natural way to define a distribution on the unit sphere \(\mathbb {S}^{d-1}\) is to embed \(\mathbb {S}^{d-1}\) in \(\mathbb {R}^d\), specify a distribution for a random variable \(z \in \mathbb {R}^d\), then consider the distribution of z either conditioned to lie on, or projected onto, \(\mathbb {S}^{d-1}\). The general Fisher–Bingham and angular Gaussian distributions, defined respectively in Mardia (1975) and Mardia and Jupp (2000) can both be constructed this way by taking z to be multivariate Gaussian in \(\mathbb {R}^d\). Then the Fisher–Bingham distribution is the conditional distribution of z conditioned on \(\Vert z\Vert = 1\), and the angular Gaussian is the distribution of the projection \(z/ \Vert z\Vert \). The choice of the mean, \({\mu }\), and covariance matrix, \(V\), of z controls the concentration and the shape of the contours of the induced probability density on \(\mathbb {S}^{d-1}\).

It is usually not practical to work with the general Fisher–Bingham or angular Gaussian distributions, however, because they have too many free parameters to be identified well by data. This motivates working instead with subfamilies that have fewer free parameters and stronger symmetries.

In the spherical case, \(d=3\), the general distributions have 8 free parameters. Respective subfamilies with 3 free parameters are the Fisher and the isotropic angular Gaussian (IAG) distributions. Both are “isotropic” in the sense that they are rotationally symmetric about the mean direction, i.e., contours on the sphere are small circles centred on the mean direction. Respective subfamilies with 5 free parameters are the Bingham and the central angular Gaussian distributions, both of which are antipodally symmetric.

An important member of this Fisher–Bingham family is the Kent distribution (Kent, 1982). For \(d=3\), it has 5 free parameters, and it has ellipse-like contours on the sphere. This offers a level of complexity well suited to many applications, since the distribution is flexible enough to model anisotropic data yet its parameters can usually be estimated well from data. To our knowledge, nobody to date has considered its analogue in the angular Gaussian family. The purpose of this paper is to introduce such an analogue, which we call the elliptically symmetric angular Gaussian (ESAG), and establish some of its basic properties.

The motivation for doing so is that in some ways the angular Gaussian family (and hence ESAG) is much easier to work with than the Fisher–Bingham family (and hence the Kent distribution). In particular, simulation is easy and fast, not requiring rejection methods (which are needed for the Fisher–Bingham family Kent et al. 2013), and the density is free of awkward normalising constants, so the likelihood can be computed quickly and exactly. Hence in many modern statistical settings the angular Gaussian family is the more natural choice; see for example Presnell et al. (1998) who use it in a frequentist approach for circular data, and Wang and Gelfand (2013) and Hernandez-Stumpfhauser et al. (2017) who use it in Bayesian approaches for circular and spherical data, respectively.

In the following section, we introduce ESAG, first for general d before specialising to the case \(d=3\).

2 The elliptically symmetric angular Gaussian distribution (ESAG)

2.1 The general angular Gaussian distribution

The angular Gaussian distribution is the marginal distribution of the directional component of the multivariate normal distribution. Let

denote the multivariate normal density in \(\mathbb {R}^d\) with \(d \times 1\) mean vector \(\mu \), \(d \times d\) covariance matrix V, assumed non-singular, and where |V| denotes the determinant of V. Then, writing \(z=ry\), where \(r=\Vert z\Vert =(z^\top z)^{1/2}\) and \(y=z/\Vert z\Vert \in \mathcal {S}^{d-1}\), and using \(\mathrm {d}z=r^{d-1} \mathrm {d} r\, \mathrm {d} y\), where \(\mathrm {d} y\) denotes Lebesgue, or geometric, measure on the unit sphere \(\mathcal {S}^{d-1}\), and integrating r over \(r>0\), leads to

where \(C_d=1/(2\pi )^{(d-1)/2}\) and, for real \(\alpha \),

Direct calculations show that

where \(\phi (\cdot )\) and \(\Phi (\cdot )\) are the standard normal probability density function and cumulative density function, respectively; more details about the \(\mathcal {M}_d(\alpha )\) are given in Sect. A.1.

2.2 An elliptically symmetric subfamily

The subfamily of (2) that we shall call the elliptically symmetric angular Gaussian distribution (ESAG) is defined by the two conditions

under which the angular Gaussian density (2) simplifies to

From (5), the positive definite matrix V has a unit eigenvalue. If the other eigenvalues are

then the inverse of V can be written

where \(\xi _1\), ..., \(\xi _{d-1}\) and \(\xi _d=\mu /\Vert {\mu }\Vert \) is a set of mutually orthogonal unit vectors. Moreover, constraint (6) means that

Once the d parameters in \({\mu }\) are fixed, then from (5) and (6) there are \(d-2\) remaining degrees of freedom for the eigenvalues of V, and \(d(d-1)/2 - (d-1)\) degrees of freedom for its unit eigenvectors. The total number of free parameters is thus \((d-1)(d+2)/2\), the same as for the multivariate normal in a tangent space \(\mathbb {R}^{d-1}\) to the sphere.

Condition (5) imposes symmetry about the eigenvectors of V. Without loss of generality, suppose that the eigenvectors are parallel to the coordinates axes; that is, each element of the vector \(\xi _j\) equals 0 except the jth which equals 1. Then if \(y=(y_1, \ldots , y_d)^\top \),

In this case, the density (7) depends only on \(y_j\) through \(y_j^2\) for \(j=1, \ldots , d-1\). Consequently, the density is invariant with respect to sign changes of the \(y_1,\dots ,y_{d-1}\), that is,

which implies reflective symmetry about 0 along the axes defined by \(\xi _1\), ..., \(\xi _{d-1}\). This type of symmetry is implied by ellipse-like contours of constant density inscribed on the sphere, and such contours arise when the density (7) is unimodal. Whether the density is unimodal depends on the nature of the stationary point at \(y=\mu /\Vert \mu \Vert \), which is characterised by the following proposition.

Proposition 1

Write \(\alpha =\Vert \mu \Vert \) and assume without loss of generality that (8) holds. Then (i) the ESAG always has a stationary point at \(y=\xi _d=\mu /\alpha \), and (ii) the stationary point at \(y=\xi _d\) is a local maximum if \(\rho _{d-1}\le \mathcal {H}_d(\alpha )\) and a local minimum if \(\rho _{d-1} > \mathcal {H}_d (\alpha )\), where

The proof of Proposition 1 is given in Appendix A. 2.

We conjecture that if the stationary point at \(y=\xi _d\) is a local maximum, then it is also a global maximum, and that in this case the distribution is unimodal; and in the case that the stationary point is a local minimum, then the distribution is bimodal. A rigorous proof appears difficult, but the conjecture is strongly supported by some extensive numerical investigations that we have performed and describe as follows. For each of a wide variety of combinations of ESAG parameters with \(d=3\) — in particular, choosing for \((\alpha , \gamma _1, \gamma _2)^\top \) values on a \(9 \times 9 \times 9\) rectangular lattice on \([0.2,20] \times [-5,5] \times [-5,5]\) — we performed numerical maximisation to find

Using the Manopt implementation (Boumal et al., 2014) of the trust region approach of Absil et al. (2007), for each \((\alpha , \gamma _1, \gamma _2)^\top \), we performed the optimisation multiple times from distinct starting points with the rationale that if the distribution is indeed multimodal, then optimisations from different starting points will converge to different local optima. We chose to use 42 different starting points since this enabled the points to be exactly equi-spaced on \(\mathbb {S}^2\) using the method of Teanby (2006). For each \((\alpha , \gamma _1, \gamma _2)^\top \), we hence computed \(y^\text {max}_1,\dots ,y^\text {max}_{42}\). Instances that converged to the same mode had values of \(y^\text {max}\) that were not quite identical, owing to the finite tolerance of the numerical optimisation. To account for this, we identified the number of modes according to clustering of the \(\{y_i^\text {max}\}\), designating the distribution unimodal if and only if \(\Vert y_i^\text {max} - \bar{y}^\text {max} \Vert < 10^{-6}\) for all i, where \(\bar{y}^\text {max} = (1/42) \sum _i y_i^\text {max}\). In cases identified as non-unimodal by this criterion, we used k-means clustering to identify \(k=2\) clusters; in each such case, every \(y_i^\text {max}\) was within a distance \(10^{-6}\) of its cluster centre indicating bimodality. In agreement with the conjecture, amongst the \(9^3 = 729\) parameter cases we considered, in every 553 cases with \(\rho _{d-1}\le \mathcal {H}_d(\alpha )\), the foregoing procedure identified the distribution to be unimodal, and in every 176 cases with \(\rho _{d-1} > \mathcal {H}_d(\alpha )\), it identified the distribution to be bimodal.

The next proposition concerns the limiting distribution of a sequence of unimodal ESAG distributions as the sequence becomes more highly concentrated. Without loss of generality, we fix \(\xi _d=(0,\ldots ,0,1)^\top \), take \(\xi _1, \ldots , \xi _{d-1}\) to be the other coordinate axes and define

Neglecting the hemisphere defined by \(y_d\) negative is no drawback when considering \(\alpha =\Vert \mu \Vert \rightarrow \infty \) as follows, because in this limit the distribution becomes increasingly concentrated about \(y=\xi _d\).

Proposition 2

Assume the conditions (5) and (6), and therefore (10), hold, where the \(\rho _j\) are assumed to be fixed, and suppose that \(\alpha =\Vert \mu \Vert \rightarrow \infty \). Then

where \(\tilde{y} = (y_1,\dots ,y_{d-1})^T\) and \(\Omega =\text {diag}\{\rho _1^{-1}, \ldots , \rho _{d-1}^{-1}\} = \sum _{j=1}^{d-1} \rho _j^{-1} \xi _j \xi _j^T\).

Remark 1

In the general case, we replace the coordinate vectors \(\xi _1, \ldots , \xi _d\) by an arbitrary orthonormal basis, and then the limit distribution lies in the vector subspace spanned by \(\xi _1, \ldots , \xi _{d-1}\).

Remark 2

Proposition 2 is noteworthy because it is atypical for high-concentration limits within the angular Gaussian family to be Gaussian.

2.3 A parameterisation of ESAG for \(d=3\)

An important practical question is how to specify a convenient parameterisation for the matrix V so that it satisfies the constraints (5) and (6). With \(d=3\), such a V has two free parameters.

We first define a pair of unit vectors \(\tilde{\xi }_1\) and \(\tilde{\xi }_2\) which are orthogonal to each other and to the mean direction \({\xi }_3 = {\mu }/ \Vert {\mu }\Vert \):

where \(\mu =(\mu _1, \mu _2, \mu _3)^\top \) and \(\mu _0=(\mu _2^2+\mu _3^2)^{1/2}\); then \(\tilde{\xi }_1\) and \(\tilde{\xi }_2\) in (14) are smooth functions of \(\mu \) except at \(\mu _2=\mu _3=0\), where there is indeterminacy. To enable the axes of symmetry, \(\xi _1\) and \(\xi _2\), to be an arbitrary rotation of \(\tilde{\xi }_1\) and \(\tilde{\xi }_2\), we define

where \(\psi \in (0, \pi ]\) is the angle of rotation. Substituting \(\xi _1\) and \(\xi _2\) from (15) into (9), and putting \(\rho _1=\rho \) and \(\rho _2=1/\rho \) where \(\rho \in (0,1]\), gives the parameterisation

The disadvantage that \(\rho \) and \(\psi \) are restricted can be resolved by writing (16) in terms of unrestricted parameters \(\gamma _1\) and \(\gamma _2\) as follows.

Lemma 1

Define \({\gamma }=(\gamma _1, \gamma _2)^\top \) by

then \(V^{-1}\) in (16) is

Henceforth, we will use this parameterisation. For a random variable y with ESAG distribution, we will write \(y \sim {\text {ESAG}}({\mu }, {\gamma })\). The rotationally symmetric isotropic angular Gaussian corresponds to

hence we will write \(\text {ESAG}({\mu },0) \equiv \text {IAG}({\mu })\).

Remark 3

(Simulation.) To simulate \(y \sim \text {ESAG}(\mu , \gamma )\), simulate \(z \sim N(\mu , V)\) where \(V=V(\mu , \gamma )\) is defined in (18) then set \(y = z / \Vert z \Vert \).

Figure 1 shows some examples of samples from the ESAG distribution for various values of the parameters \(\mu \) and \(\gamma \).

Samples of 100 observations from some example ESAG distributions with parameters (clock-wise from top left): \({\mu }= (-1, -2, 2)^\top , {\gamma }= (-1, 1)^\top \); \({\mu }= (-2, -4, 4)^\top , {\gamma }= (-1, 1)^\top \); \({\mu }= (-2, -4, 4)^\top , {\gamma }= (0, 0)^\top \); \({\mu }= (-2, -4, 4)^\top , {\gamma }= (-3, 1)^\top \)

Remark 4

(A test for rotational symmetry.) For a sample of observations \(y_1,\dots ,y_n\) assumed independent and identically distributed, a standard large-sample likelihood ratio test can be used to test \(H_0: y_i \sim \text {IAG}({\mu })\) vs \(H_1: y_i \sim \text {ESAG}({\mu },{\gamma })\). Let \(\hat{l}_0\) and \(\hat{l}_1\) be the values of the maximised log-likelihoods under \(H_0\) and \(H_1\), respectively. The models are nested and differ by two degrees of freedom, and by Wilks’ theorem, when n is large the statistic \(T = 2(\hat{l}_1 - \hat{l}_0)\) has approximately a \(\chi ^2_2\) distribution if \(H_0\) is true, and \(H_0\) is rejected for large values of T. The null distribution can alternatively be approximated using simulation by the parametric bootstrap, that is, by simulating a sample of size n from the null model \(H_0\) at the maximum likelihood estimate of the parameters, computing the test statistic, T, and then repeating this a large number, say B, times. The empirical distribution of the resulting bootstrapped statistics \(T_1^*,\dots ,T_B^*\) approximates the null distribution of T.

Estimates of the Earth’s historic magnetic pole position; see Sect. 2.5 for a description of the data. (Left) shows contours of constant probability density for fitted models corresponding to 99, 95 and 50% coverage and (Right) shows 95% confidence regions for the mean direction calculated as described in Sect. 2.4. ESAG is shown with solid blue lines and the IAG with black dotted lines

Remark 5

(Parameter orthogonality.) The parameter vectors \(\mu \) and \(\gamma \) are orthogonal, in the sense that

where \(\mathcal {I}_{\mu , \gamma }= -\text {E}_{\mu ,\gamma }\left[ \partial ^2 \log f_1/\partial \mu \partial {\gamma }^\top \right] \). Moreover, the directional and magnitudinal components \(\mu \) and \(\gamma \), that is, \(\Vert \mu \Vert \), \(\mu /\Vert \mu \Vert \), \(\Vert \gamma \Vert \) and \({\gamma }/\Vert \gamma \Vert \), are all mutually orthogonal. The proofs follow easily from the symmetries of \(f_\text {ESAG}\) and are omitted.

Often in applications, there is particular interest in the directional mean, \(m = {\mu }/ \Vert {\mu }\Vert \). A parametric bootstrap procedure to construct confidence regions for m, which exploits both the ease of simulation and the parameter orthogonality, is as follows.

2.4 Parametric bootstrap confidence regions for \(m = {\mu }/ \Vert {\mu }\Vert \)

Let \(l({\mu },{\gamma })\) denote the log-likelihood for a sample \(y_1,\dots ,y_n\) each of which is assumed to be an independent \(\text {ESAG}(\mu , \gamma )\) random variable and define

Denote the maximum likelihood estimate of \(({{\mu }}, {{\gamma }})\) by \((\hat{{\mu }}, \hat{{\gamma }})\), let \(\hat{m}=\hat{{\mu }}/||\hat{{\mu }}||\), \(\hat{\Sigma }=\Sigma (\hat{{\mu }}, \hat{{\gamma }})\) and let \(\hat{{\xi }}_1\) and \(\hat{{\xi }}_2\) denote the maximum likelihood estimates of the axes of symmetry (15), such that \(\hat{m}\), \(\hat{{\xi }}_1\) and \(\hat{{\xi }}_2\) are mutually orthogonal unit vectors. Define the matrix \(\hat{\xi }=(\hat{{\xi }}_1 , \hat{{\xi }}_2)^\top \), then test statistic

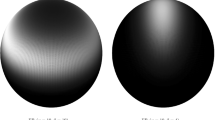

A comparison of ESAG and Kent densities with parameters matched by fitting each distribution to the same dataset; see Sect. 3 for details. (Top) shows a tangent plane projection, in terms of tangent plane coordinates \(\nu _1\) and \(\nu _2\), of contours of constant probability density for coverage levels 95, 90, 50, 25, 10, 5%. (Centre) and (Bottom) show transects of the two probability density functions with \(\nu _2=0\) and \(\nu _1 = 0\), respectively

is suitable for defining confidence regions for m as \(\left\{ m \in \mathbb {S}^2: \, T(m) \le c \right\} \); see Fisher et al. (1996) for discussion of test statistics of this form in the context of non-parametric bootstrap procedures. For a given significance level, \(\alpha \), the constant c can be determined as follows: simulate B bootstrap samples each of size n from ESAG(\(\hat{{\mu }},\hat{{\gamma }}\)), and hence with \(m = \hat{m}\), and for each sample compute the test statistic (19), with \(\hat{\xi }, \hat{{\mu }}, \hat{\Sigma }\) replaced by corresponding quantities calculated from the bootstrap sample; then c is the \((1-\alpha )\) quantile of the resulting statistics \(T_1^*(\hat{m}), \dots , T_B^*(\hat{m})\). Examples of confidence regions calculated by this algorithm are shown in Fig. 2 (right).

2.5 An example: estimates of the historic position of Earth’s magnetic pole

This dataset contains estimates of the position of the Earth’s historic magnetic pole calculated from 33 different sites in Tasmania (Schmidt 1976). The data are shown in Fig. 2 with contours of fitted IAG and ESAG distributions. The maximum likelihood estimates of the parameters are respectively

and

Twice the difference in maximised log-likelihoods equals 14.12, which when referred to a \(\chi ^2_2\) distribution (see Remark 4) corresponds to a p-value of less than \(10^{-3}\), indicating strong evidence in favour of ESAG over the IAG.

3 A comparison of ESAG with the Kent distribution

Figure 3 shows contours and transects of the densities of ESAG and Kent distributions. The parameter values for each are computed by fitting the two models to a large sample of independent and identically distributed data from ESAG(\({\mu }\),\({\gamma }\)), with \({\mu }= (0, 0, 2.5)^\top \) and \({\gamma }= (0.75, 0)^\top \). For the inner contours ESAG is more anisotropic than the matched Kent distribution and appears slightly more peaked at the mean. Besides these small differences, the figure shows that ESAG and Kent distributions are very similar distributions in this example, as we have found them to be more generally. Indeed, preliminary results, not presented here, suggest that for typical sample sizes it is usually very difficult to distinguish between them using a statistical criterion. This warrants making the modelling choice between using the Kent distribution or the ESAG on grounds of practical convenience. The Kent distribution is a member of the exponential family, but its density involves a non-closed-form normalising constant, and simulation requires a rejection algorithm (Kent et al. 2013). The ESAG distribution has a density that is less tidy than the Kent density, hence less suited to computing moment estimators, etc., but this is not much of a drawback given that its density can be computed exactly so that the exact likelihood can be easily maximised. Moreover, simulating from ESAG is particularly quick and easy (see Remark 3).

Table 1 shows the results of a simulation study, including computational timings, for fitting ESAG and Kent densities to ESAG and Kent simulated data. To approximate the Kent normalising constant when fitting the Kent distribution, we use a saddlepoint approximation method (see Kume et al. 2013), and for simulating from the Kent distribution, we use the rejection method of Kent et al. (2013). A notion of accuracy of the fitted model is how well the mean direction of the fitted model, \(\hat{m}\), corresponds with the population mean direction, m. A measure we use for this is

with the expectation approximated by Monte Carlo; hence in the tables, we report

where \(\hat{m}_{(i)}\) is the mean direction of the fitted model for the ith run out of b Monte Carlo runs. We also consider accuracy of the major and minor axes of the fitted model. Since the signs of \(\hat{\xi }_1\) and \(\hat{\xi }_2\) are arbitrary, in this case we define

and similar for \(\hat{\xi }_2\).

Note that in interpreting the results in Table 1, the different simulation times of ESAG and Kent should be compared across columns, whereas the fitting times and accuracies should be compared across rows.

The results show, as expected, that the accuracy of \(\hat{m}\), \(\hat{\xi }_1\), and \(\hat{\xi }_2\) is typically better when the data-generating model is fitted. However, the accuracy is not dramatically worse when the non-data-generating model is fitted, i.e., when ESAG is fitted to Kent data, or the Kent distribution is fitted to ESAG data. There is a very notable difference in computation times between ESAG and Kent: for both simulation and fitting, ESAG is typically more than an order of magnitude faster than Kent.

4 Conclusion

In the pre-computer days of statistical modelling, the Fisher–Bingham family was perhaps favoured over the angular Gaussian family on account of having a simpler density, which makes it more amenable to constructing classical estimators such as moment estimators. However, in the era of computational statistics, the less simple form of the angular Gaussian density is hardly a barrier and is more than compensated by having a normalising constant that is trivial to evaluate. The likelihood can consequently be computed quickly and exactly, and maximised directly. Wang and Gelfand (2013) have recently argued in favour of the general angular Gaussian distribution as a model for Bayesian analysis of circular data. For spherical data, a major obstacle to using the general angular Gaussian distribution is that its parameters are poorly identified by the data. The ESAG subfamily overcomes this problem, and is a direct analogy of the Kent subfamily of the general Fisher–Bingham distribution. Besides having a tractable likelihood, the ease and speed with which ESAG can be simulated makes it especially well suited to methods of simulation-based inference. Natural wider applications of ESAG include using it as an error distribution for spherical regression models with anisotropic errors; for classification on the sphere (as a model for class-conditional densities); and for clustering spherical data (based on ESAG mixture models). Code written in MATLAB for performing calculations in this paper is available at the second author’s web page.

References

Absil, P.A., Baker, C.G., Gallivan, K.A.: Trust-region methods on Riemannian manifolds. Found. Comput. Math. 7(3), 303–330 (2007). doi:10.1007/s10208-005-0179-9

Boumal, N., Mishra, B., Absil, P.A., Sepulchre, R.: Manopt, a Matlab toolbox for optimization on manifolds. J. Mach. Learn. Res. vol. 15, pp. 1455–1459. http://www.manopt.org (2014)

Fisher, N., Hall, P., Jing, B.Y., Wood, A.T.A.: Improved pivotal methods for constructing confidence regions with directional data. J. Am. Stat. Assoc. 91(435), 1062–1070 (1996)

Hernandez-Stumpfhauser, D., Breidt, F., van der Woerd, M.: The general projected normal distribution of arbitrary dimension: modeling and Bayesian inference. Bayesian Anal. 12(1), 113–133 (2017)

Kent, J.T.: The Fisher-Bingham distribution on the sphere. J. R. Stat. Soc. Series B 44, 71–80 (1982)

Kent, J.T., Ganeiber, A., Mardia, K.V.: A new method to simulate the Bingham and related distributions in directional data analysis with applications. arXiv preprint arXiv:1310.8110 (2013)

Kume, A., Preston, S.P., Wood, A.T.A.: Saddlepoint approximations for the normalizing constant of Fisher-Bingham distributions on products of spheres and Stiefel manifolds. Biometrika 100(4), 971–984 (2013)

Mardia, K.V.: Statistics of directional data (with discussion). J. R. Stat. Soc. Series B 37, 349–393 (1975)

Mardia, K.V., Jupp, P.E.: Directional Statistics. Wiley, Hoboken (2000)

Presnell, B., Morrison, S.P., Littell, R.C.: Projected multivariate linear models for directional data. J. Am. Stat. Assoc. 93, 1068–1077 (1998)

Schmidt, P.: The non-uniqueness of the Australian Mesozoic palaeomagnetic pole position. Geophys. J. Int. 47(2), 285–300 (1976)

Teanby, N.: An icosahedron-based method for even binning of globally distributed remote sensing data. Comput. Geosci. 32(9), 1442–1450 (2006)

Wang, F., Gelfand, A.: Directional data analysis under the general projected normal distribution. Stat. Methodol. 10(1), 113–127 (2013)

Author information

Authors and Affiliations

Corresponding author

Additional information

This work was supported by the Engineering and Physical Sciences Research Council (grant number EP/K022547/1).

Proofs

Proofs

1.1 Properties of the function \(\mathcal {M}_{d}(\alpha )\)

Integrating (3) by parts, we obtain

which implies that

Moreover, differentiating (3) with respect to \(\alpha \), and exchanging the order of differentiation and integration on the RHS, we obtain

where a prime denotes differentiation; and, using (21) and (22) together, it is found that

Further points to note, which are easily established by induction, are that we can write

where \(\mathcal {P}_d(\alpha )\) is a polynomial of order d and \(\mathcal {Q}_d\) is a polynomial of order \(d-1\); and \(\mathcal {P}_d(\alpha )\) inherits the properties (21) and (23) of \(\mathcal {M}_d(\alpha )\), while \(\mathcal {Q}_d(\alpha )\) inherits (21) but not (23); instead of (23), we have \(\mathcal {Q}_d^\prime (\alpha )=\mathcal {Q}_{d+1}(\alpha ) - \mathcal {P}_d(\alpha )\). One relevant property of \(\mathcal {P}_d(\alpha )\) is that the coefficient of its leading term \(\alpha ^d\) is 1; this follows from either (21) or (23) applied repeatedly to \(\mathcal {P}_{d}(\alpha )\). As an easy consequence, we obtain a result that is used below: for any fixed integer \(d \ge 0\),

1.2 Proof of Proposition 1

From (8) it follows that \(1/\rho _{d-1} \le 1/\rho _j\) for \(j=1,\ldots , d-2\). As a consequence, we can restrict attention to y of the form \(y=(0,\ldots , u, (1-u^2)^{1/2})\) where u is in a neighbourhood of 0. Substituting this form of y into (7) and differentiating, it is seen that

and, using (22), it is found that

where \(\delta =(\rho _{d-1}-1)/\rho _{d-1}\). The point \(u=0\), which corresponds to \(y=\xi _d\), is a maximum or minimum depending on whether (25) is negative or positive and, using this fact, Proposition 2.1 follows after some elementary further manipulations. \(\square \)

1.3 Proof of Proposition 2

First, consider the exponent in (7). Using (9) it follows after some elementary calculations that

Now define \(u_j=\alpha y_j\), \(j=1,\ldots , d-1\) and suppose that \(\alpha \rightarrow \infty \) while the \(u_j\) remain fixed. Then, since \(\sum _{j=1}^d \left( y^\top \xi _j \right) ^2 = 1\), it follows that as \(\alpha \rightarrow \infty \), \(y \rightarrow 1\). Consequently as the \(\rho _j\) are remaining fixed, it is easy to see that

Moreover, from (12) it follows that \(y^\top \mu \sim \alpha \) and therefore, from (24),

Letting \(\alpha \rightarrow \infty \) and substituting (26), (27) and (28) into (7) we obtain pointwise convergence to the \((d-1)\)-dimensional multivariate Gaussian density \(\phi _{d-1}(u |0_{d-1}, \Omega )\) multiplied by a factor \(\alpha ^{d-1}\); this factor is the Jacobian of the transformation from \((y_1, \ldots , y_{d-1})^\top \) to \((u_1, \ldots , u_{d-1})^\top \). \(\square \)

1.4 Proof of Lemma 1

Using the standard trigonometric results \(\cos ^2 \psi = (1+\cos 2 \psi )/2\) and \(\sin ^2 \psi =(1-\cos 2\psi )/2\), and making use of the fact that \(\tilde{\xi }_1\tilde{\xi }_1^\top +\tilde{\xi }_2 \tilde{\xi }_2^\top +\xi _3 \xi _3^\top = I_3\) because \(\tilde{\xi }_1\), \(\tilde{\xi }_2\) and \(\xi _3\) are mutually orthogonal unit vectors, (16) becomes

From (17), \((\gamma _1^2 +\gamma _2^2)^{1/2}=(\rho ^{-1}-\rho )/2\), and we may solve for \(\rho \) to obtain \(\rho =(1+\gamma _1^2+\gamma _2^2)^{1/2}-(\gamma _1^2+\gamma _2^2)^{1/2}\), and with some elementary further calculation we see that

Finally, substituting (17) and (30) into (29), we obtain (18).

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Paine, P.J., Preston, S.P., Tsagris, M. et al. An elliptically symmetric angular Gaussian distribution. Stat Comput 28, 689–697 (2018). https://doi.org/10.1007/s11222-017-9756-4

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11222-017-9756-4