Abstract

We consider a branching particle system consisting of particles moving according to the Ornstein–Uhlenbeck process in \(\mathbb {R}^d\) and undergoing binary, supercritical branching at a constant rate \(\lambda >0\). This system is known to fulfill a law of large numbers (under exponential scaling). Recently, the question of the corresponding central limit theorem (CLT) has been addressed. It turns out that the normalization and the form of the limit in the CLT fall into three qualitatively different regimes, depending on the relation between the branching intensity and the parameters of the Ornstein–Uhlenbeck process. In the present paper, we extend those results to \(U\)-statistics of the system, proving a law of large numbers and a CLT.


1 Introduction

We consider a single particle, located at time \(t=0\) at \(x \in \mathbb {R}^d\), moving according to the Ornstein–Uhlenbeck process and branching after an exponential time with parameter \(\lambda \), independent of the spatial movement. The branching is binary and supercritical: with probability \(p > 1/2\) the particle is replaced by two offspring, and with probability \(1-p\) it vanishes.

The offspring particles follow the same dynamics (independently of each other). We will refer to this system of particles as the OU branching process and denote it by \(X = \left\{ X_t \right\} _{t\ge 0}\).

We identify the system with the empirical process, i.e., \(X\) takes values in the space of Borel measures on \(\mathbb {R}^d\) and, for each Borel set \(A\), \(X_t(A)\) is the (random) number of particles at time \(t\) in \(A\). We refer to [14] for the general construction of \(X\) as a measure-valued stochastic process.
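To make the dynamics concrete, here is a minimal simulation sketch in Python (NumPy). It assumes that every particle lives an exponential time with rate \(\lambda \), that offspring start at the parent's position, and that the Ornstein–Uhlenbeck motion solves \({\mathrm{d}}X = -\mu X\,{\mathrm{d}}t + \sigma \,{\mathrm{d}}B\), which is consistent with the invariant covariance \(\frac{\sigma ^2}{2\mu }\mathrm{Id}\) recalled in Sect. 2.3. The function name `simulate_ou_branching` and its signature are hypothetical. Positions between branching events are drawn from the exact Gaussian transition kernel rather than from a time discretization.

```python
import numpy as np


def simulate_ou_branching(x0, t_max, lam, p, mu, sigma, rng):
    """Positions at time t_max of an OU branching system started from one particle at x0.

    Assumptions of this sketch: every particle lives an Exp(lam) time; at its death it is
    replaced by two offspring at its current position with probability p and vanishes
    otherwise; between events it follows dX = -mu X dt + sigma dB.
    Returns an array of shape (k, d), where k is the number of particles alive at t_max.
    """
    x0 = np.asarray(x0, dtype=float)
    d = x0.shape[0]

    def ou_step(x, h):
        # Exact OU transition over time h: mean e^{-mu h} x, covariance sigma^2 (1 - e^{-2 mu h}) / (2 mu) Id.
        decay = np.exp(-mu * h)
        sd = sigma * np.sqrt((1.0 - decay * decay) / (2.0 * mu))
        return decay * x + sd * rng.standard_normal(d)

    alive = []
    stack = [(0.0, x0)]  # (birth time, birth position) of particles not yet processed
    while stack:
        birth, x = stack.pop()
        death = birth + rng.exponential(1.0 / lam)  # NumPy uses the scale 1 / rate
        if death >= t_max:
            alive.append(ou_step(x, t_max - birth))  # still alive at t_max
            continue
        x_death = ou_step(x, death - birth)
        if rng.random() < p:  # binary branching: two offspring at the parent's position
            stack.append((death, x_death))
            stack.append((death, x_death))
    return np.array(alive).reshape(-1, d)


rng = np.random.default_rng(0)
positions = simulate_ou_branching([0.0, 0.0], t_max=4.0, lam=1.0, p=0.8, mu=1.0, sigma=1.0, rng=rng)
print(positions.shape)  # (number of particles alive at t = 4, 2)
```

Returning one row per particle mirrors the identification of \(X_t\) with its empirical measure: for an `f` acting row-wise, \(\left\langle X_t, f \right\rangle \) is the sum of `f` over the rows.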

It is well known (see, e.g., [16]) that the system satisfies the law of large numbers, i.e., for any bounded continuous function \(f\), conditionally on the set of non-extinction,

\[ |X_t|^{-1} \left\langle X_t, f \right\rangle \rightarrow \left\langle f, \varphi \right\rangle \quad \text{a.s. as } t\rightarrow \infty , \tag{1} \]

where \(|X_t|\) is the number of particles at time \(t\), \(\left\{ X_t(1), X_t(2),\ldots , X_t(|X_t|) \right\} \) are their positions, \(\left\langle X_t, f \right\rangle := \sum _{i=1}^{|X_t|}f(X_t(i))\) and \(\varphi \) is the invariant measure of the Ornstein–Uhlenbeck process.

In a recent article [1], we investigated the second-order behavior of this system and proved central limit theorems (CLT) corresponding to (1). We found three qualitatively different regimes, depending on the relation between the branching intensity and the parameters of the Ornstein–Uhlenbeck process.

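In the present article, we extend these results on the LLN and CLT to the case of \(U\)-statistics of the system of arbitrary order \(n \ge 1\), i.e., to random variables of the form

\[ U^n_t(f) := \sum _{\substack{1\le i_1,\ldots ,i_n\le |X_t|\\ i_j\ne i_k \text{ for } j\ne k}} f\left( X_t(i_1),\ldots ,X_t(i_n)\right) \tag{2} \]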

(note that \(U^1_t(f)=\left\langle X_t, f \right\rangle \)). Our investigation parallels the classical and well-developed theory of \(U\)-statistics of independent random variables; we point out, however, that in our context additional interest in this type of functionals of the process \(X\) stems from the fact that they capture “average dependencies” between particles of the system. This will be seen from the form of the limit, which turns out to be more complicated than in the i.i.d. case. This is one of the motivations for studying \(U\)-statistics in a more general context than for independent random variables. Another is that, while being structurally the simplest generalization of additive functionals (which are \(U\)-statistics of degree one), they may be considered building blocks for other, more complicated statistics, as they appear naturally in their Taylor expansions (see [7], where in the i.i.d. context such expansions are used in the analysis of some statistical estimators). In another context, they also appear in the study of tree-based expansions and propagation of chaos in interacting particle systems (see [9–11, 23]).
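For a small system, a \(U\)-statistic can be evaluated by brute force. The sketch below (the helper `u_statistic` is hypothetical, plain Python) assumes that the sum runs over ordered \(n\)-tuples of pairwise distinct indices, so that for \(n=1\) it reduces to \(\left\langle X_t, f \right\rangle \). It costs \(O(|X_t|^n)\) evaluations of \(f\) and is meant only as an illustration.

```python
import itertools


def u_statistic(points, f, n):
    """U^n(f) = sum of f(points[i_1], ..., points[i_n]) over ordered n-tuples of
    pairwise distinct indices. Brute force, O(N^n): only for small systems."""
    return sum(
        f(*(points[i] for i in idx))
        for idx in itertools.permutations(range(len(points)), n)
    )


# f(x, y) = x * y on three one-dimensional positions:
# the ordered pairs of distinct indices contribute 2 * (1*2 + 1*3 + 2*3) = 22.
print(u_statistic([1.0, 2.0, 3.0], lambda x, y: x * y, 2))  # 22.0
```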

The organization of the paper is as follows. After introducing the basic notation and preliminary facts in Sect. 2, we describe the main results of the paper in Sect. 3. Next (Sect. 4), we restate the results in the special case of \(n=1\) (as proven in [1]) to serve as a starting point for the general case. Finally, in Sect. 5, we provide proofs for arbitrary \(n\), postponing some of the technical details (which may obscure the main ideas of the proofs) to Sect. 6. We conclude with some remarks concerning the so-called non-degenerate case (Sect. 7).

2 Preliminaries

2.1 Notation

For a branching system \(\left\{ X_t \right\} _{t\ge 0}\), we denote by \(|X_t|\) the number of particles at time \(t\) and by \(X_t(i)\) the position of the \(i\)th (in a certain ordering) particle at time \(t\). We sometimes use \(\mathbb {E}{}_x\) or \(\mathbb {P}_x\) to indicate that we calculate the expectation for the system starting from a particle located at \(x\). We also use \(\mathbb {E}{}\) and \(\mathbb {P}\) when this location is not relevant.

By \(\rightarrow ^d \), we denote the convergence in law. We use \(\lesssim \) to denote that an inequality holds up to a constant \(c>0\) whose value is irrelevant to the calculations, e.g., \(f(x)\lesssim g(x)\) means that there exists a constant \(c>0\) such that \(f(x) \le c g(x)\).

By \(x \circ y = \sum _{i=1}^d x_i y_i\), we denote the standard scalar product of \(x,y\in \mathbb {R}^d\), by \(\Vert \cdot \Vert \) the corresponding Euclidean norm. By \(\otimes ^n\), we denote the \(n\)-fold tensor product.

We also use \(\left\langle f, \mu \right\rangle :=\int _{\mathbb {R}^d} f(x) \mu {({\mathrm{d}}x) }\). We will write \(X \sim \mu \) to indicate that a random variable \(X\) is distributed according to the measure \(\mu \); similarly, \(X\sim Y\) will mean that \(X\) and \(Y\) have the same law.

For a subset \(A\) of a linear space, we denote by \(\mathrm{span}(A)\) the set of finite linear combinations of elements of \(A\).

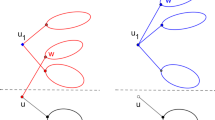

In the paper, we will use Feynman diagrams. A diagram \(\gamma \) on a set of vertices \(\left\{ 1,2,\ldots ,n \right\} \) is a graph on \(\left\{ 1,2,\ldots ,n \right\} \) consisting of a set of edges \(E_\gamma \), no two of which share an endpoint, and the set \(A_\gamma \) of unpaired vertices. We will use \(r(\gamma )\) to denote the rank of the diagram, i.e., the number of edges. For properties and more information, we refer to [21, Definition 1.35].
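Feynman diagrams on \(\left\{ 1,\ldots ,n \right\} \) are exactly the partial matchings of the vertex set, so they can be enumerated recursively: the first vertex is either unpaired or paired with one of the remaining vertices. A minimal Python sketch (the generator name `feynman_diagrams` is hypothetical) yields each diagram as a pair (edges \(E_\gamma \), unpaired vertices \(A_\gamma \)); the rank \(r(\gamma )\) is the number of edges.

```python
def feynman_diagrams(vertices):
    """Yield (edges, unpaired) for every Feynman diagram on the given vertices.

    A diagram is a set of edges without common endpoints (a partial matching);
    the remaining vertices are unpaired. The rank r(gamma) equals len(edges).
    """
    vertices = tuple(vertices)
    if not vertices:
        yield (), ()
        return
    first, rest = vertices[0], vertices[1:]
    # Case 1: `first` is unpaired.
    for edges, unpaired in feynman_diagrams(rest):
        yield edges, (first,) + unpaired
    # Case 2: `first` is paired with one of the later vertices.
    for j, partner in enumerate(rest):
        remaining = rest[:j] + rest[j + 1:]
        for edges, unpaired in feynman_diagrams(remaining):
            yield ((first, partner),) + edges, unpaired


# Prints the 4 diagrams on {1, 2, 3} together with their ranks.
for edges, unpaired in feynman_diagrams([1, 2, 3]):
    print("edges:", edges, "unpaired:", unpaired, "rank:", len(edges))
```

The number of diagrams on \(n\) vertices is 1, 1, 2, 4, 10, 26, 76 for \(n = 0,\ldots ,6\) (the involution numbers), which gives a quick sanity check.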

In the paper, we will use the space

We endow this space with the following norm

where \(n(x):=\exp \left( -\sum _{i=1}^d |x_i| \right) \). We will also use

to denote the space of continuous compactly supported functions.

Given a function \(f\in \mathcal {P}^{}(\mathbb {R}^d)\), we will implicitly understand its derivatives (e.g., \(\frac{ \partial f}{\partial x_i}\)) in the space of tempered distributions (see, e.g., [25, p. 173]).

By \(f(a\cdot )\), we denote the function \(x\mapsto f(ax)\).

Let \(\mu _1, \mu _2\) be two probability measures on \(\mathbb {R}\), and let \({{\mathrm{Lip}}}(1)\) be the space of 1-Lipschitz functions \(\mathbb {R}\rightarrow [-1,1]\). We define

\[ m(\mu _1,\mu _2) := \sup _{g\in {{\mathrm{Lip}}}(1)} \left| \int g \,{\mathrm{d}}\mu _1 - \int g\,{\mathrm{d}}\mu _2 \right| . \]

It is well known that \(m\) is a distance metrizing the weak convergence (see, e.g., [12, Theorem 11.3.3]). One easily checks that when \(\mu _1, \mu _2\) are the laws of two random variables \(X_1,X_2\) defined on the same probability space, then

\[ m(\mu _1,\mu _2) \le \mathbb {E}{}|X_1-X_2|. \]
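The coupling bound for \(m\) follows in one line from the definition of \({{\mathrm{Lip}}}(1)\). A short derivation, with \(X_1\sim \mu _1\) and \(X_2\sim \mu _2\) defined on a common probability space and \(g\in {{\mathrm{Lip}}}(1)\):

```latex
\Bigl|\int g\,\mathrm{d}\mu_1-\int g\,\mathrm{d}\mu_2\Bigr|
  = \bigl|\mathbb{E}\,[g(X_1)-g(X_2)]\bigr|
  \le \mathbb{E}\,|g(X_1)-g(X_2)|
  \le \mathbb{E}\,\min\bigl(|X_1-X_2|,\,2\bigr)
  \le \mathbb{E}\,|X_1-X_2| .
```

The bound by \(\min (|X_1-X_2|,2)\) combines the Lipschitz property with \(|g|\le 1\); taking the supremum over \(g\in {{\mathrm{Lip}}}(1)\) gives \(m(\mu _1,\mu _2)\le \mathbb {E}{}|X_1-X_2|\).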

2.2 Basic Facts on the Galton–Watson Process

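The number of particles \(\left\{ |X_t| \right\} _{t\ge 0}\) is the celebrated (continuous-time) Galton–Watson process. We present basic properties of this process used in the paper. The main reference in this section is [2]. In our case, the expected total number of particles grows exponentially at the rate

\[ \lambda _p := (2p-1)\lambda , \tag{7} \]

i.e., \(\mathbb {E}{}|X_t| = e^{\lambda _p t}\) for the system started from a single particle.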

The process becomes extinct with probability (see [2, Theorem I.5.1])

\[ \frac{1-p}{p}. \]

We will denote the extinction and non-extinction events by \(Ext\) and \(Ext^c\), respectively. The process \(V_t := e^{-\lambda _p t} |X_t|\) is a positive martingale. Therefore, it converges almost surely (see also [2, Theorem 1.6.1]):

\[ V_\infty := \lim _{t\rightarrow \infty } V_t \quad \text{a.s.} \tag{8} \]
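The growth rate and the extinction probability can be checked numerically. A branching event occurs at rate \(\lambda \) per particle and changes the population by \(+1\) with probability \(p\) and by \(-1\) with probability \(1-p\), which gives the rate \(\lambda (2p-1)\); the extinction probability is the smallest fixed point in \([0,1]\) of the offspring generating function \(s\mapsto (1-p)+ps^2\), reached by iterating this map from \(0\). The Python helpers below are hypothetical illustrations and assume \(p>1/2\) (for \(p\) close to \(1/2\) the iteration converges slowly).

```python
def growth_rate(lam, p):
    """lambda_p: each branching event (rate lam per particle) adds one particle with
    probability p and removes one with probability 1 - p."""
    return lam * (2.0 * p - 1.0)


def extinction_probability(p, tol=1e-13, max_iter=1_000_000):
    """Smallest fixed point in [0, 1] of the offspring generating function
    s -> (1 - p) + p s^2, obtained by iterating the map from s = 0."""
    q = 0.0
    for _ in range(max_iter):
        q_next = (1.0 - p) + p * q * q
        if abs(q_next - q) < tol:
            return q_next
        q = q_next
    return q


print(growth_rate(1.0, 0.75), extinction_probability(0.75))  # compare with (1 - p) / p = 1/3
```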

We have the following simple fact (we refer to [1] for the proof).

Proposition 1

We have \(\left\{ V_\infty = 0 \right\} = Ext\) and conditioned on non-extinction \(V_\infty \) has the exponential distribution with parameter \(\frac{2p-1}{p}\). We have \(\mathbb {E}{}(V_\infty ) =1\) and \( {{\mathrm{Var}}}(V_\infty ) = \frac{1}{2p-1}\). \(\mathbb {E}{}e^{-4\lambda _p t} |X_t|^4\) is uniformly bounded, i.e., there exists \(C>0\) such that for any \(t\ge 0\) we have \(\mathbb {E}{}e^{-4\lambda _p t}|X_t|^4 \le C\). Moreover, all moments are finite, i.e., for any \(n\in \mathbb {N}\) and \(t\ge 0\) we have \(\mathbb {E}{} |X_t|^n < +\infty \).
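Proposition 1 determines the law of \(V_\infty \) completely (an atom at \(0\) of mass \(\frac{1-p}{p}\), the extinction probability, and an exponential law with parameter \(\frac{2p-1}{p}\) on \(Ext^c\)), so the stated mean and variance can be verified in exact rational arithmetic. A sketch using Python's `fractions` module; the function name is hypothetical.

```python
from fractions import Fraction


def v_infinity_moments(p):
    """Exact mean and variance of V_infinity under the law described in Proposition 1:
    V_infinity = 0 on Ext (probability (1 - p) / p) and Exp((2p - 1) / p) on Ext^c."""
    p = Fraction(p)
    survival = 1 - (1 - p) / p     # P(Ext^c) = (2p - 1) / p
    rate = (2 * p - 1) / p         # parameter of the exponential law of W
    mean_w = 1 / rate              # E W
    second_w = 2 / rate ** 2       # E W^2
    mean = survival * mean_w
    variance = survival * second_w - mean ** 2
    return mean, variance


for p in (Fraction(3, 4), Fraction(2, 3)):
    print(p, *v_infinity_moments(p))  # mean 1 and variance 1 / (2p - 1)
```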

We will denote the variable \(V_\infty \) conditioned on non-extinction by \(W\).

2.3 Basic Facts on the Ornstein–Uhlenbeck Process

We recall that the Ornstein–Uhlenbeck process with parameters \(\sigma ,\mu >0\) is a time-homogeneous Markov process with the infinitesimal operator

\[ \mathcal {L} f(x) = \frac{\sigma ^2}{2}\Delta f(x) - \mu \, x\circ \nabla f(x). \tag{9} \]

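The corresponding semigroup will be denoted by \(\mathcal {T}_{}\). The density of the invariant measure of the Ornstein–Uhlenbeck process is given by

\[ \varphi (x) = \left( \frac{\mu }{\pi \sigma ^2}\right) ^{d/2} \exp \left( -\frac{\mu \Vert x\Vert ^2}{\sigma ^2}\right) , \quad x\in \mathbb {R}^d, \]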

i.e., it is a centered Gaussian distribution with the covariance matrix \(\frac{\sigma ^2}{2\mu }\mathrm{Id}\). For simplicity, we will denote both this measure and its density with the same letter \(\varphi \).
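For \(d=1\), \(\mathcal {T}_t f(x)\) is the expectation of \(f\) under the Gaussian transition law with mean \(e^{-\mu t}x\) and variance \(\frac{\sigma ^2}{2\mu }(1-e^{-2\mu t})\), the standard transition of the process with drift \(-\mu x\) and diffusion coefficient \(\sigma \) (assumed here). The sketch below computes \(\mathcal {T}_t f(x)\) by Gauss–Hermite quadrature with NumPy's `hermegauss` (probabilists' weight \(e^{-z^2/2}\)); the helper name `ou_semigroup` is hypothetical. As \(t\rightarrow \infty \), the result approaches \(\left\langle f, \varphi \right\rangle \).

```python
import numpy as np
from numpy.polynomial.hermite_e import hermegauss


def ou_semigroup(f, x, t, mu, sigma, deg=60):
    """T_t f(x) = E[f(X_t) | X_0 = x] for the one-dimensional OU process
    dX = -mu X dt + sigma dB, by Gauss-Hermite quadrature (weight e^{-z^2/2})."""
    z, w = hermegauss(deg)
    w = w / np.sqrt(2.0 * np.pi)  # now sum(w) == 1: quadrature for N(0, 1)
    mean = np.exp(-mu * t) * x
    sd = sigma * np.sqrt((1.0 - np.exp(-2.0 * mu * t)) / (2.0 * mu))
    return float(np.sum(w * f(mean + sd * z)))


# For large t, T_t f(x) approaches <f, phi>; with f(y) = y^2 this is sigma^2 / (2 mu).
print(ou_semigroup(lambda y: y ** 2, x=3.0, t=20.0, mu=1.0, sigma=1.0))
```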

2.4 Basic Facts Concerning \(U\)-Statistics

We will now briefly recall basic notation and facts concerning \(U\)-statistics. A \(U\)-statistic of degree \(n\) based on an \(\mathcal {X}\)-valued sample \(X_1,\ldots ,X_N\) and a function \(f :\mathcal {X}^n \rightarrow \mathbb {R}\) is a random variable of the form

\[ U^n_N(f) = \sum _{\substack{1\le i_1,\ldots ,i_n\le N\\ i_j\ne i_k \text{ for } j\ne k}} f(X_{i_1},\ldots ,X_{i_n}). \]

The function \(f\) is usually referred to as the kernel of the \(U\)-statistic. Without loss of generality, it can be assumed that \(f\) is symmetric, i.e., invariant under permutation of its arguments. We refer the reader to [7, 22] for more information on \(U\)-statistics of sequences of independent random variables.

In our case, we will consider \(U\)-statistics based on positions of particles from the branching system as defined by (2). We will be interested in weak convergence of properly normalized \(U\)-statistics when \(t \rightarrow \infty \). Similarly as in the classical theory, the asymptotic behavior of \(U\)-statistics depends heavily on the so-called order of degeneracy of the kernel \(f\), which we will briefly recall in Sect. 5.2.

A function \(f\) is called completely degenerate or canonical (with respect to some measure of reference \(\varphi \), which in our case will be the stationary measure of the Ornstein–Uhlenbeck process) if, for every \(k=1,\ldots ,n\),

\[ \int _{\mathcal {X}} f(x_1,\ldots ,x_n)\,\varphi ({\mathrm{d}}x_k) = 0 \]

for all \(x_1,\ldots ,x_{k-1},x_{k+1},\ldots ,x_n \in \mathcal {X}\). The complete degeneracy may be considered a centeredness condition: in the classical theory of \(U\)-statistics, canonical kernels are counterparts of centered random variables from the theory of sums of independent random variables. Their importance stems from the fact that each \(U\)-statistic can be decomposed into a sum of canonical \(U\)-statistics of different degrees, a fact known as the Hoeffding decomposition (see Sect. 5.2). Thus, in the main part of the article, we prove results only for canonical \(U\)-statistics. Their counterparts for general \(U\)-statistics, which can be easily obtained via the Hoeffding decomposition, are stated in Sect. 7.
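A short example of the definition, with \(\varphi \) the centered Gaussian law of Sect. 2.3: the scalar-product kernel \(f(x,y)=x\circ y\) on \(\mathbb {R}^d\times \mathbb {R}^d\) is canonical, whereas \(g(x,y)=\Vert x\Vert ^2\Vert y\Vert ^2\) is not (by symmetry, it suffices to integrate in the first variable).

```latex
\int_{\mathbb{R}^d} x\circ y \,\varphi(\mathrm{d}x)
  = \Bigl(\int_{\mathbb{R}^d} x\,\varphi(\mathrm{d}x)\Bigr)\circ y = 0
  \quad \text{for all } y\in\mathbb{R}^d,
\qquad
\int_{\mathbb{R}^d} \|x\|^2\|y\|^2\,\varphi(\mathrm{d}x)
  = \frac{d\,\sigma^2}{2\mu}\,\|y\|^2 \not\equiv 0 .
```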

3 Main Results

This section is devoted to the presentation of our results. The proofs are deferred to Sect. 5.

We start with the following law of large numbers (throughout the article when dealing with \(U\)-statistics of order \(n\) we will identify \(\underbrace{\mathbb {R}^d\times \cdots \times \mathbb {R}^d}_{n}\) with \(\mathbb {R}^{nd}\)).

Theorem 2

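Let \(\left\{ X_t \right\} _{t\ge 0}\) be the OU branching system starting from \(x\in \mathbb {R}^d\). Let us assume that \(f:\mathbb {R}^{nd} \rightarrow \mathbb {R}\) is a bounded continuous function. Then, on the set of non-extinction \(Ext^c\), there is the convergence

\[ |X_t|^{-n}\, U^n_t(f) \rightarrow \left\langle f, \varphi ^{\otimes n} \right\rangle \quad \text{a.s. as } t\rightarrow \infty . \]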

Moreover, when \(f\in \mathcal {P}^{}(\mathbb {R}^{nd}) \), then the above convergence holds in probability.

Having formulated the law of large numbers, let us now pass to the corresponding CLTs. We recall that \(\mu \) is the drift parameter in (9) and \(\lambda _p\) is the growth rate (7). As already mentioned in the introduction, the form of the limit theorems depends on the relation between \(\lambda _p\) and \(\mu \); more specifically, we distinguish three cases: \(\lambda _p < 2\mu \), \(\lambda _p = 2\mu \) and \(\lambda _p > 2\mu \). We refer the reader to [1] (Introduction and Section 3) for a detailed discussion of this phenomenon as well as its heuristic explanation and interpretation. Here, we only stress that the situation for \(\lambda _p > 2\mu \) differs substantially from the remaining two cases, as we obtain convergence in probability and the limit is not Gaussian even for \(n=1\). Intuitively, this is caused by the large branching intensity, which lets local correlations between particles prevail over the ergodic properties of the Ornstein–Uhlenbeck process.

3.1 Slow Branching Case: \(\lambda _p < 2\mu \)

Let \(Z\) be a Gaussian stochastic measure on \(\mathbb {R}^{d+1}\) with intensity

defined according to [21, Definition 7.17], where \(\delta _0\) is the Dirac measure concentrated at \(0\). We denote the stochastic integral with respect to \(Z\) by \(I\) and the corresponding multiple stochastic integral by \(I_n\) [21, Section 7.2]. We assume that \(Z\) is defined on some probability space \((\varOmega , \mathcal {F}, \mathbb {P})\).

For \(f\in \mathcal {P}^{}(\mathbb {R}^{nd})\) we define (we recall that \(\mathcal {T}_{}\) is the semigroup of the Ornstein–Uhlenbeck process)

It will be useful to treat this function as a function of \(n\) variables of type \(z_i:=(s_i, x_i) \in \mathbb {R}_+ \times \mathbb {R}^d\). For a Feynman diagram \(\gamma \) labeled by \(\left\{ 1,2, \ldots ,n \right\} \) and \(f\in \mathcal {P}^{}(\mathbb {R}^{nd})\) we define

where \(u_i = z_{j,k}\) if \((j,k)\in E_\gamma \) and (\(i=j\) or \(i=k\)), and \(u_i = z_i \) if \(i \in A_\gamma \). Less formally, for each pair \((j,k)\), we integrate over the diagonal of coordinates \(j\) and \(k\) with respect to \(\mu _2\). The function obtained in this way is integrated using the multiple stochastic integral \(I_{|A_\gamma |}\). We define

where the sum runs over all Feynman diagrams labeled by \(\left\{ 1, 2, \ldots , n \right\} \).

We are now ready to formulate our main result for processes with small branching rate. Recall (8) and that \(W\) is \(V_\infty \) conditioned on \(Ext^c\).

Theorem 3

Let \(\left\{ X_t \right\} _{t\ge 0}\) be the OU branching system starting from \(x\in \mathbb {R}^d\). Let us assume that \(f\in \mathcal {P}^{}(\mathbb {R}^{nd})\) is a canonical kernel and \(\lambda _p<2\mu \). Then conditionally on the set of non-extinction \(Ext^c\) there is the convergence

where \(G_1\sim \mathcal {N}(0, 1/(2p-1))\) and \(W, G_1, L_1(f)\) are independent random variables.

3.2 Critical Branching Case: \(\lambda _p = 2\mu \)

Before we present the main results in the critical branching case, we need to introduce some additional notation.

Consider the orthonormal Hermite basis \(\{h_i\}_{i\ge 0}\) for the measure \(\gamma = \mathcal {N}(0,\frac{\sigma ^2}{2\mu })\) (i.e., \(h_0 = 1\), \(h_i\) is a polynomial of degree \(i\) and \(\int h_i h_j \,{\mathrm{d}}\gamma = \delta _{ij}\)). Then, for any positive integer \(n\), the set \(\{h_{i_1}\otimes \cdots \otimes h_{i_{nd}}\}_{i_1,\ldots ,i_{nd}\ge 0}\) of multivariate Hermite polynomials is an orthonormal basis in \(L_2(\mathbb {R}^{nd},\varphi ^{\otimes n})\). For a function \(f \in L_2(\mathbb {R}^{nd},\varphi ^{\otimes n})\), let \(\tilde{f}_{i_1,\ldots ,i_{nd}}\) be the sequence of coefficients of \(f\) with respect to this basis, i.e., \(f = \sum _{i_1,\ldots ,i_{nd}\ge 0} \tilde{f}_{i_1,\ldots ,i_{nd}} h_{i_1}\otimes \cdots \otimes h_{i_{nd}}\).
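The orthonormal basis \(\{h_i\}\) can be built from NumPy's probabilists' Hermite polynomials \(He_i\) (module `numpy.polynomial.hermite_e`), which are orthogonal for \(\mathcal {N}(0,1)\) with \(\Vert He_i\Vert ^2 = i!\). Rescaling gives \(h_i(x) = He_i(x/\sqrt{v})/\sqrt{i!}\) for \(\gamma = \mathcal {N}(0,v)\), \(v=\frac{\sigma ^2}{2\mu }\). A sketch, with hypothetical helper names, that checks orthonormality by Gauss–Hermite quadrature:

```python
import math
import numpy as np
from numpy.polynomial.hermite_e import hermeval, hermegauss


def hermite_orthonormal(i, x, var):
    """h_i(x) = He_i(x / sqrt(var)) / sqrt(i!), orthonormal in L_2(N(0, var))."""
    coeffs = np.zeros(i + 1)
    coeffs[i] = 1.0  # selects He_i in the probabilists' Hermite basis
    return hermeval(np.asarray(x, dtype=float) / np.sqrt(var), coeffs) / math.sqrt(math.factorial(i))


def gram_matrix(max_deg, var, deg=80):
    """Matrix of inner products <h_i, h_j> in L_2(N(0, var)), i, j <= max_deg, by quadrature."""
    z, w = hermegauss(deg)
    w = w / np.sqrt(2.0 * np.pi)
    x = np.sqrt(var) * z  # quadrature nodes for N(0, var)
    values = np.array([hermite_orthonormal(i, x, var) for i in range(max_deg + 1)])
    return (values * w) @ values.T


sigma, mu = 1.0, 1.0
print(np.round(gram_matrix(5, sigma ** 2 / (2 * mu)), 10))  # numerically the identity matrix
```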

Let \(\mathcal {H}^n = \mathrm{span}\{h_{i_1}\otimes \cdots \otimes h_{i_{nd}}:\sum _{j=1}^d i_{kd + j} = 1, k=0,\ldots ,n-1\}\) be the subspace of \(L_2(\mathbb {R}^{nd},\varphi ^{\otimes n})\). In other words, \(\mathcal {H}^1\) is the subspace of \(L_2(\mathbb {R}^d,\varphi )\) spanned by Hermite polynomials of degree \(1\) on \(\mathbb {R}^d\) and \(\mathcal {H}^n = (\mathcal {H}^1)^{\otimes n}\).
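The constraint defining \(\mathcal {H}^n\) says that each of the \(n\) consecutive blocks of \(d\) indices is a unit vector, so \(\mathcal {H}^n\) has dimension \(d^n\). A small Python enumeration of these multi-indices (the generator name is hypothetical):

```python
import itertools


def hn_basis_indices(n, d):
    """Multi-indices (i_1, ..., i_{nd}) of the Hermite tensors spanning H^n:
    every consecutive block of d indices contains exactly one 1 and zeros elsewhere."""
    unit_blocks = [tuple(1 if j == k else 0 for j in range(d)) for k in range(d)]
    for choice in itertools.product(unit_blocks, repeat=n):
        yield tuple(i for block in choice for i in block)


print(list(hn_basis_indices(2, 2)))  # d^n = 4 multi-indices
```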

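Consider now a centered Gaussian process \((G_f)_{f\in L_2(\mathbb {R}^d,\varphi )}\) on \(L_2(\mathbb {R}^d,\varphi )\), with the covariance structure given by

\[ \mathbb {E}{}\, G_f G_g = 2\lambda p \left\langle Pf, Pg \right\rangle _{L_2(\mathbb {R}^d,\varphi )}, \quad f,g\in L_2(\mathbb {R}^d,\varphi ), \]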

where \(P\) is the orthogonal projection from \(L_2(\mathbb {R}^d,\varphi )\) onto \(\mathcal {H}^1\).

We will identify this process with a map \(I:L_2(\mathbb {R}^d,\varphi ) \rightarrow L_2(\varOmega ,\mathcal {F},\mathbb {P})\) such that \(I(f) = G_f\). One can easily check that \(I\) is a bounded linear operator. Moreover, \(I = IP\). In fact, \(I(f)\) is the stochastic integral of \(Pf\) with respect to the random Gaussian measure on \(\mathbb {R}^d\) with intensity \(2\lambda p\varphi \) (however, we will not use this fact in the sequel).

Since \(\mathcal {H}^n = (\mathcal {H}^1)^{\otimes n}\), there exists a unique linear operator \(\tilde{L}_2:\mathcal {H}^n \rightarrow L_2(\varOmega ,\mathcal {F},\mathbb {P})\) such that for any functions \(f_1,\ldots ,f_n \in \mathcal {H}^1\),

\[ \tilde{L}_2(f_1\otimes \cdots \otimes f_n) = I(f_1)\cdots I(f_n) \]

(we used here the fact that Gaussian variables have all moments finite).

Let now \(P_n :L_2(\mathbb {R}^{nd},\varphi ^{\otimes n}) \rightarrow \mathcal {H}^n\) be the orthogonal projection onto \(\mathcal {H}^n\). We have \(P_n = P^{\otimes n}\) and, using the fact that the spaces \(\mathcal {H}^n\) are finite dimensional, we obtain that the linear operator \(L_2 :L_2(\mathbb {R}^{nd},\varphi ^{\otimes n}) \rightarrow L_2(\varOmega ,\mathcal {F},\mathbb {P})\) defined as

\[ L_2(f) := \tilde{L}_2(P_n f) \]

is bounded. Note that for \(f_1,\ldots ,f_n \in L_2(\mathbb {R}^d,\varphi )\) we have

\[ L_2(f_1\otimes \cdots \otimes f_n) = I(f_1)\cdots I(f_n). \]

We are now ready to formulate the theorem, which describes the asymptotic behavior of \(U\)-statistics in the critical case.

Theorem 4

Let \(\left\{ X_t \right\} _{t\ge 0}\) be the OU branching system starting from \(x\in \mathbb {R}^d\). Let us assume that \(f\in \mathcal {P}^{}(\mathbb {R}^{nd})\) is a canonical kernel and \(\lambda _p=2\mu \). Then conditionally on the set of non-extinction \(Ext^c\) there is the convergence

where \(G\sim \mathcal {N}(0, 1/(2p-1))\) and \(W, G, L_2(f)\) are independent random variables.

Remark 5

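One can express \(L_2(f)\) in terms of the Hermite expansion of the function \(f\), which might give more insight into the structure of the limiting law. Indeed, define \(\tilde{h}_i :\mathbb {R}^d \rightarrow \mathbb {R}\), \(i = 1,\ldots , d\), by \(\tilde{h}_i (x_1,\ldots ,x_d) = h_1(x_i)\) (thus, \(\tilde{h}_i = h_0^{\otimes (i-1)}\otimes h_1\otimes h_0^{\otimes (d-i)}\)). Then for \(f \in L_2(\mathbb {R}^{dn},\varphi ^{\otimes n})\),

\[ L_2(f) = (2\lambda p)^{n/2}\sum _{i_1,\ldots ,i_n=1}^{d} \left\langle f, \tilde{h}_{i_1}\otimes \cdots \otimes \tilde{h}_{i_n}\right\rangle _{L_2(\mathbb {R}^{dn},\varphi ^{\otimes n})} G_{i_1}\cdots G_{i_n}, \]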

where \(G_{i} = (2\lambda p)^{-1/2} G_{\tilde{h}_i}\). In particular \((G_1,\ldots ,G_d)\) is a vector of independent standard Gaussian variables (this follows easily from the covariance structure of the process \((G_f)\) and the fact that the functions \(\tilde{h}_i\) form an orthonormal system).
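The expression of \(L_2(f)\) through the Hermite expansion involves the coefficients \(\left\langle f, \tilde{h}_{i_1}\otimes \cdots \otimes \tilde{h}_{i_n}\right\rangle \) in \(L_2(\mathbb {R}^{dn},\varphi ^{\otimes n})\). With \(\varphi = \mathcal {N}(0, v\,\mathrm{Id})\), \(v=\frac{\sigma ^2}{2\mu }\), one has \(\tilde{h}_i(x) = x_i/\sqrt{v}\), and these coefficients can be approximated by tensor-product Gauss–Hermite quadrature. The sketch below is hypothetical; it assumes that `f` accepts an array of shape `(N, n, d)` (coordinate \(l\) of variable \(j\) at `[:, j, l]`, matching the convention \(x_{j,l}\) of Sect. 3.3) and uses 0-based indices. Its cost grows like `deg ** (n * d)`, so it is practical only for small \(nd\).

```python
import itertools
import numpy as np
from numpy.polynomial.hermite_e import hermegauss


def degree_one_coefficients(f, n, d, var, deg=20):
    """Approximate c[i_1, ..., i_n] = <f, h~_{i_1} x ... x h~_{i_n}> in L_2(phi^{x n}).

    Assumes phi = N(0, var * Id) on R^d, so that h~_i(x) = x_i / sqrt(var) (0-based i),
    and that f maps an array of shape (N, n, d) to an array of shape (N,).
    Uses tensor-product Gauss-Hermite quadrature on R^{nd} (deg^(n*d) nodes).
    """
    z, w = hermegauss(deg)
    w = w / np.sqrt(2.0 * np.pi)  # weights of the standard Gaussian
    axis_nodes = np.sqrt(var) * z
    grids = np.meshgrid(*([axis_nodes] * (n * d)), indexing="ij")
    x = np.stack([g.ravel() for g in grids], axis=1)  # shape (deg^(nd), nd)
    weights = np.ones(1)
    for _ in range(n * d):
        weights = np.outer(weights, w).ravel()  # product weights, same ordering as x
    fx = f(x.reshape(-1, n, d))
    coeffs = np.zeros((d,) * n)
    for idx in itertools.product(range(d), repeat=n):
        # column k * d + i holds coordinate i of variable k
        basis = np.prod([x[:, k * d + i] / np.sqrt(var) for k, i in enumerate(idx)], axis=0)
        coeffs[idx] = np.sum(weights * fx * basis)
    return coeffs


# f(x_1, x_2) = (first coordinate of x_1) * (second coordinate of x_2), d = 2, n = 2:
c = degree_one_coefficients(lambda a: a[:, 0, 0] * a[:, 1, 1], n=2, d=2, var=0.5)
print(c)  # approximately [[0, 0.5], [0, 0]]
```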

3.3 Fast Branching Case: \(\lambda _p > 2\mu \)

In order to describe the limit, we introduce an \(\mathbb {R}^d\)-valued process \(\left\{ H_t \right\} _{t\ge 0}\) by

The following two facts have been proved in [1, Propositions 3.9,3.10].

Proposition 6

\(H\) is a martingale with respect to the filtration of the OU branching system starting from \(x\in \mathbb {R}^d\). Moreover for \(\lambda _p>2\mu \), we have \(\sup _t \mathbb {E}{\left\| H_t \right\| _{ } ^{ 2 }} <+\infty \), therefore there exists \(H_\infty := \lim _{t\rightarrow +\infty } H_t\) (a.s. limit) and \(H_\infty \in L_2\). When the OU branching system starts from \(0\), then martingales \(V_t\) and \(H_t\) are orthogonal.

It is worthwhile to note that the distribution of \(H_\infty \) depends on the starting conditions.

Proposition 7

Let \(\left\{ X_t \right\} _{t\ge 0}\) and \(\{\tilde{X}_t\}_{t\ge 0}\) be two OU branching processes, the first one starting from \(0\) and the second one from \(x\). Let us denote the limit of the corresponding martingales by \(H_\infty ,\tilde{H}_\infty \), respectively. Then

where \(V_\infty \) is the limit given by (8) for the system \(X\).

Since \(H_\infty \) is \(\mathbb {R}^d\)-valued, we denote its coordinates by \(H^i_\infty \). Let \(f\in \mathcal {P}^{}(\mathbb {R}^{nd})\). We define

where we adopted the convention that \(x_{j,l}\) is the \(l\)th coordinate of the \(j\)th variable. By \(L_3(f)\), we will denote \(\tilde{L}_3(f)\) conditioned on \(Ext^c\).

Theorem 8

Let \(\left\{ X_t \right\} _{t\ge 0}\) be the OU branching system starting from \(x\in \mathbb {R}^d\). Let us assume that \(f\in \mathcal {P}^{}(\mathbb {R}^{nd})\) is a canonical kernel and \(\lambda _p>2\mu \). Then conditionally on the set of non-extinction \(Ext^c\) there is the convergence

where \(G_1\sim \mathcal {N}(0, 1/(2p-1))\) and \((W,L_3(f)), G_1\) are independent. Moreover

3.4 Remarks on the CLT for \(U\)-Statistics of i.i.d. Random Variables

For comparison purposes, we will now briefly recall known results on the CLT for \(U\)-statistics of independent random variables. \(U\)-statistics were introduced in the 1940s in the context of unbiased estimation by Halmos [19] and Hoeffding, who obtained the CLT for non-degenerate (degenerate of order 0, see Sect. 5.2) kernels [20]. The full description of the CLT was obtained in [15, 24] (see also the article [18], where the CLT is proven for a related class of \(V\)-statistics). As in our case, the asymptotic behavior of \(U\)-statistics based on a function \(f :\mathcal {X}^n\rightarrow \mathbb {R}\) and an i.i.d. \(\mathcal {X}\)-valued sequence \(X_1,X_2,\ldots \) is governed by the order of degeneracy of the function \(f\) (see Sect. 5.2) with respect to the law of \(X_1\) (call it \(P\)). The case of general \(f\) can be reduced to the canonical one, for which (for \(f\in L_2(P^{\otimes n})\)) one has the weak convergence

\[ N^{-n/2}\, U^n_N(f) \rightarrow ^d J_n(f), \]

where \(J_n\) is the \(n\)-fold stochastic integral with respect to the so-called isonormal process on \(\mathcal {X}\), i.e., the stochastic Gaussian measure with intensity \(P\).
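A classical instance of this convergence: for i.i.d. standard Gaussian \(X_i\) and the canonical kernel \(f(x,y)=xy\) (with \(U^2_N\) the sum over ordered pairs of distinct indices), one has \(N^{-1}U_N^2(f) = (N^{-1/2}\sum _i X_i)^2 - N^{-1}\sum _i X_i^2 \rightarrow ^d Z^2-1\), where \(Z\sim \mathcal {N}(0,1)\); here \(Z^2-1\) is the double Wiener–Itô integral \(J_2(f)\), with mean \(0\) and variance \(2\). A Monte Carlo sketch with NumPy (the helper name and sample sizes are arbitrary choices):

```python
import numpy as np


def normalized_u_xy(samples):
    """N^{-1} U_N^2(f) for f(x, y) = x * y, row by row, using
    sum over i != j of x_i x_j = (sum_i x_i)^2 - sum_i x_i^2."""
    n_obs = samples.shape[1]
    s = samples.sum(axis=1)
    q = (samples ** 2).sum(axis=1)
    return (s ** 2 - q) / n_obs


rng = np.random.default_rng(0)
stats = normalized_u_xy(rng.standard_normal((20_000, 200)))  # 20000 replications, N = 200
# The limit Z^2 - 1 has mean 0 and variance 2 (for finite N the exact variance is 2 (N - 1) / N).
print(stats.mean(), stats.var())
```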

In the small branching rate case, the behavior of \(U\)-statistics resembles the classical one, as the limit is a sum of multiple stochastic integrals of different orders. In the remaining two cases, the behavior differs substantially. This can be regarded as a result of the lack of independence: although asymptotically the particles’ positions become less and less dependent, on short timescales the offspring of the same particle stay close to one another.

Let us finally mention some results for \(U\)-statistics in dependent situations, which have been obtained in recent years. In [6], the authors analyzed the behavior of \(U\)-statistics of stationary absolutely regular sequences and obtained the CLT in the non-degenerate case (with Gaussian limit). In [5], the authors considered \(\alpha \)- and \(\varphi \)-mixing sequences and obtained a general CLT for canonical kernels. Interesting results for long-range dependent sequences have also been obtained in [8]. More recent works (already mentioned in the introduction) are [9–11, 23], where the authors consider \(U\)-statistics of interacting particle systems.

4 The Case of \(n=1\)

In the special case of \(n=1\), the results presented in the previous section were proven in [1]. Although this case obviously follows from the results for general \(n\), it is actually the starting point in the proof of the general result (as in the case of \(U\)-statistics of i.i.d. random variables). Therefore, for the reader’s convenience, we will now restate this case in the simpler language of [1], not involving multiple stochastic integrals.

We start with the law of large numbers

Theorem 9

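Let \(\left\{ X_t \right\} _{t\ge 0}\) be the OU branching system starting from \(x\in \mathbb {R}^d\). Let us assume that \(f \in \mathcal {P}^{}(\mathbb {R}^d)\). Then

\[ e^{-\lambda _p t}\left\langle X_t, f \right\rangle \rightarrow \left\langle f, \varphi \right\rangle V_\infty \quad \text{in probability}, \]

or equivalently, on the set of non-extinction \(Ext^c\), we have

\[ |X_t|^{-1}\left\langle X_t, f \right\rangle \rightarrow \left\langle f, \varphi \right\rangle \quad \text{in probability}. \]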

Moreover, if \(f\) is bounded then the almost sure convergence holds.

4.1 Small Branching Rate: \(\lambda _p < 2\mu \)

We denote \(\tilde{f}(x):=f(x)- \left\langle f, \varphi \right\rangle \) and

Let us also recall (8) and that \(W\) is \(V_\infty \) conditioned on \(Ext^c\). In this case, the behavior of \(X\) is given by the following theorem.

Theorem 10

Let \(\left\{ X_t \right\} _{t\ge 0}\) be the OU branching system starting from \(x\in \mathbb {R}^d\). Let us assume that \(\lambda _p<2\mu \) and \(f\in \mathcal {P}^{}(\mathbb {R}^d)\). Then \(\sigma _f^2 <+\infty \) and conditionally on the set of non-extinction \(Ext^c\), there is the convergence

where \(G_1\sim \mathcal {N}(0, 1/(2p-1)), G_2\sim \mathcal {N}(0,\sigma _f^2)\) and \(W, G_1, G_2\) are independent random variables.

4.2 Critical Branching Rate: \(\lambda _p = 2\mu \)

Recall the notation related to Hermite polynomials introduced in Sect. 3.2 and denote

(where the first equality follows from the form of the Gaussian density and its relation to Hermite polynomials, whereas the second one from the definition of \(P\)).

Note that the same symbol \(\sigma _f^2\) has already been used to denote the asymptotic variance in the small branching case. However, since these cases will always be treated separately, this should not lead to ambiguity.

Theorem 11

Let \(\left\{ X_t \right\} _{t\ge 0}\) be the OU branching system starting from \(x\in \mathbb {R}^d\). Let us assume that \(\lambda _p=2\mu \) and \(f\in \mathcal {P}^{}(\mathbb {R}^d)\). Then \(\sigma _f^2 <+\infty \) and conditionally on the set of non-extinction \(Ext^c\) there is the convergence

where \(G_1\sim \mathcal {N}(0, 1/(2p-1)), G_2\sim \mathcal {N}(0,\sigma _f^2)\) and \(W, G_1, G_2\) are independent random variables.

4.3 Fast Branching Rate: \(\lambda _p > 2\mu \)

In the following theorem, we use the notation introduced in Sect. 3.3.

Theorem 12

Let \(\left\{ X_t \right\} _{t\ge 0}\) be the OU branching system starting from \(x\in \mathbb {R}^d\). Let us assume that \(\lambda _p>2\mu \) and \(f\in \mathcal {P}^{}(\mathbb {R}^d)\). Then conditionally on the set of non-extinction \(Ext^c\), there is the convergence

where \(G\sim \mathcal {N}(0, 1/(2p-1)), (W,J), G\) are independent and \(J\) is \(H_\infty \) conditioned on \(Ext^c\). Moreover

5 Proofs of Main Results

5.1 Outline of the Proofs

We will now pass to the proofs of the results announced in Sect. 3. Their general structure is similar to the case of \(U\)-statistics of independent random variables, i.e., all the theorems will be proved first for linear combinations of tensor products and then via suitable approximations extended to the function space \(\mathcal {P}^{}(\mathbb {R}^{nd})\). Below we provide a brief outline of the proofs, common for all the cases considered in the paper.

1. Using the one-dimensional versions of the results, presented in Sect. 4, and the Cramér–Wold device, one proves convergence for functions \(f\) which are linear combinations of tensor products. This class is shown to be dense in \(\mathcal {P}^{}\).

2. Using algebraic properties of the covariance, one obtains explicit formulas for the limit, which are well defined for any function \(f\in \mathcal {P}^{}\). Further, one shows that they depend on \(f\) in a continuous way.

3. One obtains a bound, uniform in \(t\), on the distance between the laws of \(U^n_t(f)\) and \(U^n_t(g)\) in terms of the distance between \(f\) and \(g\) in \(\mathcal {P}^{}\). This is the most involved and technical step, as it relies on the analysis of moments of \(U\)-statistics. It turns out that the formulas for moments can be expressed in terms of auxiliary branching processes indexed by combinatorial structures, more specifically by labeled trees of a special type (introduced in Sect. 6.3). Having this representation, one can then obtain moment bounds via combinatorial arguments.

4. Combining the above three steps, one can easily conclude the proofs by standard metric-theoretic arguments. By step 3, a general \(U\)-statistic based on a function \(f\) can be approximated (uniformly in \(t\)) by \(U\)-statistics based on special functions \(f_n\), whose laws converge by step 1, as \(t\rightarrow \infty \), to some limiting measures \(\mu _n\). By step 2, when the approximation becomes finer and finer (\(n\rightarrow \infty \)), one has \(\mu _n \rightarrow \mu \) for some probability measure \(\mu \). Finally, it is easy to see that \(\mu \) is the limiting measure for the original \(U\)-statistic.
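The argument of step 4 is a three-term triangle inequality for the metric \(m\) of Sect. 2.1. Writing, for this sketch only, \(\mathcal {L}_t(g)\) for the law of the suitably normalized \(U\)-statistic with kernel \(g\), and with \(f_n\), \(\mu _n\), \(\mu \) as in step 4:

```latex
m\bigl(\mathcal{L}_t(f),\mu\bigr)
  \le \underbrace{m\bigl(\mathcal{L}_t(f),\mathcal{L}_t(f_n)\bigr)}_{\text{step 3, uniform in } t}
  + \underbrace{m\bigl(\mathcal{L}_t(f_n),\mu_n\bigr)}_{\text{step 1, } t\to\infty}
  + \underbrace{m\bigl(\mu_n,\mu\bigr)}_{\text{step 2, } n\to\infty} .
```

Given \(\varepsilon >0\), one first chooses \(n\) so that the first and the last term are at most \(\varepsilon \) for all \(t\), and then \(t\) large enough for the middle term to be at most \(\varepsilon \); hence \(\limsup _{t\rightarrow \infty } m(\mathcal {L}_t(f),\mu )\le 3\varepsilon \) for every \(\varepsilon >0\).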

The organization of the rest of the paper is as follows. First, we recall some basic facts about \(U\)- and \(V\)-statistics and Hoeffding projections, which we will need already at step 1. Then we present the proof of the law of large numbers and CLTs. In the latter proofs, we formulate and use the estimates related to step 3 without proving them. Only later in Sect. 6 do we introduce the necessary notation and combinatorial arguments which give those estimates.

We choose this way of presentation since it allows the readers to see the structure of the proofs without being distracted by rather heavy notation and quite lengthy technical arguments related to step 3.

From now on, we will often work conditionally on the set of non-extinction \(Ext^c\), which will not be explicitly mentioned in the proofs (it should, however, be clear from the context).

5.2 Basic Facts on \(U\)- and \(V\)-Statistics

We will now briefly recall one of the standard tools of the theory of \(U\)-statistics, which we will use in the sequel, namely the Hoeffding decomposition.

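Let us introduce, for \(I \subseteq \{1,\ldots ,n\}\), the Hoeffding projection of \(f:\mathbb {R}^{nd} \rightarrow \mathbb {R}\) corresponding to \(I\) as the function \(\varPi _I f :\mathbb {R}^{|I|d} \rightarrow \mathbb {R}\), given by the formula

\[ \varPi _I f\left( (x_i)_{i\in I}\right) = \int _{\mathbb {R}^{nd}} f(y_1,\ldots ,y_n) \prod _{i\in I}(\delta _{x_i}-\varphi )({\mathrm{d}}y_i)\prod _{i\notin I}\varphi ({\mathrm{d}}y_i). \]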

One can easily see that for \(|I| \ge 1\), \(\varPi _I f\) is a canonical kernel. Moreover \(\varPi _\emptyset f = \int _{\mathbb {R}^{nd}} f(x_1,\ldots ,x_n)\prod _{i=1}^n\varphi ({\mathrm{d}}x_i)\).
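For \(n = 2\) and \(d = 1\), the projection with \(I=\{1,2\}\) expands to \(\varPi _{\{1,2\}}f(x,y) = f(x,y) - \int f(x,v)\varphi ({\mathrm{d}}v) - \int f(u,y)\varphi ({\mathrm{d}}u) + \int \int f\,{\mathrm{d}}\varphi \,{\mathrm{d}}\varphi \). The NumPy sketch below (hypothetical helper name) evaluates this for \(\varphi = \mathcal {N}(0, v)\) by Gauss–Hermite quadrature, which is exact for polynomial kernels and approximate otherwise. For example, \(f(x,y) = (x+1)(y+1) = xy + x + y + 1\) has \(\varPi _{\{1,2\}} f(x,y) = xy\).

```python
import numpy as np
from numpy.polynomial.hermite_e import hermegauss


def hoeffding_projection_2(f, var, deg=40):
    """Return the function (x, y) -> Pi_{1,2} f(x, y) for a kernel f of two real variables,
    with phi = N(0, var):
        Pi_{1,2} f(x, y) = f(x, y) - int f(x, v) phi(dv) - int f(u, y) phi(du) + int int f d(phi x phi).
    The integrals are approximated by Gauss-Hermite quadrature (exact for polynomial kernels
    of degree < 2 * deg in each variable). f must accept broadcastable NumPy arrays.
    """
    z, w = hermegauss(deg)
    w = w / np.sqrt(2.0 * np.pi)
    nodes = np.sqrt(var) * z
    total = np.sum(w[:, None] * w[None, :] * f(nodes[:, None], nodes[None, :]))

    def proj(x, y):
        x = np.asarray(x, dtype=float)[..., None]
        y = np.asarray(y, dtype=float)[..., None]
        int_over_y = np.sum(w * f(x, nodes), axis=-1)  # int f(x, v) phi(dv)
        int_over_x = np.sum(w * f(nodes, y), axis=-1)  # int f(u, y) phi(du)
        return f(x[..., 0], y[..., 0]) - int_over_y - int_over_x + total

    return proj


proj = hoeffding_projection_2(lambda x, y: (x + 1.0) * (y + 1.0), var=0.5)
print(proj(2.0, 3.0))  # 6.0 up to rounding, since Pi_{1,2} f(x, y) = x * y here
```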

Note that if \(f\) is symmetric (i.e., invariant with respect to permutations of arguments), \(\varPi _I f\) depends only on the cardinality of \(I\). In this case, we speak about the \(k\)th Hoeffding projection (\(k = 0,\ldots ,n\)), given by

\[ \varPi _k f(x_1,\ldots ,x_k) := \varPi _{\{1,\ldots ,k\}} f(x_1,\ldots ,x_k). \]

A symmetric kernel in \(n\) variables is called degenerate of order \(k-1\) (\(1 \le k \le n\)) iff \(k = \min \{i> 0 :\varPi _i f \not \equiv 0 \}\). The order of degeneracy is responsible for the normalization and the form of the limit in the CLT for \(U\)-statistics, e.g., if the kernel is non-degenerate, i.e., \(\varPi _1 f \not \equiv 0\), then the corresponding \(U\)-statistic of an i.i.d. sequence behaves like a sum of independent random variables and converges to a Gaussian limit. The same phenomenon will be present also in our situation (see Sect. 7).

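In the particular case \(k = n\), the definition of the Hoeffding projection reads as

\[ \varPi _n f(x_1,\ldots ,x_n) = \int _{\mathbb {R}^{nd}} f(y_1,\ldots ,y_n)\prod _{i=1}^n(\delta _{x_i}-\varphi )({\mathrm{d}}y_i). \]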

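One easily checks that

\[ f(x_1,\ldots ,x_n) = \sum _{I\subseteq \{1,\ldots ,n\}} \varPi _I f\left( (x_i)_{i\in I}\right) , \]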

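which gives us the aforementioned Hoeffding decomposition of \(U\)-statistics (valid when \(|X_t|\ge n\))

\[ U^n_t(f) = \sum _{I\subseteq \{1,\ldots ,n\}} \frac{(|X_t|-|I|)!}{(|X_t|-n)!}\, U^{|I|}_t(\varPi _I f), \]

which in the case of symmetric kernels simplifies to

\[ U^n_t(f) = \sum _{k=0}^{n} \binom{n}{k}\frac{(|X_t|-k)!}{(|X_t|-n)!}\, U^{k}_t(\varPi _k f), \]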

where we use the convention \(U_t^0(a) = a\) for any constant \(a\).

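For technical reasons, we will also consider the notion of a \(V\)-statistic, which is closely related to \(U\)-statistics and is defined as

\[ V^n_t(f) := \sum _{i_1,\ldots ,i_n=1}^{|X_t|} f\left( X_t(i_1),\ldots ,X_t(i_n)\right) . \tag{25} \]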

The corresponding Hoeffding decomposition is

\[ V^n_t(f) = \sum _{I\subseteq \{1,\ldots ,n\}} |X_t|^{\,n-|I|}\, V^{|I|}_t(\varPi _I f), \]

where again we set \(V_t^0(a) = a\) for any constant \(a\).

In the proofs of our results, we will use a standard observation that a \(U\)-statistic can be written as a sum of \(V\)-statistics. More precisely, let \(\mathcal {J}\) be the collection of partitions of \(\{1,\ldots ,n\}\), i.e., of all sets \(J = \{J_1,\ldots ,J_k\}\), where the \(J_i\)’s are non-empty, pairwise disjoint and \(\bigcup _i J_i = \{1,\ldots ,n\}\). For \(J\) as above, let \(f_J\) be a function of \(|J|\) variables \(x_1,\ldots ,x_{|J|}\), obtained by substituting \(x_i\) for all the arguments of \(f\) corresponding to the set \(J_i\), e.g., for \(n = 3\) and \(J = \{\{1,2\},\{3\}\}\), \(f_J(x_1,x_2) = f(x_1,x_1,x_2)\). An easy application of the inclusion–exclusion formula yields that

\[ U^n_t(f) = \sum _{J\in \mathcal {J}} a_J\, V^{|J|}_t(f_J), \]

where \(a_J\) are integers depending only on the partition \(J\). Moreover, one can easily check that if \(J = \{ \left\{ 1 \right\} ,\ldots ,\left\{ n \right\} \}\), then \(a_J = 1\), whereas if \(J\) consists of sets with at most two elements, then \(a_J = (-1)^{k}\), where \(k\) is the number of two-element sets in \(J\). Note also that partitions consisting only of one- and two-element sets can be naturally identified with Feynman diagrams (defined in Sect. 2.1).
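The inclusion–exclusion identity can be checked numerically on a small example. The sketch below assumes that the \(U\)-statistic sums over ordered tuples of pairwise distinct particles and that the \(V\)-statistic sums over all tuples. It also uses the closed form \(a_J = \prod _r (-1)^{|J_r|-1}(|J_r|-1)!\), i.e., the Möbius function of the partition lattice. This formula is a standard combinatorial fact that is not stated in the text, but it agrees with the two special cases mentioned above. Particle positions are replaced by plain integers, and all helper names are hypothetical.

```python
import itertools
import math


def set_partitions(items):
    """Yield every set partition of the list `items` as a list of blocks."""
    if not items:
        yield []
        return
    first, rest = items[0], items[1:]
    for part in set_partitions(rest):
        for i in range(len(part)):                 # add `first` to an existing block
            yield part[:i] + [[first] + part[i]] + part[i + 1:]
        yield [[first]] + part                     # or open a new singleton block


def a_coeff(partition):
    """Assumed closed form of a_J: Moebius function of the partition lattice."""
    return math.prod((-1) ** (len(b) - 1) * math.factorial(len(b) - 1)
                     for b in partition)


def collapse(f, partition, n):
    """f_J: all arguments of f indexed by the block J_r receive the variable y_r."""
    def f_J(*ys):
        args = [None] * n
        for r, block in enumerate(partition):
            for a in block:
                args[a] = ys[r]
        return f(*args)
    return f_J


def v_stat(g, k, pts):
    """Sum of g over all k-tuples of particles (repetitions allowed)."""
    return sum(g(*tup) for tup in itertools.product(pts, repeat=k))


def u_stat(f, n, pts):
    """Sum of f over ordered n-tuples of pairwise distinct particles."""
    return sum(f(*tup) for tup in itertools.permutations(pts, n))


def u_via_v(f, n, pts):
    """Right-hand side of the inclusion-exclusion formula: sum_J a_J V(f_J)."""
    return sum(a_coeff(J) * v_stat(collapse(f, J, n), len(J), pts)
               for J in set_partitions(list(range(n))))
```

For instance, with `f = lambda x, y, z: x * y**2 + 3 * z - x * z` and six particles, `u_stat(f, 3, pts)` and `u_via_v(f, 3, pts)` agree exactly. The identity does not require \(f\) to be symmetric.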

5.3 Proof of the Law of Large Numbers

Proof of Theorem 2

Consider the random probability measure \(\mu _t = |X_t|^{-1} X_t\) (recall that formally we identify \(X_t\) with the corresponding counting measure). By Theorem 9, with probability one (conditionally on \(Ext^c\)), \(\mu _t\) converges weakly to \(\varphi \). Thus, by Theorem 3.2 in [3], \(\mu _t^{\otimes n}\) converges weakly to \(\varphi ^{\otimes n}\).

Let \(f\) be bounded and continuous. Since \(\langle f,\mu _t^{\otimes n}\rangle = |X_t|^{-n}V_t^n(f)\), we obtain the almost sure convergence \(|X_t|^{-n}V_t^n(f) \rightarrow \langle f,\varphi ^{\otimes n} \rangle \). Now it is enough to note that the number of terms in the sum (25) defining \(V_t^n(f)\) that are indexed by multi-indices with at least two equal coordinates (i.e., the terms absent from the \(U\)-statistic) is of order \(|X_t|^{n-1}\), and to use the facts that \(f\) is bounded and \(|X_t| \rightarrow \infty \) a.s. on \(Ext^c\).
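The counting step can be illustrated directly. The multi-indices in \(\{1,\ldots ,N\}^n\) with at least two equal entries number \(N^n - N(N-1)\cdots (N-n+1) = \binom{n}{2}N^{n-1} + O(N^{n-2})\). For bounded \(f\), the difference between \(V_t^n(f)\) and the \(U\)-statistic is therefore at most \(\Vert f\Vert _\infty \) times this count, which is negligible after division by \(|X_t|^n\). A minimal sketch (the function names are ours):

```python
from math import comb, perm


def repeated_index_count(N, n):
    """Number of multi-indices in {1,...,N}^n with at least two equal entries."""
    return N**n - perm(N, n)          # all tuples minus tuples of distinct entries


def leading_term(N, n):
    """First-order approximation binom(n, 2) * N^(n-1) of the count above."""
    return comb(n, 2) * N ** (n - 1)
```

For \(n = 3\), the count is exactly \(3N^2 - 2N\).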

We note that in the proofs below we will use this fact only for \(f\in \mathcal {C}(\mathbb {R}^{nd})\), the case we have just proven. Convergence in probability for \(f\in \mathcal {P}^{}(\mathbb {R}^{nd})\) follows directly from the CLT presented in Sect. 7. \(\square \)

5.4 Approximation

Before we proceed to the proofs of CLTs, we will demonstrate the simple fact that any function in \(\mathcal {P}^{}(\mathbb {R}^{nd})\) can be approximated by tensor functions.

Lemma 13

Let \(A := \left\{ \otimes _{i=1}^n g_i : g_i \text{ bounded and continuous} \right\} \) and let \(f\in \mathcal {P}^{}(\mathbb {R}^{nd})\) be a canonical kernel. For every \(m > 0\) there exists a sequence \(\left\{ f_k \right\} \subset \mathrm{span}(A)\) such that each \(f_k\) is canonical and

Proof

First, we prove that \(\mathrm{span}(A)\) is dense in \(\mathcal {P}^{}(\mathbb {R}^{nd})\). Note that, given \(f\in \mathcal {P}^{}(\mathbb {R}^{nd})\), it suffices to approximate it uniformly on a sufficiently large box \([-M,M]^{nd}\), \(M>0\). The box is compact, so such an approximation exists by the Stone–Weierstrass theorem.

Now, let \(f\in \mathcal {P}^{}(\mathbb {R}^{nd})\). We may find a sequence \(\left\{ h_k \right\} \subset \mathrm{span}(A)\) such that \(h_k(m\cdot ) \rightarrow f(m\cdot )\) in \(\mathcal {P}^{}\). Recall the Hoeffding projection (24) and denote \(I=\left\{ 1,2,\ldots ,n \right\} \). A direct calculation (using the exponential integrability of Gaussian variables) shows that the sequence \(f_k := \varPi _{I} h_k\) satisfies the conditions of the lemma. \(\square \)
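The projection \(\varPi _I h_k\) stays in \(\mathrm{span}(A)\) because, for \(I = \{1,\ldots ,n\}\), the Hoeffding projection maps a tensor \(\otimes _{i} g_i\) to the tensor \(\otimes _i (g_i - \langle g_i,\varphi \rangle )\) of centered factors. The sketch below checks this on a three-point stand-in for \(\varphi \). It assumes the standard product form \(\varPi _I = \prod _{i\in I} (\mathrm{Id} - P_i)\), where \(P_i\) integrates out the \(i\)th coordinate against \(\varphi \), and it also checks that the result is canonical. The names are illustrative.

```python
import numpy as np


def full_projection(F, w):
    """Pi_I f for I = {1,...,n}: apply (Id - P_i) in every coordinate.

    F[a_1, ..., a_n] = f(s_{a_1}, ..., s_{a_n}); P_i integrates coordinate i
    against the weights w and broadcasts the result back along that axis.
    """
    G = np.array(F, dtype=float)
    for axis in range(G.ndim):
        mean = np.tensordot(G, w, axes=([axis], [0]))   # integrate out `axis`
        G = G - np.expand_dims(mean, axis)               # subtract it back in place
    return G
```

Adding a constant to the kernel leaves the projection unchanged, and integrating the projection against \(\varphi \) in any single coordinate gives zero.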

5.5 Small Branching rate: Proof of Theorem 3

Let us first formulate two crucial facts, whose rather technical proofs we defer to Sect. 6.4. The first one corresponds to Step 2 in the outline of the proof presented in Sect. 5.1. Recall the definition of \(L_1\) given in (14).

Proposition 14

For any canonical \(f \in \mathcal {P}^{}(\mathbb {R}^{nd})\) we have \(\mathbb {E}{L_1(f)}^2 <+\infty \). Moreover, \(L_1\) is a continuous map \(L_1:\text {Can} \rightarrow L_2(\varOmega , \mathcal {F}, \mathbb {P})\), where

and \(\text {Can}\) is endowed with the norm \(\left\| \cdot \right\| _{ \mathcal {P}^{} } ^{ }\).

The second fact allows for an approximation, uniform in \(t\), of general canonical \(U\)-statistics by those whose kernels are sums of tensor products. This corresponds to Step 3 of the outline. Recall the distance \(m\) given by (5).

Proposition 15

Let \(\left\{ X_t \right\} _{t\ge 0}\) be the OU branching system starting from \(x\in \mathbb {R}^{d}\) and \(\lambda _p<2\mu \). For any \(n \ge 2 \) there exists a function \(l_n:\mathbb {R}_+ \rightarrow \mathbb {R}_+\) satisfying \(\lim _{s\searrow 0} l_n(s) = 0\) and such that for any \(f_1, f_2 \in \text {Can}\) and any \(t > 1\) we have

where \(\nu _1 \sim |X_t|^{-n/2} U_t^n(f_1), \nu _2 \sim |X_t|^{-n/2} U_t^n(f_2)\) (the \(U\)-statistics are considered here conditionally on \(Ext^c\)).

We can now proceed with the proof of Theorem 3.

Proof of Theorem 3

For simplicity, we concentrate on the third coordinate. The joint convergence can be easily obtained by a straightforward modification of the arguments below (using the joint convergence in Theorem 10 for \(n=1\)). In the whole proof, we work conditionally on the set of non-extinction \(Ext^c\).

Let us consider bounded continuous functions \(f_i^l:\mathbb {R}^{d}\rightarrow \mathbb {R}\), \(l=1,\ldots ,m\), \(i=1,\ldots ,n\), which are centered with respect to \(\varphi \), and set \({f}_l:=\otimes _{i=1}^n{f_i^l} \) and \(f := \sum _{l=1}^m f_l\). In this case the \(U\)-statistic (2) can be written as

Let \(\gamma \) be a Feynman diagram labeled by \(\left\{ 1,2,\ldots ,n \right\} \), with edges \(E_\gamma \) and unpaired vertices \(A_\gamma \). Let

In this case, decomposition (27) takes the form

where the sum runs over all Feynman diagrams labeled by \(\left\{ 1, 2, \ldots , n \right\} \) (note that when \(\gamma \) has no edges, \(S_t(\gamma ) = V_t^n(f)\)), and the remainder \(R_t\) is the sum of \(V\)-statistics corresponding to partitions of \(\{1,\ldots ,n\}\) containing at least one set with more than two elements. First, we prove that \(|X_t|^{-(n/2)} R_t \rightarrow 0\).
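Feynman diagrams on \(\{1,\ldots ,n\}\) (sets of pairwise disjoint edges, with the remaining vertices forming \(A_\gamma \)) correspond exactly to the partitions into one- and two-element blocks that enter the main sum above. A short recursive enumeration, with hypothetical names, makes the index set of this sum concrete: each vertex either stays unpaired or is joined to one of the later vertices. The number of diagrams with \(k\) edges is \(n!/(k!\,2^k\,(n-2k)!)\), and the total counts for \(n = 1,\ldots ,5\) are \(1, 2, 4, 10, 26\).

```python
def feynman_diagrams(vertices):
    """Yield (edges, unpaired) for every Feynman diagram on `vertices`.

    `edges` is a list of disjoint pairs (E_gamma); `unpaired` lists the
    vertices not covered by any edge (A_gamma).
    """
    if not vertices:
        yield [], []
        return
    v, rest = vertices[0], vertices[1:]
    # Case 1: v is an unpaired vertex.
    for edges, unpaired in feynman_diagrams(rest):
        yield edges, [v] + unpaired
    # Case 2: v is paired with some later vertex u.
    for i, u in enumerate(rest):
        others = rest[:i] + rest[i + 1:]
        for edges, unpaired in feynman_diagrams(others):
            yield [(v, u)] + edges, unpaired
```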

To this end, let us consider a partition \(J = \{A_r\}_{1\le r\le m_1}\cup \{B_r\}_{1\le r\le m_2}\cup \{C_r\}_{1\le r\le m_3}\) of the set \(\left\{ 1,2,\ldots , n \right\} \), in which \(|A_r|\ge 3, |B_r|=2\) and \(|C_r|=1\). Assume that \(m_1 \ge 1\) and recall the definition of \(f_J\) used in (27). For any \(l=1,\ldots ,m\), we have

By the first part of Theorem 9, the first product on the right-hand side converges almost surely to \(0\), and the second one converges to a finite limit. By Theorem 10, each factor of the third product converges in law to a Gaussian random variable. We conclude that \(|X_t|^{-(n/2)} V_t^{|J|}(f^l_{J}) \rightarrow ^d 0\). Thus, only the first summand of (28) is relevant for the asymptotics of \(|X_t|^{-(n/2)}U_t^n(f)\).
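For a single tensor \(f^l\), the factorization behind this argument can be sketched as follows; this sketch is consistent with the surrounding text but is not taken from it. Write \(g_K := \prod _{i\in K} f_i^l\) for a block \(K\) of \(J\). Then \(f_J^l\) is a tensor of the functions \(g_K\), and the normalization \(|X_t|^{-n/2}\) splits over the blocks because \(n = \sum _r |A_r| + 2m_2 + m_3\):

```latex
\begin{aligned}
V_t^{|J|}(f^l_J) &= \prod_{K \in J} \langle X_t, g_K \rangle ,
  \qquad n = \sum_{r=1}^{m_1} |A_r| + 2 m_2 + m_3 , \\
|X_t|^{-n/2}\, V_t^{|J|}(f^l_J)
  &= \prod_{r=1}^{m_1} |X_t|^{1-|A_r|/2}\,
       \frac{\langle X_t, g_{A_r} \rangle}{|X_t|}
     \;\prod_{r=1}^{m_2} \frac{\langle X_t, g_{B_r} \rangle}{|X_t|}
     \;\prod_{r=1}^{m_3} \frac{\langle X_t, g_{C_r} \rangle}{|X_t|^{1/2}} .
\end{aligned}
```

Since \(|A_r|\ge 3\), each factor \(|X_t|^{1-|A_r|/2}\) tends to \(0\), while \(\langle X_t, g\rangle /|X_t| \rightarrow \langle g,\varphi \rangle \) a.s. by Theorem 9. Consequently, \(m_1 \ge 1\) forces the whole expression to vanish.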

Consider now

Let us denote \(Z_{f_j^l}(t):=|X_t|^{-1/2}\sum _{i=1}^{|X_t|}{f}_{j}^l(X_t(i))\). By Theorem 10 and the Cramér–Wold device, we get that

where \((G_{f_j^l})_{1\le j \le n, 1\le l\le m}\) is a centered Gaussian vector with the covariances

Let \(D := \mathbb {R}_+\times \mathbb {R}^d\) and note that

(recall that \(I_1\) is the Gaussian stochastic integral with respect to the random Gaussian measure with intensity \(\mu _1\)). Thus, without loss of generality, we can assume that \(G_{f_j^l} = I_1(H(f_j^{l}))\) for all \(l,j\).

On the other hand, by Theorem 9 one easily obtains

We conclude that

Thus, by decomposition (28) and the considerations above, we obtain

We will now show that \(L\) is equal to \(L_1(f)\) given by (14). By the linearity of \(L_1\), it is enough to consider the case \(m=1\). We therefore drop the superscript and write \(f_i\) instead of \(f_i^l\). We recall (12) and denote \(P(f_i, f_j):= \int _D H(f_i \otimes f_j)(z,z) \mu _2({\mathrm{d}}z)\), where \(D = \mathbb {R}_+\times \mathbb {R}^d\). By (29) and the definition of \(\mu _2\) given in (13),

We now adopt the notation that \(\eta \subset \gamma \) when \(E_\eta \subset E_\gamma \) and write

Let us notice that the inner sum can be written as

where \(\sigma \) runs over all Feynman diagrams on the set of vertices \(A_\eta \). Thus, by [21, Theorem 3.4 and Theorem 7.26] this equals \( I_{|A_\eta |}\left( H(\otimes _{i\in A_\eta } f_i ) \right) \) and in consequence
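In the simplest one-dimensional situation, where all \(f_i\) coincide and each edge contributes a covariance equal to \(1\), identities of this type reduce to the classical expansion of the probabilists' Hermite polynomials. Here \(\mathrm{He}_n(x) = \sum _{\sigma } (-1)^{|E_\sigma |} x^{|A_\sigma |}\), where \(\sigma \) runs over the Feynman diagrams on \(n\) vertices, and \(\mathrm{He}_n(G) = I_n(h^{\otimes n})\) when \(G = I_1(h)\) with \(\Vert h\Vert = 1\). The sketch below checks this expansion with NumPy's `numpy.polynomial.hermite_e` module. It illustrates the diagram combinatorics only and is not a substitute for [21]. The helper names are ours.

```python
import math

import numpy as np
from numpy.polynomial import hermite_e as He


def diagram_count(n, k):
    """Number of Feynman diagrams on n vertices with exactly k edges."""
    return math.factorial(n) // (math.factorial(k) * 2**k * math.factorial(n - 2 * k))


def signed_diagram_polynomial(n):
    """Power-series coefficients of sum over diagrams of (-1)^{|E|} x^{|A|}."""
    c = np.zeros(n + 1)
    for k in range(n // 2 + 1):
        c[n - 2 * k] = (-1) ** k * diagram_count(n, k)
    return c


def hermite_e_power_coeffs(n):
    """Power-series coefficients (low to high) of He_n, padded to length n + 1."""
    unit = np.zeros(n + 1)
    unit[n] = 1.0
    p = He.herme2poly(unit)
    return np.pad(p, (0, n + 1 - len(p)))
```

For example, \(\mathrm{He}_4(x) = x^4 - 6x^2 + 3\): six diagrams have one edge and three have two edges.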

It is easy to see that in the case \(f = \otimes _{i=1}^n f_i\) the expression above coincides with (14). Thus, for each \(f\) which is a finite sum of tensors, we have \(L = L_1(f)\) and consequently \(|X_t|^{-n/2}U_t^n(f) \rightarrow ^d L_1(f)\).

Let us now consider a general canonical function \(f \in \mathcal {P}^{}\). We put \(h(x) := f(2n x)\). By Lemma 13 we may find a sequence of canonical functions \(\left\{ f_k \right\} _k \subset \mathrm{span}(A)\) such that \(f_k(2n\cdot ) \rightarrow h\) in \(\mathcal {P}^{}\). Now by Proposition 15, we may approximate \(|X_t|^{-(n/2)} U^n_t(f)\) with \(|X_t|^{-(n/2)} U^n_t(f_k)\) uniformly in \(t>1\). This together with Proposition 14 and standard metric-theoretic considerations concludes the proof.\(\square \)

5.6 Critical Branching Rate: Proof of Theorem 4

For the critical case, we will need the following counterpart of Proposition 15, which will be proved in Sect. 6.5.

Proposition 16

Let \(\left\{ X_t \right\} _{t\ge 0}\) be the OU branching system starting from \(x\in \mathbb {R}^{d}\) and \(\lambda _p=2\mu \). For any \(n \ge 2 \) there exists a function \(l_n:\mathbb {R}_+ \rightarrow \mathbb {R}_+\) satisfying \(\lim _{s\rightarrow 0} l_n(s) = 0\) and such that for any canonical \(f_1, f_2 \in \mathcal {P}^{}(\mathbb {R}^{nd})\) and any \(t > 1\) we have

where \(\nu _1 \sim (t|X_t|)^{-n/2} U_t^n(f_1), \nu _2 \sim (t|X_t|)^{-n/2} U_t^n(f_2)\) (the \(U\)-statistics are considered here conditionally on \(Ext^c\)).

Proof of Theorem 4

As in the proof of Theorem 3 (small branching rate), we focus on the third coordinate.

The proof is slightly easier than that of Theorem 3 because, owing to the larger normalization, \(U\)-statistics and \(V\)-statistics are asymptotically equivalent. Indeed, let us consider bounded continuous functions \(f_1^l, f_2^l, \ldots , f_n^l\), \(l = 1,\ldots ,m\), which are centered with respect to \(\varphi \), and denote \(f:=\sum _{l=1}^m \otimes _{i=1}^n f_i^l \). By (28) we have

simply because the Feynman diagram without edges corresponds to \(V^n_t(f)\). Analogously to the proof of Theorem 3, we have \((t|X_t|)^{-n/2}R_t\rightarrow ^d 0\). Let us now fix a diagram \(\gamma \) with at least one edge. Without loss of generality, we assume that \(E_\gamma = \left\{ (1,2),(3,4),\ldots ,(2k-1,2k) \right\} \) for some \(k\ge 1\). We have

By Theorem 11, each of the factors in the second product converges in distribution, whereas by the first part of Theorem 2 each factor in the first product converges almost surely to \(0\). Consequently, \((t|X_t|)^{-n/2} S(\gamma )\) converges in probability to 0, which shows that \( (t|X_t|)^{-n/2}U^n_t(f)\) and \((t|X_t|)^{-n/2}V^n_t(f)\) are asymptotically equivalent.

Let us denote \(Z_{f_j^l}(t):=(t|X_t|)^{-1/2}\sum _{i=1}^{|X_t|}{f}_{j}^l(X_t(i))\). By Theorem 11 and the Cramér–Wold device, we get that

where \((G_{f_i^l})_{i\le n,l\le m}\) is centered Gaussian with the covariances given by (16). Thus,

where \(L_2\) is defined by (17).

Now we pass to general canonical functions \(f\in \mathcal {P}^{}\). By Lemma 13, we can approximate \(f\) by canonical \(f_k\) from \(\mathrm{span}(A)\) in such a way that \(\Vert f - f_k\Vert _{\mathcal {P}^{}} \le \Vert f(2n\cdot ) - f_k(2n\cdot )\Vert _{\mathcal {P}^{}} \rightarrow 0\). Thus, by Proposition 16, the law of \((t|X_t|)^{-n/2}U_t^n(f_k)\) converges to that of \((t|X_t|)^{-n/2}U_t^n(f)\) as \(k \rightarrow \infty \), uniformly in \(t>1\). Moreover, since \(L_2\) is bounded on \(L_2(\mathbb {R}^{nd},\varphi ^{\otimes n})\) and there exists \(C<\infty \) such that \(\Vert \cdot \Vert _{L_2(\mathbb {R}^{nd},\varphi ^{\otimes n})} \le C\Vert \cdot \Vert _{\mathcal {P}^{}}\) (which follows easily from the exponential integrability of Gaussian variables), we obtain \(L_2(f_k) \rightarrow L_2(f)\) in the space \(L_2(\varOmega ,\mathcal {F},\mathbb {P})\). The proof may now be concluded by standard metric-theoretic arguments.\(\square \)

5.7 Large Branching Rate: Proof of Theorem 8

As in the previous two cases, we start with a fact that allows us to approximate general \(U\)-statistics by those with simpler kernels. It differs slightly from the corresponding statements in the small and critical branching cases, owing to the different type of convergence and the deterministic normalization available for large branching. The proof is deferred to Sect. 6.6.

Proposition 17

Let \(\left\{ X_t \right\} _{t\ge 0}\) be the OU branching system with \(\lambda _p>2\mu \). There exist constants \(C, c>0\) such that for any canonical \(f \in \mathcal {P}^{}(\mathbb {R}^{nd})\) we have

Proof of Theorem 8

Again we concentrate on the third coordinate. The joint convergence can be easily obtained by a modification of the arguments below (using the joint convergence in Theorem 12 for \(n=1\)).

First, note that \(U\)-statistics and \(V\)-statistics are asymptotically equivalent. The argument is analogous to the one presented in the proof of Theorem 4, since under the assumption \(\lambda _p>2\mu \) we have

as \(t \rightarrow \infty \) and consequently we can disregard the sum over all multi-indices \((i_1,\ldots ,i_n)\) in which the coordinates are not pairwise distinct.

Let us consider bounded continuous functions \(f_1^l, f_2^l, \ldots , f_n^l :\mathbb {R}^d \rightarrow \mathbb {R},\) \(l = 1,\ldots ,m\), which are centered with respect to \(\varphi \) and denote \(f:=\sum _{l=1}^m \otimes _{i=1}^n f_i^l \). By Theorem 12 for \(n=1\) we have

in probability. Before the final step, we recall that convergence in probability can be metrized by \(d(X,Y) := \mathbb {E}{}\left( \frac{|X-Y|}{|X-Y|+1} \right) \le \Vert X-Y\Vert _1\). Let us now consider a canonical function \(f \in \mathcal {P}^{}\). By Lemma 13, we may find a sequence of canonical functions \(\left\{ f_k \right\} \subset \mathrm{span}(A)\) such that \(f_k(2n\cdot ) \rightarrow f(2n\cdot )\) in \(\mathcal {P}^{}\). Now, by Proposition 17, we may approximate \(e^{-n(\lambda _p-\mu )t} U^n_t(f)\) with \(e^{-n(\lambda _p-\mu )t} U^n_t(f_k)\) uniformly in \(t\) with respect to the metric \(d\). Moreover, one can easily show that \(\lim _{k\rightarrow +\infty }d(\tilde{L}_3(f_k), \tilde{L}_3(f))= 0\). This concludes the proof.\(\square \)
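The following is a quick numerical illustration of the metric \(d\), using empirical means in place of expectations (the function name is ours). Since \(u \mapsto u/(1+u)\) is increasing, subadditive and bounded by \(\min (u,1)\), the empirical version inherits the triangle inequality and the bound \(d(X,Y) \le \Vert X-Y\Vert _1\). It also stays below \(1\) even for heavy-tailed differences, which is why \(d\) metrizes convergence in probability without any moment assumptions.

```python
import numpy as np


def d_metric(x, y):
    """Empirical version of d(X, Y) = E[|X - Y| / (|X - Y| + 1)] from paired samples."""
    u = np.abs(np.asarray(x, dtype=float) - np.asarray(y, dtype=float))
    return float(np.mean(u / (u + 1.0)))
```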

6 Proofs of Technical Lemmas

We now provide the proofs of the technical facts formulated in Sect. 5. They require several preparatory steps: we first recall some additional properties of the Ornstein–Uhlenbeck process, and then we introduce certain auxiliary combinatorial structures which play a prominent role in the proofs.

6.1 Auxiliary Facts on the Ornstein–Uhlenbeck Process

The semigroup of the Ornstein–Uhlenbeck process can be represented by

where

Let us recall (10). We denote \(\mathrm{ou}(t) := \sqrt{1- e^{-2\mu t}}\) and let \(G\sim \varphi \). Then (30) can be written as
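The representation via \(\mathrm{ou}(t)\) can be sanity-checked on Gaussian laws. The sketch below assumes \(d=1\) and \(\varphi = \mathcal {N}(0,v)\) with \(v = \sigma ^2/(2\mu )\). This is an assumption made here, consistent with the variance bound \(\sigma /\sqrt{2\mu }\) used in the proof of Proposition 24. The sketch also assumes the representation takes the form \(\mathcal {T}_t f(x) = \mathbb {E} f(x e^{-\mu t} + \mathrm{ou}(t) G)\). It tracks mean and variance exactly and checks both the semigroup property and the invariance of \(\varphi \). The names are illustrative.

```python
import math


def ou(t, mu):
    """ou(t) = sqrt(1 - exp(-2 mu t))."""
    return math.sqrt(1.0 - math.exp(-2.0 * mu * t))


def ou_push(mean, var, t, mu, v):
    """Law of X_0 e^{-mu t} + ou(t) G with X_0 ~ N(mean, var), G ~ N(0, v) independent.

    Returns the (mean, variance) of the resulting Gaussian.
    """
    a = math.exp(-mu * t)
    return mean * a, var * a * a + ou(t, mu) ** 2 * v
```

Starting from a point mass at \(x\) (variance \(0\)), running for time \(s\) and then for time \(t\) gives the same Gaussian as running for time \(s+t\). Starting from \(\varphi \) itself returns \(\varphi \).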

We also denote

6.2 Smoothing Things Out

It is well known that the Ornstein–Uhlenbeck semigroup increases the smoothness of a function. We will now introduce some simple auxiliary lemmas which quantify this statement and give bounds on the \(\Vert \cdot \Vert _{\mathcal {P}^{}}\) norms of derivatives of certain functions obtained from \(f\) by applying the Ornstein–Uhlenbeck semigroup on a subset of coordinates. Such bounds will be useful, since they will allow us to pass in the analysis to smooth functions.

Let \(f\in \mathcal {P}^{}(\mathbb {R}^{nd})\) and \(I \subset \left\{ 1,2,\ldots , n \right\} \) with \(|I|= k\). We define

where \(z_i = y_i\) if \(i\in I\) and \(z_i = x_i\) otherwise, and \(g_1\) is given by (31). We have

Lemma 18

Let \(f \in \mathcal {P}^{}(\mathbb {R}^{nd})\) and \(l\in \mathbb {N}\). Then for any \(I \subset \left\{ 1,2,\ldots , n \right\} \) the function \(\hat{f}_I\) is smooth with respect to the coordinates in \(I\). For any multi-index \(\varLambda = (i_1,\ldots ,i_l) \in \{1,\ldots ,nd\}^l\) such that \(\{\lceil i_j /d\rceil :j = 1,\ldots ,l\} \subset I\) we have

where \(C>0\) depends only on the parameters of the system (that is, \(\sigma ,\mu , d,l,n\)) and not on \(f\). Moreover, when \(f\) is canonical, so is \(\hat{f}_{I}\).

Proof

Let us fix some \(I\) and \(\varLambda \). Using (33) we get

Therefore, by (4), the properties of the Gaussian density \(g_1\) and easy calculations we arrive at

for some constant \(C_{\varLambda }\). We recall that the function \(n\) is defined after (3).

To prove (34), it is enough to take the maximum over all admissible pairs \(I,\varLambda \).

Let us now assume that \(f\) is canonical. We would like to check that for any \(j\in \left\{ 1,2,\ldots , n \right\} \) we have

We consider two cases. First, suppose that \(j\notin I\). Then we have

The second case is when \(j \in I\). Then

where the second equality holds by the fact that \(\varphi \) is the invariant measure of the Ornstein–Uhlenbeck process. Now the proof reduces to the first case. \(\square \)

We will also need the following simple identity. Consider points \(\left\{ x_i \right\} _{i=1,2,\ldots ,n}\) and \(\left\{ \tilde{x}_i \right\} _{i=1,2,\ldots ,n}\). By induction, one easily checks that the following lemma holds (we slightly abuse notation here: e.g., \(\frac{\partial }{\partial y_i}\) denotes the derivative in the direction \(\tilde{x}_i - x_i\), and \(\int _a^b\) the integral over the segment \([a,b] \subset \mathbb {R}^d\)).

Lemma 19

Let \(f\) be a smooth function, then

6.3 Bookkeeping of Trees

We will now introduce the “bookkeeping of trees” technique (for similar considerations see, e.g., [13, Section 2] or [4]). Via some combinatorics and the introduction of auxiliary branching processes, it will allow us to pass from equations on the Laplace transform in the case \(n=1\) to estimates of moments of \(V\)-statistics, and consequently of \(U\)-statistics. These estimates will be crucial for proving Propositions 15, 16 and 17.

Our starting point is classical. We will use the equation on the Laplace transform of the branching process to obtain, via integration, recursive formulas for moments of \(V\)-statistics generated by tensors.

Recall (25). Let \(f_1,f_2, \ldots , f_n \in \mathcal {C}^{}_c(\mathbb {R}^d)\) with \(f_i \ge 0\). We would like to calculate

Let \(\varLambda \subset \left\{ 1,2,\ldots , n \right\} \). Slightly abusing notation, for \(\alpha _i \ge 0\), \(i\in \varLambda \), we denote

Note that this differentiation is valid by Proposition 1 and the properties of the Laplace transform (e.g., [17, Chapter XIII.2]). By the calculations in Section 4.1 of [1], we know that

It is easy to check that

Assume that \(|\varLambda |>0\). We denote by \(P_1(\varLambda )\) all pairs \((\varLambda _1, \varLambda _2)\) such that \(\varLambda _1 \cup \varLambda _2 = \varLambda \) and \(\varLambda _1 \cap \varLambda _2 =\emptyset \), and by \(P_2(\varLambda )\subset P_1(\varLambda )\) pairs with an additional restriction that \(\varLambda _1 \ne \emptyset \) and \( \varLambda _2 \ne \emptyset \). Using (36) we easily check that

We evaluate it at \(\alpha =0\) (note that \(\frac{\partial ^{|\varLambda _1|} }{\partial \alpha _{\varLambda _1}} w_\varLambda (x, s, 0) = \frac{\partial ^{|\varLambda _1|} }{\partial \alpha _{\varLambda _1}} w_{\varLambda _1}(x, s, 0) = w_{\varLambda _1}(x,s)\)), multiply both sides by \((-1)^{|\varLambda |}\) and use the definition of \(v_\varLambda (x,t)\) to get

This can be easily transformed to (recall that \(\mathcal {T}_{s}^{\lambda _p} f(x) \!=\! e^{\lambda _p s} \mathcal {T}_{s}f(x), \lambda _p \!=\! \lambda (2p\!-\!1)\))

The last formula is much easier to handle if written in terms of auxiliary branching processes. Firstly, we introduce the following notation. For \(n \in \mathbb {N} \setminus \left\{ 0 \right\} \) we denote by \(\mathbb {T}_{n}\) the set of rooted trees described below. The root has a single offspring. All inner vertices (we exclude the root and the leaves) have exactly two offspring. For \(\tau \in \mathbb {T}_{n}\), by \(l(\tau )\) we denote the set of its leaves. Each leaf \(l\in l(\tau )\) is assigned a label, denoted by \({{\mathrm{lab}}}(l)\), which is a non-empty subset of \(\left\{ 1,2,\ldots ,n \right\} \). The labels fulfill two conditions:

In other words, the labels form a partition of \(\left\{ 1,2,\ldots ,n \right\} \). For a given \(\tau \in \mathbb {T}_{n}\), let \(i(\tau )\) denote the set of inner vertices (we exclude the root and the leaves); clearly \(|i(\tau )| = |l(\tau )|-1\) (as usual, \(|\cdot |\) denotes the cardinality). Let us identify the vertices of \(\tau \) with \(\left\{ 0,1,2,\ldots , |\tau |-1 \right\} \) in such a way that for any vertex \(i\) its parent, denoted by \(p(i)\), is smaller. Obviously, this implies that \(0\) is the root and that the inner vertices have numbers in the set \(\left\{ 1, 2, \ldots , |\tau |-1 \right\} \). We also denote

leaves with singleton and non-singleton label sets, respectively.

Given \(\tau \in \mathbb {T}_{n}\), \(t \in \mathbb {R}_+\) and \(\left\{ t_i \right\} _{i \in i(\tau )}\), we consider an Ornstein–Uhlenbeck branching walk on \(\tau \) defined as follows. The initial particle is placed at time \(0\) at location \(x\). It evolves according to the Ornstein–Uhlenbeck process up to time \(t-t_1\) and then splits into two offspring: the first one is associated with the left branch of vertex \(1\) in the tree \(\tau \), and the second one with the right branch. Each of them then evolves independently until time \(t-t_i\), where \(i\) is the first vertex in the corresponding subtree, when it splits, and so on. At time \(t\), the particles are stopped and their positions are denoted by \(\left\{ Y_i \right\} _{i\in l(\tau )}\) (the number of particles at the end is equal to the number of leaves). The construction makes sense provided that \(t_i \le t\) and \(t_i \le t_{p(i)}\) for all \(i \in i(\tau )\) (which we implicitly assume). We define

where \(j(a) = l \in l(\tau )\) is the unique leaf such that \(a\in {{\mathrm{lab}}}(l)\). We also define

where we set \(t_0 = t\). The reason for studying these objects becomes apparent from the following statement.

Proposition 20

Let \(\varLambda _n = \left\{ 1,2,\ldots ,n \right\} \). The following identity holds

Proof

The claim is a consequence of the identity

where \(\varLambda \subset \left\{ 1,2,\ldots ,n \right\} \) and \(\mathbb {T}_{}(\varLambda )\) is the set of trees defined as \(\mathbb {T}_{|\varLambda |}\), except that the labels are subsets of \(\varLambda \). This identity in turn follows by induction on the cardinality of \(\varLambda \). For \(\varLambda =\left\{ i \right\} \), Eq. (38) reads \(v_{\varLambda }(x,t) = e^{\lambda _p t}\mathcal {T}_{t} f_i(x)\) (note that \(P_2(\varLambda )=\emptyset \)). The space \(\mathbb {T}_{}(\varLambda )\) contains only one tree, denoted by \(\tau _s\), consisting of the root and a single leaf labeled by \(\{i\}\). We have \(i(\tau _s) = \emptyset \) and \(S(\tau _s, t,x) = e^{\lambda _p t}\mathcal {T}_{t} f_i(x)\), so (43) follows.

Let now \(|\varLambda | = k >1\) and assume that (43) holds for all sets of cardinality at most \(k-1\). Apply again (38). Similarly as before, the first term corresponds to \(\tau _s\). Let \(p\) be the transition density of the Ornstein–Uhlenbeck semigroup. By induction the second term of (38) can be written as

By (41) the above expression equals

Now we create a new tree \(\tau \) by setting \(\tau _1\) and \(\tau _2\) to be descendants of the vertex born from the root at time \(t-t_1\) (thus this vertex is assigned the split time \(t_1\)). We keep labels and the remaining split times unchanged. Consider the branching random walk on \(\tau \) with the initial position of the first particle equal to \(x\). Note that by the branching property the evolution of this process on subtrees \(\tau _1\) and \(\tau _2\) is conditionally independent given the evolution of the first particle up to time \(t-t_1\). Thus, by the Markov property of the Ornstein–Uhlenbeck process, we can identify the branching random walk on \(\tau _1\) and \(\tau _2\) with the branching random walk on \(\tau \). More precisely we have

Using the Fubini theorem together with the equality \(|i(\tau )|=|i(\tau _1)|+|i(\tau _2)|+1\) we see that the summand corresponding to \(\tau _1,\tau _2\) in (44) equals \(S(\tau ,t,x)\). It is also easy to check that the described correspondence is a bijection from the set of pairs \((\tau _1, \tau _2)\) [as in (44)] to \({\mathbb {T}_{n}\setminus \left\{ \tau _s \right\} }\) and therefore the expression (44) is equal to \(\sum _{\mathbb {T}_{n}\setminus \left\{ \tau _s \right\} } S(\tau , t,x)\), which ends the proof.\(\square \)
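The bijection \((\tau _1,\tau _2) \leftrightarrow \tau \) used above also gives a way to enumerate \(\mathbb {T}_{}(\varLambda )\). A tree is either \(\tau _s\) or a split into an ordered pair of subtrees whose label sets come from some \((\varLambda _1,\varLambda _2)\in P_2(\varLambda )\). The sketch below builds all trees recursively, using a nested-tuple representation of our own choosing in which the root is implicit. It checks that \(|i(\tau )| = |l(\tau )|-1\) and that the labels form a partition. Under these conventions, \(|\mathbb {T}_{n}| = 1, 3, 19, 207\) for \(n=1,\ldots ,4\).

```python
from itertools import combinations


def trees(labels):
    """All trees of T(labels) as nested tuples (hypothetical representation).

    ('leaf', frozenset) is a leaf carrying a label set; ('split', left, right)
    is an inner vertex with an ordered pair of subtrees. The root is implicit.
    """
    labels = frozenset(labels)
    result = [('leaf', labels)]                      # the tree tau_s
    elems = sorted(labels)
    for r in range(1, len(elems)):
        for left in combinations(elems, r):          # ordered pairs (L1, L2) in P_2
            right = labels - frozenset(left)
            for t1 in trees(left):
                for t2 in trees(right):
                    result.append(('split', t1, t2))
    return result


def leaves(tree):
    return 1 if tree[0] == 'leaf' else leaves(tree[1]) + leaves(tree[2])


def inner(tree):
    return 0 if tree[0] == 'leaf' else 1 + inner(tree[1]) + inner(tree[2])


def label_sets(tree):
    return [tree[1]] if tree[0] == 'leaf' else label_sets(tree[1]) + label_sets(tree[2])
```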

The calculations will be more tractable when we derive an explicit formula for \(\left\{ Y_i \right\} _{i\in l(\tau )}\). Let us recall the notation introduced in (32) and consider a family of independent random variables \(\left\{ G_i \right\} _{i\in \tau }\), such that \(G_i \sim \varphi \) for \(i\ne 0\) and \(G_0 \sim \delta _x\). Recall also that \(\mathrm{ou}(t) = \sqrt{1 - e^{-2\mu t}}\). The following proposition follows easily from the construction of the branching walk on \(\tau \) and (32).

Proposition 21

Let \(\left\{ Y_i \right\} _{i \in l(\tau )}\) be the positions at time \(t\) of the particles of the Ornstein–Uhlenbeck branching walk on the tree \(\tau \) with split times \(\left\{ t_i \right\} _{i\in i(\tau )}\). We have

where for any \(i\in l(\tau )\) we put

where \(P(i) := \left\{ \text {predecessors of } i \right\} \), by convention we set \(t_0 = t\) and \(\mathrm{ou}(t_{p(0)} - t_0) = 1\).

We are now ready to prove an extended version of Proposition 20. This result will be instrumental in proving the bounds needed to implement Step 3 of the outline presented in Sect. 5.1.

Proposition 22

Let \(\left\{ X_t \right\} _{t\ge 0}\) be the OU branching system starting from \(x\in \mathbb {R}^d\) and \(f\in \mathcal {P}^{}(\mathbb {R}^{nd}) \). Then

where in (41) we extend the definition of \(\mathrm{OU}\) in (40) by putting

Moreover all the quantities above are finite.

Proof

(Sketch) Using Proposition 20, Proposition 1, (35) and Lebesgue’s monotone convergence theorem one may prove that (45) is valid for \(f \equiv C, C>0\). Using standard methods, we may drop the positivity assumption in (35) and (42). Therefore, by the Stone–Weierstrass theorem, linearity and Lebesgue’s dominated convergence theorem, (45) is valid for any \(f\in \mathcal {C}^{}_c(\mathbb {R}^{nd})\). Let now \(f\in \mathcal {P}^{}(\mathbb {R}^{nd}), f\ge 0\). We notice that for any \(\tau \in \mathbb {T}_{n}\) the expression \(\mathrm{OU}(f, \tau , t, \left\{ t_i \right\} _{i \in i(\tau )},x)\) is finite, which follows easily from Proposition 21. Further, one can find a sequence \(\left\{ f_k \right\} \) such that \(f_k \in \mathcal {C}^{}_c(\mathbb {R}^{nd}), f_k\ge 0\) and \(f_k\nearrow f\) (pointwise). Appealing to Lebesgue’s monotone convergence theorem yields that (45) still holds (and both sides are finite). To conclude, once more we remove the positivity condition.\(\square \)

As a simple corollary we obtain

Corollary 23

Let \(\left\{ X_t \right\} _{t\ge 0}\) be the OU branching system, then for any \(n\ge 1\) there exists \(C_n\) such that

Proof

We apply the above proposition with \(f=1\). Using the definition (41) and the inequality \(|i(\tau )| \le n-1\) for \(\tau \in \mathbb {T}_{n}\), it is easy to check that for any \(\tau \in \mathbb {T}_{n}\) we have \(S(\tau , t, x)\le C_\tau e^{n \lambda _p t}\) for a constant \(C_\tau \) depending only on \(\tau \), \(p\) and \(\lambda \). \(\square \)
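Here is a Monte Carlo sketch of the growth rate in Corollary 23. It uses only the particle count, since spatial motion plays no role for \(|X_t|\). Each of the \(N\) current particles branches at rate \(\lambda \), producing two offspring with probability \(p\) and vanishing otherwise, so \(\mathbb {E}|X_t| = e^{\lambda _p t}\) with \(\lambda _p = \lambda (2p-1)\). The parameters and function names below are illustrative. The check compares the empirical first moment with \(e^{\lambda _p t}\) and verifies that, at the chosen time, the second moment stays within a moderate multiple of \(e^{2\lambda _p t}\).

```python
import math
import random


def population_size(t, lam, p, rng):
    """|X_t| for a system started from one particle (Gillespie-type simulation)."""
    n, s = 1, 0.0
    while n > 0:
        s += rng.expovariate(lam * n)          # next branching event among n particles
        if s > t:
            break
        n += 1 if rng.random() < p else -1     # two offspring w.p. p, otherwise death
    return n


def empirical_moments(t, lam, p, runs, seed=0):
    """Empirical first and second moments of |X_t| over independent runs."""
    rng = random.Random(seed)
    sizes = [population_size(t, lam, p, rng) for _ in range(runs)]
    return sum(sizes) / runs, sum(k * k for k in sizes) / runs
```

With \(\lambda = 1\), \(p = 0.75\) and \(t = 2\), we have \(\lambda _p t = 1\), so the first moment should be close to \(e\).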

Let us recall the notation of (39). The following proposition will be crucial in proving moment estimates for \(V\)- and \(U\)-statistics.

Proposition 24

For any \(n>0\) there exist \(C, c>0\), such that for any \(\tau \in \mathbb {T}_{n}\), any split times \(\left\{ t_i \right\} _{i\in i(\tau )}\) and any canonical \(f\in \mathcal {P}^{}(\mathbb {R}^{nd})\) we have

Proof

Let \(k\le n\). Without loss of generality, we may assume that the indices in \(I:=\left\{ 1,2,\ldots , k \right\} \) belong to singleton labels (i.e., \(j(i)\in s(\tau )\)) and that \(k+1, \ldots , n\) belong to non-singleton ones. Let us also assume for a moment that \(t_{p(j(i))}\ge 1\) for \(i\in \left\{ 1,2,\ldots , k \right\} \). Let \(Z_i\) and \(G_i\) be as in Proposition 21. We have \(\mathbb {E}{} f(Y_{j(1)}, Y_{j(2)}, \ldots , Y_{j(n)})= \mathbb {E}{} f(Z_{j(1)}, Z_{j(2)}, \ldots , Z_{j(n)})\). For \(i\le k\) we define

Moreover, let \(\hat{f} := \hat{f}_{I}\) be given by (33). By the semigroup property of \(\mathcal {T}_{}\) we have

By Lemma 18, \(\hat{f}\) is smooth with respect to coordinates from \(I\) and canonical. Combining this with Lemma 19 we obtain

From now on, we restrict to the case \(d=1\). The proof for general \(d\) proceeds along the same lines but it is notationally more cumbersome. Using Lemma 18 and applying the Schwarz inequality multiple times we have

Note that by the definition of \(\tilde{Z}_i\) we have \(\tilde{Z}_i - G_i = H_i e^{-\mu (t_{p(i)}-1)} + \left( \mathrm{ou}(t_{p(i) }-1) - 1 \right) G_i \), where \(H_i\) is independent of \(G_i\) and \(H_i \sim \mathcal {N}(x_i, \sigma _i^2)\) with \(\sigma _i \le \sigma /\sqrt{2\mu }\) and \(\left\| x_i \right\| _{ } ^{ } \le \left\| x \right\| _{ } ^{ }\). Thus, \(\tilde{Z}_i - G_i\) is a Gaussian variable with the mean bounded by \(C\left\| x_i \right\| _{ } ^{ }e^{-\mu t_{p(i)}}\) and the standard deviation of order \(e^{-\mu t_{p(i)}}\). In particular \(\Vert \tilde{Z}_i-G_i\Vert _l \le C_l\exp (C_l\Vert x\Vert -\mu t_{p(i)})\). Since

the proof can be concluded by yet another application of the Schwarz inequality and standard facts on exponential integrability of Gaussian variables.

Finally, if some \(i\)’s do not fulfill \(t_{p(i)}\ge 1\), we repeat the above proof with \(s(\tau )\) replaced by the set \(s'\) of indices from \(s(\tau )\) for which additionally \(t_{p(i)}\ge 1\). In this way, we obtain (46) with \(\sum _{i\in s'} t_{p(i)}\) instead of \(\sum _{i\in s(\tau )} t_{p(i)}\). In our setting

hence (46) still holds (with a worse constant \(C\)).\(\square \)

6.4 Small Branching Rate: Proofs of Propositions 14 and 15

Proof of Proposition 14

The sum (14) is finite, hence it is enough to prove our claim for a single \(L(f, \gamma )\). Without loss of generality, let us assume that \(E_\gamma =\left\{ (1,2), (3,4), \ldots , (2k-1,2k) \right\} \) and \(A_\gamma = \left\{ 2k+1, \ldots , n \right\} \) (see the notation in Sect. 2.1). Using the same notation as in (13), we write

where \(D:=\mathbb {R}_+\times \mathbb {R}^d\). We have \(L(f, \gamma ) = I_{n-2k}(J(x_{2k+1}, \ldots , x_n))\). By the properties of the multiple stochastic integral [21, Theorem 7.26] we know that \(\mathbb {E}{L(f, \gamma )}^2 \lesssim \left( \prod _{i\in \left\{ 2k+1, \ldots , n \right\} } \int _D \mu _1({\mathrm{d}}z_i) \right) |J(z_{2k+1}, \ldots , z_n)|^2 \). Therefore, we need to estimate

We will now estimate \(H(f)(z_1, z_2, \ldots , z_n)\). Let \(Y_1, Y_2, \ldots , Y_n\) be i.i.d. with \(Y_i \sim \varphi \). We define \(Y_i(t) = x_i e^{-\mu t} + \mathrm{ou}(t) Y_i \). Recall our notation \(z_i = (s_i,x_i)\) and let \(I = \{i \in \{1,\ldots ,n\} :s_i \ge 1\}\), \(I^c = \{1,\ldots ,n\}\setminus I\). Let \(\hat{f} := \hat{f}_{I}\) be defined according to (33).

Using (32), Lemmas 18 and 19, the assumption that \(f\) is canonical and the semigroup property, we can rewrite (12) as

By Lemma 18, we have (in order to simplify the notation, we calculate for \(d=1\), the general case is an easy modification)

For any \(x,y \in \mathbb {R}\) we have \(\max \left( \exp (x),\exp (y) \right) \le \exp (x)+\exp (y)\). Therefore, by the mean value theorem, we get

Using the Schwarz inequality and performing easy calculations, we get

Similarly

Since also \(\mathbb {E}{} \exp (|Y_i(s_i)|) \lesssim \exp (|x_i|)\), we have proved that

We use the above inequality to estimate (47). To this end let us denote \(D_0 = [0,1)\times \mathbb {R}^d, D_1 = [1,\infty )\times \mathbb {R}^d\). To simplify the notation let us introduce the following convention. For subsets \(I_1,I_2 \subset \{1,\ldots ,k\}, I_3 \subset \{2k+1,\ldots ,n\}\) and \(i \in \{1,\ldots ,n\}\) we will write \(I_j(i) = 1\) if \(i \in I_j\) and \(I_j(i) = 0\) otherwise. Let us also denote

for \(\mathbf {z} = (z_1^1,\ldots ,z_k^1,z_1^2,\ldots ,z_k^2,z_{2k+1},\ldots ,z_n)\).

With this notation, we can estimate (47) as follows

Now, using (48) in combination with the Fubini theorem, the definition of the measures \(\mu _i\) (given in Sect. 3.1) and our assumption \(\lambda _p < 2\mu \), we get

To conclude the proof, we use the fact that \(f\mapsto L(f,\gamma )\) is linear and \(\left\| \cdot \right\| _{ \mathcal {P}^{} } ^{ }\) is a norm.\(\square \)
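For the reader's convenience, the two elementary estimates used in the proof above can be written out explicitly. This is a sketch for \(d=1\); for the second line we assume (as an assumption about notation not restated here) that \(0 \le \mathrm{ou}(s) \le 1\) for \(s \ge 0\), as is the case, e.g., for \(\mathrm{ou}(s)=\sqrt{1-e^{-2\mu s}}\). We also use that \(\varphi \), the invariant law of the Ornstein–Uhlenbeck process, is a centered Gaussian law, so \(\mathbb {E}e^{|Y_i|}<\infty \).

\[
\begin{aligned}
&|e^{a}-e^{b}| = e^{\xi }\,|a-b| \le \max \left( e^{a},e^{b}\right) |a-b| \le \left( e^{a}+e^{b}\right) |a-b| \quad (\xi \text{ between } a \text{ and } b),\\
&|Y_i(s_i)| \le e^{-\mu s_i}|x_i| + \mathrm{ou}(s_i)\,|Y_i| \le |x_i| + |Y_i| \;\Longrightarrow \; \mathbb {E}\exp (|Y_i(s_i)|) \le e^{|x_i|}\,\mathbb {E}e^{|Y_i|} \lesssim e^{|x_i|}.
\end{aligned}
\]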

Our next goal is to prove Proposition 15, which is the last remaining ingredient in the proof of Theorem 3. This is where we use, for the first time, the bookkeeping of trees technique introduced in Sect. 6.3. We proceed in three steps. First, we obtain \(L_2\) bounds on \(V\)-statistics with deterministic normalization (Proposition 25). Then we pass to an \(L_1\) bound on \(U\)-statistics with random normalization, restricted to the subset of the probability space where \(|X_t|\) is large (Corollary 26). Finally, we obtain bounds on the distance between the distributions of two \(U\)-statistics (with random normalization) in terms of the distance in \(\mathcal {P}^{}\) between their kernels (proof of Proposition 15).

Proposition 25

Let \(\left\{ X_t \right\} _{t\ge 0}\) be the OU branching particle system with \(\lambda _p<2\mu \). There exist \(C,c >0\) such that for any canonical kernel \(f\in \mathcal {P}^{}(\mathbb {R}^{nd})\) we have

Proof

We need to estimate

Obviously the function \(f \otimes f\) is canonical. Moreover, it is easy to check that \(\left\| f\otimes f \right\| _{ \mathcal {P}^{}(\mathbb {R}^{2nd}) } ^{ } \le \left\| f \right\| _{ \mathcal {P}^{}(\mathbb {R}^{nd}) } ^{ 2 }\).
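The first claim (that \(f\otimes f\) is canonical) can be illustrated in a toy finite-state analogue. In the sketch below we assume that \(\varphi \) is replaced by a probability vector on \(m\) points, so that a kernel in \(n\) variables is an \(n\)-dimensional array and "canonical" means that contracting any single axis against \(\varphi \) gives zero. This is only an illustration of the algebra; it says nothing about the norm \(\left\| \cdot \right\| _{\mathcal {P}}\), and all function names are hypothetical.

```python
import numpy as np


def integrate_out(f, phi, axis):
    """Integrate the kernel f against phi in the variable indexed by `axis`."""
    return np.tensordot(f, phi, axes=([axis], [0]))


def is_canonical(f, phi, atol=1e-12):
    """True if integrating out any single variable yields the zero kernel."""
    return all(np.allclose(integrate_out(f, phi, a), 0.0, atol=atol)
               for a in range(f.ndim))


def make_canonical(g, phi):
    """Apply f -> f - E_phi f (the matrix I - 1 phi^T) in every variable of g."""
    m = len(phi)
    proj = np.eye(m) - np.outer(np.ones(m), phi)
    f = g
    for a in range(g.ndim):
        # contract the second index of proj with axis a, then move the new axis back to a
        f = np.moveaxis(np.tensordot(proj, f, axes=([1], [a])), 0, a)
    return f


rng = np.random.default_rng(0)
m, n = 4, 3
phi = rng.random(m)
phi /= phi.sum()                      # probability vector playing the role of varphi
f = make_canonical(rng.standard_normal((m,) * n), phi)
f_tensor_f = np.multiply.outer(f, f)  # (f x f)(x, y) = f(x) f(y), a kernel in 2n variables
print(is_canonical(f, phi), is_canonical(f_tensor_f, phi))  # True True
```

Integrating \(f\otimes f\) in a variable belonging to the first factor gives \((\int f\,\mathrm{d}\varphi )\otimes f = 0\), and symmetrically for the second factor, which is exactly what the check confirms numerically.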

By Proposition 22, it suffices to show that for each \(\tau \in \mathbb {T}_{2n}\) there exist \(C,c>0\) such that for the function \(f\otimes f\) and any \(t>0\) we have \(e^{-n\lambda _p t}S(\tau , t,x) \le C\exp \left\{ c\left\| x \right\| _{ } ^{ } \right\} \left\| f \right\| _{ \mathcal {P}^{} } ^{ 2 }\).

Let us fix \(\tau \in \mathbb {T}_{2n}\) and denote by \(P_1(\tau )\) and \(P_2(\tau )\) the sets of inner vertices of \(\tau \) with, respectively, one and two children in \(s(\tau )\) [we recall (39)]. Set also \(P_3(\tau ) := i(\tau )\setminus (P_1(\tau )\cup P_2(\tau ))\).

By the definition of \(S(\tau ,t,x)\), Proposition 24 and the assumption \(\lambda _p < 2\mu \), we get

To end the proof, it is thus sufficient to show that \(|P_1(\tau )| + 2|P_3(\tau )| \le 2n-2\).

Note that \(|P_1(\tau )| + 2|P_2(\tau )| = |s(\tau )|\) and \(\sum _{i=1}^3|P_i(\tau )| = |i(\tau )| = |l(\tau )|-1\). Thus, \(|P_1(\tau )| + 2|P_3(\tau )| = 2|l(\tau )| - 2 - |s(\tau )|=|l(\tau )| + |m(\tau )| - 2 \le 2n -2\) (recall that \(m(\tau )\) denotes the set of leaves with multiple labels). This ends the proof. \(\square \)
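The counting behind \(|P_1(\tau )| + 2|P_3(\tau )| \le 2n-2\) can be sanity-checked by brute force on random trees. Since definition (39) is not restated here, the sketch rests on explicitly assumed definitions: every inner vertex of \(\tau \) has exactly two children (consistent with \(|i(\tau )| = |l(\tau )|-1\)), the labels \(1,\ldots ,2n\) are distributed over the leaves with each leaf carrying at least one label, \(m(\tau )\) is the set of leaves with several labels and \(s(\tau )\) the set of leaves with a single label. Under these assumptions \(|l(\tau )| = |s(\tau )| + |m(\tau )|\) and \(|s(\tau )| + 2|m(\tau )| \le 2n\), which is what turns \(2|l(\tau )|-2-|s(\tau )|\) into \(|l(\tau )|+|m(\tau )|-2 \le 2n-2\). All function names are hypothetical.

```python
import random


def random_binary_tree(num_leaves, rng):
    """Random rooted tree in which every inner vertex has exactly two children.

    Built by repeatedly splitting a uniformly chosen leaf; vertex 0 is the root.
    Returns (children, leaves) with children[v] the list of children of v.
    """
    children = {0: []}
    leaves = [0]
    while len(leaves) < num_leaves:
        v = leaves.pop(rng.randrange(len(leaves)))
        a, b = len(children), len(children) + 1
        children[v] = [a, b]
        children[a], children[b] = [], []
        leaves += [a, b]
    return children, leaves


def vertex_counts(n, rng):
    """Distribute 2n labels over the leaves (at least one per leaf) and count
    l, i, s, m, P_1, P_2, P_3 under the assumed definitions."""
    num_leaves = rng.randint(1, 2 * n)
    children, leaves = random_binary_tree(num_leaves, rng)
    labels_on = {v: 1 for v in leaves}           # one label per leaf ...
    for _ in range(2 * n - num_leaves):          # ... the remaining ones at random
        labels_on[rng.choice(leaves)] += 1
    s = {v for v in leaves if labels_on[v] == 1}  # leaves with a single label
    m = {v for v in leaves if labels_on[v] >= 2}  # leaves with multiple labels
    inner = [v for v, ch in children.items() if ch]
    hits = {v: sum(c in s for c in children[v]) for v in inner}
    p1 = sum(1 for v in inner if hits[v] == 1)
    p2 = sum(1 for v in inner if hits[v] == 2)
    return {"n": n, "l": len(leaves), "i": len(inner), "s": len(s), "m": len(m),
            "p1": p1, "p2": p2, "p3": len(inner) - p1 - p2}


def check(c):
    assert c["p1"] + 2 * c["p2"] == c["s"]               # |P_1| + 2|P_2| = |s|
    assert c["i"] == c["l"] - 1                          # |i| = |l| - 1
    assert c["p1"] + 2 * c["p3"] == c["l"] + c["m"] - 2  # the identity in the proof
    assert c["l"] + c["m"] <= 2 * c["n"]                 # hence the bound 2n - 2
    assert c["p2"] <= c["n"]                             # |P_2| <= n


rng = random.Random(0)
for n in range(1, 7):
    for _ in range(2000):
        check(vertex_counts(n, rng))
print("all identities hold")
```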

The next corollary is a technical step toward the proof of Proposition 15. Since we would like to normalize the \(U\)-statistic by the random quantity \(|X_t|^{n/2}\), we need to restrict the range of integration in the moment bound to the set on which \(|X_t|\) is relatively large. It will not be an obstacle in the proof of Proposition 15, since the probability that \(|X_t|\) is small will be negligible (on the set of non-extinction), which will allow us to pass from restricted \(L_1\) estimates to bounds on the distance between distributions.

Corollary 26

Let \(\left\{ X_t \right\} _{t\ge 0}\) be the OU branching system with \(\lambda _p<2\mu \). There exist constants \(C, c>0\) such that for any canonical \(f \in \mathcal {P}^{}(\mathbb {R}^{nd})\) and \(r \in (0,1)\) we have

Proof

Let \(\mathcal {J}\) be the collection of partitions of \(\{1,\ldots ,n\}\), i.e., of all sets \(J = \{J_1,\ldots ,J_k\}\), where \(J_i\)’s are non-empty, pairwise disjoint and \(\bigcup _i J_i = \{1,\ldots ,n\}\). Using (27) and the notation introduced there, we have

where \(a_J\) are some integers depending only on the partition \(J\). Since the cardinality of \(\mathcal {J}\) depends only on \(n\), it is enough to show that for each \(J \in \mathcal {J}\) and some constants \(C,c>0\) we have

Let us thus consider \(J = \{J_1,\ldots ,J_k\}\) and let us assume that among the sets \(J_i\) there are exactly \(l\) sets of cardinality \(1\), say \(J_1,\ldots ,J_l\). We would like to use Proposition 25. To this end, we have to express \(V_t^{k}(f_J)\) as a sum of \(V\)-statistics with canonical kernels. This can be easily done by means of Hoeffding’s decomposition (26). Since \(f_J\) is already degenerate with respect to variables \(x_1,\ldots ,x_l\), we get

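where \(I^c := \left\{ 1,\ldots , k \right\} \setminus I\). Let us notice that \(n \ge 2k-l\), since the \(k-l\) blocks of cardinality at least two contain at least \(2(k-l)\) elements and the \(l\) singletons contain \(l\) more; thus \(n - k \ge k - l \ge |I|\), which gives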

We consider a single term of (50). We have

for \(I \ne \{1,\ldots ,k\}\), where in the third inequality we used Proposition 25. One can check that for any \(n\ge 2\) there exists \(C>0\) such that for any \(I,J\) we have \(\left\| \varPi _I f_J \right\| _{ \mathcal {P}^{}(\mathbb {R}^{|I|d}) } ^{ } \le C \left\| f_J \right\| _{ \mathcal {P}^{}(\mathbb {R}^{|J|d}) } ^{ }\) and \(\left\| f_J \right\| _{ \mathcal {P}^{}(\mathbb {R}^{|J|d}) } ^{ } \le \left\| f(2n\cdot ) \right\| _{ \mathcal {P}^{}(\mathbb {R}^{n d}) } ^{ }\). Therefore, it remains to bound the contribution from \(I = \{1,\ldots ,k\}\) (in the case \(l=0\)). But in this case \(I^c = \emptyset \), so \(|V_t^{|I^c|}(\varPi _{I^c} f_J)| = |\varPi _{I^c} f_J| =| \langle \varphi ^{\otimes k},f_J\rangle | \le C\Vert f_J\Vert _\mathcal {P}^{}\) and \(\exp (-\lambda _pt(n-2|I|)) \le 1\), which easily gives the desired estimate. \(\square \)
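The inequality \(n \ge 2k-l\) used in the proof above, for a partition with \(k\) blocks of which \(l\) are singletons, can be confirmed by enumerating all partitions of \(\{1,\ldots ,n\}\) for small \(n\). A minimal sketch; the helper names are hypothetical.

```python
def set_partitions(elements):
    """Yield every partition of the list `elements` into non-empty blocks."""
    if not elements:
        yield []
        return
    first, rest = elements[0], elements[1:]
    for partition in set_partitions(rest):
        # put `first` into one of the existing blocks ...
        for i in range(len(partition)):
            yield partition[:i] + [[first] + partition[i]] + partition[i + 1:]
        # ... or open a new singleton block for it
        yield [[first]] + partition


def check_partitions(n):
    """Check n >= 2k - l for every partition J of {1,...,n}; return the number of partitions."""
    count = 0
    for J in set_partitions(list(range(1, n + 1))):
        k = len(J)                                    # number of blocks
        l = sum(1 for block in J if len(block) == 1)  # number of singleton blocks
        # l singletons and k - l blocks of size >= 2 use at least l + 2(k - l) indices
        assert n >= 2 * k - l
        count += 1
    return count


print([check_partitions(n) for n in range(1, 7)])  # [1, 2, 5, 15, 52, 203]
```

The counts are the Bell numbers, which also illustrates that the cardinality of \(\mathcal {J}\) depends only on \(n\).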

Proof of Proposition 15

Let us fix \(g \in {{\mathrm{Lip}}}(1)\). We consider

Let \(h(x):=f_1(2nx)-f_2(2nx)\), take \(r := \Vert h\Vert _{\mathcal {P}^{}}^{1/n}\) and assume that \(r < 1\). Then by Corollary 26 we get

On the other hand,

Since on \(Ext^c\) we have \(|X_t|\ge 1\) and \(|X_t|e^{-\lambda _p t}\) converges to an absolutely continuous random variable,

which ends the proof. \(\square \)
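The last step relies on the exponential growth of \(|X_t|\) on \(Ext^c\). Since the spatial motion plays no role in \(|X_t|\), this can be illustrated by simulating only the particle count with the branching mechanism described in the Introduction (rate \(\lambda \), two offspring with probability \(p\), death with probability \(1-p\)). The sketch is an illustration, not part of the proof: it assumes \(\lambda _p = \lambda (2p-1)\), the growth rate of \(\mathbb {E}|X_t|\) for this mechanism, and the parameter values are hypothetical. It checks that \(|X_t|e^{-\lambda _p t}\) has mean close to \(1\) and that the survival fraction is close to \(1-(1-p)/p\), the standard survival probability of binary branching.

```python
import math
import random


def population_size(t_max, lam, p, rng):
    """Number of particles at time t_max for binary branching started from one particle.

    Each particle, at rate lam, is replaced by two offspring (prob. p) or dies (prob. 1 - p).
    Positions are ignored: |X_t| does not depend on the Ornstein-Uhlenbeck motion.
    """
    t, z = 0.0, 1
    while z > 0:
        t += rng.expovariate(lam * z)  # waiting time until the next branching event
        if t > t_max:
            break
        z += 1 if rng.random() < p else -1
    return z


lam, p, t = 1.0, 0.75, 6.0
lam_p = lam * (2 * p - 1)  # assumed: E|X_t| = exp(lam_p * t)
rng = random.Random(2024)
samples = [population_size(t, lam, p, rng) * math.exp(-lam_p * t) for _ in range(4000)]
mean_w = sum(samples) / len(samples)
survived = [w for w in samples if w > 0]
print(f"mean of |X_t| exp(-lam_p t): {mean_w:.3f} (theory: 1)")
print(f"survival fraction: {len(survived) / len(samples):.3f} "
      f"(limit: {1 - (1 - p) / p:.3f})")
```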

6.5 Critical Branching Rate: Proof of Proposition 16

As the proofs in this section closely follow those in the subcritical case, we present only outlines, emphasizing the differences.

Proposition 27

Let \(\left\{ X_t \right\} _{t\ge 0}\) be the OU branching particle system with \(\lambda _p=2\mu \). There exist \(C,c >0\) such that for any canonical kernel \(f\in \mathcal {P}^{}(\mathbb {R}^{nd})\) and \(t > 1\) we have

Proof

We use ideas similar to those in the proof of Proposition 25, as well as the notation introduced therein. Consider any \(\tau \in \mathbb {T}_{2n}\). By the definition of \(S(\tau ,t,x)\), Proposition 24 and the assumption \(\lambda _p = 2\mu \), we obtain

where we used the fact that \(|P_2(\tau )| \le n\) (as \(2|P_2(\tau )| \le |s(\tau )| \le 2n\)) and the estimate \(|P_1(\tau )| + 2|P_3(\tau )| \le 2n-2\) obtained in the proof of Proposition 25.\(\square \)

Now we can repeat the proof of Corollary 26 using Proposition 27 instead of Proposition 25 and obtain the following corollary, whose role is analogous to the one played by Corollary 26 in the slow branching case.

Corollary 28

Let \(\left\{ X_t \right\} _{t\ge 0}\) be the OU branching system with \(\lambda _p=2\mu \). There exist constants \(C, c>0\) such that for any canonical \(f \in \mathcal {P}^{}(\mathbb {R}^{nd})\) and \(r \in (0,1)\) we have, for \(t \ge 1\),

Using the above corollary, we can now obtain Proposition 16 in the same way as we derived Proposition 15 from Corollary 26.

6.6 Supercritical Branching Case: Proof of Proposition 17

The proofs in this section differ somewhat from those in the critical and subcritical cases; hence, we present more details.

Proposition 29

Let \(\left\{ X_t \right\} _{t\ge 0}\) be the OU branching particle system with \(\lambda _p>2\mu \). There exist \(C,c >0\) such that for any canonical kernel \(f\in \mathcal {P}^{}(\mathbb {R}^{nd})\) we have

Proof

As in the previous cases, consider any \(\tau \in \mathbb {T}_{2n}\). We use the same notation as in the proof of Proposition 25. We have

Thus, it is enough to prove that

where for simplicity we write \(P_i\) instead of \(P_i(\tau )\) (in the rest of the proof we will use the same convention with other characteristics of \(\tau \)). Using the equality \(|s| = |P_1| + 2|P_2|\), we may rewrite (52) as

so by the inequalities \(2n \ge |s|\) and \(\lambda _p - \mu > \lambda _p/2\) (the latter being equivalent to \(\lambda _p > 2\mu \)), it is enough to prove that

But \(|P_3| = |i| - |P_2| - |P_1|\) and so

which ends the proof.\(\square \)

Proof of Proposition 17

Using the notation from the proof of Corollary 26, we get

Let us note that by \(\lambda _p > 2\mu \) and (51) we get \(n(\lambda _p - \mu ) - \lambda _p|I| \ge n(\lambda _p - \mu ) - 2 (\lambda _p - \mu )|I| \ge |I^c|(\lambda _p - \mu )\). Thus, the summands on the right-hand side above for \(I \ne \{1,\ldots ,k\}\) can be bounded using Corollary 23 and Proposition 29 by

(the last inequality is analogous as in the proof of Corollary 26).

The contribution from \(I = \{1,\ldots ,k\}\) (in the case \(l=0\)) can also be bounded as in the proof of Corollary 26. Namely, \(I^c = \emptyset \), so \(|V_t^{|I^c|}(\varPi _{I^c} f_J)| = |\varPi _{I^c} f_J| =| \langle \varphi ^{\otimes k},f_J\rangle | \le C\Vert f_J\Vert _\mathcal {P}^{} \le C^2 \left\| f(2n\cdot ) \right\| _{ \mathcal {P}^{} } ^{ }\) and \(\exp (-n(\lambda _p-\mu )t + \lambda _p |I| t) \le \exp (-(n-2|I|)(\lambda _p-\mu )t)\le 1\), which easily gives the desired estimate.\(\square \)
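For the reader's convenience, the exponent comparison used at the beginning of the proof above can be written out in full. It uses \(\lambda _p \le 2(\lambda _p-\mu )\), which is equivalent to \(\lambda _p \ge 2\mu \), the identity \(|I|+|I^c| = k\), the bound \(n-k \ge |I|\) from the proof of Corollary 26, and \(\lambda _p-\mu >0\):

\[
\begin{aligned}
n(\lambda _p-\mu )-\lambda _p|I| &\ge n(\lambda _p-\mu )-2(\lambda _p-\mu )|I| = (n-2|I|)(\lambda _p-\mu )\\
&= \bigl (n-k-|I|+|I^c|\bigr )(\lambda _p-\mu ) \ge |I^c|(\lambda _p-\mu ).
\end{aligned}
\]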

7 Remarks on the Non-degenerate Case

As in the case of \(U\)-statistics of i.i.d. random variables, by combining the results for completely degenerate \(U\)-statistics with the Hoeffding decomposition, we can obtain limit theorems for general \(U\)-statistics, with a normalization that depends on the order of degeneracy of the kernel. For instance, in the slow branching case, Theorem 3, the Hoeffding decomposition and the continuity of \(\varPi _k :\mathcal {P}^{}(\mathbb {R}^{nd}) \rightarrow \mathcal {P}^{}(\mathbb {R}^{kd})\) together give the following corollary.

Corollary 30

Let \(\{X_t\}_{t\ge 0}\) be the OU branching system starting from \(x\in \mathbb {R}^d\). Assume that \(\lambda _p <2\mu \) and let \(f \in \mathcal {P}^{}(\mathbb {R}^{nd})\) be symmetric and degenerate of order \(k-1\). Then conditionally on \(Ext^c, |X_t|^{-(n-k/2)}U_t^n(f - \langle f, \varphi ^{\otimes n}\rangle )\) converges in distribution to \(\left( {\begin{array}{c}n\\ k\end{array}}\right) L_1(\varPi _k f)\).

Similar results can be derived in the remaining two cases. Using the fact that on the set of non-extinction \(|X_t|\) grows exponentially in \(t\), we obtain

Corollary 31

Let \(\{X_t\}_{t\ge 0}\) be the OU branching system starting from \(x\in \mathbb {R}^d\). Assume that \(\lambda _p =2\mu \) and let \(f \in \mathcal {P}^{}(\mathbb {R}^{nd})\) be symmetric and degenerate of order \(k-1\). Then conditionally on \(Ext^c, t^{-k/2}|X_t|^{-(n-k/2)}U_t^n(f - \langle f, \varphi ^{\otimes n}\rangle )\) converges in distribution to \(\left( {\begin{array}{c}n\\ k\end{array}}\right) L_2(\varPi _k f)\).

Similarly, using (8) and the definition of \(W\) we obtain

Corollary 32

Let \(\{X_t\}_{t\ge 0}\) be the OU branching system starting from \(x\in \mathbb {R}^d\). Assume that \(\lambda _p >2\mu \) and let \(f \in \mathcal {P}^{}(\mathbb {R}^{nd})\) be symmetric and degenerate of order \(k-1\). Then conditionally on \(Ext^c, \exp (-(\lambda _pn - \mu k)t)U_t^n(f - \langle f, \varphi ^{\otimes n}\rangle )\) converges in probability to \(\left( {\begin{array}{c}n\\ k\end{array}}\right) W^{n-k}L_3(\varPi _k f)\).

Since in all the corollaries above the normalization is of strictly smaller order than \(|X_t|^n\), they imply in particular that \(|X_t|^{-n} U_t^n(f - \langle f,\varphi ^{\otimes n}\rangle ) \rightarrow 0\) in probability, which proves the second part of Theorem 9 (as announced in Sect. 5.3).
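To make the comparison with \(|X_t|^n\) explicit, one can divide each normalization by \(|X_t|^n\). On \(Ext^c\), \(|X_t|e^{-\lambda _p t}\) converges to a limit that is strictly positive on \(Ext^c\), so \(|X_t|\) grows exponentially; for \(k \ge 1\) each of the ratios below then tends to \(0\), and the convergence in distribution in Corollaries 30 to 32 together with Slutsky's lemma yields the convergence to \(0\) in probability:

\[
\frac{|X_t|^{\,n-k/2}}{|X_t|^{n}} = |X_t|^{-k/2},\qquad
\frac{t^{k/2}|X_t|^{\,n-k/2}}{|X_t|^{n}} = \Bigl (\frac{t}{|X_t|}\Bigr )^{k/2},\qquad
\frac{e^{(\lambda _p n-\mu k)t}}{|X_t|^{n}} = \bigl (|X_t|e^{-\lambda _p t}\bigr )^{-n} e^{-\mu k t}.
\]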

References

Adamczak, R., Miłoś, P.: CLT for Ornstein–Uhlenbeck branching particle system. arXiv:1111.4559 (2011)

Athreya, K.B., Ney, P.E.: Branching Processes. Die Grundlehren der mathematischen Wissenschaften, Band 196. Springer, New York (1972)

Billingsley, P.: Convergence of Probability Measures. Wiley, New York (1999)

Birkner, M., Zähle, I.: A functional CLT for the occupation time of state-dependent branching random walk. Ann. Probab. 35(6), 2063–2090 (2007)

Borisov, I.S., Volodko, N.V.: Orthogonal series and limit theorems for canonical \(U\)- and \(V\)-statistics of stationarily connected observations. Mat. Tr. 11(1), 25–48 (2008)

Borovkova, S., Burton, R., Dehling, H.: Limit theorems for functionals of mixing processes with applications to \(U\)-statistics and dimension estimation. Trans. Am. Math. Soc. 353(11), 4261–4318 (2001). (electronic)

de la Peña, V.H., Giné, E.: Decoupling. Probability and Its Applications. From Dependence to Independence. Randomly Stopped Processes. \(U\)-statistics and Processes. Martingales and Beyond. Springer, New York (1999)

Dehling, H., Taqqu, M.S.: The limit behavior of empirical processes and symmetric statistics for stationary sequences. In: Proceedings of the 46th Session of the International Statistical Institute, vol. 4 (Tokyo, 1987), vol. 52, pp. 217–234 (1987)

Del Moral, P., Doucet, A., Peters, G.W.: Sharp propagation of chaos estimates for Feynman–Kac particle models. Teor. Veroyatn. Primen. 51(3), 552–582 (2006)

Del Moral, P., Patras, F., Rubenthaler, S.: Tree based functional expansions for Feynman–Kac particle models. Ann. Appl. Probab. 19(2), 778–825 (2009)