Abstract

This paper argues that the IPCC has oversimplified the issue of uncertainty in its Assessment Reports, which can lead to misleading overconfidence. A concerted effort by the IPCC is needed to identify better ways of framing the climate change problem, explore and characterize uncertainty, reason about uncertainty in the context of evidence-based logical hierarchies, and eliminate bias from the consensus building process itself.


1 Introduction

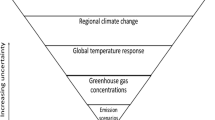

The challenge of framing and communicating uncertainty about climate change is a symptom of the broader challenge of understanding and reasoning about the complex climate system. The complexity of the climate system arises from its very large number of degrees of freedom, the number of its subsystems and the complexity of the linkages among them, and the nonlinear and chaotic nature of the atmosphere and ocean. Our understanding of the complex climate system is hampered by myriad uncertainties, ignorance, and cognitive biases. The epistemology of computer simulations of complex systems is a new and active area of research among scientists, philosophers, and the artificial intelligence community. How to reason about uncertainties in the complex climate system and its computer simulations is neither simple nor obvious.

How has the IPCC¹ dealt with the challenge of uncertainty in the complex climate system? Prior to the Moss and Schneider (2000) guidance paper, the IPCC dealt with uncertainty in an ad hoc manner. The Moss-Schneider guidelines raised a number of important issues regarding the identification and communication of uncertainties. However, the actual implementation of this guidance adopted a subjective perspective, or “judgmental estimates of confidence.” Defenders of the IPCC uncertainty characterization argue that a subjective consensus expressed in simple terms is more easily understood by policy makers.

The consensus approach used by the IPCC to characterize uncertainty has received a number of criticisms. Van der Sluijs et al. (2010a) find that the IPCC consensus strategy underexposes scientific uncertainties and dissent, making the chosen policy vulnerable to scientific error and limiting the political playing field. Van der Sluijs et al. (2010b) argue that matters on which no consensus can be reached continue to receive too little attention by the IPCC, even though this dissension can be highly policy-relevant. Oppenheimer et al. (2007) point out the need to guard against overconfidence and argue that the IPCC consensus emphasizes expected outcomes, whereas it is equally important that policy makers understand the more extreme possibilities that consensus may exclude or downplay. Gruebler and Nakicenovic (2001) opine that “there is a danger that the IPCC consensus position might lead to a dismissal of uncertainty in favor of spuriously constructed expert opinion.”

The consensus approach being used by the IPCC has failed to produce a thorough portrayal of the complexities of the problem and the associated uncertainties in our understanding. While the public may not understand the complexity of the science or be culturally predisposed to accept the consensus, they can certainly understand the vociferous arguments over the science portrayed by the media. Better characterization of uncertainty and ignorance and a more realistic portrayal of confidence levels could go a long way towards reducing the “noise” and animosity portrayed in the media that fuels the public distrust of climate science and acts to stymie the policy process. An improved characterization of uncertainty and ignorance would promote better overall understanding of the science and how to best target resources to improve understanding. Further, improved understanding and characterization of uncertainty is critical information for the development of robust policy options.

2 Indeterminacy and framing of the climate change problem

An underappreciated aspect of uncertainty is associated with the questions that do not even get asked. Wynne (1992) argues that scientific knowledge typically investigates “a restricted agenda of defined uncertainties—ones that are tractable—leaving invisible a range of other uncertainties, especially about the boundary conditions of applicability of the existing framework of knowledge to new situations.” Wynne refers to this as indeterminacy, which arises from the “unbounded complexity of causal chains and open networks.” Indeterminacies can arise from not knowing whether the type of scientific knowledge and the questions posed are appropriate and sufficient for the circumstances in which the knowledge is applied.

Such indeterminacy is inherent in how climate change is framed. De Boer et al. (2010) state that: “Frames act as organizing principles that shape in a ‘hidden’ and taken-for-granted way how people conceptualize an issue.” De Boer et al. further state that such frames can direct how a problem is stated, who should make a statement about it, what questions are relevant, and what answers might be appropriate.

The UNFCCC² treaty provides the rationale for framing the IPCC assessment of climate change and its uncertainties in terms of identifying dangerous climate change and providing input for decision making regarding CO2 stabilization targets. Within this framing, key scientific questions receive little attention. In detecting and attributing 20th century climate change, the IPCC AR4³ all but dismisses natural internal multidecadal variability in the attribution argument. The AR4 conducted no systematic assessment of the impact of uncertainty in 20th century solar variability on attribution, and indirect solar impacts on climate are poorly understood and remain unexplored in any meaningful way. The IPCC Working Group II report focuses on possible dangerous impacts of anthropogenic warming, with little summary mention of how warming might benefit certain regions or sectors.

The framework associated with setting a CO2 stabilization target focuses research and analysis on using expert judgment to identify a most likely value of sensitivity/warming and to narrow the range of expected values, rather than on fully exploring the uncertainty and the possibility of black swans (Taleb 2007) and dragon kings (Sornette 2009). The concept of imaginable surprise was discussed in the Moss-Schneider uncertainty guidance documentation, but the AR4 report seems largely to have ignored such possibilities. A key issue is to identify potential black swans in natural climate variation in the absence of human influence, on time scales of one to two centuries (e.g. Ferrari 2009). As Ferrari states: “Weather has these Black Swans too. It follows that blindly assuming continued warming climate trends will lead to the observation of slightly warmer temperatures year over year may work for a while, until a cold snap like the one that hit the eastern US at the beginning of last winter produces one of the coldest starts to winter in many years.” Sharp conflicts over both the science and the policy reflect this overly narrow framing of the climate change problem. Until the climate change problem is reframed or the IPCC considers multiple frames, both scientific and policy debates will continue to ignore crucial elements of climate while formulating confidence levels about anthropogenic climate change that are too high and potentially misleading.

3 Uncertainty, ignorance and confidence

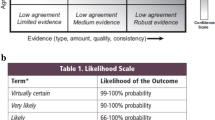

The Uncertainty Guidance Paper by Moss and Schneider (2000) recommended a common vocabulary to express quantitative levels of confidence based on the amount of evidence (number of sources of information) and the degree of agreement (consensus) among experts. This assessment strategy omits any systematic analysis of the types and levels of uncertainty and of the quality of the evidence, and, more importantly, dismisses indeterminacy and ignorance as important factors in assessing these confidence levels. In the context of the narrow framing of the problem, this uncertainty assessment strategy can turn the consensus into a self-fulfilling prophecy. For example, the IPCC’s framing of the climate change problem in the context of anthropogenic forcing has resulted in substantial elaboration of this line of research, with the multi-decadal modes of natural internal climate variability being all but dismissed as an important explanation of any aspect of the 20th century global climate change attribution.

The uncertainty guidance provided for the IPCC AR4 distinguished between levels of confidence in scientific understanding and the likelihoods of specific results. In practice, primary conclusions in the AR4 included an ambiguous mixture of likelihood and confidence statements. Curry and Webster (2011) have raised specific issues regarding the statement “Most of the observed increase in global average temperatures since the mid-20th century is very likely due to the observed increase in anthropogenic greenhouse gas concentrations”: apparent circular reasoning in the attribution argument, and ambiguity in the attribution statement itself. Risbey and Kandlikar (2007) describe ambiguities in actually applying likelihood and confidence, e.g. situations where likelihood and confidence cannot be fully separated and likelihood levels contain implicit confidence levels.
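The calibrated likelihood vocabulary behind statements such as the one above can be sketched in a few lines. The thresholds below are transcribed from the AR4 uncertainty guidance note as I understand it (e.g. “very likely” for a probability above 90%) and should be checked against the note itself; the names `AR4_LIKELIHOOD`, `applicable_terms` and `most_specific_term` are hypothetical. The sketch makes one point visible: the terms are nested, so a probability of 0.92 is simultaneously “very likely,” “likely,” and “more likely than not,” and none of these terms says how much confidence the authors place in the probability estimate itself.

```python
from typing import Callable, List, Tuple

# Calibrated likelihood terms as transcribed from the IPCC AR4 uncertainty
# guidance note (verify the thresholds against the note). Each entry holds
# the term, a test on the probability p, and the width of the band covered.
AR4_LIKELIHOOD: List[Tuple[str, Callable[[float], bool], float]] = [
    ("virtually certain", lambda p: p > 0.99, 0.01),
    ("extremely likely", lambda p: p > 0.95, 0.05),
    ("very likely", lambda p: p > 0.90, 0.10),
    ("likely", lambda p: p > 0.66, 0.34),
    ("more likely than not", lambda p: p > 0.50, 0.50),
    ("about as likely as not", lambda p: 0.33 <= p <= 0.66, 0.33),
    ("unlikely", lambda p: p < 0.33, 0.33),
    ("very unlikely", lambda p: p < 0.10, 0.10),
    ("extremely unlikely", lambda p: p < 0.05, 0.05),
    ("exceptionally unlikely", lambda p: p < 0.01, 0.01),
]


def applicable_terms(p: float) -> List[str]:
    """Every likelihood term whose probability band contains p."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability in [0, 1]")
    return [term for term, test, _ in AR4_LIKELIHOOD if test(p)]


def most_specific_term(p: float) -> str:
    """The applicable term with the narrowest probability band."""
    terms = set(applicable_terms(p))
    width, term = min((w, t) for t, _, w in AR4_LIKELIHOOD if t in terms)
    return term


# A single probability satisfies several nested terms at once.
print(applicable_terms(0.92))
print(most_specific_term(0.92))
```

The lookup captures only the likelihood axis; a separate confidence judgment would have to be carried alongside it, which is exactly where the ambiguities noted by Risbey and Kandlikar arise.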

Numerous methods of categorizing risk and uncertainty have been described in the context of different disciplines and various applications (e.g. Bammer and Smithson 2008). Of particular relevance for climate change are schemes for analyzing uncertainty when conducting risk analyses. My primary concerns about the IPCC’s characterization of uncertainty are twofold:

- lack of discrimination between statistical uncertainty and scenario uncertainty
- failure to meaningfully address the issue of ignorance

Ignorance is that which is unknown. Walker et al. (2003) categorize the following different levels of ignorance. Total ignorance implies a deep level of uncertainty, to the extent that we do not even know that we do not know. Recognized ignorance refers to fundamental uncertainty in the mechanisms being studied and a weak scientific basis for developing scenarios. Reducible ignorance may be resolved by conducting further research, whereas irreducible ignorance implies that research cannot improve knowledge (e.g. what happened prior to the big bang). Bammer and Smithson (2008) further distinguish between conscious ignorance (where we know we don’t know what we don’t know), versus unacknowledged or meta-ignorance (where we don’t even consider the possibility of error). The IPCC AR4 uncertainty guidance⁴ neglects to include ignorance in its characterization of uncertainty, although inclusion of ignorance was explicitly recommended by Kandlikar et al. (2005). Overconfidence is an inevitable result of neglecting ignorance.

Following Walker et al. (2003), statistical uncertainty is distinguished from scenario uncertainty, where scenario uncertainty implies that it is not possible to formulate the probability of occurrence of particular outcomes. A scenario is a plausible but unverifiable description of how the system and/or its driving forces may develop in the future. Scenarios may be regarded as a range of discrete possibilities with no a priori allocation of likelihood. Whereas the IPCC reserves the term “scenario” for emissions scenarios, Betz (2009) argues for the logical necessity of considering each climate model simulation as a modal statement of possibility, stating what is possibly true about the future climate system, which is consistent with scenario uncertainty.

Stainforth et al. (2007) argue that model inadequacy and an insufficient number of simulations in the ensemble preclude producing meaningful probability distributions from the frequency of model outcomes of future climate. Stainforth et al. state: “Given nonlinear models with large systematic errors under current conditions, no connection has been even remotely established for relating the distribution of model states under altered conditions to decision-relevant probability distributions. . . Furthermore, they are liable to be misleading because the conclusions, usually in the form of PDFs,⁵ imply much greater confidence than the underlying assumptions justify.”

Stainforth et al. make a statement that is equivalent to Betz’s modal statement of possibility: “Each model run is of value as it presents a ‘what if’ scenario from which we may learn about the model or the Earth system.” Insufficiently large initial condition ensembles combined with model parameter and structural uncertainty preclude forming a PDF from climate model simulations that has much meaning in terms of establishing a mean value or confidence intervals. In the presence of scenario uncertainty, which characterizes climate model simulations, attempts to produce a PDF for climate sensitivity (e.g. Annan and Hargreaves 2009) are arguably misguided and misleading.
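The ensemble-size part of this argument can be illustrated with a toy calculation, under the deliberately generous assumption that the model is perfect: ensemble members are drawn from a known standard normal distribution (arbitrary units), and the empirical 5–95% range is estimated from each ensemble. The function names and the choice of distribution are hypothetical. Even in this idealized case, the estimated upper bound varies widely from one small ensemble to the next; model inadequacy and parameter and structural uncertainty, which are central to Stainforth et al.’s argument, are not represented at all and would only widen the gap.

```python
import random
import statistics
from typing import List, Tuple


def ninety_percent_range(members: List[float]) -> Tuple[float, float]:
    """Empirical 5th and 95th percentiles of one ensemble."""
    # 19 cut points at 5% steps; "inclusive" interpolates within the sample.
    cuts = statistics.quantiles(members, n=20, method="inclusive")
    return cuts[0], cuts[-1]


def spread_of_upper_bound(ensemble_size: int, replicates: int = 200,
                          seed: int = 1) -> float:
    """Standard deviation, across repeated ensembles, of the estimated 95th
    percentile. Members come from a perfect standard normal 'model', an
    idealization with no parameter or structural error."""
    rng = random.Random(seed)
    uppers = []
    for _ in range(replicates):
        members = [rng.gauss(0.0, 1.0) for _ in range(ensemble_size)]
        uppers.append(ninety_percent_range(members)[1])
    return statistics.stdev(uppers)


for size in (5, 20, 500):
    print(f"{size:4d} members: spread of estimated 95th percentile = "
          f"{spread_of_upper_bound(size):.3f}")
```

Comparing the printed spreads for ensembles of 5, 20 and 500 members shows how much of an apparent confidence interval can be sampling noise alone, before any question of model adequacy arises.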

A comprehensive approach to uncertainty management and elucidation of the elements of uncertainty is described by the NUSAP⁶ scheme, which includes methods to determine the pedigree and quality of the relevant data and methods used (e.g. van der Sluijs et al. 2005a,b). The complexity of the NUSAP scheme arguably precludes its widespread adoption by the IPCC. The challenge is to characterize uncertainty in a complete way while retaining sufficient simplicity and flexibility for its widespread adoption. In the context of risk analysis, Spiegelhalter and Riesch (2011) describe a scheme for characterizing uncertainty that covers the range from complete numerical formalization of probabilities to indeterminacy and ignorance, and includes the possibility of unspecified but surprising events. Quality of evidence is an important element of both the NUSAP scheme and the scheme described by Spiegelhalter and Riesch (2011). The GRADE scale of Guyatt et al. (2008) provides a simple yet useful method for judging quality of evidence, whereas NUSAP uses a more complex scheme for judging quality.

4 Consensus and disagreement

The uncertainty associated with climate science and the range of decision making frameworks and policy options provides much fodder for disagreement. Here I argue that the IPCC’s consensus approach enforces overconfidence, marginalization of skeptical arguments, and belief polarization. The role of cognitive biases (e.g. Tversky and Kahneman 1974) has received some attention in the context of the climate change debate, as summarized by Morgan et al. (2009). However, the broader issues of the epistemology and psychology of consensus and disagreement have received little attention in the context of the climate change problem.

Kelly (2005, 2008) provides some general insights into the sources of belief polarization that are relevant to the climate change problem. Kelly (2008) argues that “a belief held at earlier times can skew the total evidence that is available at later times, via characteristic biasing mechanisms, in a direction that is favorable to itself.” Kelly (2008) also finds that “All else being equal, individuals tend to be significantly better at detecting fallacies when the fallacy occurs in an argument for a conclusion which they disbelieve, than when the same fallacy occurs in an argument for a conclusion which they believe.” Kelly (2005) provides insights into the consensus building process: “As more and more peers weigh in on a given issue, the proportion of the total evidence which consists of higher order psychological evidence [of what other people believe] increases, and the proportion of the total evidence which consists of first order evidence decreases . . . At some point, when the number of peers grows large enough, the higher order psychological evidence will swamp the first order evidence into virtual insignificance.” Kelly (2005) concludes: “Over time, this invisible hand process tends to bestow a certain competitive advantage to our prior beliefs with respect to confirmation and disconfirmation. . . In deciding what level of confidence is appropriate, we should take into account the tendency of beliefs to serve as agents in their own confirmation.”
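One simple mechanism by which higher order evidence can swamp first order evidence is double counting: treating each peer’s agreement as an independent piece of evidence when all the peers are reacting to the same first order evidence. The sketch below, with hypothetical numbers and a hypothetical `posterior` helper, applies Bayes’ rule in odds form both ways. It illustrates the point and is not Kelly’s formal model.

```python
from typing import Sequence


def posterior(prior: float, likelihood_ratios: Sequence[float]) -> float:
    """Bayes' rule in odds form. Multiplying likelihood ratios is valid only
    when the pieces of evidence are conditionally independent."""
    odds = prior / (1.0 - prior)
    for lr in likelihood_ratios:
        odds *= lr
    return odds / (1.0 + odds)


# Hypothetical numbers: one piece of first order evidence favors H by a
# likelihood ratio of 3, and ten peers who have seen only that same evidence
# each endorse H.
first_order = [3.0]
peers = 10

# Endorsements add no information beyond the shared evidence.
honest = posterior(0.5, first_order)
# Naive: each endorsement is counted as a fresh, independent ratio of 3.
naive = posterior(0.5, first_order + [3.0] * peers)

print(round(honest, 3), round(naive, 6))
```

The first order evidence is identical in both calculations; the near-certainty of the naive result comes entirely from counting the same evidence eleven times.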

So what are the implications of Kelly’s arguments for the IPCC? Cognitive biases in the context of an institutionalized consensus building process have arguably resulted in the consensus becoming increasingly confirmed in a self-reinforcing way. The consensus process of the IPCC has marginalized dissenting skeptical voices, who are commonly dismissed as “deniers” (e.g. Hasselman 2010). Experiences of several IPCC reviewers in this regard have been publicly documented (e.g. McKitrick 2008; McKitrick,⁷ McIntyre,⁸ Christy⁹). This “invisible hand” that marginalizes skeptics is operating to the substantial detriment of climate science, as well as biasing policies that are informed by climate science. The importance of skepticism is aptly summarized by Kelly (2008): “all else being equal, the more cognitive resources one devotes to the task of searching for alternative explanations, the more likely one is to hit upon such an explanation, if in fact there is an alternative to be found.”

The intense disagreement between scientists that support the IPCC consensus and skeptics becomes increasingly polarized as a result of the “invisible hand” described by Kelly (2005, 2008). Disagreement itself can be evidence about the quality and sufficiency of the evidence. Disagreement can arise from disputed interpretations as proponents are biased by excessive reliance on a particular piece of evidence. Disagreement can result from ‘conflicting certainties,’ whereby competing hypotheses are each buttressed by different lines of evidence, each of which is regarded as ‘certain’ by its proponents. Conflicting certainties arise from differences in chosen assumptions, neglect of key uncertainties, and the natural tendency to be overconfident about how well we know things (e.g. Morgan et al. 2009).

5 Reasoning about uncertainty

The IPCC objective is to assess existing knowledge about climate change. The IPCC assessment process combines a compilation of evidence with subjective Bayesian reasoning. This process is described by Oreskes (2007) as presenting a ‘consilience of evidence’ argument, which consists of independent lines of evidence that are explained by the same theoretical account. Oreskes draws an analogy for this approach with what happens in a legal case.

The consilience of evidence argument is not convincing unless it includes parallel evidence-based analyses for competing hypotheses. Any system that is more inclined to admit one type of evidence or argument than another tends to accumulate variations in the direction towards which the system is biased. In a Bayesian analysis with multiple lines of evidence, one could conceivably assemble enough lines of evidence to produce a high confidence level for each of two opposing arguments, which is referred to as the ambiguity of competing certainties. If one acknowledges a substantial level of uncertainty and ignorance, the competing certainties disappear.
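The ambiguity of competing certainties can be reproduced with a toy Bayesian calculation. In the sketch below, the six likelihood ratios are hypothetical, the lines of evidence are assumed to be conditionally independent, and the helper name is invented for illustration. Each camp admits only the evidence favoring its own conclusion and reaches more than 95% confidence in opposite directions; weighing all six lines together leaves the posterior close to even odds.

```python
import math
from typing import Dict, Iterable


def posterior_from_log_odds(prior: float, lrs: Iterable[float]) -> float:
    """Posterior P(H) after adding log likelihood ratios to the prior log odds
    (assumes conditionally independent lines of evidence)."""
    log_odds = math.log(prior / (1.0 - prior)) + sum(math.log(lr) for lr in lrs)
    return 1.0 / (1.0 + math.exp(-log_odds))


# Hypothetical pool of lines of evidence, each summarized by a likelihood
# ratio P(e | H) / P(e | not H): > 1 favors H, < 1 favors not H.
evidence: Dict[str, float] = {
    "e1": 4.0, "e2": 3.0, "e3": 2.5,   # favor H
    "e4": 0.25, "e5": 0.3, "e6": 0.5,  # favor not H
}

# Each camp admits only the lines that support its own conclusion.
pro_h = posterior_from_log_odds(0.5, [lr for lr in evidence.values() if lr > 1])
con_h = posterior_from_log_odds(0.5, [lr for lr in evidence.values() if lr < 1])
# All lines weighed together against both hypotheses.
pooled = posterior_from_log_odds(0.5, evidence.values())

print(f"P(H) for camp H:     {pro_h:.3f}")
print(f"P(H) for camp not-H: {con_h:.3f}")
print(f"P(H), all evidence:  {pooled:.3f}")
```

Working in log odds makes the selection effect easy to read: each line adds or subtracts a fixed amount of weight, and discarding the lines with the “wrong” sign is enough to manufacture certainty on either side.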

To be convincing, the arguments for climate change need to change from the one-sided consilience of evidence model to parallel evidence-based analyses of competing hypotheses. The rationale for parallel evidence-based analyses of competing hypotheses is eloquently described by Kelly’s (2008) key epistemological fact:

“For a given body of evidence and a given hypothesis that purports to explain that evidence, how confident one should be that the hypothesis is true on the basis of the evidence depends on the space of alternative hypotheses of which one is aware. In general, how strongly a given body of evidence confirms a hypothesis is not solely a matter of the intrinsic character of the evidence and the hypothesis. Rather, it also depends on the presence or absence of plausible competitors in the field. It is because of this that the mere articulation of a plausible alternative hypothesis can dramatically reduce how likely the original hypothesis is on one’s present evidence.”
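Kelly’s point can be made concrete with Bayes’ rule over an explicit set of hypotheses. In the hypothetical numbers below, the evidence E and the hypothesis H1 are unchanged throughout; what changes is the space of alternatives. Before a rival is articulated, “something else” is judged to explain E poorly; once a plausible rival H2, which explains E as well as H1 does, is split off from the catch-all, the posterior for H1 falls from about 0.89 to 0.64. The helper `posteriors` is hypothetical.

```python
from typing import Dict, Tuple


def posteriors(hyps: Dict[str, Tuple[float, float]]) -> Dict[str, float]:
    """Posterior over an explicit set of hypotheses.

    hyps maps a name to (prior, P(E | hypothesis)); the priors must sum to 1.
    """
    if abs(sum(prior for prior, _ in hyps.values()) - 1.0) > 1e-9:
        raise ValueError("priors must sum to 1")
    joint = {h: prior * lik for h, (prior, lik) in hyps.items()}
    total = sum(joint.values())
    return {h: j / total for h, j in joint.items()}


# Before: only H1 is in view; with no rival explanation on the table, the
# evidence E is judged improbable under "something else".
before = posteriors({"H1": (0.5, 0.8), "other": (0.5, 0.1)})

# After: a plausible rival H2 that explains E as well as H1 does is
# articulated and split off from the catch-all.
after = posteriors({"H1": (0.5, 0.8), "H2": (0.25, 0.8), "other": (0.25, 0.1)})

print(round(before["H1"], 3), round(after["H1"], 3))
```

The drop comes from reassessing how well the alternatives account for E, not from any new observation, which is the sense in which merely articulating an alternative can reduce confidence.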

Parallel evidence-based analyses of competing hypotheses provide an explicit and important role for skeptics. Further, such an analysis provides a framework in which scientists with a plurality of viewpoints participate in an assessment. Disagreement then becomes the basis for focusing research in a certain area, and so moves the science forward. The IPCC detection and attribution analysis is conducted using forced and unforced climate model simulations, which seems consistent with this idea. However, the inferences drawn from these simulations in the attribution assessment neglect to seriously consider natural variability (forced and unforced) in a manner that accounts for uncertainty in natural forcing and a coherent signal from multi-decadal internal oscillations (e.g. Curry and Webster 2011).

Reasoning about uncertainty in the context of evidence-based analyses is not at all straightforward for the climate problem. Van der Sluijs et al. (2005) argue that, for problems of this complexity, uncertainty methods such as subjective probability or Bayesian updating alone are not suitable, because the unquantifiable uncertainties and ignorance dominate the quantifiable uncertainties. Any quantifiable Bayesian uncertainty analysis “can thus provide only a partial insight into what is a complex mass of uncertainties” (Van der Sluijs et al. 2005).

Expert judgments about confidence levels are made by the IPCC on issues that are dominated by unquantifiable uncertainties. Because of the complexity of the issues, individual experts use different mental models and heuristics for evaluating the interconnected evidence. Biases can abound when reasoning and making judgments about such a complex problem. Bias can occur (e.g. Curry and Webster 2011) by excessive reliance on a particular piece of evidence (e.g. CO2 forcing), the presence of cognitive biases in heuristics (e.g. anchoring of climate sensitivity values to a narrow range or most likely value), failure to account for indeterminacy and ignorance (endemic throughout the IPCC assessment process), and logical fallacies and errors including circular reasoning (e.g. inverse calculations of aerosol optical depth used to draw conclusions regarding attribution). Further, the consensus building process itself can be a source of bias (Kelly 2005).

Identifying the most important uncertainties and introducing a more objective assessment of confidence levels requires introducing a more disciplined logic into the climate change assessment process. A useful approach would be the development of hierarchical logical hypothesis models that provide a structure for assembling the evidence and arguments in support of the main hypotheses or propositions. A logical hypothesis hierarchy (or tree) links the root hypothesis to lower level evidence and hypotheses. While developing a logical hypothesis tree is somewhat subjective and involves expert judgments, the evidential judgments are made at a lower level in the logical hierarchy. Essential judgments and opinions relating to the evidence and the arguments linking the evidence are thus made explicit, lending structure and transparency to the assessment. To the extent that the logical hypothesis hierarchy decomposes arguments and evidence to the most elementary propositions, the sources of disputes are easily illuminated and potentially minimized.
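As a minimal sketch of the data structure, assuming each leaf of the hierarchy carries a single numerical degree of support in [0, 1] from an expert, the code below represents a hypothesis tree and lists the leaf judgments explicitly. Comparing two experts’ trees leaf by leaf then locates exactly where they disagree. The proposition labels, the support values, and the 0.2 disagreement threshold are all hypothetical, and no rule for propagating support up the tree is assumed here.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterator, List, Optional, Tuple

Path = Tuple[str, ...]


@dataclass
class Node:
    """A proposition in a logical hypothesis hierarchy. Only leaves carry an
    expert's evidential judgment, as a degree of support in [0, 1]."""
    name: str
    children: List["Node"] = field(default_factory=list)
    support: Optional[float] = None


def leaf_judgments(node: Node, path: Path = ()) -> Iterator[Tuple[Path, float]]:
    """Yield (path from root, support) for every leaf, making each
    evidential judgment explicit."""
    path = path + (node.name,)
    if not node.children:
        if node.support is None:
            raise ValueError("leaf " + "/".join(path) + " has no judgment")
        yield path, node.support
    for child in node.children:
        yield from leaf_judgments(child, path)


def disputes(a: Node, b: Node, tol: float = 0.2) -> List[Tuple[str, float, float]]:
    """Leaves where two experts' judgments differ by more than tol."""
    ja, jb = dict(leaf_judgments(a)), dict(leaf_judgments(b))
    return [("/".join(p), ja[p], jb[p])
            for p in ja if p in jb and abs(ja[p] - jb[p]) > tol]


def example_tree(s: Dict[str, float]) -> Node:
    """Hypothetical hierarchy: root H0 rests on sub-hypotheses H1 and H2,
    which rest on evidence items E1 to E3."""
    return Node("H0", [
        Node("H1", [Node("E1", support=s["E1"]), Node("E2", support=s["E2"])]),
        Node("H2", [Node("E3", support=s["E3"])]),
    ])


expert_a = example_tree({"E1": 0.9, "E2": 0.7, "E3": 0.6})
expert_b = example_tree({"E1": 0.85, "E2": 0.3, "E3": 0.55})
print(disputes(expert_a, expert_b))
```

Here the two hypothetical experts differ substantially only on E2, so the dispute is traced to one elementary proposition rather than to the root hypothesis as a whole.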

Bayesian Network Analysis using weighted binary tree logic is one possible choice for such an analysis. However, a weakness of Bayesian Networks is their two-valued logic and inability to deal with ignorance: evidence is either for or against the hypothesis. An influence diagram is a generalization of a Bayesian Network that represents the relationships and interactions between a series of propositions or evidence (Spiegelhalter 1986). Cui and Blockley (1990) introduce interval probability three-valued logic into an influence diagram. The three-valued logic has an explicit role for uncertainties (the so-called “Italian flag”) that recognizes that evidence may be incomplete or inconsistent, of uncertain quality or meaning. Combination of evidence proceeds generally as for a Bayesian combination, but is modified by the factors of sufficiency, dependence and necessity.
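The “Italian flag” reading of an interval probability can be sketched as follows. A proposition’s support is an interval [sn, sp]: the lower bound is the evidence for it (green), one minus the upper bound is the evidence against it (red), and the gap between them is the uncertainty the evidence leaves open (white); a conventional Bayesian probability is the special case with no white. The combination rules shown are only the interval versions of the product rules for independent propositions; they omit the sufficiency, dependence and necessity factors of Cui and Blockley’s scheme, and the class and method names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flag:
    """Interval probability [sn, sp] for a proposition, read as an
    "Italian flag": green = evidence for, red = evidence against,
    white = what the evidence leaves undecided."""
    sn: float  # lower bound of support
    sp: float  # upper bound of support

    def __post_init__(self) -> None:
        if not 0.0 <= self.sn <= self.sp <= 1.0:
            raise ValueError("need 0 <= sn <= sp <= 1")

    @property
    def green(self) -> float:
        return self.sn

    @property
    def white(self) -> float:
        return self.sp - self.sn

    @property
    def red(self) -> float:
        return 1.0 - self.sp

    def negate(self) -> "Flag":
        # Evidence for not-A is evidence against A, and vice versa.
        return Flag(1.0 - self.sp, 1.0 - self.sn)

    def and_(self, other: "Flag") -> "Flag":
        # Conjunction of independent propositions: the product is monotone
        # in each argument, so lower bounds and upper bounds multiply.
        return Flag(self.sn * other.sn, self.sp * other.sp)

    def or_(self, other: "Flag") -> "Flag":
        # Disjunction of independent propositions via De Morgan's law.
        return self.negate().and_(other.negate()).negate()


a = Flag(0.6, 0.9)  # hypothetical support for proposition A
b = Flag(0.5, 0.8)  # hypothetical support for proposition B
both = a.and_(b)
print(round(both.green, 2), round(both.white, 2), round(both.red, 2))
```

Combining two moderately supported propositions leaves a wide white band, which a single Bayesian point probability would hide.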

Hall et al. (2005) apply influence diagrams and interval probability theory to a study seeking the probability that the severe 2000 UK floods were attributable to climate change. Hall et al. used influence diagrams to represent the relevant evidential reasoning and uncertainties. Three approaches to uncertainty in influence diagrams were compared: Bayesian belief networks and two interval probability methods, Interval Probability Theory and Support Logic Programming. Hall et al. argue that “interval probabilities represent ambiguity and ignorance in a more satisfactory manner than the conventional Bayesian alternative . . . and are attractive in being able to represent in a straightforward way legitimate imprecision in our ability to estimate probabilities.”

Hall et al. conclude that influence diagrams can help to synthesize complex and contentious arguments of relevance to climate change. Breaking down and formalizing expert reasoning can facilitate dialogue between experts, policy makers, and other decision stakeholders. The procedure used by Hall et al. supports transparency and clarifies uncertainties in disputes, in a way that expert judgment about high level root hypotheses fails to do.

6 Conclusions

I have argued that the IPCC has oversimplified the issue of dealing with uncertainty in the climate system, which can lead to misleading overconfidence. Consequently, the IPCC has thoroughly portrayed neither the complexities of the problem nor the associated uncertainties in our understanding. Improved understanding and characterization of uncertainty and ignorance would promote a better overall understanding of the science and of how best to target resources to improve understanding. A concerted effort by the IPCC is needed to identify better ways of framing the climate change problem, exploring and characterizing uncertainty, reasoning about uncertainty in the context of evidence-based logical hierarchies, and eliminating bias from the consensus building process itself. The IPCC should seek advice from the broader community of scientists, engineers, statisticians, social scientists and philosophers in strategizing about ways to improve its understanding and assessment of uncertainty.

Improved characterization of uncertainty and ignorance and a more realistic portrayal of confidence levels could go a long way towards reducing the “noise” and animosity portrayed in the media that fuels the public distrust of climate science that is clouding the policy process. Once a better characterization of uncertainty is accomplished (including indeterminacy and ignorance), then the challenge of communicating uncertainty is much more tractable and ultimately more convincing.

Improved understanding and characterization of uncertainty is critical information for the development of robust policy options. When working with policy makers and communicators, it is essential not to fall into the trap of acceding to inappropriate demands for certainty; the intrinsic limitations of the knowledge base need to be properly assessed and presented to decision makers. Wynne (1992) makes an erudite statement: “the built-in ignorance of science towards its own limiting commitments and assumptions is a problem only when external commitments are built on it as if such intrinsic limitations did not exist.”

Notes

1. Intergovernmental Panel on Climate Change, http://www.ipcc.ch/

2. United Nations Framework Convention on Climate Change, http://unfccc.int/2860.php

3. IPCC 4th Assessment Report, Chapter 9, http://www.ipcc.ch/publications_and_data/ar4/wg1/en/ch9s9-4-1-5.html

4. Guidance Notes for Lead Authors of the IPCC Fourth Assessment Report on Addressing Uncertainties, http://www.ipccwg1.unibe.ch/publications/supportingmaterial/uncertainty-guidancenote.pdf

5. PDF: probability density function

7. Ross McKitrick, “Fix the IPCC”, in the Financial Post, 8/27/10 http://opinion.financialpost.com/2010/08/27/fix-the-ipcc-process/#ixzz0y0p4sz8u

8. Steve McIntyre, “Yamal and IPCC AR4 Review Comments,” http://climateaudit.org/2009/10/05/yamal-and-ipcc-ar4-review-comments/

9. John R. Christy, Testimony to the House Science, Space and Technology Committee, “Examining the Process Concerning Climate Change Assessments,” http://science.house.gov/sites/republicans.science.house.gov/files/documents/hearings/ChristyJR_written_110331_all.pdf

References

Annan JD, Hargreaves JC (2009) On the generation and interpretation of probabilistic estimates of climate sensitivity. Clim Chang 104:423–436

Bammer G, Smithson M (2008) Uncertainty and risk: multidisciplinary perspectives. Earthscan Publications Ltd, 400 pp

Betz G (2009) Underdetermination, model-ensembles and surprises: on the epistemology of scenario-analysis in climatology. J Gen Phil Science 40:3–21

Cui W, Blockley DI (1990) Interval probability theory for evidential support. Int J Intelligence Systems 5:183–192

Curry JA, Webster PJ (2011) Climate science and the uncertainty monster. Bull Amer Meteorol Soc, in revision

De Boer J, Wardekker JA, van der Sluijs JP (2010) Frame-based guide to situated decision making on climate change. Glob Environ Chang 20:502–510

Ferrari M (2009) Anticipating the climate black swan. Weather Trends International. http://www.wxtrends.com/files/whitepapers/anticipating_the_climate_black_swan.pdf

Gruebler A, Nakicenovic N (2001) Identifying dangers in an uncertain climate: we need to research all the potential outcomes, not try to guess which is likeliest to occur. Nature 412:15

Guyatt GH, Oxman AD, Vist GE, Kunz R, Falck-Ytter Y, Alonso-Coello P (2008) GRADE: an emerging consensus on rating quality of evidence and strength of recommendations. BMJ 336:924–926

Hall J, Twyman C, Kay A (2005) Influence diagrams for representing uncertainty in climate-related propositions. Clim Chang 69:343–365

Hasselman K (2010) The climate change game. Nat Geosci 3:511–512

Kandlikar M, Risbey J, Dessai S (2005) Representing and communicating deep uncertainty in climate change assessment. Comptes Rendus Geoscience 337:443–455

Kelly T (2005) The epistemic significance of disagreement. In: Hawthorne J (ed) Philosophical perspectives, vol 19: Epistemology, pp 179–209

Kelly T (2008) Disagreement, dogmatism and belief polarization. J Philosophy 105:611–633

McKitrick R (2008) Response to David Henderson’s ‘Governments and Climate Change Issues: the Flawed Consensus’. In: Walker T, Lynch K (eds) American Institute of Economic Research Economic Education Bulletin, vol 48 no 5 (May 2008), Special Issue “The Global Warming Debate: Science, Economics and Policy,” pp 83–104. http://rossmckitrick.weebly.com/uploads/4/8/0/8/4808045/mckitrick.final.pdf

Morgan G, Dowlatabadi H, Henrion M, Keith D, Lempert R, McBride S, Small M, Wilbanks T (2009) Best practice approaches for characterizing, communicating, and incorporating scientific uncertainty in climate decision making. U.S. Climate Change Science Program Synthesis and Assessment Product 5.2, NOAA, Washington, D.C. http://www.climatescience.gov/Library/sap/sap5-2/final-report/default.htm

Moss RH, Schneider SH (2000) Uncertainties in the IPCC TAR: recommendations to lead authors for more consistent assessment and reporting. In: Pachauri R, Taniguchi T, Tanaka K (eds) Guidance papers on the cross cutting issues of the third assessment report of the IPCC. World Meteorological Organization, Geneva, pp 33–51

Oppenheimer M, O’Neill BC, Webster M, Agrawala S (2007) The limits of consensus. Science 317:1505–1506

Oreskes N (2007) The scientific consensus on climate change: how do we know we’re not wrong? In: DiMento JF, Doughman P (eds) Climate change. MIT Press

Risbey JS, Kandlikar M (2007) Expressions of likelihood and confidence in the IPCC uncertainty assessment process. Clim Chang 85:19–31

Sornette D (2009) Dragon-Kings, Black Swans and the Prediction of Crises. Swiss Finance Institute Research Paper No. 09–36. Available at SSRN: http://ssrn.com/abstract=1470006

Spiegelhalter DJ (1986) A statistical view of uncertainty in expert systems. In: Kanal LN, Lemmer JF (eds) Uncertainty in artificial intelligence. North-Holland, New York, pp 17–48

Spiegelhalter DJ, Riesch H (2011) Don’t know, can’t know: embracing scientific uncertainty when analyzing risk. Phil Trans Roy Soc A, in press

Stainforth DA, Allen MR, Tredger ER, Smith LA (2007) Confidence, uncertainty, and decision-support relevance in climate prediction. Phil Trans Roy Soc A 365:2145–2161

Taleb N (2007) The black swan: the impact of the highly improbable. Random House, New York

Tversky A, Kahneman D (1974) Judgment under uncertainty: heuristics and biases. Science 185:1124–1131

Van der Sluijs JP, Craye M, Funtowicz S, Kloprogge P, Ravetz J, Risbey J (2005) Combining quantitative and qualitative measures of uncertainty in model-based environmental assessment: the NUSAP system. Risk Anal 25:481–492

Van der Sluijs JP, van Est R, Riphagen M (2010a) Room for climate debate: perspectives on the interaction between climate politics, science and the media. Rathenau Instituut-Technology Assessment, The Hague. 96 pp http://www.rathenau.nl/uploads/tx_tferathenau/Room_for_climate_debate.pdf

Van der Sluijs JP, van Est R, Riphagen M (2010b) Beyond consensus: reflections from a democratic perspective on the interaction between climate politics and science. Curr Opin Environ Sustain 2(5–6):409–415

Walker WE, Harremoes P, Rotmans J, van der Sluijs JP, van Asselt MBA, Janssen P, Krayer von Krauss MP (2003) Defining uncertainty: a conceptual basis for uncertainty management in model based decision support. Integr Assess 4:5–17

Wynne B (1992) Uncertainty and environmental learning: reconceiving science and policy in the preventive paradigm. Glob Environ Chang 2:111–127

Acknowledgements

I would like to acknowledge the contributions of the Denizens of my blog Climate Etc. (judithcurry.com) for their insightful comments and discussions on the numerous uncertainty threads. I would also like to acknowledge helpful comments from the editors in improving the manuscript. This research was supported by NOAA through a subcontract from STG.

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.



About this article

Cite this article

Curry, J. Reasoning about climate uncertainty. Climatic Change 108, 723 (2011). https://doi.org/10.1007/s10584-011-0180-z
