Abstract

Partial match queries constitute the most basic type of associative queries in multidimensional data structures such as \(K\)-d trees or quadtrees. Given a query \(\mathbf {q}=(q_0,\ldots ,q_{K-1})\) where \(s\) of the coordinates are specified and \(K-s\) are left unspecified (\(q_i=*\)), a partial match search returns the subset of data points \(\mathbf {x}=(x_0,\ldots ,x_{K-1})\) in the data structure that match the given query, that is, the data points such that \(x_i=q_i\) whenever \(q_i\not =*\). There exists a wealth of results about the cost of partial match searches in many different multidimensional data structures, but most of these results deal with random queries. Only recently have a few papers begun to investigate the cost of partial match queries with a fixed query \(\mathbf {q}\). This paper represents a new contribution in this direction, giving a detailed asymptotic estimate of the expected cost \(P_{{n},\mathbf {q}}\) for a given fixed query \(\mathbf {q}\). From previous results on the cost of partial matches with a fixed query and the ones presented here, a deeper understanding is emerging, uncovering the following functional shape for \(P_{{n},\mathbf {q}}\)

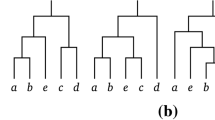

(l.o.t. denotes lower order terms throughout this work) in many multidimensional data structures, which differ only in the exponent \(\alpha \) and the constant \(\nu \), both dependent on s and K, and, for some data structures, on the whole pattern of specified and unspecified coordinates in \(\mathbf {q}\) as well. Although it is tempting to conjecture that this functional shape is “universal”, our experiments suggest that it does not hold for a variant of \(K\)-d trees called squarish \(K\)-d trees.
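To make the definition of a partial match search concrete, the following is a minimal sketch in Python. It assumes a standard \(K\)-d tree in which the discriminating coordinate cycles with the depth, and it represents an unspecified coordinate (\(q_i=*\)) by `None`; the names `Node`, `insert` and `partial_match` are hypothetical and do not come from the paper. The number of visited nodes is the cost whose expectation is written \(P_{{n},\mathbf {q}}\) in the text.

```python
import random

class Node:
    def __init__(self, point, discr):
        self.point = point   # data point, a tuple of K coordinates
        self.discr = discr   # index of the discriminating coordinate
        self.left = None
        self.right = None

def insert(root, point, K, depth=0):
    """Insert into a standard K-d tree (assumption: discriminant = depth mod K)."""
    if root is None:
        return Node(point, depth % K)
    i = root.discr
    if point[i] < root.point[i]:
        root.left = insert(root.left, point, K, depth + 1)
    else:
        root.right = insert(root.right, point, K, depth + 1)
    return root

def partial_match(root, q):
    """Return (matches, visited); q[i] is a value, or None for an unspecified coordinate."""
    matches, visited = [], 0
    stack = [root]
    while stack:
        node = stack.pop()
        if node is None:
            continue
        visited += 1
        if all(qi is None or qi == xi for qi, xi in zip(q, node.point)):
            matches.append(node.point)
        i = node.discr
        if q[i] is None:
            # Unspecified coordinate: both subtrees may contain matches.
            stack.extend((node.left, node.right))
        elif q[i] < node.point[i]:
            stack.append(node.left)
        else:
            stack.append(node.right)
    return matches, visited

random.seed(1)
K = 2
points = [tuple(random.random() for _ in range(K)) for _ in range(2000)]
root = None
for p in points:
    root = insert(root, p, K)

q = (points[0][0], None)          # first coordinate specified, second left as '*'
matches, visited = partial_match(root, q)
print(len(matches), visited)
```

Averaging `visited` over many random trees for one fixed `q` would give an empirical estimate of the expected cost for that query.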


Notes

The algorithm to perform a partial match query is a partial match search; however, sometimes we will abuse the terminology and use the term partial match query when we should actually say partial match search.

For brevity, we do not make the distinction between regular and extreme coordinates; \(\ell _0\), ..., \(\ell _{s-1}\) give the indices of specified coordinates.

From now on, we shall omit the subscript \(\mathbf {u}\) of f to simplify notation.

References

Bentley, J.L.: Multidimensional binary search trees used for associative retrieval. Commun. ACM 18(9), 509–517 (1975)

Bentley, J.L., Finkel, R.A.: Quad trees: a data structure for retrieval on composite keys. Acta Inform. 4(1), 1–9 (1974)

Broutin, N., Neininger, R., Sulzbach, H.: A limit process for partial match queries in random quadtrees and 2-d trees. Ann. Appl. Probab. 23(6), 2560–2603 (2013)

Chanzy, P., Devroye, L., Zamora-Cura, C.: Analysis of range search for random \(k\)-d trees. Acta Inform. 37(4–5), 355–383 (2001)

Chern, H.-H., Hwang, H.-K.: Partial match queries in random \(k\)-d trees. SIAM J. Comput. 35(6), 1440–1466 (2006)

Curien, N., Joseph, A.: Partial match queries in two-dimensional quadtrees: a probabilistic approach. Adv. Appl. Probab. 43(1), 178–194 (2011)

Cunto, W., Lau, G., Flajolet, Ph.: Analysis of \(k\)d-trees improved by local reorganisations. In: Dehne, F., Sack, J.-R., Santoro, N. (eds.), Workshop on Algorithms and Data Structures (WADS’89), Volume 382 of Lecture Notes in Computer Science, pp. 24–38. Springer (1989)

Duch, A., Estivill-Castro, V., Martínez, C.: Randomized \(k\)-dimensional binary search trees. In: International Symposium on Algorithms and Computation (ISAAC), Volume 1533 of Lecture Notes in Computer Science, pp. 199–208. Springer (1998)

Duch, A., Jiménez, R.M., Martínez, C.: Selection by rank in \(k\)-dimensional binary search trees. Random Struct. Algorithms 45(1), 14–37 (2014)

Devroye, L., Jabbour, J., Zamora-Cura, C.: Squarish \(k\)-d trees. SIAM J. Comput. 30(5), 1678–1700 (2000)

Duch, A., Lau, G., Martínez, C.: On the average performance of fixed partial match queries in random relaxed \(k\)-d trees. In: International Meeting on Probabilistic, Combinatorial and Asymptotic Methods for the Analysis of Algorithms (AofA). Discrete Mathematics & Theoretical Computer Science (Proceedings), pp. 103–114 (2014)

Duch, A., Martínez, C.: On the average performance of orthogonal range search in multidimensional data structures. J. Algorithms 44(1), 226–245 (2002)

Feller, W.: An Introduction to Probability Theory and Its Applications. Wiley, New York (1971)

Flajolet, Ph., Odlyzko, A.: Singularity analysis of generating functions. SIAM J. Discrete Math. 3(1), 216–240 (1990)

Flajolet, Ph., Puech, C.: Partial match retrieval of multidimensional data. J. ACM 33(2), 371–407 (1986)

Flajolet, Ph., Sedgewick, R.: Analytic Combinatorics. Cambridge University Press, Cambridge (2009)

Johnson, N.L., Kotz, S., Kemp, A.W.: Univariate Discrete Distributions, 2nd edn. Wiley, New York (1992)

Martínez, C., Panholzer, A., Prodinger, H.: Partial match queries in relaxed multidimensional search trees. Algorithmica 29(1–2), 181–204 (2001)

Acknowledgments

We are very thankful to the two anonymous reviewers of this manuscript for their detailed reports and useful suggestions.


Additional information

This work has been partially supported by funds from the Spanish Ministry for Economy and Competitiveness (MINECO), the European Union (FEDER funds) under Grant COMMAS (Ref. TIN2013-46181-C2-1-R), and the Catalan Agency for Management of Research and University Grants (AGAUR) Grant SGR 2014:1034 (ALBCOM).

Appendices

Appendix 1: Getting the Integral Equation (8)

The hypothesis of Proposition 1 is that there exists some \(\gamma \) such that

$$\begin{aligned} f(z_0,\ldots ,z_{t-1}) = \lim _{n\rightarrow \infty } \frac{P_{{n},\mathbf {r}}}{n^{\gamma }} \end{aligned}$$

exists and is not identically null, with \(z_i = \lim _{n\rightarrow \infty } r_i/n\in (0,1)\), \(0\le i < t\). We shall also assume here that all \(r_i \le n/2\), for otherwise we can replace \(r_i\) by \(n-r_i\).

First of all, in the asymptotic regime, when \(r_i=o(n)\), the probability that we recursively continue the PM search in the right subtree is o(1), so we can assume all extremal ranks are \(r_i=0\) (\(t\le i < s\)), and thus we can rewrite the recurrence as

A second simplification comes from the realization that \(\pi _L^{({i,j})}(\mathbf {r},\mathbf {r'})\), \(\pi _R^{({i,j})}(\mathbf {r},\mathbf {r'})\) are highly concentrated around the expected value of \(\mathbf {r'}\); in particular,

where \(\overleftarrow{r_i}=r_i\) and \(\overleftarrow{r_k}= \frac{j}{n}r_k\) for \(k\not = i\).

Similarly,

where \(\overrightarrow{r_i}=r_i-j-1\) and \(\overrightarrow{r_k}= \frac{n-1-j}{n}r_k\) for \(k\not = i\). Last but not least,

where \(\overleftrightarrow {r_k}= \frac{j}{n}r_k\), \(0\le k < s\). Setting \(C_{{n},\mathbf {r}}:= 0\) if \(n=0\), and \(C_{{n},\mathbf {r}}:= n^{-\gamma }P_{{n},\mathbf {r}}\) if \(n > 0\),

Notice that when \(t\le i < s\), we assume \(r_i=0\) and thus \(\overleftarrow{r_i}=0\) as well. Now, since \(t> 0\),

$$\begin{aligned} \lim _{n\rightarrow \infty } C_{{n},\mathbf {r}} = f(z_0,\ldots ,z_{t-1}) \end{aligned}$$

exists and is not identically null, by hypothesis. If we substitute \(C_{{n},\mathbf {r}}\) by \(f\left( \frac{r_0}{n},\ldots ,\frac{r_{t-1}}{n}\right) \) then

Passing to the limit when \(n\rightarrow \infty \), with \(z_i = \lim _{n\rightarrow \infty } (r_i/n)\), we replace sums by integrals and thus

which can be further manipulated to give

Hence

with

Furthermore,

with the substitution \(z:=z_i/z\) in the first integral and \(z:=(z_i-z)/(1-z)\) in the second.

Besides the integral equation (8), the properties of \(P_{{n},\mathbf {r}}\) translate into several constraints that \(f(z_0,\ldots ,z_{t-1})\) must satisfy:

- (a) The function f is symmetric with respect to any permutation of its arguments.

- (b) For any i, \(0\le i < t\), and \(z_i\in (0,1)\), \(f(z_0,\ldots ,z_{i-1},z_i,z_{i+1},\ldots ,z_{t-1}) = f(z_0,\ldots ,z_{i-1},1-z_i,z_{i+1},\ldots ,z_{t-1})\).

- (c) For any i, \(0\le i < t\),

  $$\begin{aligned}&\lim _{z_i\rightarrow 0} f(z_0,\ldots ,z_{i-1},z_i,z_{i+1},\ldots ,z_{t-1})\\&\qquad =\lim _{z_i\rightarrow 1} f(z_0,\ldots ,z_{i-1},z_i,z_{i+1},\ldots ,z_{t-1}) = 0. \end{aligned}$$

- (d)

  $$\begin{aligned} \int _0^1\cdots \int _0^1 f(y_0,\ldots ,y_{t-1})\,dy_0\cdots dy_{t-1} = \beta (\rho ,\rho _0). \end{aligned}$$

Constraint (d) follows because of (6). In fact, we must have \(\gamma =\alpha (\rho ,\rho _0)\), for otherwise we would have a contradiction with our hypothesis: either we have that \(\lim _{n\rightarrow \infty } P_{{n},\mathbf {r}}/n^{\gamma }=0\) or that limit does not exist (\(\rightarrow \infty \)). Also, because \(\gamma =\alpha (\rho ,\rho _0)=: \alpha \) we must have,

since

Constraint (c) follows from inductive reasoning. Suppose that for any rank vector \(\mathbf {r'}\) with \(s_0+1\) extreme values we have \(P_{{n},\mathbf {r'}}=\Theta \left( n^{\alpha (\rho ,\rho _0+1/K)}\right) \). Since setting \(z_i=0\) or \(z_i=1\) corresponds to one more extreme rank, dividing \(P_{{n},\mathbf {r}}\) by \(n^{\alpha (\rho ,\rho _0)}\) yields that f is 0 there, because \(\alpha (\rho ,\rho _0+1/K) < \alpha (\rho ,\rho _0)\). To prove the basis of this induction, we must analyze the case when all the specified coordinates of a query are extreme. The recurrence for \(P_{{n},\mathbf {r}}\) in this case (\(s_0 = s\)) is greatly simplified. Indeed, for such queries we have

as the query (actually, its rank vector) does not change as we proceed recursively with the PM search; moreover, whenever the discriminant at the root is one of the specified extreme coordinates we will systematically continue in the left subtree. The solution of the recurrence above is straightforward:

that is, \(P_{{n},\mathbf {r}}=\Theta \left( n^{\alpha (\rho _0,\rho _0)}\right) \), as we wanted to show. It is also interesting to note that constraint (c) can also be proved as a consequence of the symmetries (a) and (b), and the symmetries of the weights of the recurrence lead to constraints (a) and (b).

Appendix 2: Solving the Integral Equation (8)

To solve the integral equation (8) given in Proposition 1, together with constraints (a)–(d), we transform it into an equivalent partial differential equation (PDE).

For any function \(f(z_0,z_1,\ldots ,z_{t-1})\) let

and, similarly let

If we set \(T:= \lambda \sum _{i=0}^{t-1}(L_i + R_i)\) where \(\lambda = \frac{\alpha +2}{2t}\) then the function f we are looking for is a non-trivial solution to the fixed-point equation \(f = T[f]\) with the constraints (a)–(d).

Let us now assume that the solution to the integral equation is a function with separable variables, namely \(f(z_0,z_1,\ldots ,z_{t-1}) = \phi _0(z_0)\cdot \phi _1(z_1)\cdots \phi _{t-1}(z_{t-1})\). Because of the symmetry of f (constraint (a)), it follows that we can safely assume \(\phi _0 = \phi _1 = \cdots = \phi _{t-1} =: \phi \). Furthermore, because of constraint (b), we must have \(\phi (z) = \phi (1-z)\) for any \(z\in (0,1)\). We must also have \(\lim _{z\rightarrow 0} \phi (z) = 0\) to satisfy constraint (c).

Going back to the integral equation, if we denote \(\phi _i:= \phi (z_i)\) we must have

If, for all i, \(0\le i < t\),

then

The solution of (14), namely, the solution of

can be obtained by solving the equivalent ordinary differential equation that we obtain applying the operator

to both sides. The linear operator \(\Phi \) allows us to remove the integrals in \(L_i\) and \(R_i\):

In particular, we obtain the following ODE for \(\phi (z)\), after rearranging:

or more conveniently,

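with the initial condition \(\phi (0)=0\). Again we have a second order linear hypergeometric ODE, and without too much effort, as in [9], we can obtain the solution \(\phi (z) = \mu \left( z(1-z)\right) ^{\alpha /2}\), for some constant \(\mu \) and \(\alpha =\alpha (\rho ,\rho _0)\). We have thus

$$\begin{aligned} f(z_0,\ldots ,z_{t-1}) = \nu _{s,t,K}\left( \prod _{i=0}^{t-1} z_i(1-z_i)\right) ^{\alpha /2} \end{aligned}$$

with \(\nu _{s,t, K}:= \mu ^{t}\). This family of solutions (parameterized by the “arbitrary” \(\nu _{s,t, K}\)) obviously satisfies constraints (a), (b) and (c). Constraint (d) yields the sought function, as we impose

$$\begin{aligned} \nu _{s,t,K}\left( \int _0^1 \left( z(1-z)\right) ^{\alpha /2}\,dz\right) ^{t} = \nu _{s,t,K}\left( \frac{\Gamma (\alpha /2+1)^2}{\Gamma (\alpha +2)}\right) ^{t} = \beta (\rho ,\rho _0). \end{aligned}$$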
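As a sanity check on constraints (a)–(d), the following Python sketch evaluates the separable family \(\nu \prod _i (z_i(1-z_i))^{\alpha /2}\) numerically. The values of `alpha`, `t` and the target `2.0` used for \(\beta (\rho ,\rho _0)\) are illustrative assumptions only, and the function names are hypothetical. The one-dimensional integral is compared with the Beta-function closed form \(\Gamma (\alpha /2+1)^2/\Gamma (\alpha +2)\).

```python
import math
from itertools import permutations

def f(z, alpha, nu=1.0):
    """Candidate solution nu * prod_i (z_i * (1 - z_i))**(alpha / 2)."""
    return nu * math.prod((zi * (1.0 - zi)) ** (alpha / 2.0) for zi in z)

def beta_integral(alpha):
    """Closed form of int_0^1 (z(1-z))**(alpha/2) dz = Gamma(a)**2 / Gamma(2a), a = alpha/2 + 1."""
    a = alpha / 2.0 + 1.0
    return math.gamma(a) ** 2 / math.gamma(2.0 * a)

def midpoint_integral(alpha, m=20000):
    """Midpoint-rule approximation of the same one-dimensional integral."""
    h = 1.0 / m
    return h * sum(((k + 0.5) * h * (1.0 - (k + 0.5) * h)) ** (alpha / 2.0)
                   for k in range(m))

def nu_from_beta(beta_value, alpha, t):
    """Constant nu forced by constraint (d) when the t-fold integral must equal beta_value."""
    return beta_value / beta_integral(alpha) ** t

alpha, t = 0.56, 3            # illustrative values, not taken from the paper
z = (0.2, 0.5, 0.7)

# (a) invariance under permutations of the arguments
symmetric = all(math.isclose(f(p, alpha), f(z, alpha)) for p in permutations(z))
# (b) invariance under z_i -> 1 - z_i
reflected = math.isclose(f((1.0 - z[0],) + z[1:], alpha), f(z, alpha))
# (c) the function vanishes as one argument tends to 0
near_zero = f((1e-12,) + z[1:], alpha)
# (d) by separability, the t-fold integral is nu * beta_integral(alpha)**t
nu = nu_from_beta(2.0, alpha, t)   # 2.0 is a placeholder for beta(rho, rho_0)

print(symmetric, reflected, near_zero, nu)
```

Because the solution is a product of identical one-dimensional factors, the \(t\)-fold integral in constraint (d) reduces to the \(t\)-th power of a single Beta integral, which is why a one-dimensional quadrature suffices here.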

Appendix 3: Bounding the Errors

Once we have an explicit form for \(f(\mathbf {z}):= f(z_0,\ldots ,z_{t-1})\), we can compute error bounds for the successive approximations that led us from the recurrence in (7) to the integral equation (8). Our knowledge of the function \(f(z_0,\ldots ,z_{t-1})\) and its derivatives in \((0,1)\) is the key to finding these bounds. First, we can use the trapezoid rule or the Euler-Maclaurin summation formula to bound the error in passing from sums to integrals; for instance

and similarly for the other integrals.
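The passage from sums to integrals can be illustrated numerically. The sketch below, in Python, compares the normalized sum \(\frac{1}{n}\sum _{j} \phi (j/n)\) with \(\int _0^1\phi (z)\,dz\) for \(\phi (z)=(z(1-z))^{\alpha /2}\); the value of `alpha` is an illustrative assumption and the helper names are hypothetical. Since \(\phi (0)=\phi (1)=0\), this left Riemann sum coincides with the trapezoid rule.

```python
import math

def phi(z, alpha):
    """One-dimensional factor (z(1-z))**(alpha/2) of the solution."""
    return (z * (1.0 - z)) ** (alpha / 2.0)

def riemann_sum(alpha, n):
    """(1/n) * sum_{j=0}^{n-1} phi(j/n): the sum that the integral replaces."""
    return sum(phi(j / n, alpha) for j in range(n)) / n

def exact_integral(alpha):
    """int_0^1 phi(z) dz = Gamma(a)**2 / Gamma(2a) with a = alpha/2 + 1."""
    a = alpha / 2.0 + 1.0
    return math.gamma(a) ** 2 / math.gamma(2.0 * a)

alpha = 0.56   # illustrative value, not taken from the paper
errors = {n: abs(riemann_sum(alpha, n) - exact_integral(alpha))
          for n in (100, 1000, 10000)}
for n, err in errors.items():
    print(n, err)
```

The printed gaps shrink as \(n\) grows, which is the behavior the error bounds in this appendix are meant to quantify.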

Now, if we compare recurrence (7) for \(P_{{n},\mathbf {r}}\) to the recurrence (13) for \(C_{{n},\mathbf {r}}\), apart from the normalizing factor \(n^\gamma \), the difference comes from the splitting probabilities \(\pi _L^{({i,j})}(\mathbf {r},\mathbf {r'})\), \(\pi _R^{({i,j})}(\mathbf {r},\mathbf {r'})\) and \(\pi _{B}^{({i,j})} (\mathbf {r},\mathbf {r'},\mathbf {r''})\), which we argued are highly concentrated around their respective means. Here, Laplace’s method for summations can be used to bound the error in that step. For instance, take

for some j such that \(j/n\rightarrow c\), for some constant \(0 < c < 1\). Now, the right-hand side above can be re-written as

and we can deal with each factor separately (here, the fact that \(f(z_0,\ldots ,z_{t-1})=\phi (z_0)\cdots \phi (z_{t-1})\) greatly simplifies the proof). With our assumption that \(r_k/n\rightarrow z_k\) for some \(0 < z_k < 1\), we need just to show that

The splitting probabilities are given by products of the hypergeometric distribution (owing to the independence with which coordinates of each data point are drawn)

and then we can apply the following approximation to the binomial distribution [17] as long as \(r_k=z_k n + o(n)\) and \(j=cn + o(n)\)

where \(\overline{r}_k=r_k\frac{j}{n}\) is the mean value of the hypergeometric distribution.

If we divide the range of summation of \(r'_k\) into three parts, from 0 to \(\overline{r}_k-\Delta -1\), from \(\overline{r}_k-\Delta \) to \(\overline{r}_k+\Delta \) and from \(\overline{r}_k+\Delta +1\) to \(r_k\), we can consider the three parts separately, with the main contribution coming from the middle range. In particular, we need \(\Delta ^3/n^2\rightarrow 0\) as \(n\rightarrow \infty \), that is \(\Delta =o(n^{2/3})\), to be able to apply the de Moivre–Laplace limit theorem to the middle sum. With \(\sigma =r_k\frac{j}{n}\left( 1-\frac{j}{n}\right) \), we have that the middle sum is

where we have also expressed the error bounds for the approximation of the hypergeometric distribution in terms of \(\Delta \); we need \(\Delta =o(\sqrt{n})\) too for the approximation to be of any use. Using \(e^x=1+O(x)\) we can write the sum above as

since \(O(\Delta ^3/n^2)=O(\Delta /n)\) for \(\Delta =o(\sqrt{n})\). Finally, we can expand \(\phi (r'_k/j)=\phi (\overline{r}_k/j + y/j)\) for \(y= r'_k-\overline{r}_k\), \(y\in [-\Delta ,\Delta ]\) as \(\phi (r'_k/j)=\phi (\overline{r}_k/j)+O(\Delta /n)\) to get

To complete this part of the analysis we only need to show that the other two sums (with \(r'_k<\overline{r}_k-\Delta \) and \(r'_k > \overline{r}_k+\Delta \)) are negligible as \(n\rightarrow \infty \). This immediately follows since \(\phi (r'_k/j)\) is bounded by a constant, and we only need to note that the tails of the hypergeometric distribution (or its binomial approximation) decay polynomially as we move away from the mean \(\overline{r}_k\), then exponentially. To have an error bound as small as possible it helps to take \(\Delta \) as large as possible, as long as it remains \(o(\sqrt{n})\).
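The concentration argument can be checked numerically with a small Python sketch. It assumes a single hypergeometric factor in which \(j\) items are drawn without replacement from \(n\), of which \(r_k\) are marked, so that the mean is \(r_k j/n\) as stated above; this parameterization, the numeric values and the function names are assumptions made for illustration. The sketch measures the probability mass within \(\Delta \) of the mean and the gap between the weighted sum of \(\phi (r'_k/j)\) and \(\phi (\overline{r}_k/j)\).

```python
import math

def hypergeom_pmf(x, n, r, j):
    """P[X = x] when j items are drawn without replacement from n, r of them marked."""
    if x < max(0, j - (n - r)) or x > min(r, j):
        return 0.0
    return math.comb(r, x) * math.comb(n - r, j - x) / math.comb(n, j)

def phi(z, alpha):
    """One-dimensional factor (z(1-z))**(alpha/2) of the solution."""
    return (z * (1.0 - z)) ** (alpha / 2.0)

n, r, j, alpha = 2000, 800, 1000, 0.56   # illustrative values only
mean = r * j / n                          # mean of the hypergeometric distribution
delta = int(n ** 0.45)                    # a window of size o(sqrt(n))

lo, hi = int(mean) - delta, int(mean) + delta
total_mass = sum(hypergeom_pmf(x, n, r, j) for x in range(r + 1))
middle_mass = sum(hypergeom_pmf(x, n, r, j) for x in range(lo, hi + 1))

# Weighted sum of phi(r'/j) over the whole range versus phi at the mean.
weighted = sum(hypergeom_pmf(x, n, r, j) * phi(x / j, alpha) for x in range(r + 1))
gap = abs(weighted - phi(mean / j, alpha))

print(middle_mass, gap)
```

For these values almost all of the mass falls in the middle range and the weighted sum is close to \(\phi \) evaluated at the mean, in line with the replacement of the inner sums by a single term.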

We handle the other inner sums (for rank vectors in \(\mathcal {L}^{({i,j})}_\mathbf {r}\), \(\mathcal {R}^{({i,j})}_\mathbf {r}\) and \(\mathcal {B}^{({i,j})}_\mathbf {r}\)) in (7) analogously; we have thus that the error bound inside each summation on j is \((1+O(\Delta ^2/n))\), but the approximations are not valid if \(j=o(n)\). However, the terms with \(j=o(n)\) can be disregarded as their total contribution is negligible, since the tail of the hypergeometric distribution decays exponentially. This also justifies the assumption that all extreme ranks are \(r_i=0\) when we actually have extreme ranks \(r_i=o(n)\) (or \(r_i=n-o(n)\), but then we can take \(r_i:= n-r_i\) because of the symmetry).

Altogether, these computations show that \(C_{{n},\mathbf {r}}'=f(\mathbf {r}/n)+o(1)\) satisfies

and hence \(f(\mathbf {r}/n)\cdot n^\alpha +o(n^\alpha )\) satisfies the full recurrence (7), with toll function \(o(n^\alpha )\) instead of the toll function \(\tau _{n,\mathbf {r}}=1\).


Cite this article

Duch, A., Lau, G. & Martínez, C. On the Cost of Fixed Partial Match Queries in K-d Trees. Algorithmica 75, 684–723 (2016). https://doi.org/10.1007/s00453-015-0097-4


DOI: https://doi.org/10.1007/s00453-015-0097-4