Abstract

In a scheduling problem, denoted by \(1|\mathrm{prec}|\sum C_i\) in the Graham notation, we are given a set of n jobs, together with their processing times and precedence constraints. The task is to order the jobs so that their total completion time is minimized. \(1|\mathrm{prec}|\sum C_i\) is a special case of the Traveling Repairman Problem with precedences. A natural dynamic programming algorithm solves both these problems in \(2^n n^{O(1)}\) time, and whether there exists an algorithm solving \(1|\mathrm{prec}|\sum C_i\) in \(O(c^n)\) time for some constant c<2 was an open problem posed in 2004 by Woeginger. In this paper we answer this question positively.


1 Introduction

It is commonly believed that no NP-hard problem is solvable in polynomial time. However, while all NP-complete problems are equivalent with respect to polynomial time reductions, they appear to be very different with respect to the best exponential time exact solutions. In particular, most NP-complete problems can be solved significantly faster than the (generic for the NP class) obvious brute-force algorithm that checks all possible solutions; examples are Independent Set [11], Dominating Set [11, 23], Chromatic Number [4] and Bandwidth [8]. The area of moderately exponential time algorithms studies upper and lower bounds for exact solutions for hard problems. The race for the fastest exact algorithm inspired several very interesting tools and techniques such as Fast Subset Convolution [3] and Measure&Conquer [11] (for an overview of the field we refer the reader to a recent book by Fomin and Kratsch [10]).

For several problems, including TSP, Chromatic Number, Permanent, Set Cover, #Hamiltonian Cycles and SAT, the currently best known time complexity is of the form \(O^\star(2^n)\) (the \(O^\star\) notation suppresses factors polynomial in the input size), which is a result of applying dynamic programming over subsets, the inclusion-exclusion principle or a brute force search. The question remains, however, which of those problems are inherently so hard that it is not possible to break the \(2^n\) barrier and which are just waiting for new tools and techniques still to be discovered. In particular, the hardness of the k-SAT problem is the starting point for the Strong Exponential Time Hypothesis of Impagliazzo and Paturi [15], which is used as an argument that other problems are hard [7, 19, 22]. Recently, on the positive side, \(O(c^n)\) time algorithms for a constant c<2 have been developed for Capacitated Domination [9], Irredundance [1], Maximum Induced Planar Subgraph [12] and (a major breakthrough in the field) for the undirected version of the Hamiltonian Cycle problem [2].

In this paper we extend this list by one important scheduling problem. The area of scheduling algorithms originates from practical questions regarding scheduling jobs on single- or multiple-processor machines or scheduling I/O requests. It has quickly become one of the most important areas in algorithmics, with significant influence on other branches of computer science. For example, research on the job-shop scheduling problem in the 1960s led to the development of competitive analysis [13], initiating research on online algorithms. To date, the scheduling literature comprises thousands of research publications. We refer the reader to the classical textbook of Brucker [5].

Among scheduling problems one finds many that are solvable in polynomial time, as well as many NP-hard ones. For example, the aforementioned job-shop problem is NP-complete on at least three machines [17], but polynomial on two machines with unitary processing times [14].

Scheduling problems come in numerous variants. For example, one may consider scheduling on one machine, or many uniform or non-uniform machines. The jobs can have different attributes: they may arrive at different times, may have deadlines or precedence constraints, preemption may or may not be allowed. There are also many objective functions, for example the makespan of the computation, total completion time, total lateness (in case of deadlines for jobs) etc.

Let us focus on the case of a single machine. Assume we are given a set of jobs V, and each job v has its processing time t(v)∈[0,+∞). For a job v, its completion time is the total amount of time that this job waited to be finished; formally, the completion time of a job v is defined as the sum of processing times of v and all jobs scheduled earlier. If we are to minimize the total completion time (i.e., the sum of completion times over all jobs), it is clear that the jobs should be scheduled in order of increasing processing times. The question of minimizing the makespan of the computation (i.e., the maximum completion time) is trivial in this setting, but we note that minimizing makespan is polynomially solvable even if we are given precedence constraints on the jobs (i.e., a partial order on the set of jobs is given, and a job cannot be scheduled before all its predecessors in the partial order are finished) and jobs arrive at different times (i.e., each job has its arrival time, before which it cannot be scheduled) [16].
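The claim that, without precedence constraints, jobs should run in order of increasing processing time can be checked on a small instance. The following is a minimal sketch; the job names and processing times are made up for illustration, and the brute-force comparison is only feasible for tiny inputs.

```python
from itertools import permutations

def total_completion_time(order, t):
    """Sum of completion times when jobs run back-to-back in the given order."""
    elapsed = 0
    total = 0
    for job in order:
        elapsed += t[job]   # completion time of this job
        total += elapsed
    return total

def spt_order(t):
    """Shortest-processing-time-first order (no precedence constraints assumed)."""
    return sorted(t, key=lambda job: t[job])

# Hypothetical instance: job name -> processing time.
t = {"a": 5, "b": 1, "c": 3, "d": 2}
best_by_brute_force = min(total_completion_time(p, t) for p in permutations(t))
assert total_completion_time(spt_order(t), t) == best_by_brute_force
```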

Lenstra and Rinnooy Kan [18] in 1978 proved that the question of minimizing total completion time on one machine becomes NP-complete if we are given precedence constraints on the set of jobs. To the best of our knowledge the currently smallest approximation ratio for this case equals 2, due to independently discovered algorithms by Chekuri and Motwani [6] as well as Margot et al. [20]. The problem of minimizing total completion time on one machine, given precedence constraints on the set of jobs, can be solved by a standard dynamic programming algorithm in time \(O^\star(2^n)\), where n denotes the number of jobs. In this paper we break the \(2^n\)-barrier for this problem.

Before we start, let us formally define the problem under consideration. As we focus on a single scheduling problem, for brevity we denote it by SCHED. We note that the proper name of this problem in the Graham notation is \(1|\mathrm{prec}|\sum C_i\).

If u<v for u,v∈V (i.e., u≤v and u≠v), we say that u precedes v, u is a predecessor or prerequisite of v, u is required for v or that v is a successor of u. We denote |V| by n.

SCHED is a special case of the precedence constrained Traveling Repairman Problem (prec-TRP), defined as follows. A repairman needs to visit all vertices of a (directed or undirected) graph G=(V,E) with distances d:E→[0,∞) on edges. At each vertex, the repairman is supposed to repair a broken machine; the cost of a machine v is the time \(C_v\) that it waited before being repaired. Thus, the goal is to minimize the total repair time, that is, \(\sum_{v\in V} C_v\). Additionally, in the precedence constrained case, we are given a partial order (V,≤) on the set of vertices of G; a machine can be repaired only if all its predecessors are already repaired. Note that, given an instance (V,≤,t) of SCHED, we may construct an equivalent prec-TRP instance, by taking G to be a complete directed graph on the vertex set V, keeping the precedence constraints unmodified, and setting d(u,v)=t(v).

The TRP problem is closely related to the Traveling Salesman Problem (TSP). All these problems are NP-complete and solvable in \(O^\star(2^n)\) time by an easy application of the dynamic programming approach (here n stands for the number of vertices in the input graph). In 2010, Björklund [2] discovered a genuine way to solve probably the easiest NP-complete version of the TSP problem (the question of deciding whether a given undirected graph is Hamiltonian) in randomized \(O(1.66^n)\) time. However, his approach does not extend to directed graphs, let alone graphs with distances defined on edges.

Björklund’s approach is based on purely graph-theoretical and combinatorial reasoning, and seems unable to cope with arbitrary (large, real) weights (distances, processing times). This is also the case with many other combinatorial approaches. Probably motivated by this, Woeginger posed the question at the International Workshop on Parameterized and Exact Computation (IWPEC) in 2004 [24] (and repeated it in 2008 [25]) of whether it is possible to construct an \(O((2-\varepsilon)^n)\) time algorithm for the SCHED problem. This problem seems to be the easiest case of the aforementioned family of TSP-related problems with arbitrary weights. In this paper we present such an algorithm, thus affirmatively answering Woeginger’s question. Woeginger also asked [24, 25] whether an \(O((2-\varepsilon)^n)\) time algorithm for one of the problems TRP, TSP, prec-TRP, SCHED implies \(O((2-\varepsilon)^n)\) time algorithms for the other problems. This problem is still open.

The most important ingredient of our algorithm is a combinatorial lemma (Lemma 2.6) which allows us to investigate the structure of the SCHED problem. We heavily use the fact that we are solving the SCHED problem and not its more general TSP-related version, and for this reason we believe that obtaining \(O((2-\varepsilon)^n)\) time algorithms for the other problems listed by Woeginger is much harder.

2 The Algorithm

2.1 High-Level Overview—Part 1

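Let us recall that our task in the SCHED problem is to compute an ordering σ:V→{1,2,…,n} that satisfies the precedence constraints (i.e., if u<v then σ(u)<σ(v)) and minimizes the total completion time of all jobs, defined as

\[ T(\sigma) = \sum_{v \in V} \sum_{u:\, \sigma(u) \le \sigma(v)} t(u) = \sum_{v \in V} (n - \sigma(v) + 1)\, t(v). \]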

We define the cost of job v at position i to be T(v,i)=(n−i+1)t(v). Thus, the total completion time is the total cost of all jobs at their respective positions in the ordering σ.

We begin by describing the algorithm that solves SCHED in \(O^\star(2^n)\) time, which we call the DP algorithm; this will be the basis for our further work. The idea (a standard dynamic programming over subsets) is that if we decide that a particular set X⊆V will (in some order) form the prefix of our optimal σ, then the order in which we take the elements of X does not affect the choices we make regarding the ordering of the remaining V∖X; the only thing that matters are the precedence constraints imposed by X on V∖X. Thus, for each candidate set X⊆V to form a prefix, the algorithm computes a bijection σ[X]:X→{1,2,…,|X|} that minimizes the cost of jobs from X, i.e., it minimizes \(T(\sigma[X])=\sum_{v\in X} T(v,\sigma[X](v))\). The value of T(σ[X]) is computed using the following easy to check recursive formula:

\[ T(\sigma[X]) = \min_{v \in \max(X)} \bigl[\, T(\sigma[X \setminus \{v\}]) + T(v, |X|) \,\bigr]. \tag{1} \]

Here, by max(X) we mean the set of maximum elements of X, that is, those which do not precede any element of X. The bijection σ[X] is constructed by prolonging σ[X∖{v}] by v, where v is the job at which the minimum is attained. Notice that σ[V] is exactly the ordering we are looking for. We calculate σ[V] recursively, using formula (1), storing all computed values σ[X] in memory to avoid recomputation. Since computing a single σ[X] from the values for smaller sets takes polynomial time, and σ[X] is computed at most once for each X, the whole algorithm indeed runs in \(O^\star(2^n)\) time.
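The DP algorithm described above can be sketched as a memoized recursion over bitmasks. This is an illustrative sketch rather than the authors' implementation: the function name `sched_dp`, the encoding of jobs as integers 0..n-1, and the representation of the (acyclic) precedence constraints as a set of pairs are all assumptions made here.

```python
from functools import lru_cache

def sched_dp(t, prec):
    """t: list of processing times; prec: pairs (u, v) meaning u must precede v.
    Returns (minimum total completion time, optimal order as a tuple of jobs)."""
    n = len(t)
    succ_mask = [0] * n
    for u, v in prec:
        succ_mask[u] |= 1 << v

    @lru_cache(maxsize=None)
    def solve(X):
        """Return (T(sigma[X]), sigma[X]) for the job set encoded by bitmask X."""
        if X == 0:
            return 0, ()
        size = bin(X).count("1")
        best_cost, best_order = float("inf"), None
        for v in range(n):
            in_X = (X >> v) & 1
            if in_X and not (succ_mask[v] & X):      # v belongs to max(X)
                cost, order = solve(X & ~(1 << v))   # T(sigma[X \ {v}])
                cost += (n - size + 1) * t[v]        # plus T(v, |X|)
                if cost < best_cost:
                    best_cost, best_order = cost, order + (v,)
        return best_cost, best_order

    return solve((1 << n) - 1)

# Hypothetical instance: three jobs, job 0 must precede job 1.
print(sched_dp([4, 1, 2], {(0, 1)}))
```

Starting from the full set and removing only maximal elements means the recursion never leaves the family of prefixes that respect the precedence constraints, so no separate validity check is needed in this sketch.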

The overall idea of our algorithm is to identify a family of sets X⊆V that, for some reason, are not reasonable prefix candidates, so that we can skip them in the computations of the DP algorithm; we will call these unfeasible sets. If the number of feasible sets is not larger than \(c^n\) for some c<2, we will be done: our recursion will visit only feasible sets, assuming T(σ[X]) to be ∞ for unfeasible X in formula (1), and the running time will be \(O^\star(c^n)\). This is formalized in the following proposition.

Proposition 2.1

Assume we are given a polynomial-time algorithm \(\mathcal{R}\) that, given a set X⊆V, either accepts it or rejects it. Moreover, assume that the number of sets accepted by \(\mathcal{R}\) is bounded by \(O(c^n)\) for some constant c. Then one can find in time \(O^\star(c^n)\) an optimal ordering of the jobs in V among those orderings σ where \(\sigma^{-1}(\{1,2,\ldots,i\})\) is accepted by \(\mathcal{R}\) for all 1≤i≤n, whenever such an ordering exists.

Proof

Consider the following recursive procedure to compute optimal T(σ[X]) for a given set X⊆V:

1. if X is rejected by \(\mathcal{R}\), return T(σ[X])=∞;
2. if X=∅, return T(σ[X])=0;
3. if T(σ[X]) has been already computed, return the stored value of T(σ[X]);
4. otherwise, compute T(σ[X]) using formula (1), calling recursively the procedure itself to obtain values T(σ[X∖{v}]) for v∈max(X), and store the computed value for further use.

Clearly, the above procedure, invoked on X=V, computes optimal T(σ[V]) among those orderings σ where \(\sigma^{-1}(\{1,2,\ldots,i\})\) is accepted by \(\mathcal{R}\) for all 1≤i≤n. It is straightforward to augment this procedure to return the ordering σ itself, instead of only its cost.

If we use a balanced search tree to store the computed values of σ[X], each recursive call of the described procedure runs in polynomial time. Note that the last step of the procedure is invoked at most once for each set X accepted by \(\mathcal{R}\) and never for a set X rejected by \(\mathcal{R}\). As an application of this step results in at most |X|≤n recursive calls, we obtain that a computation of σ[V] using this procedure results in a number of recursive calls bounded by n times the number of sets accepted by \(\mathcal{R}\). The time bound follows. □
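The four-step procedure from the proof can be sketched as follows. The names `restricted_dp` and `downward_closed` are hypothetical, jobs are assumed to be the integers 0..n-1, and a Python dictionary stands in for the balanced search tree mentioned above. The sample filter, which accepts a set only if it contains the listed predecessors of each of its members, is just one possible choice of \(\mathcal{R}\).

```python
def restricted_dp(t, prec, accept):
    """Run the recursive procedure with an acceptance test `accept` (the algorithm R).
    Returns ((cost, order), number of sets on which step 4 was executed)."""
    n = len(t)
    succ_mask = [0] * n
    for u, v in prec:
        succ_mask[u] |= 1 << v
    memo = {}  # stored values of T(sigma[X]) together with sigma[X]

    def solve(X):
        if not accept(X):                 # step 1: rejected sets cost infinity
            return float("inf"), None
        if X == 0:                        # step 2: empty prefix
            return 0, ()
        if X in memo:                     # step 3: reuse a stored value
            return memo[X]
        size = bin(X).count("1")          # step 4: apply formula (1)
        best = (float("inf"), None)
        for v in range(n):
            if (X >> v) & 1 and not (succ_mask[v] & X):   # v in max(X)
                cost, order = solve(X & ~(1 << v))
                if order is not None:
                    cand = cost + (n - size + 1) * t[v]
                    if cand < best[0]:
                        best = (cand, order + (v,))
        memo[X] = best
        return best

    return solve((1 << n) - 1), len(memo)

def downward_closed(prec):
    """Sample filter: accept X only if v in X implies u in X for every pair u < v."""
    def accept(X):
        return all(not ((X >> v) & 1) or (X >> u) & 1 for u, v in prec)
    return accept

prec = {(0, 1)}
print(restricted_dp([4, 1, 2], prec, downward_closed(prec)))
```

The second component of the result counts how many sets reached step 4, which is the quantity the running-time argument bounds by the number of accepted sets.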

2.2 The Large Matching Case

We begin by noticing that the DP algorithm needs to compute σ[X] only for those X⊆V that are downward closed, i.e., if v∈X and u<v then u∈X. If there are many constraints in our problem, this alone will suffice to limit the number of feasible sets considerably, as follows. Construct an undirected graph G with the vertex set V and edge set E={uv:u<v∨v<u}. Let \(\mathcal{M}\) be a maximum matching in G, which can be found in polynomial time [21]. If X⊆V is downward closed, and \(uv \in \mathcal{M}\), u<v, then it is not possible that u∉X and v∈X. Checking whether a subset is downward closed can clearly be performed in polynomial time, thus we can apply Proposition 2.1, accepting only downward closed subsets of V. This leads to the following lemma:

Lemma 2.2

The number of downward closed subsets of V is bounded by \(2^{n-2|\mathcal{M}|} 3^{|\mathcal{M}|}\). If \(|\mathcal{M}| \geq \varepsilon_1 n\), then we can solve the SCHED problem in time

\[ T_1(n) = O^\star\bigl((3/4)^{\varepsilon_1 n}\, 2^n\bigr). \]

Note that for any small positive constant \(\varepsilon_1\) the complexity \(T_1(n)\) is of the required order, i.e., \(T_1(n)=O(c^n)\) for some c<2 that depends on \(\varepsilon_1\). Thus, we only have to deal with the case where \(|\mathcal{M}| < \varepsilon_1 n\).
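The counting bound of Lemma 2.2 can be checked by brute force on small instances. In the sketch below the function names are hypothetical, and a greedy maximal matching is used in place of a maximum matching; this is a simplification, justified by the fact that the counting argument only uses that each matched pair is comparable, so the bound holds for any matching (a larger matching merely gives a stronger bound).

```python
def count_downward_closed(n, prec):
    """Count subsets X of {0..n-1} such that v in X and u < v imply u in X."""
    count = 0
    for X in range(1 << n):
        if all(not ((X >> v) & 1) or (X >> u) & 1 for u, v in prec):
            count += 1
    return count

def greedy_matching(prec):
    """A maximal (not necessarily maximum) matching among comparable pairs."""
    used, matching = set(), []
    for u, v in sorted(prec):
        if u not in used and v not in used:
            matching.append((u, v))
            used.update((u, v))
    return matching

def lemma_bound(n, matching_size):
    """2^(n - 2|M|) * 3^|M|: unmatched jobs are free, each matched pair has 3 options."""
    return 2 ** (n - 2 * matching_size) * 3 ** matching_size

# Hypothetical instance: a chain 0 < 1 < 2 < 3 on four jobs.
chain = {(0, 1), (1, 2), (2, 3)}
m = len(greedy_matching(chain))
assert count_downward_closed(4, chain) <= lemma_bound(4, m)
```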

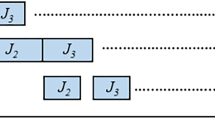

Let us fix a maximum matching \(\mathcal{M}\), let M⊆V be the set of endpoints of \(\mathcal{M}\), and let \(I_1 = V \setminus M\). Note that, as \(\mathcal{M}\) is a maximum matching in G, no two jobs in \(I_1\) are bound by a precedence constraint, and \(|M| \le 2\varepsilon_1 n\), \(|I_1| \ge (1-2\varepsilon_1)n\). See Fig. 1 for an illustration.

2.3 High-Level Overview—Part 2

We are left in the situation where there is a small number of “special” elements (M), and the bulk remainder (I 1), consisting of elements that are tied by precedence constraints only to M and not to each other.

First notice that if M was empty, the problem would be trivial: with no precedence constraints we should simply order the tasks from the shortest to the longest. Now let us consider what would happen if all the constraints between any u∈I 1 and w∈M would be of the form u<w—that is, if the jobs from I 1 had no predecessors. For any prefix set candidate X we consider X I =X∩I 1. Now for any x∈X I , y∈I 1∖X I we have an alternative prefix candidate: the set X′=(X∪{y})∖{x}. If t(y)<t(x), there has to be a reason why X′ is not a strictly better prefix candidate than X—namely, there has to exist w∈M such that x<w, but \(y\not< w\).

A similar reasoning would hold even if not all of \(I_1\) had no predecessors, but just some constant fraction J of \(I_1\); again, the only feasible prefix candidates would be those in which for every \(x \in X_I \cap J\) and \(y \in J \setminus X_I\) there is a reason (either t(x)<t(y) or an element w∈M which requires x, but not y) not to exchange them. It turns out that if \(|J| > \varepsilon_2 n\), where \(\varepsilon_2 > 2\varepsilon_1\), this observation suffices to prove that the number of possible intersections of feasible sets with J is exponentially smaller than \(2^{|J|}\). This is formalized and proved in Lemma 2.6, and is the cornerstone of the whole result.

A typical application of this lemma is as follows: say we have a set \(K \subseteq I_1\) of cardinality |K|>2j, while we know for some reason that all the predecessors of elements of K appear on positions j and earlier. If K is large (a constant fraction of n), this is enough to limit the number of feasible sets to \((2-\varepsilon)^n\). To this end it suffices to show that there are exponentially fewer than \(2^{|K|}\) possible intersections of a feasible set with K. Each such intersection consists of a set of at most j elements (that will be put on positions 1 through j), and then a set in which every element has a reason not to be exchanged with something from outside the set; there are relatively few of those by Lemma 2.6, and when we do the calculations, it turns out that the resulting number of possibilities is exponentially smaller than \(2^{|K|}\).

To apply this reasoning, we need to be able to tell that all the prerequisites of a given element appear at some position or earlier. To achieve this, we need to know the approximate positions of the elements in M. We achieve this by branching into \(4^{|M|}\) cases, choosing for each element w∈M the quarter of the set {1,…,n} to which \(\sigma_{opt}(w)\) belongs. This incurs a multiplicative cost of \(4^{|M|}\), which will be offset by the gains from applying Lemma 2.6.

We will now repeatedly apply Lemma 2.6 to obtain information about the positions of various elements of I 1. We will repeatedly say that if “many” elements (by which we always mean more than εn for some ε) do not satisfy something, we can bound the number of feasible sets, and thus finish the algorithm. For instance, look at those elements of I 1 which can appear in the first quarter, i.e., none of their prerequisites appear in quarters two, three and four. If there is more than \((\frac{1}{2}+\delta)n\) of them for some constant δ>0, we can apply the above reasoning for j=n/4 (Lemma 2.10). Subsequent lemmata bound the number of feasible sets if there are many elements that cannot appear in any of the two first quarters (Lemma 2.8), if less than \((\frac{1}{2}-\delta)n\) elements can appear in the first quarter (Lemma 2.10) and if a constant fraction of elements in the second quarter could actually appear in the first quarter (Lemma 2.11). We also apply similar reasoning to elements that can or cannot appear in the last quarter.

We end up in a situation where we have four groups of elements, each of size roughly n/4, split upon whether they can appear in the first quarter and whether they can appear in the last one; moreover, those that can appear in the first quarter will not appear in the second, and those that can appear in the fourth will not appear in the third. This means that there are two pairs of parts which do not interact, as the sets of places in which they can appear are disjoint. We use this independence of sorts to construct a different algorithm than the DP we used so far, which solves our problem in this specific case in time \(O^\star(2^{3n/4+\varepsilon})\) (Lemma 2.12).

As can be gathered from this overview, there are many technical details we will have to navigate in the algorithm. This is made more precarious by the need to carefully select all the epsilons. We decided to use symbolic values for them in the main proof, describing their relationship appropriately, using four constants \(\varepsilon_k\), k=1,2,3,4. The constants \(\varepsilon_k\) are very small positive reals, and additionally \(\varepsilon_k\) is much smaller than \(\varepsilon_{k+1}\) for k=1,2,3. At each step, we briefly discuss the existence of such constants. We discuss the choice of optimal values of these constants in Sect. 2.9, although the value we perceive in our algorithm lies rather in the existence of an \(O^\star((2-\varepsilon)^n)\) algorithm than in the value of ε (which is admittedly very small).

2.4 Technical Preliminaries

We start with a few simplifications. First, we add a few dummy jobs with no precedence constraints and zero processing times, so that n is divisible by four. Second, by slightly perturbing the jobs’ processing times, we can assume that all processing times are pairwise different and, moreover, each ordering has a different total completion time. This can be done, for instance, by replacing time t(v) with a pair \((t(v),(n+1)^{\pi(v)-1})\), where π:V→{1,2,…,n} is an arbitrary numbering of V. The addition of pairs is performed coordinatewise, whereas comparison is performed lexicographically. Note that this in particular implies that the optimal solution is unique; we denote it by \(\sigma_{opt}\). Third, at the cost of an \(n^2\) multiplicative overhead, we guess the jobs \(v_{begin} = \sigma_{opt}^{-1}(1)\) and \(v_{end}=\sigma_{opt}^{-1}(n)\) and we add precedence constraints \(v_{begin}<v<v_{end}\) for each \(v\neq v_{begin},v_{end}\). If \(v_{begin}\) or \(v_{end}\) were not in M to begin with, we add them there.

A number of times our algorithm branches into several subcases, in each branch assuming some property of the optimal solution σ opt . Formally speaking, in each branch we seek the optimal ordering among those that satisfy the assumed property. We somewhat abuse the notation and denote by σ opt the optimal solution in the currently considered subcase. Note that σ opt is always unique within any subcase, as each ordering has different total completion time.

For v∈V by pred(v) we denote the set {u∈V:u<v} of predecessors of v, and by succ(v) we denote the set {u∈V:v<u} of successors of v. We extend this notation to subsets of V: pred(U)=⋃ v∈U pred(v) and succ(U)=⋃ v∈U succ(v). Note that for any set U⊆I 1, both pred(U) and succ(U) are subsets of M.

In a few places in this paper we use the following simple bound on binomial coefficients that can be easily proven using the Stirling’s formula.

Lemma 2.3

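Let 0<α<1 be a constant. Then

\[ \binom{n}{\alpha n} = O^\star\!\left(\left(\frac{1}{\alpha^{\alpha}(1-\alpha)^{1-\alpha}}\right)^{n}\right). \]

In particular, if α≠1/2 then there exists a constant \(c_\alpha<2\) that depends only on α and

\[ \binom{n}{\alpha n} = O(c_\alpha^{\,n}). \]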

2.5 The Core Lemma

We now formalize the idea of exchanges presented at the beginning of Sect. 2.3.

Definition 2.4

Consider some set K⊆I 1, and its subset L⊆K. If there exists u∈L such that for every w∈succ(u) we can find v w ∈(K∩pred(w))∖L with t(v w )<t(u) then we say L is succ-exchangeable with respect to K, otherwise we say L is non-succ-exchangeable with respect to K.

Similarly, if there exists v∈(K∖L) such that for every w∈pred(v) we can find u w ∈L∩succ(w) with t(u w )>t(v), we call L pred-exchangeable with respect to K, otherwise we call it non-pred-exchangeable with respect to K.

Whenever it is clear from the context, we omit the set K with respect to which its subset is or is not pred- or succ-exchangeable.

Let us now give some more intuition on the exchangeable sets. Let L be a non-succ-exchangeable set with respect to K⊆I 1 and let u∈L. By the definition, there exists w∈succ(u), such that for all v w ∈(K∩pred(w))∖L we have t(v w )≥t(u); in other words, all predecessors of w in K that are scheduled after L have larger processing time than u—which seems like a “correct” choice if we are to optimize the total completion time.

On the other hand, let \(L = \sigma_{opt}^{-1}(\{1,2,\ldots,i\}) \cap K\) for some 1≤i≤n and assume that L is a succ-exchangeable set with respect to K with a job u∈L witnessing this fact. Let w be the job in succ(u) that is scheduled first in the optimal ordering σ opt . By the definition, there exists v w ∈(K∩pred(w))∖L with t(v w )<t(u). It is tempting to decrease the total completion time of σ opt by swapping the jobs v w and u in σ opt : by the choice of w, no precedence constraint involving u will be violated by such an exchange, so we need to care only about the predecessors of v w .

We formalize the aforementioned applicability of the definition of pred- and succ-exchangeable sets in the following lemma:

Lemma 2.5

Let K⊆I 1. If for all v∈K,x∈pred(K) we have that σ opt (v)>σ opt (x), then for any 1≤i≤n the set \(K \cap\sigma_{opt}^{-1}(\{1, 2,\ldots, i\})\) is non-succ-exchangeable with respect to K.

Similarly, if for all v∈K,x∈succ(K) we have σ opt (v)<σ opt (x), then the sets \(K \cap\sigma_{opt}^{-1}(\{1, 2,\ldots, i\})\) are non-pred-exchangeable with respect to K.

Proof

The proofs for the first and the second case are analogous. However, to help the reader get intuition on exchangeable sets, we provide them both in full detail. See Fig. 2 for an illustration on the succ-exchangeable case.

Non-succ-exchangeable sets. Assume, by contradiction, that for some i the set \(L = K \cap\sigma_{opt}^{-1}(\{1,2,\ldots,i\} )\) is succ-exchangeable. Let u∈L be a job witnessing it. Let w be the successor of u with minimum σ opt (w) (there exists one, as v end ∈succ(u)). By Definition 2.4, we have v w ∈(K∩pred(w))∖L with t(v w )<t(u). As v w ∈K∖L, we have σ opt (v w )>σ opt (u). As v w ∈pred(w), we have σ opt (v w )<σ opt (w).

Consider an ordering σ′ defined as \(\sigma'(u)=\sigma_{opt}(v_w)\), \(\sigma'(v_w)=\sigma_{opt}(u)\) and \(\sigma'(x)=\sigma_{opt}(x)\) if \(x\notin\{u,v_w\}\); in other words, we swap the positions of u and \(v_w\) in the ordering \(\sigma_{opt}\). We claim that σ′ satisfies all the precedence constraints. As \(\sigma_{opt}(u)<\sigma_{opt}(v_w)\), σ′ may only violate constraints of the form \(x<v_w\) and u<y. However, if \(x<v_w\), then x∈pred(K) and \(\sigma'(v_w)=\sigma_{opt}(u)>\sigma_{opt}(x)=\sigma'(x)\) by the assumptions of the lemma. If u<y, then \(\sigma'(y)=\sigma_{opt}(y)\ge\sigma_{opt}(w)>\sigma_{opt}(v_w)=\sigma'(u)\), by the choice of w. Thus σ′ is a feasible solution to the considered SCHED instance. Since \(t(v_w)<t(u)\), we have \(T(\sigma')<T(\sigma_{opt})\), a contradiction.

Non-pred-exchangeable sets. Assume, by contradiction, that for some i the set \(L = K \cap\sigma_{opt}^{-1}(\{1,2,\ldots,i\} )\) is pred-exchangeable. Let v∈(K∖L) be a job witnessing it. Let w be the predecessor of v with maximum σ opt (w) (there exists one, as v begin ∈pred(v)). By Definition 2.4, we have u w ∈L∩succ(w) with t(u w )>t(v). As u w ∈L, we have σ opt (u w )<σ opt (v). As u w ∈succ(w), we have σ opt (u w )>σ opt (w).

Consider an ordering σ′ defined as \(\sigma'(v)=\sigma_{opt}(u_w)\), \(\sigma'(u_w)=\sigma_{opt}(v)\) and \(\sigma'(x)=\sigma_{opt}(x)\) if \(x\notin\{v,u_w\}\); in other words, we swap the positions of v and \(u_w\) in the ordering \(\sigma_{opt}\). We claim that σ′ satisfies all the precedence constraints. As \(\sigma_{opt}(u_w)<\sigma_{opt}(v)\), σ′ may only violate constraints of the form \(x>u_w\) and v>y. However, if \(x>u_w\), then x∈succ(K) and \(\sigma'(u_w)=\sigma_{opt}(v)<\sigma_{opt}(x)=\sigma'(x)\) by the assumptions of the lemma. If v>y, then \(\sigma'(y)=\sigma_{opt}(y)\le\sigma_{opt}(w)<\sigma_{opt}(u_w)=\sigma'(v)\), by the choice of w. Thus σ′ is a feasible solution to the considered SCHED instance. Since \(t(u_w)>t(v)\), we have \(T(\sigma')<T(\sigma_{opt})\), a contradiction. □
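The final inequality in each case follows from a direct computation with the position costs T(v,i)=(n−i+1)t(v). As a sketch for the first case, write \(p=\sigma_{opt}(u)\) and \(q=\sigma_{opt}(v_w)\), so that p<q (the names p and q are introduced here only for this calculation). Only the two swapped jobs change their cost, hence

\[
T(\sigma') - T(\sigma_{opt})
= (n-p+1)\,t(v_w) + (n-q+1)\,t(u) - (n-p+1)\,t(u) - (n-q+1)\,t(v_w)
= (q-p)\,\bigl(t(v_w) - t(u)\bigr) < 0,
\]

since q>p and \(t(v_w)<t(u)\). The second case is the same computation with \(u_w\) in the earlier position and v in the later one.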

Lemma 2.5 means that if we manage to identify a set K satisfying the assumptions of the lemma, the only sets the DP algorithm has to consider are the non-exchangeable ones. The following core lemma proves that there are few of those (provided that K is big enough), and we can identify them easily.

Lemma 2.6

For any set K⊆I 1 the number of non-succ-exchangeable (non-pred-exchangeable) subsets with regard to K is at most \(\sum_{l \leq|M|} \binom{|K|}{l}\). Moreover, there exists an algorithm which checks whether a set is succ-exchangeable (pred-exchangeable) in polynomial time.

The idea of the proof is to construct a function f that encodes each non-exchangeable set by a subset of K no larger than M. To show this encoding is injective, we provide a decoding function g and show that g∘f is an identity on non-exchangeable sets.

Proof

As in Lemma 2.5, the proofs for succ- and pred-exchangeable sets are analogous, but for the sake of clarity we include both proofs in full detail.

Non-succ-exchangeable sets. For any set Y⊆K we define the function f Y :M→K∪{nil} as follows: for any element w∈M we define f Y (w) (the least expensive predecessor of w outside Y) to be the element of (K∖Y)∩pred(w) which has the smallest processing time, or nil if (K∖Y)∩pred(w) is empty. We now take f(Y) (the set of the least expensive predecessors outside Y) to be the set {f Y (w):w∈M}∖{nil}. We see that f(Y) is indeed a set of cardinality at most |M|.

Now we aim to prove that f is injective on the family of non-succ-exchangeable sets. To this end we define the reverse function g. For a set Z⊆K (which we think of as the set of the least expensive predecessors outside some Y) let g(Z) be the set of such elements v of K that there exists w∈succ(v) such that for any z w ∈Z∩pred(w) we have t(z w )>t(v). Notice, in particular, that g(Z)∩Z=∅, as for v∈Z and w∈succ(v) we have v∈Z∩pred(w).

First we prove g(f(Y))⊆Y for any Y⊆K. Take any v∈K∖Y and consider any w∈succ(v). Then f Y (w)≠nil and t(f Y (w))≤t(v), as v∈(K∖Y)∩pred(w). Thus v∉g(f(Y)), as for any w∈succ(v) we can take a witness z w =f Y (w) in the definition of g(f(Y)).

In the other direction, let us assume that Y does not satisfy Y⊆g(f(Y)). This means we have u∈Y∖g(f(Y)). Then we show that Y is succ-exchangeable. Consider any w∈succ(u). As u∉g(f(Y)), by the definition of the function g applied to the set f(Y), there exists z w ∈f(Y)∩pred(w) with t(z w )≤t(u). But f(Y)∩Y=∅, while u∈Y; and as all the values of t are distinct, t(z w )<t(u) and z w satisfies the condition for v w in the definition of succ-exchangeability.

Non-pred-exchangeable sets. For any set Y⊆K we define the function f Y :M→K∪{nil} as follows: for any element w∈M we define f Y (w) (the most expensive successor of w in Y) to be the element of Y∩succ(w) which has the largest processing time, or nil if Y∩succ(w) is empty. We now take f(Y) (the set of the most expensive successors in Y) to be the set {f Y (w):w∈M}∖{nil}. We see that f(Y) is indeed a set of cardinality at most |M|.

Now we aim to prove that f is injective on the family of non-pred-exchangeable sets. To this end we define the reverse function g. For a set Z⊆K (which we think of as the set of most expensive successors in some Y) let g(Z) be the set of such elements v of K that for any w∈pred(v) there exists a z w ∈Z∩succ(w) with t(z w )≥t(v). Notice, in particular, that g(Z)⊆Z, as for v∈Z the job z w =v is a good witness for any w∈pred(v).

First we prove Y⊆g(f(Y)) for any Y⊆K. Take any v∈Y and consider any w∈pred(v). Then f Y (w)≠nil and t(f Y (w))≥t(v), as v∈Y∩succ(w). Thus v∈g(f(Y)), as for any w∈pred(v) we can take z w =f Y (w) in the definition of g(f(Y)).

In the other direction, let us assume that Y does not satisfy g(f(Y))⊆Y. This means we have v∈g(f(Y))∖Y. Then we show that Y is pred-exchangeable. Consider any w∈pred(v). As v∈g(f(Y)), by the definition of the function g applied to the set f(Y), there exists z w ∈f(Y)∩succ(w) with t(z w )≥t(v). But f(Y)⊆Y, while \(v \not\in Y\); and as all the values of t are distinct, t(z w )>t(v) and z w satisfies the condition for u w in the definition of pred-exchangeability.

Thus, in both cases, if Y is non-exchangeable then g(f(Y))=Y (in fact it is possible to prove in both cases that Y is non-exchangeable iff g(f(Y))=Y). As there are at most \(\sum_{l = 0}^{|M|} \binom{|K|}{l}\) possible values of f(Y), the first part of the lemma is proven. For the second, it suffices to notice that succ- and pred-exchangeability can be checked in time \(O(|K|^2|M|)\) directly from the definition. □
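The encoding argument for the succ-exchangeable case can be exercised by brute force on a small instance. The sketch below is illustrative only: the function names, the job labels and the processing times are made up, and `edges` lists the pairs u<w with u∈K and w∈M. The pred-exchangeable case is symmetric and omitted.

```python
from itertools import combinations
from math import comb

def make_relations(edges):
    """Build succ(u) for u in K and pred(w) for w in M from pairs (u, w) with u < w."""
    succ, pred = {}, {}
    for u, w in edges:
        succ.setdefault(u, set()).add(w)
        pred.setdefault(w, set()).add(u)
    return succ, pred

def is_succ_exchangeable(L, K, t, succ, pred):
    """Definition 2.4: some u in L has, for every successor w, a cheaper v in (K & pred(w)) - L."""
    return any(
        all(any(t[v] < t[u] for v in (K & pred[w]) - L) for w in succ.get(u, ()))
        for u in L
    )

def encode(Y, K, M, t, pred):
    """f(Y): for each w in M, the cheapest predecessor of w in K outside Y (if any)."""
    Z = set()
    for w in M:
        candidates = (K - Y) & pred.get(w, set())
        if candidates:
            Z.add(min(candidates, key=lambda v: t[v]))
    return frozenset(Z)

def decode(Z, K, t, succ, pred):
    """g(Z): jobs v in K with a successor w whose predecessors in Z all cost more than v."""
    return frozenset(
        v for v in K
        if any(all(t[z] > t[v] for z in Z & pred[w]) for w in succ.get(v, ()))
    )

# Hypothetical instance with pairwise distinct processing times.
K = frozenset(range(6))
M = {"a", "b"}
t = {0: 7, 1: 2, 2: 9, 3: 4, 4: 1, 5: 6}
succ, pred = make_relations([(0, "a"), (1, "a"), (2, "a"), (2, "b"),
                             (3, "b"), (4, "b"), (5, "b")])

subsets = [frozenset(c) for r in range(len(K) + 1) for c in combinations(sorted(K), r)]
non_exchangeable = [Y for Y in subsets if not is_succ_exchangeable(Y, K, t, succ, pred)]
bound = sum(comb(len(K), l) for l in range(len(M) + 1))
assert len(non_exchangeable) <= bound
```

The exchangeability test loops over u∈L, its successors in M, and candidate jobs in K, which matches the polynomial running time stated in the lemma.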

Example 2.7

To illustrate the applicability of Lemma 2.6, we analyze the following very simple case: assume the whole set M∖{v begin } succeeds I 1, i.e., for every w∈M∖{v begin } and v∈I 1 we have \(w \not< v\). If ε 1 is small, then we can use the first case of Lemma 2.5 for the whole set K=I 1: we have pred(K)={v begin } and we only look for orderings that put v begin as the first processed job. Thus, we can apply Proposition 2.1 with algorithm \(\mathcal{R}\) that rejects sets X⊆V where X∩I 1 is succ-exchangeable with respect to I 1. By Lemma 2.6, the number of sets accepted by \(\mathcal {R}\) is bounded by \(2^{|M|} \sum_{l \leq|M|} \binom{|I_{1}|}{l}\), which is small if |M|≤ε 1 n.

2.6 Important Jobs at n/2

As was already mentioned in the overview, the assumptions of Lemma 2.5 are quite strict; therefore, we need to learn a bit more about how \(\sigma_{opt}\) behaves on M in order to find a suitable place for an application. As \(|M| \le 2\varepsilon_1 n\), we can afford branching into a few subcases for every job in M.

Let A={1,2,…,n/4}, B={n/4+1,…,n/2}, C={n/2+1,…,3n/4}, D={3n/4+1,…,n}, i.e., we split {1,2,…,n} into quarters. For each w∈M∖{v begin ,v end } we branch into two cases: whether σ opt (w) belongs to A∪B or C∪D; however, if some predecessor (successor) of w has been already assigned to C∪D (A∪B), we do not allow w to be placed in A∪B (C∪D). Of course, we already know that σ opt (v begin )∈A and σ opt (v end )∈D. Recall that the vertices of M can be paired into a matching; since for each w 1<w 2, w 1,w 2∈M we cannot have w 1 placed in C∪D and w 2 placed in A∪B, this branching leads to \(3^{|M|/2} \leq3^{\varepsilon _{1} n}\) subcases, and thus the same overhead in the time complexity. By the above procedure, in all branches the guesses about alignment of jobs from M satisfy precedence constraints inside M.
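The branching into halves can be illustrated by a brute-force enumeration. This is a sketch under stated assumptions rather than the procedure of the paper: the paper branches job by job and prunes as it goes, whereas the hypothetical function below simply filters all 2^{|M|} assignments, and prec is assumed to be a set of pairs (u, v) meaning that u must precede v.

```python
from itertools import product


def half_assignments(jobs, prec):
    """Yield every map job -> 'AB' | 'CD' in which no job placed in 'CD'
    precedes a job placed in 'AB'.

    jobs: list of the jobs of M; prec: set of pairs (u, v), u before v.
    """
    for choice in product(("AB", "CD"), repeat=len(jobs)):
        side = dict(zip(jobs, choice))
        if all(not (side[u] == "CD" and side[v] == "AB") for u, v in prec):
            yield side
```

When prec consists only of the |M|/2 matching pairs, each pair admits exactly three placements, so the enumeration yields 3^{|M|/2} assignments; additional precedence constraints can only reduce this number.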

Now consider a fixed branch. Let M AB and M CD be the sets of elements of M to be placed in A∪B and C∪D, respectively.

Let us now see what we can learn in a fixed branch about the behavior of σ opt on I 1. Let

\[W_{\mathrm {half}}^{AB} = \{v \in I_{1} : \exists_{w \in M^{AB}}\ v < w\}, \qquad W_{\mathrm {half}}^{CD} = \{v \in I_{1} : \exists_{w \in M^{CD}}\ w < v\},\]

that is, \(W_{\mathrm {half}}^{AB}\) (resp. \(W_{\mathrm {half}}^{CD}\)) are those elements of I 1 which are forced into the first (resp. second) half of σ opt by the choices we made about M (see Fig. 3 for an illustration). If one of the W half sets is much larger than M, we have obtained a gain: by branching into at most \(3^{\varepsilon _{1} n}\) branches we gained additional information about a significant (much larger than \((\log_{2} 3)\varepsilon _{1} n\)) number of other elements (and so we will be able to avoid considering a significant number of sets in the DP algorithm). This is formalized in the following lemma:

Lemma 2.8

Consider a fixed branch. If \(W_{\mathrm {half}}^{AB}\) or \(W_{\mathrm {half}}^{CD}\) has at least ε 2 n elements, then the DP algorithm can be augmented to solve the instance in the considered branch in time

Proof

We describe here only the case \(|W_{\mathrm {half}}^{AB}| \geq \varepsilon _{2} n\). The second case is symmetrical.

Recall that the set \(W_{\mathrm {half}}^{AB}\) needs to be placed in A∪B by the optimal ordering σ opt . We use Proposition 2.1 with an algorithm \(\mathcal{R}\) that accepts sets X⊆V such that the set \(W_{\mathrm {half}}^{AB} \setminus X\) (the elements of \(W_{\mathrm {half}}^{AB}\) not scheduled in X) is of size at most max(0,n/2−|X|) (the number of jobs to be scheduled after X in the first half of the jobs). Moreover, the algorithm \(\mathcal{R}\) tests if the set X conforms with the guessed sets M AB and M CD, i.e.:

Clearly, for any 1≤i≤n, the set \(\sigma_{opt}^{-1}(\{ 1,2,\ldots,i\})\) is accepted by \(\mathcal{R}\), as σ opt places \(M^{AB} \cup W_{\mathrm {half}}^{AB}\) in A∪B and M CD in C∪D.
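The acceptance test of \(\mathcal{R}\) can be sketched in Python as follows. The size condition on \(W_{\mathrm {half}}^{AB} \setminus X\) is taken from the text above. The conformance conditions with M AB and M CD are an assumption of this sketch, because their displayed form is not reproduced here: we assume that M AB must be contained in X once |X|≥n/2 and that M CD must be disjoint from X as long as |X|≤n/2. The function name is hypothetical and n is assumed to be even.

```python
def accepts(X, n, W_AB, M_AB, M_CD):
    """Sketch of the pruning test from the proof of Lemma 2.8.

    X: set of already scheduled jobs; W_AB, M_AB, M_CD: sets of jobs.
    """
    X = set(X)
    # Unscheduled jobs of W_AB must still fit into the rest of the first half.
    if len(W_AB - X) > max(0, n // 2 - len(X)):
        return False
    # Assumed conformance condition: M_AB lies entirely in the first half.
    if len(X) >= n // 2 and not M_AB <= X:
        return False
    # Assumed conformance condition: M_CD lies entirely in the second half.
    if len(X) <= n // 2 and M_CD & X:
        return False
    return True
```

For an ordering that places \(M^{AB} \cup W_{\mathrm {half}}^{AB}\) in the first half and M CD in the second half, every prefix passes this test, which mirrors the correctness claim above.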

Let us now estimate the number of sets X accepted by \(\mathcal{R}\). Any set X of size larger than n/2 needs to contain \(W_{\mathrm {half}}^{AB}\); there are at most \(2^{n-|W_{\mathrm {half}}^{AB}|} \leq2^{(1-\varepsilon _{2})n}\) such sets. All sets of size at most \(n/2 - |W_{\mathrm {half}}^{AB}|\) are accepted by \(\mathcal{R}\); there are at most \(n\binom{n}{(1/2 - \varepsilon _{2})n}\) such sets. Consider now a set X of size n/2−α for some \(0 \leq\alpha \leq|W_{\mathrm {half}}^{AB}|\). Such a set needs to contain \(|W_{\mathrm {half}}^{AB}|-\beta\) elements of \(W_{\mathrm {half}}^{AB}\) for some 0≤β≤α and \(n/2 - |W_{\mathrm {half}}^{AB}| - (\alpha-\beta)\) elements of \(V \setminus W_{\mathrm {half}}^{AB}\). Therefore the number of such sets (for all possible α) is bounded by:

The last inequality follows from the fact that the function \(x \mapsto 2^{x} \binom{n-x}{n/2}\) is decreasing for x∈[0,n/2]. The bound T 2(n) follows. □
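The monotonicity used in the last step is easy to confirm numerically. The following Python sketch (function names are hypothetical, n is assumed to be even) evaluates \(2^{x} \binom{n-x}{n/2}\) for integer x; the ratio of consecutive values equals (n−2x)/(n−x)≤1, so the sequence never increases.

```python
from math import comb


def f(n, x):
    """Value of 2^x * C(n - x, n/2) for even n and integer 0 <= x <= n/2."""
    return 2**x * comb(n - x, n // 2)


def is_non_increasing(n):
    """Check f(n, 0) >= f(n, 1) >= ... >= f(n, n/2)."""
    values = [f(n, x) for x in range(n // 2 + 1)]
    return all(a >= b for a, b in zip(values, values[1:]))
```

Note that the first two values coincide, f(n,0)=f(n,1), so for integer arguments the function is non-increasing rather than strictly decreasing at x=0.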

Note that we have \(3^{\varepsilon _{1} n}\) overhead so far, due to guessing placement of the jobs from M. By Lemma 2.3, \(\binom{(1-\varepsilon _{2}) n}{n/2} = O((2-c(\varepsilon _{2}))^{(1-\varepsilon _{2}) n})\) and \(\binom{n}{(1/2 - \varepsilon _{2})n} = O((2-c'(\varepsilon _{2}))^{n})\), for some positive constants c(ε 2) and c′(ε 2) that depend only on ε 2. Thus, for any small fixed ε 2 we can choose ε 1 sufficiently small so that \(3^{\varepsilon _{1} n} T_{2}(n) = O(c^{n})\) for some c<2. Note that \(3^{\varepsilon _{1} n} T_{2}(n)\) is an upper bound on the total time spent on processing all the considered subcases.
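The reason why such binomial coefficients stay below 2^n can be illustrated with the standard entropy bound \(\binom{n}{\alpha n} \leq 2^{H(\alpha) n}\), where H is the binary entropy function. The sketch below uses this well-known inequality as an illustration; it is not claimed to be the exact statement of Lemma 2.3, and the function names are hypothetical.

```python
from math import comb, log2


def binary_entropy(a):
    """Binary entropy H(a) = -a log2(a) - (1 - a) log2(1 - a), with H(0) = H(1) = 0."""
    if a in (0.0, 1.0):
        return 0.0
    return -a * log2(a) - (1 - a) * log2(1 - a)


def growth_base(alpha):
    """Base b with C(n, alpha * n) <= b**n, derived from the entropy bound."""
    return 2 ** binary_entropy(alpha)
```

Since H(α)<1 for every α≠1/2, growth_base(1/2−ε 2) is strictly below 2 for any fixed ε 2>0, which leaves room for the \(3^{\varepsilon _{1} n}\) overhead when ε 1 is chosen small enough.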

Let \(W_{\mathrm {half}}= W_{\mathrm {half}}^{AB} \cup W_{\mathrm {half}}^{CD}\) and I 2=I 1∖W half. From this point we assume that \(|W_{\mathrm {half}}^{AB}|, |W_{\mathrm {half}}^{CD}| \leq \varepsilon _{2} n\), hence |W half|≤2ε 2 n and |I 2|≥(1−2ε 1−2ε 2)n. For each \(v \in M^{AB} \cup W_{\mathrm {half}}^{AB}\) we branch into two subcases, depending on whether σ opt (v) belongs to A or B. Similarly, for each \(v \in M^{CD} \cup W_{\mathrm {half}}^{CD}\) we guess whether σ opt (v) belongs to C or D. Moreover, we terminate branches that trivially contradict the constraints.

Let us now estimate the number of subcases created by this branching. Recall that the vertices of M can be paired into a matching; since for each w 1<w 2, w 1,w 2∈M we cannot have w 1 placed in a later segment than w 2, this gives us 10 options for each pair w 1<w 2. Thus, in total there are at most \(10^{|M|/2} \leq10^{\varepsilon _{1} n}\) ways of placing vertices of M into quarters without contradicting the constraints. Moreover, this step gives us an additional \(2^{|W_{\mathrm {half}}|} \leq2^{2\varepsilon _{2} n}\) overhead in the time complexity for vertices in W half. Overall, at this point we are considering at most \(10^{\varepsilon _{1} n} 2^{2\varepsilon _{2} n} n^{ O(1)}\) subcases.
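The count of 10 options per matched pair, and the resulting exponent of the overhead, can be checked with a few lines of Python. This is only an illustrative sketch; the names pair_options and branching_exponent are hypothetical.

```python
from itertools import product
from math import log2

QUARTERS = ("A", "B", "C", "D")


def pair_options(segments=QUARTERS):
    """Placements (s1, s2) of a pair w1 < w2 with w1 not in a later segment than w2."""
    return [
        (a, b)
        for a, b in product(segments, repeat=2)
        if segments.index(a) <= segments.index(b)
    ]


def branching_exponent(eps1, eps2):
    """Exponent e such that 10^(eps1 * n) * 2^(2 * eps2 * n) equals 2^(e * n)."""
    return eps1 * log2(10) + 2 * eps2
```

With four segments there are 4 placements in a common quarter plus 6 placements in two different quarters, hence 10; with the two halves AB and CD the same function returns the 3 options used in Sect. 2.6.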

We denote the set of elements of M and W half assigned to quarter Γ∈{A,B,C,D} by M Γ and \(W_{\mathrm {half}}^{\varGamma}\), respectively.

2.7 Quarters and Applications of the Core Lemma

In this section we try to apply Lemma 2.6 as follows: we determine which elements of I 2 can be placed in A (the set P A) and which cannot (the set P ¬A). Similarly we define the set P D (can be placed in D) and P ¬D (cannot be placed in D). For each of these sets, we try to apply Lemma 2.6 to some subset of it. If we fail, then in the next subsection we infer that the solutions in the quarters are partially independent of each other, and we can solve the problem in time roughly \(O(2^{3n/4})\). Let us now proceed with a more detailed argument.

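We define the following two partitions of I 2:

\[P^{\neg A} = \{v \in I_{2} : \exists_{w \in M^{B}}\ w < v\}, \qquad P^{A} = I_{2} \setminus P^{\neg A},\]

\[P^{\neg D} = \{v \in I_{2} : \exists_{w \in M^{C}}\ v < w\}, \qquad P^{D} = I_{2} \setminus P^{\neg D}.\]

In other words, the elements of P ¬A cannot be placed in A because some of their requirements are in M B, and the elements of P ¬D cannot be placed in D because they are required by some elements of M C (see Fig. 4 for an illustration). Note that these definitions are independent of σ opt , so sets P Δ for Δ∈{A,¬A,¬D,D} can be computed in polynomial time. Let

\[p^{A} = |\sigma_{opt}^{-1}(A) \cap P^{A}|, \quad p^{B} = |\sigma_{opt}^{-1}(B) \cap P^{\neg A}|, \quad p^{C} = |\sigma_{opt}^{-1}(C) \cap P^{\neg D}|, \quad p^{D} = |\sigma_{opt}^{-1}(D) \cap P^{D}|.\]

Note that p Γ≤n/4 for every Γ∈{A,B,C,D}. As \(p^{A} = n/4 - |M^{A} \cup W_{\mathrm {half}}^{A}|\), \(p^{D} = n/4 - |M^{D} \cup W_{\mathrm {half}}^{D}|\), these values can be computed by the algorithm. We branch into \((1+n/4)^{2}\) further subcases, guessing the (still unknown) values p B and p C.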

Let us focus on the quarter A and assume that p A is significantly smaller than |P A|/2 (i.e., |P A|/2−p A is a constant fraction of n). We claim that we can apply Lemma 2.6 as follows. While computing σ[X], if |X|≥n/4, we can represent X∩P A as a disjoint union of two subsets \(X^{A}_{A}, X^{A}_{BCD} \subseteq P^{A}\). The first one is of size p A, and represents the elements of X∩P A placed in quarter A, and the second represents the elements of X∩P A placed in quarters B∪C∪D. Note that the elements of \(X^{A}_{BCD}\) have all predecessors in the quarter A, so by Lemma 2.5 the set \(X^{A}_{BCD}\) has to be non-succ-exchangeable with respect to \(P^{A} \setminus X_{A}^{A}\); therefore, by Lemma 2.6, we can consider only a very narrow choice of \(X^{A}_{BCD}\). Thus, the whole part X∩P A can be represented by its subset of cardinality at most p A plus some small information about the rest. If p A is significantly smaller than |P A|/2, this representation is more concise than simply remembering a subset of P A. Thus we obtain a better bound on the number of feasible sets.

A symmetric situation arises when p D is significantly smaller than |P D|/2; moreover, we can similarly use Lemma 2.6 if p B is significantly smaller than |P ¬A|/2 or p C than |P ¬D|/2. This is formalized by the following lemma.

Lemma 2.9

If p Γ<|P Δ|/2 for some (Γ,Δ)∈{(A,A),(B,¬A),(C,¬D), (D,D)} and ε 1≤1/4, then the DP algorithm can be augmented to solve the remaining instance in time bounded by

Proof

We first describe in detail the case Δ=Γ=A, and later we briefly describe the other cases, which are proven analogously. An illustration of the proof is given in Fig. 5.

At a high level, we want to proceed as in Proposition 2.1, i.e., use the standard DP algorithm described in Sect. 2.1, while terminating the computation for some infeasible subsets of V. However, in this case we need to slightly modify the recursive formula used in the computations, and we compute σ[X,L] for X⊆V, L⊆X∩P A. Intuitively, the set X plays the same role as before, whereas L is the subset of X∩P A that was placed in the quarter A. Formally, σ[X,L] is the ordering of X that attains the minimum total cost among those orderings σ for which L=P A∩σ −1(A). Thus, in the DP algorithm we use the following recursive formula:

In the next paragraphs we describe a polynomial-time algorithm \(\mathcal {R}\) that accepts or rejects pairs of subsets (X,L), X⊆V, L⊆X∩P A; we terminate the computation on rejected pairs (X,L). As each single calculation of σ[X,L] uses at most |X| recursive calls, the time complexity of the algorithm is bounded by the number of accepted pairs, up to a polynomial multiplicative factor. We now describe the algorithm \(\mathcal{R}\).

First, given a pair (X,L), we ensure that it is consistent with the guessed sets M Γ and \(W_{\mathrm {half}}^{\varGamma}\), Γ∈{A,B,C,D}. For example, we require \(M^{B}, W_{\mathrm {half}}^{B} \subseteq X\) if |X|≥n/2 and \((M^{B} \cup W_{\mathrm {half}}^{B}) \cap X = \emptyset\) if |X|≤n/4. We require similar conditions for the other quarters A, C and D. Moreover, we require that X is downward closed. Note that this implies X∩P ¬A=∅ if |X|≤n/4 and P ¬D⊆X if |X|≥3n/4.

Second, we require the following:

1. If |X|≤n/4, we require that L=X∩P A and |L|≤p A; as p A≤|P A|/2, there are at most \(2^{n-|P^{A}|} \binom {|P^{A}|}{p^{A}} n\) such pairs (X,L);

2. Otherwise, we require that |L|=p A and that the set X∩(P A∖L) is non-succ-exchangeable with respect to P A∖L; by Lemma 2.6 there are at most \(\sum_{l \leq|M|} \binom{|P^{A} \setminus L|}{l} \leq n \binom {n}{|M|}\) (since |M|≤2ε 1 n≤n/2) non-succ-exchangeable sets with respect to P A∖L, thus there are at most \(2^{n-|P^{A}|} \binom{|P^{A}|}{p^{A}} \binom{n}{|M|} n\) such pairs (X,L).

Let us now check the correctness of the above pruning. Let 0≤i≤n and let \(X = \sigma_{opt}^{-1}(\{1,2,\ldots,i\})\) and \(L = \sigma_{opt}^{-1}(A) \cap X \cap P^{A}\). It is easy to see that Lemma 2.5 implies that in case i≥n/4 the set X∩(P A∖L) is non-succ-exchangeable and the pair (X,L) is accepted.
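The second group of requirements on a pair (X,L) can be sketched in Python as follows. This is a minimal sketch under stated assumptions: the function name is hypothetical, the first group of requirements (consistency with the guessed sets and downward closedness) is omitted, n is assumed to be divisible by 4, and the succ-exchangeability test is passed in as a callable because its definition is given elsewhere in the paper.

```python
def accepts_pair(X, L, n, P_A, p_A, is_succ_exchangeable):
    """Sketch of the second group of tests for a pair (X, L) in the case (A, A).

    is_succ_exchangeable(Y, K) is a caller-supplied predicate deciding whether
    Y is succ-exchangeable with respect to K.
    """
    X, L, P_A = set(X), set(L), set(P_A)
    if not L <= X & P_A:               # L must be a subset of X ∩ P^A
        return False
    if len(X) <= n // 4:
        # Still inside quarter A: all of X ∩ P^A is placed in A.
        return L == X & P_A and len(L) <= p_A
    # Past quarter A: L is complete, the rest must be non-succ-exchangeable.
    rest = P_A - L
    return len(L) == p_A and not is_succ_exchangeable(X & rest, rest)
```

Only accepted pairs are expanded by the DP, so the running time is governed by the number of pairs that pass both groups of tests.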

Let us now briefly discuss the case Γ=B and Δ=¬A. Recall that, due to the precedence constraints between P ¬A and M B, the jobs from P ¬A cannot be scheduled in the segment A. Therefore, while computing σ[X] for |X|≥n/2, we can represent X∩P ¬A as a disjoint union of two subsets \(X^{\neg A}_{B}, X^{\neg A}_{CD}\): the first one, of size p B, to be placed in B, and the second one to be placed in C∪D. Recall that in Sect. 2.6 we have ensured that for any v∈I 2, all predecessors of v appear in M AB and all successors of v appear in M CD. We infer that all predecessors of jobs in \(X^{\neg A}_{CD}\) appear in segments A and B and, by Lemma 2.5, in the optimal solution the set \(X^{\neg A}_{CD}\) is non-succ-exchangeable with respect to \(P^{\neg A} \setminus X^{\neg A}_{B}\). Therefore we may proceed as in the case of (Γ,Δ)=(A,A); in particular, while computing σ[X,L]:

1. If |X|≤n/4, we require that L=X∩P ¬A=∅;

2. If n/4<|X|≤n/2, we require that L=X∩P ¬A and |L|≤p B;

3. Otherwise, we require that |L|=p B and that the set X∩(P ¬A∖L) is non-succ-exchangeable with respect to P ¬A∖L.

The cases (Γ,Δ)∈{(C,¬D),(D,D)} are symmetrical: L corresponds to jobs from P Δ scheduled to be done in segment Γ and we require that X∩(P Δ∖L) is non-pred-exchangeable (instead of non-succ-exchangeable) with respect to P Δ∖L. The recursive definition of T(σ[X,L]) should also be adjusted. □

Observe that if any of the sets P Δ for Δ∈{A,¬A,¬D,D} is significantly larger than n/2 (i.e., larger than \((\frac {1}{2}+\delta)n\) for some δ>0), one of the situations in Lemma 2.9 indeed occurs, since p Γ≤n/4 for Γ∈{A,B,C,D} and |M| is small.

Lemma 2.10

If 2ε 1<1/4+ε 3/2 and at least one of the sets P A, P ¬A, P ¬D and P D is of size at least (1/2+ε 3)n, then the DP algorithm can be augmented to solve the remaining instance in time bounded by

Proof

The claim is straightforward; note only that the term \(2^{n-|P^{\varDelta}|} \binom{|P^{\varDelta}|}{p^{\varGamma}}\) for p Γ<|P Δ|/2 is a decreasing function of |P Δ|. □
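The monotonicity claimed in this proof can be verified by a one-line computation. The following derivation is a sketch that writes s for |P Δ| and p for p Γ (both symbols are introduced here only for brevity) and compares consecutive integer values of s:

```latex
\[
  \frac{2^{\,n-(s+1)} \binom{s+1}{p}}{2^{\,n-s} \binom{s}{p}}
  \;=\; \frac{1}{2} \cdot \frac{s+1}{s+1-p}
  \;<\; 1
  \quad\Longleftrightarrow\quad 2p < s+1 .
\]
```

The condition 2p<s+1 holds whenever p<s/2, so in the range considered in Lemma 2.9 increasing |P Δ| by one strictly decreases the term.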

Note that we have \(10^{\varepsilon _{1} n} 2^{2\varepsilon _{2} n} n^{ O(1)}\) overhead so far. As \(\binom{(1/2+\varepsilon _{3})n}{n/4} = O((2-c(\varepsilon _{3}))^{(1/2 + \varepsilon _{3})n})\) for some constant c(ε 3)>0, for any small fixed ε 3 we can choose sufficiently small ε 2 and ε 1 to have \(10^{\varepsilon _{1} n} 2^{2\varepsilon _{2} n} n^{ O(1)} T_{3}(n) = O(c^{n})\) for some c<2.

From this point we assume that |P A|,|P ¬A|,|P ¬D|,|P D|≤(1/2+ε 3)n. As P A∪P ¬A=I 2=P ¬D∪P D and |I 2|≥(1−2ε 1−2ε 2)n, this implies that these four sets are of size at least (1/2−2ε 1−2ε 2−ε 3)n, i.e., they are of size roughly n/2. Having bounded the sizes of the sets P Δ from below, we are able to use Lemma 2.9 again: if any of the numbers p A, p B, p C, p D is significantly smaller than n/4 (i.e., smaller than \((\frac{1}{4}-\delta)n\) for some δ>0), then it is also significantly smaller than half of the cardinality of the corresponding set P Δ.

Lemma 2.11

Let ε 123=2ε 1+2ε 2+ε 3. If at least one of the numbers p A, p B, p C and p D is smaller than (1/4−ε 4)n and ε 4>ε 123/2, then the DP algorithm can be augmented to solve the remaining instance in time bounded by

Proof

As before, the claim is a straightforward application of Lemma 2.9 and of the fact that the term \(2^{n-|P^{\varDelta}|} \binom {|P^{\varDelta}|}{p^{\varGamma}}\) for p Γ<|P Δ|/2 is a decreasing function of |P Δ|. □

So far we have \(10^{\varepsilon _{1} n} 2^{2\varepsilon _{2} n} n^{ O(1)}\) overhead. Similarly as before, for any small fixed ε 4 if we choose ε 1,ε 2,ε 3 sufficiently small, we have \(\binom{(1/2-\varepsilon _{123})n}{(1/4-\varepsilon _{4})n} = O((2-c(\varepsilon _{4}))^{(1/2-\varepsilon _{123})n})\) and \(10^{\varepsilon _{1} n} 2^{2\varepsilon _{2} n} n^{ O(1)}T_{4}(n) = O(c^{n})\) for some c<2.

Thus we are left with the case when p A,p B,p C,p D≥(1/4−ε 4)n.

2.8 The Remaining Case

In this subsection we infer that in the remaining case the quarters A, B, C and D are somewhat independent, which allows us to develop a faster algorithm. More precisely, note that p Γ≥(1/4−ε 4)n, Γ∈{A,B,C,D}, means that almost all elements that are placed in A by σ opt belong to P A, while almost all elements placed in B belong to P ¬A. Similarly, almost all elements placed in D belong to P D and almost all elements placed in C belong to P ¬D. As P A∩P ¬A=∅ and P ¬D∩P D=∅, this implies that what happens in the quarters A and B, as well as C and D, is (almost) independent. This key observation can be used to develop an algorithm that solves this special case in time roughly \(O(2^{3n/4})\).

Let \(W_{\mathrm {quarter}}^{B} = I_{2} \cap(\sigma_{opt}^{-1}(B) \setminus P^{\neg A})\) and \(W_{\mathrm {quarter}}^{C} = I_{2} \cap(\sigma_{opt}^{-1}(C) \setminus P^{\neg D})\). As p B,p C≥(1/4−ε 4)n we have that \(|W_{\mathrm {quarter}}^{B}|,|W_{\mathrm {quarter}}^{C}| \leq \varepsilon _{4} n\). We branch into at most \(n^{2} \binom{n}{\varepsilon _{4} n}^{2}\) subcases, guessing the sets \(W_{\mathrm {quarter}}^{B}\) and \(W_{\mathrm {quarter}}^{C}\). Let \(W_{\mathrm {quarter}}= W_{\mathrm {quarter}}^{B} \cup W_{\mathrm {quarter}}^{C}\), I 3=I 2∖W quarter, Q Δ=P Δ∖W quarter for Δ∈{A,¬A,¬D,D}. Moreover, let \(W^{\varGamma}= M^{\varGamma}\cup W_{\mathrm {half}}^{\varGamma}\cup W_{\mathrm {quarter}}^{\varGamma}\) for Γ∈{A,B,C,D}, using the convention \(W_{\mathrm {quarter}}^{A} = W_{\mathrm {quarter}}^{D} = \emptyset\).

Note that in the current branch for any ordering and any Γ∈{A,B,C,D}, the segment Γ gets all the jobs from W Γ and q Γ=n/4−|W Γ| jobs from appropriate Q Δ (Δ=A,¬A,¬D,D for Γ=A,B,C,D, respectively). Thus, the behavior of an ordering σ in A influences the behavior of σ in C by the choice of which elements of Q A∩Q ¬D are placed in A, and which in C. Similar dependencies are between A and D, B and C, as well as B and D (see Fig. 6). In particular, there are no dependencies between A and B, as well as C and D, and we can compute the optimal arrangement by keeping track of only three out of four dependencies at once, leading us to an algorithm running in time roughly \(O(2^{3n/4})\). This is formalized in the following lemma:

Lemma 2.12

If 2ε 1+2ε 2+ε 4<1/4 and the assumptions of Lemmata 2.2 and 2.8–2.11 are not satisfied, the instance can be solved by an algorithm running in time bounded by

Proof

Let (Γ,Δ)∈{(A,A),(B,¬A),(C,¬D),(D,D)}. For each set Y⊆Q Δ of size q Γ, for each bijection (partial ordering) σ Γ(Y):Y∪W Γ→Γ let us define its cost as

Let \(\sigma_{opt}^{\varGamma}(Y)\) be the partial ordering that minimizes the cost (recall that it is unique due to the initial steps in Sect. 2.4). Note that if we define \(Y_{opt}^{\varGamma}= \sigma_{opt}^{-1}(\varGamma ) \cap Q^{\varDelta}\) for (Γ,Δ)∈{(A,A),(B,¬A),(C,¬D),(D,D)}, then the ordering σ opt consists of the partial orderings \(\sigma_{opt}^{\varGamma}(Y_{opt}^{\varGamma})\).

We first compute the values \(\sigma_{opt}^{\varGamma}(Y)\) for all (Γ,Δ)∈{(A,A),(B,¬A), (C,¬D),(D,D)} and Y⊆Q Δ, |Y|=q Γ, by a straightforward modification of the DP algorithm. For fixed pair (Γ,Δ), the DP algorithm computes \(\sigma_{opt}^{\varGamma}(Y)\) for all Y in time

The last inequality follows from the assumption 2ε 1+2ε 2+ε 4<1/4.

Let us focus on the sets Q A∩Q ¬D, Q A∩Q D, Q ¬A∩Q ¬D and Q ¬A∩Q D. Without loss of generality we assume that Q A∩Q ¬D is the smallest among those. As they all are pairwise disjoint and sum up to I 2, we have |Q A∩Q ¬D|≤n/4. We branch into at most \(2^{|Q^{A} \cap Q^{\neg D}|+|Q^{\neg A} \cap Q^{D}|}\) subcases, guessing the sets

Then, we choose the set

that optimizes

Independently, we choose the set

that optimizes

To see the correctness of the above step, note that \(Y_{opt}^{A} = Y_{opt}^{AC} \cup Y_{opt}^{AD}\), and similarly for other quarters.

The time complexity of the above step is bounded by

and the bound T 5(n) follows. □

So far we have \(10^{\varepsilon _{1} n} 2^{2\varepsilon _{2} n} n^{ O(1)}\) overhead. For sufficiently small ε 4 we have \(\binom{n}{\varepsilon _{4} n} = O(2^{n/16})\) and then for sufficiently small constants ε k , k=1,2,3 we have \(10^{\varepsilon _{1} n} 2^{2\varepsilon _{2} n}n^{ O(1)} T_{5}(n) = O(c^{n})\) for some c<2.

2.9 Numerical Values of the Constants

Table 1 summarizes the running times of all cases of the algorithm. Using the following values of the constants:

we get that the running time of our algorithm is bounded by:

3 Conclusion

We presented an algorithm that solves SCHED in \(O((2-\varepsilon)^{n})\) time for some small ε. This shows that in some sense SCHED appears to be easier than resolving CNF-SAT formulae, which is conjectured to need \(2^{n}\) time (the so-called Strong Exponential Time Hypothesis). Our algorithm is based on an interesting property of the optimal solution expressed in Lemma 2.6, which can be of independent interest. However, our best efforts to numerically compute an optimal choice of values of the constants ε k , k=1,2,3,4 lead us to an ε of the order of \(10^{-10}\). Although Lemma 2.6 seems powerful, we lost a lot while applying it. In particular, the worst trade-off seems to happen in Sect. 2.6, where ε 1 needs to be chosen much smaller than ε 2. The natural question is: can the base of the exponent be significantly improved?

Notes

The \(O^{*}(\cdot)\) notation suppresses factors polynomial in the input size.

Although Woeginger in his papers asks for an \(O(1.99^{n})\) algorithm, the intention is clearly to ask for an \(O((2-\varepsilon)^{n})\) algorithm.

Even an inclusion-maximal matching, which can be found greedily, is enough.

Actually, this bound can be improved to \(10^{|M|/2}\), as M are endpoints of a matching in the graph corresponding to the set of precedences.

References

Binkele-Raible, D., Brankovic, L., Cygan, M., Fernau, H., Kneis, J., Kratsch, D., Langer, A., Liedloff, M., Pilipczuk, M., Rossmanith, P., Wojtaszczyk, J.O.: Breaking the \(2^{n}\)-barrier for irredundance: Two lines of attack. J. Discrete Algorithms 9(3), 214–230 (2011)

Björklund, A.: Determinant sums for undirected hamiltonicity. In: 51th Annual IEEE Symposium on Foundations of Computer Science (FOCS), pp. 173–182. IEEE Comput. Soc., Los Alamitos (2010)

Björklund, A., Husfeldt, T., Kaski, P., Koivisto, M.: Fourier meets Möbius: fast subset convolution. In: 39th Annual ACM Symposium on Theory of Computing (STOC), pp. 67–74 (2007). doi:10.1145/1250790.1250801

Björklund, A., Husfeldt, T., Koivisto, M.: Set partitioning via inclusion–exclusion. SIAM J. Comput. 39(2), 546–563 (2009)

Brucker, P.: Scheduling Algorithms, 2nd edn. Springer, Heidelberg (1998)

Chekuri, C., Motwani, R.: Precedence constrained scheduling to minimize sum of weighted completion times on a single machine. Discrete Appl. Math. 98(1-2), 29–38 (1999)

Cygan, M., Nederlof, J., Pilipczuk, M., Pilipczuk, M., van Rooij, J.M.M., Wojtaszczyk, J.O.: Solving connectivity problems parameterized by treewidth in single exponential time. In: 52nd Annual Symposium on Foundations of Computer Science (FOCS), pp. 150–159. IEEE Press, New York (2011)

Cygan, M., Pilipczuk, M.: Exact and approximate bandwidth. Theor. Comput. Sci. 411(40-42), 3701–3713 (2010)

Cygan, M., Pilipczuk, M., Wojtaszczyk, J.O.: Capacitated domination faster than \(O(2^{n})\). Inf. Process. Lett. 111, 1099–1103 (2011)

Fomin, F., Kratsch, D.: Exact Exponential Algorithms. Springer, Berlin (2010)

Fomin, F.V., Grandoni, F., Kratsch, D.: A measure & conquer approach for the analysis of exact algorithms. J. ACM 56(5), 1–32 (2009). doi:10.1145/1552285.1552286

Fomin, F.V., Todinca, I., Villanger, Y.: Exact algorithm for the maximum induced planar subgraph problem. In: 19th Annual European Symposium on Algorithms (ESA). Lecture Notes in Computer Science, vol. 6942, pp. 287–298. Springer, Berlin (2011)

Graham, R.: Bounds for certain multiprocessing anomalies. Bell Syst. Tech. J. 45, 1563–1581 (1966)

Hefetz, N., Adiri, I.: An efficient optimal algorithm for the two-machines unit-time jobshop schedule-length problem. Math. Oper. Res. 7, 354–360 (1982)

Impagliazzo, R., Paturi, R.: On the complexity of k-SAT. J. Comput. Syst. Sci. 62(2), 367–375 (2001)

Lawler, E.L.: Optimal sequencing of a single machine subject to precedence constraints. Manag. Sci. 19, 544–546 (1973)

Lenstra, J.K., Kan, A.H.G.R., Brucker, P.: Complexity of machine scheduling problems. Ann. Discrete Math. 1, 343–362 (1977)

Lenstra, J.K., Kan, A.H.G.R.: Complexity of scheduling under precedence constraints. Oper. Res. 26, 22–35 (1978)

Lokshtanov, D., Marx, D., Saurabh, S.: Known algorithms on graphs of bounded treewidth are probably optimal. In: Twenty-Second Annual ACM-SIAM Symposium on Discrete Algorithms (SODA), pp. 777–789 (2011)

Margot, F., Queyranne, M., Wang, Y.: Decompositions, network flows, and a precedence constrained single-machine scheduling problem. Oper. Res. 51(6), 981–992 (2003)

Mucha, M., Sankowski, P.: Maximum matchings via Gaussian elimination. In: 45th Symposium on Foundations of Computer Science (FOCS), pp. 248–255. IEEE Comput. Soc., Los Alamitos (2004)

Patrascu, M., Williams, R.: On the possibility of faster SAT algorithms. In: Twenty-First Annual ACM-SIAM Symposium on Discrete Algorithms (SODA), pp. 1065–1075 (2010)

van Rooij, J.M.M., Nederlof, J., van Dijk, T.C.: Inclusion/exclusion meets measure and conquer. In: 17th Annual European Symposium (ESA). Lecture Notes in Computer Science, vol. 5757, pp. 554–565. Springer, Berlin (2009)

Woeginger, G.J.: Space and time complexity of exact algorithms: Some open problems (invited talk). In: First International Workshop on Parameterized and Exact Computation (IWPEC). Lecture Notes in Computer Science, vol. 3162, pp. 281–290. Springer, Berlin (2004)

Woeginger, G.J.: Open problems around exact algorithms. Discrete Appl. Math. 156(3), 397–405 (2008)

Acknowledgements

We thank Dominik Scheder for very useful discussions on the SCHED problem during his stay in Warsaw. Moreover, we greatly appreciate the detailed comments of anonymous reviewers, especially regarding presentation issues and minor optimizations in our algorithm.

Open Access

This article is distributed under the terms of the Creative Commons Attribution License which permits any use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.

Additional information

An extended abstract of this paper appears at 19th European Symposium on Algorithms, Saarbrücken, Germany, 2011.

M. Cygan and Marcin Pilipczuk were supported by Polish Ministry of Science grant no. N206 355636 and Foundation for Polish Science.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 2.0 International License (https://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Cygan, M., Pilipczuk, M., Pilipczuk, M. et al. Scheduling Partially Ordered Jobs Faster than \(2^{n}\). Algorithmica 68, 692–714 (2014). https://doi.org/10.1007/s00453-012-9694-7

DOI: https://doi.org/10.1007/s00453-012-9694-7