Abstract

We consider a family of one-dimensional diffusions, in dynamical Wiener media, which are random perturbations of the Ornstein–Uhlenbeck diffusion process. We prove quenched and annealed convergences in distribution and under weighted total variation norms. We find two kinds of stationary probability measures, which are either the standard normal distribution or a quasi-invariant measure, depending on the environment, the latter being naturally connected to a random dynamical system. We apply these results to the study of a model of time-inhomogeneous Brox’s diffusions, which generalizes the diffusion studied by Brox (Ann Probab 14(4):1206–1218, 1986) and those investigated by Gradinaru and Offret (Ann Inst Henri Poincaré Probab Stat, 2011). We point out two distinct diffusive behaviours and we give the speeds of convergence in the quenched situations.


1 Introduction

Random walks (RWs) in random environments (REs) and their continuous-time counterparts, the diffusions in random environment, open the way to a multitude of interesting models, which have been tackled since the 1970s in a large body of literature.

Concerning the genesis of the theory, we allude to [27, 46], as regards the discrete-time situation, and to [9, 26, 44], as regards the continuous-time one. For more recent refinements and generalizations, we refer to [10, 12–14, 23, 24, 34, 42, 45, 49] and for a general review of the topic, we refer to [50].

Here we investigate one-dimensional diffusions evolving in dynamical Wiener media, which share some features with those studied in [9, 21]. We give, under weighted total variation norms, quenched and annealed diffusive scaling limits, which may depend on the environment and are therefore not always normal distributions. We also give the speeds of convergence under the quenched distributions. In addition, we bring out a phase transition phenomenon, which is the analogue, in random environment, of a particular situation considered in [21].

RWs in dynamical REs have been widely and intensively studied in the past few years under various assumptions. Initially, space–time i.i.d. REs were introduced and studied in [6, 7, 39]. Further difficulties arise when the fluctuations of the REs are i.i.d. in space and Markovian in time, a case addressed in [5, 16], and major ones arise for space–time mixing REs, a case recently studied in [4, 8, 15]. However, continuous-time diffusions in time-varying random environments have been sparsely investigated. Nevertheless, we can mention [29, 30, 32, 40] concerning the homogenization of diffusions in time-dependent random flows.

1.1 The Wiener space

Introduce the space

endowed with the standard \(\sigma \)-field \(\fancyscript{B}\) generated by the Borel cylinder sets. It is classical that there exists a unique probability measure \(\fancyscript{W}\) on \((\varTheta ,\fancyscript{B})\) such that the processes \(\{\theta (\pm \,x) : \theta \in \varTheta , x\ge 0\}\) are two independent standard Brownian motions. The probability distribution \(\fancyscript{W}\) is called the Wiener measure. We denote by \(\{S_\lambda : \lambda >0\}\) the scaling transformations on \(\varTheta \) defined by

Note that \(\varTheta \) is naturally endowed with a structure of separable Banach space, such that \(\fancyscript{B}\) coincides with the Borel \(\sigma \)-field \({\fancyscript{B}}_\varTheta \).

1.2 Schumacher and Brox’s results

In [9], Brox gives a meaning to the solution of the informal diffusion equation

where \(\theta \in \varTheta \) and \(B\) is a standard one-dimensional Brownian motion independent of the Brownian environment \((\varTheta ,{\fancyscript{B}},{\fancyscript{W}})\). Denoting by \(\mathbb{P }_\theta \) and \(\widehat{\mathbb{P }}\), respectively, the quenched and annealed distributions (the latter being the expectation of \(\mathbb{P }_\theta \) under \(\fancyscript{W}\)) of such a solution, Schumacher [43, 44] and Brox [9] independently show that there exists a family of measurable functions \(\{b_h : h>0\}\) on \((\varTheta ,\fancyscript{B})\) such that the following convergence holds in probability

Since the Wiener measure is invariant under the scaling transformations, denoting by \(\hat{b}_1\) the distribution of \(b_1\) under \(\fancyscript{W}\), the following annealed convergence holds in distribution

The key to proving these results is to take full advantage of the representation of \(X\) in terms of a one-dimensional Brownian motion changed in scale and time, and of the invariance of the Brownian motions \(B\) and \(\theta \) under the scaling transformations \(S_{\lambda }\). The authors prove that the diffusion is localized in the valleys of the potential \(\theta \), which are themselves characterized by \(b_1\).

1.3 Phase transition in a \(2\)-stable deterministic environment

Set \(W(x):=|x|^{{1}/{2}}\) and consider, for any \(\beta \in \mathbb{R }\), the particular time-inhomogeneous singular stochastic differential equation (SDE) studied in [21], given by

The authors show in [21] the existence of a pathwise unique strong solution and prove diffusive and subdiffusive scaling limits in distribution, depending on the position of \(\beta \) with respect to \(1/4\). More precisely, they prove that

and

where \(k_c\) and \(k_u\) are two positive normalization constants. In fact, to obtain the convergences in (1.7), they study the diffusion equation

This process is naturally related to Eq. (1.6), by setting \(r:=\beta -1/4\), via a well-chosen scaling transformation that takes full advantage of the scaling property of the Brownian motion \(B\) and of the deterministic scaling property of the potential \(W\). For more details, we refer to [21]. One may expect similar results when \(W\) in Eq. (1.6) is replaced by a typical Brownian path \(\theta \in \varTheta \), a \(2\)-stable random process; this is one of the main goals of this article.

1.4 Overview of the article

The paper is organized as follows. In Sect. 2, we introduce a diffusion equation (2.2) in a dynamical Wiener potential, which generalizes Eq. (1.9). Then we state our main results and give the general strategy of the proofs. In Sect. 3, we apply these results to a model of time-inhomogeneous Brox’s diffusions. This model generalizes Eqs. (1.6) and (1.3), and we obtain asymptotic behaviours similar to those in (1.7). Thereafter, in Sect. 4, we introduce some linear perturbations of Eq. (2.2) and establish some of their properties, which are used in Sects. 5 and 6 to prove existence, uniqueness and nonexplosion for the diffusion process (2.2) (Theorem 2.1), and also to prove that this process is a strongly Feller diffusion satisfying the lower local Aronson estimate and a kind of cocycle property (Theorem 2.2). In Sect. 7, we prove some technical results needed to obtain the quenched and annealed convergences (Theorems 2.3 and 2.4) in the last two sections.

2 Model and statement of results

2.1 Diffusions in a fluctuating Ornstein–Uhlenbeck potential

In the present paper, we study the dynamics of Brownian motions in time-dependent Wiener media, given by the underlying dynamical random environment

The family \(\{T_t : t\in \mathbb{R } \}\) is a one-parameter group of transformations leaving \(\fancyscript{W}\) invariant and such that, under this probability measure, for each \(x\in \mathbb{R }\), \(\{T_{t}\theta (x) : t\in \mathbb{R }\}\) is a stationary Ornstein–Uhlenbeck process with stationary distribution \(\fancyscript{N}(0,|x|)\). Moreover, the dynamical system \((\varTheta ,\fancyscript{B},\fancyscript{W}, (T_t)_{t\in \mathbb{R }})\) is ergodic (see Proposition 7.5).

We consider, for any \(r\in \mathbb{R }\), the diffusion process \(Z\), solution of the informal SDE driven by a standard Brownian motion \(B\), independent of \((\varTheta ,{\fancyscript{B}},{\fancyscript{W}})\),

with

Note that when \(\theta \) is equal to \(W\), defined in (1.6), \(T_t\theta \) in (2.3) is simply equal to \(\theta \) and Eq. (2.2) is nothing but Eq. (1.9). The diffusion process \(Z\) can be seen as a Brownian motion immersed in the random time-varying potential \(\{V_{\theta }(t,\cdot ) : t\in \mathbb{R }\}\), as well as an Ornstein–Uhlenbeck diffusion process, whose potential is perturbed by the dynamical Wiener medium \(\{e^{-r t}\,T_t\theta : t\in \mathbb{R }\}\). Moreover, one can see \(Z\) as a distorted Brownian motion, whose drift is a Gaussian field \(\{\varGamma (t,x) : t,x\in \mathbb{R }\}\) having mean function \(m_{\varGamma }\) and covariance function \(C_{\varGamma }\) (here a Dirac measure) given by

We need to give a rigorous meaning to the solutions of Eq. (2.2). Formally, we can see \(Z\) as the diffusion process whose conditional infinitesimal generator, given \(\theta \in \varTheta \), is

The domain and the so-called generalized domain of \(L_\theta \) are defined by

where \(\mathrm{C}^{1}\) and \(\mathrm{W}^{1,\infty }_\mathrm{loc}\) denote the spaces of real continuous functions \(F(t,x)\) on \([s,\infty )\times \mathbb{R }\) such that the partial derivatives \(\partial _t F\) and \(\partial _x F\) (in the sense of distributions) exist and are continuous functions (for \(\mathrm{C}^{1}\)), respectively locally bounded functions (for \(\mathrm{W}^{1,\infty }_\mathrm{loc}\)).

This kind of diffusion operator, with distributional drift, has already been studied in [20, 41] in the case where the coefficients of the SDE do not depend on time. Rigorously speaking, a weak solution to Eq. (2.2) is a solution to the martingale problem related to \((L_\theta ,D(L_\theta ))\).

Definition 2.1 A continuous stochastic process \(\{Z_t : t\ge s\}\) defined on a given filtered probability space is said to be a weak solution to Eq. (2.2) if \(Z_s=z\) and if there exists an increasing sequence of stopping times \(\{\tau _n : n\ge 0\}\) such that, for all \(n\ge 0\) and \(F\in D(L_{\theta })\),

is a local martingale, with

A weak solution is global when the explosion time satisfies \(\tau _e=\infty \) a.s., and we say that the weak solution is unique if all weak solutions have the same distribution.

We are now able to state our first result.

Theorem 2.1

For any \(r\in \mathbb{R },\,\theta \in \varTheta ,\,s\ge 0\) and \(z\in \mathbb{R }\), there exists a unique global weak solution \(Z\) to Eq. (2.2). Moreover, there exists a standard Brownian motion \(B\) such that, for all \(F\in \overline{D}(L_\theta )\),

Since the one-dimensional Eq. (2.2) is not time-homogeneous, there are no simple conditions characterizing nonexplosion as in [9, 20, 41]. Therefore, the main difficulty is to construct Lyapunov functions. To this end, we consider some linear perturbations of Eq. (2.2), given in (4.1), for which we are able, when the potential (4.2) is sufficiently confining, to construct suitable Lyapunov functions (see Proposition 4.2). Then we prove (see Theorem 5.1) nonexplosion, existence and uniqueness (in a more general setting) by using the Girsanov transformation and by considering the SDE (4.6). This equation is connected to Eq. (4.1), when the associated potential is attractive, via the pseudo-scale function \(S_\theta \) defined in (4.4) (see Proposition 4.1). This method generalizes to the time-inhomogeneous setting the one employed in [9, 20, 41], which uses the effective scale function.
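As an illustration of the scale-function method in its simplest, time-homogeneous form, the following Python sketch (ours, not taken from [9, 20, 41]) samples a two-sided Brownian path \(\theta \) on a grid and computes \(S(x)=\int _0^x e^{\theta (y)}\,\mathrm{d}y\) by the trapezoidal rule. For Brox’s generator \(\frac{1}{2}e^{\theta }\frac{\mathrm{d}}{\mathrm{d}x}\big (e^{-\theta }\frac{\mathrm{d}}{\mathrm{d}x}\big )\) this is the classical scale function, and it is increasing, the property that the pseudo-scale function \(S_\theta (t,\cdot )\) retains in Sect. 4. The time-dependent formula (4.4) is not reproduced here, and the helper names `brownian_path` and `scale_function` are hypothetical.

```python
import math
import random


def brownian_path(L, n, seed=0):
    """Two-sided Brownian path theta on the grid x_i = -L + i*L/n, i = 0..2n, with theta(0) = 0."""
    rng = random.Random(seed)
    h = L / n
    right, left = [0.0], [0.0]
    for _ in range(n):
        # independent Gaussian increments of variance h on each side of the origin
        right.append(right[-1] + rng.gauss(0.0, math.sqrt(h)))
        left.append(left[-1] + rng.gauss(0.0, math.sqrt(h)))
    xs = [-L + i * h for i in range(2 * n + 1)]
    thetas = left[::-1] + right[1:]  # left[::-1] ends with theta(0) = 0
    return xs, thetas


def scale_function(xs, thetas):
    """S(x) = int_0^x exp(theta(y)) dy on the grid (trapezoidal rule), with S(0) = 0."""
    m = len(xs) // 2  # index of the grid point x = 0
    S = [0.0] * len(xs)
    for i in range(m + 1, len(xs)):  # integrate to the right of 0
        h = xs[i] - xs[i - 1]
        S[i] = S[i - 1] + 0.5 * h * (math.exp(thetas[i]) + math.exp(thetas[i - 1]))
    for i in range(m - 1, -1, -1):  # integrate to the left of 0
        h = xs[i + 1] - xs[i]
        S[i] = S[i + 1] - 0.5 * h * (math.exp(thetas[i]) + math.exp(thetas[i + 1]))
    return S


xs, thetas = brownian_path(L=5.0, n=500, seed=3)
S = scale_function(xs, thetas)
print(S[0] < 0.0 < S[-1])  # S is negative left of 0 and positive right of 0
```

With \(\theta \equiv 0\) the same routine returns \(S(x)=x\), the scale function of a plain Brownian motion.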

2.2 Strong Feller property, cocycle property and lower local Aronson estimate

In the following, we denote by \(\mathbb{P }_{s,z}(\theta )\) the distribution of the weak solution to Eq. (2.2), called the quenched distribution, whose existence is stated in Theorem 2.1. We introduce the canonical process \(\{X_t: t\ge 0\}\) on the space of continuous functions from \([0,\infty )\) to \(\mathbb{R }\), endowed with its standard Borel \(\sigma \)-field \({\fancyscript{F}}\), and we denote by \(P_\theta (s,z;t,\mathrm{d}x)\) and \(P_{s,t}(\theta )\) the probability transition kernel and the associated Markov kernel defined, for all nonnegative measurable functions \(F\) on \(\mathbb{R }\), by

Theorem 2.2

For any \(r\in \mathbb{R }\) and all \(\theta \in \varTheta \), the family \(\{\mathbb{P }_{s,z}(\theta ) : s\ge 0,\, z\in \mathbb{R }\}\) is strongly Feller continuous. Moreover, the associated time-inhomogeneous semigroups \(\{P_{s,t}(\theta ): t\ge s\ge 0,\,\theta \in \varTheta \}\) satisfy

Besides, \(P_\theta (s,z;t,\mathrm{d}x)\) admits a density \(p_\theta (s,z;t,x)\), which is measurable with respect to \((\theta ,s,t,z,x)\) on \(\varTheta \times \{t>s\ge 0\}\times \mathbb{R }^{2}\), and which satisfies the lower local Aronson estimate: for all \(\theta \in \varTheta ,\,T>0\) and compact set \(C\subset \mathbb{R }\), there exists \(M>0\) such that, for all \(0\le s<t\le T\) and \(z,x \in C\),

The idea is to study the more general equivalent SDE (4.6) and to prove, by using standard techniques, the analogous theorem for this diffusion (see Theorem 6.1).

Besides, the transition density being measurable with respect to \(\theta \), we can define the annealed distribution \({\widehat{\mathbb{P }}}_{s,z}\) and the associated Markov kernel \({\widehat{P}}_{s,t}\) as

We point out that \(X\) is not a Markov process under \({\widehat{\mathbb{P }}}_{s,z}\). Moreover, in the light of (2.10), we can assume without loss of generality that \(s=0\) in (2.2) and we set

Furthermore, the case \(r=0\) is of particular interest since, in this situation, relation (2.10) can be written as

Roughly speaking, Eq. (2.2) is time-homogeneous in distribution since, by the scaling property, \(\fancyscript{W}\) is \((T_t)\)-invariant. Relation (2.12) is called the cocycle property and it induces (see [1] for a definition) a random dynamical system (RDS) over \((\varTheta ,{\fancyscript{B}},{\fancyscript{W}}, (T_t))\) on the set \({\fancyscript{M}}\) of signed measures on \(\mathbb{R }\), by setting, for all \(\nu \in {\fancyscript{M}}\),

Note that the subset of probability measures \(\fancyscript{M}_{1}\subset \fancyscript{M}\) is invariant under this RDS.
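The cocycle structure can be illustrated on a discrete-time, finite-state toy analogue, an assumption made purely for illustration: the environment is a sequence \(\omega \) of random stochastic matrices, dropping the first \(n\) letters plays the role of \(T_n\), and \(\Phi (n,\omega )\nu :=\nu P(\omega _0)\cdots P(\omega _{n-1})\). The sketch checks the cocycle identity \(\Phi (n+m,\omega )=\Phi (m,T_n\omega )\circ \Phi (n,\omega )\) on a signed measure, and that probability vectors stay probability vectors, mirroring the invariance of \({\fancyscript{M}}_1\) noted above. All names are hypothetical.

```python
import random


def random_stochastic(k, rng):
    """A k x k row-stochastic matrix with positive entries."""
    rows = []
    for _ in range(k):
        r = [rng.random() + 1e-3 for _ in range(k)]
        s = sum(r)
        rows.append([v / s for v in r])
    return rows


def act(nu, P):
    """Left action nu -> nu P of a kernel on a (signed) measure given as a row vector."""
    k = len(nu)
    return [sum(nu[i] * P[i][j] for i in range(k)) for j in range(k)]


def shift(n, omega):
    """Discrete analogue of T_n: drop the first n environment letters."""
    return omega[n:]


def phi(n, omega, nu):
    """Cocycle Phi(n, omega) nu = nu P(omega_0) ... P(omega_{n-1})."""
    for P in omega[:n]:
        nu = act(nu, P)
    return nu


rng = random.Random(0)
omega = [random_stochastic(3, rng) for _ in range(10)]
nu = [0.2, -0.5, 1.3]  # a signed measure on {0, 1, 2}
lhs = phi(7, omega, nu)
rhs = phi(4, shift(3, omega), phi(3, omega, nu))  # Phi(4, T_3 omega) o Phi(3, omega)
print(max(abs(a - b) for a, b in zip(lhs, rhs)))
```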

2.3 Quasi-invariant and stationary probability measures

To state our next main results, we need to introduce some additional notation. We say that \(\mu \) is a random probability measure on \(\mathbb R \), over \((\varTheta ,{\fancyscript{B}},{\fancyscript{W}})\), if \(\mu _{\theta }\in {\fancyscript{M}}_{1}\) for \({\fancyscript{W}}\)-almost all \(\theta \), and if \(\theta \longmapsto \mu _\theta (A)\) is measurable for every Borel set \(A\). For such a random probability measure \(\mu \), we introduce the probability measure \(\hat{\mu }\) defined by

Let \(\alpha \in \mathbb{R }\) and \(U_\alpha ,\,V_\alpha \) be the functions on \(\mathbb{R }\) defined by

The \(F\)-total variation norm, \(F\in \{U_\alpha ,V_{\alpha }\}\), of a signed measure \(\nu \) is defined by

Note that if \(\nu \in {\fancyscript{M}}_1\) then \(\Vert \nu \Vert _{F}=\nu (F)\). In addition, we set
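For a finitely supported signed measure \(\nu =\sum _i w_i\delta _{x_i}\), and assuming the standard definition \(\Vert \nu \Vert _F:=\sup _{|g|\le F}|\nu (g)|=|\nu |(F)\) (consistent with the identity \(\Vert \nu \Vert _F=\nu (F)\) on \({\fancyscript{M}}_1\) stated above), the \(F\)-norm reduces to a weighted sum. The weight \(x\mapsto \exp (\alpha x^2/2)\) used below is a hypothetical stand-in; the functions \(U_\alpha \) and \(V_\alpha \) of (2.13) are not reproduced here.

```python
import math


def weighted_tv_norm(atoms, weights, F):
    """||nu||_F = |nu|(F) = sum_i |w_i| F(x_i) for nu = sum_i w_i delta_{x_i}."""
    return sum(abs(w) * F(x) for x, w in zip(atoms, weights))


def integrate(atoms, weights, g):
    """nu(g) = sum_i w_i g(x_i)."""
    return sum(w * g(x) for x, w in zip(atoms, weights))


def gaussian_weight(alpha):
    """Hypothetical weight x -> exp(alpha x^2 / 2), used only for illustration."""
    return lambda x: math.exp(alpha * x * x / 2.0)


F = gaussian_weight(0.5)
# difference of two Dirac masses: the weight penalizes mass far from the origin
print(weighted_tv_norm([0.0, 1.0], [1.0, -1.0], F))  # equals 1 + exp(0.25)
# for a probability measure the norm is just nu(F)
G = lambda x: 1.0 + x * x
print(weighted_tv_norm([0.0, 2.0], [0.5, 0.5], G), integrate([0.0, 2.0], [0.5, 0.5], G))  # 3.0 3.0
```

The supremum over \(|g|\le F\) is attained at \(g=F\cdot \mathrm{sign}(w_i)\) on the atoms, which is why the norm is a plain weighted sum in this discrete case.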

Theorem 2.3

Assume that \(r=0\). There exists a random probability measure \(\mu \) on \(\mathbb{R }\) over \((\varTheta ,{\fancyscript{B}},{\fancyscript{W}})\), unique up to a \({\fancyscript{W}}\)-null set, such that, for all \(t\ge 0\),

Moreover, for all \(\alpha \in (0,1)\), the quasi-invariant measure satisfies

Furthermore, there exists \(\lambda >0\) such that, for all \(\nu \in {\fancyscript{M}}_{1,U_{\alpha }}\) and \(\hat{\nu }\in {\fancyscript{M}}_{1,V_{\alpha }}\),

and

Linear RDSs have been studied in an extensive body of literature. The dynamics (in particular the Lyapunov exponents) of discrete-time linear RDSs acting on a finite-dimensional space (the case of infinite products of random matrices) have been well understood for a long time, see for instance [22, 37], whereas the case of general linear RDSs acting on a separable Banach space has been studied only recently in [33].

Our goal in Theorem 2.3 is to obtain a quasi-invariant probability measure for the random Markov kernels \(P_t(\theta )\) and to give convergence results in the separable Banach spaces \({\fancyscript{M}}_{U_\alpha }\) (exponential convergence) and \({\fancyscript{M}}_{V_{\alpha }}\). We need a kind of random Perron-Frobenius theorem, which has been, for example, obtained in [2] for infinite products of nonnegative matrices, and more recently in [28] for infinite products of stationary Markov kernels over a compact set.
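The random Perron–Frobenius phenomenon can be seen on its simplest example, products of positive random stochastic matrices indexed by integer times (a toy model, not the kernels \(P_t(\theta )\) of the paper). Pulling a starting measure back from time \(k-n\) to time \(k\) and letting \(n\) grow produces a limit \(\mu _k\) that forgets the starting measure, and \(\mu _0P(\omega _0)=\mu _1\), the discrete analogue of quasi-invariance. The i.i.d. environment, the state-space size \(K=3\) and all helper names are assumptions of this sketch.

```python
import random
from functools import lru_cache

K = 3  # size of the toy state space


@lru_cache(maxsize=None)
def env(i):
    """Positive row-stochastic K x K matrix attached to the integer time i (i.i.d. toy environment)."""
    seed = 2 * i if i >= 0 else -2 * i - 1  # injective map from the integers to the naturals
    rng = random.Random(seed)
    rows = []
    for _ in range(K):
        r = [rng.random() + 0.1 for _ in range(K)]  # entries bounded away from 0
        s = sum(r)
        rows.append(tuple(v / s for v in r))
    return tuple(rows)


def act(nu, P):
    """nu -> nu P for a measure on {0, ..., K-1} given as a row vector."""
    return [sum(nu[i] * P[i][j] for i in range(K)) for j in range(K)]


def pullback(k, n, nu):
    """Start from nu at time k - n and apply P(omega_{k-n}), ..., P(omega_{k-1})."""
    for i in range(k - n, k):
        nu = act(nu, env(i))
    return nu


def tv(p, q):
    return sum(abs(a - b) for a, b in zip(p, q))


mu0_a = pullback(0, 300, [1.0, 0.0, 0.0])
mu0_b = pullback(0, 300, [0.0, 0.0, 1.0])
mu1 = pullback(1, 300, [1.0, 0.0, 0.0])
print(tv(mu0_a, mu0_b))             # the starting measure is forgotten
print(tv(act(mu0_a, env(0)), mu1))  # quasi-invariance: mu_0 P(omega_0) = mu_1
```

Positivity of the entries makes each matrix a strict contraction in total variation (Dobrushin coefficient below 1); on \(\mathbb{R }\), which is noncompact, this role is played by the Foster–Lyapunov functions and the coupling bounds discussed below.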

However, the Markov operators that we consider act on the infinite-dimensional space \({\fancyscript{M}}\) and are defined over the noncompact set \(\mathbb{R }\). To overcome this problem, we show that \(U_\alpha \) and \(V_\alpha \) are Foster–Lyapunov functions (see Propositions 7.2 and 7.3). More precisely, we show that the Lyapunov exponents can be chosen independently of the environment \(\theta \), while keeping control of the expectation of the \(U_\alpha \)-norm and the \(V_\alpha \)-norm. The classical method to construct Foster–Lyapunov functions for Markov kernels is to construct Lyapunov functions for the infinitesimal generators (see Lemmas 7.1 and 7.2). Nonetheless, we stress that neither \(U_\alpha \) nor \(V_\alpha \) belongs to the generalized domain \(\overline{D}(L_\theta )\), so we need to approximate these functions uniformly by functions of this domain, while keeping control of the expectation under the Wiener measure. This is possible thanks to the Hölder continuity of Brownian paths (see Proposition 7.1).
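To see why a Gaussian-type weight is a natural Foster–Lyapunov candidate, consider the unperturbed Ornstein–Uhlenbeck generator \(Lf=f''/2-xf'/2\) (an assumption: the environment is switched off) and the hypothetical weight \(U(x)=\exp (\alpha x^2/2)\). A direct computation gives \(LU/U=\alpha /2-\alpha (1-\alpha )x^2/2\), so for \(\alpha \in (0,1)\) one has \(LU\le -cU+b\,\mathbf 1_{[-R,R]}\) as soon as \(R^2>1/(1-\alpha )\). The sketch checks the closed form against central differences and returns \((c,b)\). It does not handle the Brownian perturbation, which the text above treats through the approximation of Proposition 7.1.

```python
import math


def U(x, alpha):
    """Hypothetical Gaussian-type weight exp(alpha x^2 / 2); not necessarily the U_alpha of (2.13)."""
    return math.exp(alpha * x * x / 2.0)


def ou_generator(f, x, h=1e-3):
    """L f(x) = f''(x)/2 - x f'(x)/2 (OU generator with N(0,1) invariant law), by central differences."""
    d1 = (f(x + h) - f(x - h)) / (2.0 * h)
    d2 = (f(x + h) - 2.0 * f(x) + f(x - h)) / (h * h)
    return 0.5 * d2 - 0.5 * x * d1


def drift_ratio(x, alpha):
    """Closed form of (L U)(x) / U(x) = alpha/2 - alpha (1 - alpha) x^2 / 2."""
    return alpha / 2.0 - alpha * (1.0 - alpha) * x * x / 2.0


def foster_lyapunov_constants(alpha, R):
    """Constants (c, b) with L U <= -c U + b 1_{[-R, R]}; requires R^2 > 1/(1 - alpha)."""
    c = alpha * (1.0 - alpha) * R * R / 2.0 - alpha / 2.0
    if c <= 0.0:
        raise ValueError("R too small for a positive drift constant")
    # on [-R, R]: L U + c U = (ratio + c) U <= (alpha/2 + c) U(R)
    b = (alpha / 2.0 + c) * U(R, alpha)
    return c, b


c, b = foster_lyapunov_constants(alpha=0.5, R=3.0)
print(c, b)  # c = 0.875
```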

Then we use the explicit bound on the convergence of time-inhomogeneous Markov chains (see Proposition 7.4), obtained from [17] via coupling constructions, Foster–Lyapunov conditions and the cocycle property, together with the ergodicity of the underlying dynamical system \((\varTheta ,{\fancyscript{B}},{\fancyscript{W}},(T_t)_{t\in \mathbb{R }})\). We point out that the Aronson estimate (2.11) is necessary for the coupling constructions.

Furthermore, let us denote by \(\{U_t : t\ge 0\}\) the canonical process on the space \(\Xi \) of continuous functions from \([0,\infty )\) to \(\varTheta \), endowed with its standard Borel \(\sigma \)-field \({\fancyscript{G}}\), and introduce the Markov kernels \(\varPi _{\theta ,z}\) on \((\Xi \times \Omega ,{\fancyscript{G}}\otimes {\fancyscript{F}})\), and the probability measure \(\overline{\mu }\) on \((\varTheta \times \mathbb{R },{\fancyscript{B}}\otimes {\fancyscript{B}}(\mathbb{R }))\), defined by the product and disintegration formula

Then \(\{(U_t,X_t) : t\ge 0\}\) is a time-homogeneous Markov process under \(\varPi _{\theta ,z}\), for which \(\overline{\mu }\) is an invariant initial distribution. This process is called the skew-product Markov process (see [11, 36] for the discrete-time situation). By applying standard results on general time-homogeneous Markov processes (see, for instance, [35]), we deduce that, for all \(F\in \mathrm{L}^1(\varTheta \times \mathbb{R },\overline{\mu }),\,z\in \mathbb{R }\) and \({\fancyscript{W}}\)-almost all \(\theta \in \varTheta \),

Note that Eq. (2.15) provides some information on the tails of \(\mu _\theta \) and \(\hat{\mu }\).

Theorem 2.4

Assume that \(r>0\). For any \(z\in \mathbb{R }\) and for \({\fancyscript{W}}\)-almost all \(\theta \in \varTheta \), the following convergence holds under the quenched distribution \(\mathbb{P }_z(\theta )\),

Here, contrary to Theorem 2.3, the space–time mixing environment is asymptotically negligible and the diffusion behaves, in long time, as the underlying Ornstein–Uhlenbeck process. Since the cocycle property (2.12) is no longer satisfied, we lose the structure of linear RDS. To prove this result, we use once again Proposition 7.4, but we also need to apply [21, Lemma 4.5] to the more general equivalent SDE (4.6).

Following the terminology used in [21], it is not difficult to see that this equation is asymptotically time-homogeneous and \(S_*\varGamma \)-ergodic, with \(S\) the scale function of the Ornstein–Uhlenbeck diffusion process having \(\varGamma \sim {\fancyscript{N}}(0,1)\) as stationary distribution and \(S_*\varGamma \) the pushforward distribution of \(\varGamma \) by \(S\). As mentioned in [21], the main difficulty in applying this lemma is usually to show boundedness in probability. To this end, we use again the Foster–Lyapunov functions \(U_\alpha \) and \(V_\alpha \).
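Theorem 2.4 says that, for \(r>0\), the long-time behaviour is that of the underlying Ornstein–Uhlenbeck process. The following sketch simulates only this limiting dynamics, assuming the normalization \(\mathrm{d}Z_t=\mathrm{d}B_t-\frac{1}{2}Z_t\,\mathrm{d}t\) (unit diffusion coefficient and \({\fancyscript{N}}(0,1)\) invariant law); the Brownian potential is not simulated. It uses the exact Gaussian transition \(Z_{t+h}=e^{-h/2}Z_t+\sqrt{1-e^{-h}}\,\xi \), so the only error is Monte Carlo error. The function name `simulate_ou` is hypothetical.

```python
import math
import random


def simulate_ou(z0, t_end, dt, n_paths, seed=0):
    """Exact simulation of dZ = dB - Z/2 dt on a grid of step dt; returns the values Z_{t_end}."""
    rng = random.Random(seed)
    a = math.exp(-dt / 2.0)             # mean reversion over one step
    s = math.sqrt(1.0 - math.exp(-dt))  # one-step conditional standard deviation
    n_steps = int(round(t_end / dt))
    finals = []
    for _ in range(n_paths):
        z = z0
        for _ in range(n_steps):
            z = a * z + s * rng.gauss(0.0, 1.0)
        finals.append(z)
    return finals


finals = simulate_ou(z0=3.0, t_end=10.0, dt=0.1, n_paths=2000, seed=1)
mean = sum(finals) / len(finals)
var = sum((z - mean) ** 2 for z in finals) / (len(finals) - 1)
print(round(mean, 3), round(var, 3))  # close to 0 and 1, i.e. to the law N(0, 1)
```

Starting far from the origin (\(z_0=3\)), the empirical law at time 10 is already close to \({\fancyscript{N}}(0,1)\), since the exact mean is \(3e^{-5}\) and the exact variance is \(1-e^{-10}\).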

3 Application to time-inhomogeneous Brox’s diffusions

3.1 Associated models

We now turn to our main application, the study of the so-called time-inhomogeneous Brox’s diffusion. We consider, for any \(\beta \in \mathbb{R }\), the informal SDE driven by a standard Brownian motion \(B\), independent of the Brownian environment \((\varTheta ,{\fancyscript{B}},{\fancyscript{W}})\),

A weak solution to Eq. (3.1) is, in the same manner as in Definition 2.1, the diffusion whose conditional generator, given \(\theta \in \varTheta \), is

As for Eq. (2.2), for which we can assume without loss of generality that \(s=0\), we can assume that \(u=1\) in Eq. (3.1). Moreover, as in (1.9), we assume that \(\beta =r+{1}/{4}\) and we define, for all continuous functions \(\omega \) on \([1,\infty )\) and all measurable functions \(G\) on \([1,\infty )\times \mathbb{R },\,\Phi _e(\omega )(t):={\omega (e^t)}/{e^{t/2}}\) and \({\fancyscript{E}} G (t,x):=G(e^t,e^{t/2}x)\).

A simple calculation shows that \({\fancyscript{E}} : D({\fancyscript{L}}_\theta )\longrightarrow D(L_\theta )\) is a bijection and that \(L_\theta = {\fancyscript{E}}\circ {\fancyscript{L}}_\theta \circ {\fancyscript{E}}^{-1}\). In the same way as in [21, Proposition 2.1 and Section 2.2.1], we deduce that \(\{Y_t : t\ge 1\}\) is a weak solution to Eq. (3.1) if and only if \(\{Z_t:=\Phi _{\mathtt{e}}(Y_t) : t\ge 0\}\) is a weak solution to Eq. (2.2). Then a direct application of Theorem 2.1 shows that, for all \(\theta \in \varTheta \), there exists a unique irreducible strongly Feller diffusion process solving Eq. (3.1).
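The maps \(\Phi _e\) and \({\fancyscript{E}}\) are explicit, so they can be written out directly. The Python sketch below implements \(\Phi _e(\omega )(t)=\omega (e^t)/e^{t/2}\) and \({\fancyscript{E}}G(t,x)=G(e^t,e^{t/2}x)\) together with their inverses \(\omega (u)=\sqrt{u}\,\Phi _e(\omega )(\log u)\) and \({\fancyscript{E}}^{-1}F(u,y)=F(\log u,y/\sqrt{u})\); the inverse formulas are our own elementary computation and the function names are hypothetical. In particular \(\Phi _e(\omega )(\log u)=\omega (u)/\sqrt{u}\), which is exactly the diffusive normalization \(X_u/\sqrt{u}\) appearing in the semigroups \(R_t(\theta )\).

```python
import math


def phi_e(omega):
    """Phi_e(omega)(t) = omega(e^t) / e^{t/2}, t >= 0, for a path omega on [1, inf)."""
    return lambda t: omega(math.exp(t)) / math.exp(t / 2.0)


def phi_e_inv(z):
    """Inverse of Phi_e: omega(u) = sqrt(u) * z(log u), u >= 1."""
    return lambda u: math.sqrt(u) * z(math.log(u))


def E(G):
    """(E G)(t, x) = G(e^t, e^{t/2} x)."""
    return lambda t, x: G(math.exp(t), math.exp(t / 2.0) * x)


def E_inv(F):
    """Inverse of E: (E^{-1} F)(u, y) = F(log u, y / sqrt(u))."""
    return lambda u, y: F(math.log(u), y / math.sqrt(u))


# A path of order sqrt(u) is mapped to a bounded path: the diffusive scaling Y_u / sqrt(u).
omega = lambda u: math.sqrt(u) * math.sin(u)
z = phi_e(omega)
print(z(2.0), math.sin(math.exp(2.0)))  # equal up to rounding
```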

Let \(\mathbb{Q }_{y}(\theta )\) be its quenched distribution and denote by \(\{R_t(\theta ) : t\ge 1\}\) the time-inhomogeneous semigroup associated to \(\{X_t/\sqrt{t} : t\ge 1\}\) under \(\mathbb{Q }_{y}(\theta )\), and by \({\widehat{\mathbb{Q }}}_{y}\) and \(\{{\widehat{R}}_t : t\ge 1\}\) their annealed counterparts.

3.2 Associated asymptotic behaviours

The following two corollaries are the analogues of Theorems 2.3 and 2.4. We recall that \(S_\lambda \) is defined in (1.2).

Corollary 3.1

Assume that \(\beta =1/4\). For all \(\alpha \in (0,1)\) there exists \(\lambda >0\) such that, for all \(\nu \in {\fancyscript{M}}_{1,U_{\alpha }}\) and \(\hat{\nu }\in {\fancyscript{M}}_{1,V_{\alpha }}\),

and

Corollary 3.2

Assume that \(\beta >1/4\). For any \(y\in \mathbb{R }\) and for \({\fancyscript{W}}\)-almost all \(\theta \in \varTheta \), the following convergence holds under the quenched distribution \(\mathbb{Q }_y(\theta )\),

The scaling limits (3.2), (3.3) and (3.4) should be compared with the two convergences in (1.7) (the deterministic situation studied in [21]) and with the convergences (1.4) and (1.5) (the random time-homogeneous situation considered in [9]). These results share some features with those presented in [21] and [9], and also with those presented in [7, 29, 30, 32, 39, 40, 42, 49] concerning the quenched central limit theorem (3.4). There is again a phase transition phenomenon at \(\beta =1/4\), and we obtain distinct quenched and annealed scaling limits at the critical point. Moreover, we are more precise concerning the speed of convergence, which is polynomial here, and exponential in Theorem 2.3.
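The passage from exponential to polynomial rates is just the time change behind \(\Phi _e\). Assuming, for illustration, that a quenched bound in Theorem 2.3 has the generic form \(Ce^{-\lambda t}\) in the time \(t\) of \(Z\) (the displayed statements are not reproduced here), the corresponding bound in the time \(u\) of \(Y\) reads:

```latex
Z_t = \Phi_e(Y)(t) = \frac{Y_{e^t}}{e^{t/2}}, \qquad u := e^t \ge 1
\quad\Longrightarrow\quad \frac{Y_u}{\sqrt{u}} = Z_{\log u},
\qquad
C\,e^{-\lambda t}\Big|_{t=\log u} = C\,u^{-\lambda}.
```

So an exponential rate \(\lambda \) for \(Z\) becomes the polynomial rate \(u^{-\lambda }\) for the normalized Brox-type process \(Y_u/\sqrt{u}\).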

Nevertheless, the case \(\beta <1/4\) seems to be out of reach of the present techniques. In fact, we expect a stronger localization phenomenon and a subdiffusive behaviour of order \(t^{2\beta }\log ^2(t)\) when \(\beta \ge 0\), and an almost sure convergence when \(\beta <0\) (which can be seen as a generalization and mixture of the results presented in (1.4), (1.5) and (1.8)). Note that, in the case \(\beta <0\), Eq. (3.1) is (via a simple change of time) a damped SDE in random environment.

Furthermore, some methods developed in this paper can be used to study a similar interesting situation where the Brownian environment \(\theta \) in (3.1) is replaced by another self-similar process. These situations are the object of work in progress. The case of a multiplicative noise, or of similar equations in higher dimensions, seems to be more difficult.

4 Preliminaries of Theorems 2.1 and 2.2

4.1 Linear perturbations of equation (2.2)

We consider, for any \(a\in \mathbb{R }\), the informal SDE

with a potential, more general than the one in (2.2), given by

Here once again \(r\in \mathbb{R }\) and \(B\) denotes a standard Brownian motion independent of the Wiener space \((\varTheta ,{\fancyscript{B}},{\fancyscript{W}})\). This equation coincides with Eq. (2.2) for \(a=1\). The conditional infinitesimal generator \(A_{\theta }\) and its associated domains are given as in (2.4) and (2.5), replacing \(V_{\theta }\) by \(Q_{\theta }\). Moreover, it is not difficult to check that

We get that the domains of \(A_{\theta }\) and \(L_{\theta }\) are equal; in particular, the domains of \(A_{\theta }\) do not depend on \(a\). A weak solution to Eq. (4.1) is, in the same way as in Definition 2.1, a solution to the martingale problem related to \((A_{\theta },D(A_{\theta }))\). In the sequel, we set

4.2 Equivalent SDE and martingale problem

We assume that \(a>0\) and we introduce an auxiliary SDE on \(\mathbb{R }\), which is naturally connected to Eq. (4.1). Let \(S\) and \(H\) be the functions on \(\varTheta \times \mathbb{R }^2\) defined by

Note that \(H_{\theta }\) is well defined since \(a>0\), and in this case the so-called pseudo-scale function \(x\longmapsto S_{\theta }(t,x)\) is an increasing bijection of \(\mathbb{R }\). Moreover, by using the second representation of \(S\), obtained by the change of variables \(z:=e^{{t}/{2}}y\), we can see that \(S_{\theta }(t,x)\) and \(H_{\theta }(t,x)\) are continuously differentiable with respect to \((t,x)\in \mathbb{R }^2\) and we can set

In addition, remark that, for all \((\theta ,s,t,x)\in \varTheta \times \mathbb{R }^{3}\),

We can consider, for any \(\theta \in \varTheta \), the SDE on \(\mathbb{R }\) with continuous coefficients and driven by a standard Brownian motion \(B\), independent of \((\varTheta ,{\fancyscript{B}},{\fancyscript{W}})\),

Let \(\mathrm{C}^{1,2}\) be the space of continuous functions \(F(t,x)\) on \([s,\infty )\times \mathbb{R }\) such that \(\partial _t F,\,\partial _x F\) and \(\partial _{xx}^2 F\) exist and are continuous functions and introduce

Note that \(S_{\theta }\) and \(H_{\theta }\) induce two bijections from the space of measurable functions on \([s,\infty )\times \mathbb{R }\) into itself, inverse to each other, by setting

By restriction, we get that \({\fancyscript{S}}_{\theta }\) and \({\fancyscript{H}}_{\theta }\) induce bijections

where \(\mathrm{W}^{1,2,\infty }_\mathrm{loc}\) denotes the Sobolev space of continuous functions \(F(t,x)\) on \([s,\infty )\times \mathbb{R }\) such that the partial derivatives \(\partial _t F,\,\partial _x F,\,\partial _t(\partial _x F)\) and \(\partial _{xx}^2 F\) exist and are locally bounded functions. Moreover, the infinitesimal generators \(A_\theta \) and \({\widetilde{A}}_{\theta }\) are equivalent. More precisely, they satisfy

Proposition 4.1

For any \(r\in \mathbb{R },\,\theta \in \varTheta ,\,s\ge 0\) and \(z,{\tilde{z}}\in \mathbb{R }\) such that \({\tilde{z}}:=S_{\theta }(s,z),\,\{Z_{t} : t\ge s\}\) is a weak solution to Eq. (4.1) if and only if \(\{{\widetilde{Z}}_t:=S_{\theta }(t,Z_t): t\ge s\}\) is a weak solution, up to the explosion time \(\tau _{e}\), to SDE (4.6). Furthermore, there exists a unique weak solution \(({\widetilde{Z}},B)\) and, for all \(G\in \mathrm{W}^{1,2,\infty }_\mathrm{loc}\) and \(s\le t< \tau _e\),

Proof

Assume that \({\widetilde{Z}}\) is a weak solution to (4.6). By the Itô formula, \({\widetilde{Z}}\) solves the martingale problem related to \(({\widetilde{A}}_\theta ,\mathrm{C^{1,2}})\). Therefore, \({\widetilde{Z}}_s={\tilde{z}}\) and there exists an increasing sequence of stopping times \(\{\tau _n : n\ge 0\}\) such that, for all \(n\ge 0\) and \(G\in \mathrm{C}^{1,2}\),

is a local martingale, with

We deduce from relation (4.7) that \(\{Z_t:=H_\theta (t,{\widetilde{Z}}_t) : t\ge s\}\) is a weak solution to (4.1) since \(Z_s=z\), for all \(n\ge 0\) and \(F\in D(L_\theta ),\,G:= {\fancyscript{H}}_\theta F\in \mathrm{C}^{1,2}\), and

A similar reasoning allows us to show that if \( Z\) is a weak solution to (4.1), then \(\{{\widetilde{Z}}_t:=S_\theta (t, Z_t):t\ge s\}\) is a weak solution to (4.6). Moreover, Eq. (4.6) has continuous coefficients \(\sigma _\theta \) and \(d_\theta \) and is strictly elliptic \((\sigma _\theta >0)\), and we deduce, by classical localization arguments (see, for instance, [48, pp. 250–251]), that there exists a unique weak solution \(({\widetilde{Z}},B)\). Furthermore, by using the Itô–Krylov formula (see, for instance, [31, Chapter 10] or [18, p. 134]), we obtain (4.8). \(\square \)

4.3 Chain rules and nonexplosion

To construct Lyapunov functions for the infinitesimal generator \(L_\theta \), or more generally for \(A_{\theta }\) associated to (4.1), we need to give the associated chain rules. For all \(\theta \in \varTheta \) and \(\varphi \in \mathrm{W}^{1,\infty }_\mathrm{loc}\) (the space of real continuous functions such that the partial derivatives in the sense of distributions exist and are locally bounded functions) define

By standard computations, we get the following chain rules

and

Proposition 4.2

Assume that \(a>1\). For any \(r\in \mathbb{R },\,\theta \in \varTheta ,\,s\ge 0\) and \(z\in \mathbb{R }\), any weak solution \(Z\) to (4.1) is global and, for all \(T>s\) and \(0<\beta <(a-1)/2\),

Proof

Let \(0<\alpha <a-1\) and \(U_\alpha \) be the function defined in (2.13) and set

We shall prove that \(U_{\theta }\) is a Lyapunov function, in the sense that, for all \(T>s\), there exists \(\lambda >0\) such that, for all \(0\le t\le T\) and \(x\in \mathbb{R }\),

First note that the second relation in (4.13) is clear since \(\lim _{|x|\rightarrow \infty }\theta (x)/x^2=0\). Moreover, by using (4.11) and (4.10), we can see that

and

In addition, since \(0<\alpha <a-1\), we can write for \(x\) sufficiently large,

Then we get from (4.16) and (4.14) that there exist \(L_{1}>0\) and a compact set \(C\) such that, for all \(0\le t\le T\) and \(x\in \mathbb{R }\),

Besides, we can see that there exists \(L_{2}>0\) such that, for all \(0\le t\le T\) and \(y\in \mathbb{R }\),

We deduce from (4.18), (4.17) and (4.15) that (4.13) is satisfied with \(\lambda := L_{1}+ L_{2}\). By a classical argument (see, for instance, [48, Theorem 10.2.1]), the explosion time is infinite a.s. Furthermore, the right-hand side of (4.13) implies that \(\{ e^{-\lambda t} U_{\theta ,\alpha }(t,Z_{t}) : s\le t\le T\}\) is a positive supermartingale. By the maximal inequality (obtained from the optional stopping theorem), we get that, for all \(R>0\),

Besides, we can check that, for all \(\beta <\alpha /2\), there exists \(c>0\) such that, for all \(s\le t\le T\) and \(x\in \mathbb{R },\,c\,U_{\theta ,\alpha }(t,x)\ge \exp (\beta x^{2})\). Then by using (4.19), we obtain

Since \(\beta \) and \(\alpha \) are arbitrary parameters satisfying \(\beta <\alpha /2<(a-1)/2\), we deduce from the last inequality that (4.12) holds for any \(\beta <(a-1)/2\). \(\square \)

5 Proof of Theorem 2.1

Theorem 2.1 will be a direct consequence of Theorem 5.1 below.

Theorem 5.1

For any \(a,r\in \mathbb{R },\,\theta \in \varTheta ,\,s\ge 0\) and \(z\in \mathbb{R }\), there exists a unique global weak solution to Eq. (4.1). Moreover, there exists a standard Brownian motion \(B\) such that, for all \(F\in \overline{D}(A_{\theta })\),

Proof

First of all, when \(a>1\), the proof is a direct consequence of Propositions 4.2 and 4.1. More generally than relation (4.3), we note that, for any \(a_{1},a_{2}\in \mathbb{R }\),

where \(A^{(i)}\) denotes the infinitesimal generator associated with \(a_{i},\,i\in \{1,2\}\). Using this relation, it is not difficult to see that the Girsanov transformation induces, by localization, a linear bijection between the weak solutions associated with the parameters \(a_{1}\) and \(a_{2}\). Since a unique weak solution exists for every \(a_{2}>1\), we obtain that a unique weak solution exists for every \(a_{1}\le 1\). Therefore, to complete the proof, it suffices to show that there exists a global weak solution. Note that, since uniqueness holds for the martingale problem, any weak solution is a Markov process.

Let \(a_{1}\le 1<a_{2}\), and consider, for \(a_{2}\), a global weak solution \((Z,W)\) on a given filtered probability space \((\Omega ,{\fancyscript{F}},\mathbb{P }_{2})\). We set \(k:=(a_{2}-a_{1})/2\) and, for all \(t\ge s\),

By using the moment inequality (4.12) and the Novikov criterion, we can see that \(\{ D_{t} : s\le t\le s+T\}\) is a martingale for any \(0<T<(a_{2}-1)/k^{2}\). The Girsanov theorem applies, and \(\{B_{t} : s\le t\le s+T\}\) is a standard Brownian motion under the probability measure \(\mathbb{P }_{1}\) defined by the Radon–Nikodym derivative

Moreover, for all \(F\in \overline{D}( A_{\theta }^{(2)})\equiv \overline{D}(A_{\theta }^{(1)})\) and \(s\le t\le s+T\),

Then \(\{(Z_{t},B_{t}) : s\le t\le s+T\}\) is a non-exploding weak solution on the filtered probability space \((\Omega ,{\fancyscript{F}},\mathbb{P }_{1})\). Since the lifetime \(T\) is independent of the initial state \((s,z)\), we deduce from the Markov property that the unique weak solution associated with \(a_{1}\) is global. This completes the proof. \(\square \)
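The restriction \(T<(a_{2}-1)/k^{2}\) can be traced as follows. This sketch rests on two assumptions, since the defining display of \(D_t\) is not reproduced here: first, that \(D_t\) is the stochastic exponential of \(\pm k\int _s^t Z_u\,\mathrm{d}W_u\) with unit diffusion coefficient; second, that (4.12) gives \(\sup _{s\le u\le s+T}\mathbb{E}_2[e^{\beta Z_u^2}]<\infty \) for every \(\beta <(a_2-1)/2\). Jensen's inequality for the probability measure \(\mathrm{d}u/T\) on \([s,s+T]\), followed by Tonelli's theorem, gives

$$\begin{aligned} \mathbb{E}_2\Big[\exp \Big(\tfrac{1}{2}\int _s^{s+T}k^2Z_u^2\,\mathrm{d}u\Big)\Big] =\mathbb{E}_2\Big[\exp \Big(\frac{1}{T}\int _s^{s+T}\tfrac{Tk^2}{2}\,Z_u^2\,\mathrm{d}u\Big)\Big] \le \frac{1}{T}\int _s^{s+T}\mathbb{E}_2\Big[e^{\frac{Tk^2}{2}Z_u^2}\Big]\,\mathrm{d}u . \end{aligned}$$

The right-hand side is finite as soon as \(Tk^2/2<(a_2-1)/2\), that is, \(T<(a_2-1)/k^2\). This is precisely Novikov's condition for \(\{D_t : s\le t\le s+T\}\).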

6 Proof of Theorem 2.2

We first show that it suffices to prove the analogous result, Theorem 6.1, for the more general equivalent SDE (4.6). Thereafter, we prove this theorem.

Let \({\widetilde{\mathbb{P }}}_{s,{\tilde{z}}}(\theta )\) be the distribution of the global weak solution to the SDE (4.6), whose existence is stated in Proposition 6.1, and denote by \({\widetilde{P}}_\theta (s,{\tilde{z}};t,\mathrm{d}x)\) and \({\widetilde{P}}_{s,t}(\theta )\) the associated transition kernels and Markov kernels.

Theorem 6.1

For any \(r\in \mathbb{R }\) and all \(\theta \in \varTheta \), the family \(\{{\widetilde{\mathbb{P }}}_{s,{\tilde{z}}}(\theta ) : s\ge 0,\, {\tilde{z}}\in \mathbb{R }\}\) is strongly Feller continuous. Moreover, the associated time-inhomogeneous semigroups \(\{{\widetilde{P}}_{s,t}(\theta ): t\ge s\ge 0,\,\theta \in \varTheta \}\) satisfy

Besides, \({\widetilde{P}}_\theta (s,{\tilde{z}};t,\mathrm{d}x)\) admits a density \({\tilde{p}}_\theta (s,{\tilde{z}};t,x)\), which is measurable with respect to \((\theta ,s,t,{\tilde{z}},x)\) on \(\varTheta \times \{t>s\ge 0\}\times \mathbb{R }^{2}\), and which satisfies the lower local Aronson estimate: for all \(\theta \in \varTheta ,\,T>0\) and compact set \(C\subset \mathbb{R }\), there exists \(M>0\) such that, for all \(0\le s<t\le T\) and \({\tilde{z}},x \in C\),

Denote by \(\mathbb{P }_{s,z}(\theta )\) the distribution of the unique global weak solution to Eq. (4.1), which is given by Theorem 5.1, and by \(P_\theta (s,z;t,\mathrm{d}x)\) and \( P_{s,t}(\theta )\) the associated transition kernels and Markov kernels. Assume first that \(\{{\widetilde{\mathbb{P }}}_{s,{\tilde{z}}}(\theta ) : s\ge 0,\, {\tilde{z}}\in \mathbb{R }\}\) is strongly Feller continuous. Using Proposition 4.1, one gets that, for every bounded measurable function \(F\) on \([0,\infty )\times \mathbb{R },\,t\ge s\ge 0\) and \(z\in \mathbb{R }\),

Since \(S_\theta \) is continuous on \(\mathbb{R }^2\), we deduce that \(\{\mathbb{P }_{s,z}(\theta ) : s\ge 0,\, z\in \mathbb{R }\}\) is also strongly Feller continuous. Secondly, assume that \(\{{\widetilde{P}}_{s,t}(\theta ) : t\ge s\ge 0\}\) satisfies relations (6.1). We get from (4.5) and Proposition 4.1 that, for every nonnegative function \(F\) on \(\mathbb{R },\,s,t\ge 0\) and \(z\in \mathbb{R }\),

By the Markov property, we obtain relations (2.10). Finally, assume that the transition kernels \({\widetilde{P}}_\theta (s,{\tilde{z}};t,\mathrm{d}x)\) admit a measurable density \({\tilde{p}}_\theta (s,{\tilde{z}};t,x)\) which satisfies the lower local Aronson estimate (6.2). Once again, Proposition 4.1 applies and shows that \({ P}_\theta (s,z;t,\mathrm{d}x)\) admits a density \( p\) such that

Since \(S_\theta \) is a locally Lipschitz function, we deduce that \( p_\theta (s,z;t,x)\) is also measurable and satisfies the lower local Aronson estimate. Consequently, Theorem 6.1 implies Theorem 2.2, and it only remains to prove Theorem 6.1.

Proof of Theorem 6.1

Since Eq. (4.6) is strictly elliptic \((\sigma _\theta >0)\) and has continuous coefficients, it is classical (see, for instance, [48, Corollary 10.1.4]) that its unique weak solution is a strongly Feller continuous diffusion, which admits transition densities \({\tilde{p}}_\theta (s,{\tilde{z}}; t,x)\) measurable with respect to \((s,t,{\tilde{z}},x)\in \{t>s\ge 0\}\times \mathbb{R }^2\) for each \(\theta \in \varTheta \). Moreover, relations (6.1) are direct consequences of the Markov property and of (4.5). It remains to prove the measurability of \(\tilde{p}\) on \(\varTheta \times \{t>s\ge 0\}\times \mathbb{R }^2\) and the lower local Aronson estimate (6.2). Set, for all \({\delta }\ge 0\),

with

Here \({\widetilde{\mathbb{P }}}_{s,{\tilde{z}}}^{({\delta })}(\theta )\) denotes the distribution of the truncated diffusion process whose coefficients are given on \([s,\infty )\times \mathbb{R }\) by

Then the fundamental solution \(\tilde{p}_{\theta }^{({\delta })}\) of the associated partial differential equation (PDE) satisfies the local Aronson estimates. Indeed, although the associated partial differential operator is not in divergence form, it is equivalent to a uniformly elliptic operator in divergence form with bounded coefficients, via the change of scale defined on \([s,\infty )\times \mathbb{R }\) by

Therefore, the results in [3] or [38] apply, and the fundamental solution \({\tilde{q}}_{\theta }^{({\delta })}\) of the associated PDE satisfies the global Aronson estimates. Besides, since

and \(k_{\theta }^{({\delta })}\) is locally Lipschitz, we get that \(\tilde{p}_{\theta }^{({\delta })}\) satisfies the local Aronson estimates.

Then, following exactly the same lines as the proof of [47, Theorem II.1.3] in the time-homogeneous situation, we can prove that the kernel \({\widetilde{P}}_{{\delta },\theta }\) admits a density \(\tilde{p}_{\delta ,\theta }\) such that, for all \(0<\eta <1\), there exists \(M>0\) such that, for all \(0\le s<t\le T,\,|\tilde{z}|\le \eta \delta ,\,|x|\le \eta \delta \) and \(|t-s|\le (\eta \delta )^2\),

Since \(\tilde{p}\ge \tilde{p}_\delta \), we deduce that \(\tilde{p}\) satisfies (6.2) by taking \(\delta \) sufficiently large.
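For orientation, here is the classical one-dimensional form of Aronson's lower bound [3] for the fundamental solution \(\Gamma \) of a uniformly elliptic parabolic equation in divergence form with bounded measurable coefficients. The constant \(M\ge 1\) depends only on the ellipticity and boundedness constants and on the time horizon \(T\). The display (6.2) is not reproduced here, and the bound below is stated only as a reference point for the local version used in the text:

$$\begin{aligned} \Gamma (s,\tilde{z};t,x)\ \ge \ \frac{1}{M\sqrt{t-s}}\,\exp \Big(-\frac{M\,|x-\tilde{z}|^{2}}{t-s}\Big),\qquad 0\le s<t\le T,\ \tilde{z},x\in \mathbb{R }. \end{aligned}$$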

It remains to prove the measurability of \(\tilde{p}\). We shall apply [48, Theorem 11.1.4]. Since \((\theta ,s,x)\longmapsto \sigma _\theta (s,x)\) and \((\theta ,s,x)\longmapsto d_\theta (s,x)\) are continuous on \(\varTheta \times \mathbb{R }^2\), we can see that, for every convergent sequence \(\theta _{n}\longrightarrow \theta \) in \(\varTheta \) and all \(T,R>0\),

We can check that the assumptions of [48, Theorem 11.1.4] are satisfied, and we conclude that, for every convergent sequence \((s_{n},\tilde{z}_{n})\longrightarrow (s,\tilde{z})\) in \([0,\infty )\times \mathbb{R }\) and every bounded continuous function \(G\) on the canonical space \(\Omega \),

We deduce that \((\theta ,s,\tilde{z})\longmapsto {\widetilde{\mathbb{E }}}_{s,\tilde{z}}(\theta )[G]\) is continuous on \(\varTheta \times [0,\infty )\times \mathbb{R }\). In particular, the family of probability measures \(\{{\widetilde{\mathbb{P }}}_{s,\tilde{z}}(\theta ) : s\ge 0,\,\tilde{z}\in \mathbb{R },\,\theta \in \varTheta \}\) is tight, and we claim that, for every bounded measurable function \(F\) on \(\mathbb{R }\), the map \((\theta ,s,\tilde{z},t)\longmapsto {\widetilde{\mathbb{E }}}_{s,\tilde{z}}(\theta )[F(X_t)]\) is measurable on \(\varTheta \times \{ t> s\ge 0\}\times \mathbb{R }\). To prove this, assume first that \(F\) is bounded and \(L\)-Lipschitz. We can write, for every compact set \(K\) of the canonical space \(\Omega \),

By letting \((s,\tilde{z},\theta ,t)\longrightarrow (s_0,\tilde{z}_0,\theta _0,t_0)\) and by using the tightness of the family of probability measures \(\{{\widetilde{\mathbb{P }}}_{s,\tilde{z}}(\theta ) : s\ge 0,\,\tilde{z}\in \mathbb{R },\,\theta \in \varTheta \}\), we get the continuity of this map. The claim then follows by a monotone class argument: the set of bounded functions \(F\) for which the map is measurable is closed under bounded pointwise limits (by dominated convergence) and contains the bounded Lipschitz functions, which generate the Borel \(\sigma \)-field of \(\mathbb{R }\).

Therefore, we can define the measure \(\nu \) on the product measurable space \(\varTheta \times \mathbb{R }^4\) by setting, for all \(B\in {\fancyscript{B}}\) and \(I_1,I_{2},I_{3},I_{4}\in {\fancyscript{B}}(\mathbb{R })\),

By standard results on the disintegration of measures, the Radon–Nikodym derivative of \(\nu \) with respect to \({\fancyscript{W}}(\mathrm{d}\theta )\,\mathrm{d}s\,\mathrm{d}\tilde{z}\,\mathrm{d}t\,\mathrm{d}x\), which is precisely \(\tilde{p}_\theta (s,\tilde{z},t,x)\), is measurable.

7 Preliminaries of Theorems 2.3 and 2.4

7.1 Uniform affine approximations of the environment

In the following, set for all \(\gamma \in (0,1/2)\) and \(\theta \in \varTheta \),

with, for all \(n\ge 0\) and \(x\in \mathbb{R }\),

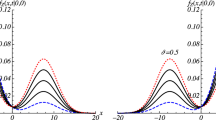

In addition, for all \(\varepsilon >0\), denote by \(A_{\gamma ,\varepsilon }(\theta )\) (see Fig. 1) the piecewise linear approximation of \(\theta \) associated with the subdivision \(S_{\gamma ,\varepsilon }:=\{x_{n,k} : n\in \mathbb{Z },\,0\le k\le m_n\}\), defined by \(m_{n} :=h_{n}^{-1}:=[{L^{{1}/{\gamma }}(n)}{\varepsilon ^{-1}}]+1\in \mathbb{N },\,x_{n,k}:=n + k\, h_{n}\) and \(x_{-n,k}:=-x_{n,k}\). Then introduce the random affine approximation \(W_{\gamma ,\varepsilon }\) defined, for all \(\theta \in \varTheta _\gamma \), by

and set

Proposition 7.1

For all \(\gamma \in (0,1/2)\), the subset \(\varTheta _\gamma \subset \varTheta \) is \((T_t)\)-invariant and of full measure. Furthermore, there exists \(\alpha >0\) such that

Besides, for all \(\varepsilon >0\) and \(\theta \in \varTheta _\gamma \),

Proof

Clearly \(H_\gamma : \varTheta \rightarrow [0,\infty ]\) is a seminorm, and to get inequality (7.5) it suffices to apply Fernique's theorem as presented in [19, Theorem 1.3.2, p. 11]. To this end, we need to check that \({\fancyscript{W}}(H_\gamma <\infty )>0\). By the Hölder continuity of Brownian motion on compact sets, the seminorm defined on \(\varTheta \) by \(N(\theta ):=\Vert \theta ^+\Vert _{\gamma ,1}+\Vert \theta ^-\Vert _{\gamma ,1}\) is finite \({\fancyscript{W}}\)-a.s. Moreover, by Fernique's theorem and the Markov inequality, there exist \(c,\beta >0\) such that, for \(r\) sufficiently large,

Besides, since the random variables \((\theta \mapsto \Vert \theta ^+\Vert _{\gamma ,n}+\Vert \theta ^-\Vert _{\gamma ,n}),\,n\ge 0\), are i.i.d. (again by the Markov property), we get that

Fernique’s theorem applies and we deduce (7.5). The fact that \(\varTheta _\gamma \) is \((T_t)\)-invariant is obtained by noting that, for all \(\theta \in \varTheta \) and \(t\in \mathbb{R }\),

Furthermore, let \(\varepsilon >0,\,n\ge 0\) and \(x,y\in \mathbb{R }\) be such that \(n\le x,y\le n+1\) and \(|y-x|\le h_{n}\), where \(h_n\) denotes the step of the subdivision \(S_{\gamma ,\varepsilon }\) defined in Fig. 1. We can see that

and, when \(|y-x|=h_n\), we get

Therefore, we obtain that

Replacing \(\varepsilon \) by \(\eta _{\gamma ,\varepsilon }(\theta )\), defined in (7.3), in the last two inequalities, we deduce the proposition. \(\square \)
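The subdivision \(S_{\gamma ,\varepsilon }\) and the piecewise linear approximation \(A_{\gamma ,\varepsilon }(\theta )\) can be sketched numerically as follows. This is only an illustration under stated assumptions. The random quantity \(L(n)\) is defined in displays not reproduced here, so it is passed in as a hypothetical callable `L`. The two-sided Brownian environment is simulated on a fine uniform grid, and its values at the nodes \(x_{n,k}\) are read off that grid by linear interpolation. The helper names are hypothetical.

```python
import math
import numpy as np

def subdivision(L, gamma, eps, n_max):
    """Sorted points of S_{gamma,eps} lying in [-(n_max + 1), n_max + 1].

    L is a hypothetical callable n -> L(n) > 0 standing in for the random
    quantity L(n) of the text (its definition is not reproduced here)."""
    blocks = []
    for n in range(n_max + 1):
        m = math.floor(L(n) ** (1.0 / gamma) / eps) + 1  # m_n = [L^{1/gamma}(n) / eps] + 1
        block = n + np.arange(m + 1) / m                 # x_{n,k} = n + k h_n, h_n = 1/m_n
        blocks.extend([block, -block])                   # x_{-n,k} = -x_{n,k}
    return np.unique(np.concatenate(blocks))             # sort, merge shared endpoints

def two_sided_brownian(x_max, dx, rng):
    """Two-sided Brownian path on the grid dx * {-k, ..., k}, pinned at theta(0) = 0."""
    k = int(round(x_max / dx))
    steps = rng.normal(0.0, math.sqrt(dx), size=(2, k))
    right = np.concatenate([[0.0], np.cumsum(steps[0])])  # theta(j dx),  j = 0..k
    left = np.concatenate([[0.0], np.cumsum(steps[1])])   # theta(-j dx), j = 0..k
    grid = dx * np.arange(-k, k + 1)
    return grid, np.concatenate([left[:0:-1], right])

def affine_approximation(grid, values, pts):
    """A_{gamma,eps}(theta): linear interpolation of theta between consecutive nodes."""
    nodes = np.interp(pts, grid, values)       # theta at the nodes x_{n,k}
    return lambda x: np.interp(x, pts, nodes)

rng = np.random.default_rng(0)
grid, theta = two_sided_brownian(x_max=4.0, dx=1e-4, rng=rng)
pts = subdivision(lambda n: 1.0 + 0.1 * n, gamma=0.4, eps=0.5, n_max=3)
A = affine_approximation(grid, theta, pts)
print("sup-distance on [-4, 4]:", np.max(np.abs(A(grid) - theta)))
```

Decreasing \(\varepsilon \) increases every \(m_n\), so the step \(h_n=1/m_n\) shrinks on each unit interval. This is what makes the approximation uniform in Proposition 7.1.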

7.2 Random Foster–Lyapunov drift conditions

7.2.1 For the infinitesimal generators

Let \(\varphi \) be a twice continuously differentiable function from \([1,\infty )\) into itself such that \(\varphi (v)=1\) on \([1,2],\,\varphi (v)=v\) on \([3,\infty )\) and \(\varphi (v)\le v\) on \([1,\infty )\). In the sequel, we set

and

Here we use \(G_\alpha =\varphi (V_\alpha )\) in (7.8) instead of \(V_\alpha \) because \(V_\alpha ^\prime \) does not belong to \(\mathrm{W}^{1,\infty }_{loc}\) (it has a singularity at \(0\)), unlike \(U_\alpha ^\prime \) in (7.7).
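The text does not specify a concrete \(\varphi \). One possible choice, given here only as an assumption, uses the quintic smoothstep \(s(t)=6t^5-15t^4+10t^3\) clamped to \([0,1]\). This function is twice continuously differentiable on \(\mathbb{R }\) because \(s'(t)=30t^2(t-1)^2\) and \(s''(t)=60t(t-1)(2t-1)\) vanish at \(t=0\) and \(t=1\). Set \(\varphi (v):=1+(v-1)\,s(v-2)\). Then \(\varphi =1\) on \([1,2]\), \(\varphi (v)=v\) on \([3,\infty )\), and \(1\le \varphi (v)\le v\) because \(0\le s\le 1\). The function names below are hypothetical.

```python
import numpy as np

def smoothstep5(t):
    """Quintic smoothstep, clamped: 0 for t <= 0, 1 for t >= 1, C^2 on the real line."""
    t = np.clip(t, 0.0, 1.0)
    return t**3 * (10.0 - 15.0 * t + 6.0 * t**2)

def phi(v):
    """Hypothetical C^2 choice of phi on [1, inf): 1 on [1, 2], v on [3, inf), 1 <= phi <= v."""
    v = np.asarray(v, dtype=float)
    return 1.0 + (v - 1.0) * smoothstep5(v - 2.0)

v = np.linspace(1.0, 6.0, 11)
print(np.round(phi(v), 4))
```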

Lemma 7.1

For all \(r\in \mathbb{R },\,\alpha \in (0,1),\,\gamma \in (0,1/2),\,T>0\) and \(\lambda >0\), there exists \(\overline{\varepsilon }>0\) such that, for all \(0<\varepsilon <\overline{\varepsilon }\), there exist a random variable \(B: \varTheta \longrightarrow [1,\infty )\) and \(p,k,c>0\) such that, for all \(\theta \in \varTheta _\gamma ,\,0\le t\le T\) and \(x\in \mathbb{R }\),

Proof

The proof will be a consequence of the following two steps.

Step 1

For all \(0<\delta <1\) and \(R\ge 1\), there exists \(\varepsilon _1>0\) such that, for all \(0<\varepsilon <\varepsilon _1\) and \(0<\ell <1\), there exist a map \(R_1: \varTheta \longrightarrow [R,\infty )\) and \(c_1>0\) such that, for all \(\theta \in \varTheta _\gamma ,\,0\le t\le T\) and \(|x|\ge R_{1}(\theta )\),

First of all, by using the chain rule (4.10) with \(a=1\), we obtain that

which can be written

Moreover, we can see that

Recall that \(D_{\gamma ,\varepsilon }\) is defined in (7.4). In order to simplify our calculations, introduce

Note that

Besides, we can choose \(\varepsilon _1>0\) and \(D\ge R\) such that

Then we deduce the left-hand side of (7.10) by using (7.16), (7.15), (7.13), (7.12) and by setting, for any \(0< \varepsilon < \varepsilon _1\),

Furthermore, the right-hand side of (7.10) is obtained by using the right-hand side of (7.6) and by choosing \(c_1\) sufficiently large.

Step 2

For all \(0<\delta <1\) and \(R\ge 1\), there exists \(\varepsilon _2>0\) such that, for all \(0<\varepsilon <\varepsilon _2\), there exists a constant \(R_2\ge R\) such that, for all \(\theta \in \varTheta _\gamma ,\,0\le t\le T\) and \(|x|\ge R_{2}\),

By using the chain rule (4.11), we get that

Integrating by parts the third term on the right-hand side of (7.19), we can write

Besides, by using (7.15) and the left-hand side of (7.6), we can see that

and

We deduce from the two previous inequalities, (7.20) and (7.15) that

with \(\kappa :=|r|+{1}/{4}\). Inequality (7.18) is then a simple consequence of (7.21) by taking \(\varepsilon _2>0\) and \(R_2\ge R\) such that, for all \(x\ge R_2\),

Proof of Lemma 7.1

We deduce Lemma 7.1 from (7.18) and (7.10). Indeed, we can choose \(0<\delta < 1\) and \(R\ge 1\) such that

Then we get the left-hand side of (7.9) by using (7.22) and by setting \(\overline{\varepsilon }:=\varepsilon _1\wedge \varepsilon _2\) and

Moreover, by using inequalities (7.21), (7.15), (7.13) and (7.11), we can see that there exists \(C>0\) such that

We obtain the right-hand side of (7.9) by taking \(p:=2/(\gamma (1-\ell ))\) and \(k,c\) sufficiently large, and by using (7.24), (7.23) and the right-hand sides of (7.10) and (7.6). \(\square \)

Lemma 7.2

For all \(r\in \mathbb{R },\,\alpha \in (0,1),\,\gamma \in (\alpha /2,1/2),\,T>0,\,\varepsilon >0\) and \(\lambda >0\), there exist a random variable \(B : \varTheta \longrightarrow [1,\infty ),\,k,c>0\) and \(0<p<2\) such that, for all \(\theta \in \varTheta _\gamma ,\,0\le t\le T\) and \(x\in \mathbb{R }\),

Proof

This proof uses ideas similar to those of the proof of Lemma 7.1, and we only give the main lines. Once again, the proof will be a consequence of the following two steps.

Step 1

For all \(0<\delta <1,\,R\ge 1\) and \(0<\ell <1\), there exist \(R_1 : \varTheta \longrightarrow [R,\infty )\) and \(c_1>0\) such that, for all \(\theta \in \varTheta _\gamma ,\,0\le t\le T\) and \(|x|\ge R_1(\theta )\),

By using the chain rule (4.10) with \(a=1\), we can see that, for all \(x\in \{V_\alpha > 3\}\),

Moreover, we can choose \(D\ge 1\) such that \(\{V_\alpha \le 3\}\subset [-D,D]\) and

Then by setting \(R_1\) as in (7.17) we can deduce (7.26).

Step 2

For all \(\delta >0\) and \(R\ge 1\), there exists a constant \(R_2\ge R\) such that, for all \(\theta \in \varTheta _\gamma ,\,0\le t\le T\) and \(|x|\ge R_2\),

By using the chain rule (4.11), we can see that, for all \(x\in \{V_{\alpha }>3\}\),

Then we can obtain (7.27) by using methods similar to those in the proof of (7.18).

Proof of Lemma 7.2

We deduce Lemma 7.2 from (7.27) and (7.26) in the same manner as we get Lemma 7.1 from (7.18) and (7.10). The main variation is that we need to choose \(0<\ell <1\) in (7.26) such that \(p:=\alpha /(\gamma (1-\ell ))<2\). \(\square \)

7.2.2 For the Markov kernels

Proposition 7.2

For all \(r\in \mathbb{R },\,\alpha \in (0,1),\,\gamma \in (0,1/2)\) and \(\eta ,\tau ,T>0\), there exist a random variable \(B : \varTheta \longrightarrow [1,\infty )\) and \(k,c,p>0\) such that, for all \(\kappa >0,\,\theta \in \varTheta _\gamma ,\,0\le s\le t\le T\) and \(x\in \mathbb{R }\),

with

Proof

Let \(\lambda >e^q\) and \(0<\overline{\varepsilon }<1\) be as in Lemma 7.1, and let \(0<\varepsilon <\overline{\varepsilon }\) be such that \(e^{-\lambda \tau + 2q}\le \eta \) and \(e^{2q\varepsilon }\le \eta +1\), where \(q\) is defined in (7.14). Using Itô's formula (2.8), one can see that there exists a Brownian motion \(W\) such that, under \(\mathbb{P }_{s,x}\),

Besides, we get from Lemma 7.1 that there exist a random variable \(B : \varTheta \longrightarrow [1,\infty ),\,k,c,p>0\) such that, for all \(\theta \in \varTheta _\gamma ,\,0\le s\le t\le T\) and \(x\in \mathbb{R }\),

Then one can see by taking the expectation in (7.30) and by using (7.15) that, for all \(\theta \in \varTheta _\gamma ,\,0\le s \le t\le T\) and \(x\in \mathbb{R }\),

and we deduce that inequalities (7.28) and (7.29) hold for any \(\kappa >0\). \(\square \)
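Passing from a generator inequality to a bound on the Markov kernels follows a standard pattern. It is recalled here as a sketch, since the precise inequalities (7.9), (7.28) and (7.29) are not reproduced. Suppose that a nonnegative function \(U\) satisfies \((\partial _t+\mathcal{L}_t)U\le -\lambda U+b\) on \([s,T]\times \mathbb{R }\) for some constants \(\lambda ,b>0\), where \(\mathcal{L}_t\) (notation introduced only here) denotes the generator at time \(t\). By Itô's formula, the drift of \(e^{\lambda t}U(t,X_t)\) is \(e^{\lambda t}\big(\lambda U+(\partial _t+\mathcal{L}_t)U\big)\le b\,e^{\lambda t}\). Localization and Fatou's lemma (using non-explosion) then give

$$\begin{aligned} \mathbb{E}_{s,x}\big[e^{\lambda t}U(t,X_t)\big]\le e^{\lambda s}U(s,x)+b\int _s^t e^{\lambda r}\,\mathrm{d}r \quad \Longrightarrow \quad \mathbb{E}_{s,x}\big[U(t,X_t)\big]\le e^{-\lambda (t-s)}\,U(s,x)+\frac{b}{\lambda }\big(1-e^{-\lambda (t-s)}\big), \end{aligned}$$

which holds for \(s\le t\le T\). The contraction factor \(e^{-\lambda (t-s)}\) and the additive constant \(b/\lambda \) are the two ingredients that a drift condition contributes to a kernel bound.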

Proposition 7.3

For all \(r\in \mathbb{R },\,\alpha \in (0,1),\,\gamma \in (\alpha /2,1/2)\) and \(\eta ,\tau ,T>0\), there exist a random variable \(B : \varTheta \longrightarrow [1,\infty ),\,k,c>0\) and \(0<p<2\) such that, for all \(\kappa >0,\,\theta \in \varTheta _\gamma ,\,0\le s\le t\le T\) and \(x\in \mathbb{R }\),

with

Proof

The proof follows the same lines as that of Proposition 7.2, and we only give the main ideas. Once again, by using Itô's formula and Lemma 7.2, we can prove that there exist a random variable \(B: \varTheta \longrightarrow [0,\infty ),\,k,c>0\) and \(0<p<2\) such that, for all \(\theta \in \varTheta _\gamma ,\,0\le s\le t\le T\) and \(x\in \mathbb{R }\),

Moreover, since \(G_\alpha \le V_\alpha \) and \(G_\alpha (x)=V_\alpha (x)\) for \(x\in \{V_\alpha \ge 3\}\), we obtain that

This is enough to complete the proof. \(\square \)

7.3 Coupling method

7.3.1 Coupling construction

We say that \(C\) is a random \((1,\varepsilon )\)-coupling set associated to the random Markov kernel \(P\) and the random probability measure \(\nu \) over \((\varTheta ,{\fancyscript{B}},{\fancyscript{W}})\) on \(\mathbb{R }\), if \(\varepsilon : \varTheta \longrightarrow (0,1/2]\) is a measurable map, \(C_\theta \) is a compact set of \(\mathbb{R }\) for \({\fancyscript{W}}\)-almost all \(\theta \in \varTheta \) and

Given a random \((1,\varepsilon )\)-coupling set \(C\) associated to the random probability measure \(\nu \), we construct a random Markov kernel \(P^\star \) on \(\mathbb{R }\times \mathbb{R }\) as follows. Let \(\overline{R}\) and \( \overline{P}\) be two random Markov kernels on \(\mathbb{R }\times \mathbb{R }\) satisfying, for all \(x,y\in C_{\theta }\) and \(A, B\in {\fancyscript{B}}(\mathbb{R })\),

and

Note that we can assume that \(\overline{P}\) is a random coupling Markov kernel over \(P\), in the sense that, for all \(\theta \in \varTheta ,\,x,y\in \mathbb{R }\) and \(A\in {\fancyscript{B}}(\mathbb{R })\),

Then we define,

7.3.2 The Douc–Moulines–Rosenthal bound

In order to simplify our claims, we set

Moreover, we denote for any function \(F : \varTheta \longrightarrow (0,\infty ),\,n\in \mathbb{N }\) and \(j\in \{0,\cdots ,n\}\),

Proposition 7.4

For all \(r\in \mathbb{R },\,\alpha \in (0,1),\,\gamma \in (0,1/2)\) and \(\rho \in (0,\infty )\), there exist a random variable \(B: \varTheta \longrightarrow [1,\infty )\), with \(\log (B)\in \mathrm{L}^1(\varTheta ,{\fancyscript{B}},{\fancyscript{W}})\), and a random \((1,\varepsilon )\)-coupling set \(C\) over \((\varTheta ,{\fancyscript{B}},{\fancyscript{W}})\) on \(\mathbb{R }\) such that, for all \(\theta \in \varTheta _\gamma \),

Moreover, for all \(n\in \mathbb{N },\,j\in \{1,\cdots ,n+1\}\) and \(\nu _1,\nu _2\in {\fancyscript{M}}_1\),

Proof

Let \(\eta \) and \(\kappa \) be two positive constants such that \(\rho =\eta +2\kappa \), and use Proposition 7.2 to obtain \({\widetilde{B}}:\varTheta \longrightarrow [1,\infty )\) and \(k,c,p>0\) such that, for all \(\theta \in \varTheta _\gamma \),

The same arguments as in the proof of [17, Proposition 11, p. 1660] apply. Indeed, we can write, for any random Markov kernel \(\overline{P}\) satisfying (7.34),

Since \({\widetilde{B}}_{\theta }\le 2 \kappa \overline{U}_{\alpha }\) on \(C_{\theta }^{\mathtt{c}}\times C_{\theta }\) and \(C_{\theta }\times C_{\theta }^{\mathtt{c}}\), we obtain from the last inequality that

Then we deduce that (7.37) is satisfied by setting \(B_\theta :=({(\rho \kappa ^{-1}{\widetilde{B}}_\theta + {\widetilde{B}}_\theta )}{\rho ^{-1}})\vee {\widetilde{B}}_\theta \) and by using (7.39), (7.35) and (7.33). Besides, \(\log ({\widetilde{B}})\in \mathrm{L}^1(\varTheta ,{\fancyscript{B}},{\fancyscript{W}})\) by (7.5), and hence also \(\log (B)\in \mathrm{L}^1(\varTheta ,{\fancyscript{B}},{\fancyscript{W}})\). Moreover, for all \(\theta \in \varTheta _{\gamma }\), \(C_{\theta }\) is a compact set, and we get from the lower local Aronson estimate (2.11) that \(C\) is a random \((1,\varepsilon )\)-coupling set associated with the random distribution \(\nu \) defined, for all \(\theta \in \varTheta _{\gamma }\) and \(A\in {\fancyscript{B}}(\mathbb{R })\), by

Furthermore, we can write by using (2.10) that

and therefore, a direct application of [17, Theorem 8, p. 1656] gives (7.38). \(\square \)
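The splitting construction of Sect. 7.3.1 can be illustrated on a finite state space. The sketch below assumes the classical form of the kernels from [17]. On \(C\times C\), with probability \(\varepsilon \) both coordinates jump to a common point drawn from \(\nu \); otherwise they move independently according to the residual kernel \((P-\varepsilon \nu )/(1-\varepsilon )\). Off \(C\times C\), the sketch uses the independent coupling \(P\otimes P\), which is only one admissible choice of \(\overline{P}\). The defining displays of the text are not reproduced here, and the function name `coupled_kernel` is hypothetical. The checks confirm the two properties that matter: each coordinate of the coupled chain is a \(P\)-chain, and from \(C\times C\) the coordinates coalesce with probability at least \(\varepsilon \).

```python
import numpy as np

def coupled_kernel(P, nu, eps, C):
    """Transition matrix of the coupled chain on pairs (x, y), indexed by x * n + y."""
    n = P.shape[0]
    in_C = np.zeros(n, dtype=bool)
    in_C[list(C)] = True
    # (1, eps)-coupling set condition: P(x, .) >= eps * nu(.) for every x in C
    assert np.all(P[in_C] >= eps * nu - 1e-12), "minorization fails on C"
    R = (P - eps * nu) / (1.0 - eps)        # residual kernel (a probability only on rows in C)
    diag = np.arange(n) * n + np.arange(n)  # indices of the pairs (z, z)
    K = np.zeros((n * n, n * n))
    for x in range(n):
        for y in range(n):
            row = x * n + y
            if in_C[x] and in_C[y]:
                K[row, diag] += eps * nu                               # common jump to z ~ nu
                K[row] += (1.0 - eps) * np.outer(R[x], R[y]).ravel()   # independent residual moves
            else:
                K[row] = np.outer(P[x], P[y]).ravel()                  # independent coupling off C x C
    return K

P = np.array([[0.5, 0.3, 0.2],
              [0.4, 0.4, 0.2],
              [0.1, 0.2, 0.7]])
C = {0, 1}
row_min = P[[0, 1]].min(axis=0)
nu = row_min / row_min.sum()       # normalized minimum of the rows in C
K = coupled_kernel(P, nu, eps=0.5, C=C)
K4 = K.reshape(3, 3, 3, 3)         # K4[x, y, x', y']
```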

7.4 Ergodicity and exponential stability of the RDS

7.4.1 Ergodicity

Proposition 7.5

The dynamical system \((\varTheta ,{\fancyscript{B}},{\fancyscript{W}},(T_t)_{t\in \mathbb{R }})\) is ergodic.

Proof

Introduce three measurable maps \(U^\pm : \varTheta \longrightarrow \varTheta \) and \(S_t : \varTheta \longrightarrow \varTheta \) defined by

It is classical that the distribution of \(U^\pm \) under the Wiener measure \(\fancyscript{W}\), denoted by \(\varGamma \), is the distribution of the stationary Ornstein–Uhlenbeck process whose stationary distribution is the standard normal distribution. This process is ergodic and, as a consequence, the dynamical system \((\varTheta ,\fancyscript{B},\varGamma , (S_t)_{t\in \mathbb{R }})\) is ergodic (see, for instance, [25, Theorem 20.10]). Besides, it is clear that the following diagram is commutative:

Let \(A\in \fancyscript{B}\) be such that \(T^{-1}_t (A) =A\), with \(t\ne 0\). By using the ergodicity of the dynamical system \((\varTheta ,\fancyscript{B},\varGamma , (S_t)_{t\in \mathbb{R }})\), it follows that

Moreover, we can see that

We conclude that \({\fancyscript{W}}(A)\in \{0,1\}\), which completes the proof. \(\square \)
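The identification of \(\varGamma \) can be checked by a covariance computation. The defining display of \(U^\pm \) and \(S_t\) and the commutative diagram are not reproduced here. The following sketch therefore assumes the classical representation \(U^\pm (\theta )(u)=e^{-u/2}\theta (\pm e^{u})\), \(u\in \mathbb{R }\), and, for the second line only, the Brownian scaling flow \(T_t\theta (x)=e^{-t/2}\theta (e^{t}x)\). For a standard two-sided Brownian motion \(\theta \) and \(u,v,t\in \mathbb{R }\),

$$\begin{aligned} \mathbb{E}_{\fancyscript{W}}\big[U^{\pm }(\theta )(u)\,U^{\pm }(\theta )(v)\big] &=e^{-(u+v)/2}\,\min \big(e^{u},e^{v}\big)=e^{-|u-v|/2},\\ U^{\pm }(T_t\theta )(u)&=e^{-u/2}\,e^{-t/2}\,\theta \big(\pm e^{t+u}\big)=U^{\pm }(\theta )(u+t). \end{aligned}$$

The first line is the covariance of the centred stationary Gaussian Ornstein–Uhlenbeck process with standard normal marginals. The second line is an intertwining \(U^\pm \circ T_t=S_t\circ U^\pm \) with the shift \((S_t\omega )(u)=\omega (u+t)\), which is the kind of commutation the diagram expresses.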

7.4.2 Exponential stability

Lemma 7.3

Assume that \(r=0\). Let \(F\) be such that \((\log (F)\vee 0)\in \mathrm{L}^{1}(\varTheta ,{\fancyscript{B}},{\fancyscript{W}})\) and \(F^{\pm }\) as in (7.36).

1. If \({\fancyscript{W}}(F<1)=1\) then, for all \(L\ge 1\), there exists \(\lambda >0\) such that

$$\begin{aligned} \limsup _{n\rightarrow \infty }\,e^{\lambda n}\,{F_{\left[\frac{n}{L}\right],n}^\pm }(\theta )=0\quad {\fancyscript{W}}\text{-a.s.} \end{aligned}\tag{7.40}$$

2. If \({\fancyscript{W}}(F\ge 1)>0\) then, for all \(\eta >0\), there exists \(L>0\) such that

$$\begin{aligned} \limsup _{n\rightarrow \infty }\,{e^{-\eta n}}\,{F_{\left[\frac{n}{L}\right],n}^\pm (\theta )}=0\quad {\fancyscript{W}}\text{-a.s.} \end{aligned}\tag{7.41}$$
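The first point of Lemma 7.3 rests on a simple mechanism: products along a stationary ergodic orbit of factors \(F<1\) decay exponentially, by the ergodic theorem applied to \(\log F\). The toy sketch below makes two illustrative choices. It replaces \((\varTheta ,{\fancyscript{W}},T)\) by an i.i.d. sequence (a Bernoulli shift, which is ergodic) with \(F\) uniform on \([0.2,0.9]\). It also assumes, since (7.36) is not reproduced here, that \(F^{+}_{j,n}(\theta )\) is the product \(\prod _{k=j}^{n}F(T^{k}\theta )\). The computation is done with logarithms to avoid underflow.

```python
import numpy as np

def log_product(F_values, j, n):
    """log of prod_{k=j}^{n} F(T^k theta) along the sampled orbit (hypothetical form of F^+_{j,n})."""
    return np.sum(np.log(F_values[j:n + 1]))

rng = np.random.default_rng(1)
a, b = 0.2, 0.9
F_orbit = rng.uniform(a, b, size=200_001)  # F(T^k theta), k = 0, 1, ..., i.i.d. toy environment

# E[log F] for F uniform on [a, b], from the antiderivative x log x - x
mean_log = ((b * np.log(b) - b) - (a * np.log(a) - a)) / (b - a)

L, n = 2, 200_000
rate = log_product(F_orbit, n // L, n) / n
# empirical exponential rate versus its ergodic-theorem limit (1 - 1/L) * E[log F]
print(rate, (1 - 1 / L) * mean_log)
```

Any \(0<\lambda <(1-1/L)\,|\mathbb{E}[\log F]|\) then gives \(e^{\lambda n}F^{+}_{[n/L],n}(\theta )\rightarrow 0\) along this orbit, which mirrors (7.40).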

Proof

We prove the lemma only for \(F^+\), since the proof for \(F^-\) is obtained by replacing \(\theta \) by \(T^{-1}\theta \) and \(T\) by \(T^{-1}\). We set, for all \(c\ge 0\) and \(k\ge 1\),

Assume that \({\fancyscript{W}}(F<1)=1\). We can see that there exist \(0<c<1\) and \(\ell >0\) such that

By applying the ergodic theorem to the ergodic dynamical system \((\varTheta ,\fancyscript{B},\fancyscript{W},T)\), we obtain that, for \({\fancyscript{W}}\)-almost all \(\theta \in \varTheta \) and all sufficiently large integers \(n\),

Then we deduce the first point by taking \(0<\lambda <\ell \). Now assume that \({\fancyscript{W}}(F\ge 1)>0\). Note that if \(F\) is bounded \({\fancyscript{W}}\)-a.s., the second point of the lemma is obvious. Moreover, when \(F\) is unbounded with positive probability, it is not difficult to see that there exist \(0<\kappa <\eta ,\,c\ge 1\) and \(L\ge 1\) such that

Once again, the ergodic theorem allows us to obtain the second point since, for \(\fancyscript{W}\)-almost all \(\theta \in \varTheta \) and all sufficiently large integers \(n\),

Proposition 7.6

Assume that \(r=0\). For all \(\alpha \in (0,1)\) there exists \(\lambda >0\) such that, for all families \(\{\nu _t^\pm : t\ge 0\}\) of random distributions on \(\mathbb{R }\) over \((\varTheta ,{\fancyscript{B}},{\fancyscript{W}})\) satisfying

the following discrete-time convergences hold:

and

Proof

We prove only (7.44), since the proof of (7.43) follows the same lines and employs the same arguments. Let \(0<\rho <1\) and, following Proposition 7.4, write, for all \(\theta \in \varTheta _\gamma ,\,t\ge 0\) and \(j\in \{0,\ldots ,[t]+1\}\),

Since \(\log B\in \mathrm{L}^1(\varTheta ,{\fancyscript{B}},{\fancyscript{W}})\), the ergodic theorem allows us to see that, for all \(\eta >0\),

Besides, one can see by using Lemma 7.3 that there exist \(L\ge 1\) and \(\ell >0\) such that

Therefore, we deduce from (7.45) the exponential convergence (7.44). \(\square \)
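The use of the ergodic theorem for \(\log B\) in the proof above rests on the following standard observation, recorded here as a sketch. The omitted display presumably expresses this subexponential growth. Set \(S_n(\theta ):=\sum _{k=0}^{n-1}\log B(T^k\theta )\). Since \(\log B\in \mathrm{L}^1(\varTheta ,{\fancyscript{B}},{\fancyscript{W}})\), the ergodic theorem gives \(S_n/n\longrightarrow \mathbb{E}_{\fancyscript{W}}[\log B]\) \({\fancyscript{W}}\)-a.s., and hence

$$\begin{aligned} \frac{\log B(T^{n}\theta )}{n}=\frac{n+1}{n}\,\frac{S_{n+1}(\theta )}{n+1}-\frac{S_{n}(\theta )}{n}\longrightarrow \mathbb{E}_{\fancyscript{W}}[\log B]-\mathbb{E}_{\fancyscript{W}}[\log B]=0\quad (n\rightarrow \infty ),\quad {\fancyscript{W}}\text{-a.s.} \end{aligned}$$

Consequently, for every \(\eta >0\), \(B(T^{n}\theta )\le e^{\eta n}\) for all sufficiently large \(n\). The same argument applies along \(T^{-1}\).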

8 Proof of Theorem 2.3

Theorem 2.3 will be a consequence of Propositions 8.1 and 8.2. In the sequel, we introduce, for any operator \(P\) acting on \({\fancyscript{M}}_{F},\,F\in \{U_\alpha ,V_{\alpha }\}\), the subordinate norm

8.1 Exponential weak ergodicity and quasi-invariant measure

Proposition 8.1

Assume that \(r=0\). For all \(\alpha \in (0,1)\) there exists \(\lambda >0\) such that, for all \(\nu _1,\nu _2\in {\fancyscript{M}}_{1,U_\alpha }\),

Furthermore, there exists a unique (up to a \(\fancyscript{W}\)-null set) random probability measure \(\mu \) over \((\varTheta ,{\fancyscript{B}},{\fancyscript{W}})\) on \(\mathbb{R }\) such that, for all \(\alpha \in (0,1)\) there exists \(\lambda >0\) such that, for all \(\nu \in {\fancyscript{M}}_{1,U_\alpha }\),

Moreover, for all \(t\ge 0\),

Proof

By using relation (2.12) we can write \(P_t(\theta )=P_{[t]}(\theta )P_{\{t\}}(T^{[t]}\theta )\) and we get

Moreover, by using Propositions 7.2 and 7.1 and the ergodic theorem, we obtain

Besides, a direct application of Proposition 7.6 gives that there exists \(\lambda >0\), independent of \(\nu _1\) and \(\nu _2\), such that

We deduce inequality (8.1) from (8.6), (8.5) and (8.4). Furthermore, one can see by using again Propositions 7.6, 7.2 and 7.1 and similar arguments that

We obtain that, for \(\fancyscript{W}\)-almost all \(\theta \in \varTheta ,\,\{\nu P_{n}(T^{-n}\theta ) : n\ge 0\}\) is a Cauchy sequence in the Banach space \({\fancyscript{M}}_{U_\alpha }\). Hence there exist \(\lambda >0\) and a random probability measure \(\mu _\theta \in {\fancyscript{M}}_{U_\alpha }\) such that, for all \(\nu \in {\fancyscript{M}}_{1,U_\alpha }\),

We deduce (8.2) from (8.7) in the same way as we obtained (8.1) from (8.6). Finally, (8.3) is a consequence of (8.2) and the cocycle property since

8.2 Annealed convergences

Proposition 8.2

For all \(\alpha \in (0,1)\) and \(\hat{\nu }\in {\fancyscript{M}}_{1,V_\alpha }\),

Proof

Let \(0<\rho <1\) and apply Proposition 7.3 to see that, for all \(0\le u\le 1\),

We get from the latter inequality and (8.3) that \(\mu _{T\theta }(V_\alpha ) \le \rho \mu _\theta (V_\alpha ) + B_\theta \) \({\fancyscript{W}}\)-a.s. and, by taking the expectation of the last inequality, we obtain the left-hand side of (8.8). Besides, since the Wiener measure is \((T_{t})\)-invariant, we can see that

Moreover, the relation (8.3) and the cocycle property (2.12) allow us to write

Then arguments similar to those in the proofs of (8.2) and (8.1) show that

Furthermore, by using (8.9) and the cocycle property, it is not difficult to see that

Noting that the two previous bounds belong to \(\mathrm{L}^1(\varTheta ,{\fancyscript{B}},{\fancyscript{W}})\) (see Proposition 7.3) and are independent of \(t\ge 0\), we can apply the dominated convergence theorem and deduce from (8.11) and (8.10) the right-hand side of (8.8). \(\square \)
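The construction of \(\mu \) in Proposition 8.1, as the limit of the pullback sequence \(\nu P_{n}(T^{-n}\theta )\), can be mimicked with random stochastic matrices. The sketch below is a toy illustration under assumptions and not the diffusion setting of the text. The environment is a two-sided i.i.d. sequence of strictly positive \(3\times 3\) stochastic matrices \(Q_k\), the shift \(T\) acts by \(k\mapsto k+1\), and \(P_n(\theta ):=Q_0Q_1\cdots Q_{n-1}\). With these choices the cocycle property \(P_{n+m}(\theta )=P_n(\theta )P_m(T^n\theta )\) holds by construction. The helper name `pullback` is hypothetical. The pullback \(\nu P_n(T^{-n}\theta )=\nu Q_{-n}\cdots Q_{-1}\) forgets \(\nu \) exponentially fast, and its limit satisfies the analogue \(\mu _\theta P_1(\theta )=\mu _{T\theta }\) of (8.3).

```python
import numpy as np

rng = np.random.default_rng(2)
N = 60  # environment indices k = -N, ..., N - 1

# Q[k + N] is the matrix Q_k; its rows are Dirichlet(1, 1, 1), hence strictly positive a.s.
Q = rng.dirichlet(np.ones(3), size=(2 * N, 3))

def pullback(nu, shift, n):
    """nu P_n(T^{shift - n} theta) = nu Q_{shift-n} ... Q_{shift-1} in the toy cocycle."""
    out = np.asarray(nu, dtype=float)
    for k in range(shift - n, shift):
        out = out @ Q[k + N]
    return out

mu0 = pullback([1.0, 0.0, 0.0], shift=0, n=50)        # approximates mu_theta
mu0_other = pullback([0.0, 0.0, 1.0], shift=0, n=50)  # same target, other initial law
mu1 = pullback([0.0, 1.0, 0.0], shift=1, n=40)        # approximates mu_{T theta}
print(np.abs(mu0 - mu0_other).sum(), np.abs(mu0 @ Q[N] - mu1).sum())
```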

9 Proof of Theorem 2.4

Recall that under \(\mathbb{P }_z(\theta )\) (see Proposition 4.1) \(\{S_\theta (t,X_t) : t\ge 0 \}\) is a solution of the SDE (4.6), with \(a=1\). Moreover, since \(r>0\), we can see by using (4.5) that

uniformly on compact sets. Denote by \(\varGamma \) the standard normal distribution. Following [21, Lemma 4.5], \(\{S_{\theta }(t,X_{t}) : t\ge 0\}\) is asymptotically time-homogeneous and \(S_{*}\varGamma \)-ergodic. According to the cited lemma, if, in addition, \(\{S_{\theta }(t,X_{t}) : t \ge 0\}\) is bounded in probability, then it converges in distribution towards \(S_{*}\varGamma \):

We shall prove that \(\{X_t : t\ge 0\}\) is bounded in probability, which will imply the boundedness in probability of \(\{S_{\theta }(t,X_t) : t\ge 0\}\). By Proposition 7.2, we can find \(0<\rho <1,\,L>0,\,B:\varTheta \longrightarrow [1,\infty )\) and \(k,c,p>0\) such that, for all \(0\le u\le 1\),

Then relations (2.10) and the ergodic theorem allow us to write that, for all \(t\ge 0\),

Then the Markov inequality implies that

Therefore, we get that \(\{X_t : t\ge 0\}\) is bounded in probability and since

we also obtain the boundedness in probability of \(\{S_{\theta }(t,X_t) : t\ge 0\}\). Hence [21, Lemma 4.5] applies, which completes the proof. \(\square \)

References

Arnold, L.: Random dynamical systems. Springer Monographs in Mathematics. Springer, Berlin (1998)

Arnold, L., Gundlach, V.M., Demetrius, L.: Evolutionary formalism for products of positive random matrices. Ann. Appl. Probab. 4(3), 859–901 (1994). http://projecteuclid.org/DPubS?service=UI&version=1.0&verb=Display&handle=euclid.aoap/1177004975

Aronson, D.G.: Non-negative solutions of linear parabolic equations. Ann. Scuola Norm. Sup. Pisa (3) 22, 607–694 (1968)

Avena, L., den Hollander, F., Redig, F.: Law of large numbers for a class of random walks in dynamic random environments. Electron. J. Probab. 16(21), 587–617 (2011). doi:10.1214/EJP.v16-866

Bandyopadhyay, A., Zeitouni, O.: Random walk in dynamic Markovian random environment. ALEA Lat. Am. J. Probab. Math. Stat. 1, 205–224 (2006)

Boldrighini, C., Ignatyuk, I.A., Malyshev, V.A., Pellegrinotti, A.: Random walk in dynamic environment with mutual influence. Stoch. Process. Appl. 41(1), 157–177 (1992). doi:10.1016/0304-4149(92)90151-F

Boldrighini, C., Minlos, R.A., Pellegrinotti, A.: Random walks in quenched i.i.d. space-time random environment are always a.s. diffusive. Probab. Theory Relat. Fields 129(1), 133–156 (2004). doi:10.1007/s00440-003-0331-x

Bricmont, J., Kupiainen, A.: Random walks in space time mixing environments. J. Stat. Phys. 134(5–6), 979–1004 (2009). doi:10.1007/s10955-009-9689-1

Brox, T.: A one-dimensional diffusion process in a Wiener medium. Ann. Probab. 14(4), 1206–1218 (1986). http://projecteuclid.org/DPubS?service=UI&version=1.0&verb=Display&handle=euclid.aop/1176992363

Cheliotis, D.: One-dimensional diffusion in an asymmetric random environment. Ann. Inst. H. Poincaré Probab. Statist. 42(6), 715–726 (2006). doi:10.1016/j.anihpb.2005.08.004

Cogburn, R.: On direct convergence and periodicity for transition probabilities of Markov chains in random environments. Ann. Probab. 18(2), 642–654 (1990). http://www.jstor.org/stable/2244308

Comets, F., Gantert, N., Zeitouni, O.: Quenched, annealed and functional large deviations for one-dimensional random walk in random environment. Probab. Theory Relat. Fields 118(1), 65–114 (2000)

Dembo, A., Gantert, N., Peres, Y., Shi, Z.: Valleys and the maximum local time for random walk in random environment. Probab. Theory Relat. Fields 137(3–4), 443–473 (2007). doi:10.1007/s00440-006-0005-6

Diel, R.: Almost sure asymptotics for the local time of a diffusion in Brownian environment. Stoch. Process. Appl. 121(10), 2303–2330 (2011). doi:10.1016/j.spa.2011.06.002

Dolgopyat, D., Keller, G., Liverani, C.: Random walk in Markovian environment. Ann. Probab. 36(5), 1676–1710 (2008). doi:10.1214/07-AOP369

Dolgopyat, D., Liverani, C.: Non-perturbative approach to random walk in Markovian environment. Electron. Commun. Probab. 14, 245–251 (2009). doi:10.1214/ECP.v14-1467

Douc, R., Moulines, E., Rosenthal, J.S.: Quantitative bounds on convergence of time-inhomogeneous Markov chains. Ann. Appl. Probab. 14(4), 1643–1665 (2004). doi:10.1214/105051604000000620

Elworthy, K.D., Truman, A., Zhao, H.: Generalized Itô formulae and space-time Lebesgue-Stieltjes integrals of local times. In: Séminaire de Probabilités XL. Lecture Notes in Mathematics, vol. 1899, pp. 117–136. Springer, Berlin (2007). doi:10.1007/978-3-540-71189-6

Fernique, X.: Regularité des trajectoires des fonctions aléatoires gaussiennes. In: École d’Été de Probabilités de Saint-Flour, IV-1974, pp. 1–96. Lecture Notes in Mathematics, vol. 480. Springer, Berlin (1975)

Flandoli, F., Russo, F., Wolf, J.: Some SDEs with distributional drift. I. General calculus. Osaka J. Math. 40(2), 493–542 (2003). http://projecteuclid.org/getRecord?id=euclid.ojm/1153493096

Gradinaru, M., Offret, Y.: Existence and asymptotic behaviour of some time-inhomogeneous diffusions. Ann. Inst. H. Poincaré Probab. Stat. (2011). doi:10.1214/11-AIHP469

Guivarc’h, Y., Raugi, A.: Propriétés de contraction d’un semi-groupe de matrices inversibles. Coefficients de Liapunoff d’un produit de matrices aléatoires indépendantes. Isr. J. Math. 65(2), 165–196 (1989). doi:10.1007/BF02764859

Hu, Y., Shi, Z.: The limits of Sinai’s simple random walk in random environment. Ann. Probab. 26(4), 1477–1521 (1998). doi:10.1214/aop/1022855871

Hu, Y., Shi, Z., Yor, M.: Rates of convergence of diffusions with drifted Brownian potentials. Trans. Am. Math. Soc. 351(10), 3915–3934 (1999). doi:10.1090/S0002-9947-99-02421-6

Kallenberg, O.: Foundations of Modern Probability, 2nd edn. Probability and its Applications. Springer, New York (2002)

Kawazu, K., Tamura, Y., Tanaka, H.: Limit theorems for one-dimensional diffusions and random walks in random environments. Probab. Theory Relat. Fields 80(4), 501–541 (1989). doi:10.1007/BF00318905

Kesten, H., Kozlov, M.V., Spitzer, F.: A limit law for random walk in a random environment. Compositio Math. 30, 145–168 (1975)

Kifer, Y.: Perron-Frobenius theorem, large deviations, and random perturbations in random environments. Math. Z. 222(4), 677–698 (1996). doi:10.1007/PL00004551

Komorowski, T., Olla, S.: On homogenization of time-dependent random flows. Probab. Theory Relat. Fields 121(1), 98–116 (2001). doi:10.1007/PL00008799

Komorowski, T., Olla, S.: On the superdiffusive behavior of passive tracer with a Gaussian drift. J. Statist. Phys. 108(3–4), 647–668 (2002). doi:10.1023/A:1015734109076

Krylov, N.V.: Controlled diffusion processes. In: Stochastic Modelling and Applied Probability, vol. 14. Springer, Berlin (2009). Translated from the 1977 Russian original by A. B. Aries. Reprint of the 1980 edition

Landim, C., Olla, S., Yau, H.T.: Convection-diffusion equation with space-time ergodic random flow. Probab. Theory Relat. Fields 112(2), 203–220 (1998). doi:10.1007/s004400050187

Lian, Z., Lu, K.: Lyapunov exponents and invariant manifolds for random dynamical systems in a Banach space. Mem. Am. Math. Soc. 206(967), vi+106 (2010). doi:10.1090/S0065-9266-10-00574-0

Mathieu, P.: Zero white noise limit through Dirichlet forms, with application to diffusions in a random medium. Probab. Theory Relat. Fields 99(4), 549–580 (1994). doi:10.1007/BF01206232

Meyn, S.P., Tweedie, R.L.: Markov chains and stochastic stability. Communications and Control Engineering Series. Springer, London (1993)

Orey, S.: Markov chains with stochastically stationary transition probabilities. Ann. Probab. 19(3), 907–928 (1991). http://projecteuclid.org/DPubS?service=UI&version=1.0&verb=Display&handle=euclid.aop/1176990328

Oseledec, V.I.: A multiplicative ergodic theorem. Characteristic Ljapunov, exponents of dynamical systems. Trudy Moskov. Mat. Obšč. 19, 179–210 (1968)

Porper, F.O., Èĭdel’man, S.D.: Two-sided estimates of the fundamental solutions of second-order parabolic equations and some applications of them. Uspekhi Mat. Nauk. 39(3(237)), 107–156 (1984)

Rassoul-Agha, F., Seppäläinen, T.: An almost sure invariance principle for random walks in a space-time random environment. Probab. Theory Relat. Fields 133(3), 299–314 (2005). doi:10.1007/s00440-004-0424-1

Rhodes, R.: On homogenization of space-time dependent and degenerate random flows. Stoch. Process. Appl. 117(10), 1561–1585 (2007). doi:10.1016/j.spa.2007.01.010

Russo, F., Trutnau, G.: Some parabolic PDEs whose drift is an irregular random noise in space. Ann. Probab. 35(6), 2213–2262 (2007). doi:10.1214/009117906000001178

Schmitz, T.: Diffusions in random environment and ballistic behavior. Ann. Inst. H. Poincaré Probab. Statist. 42(6), 683–714 (2006). doi:10.1016/j.anihpb.2005.08.003

Schumacher, S.: Diffusions with Random Coefficients (Environment). ProQuest LLC., Ann Arbor (1984). http://gateway.proquest.com/openurl?url_ver=Z39.88-2004&rft_val_fmt=info:ofi/fmt:kev:mtx:dissertation&res_dat=xri:pqdiss&rft_dat=xri:pqdiss:8428566. Ph.D. Thesis, University of California, Los Angeles

Schumacher, S.: Diffusions with random coefficients. In: Particle Systems, Random Media and Large Deviations (Brunswick, Maine, 1984). Contemp. Math., vol. 41, pp. 351–356. American Mathematical Society, Providence (1985)

Shi, Z.: Sinai’s walk via stochastic calculus. In: Milieux aléatoires, Panor. Synthèses, vol. 12, pp. 53–74. Soc. Math. France, Paris (2001)

Sinaĭ, Y.G.: The limit behavior of a one-dimensional random walk in a random environment. Teor. Veroyatnost. i Primenen. 27(2), 247–258 (1982)

Stroock, D.W.: Diffusion semigroups corresponding to uniformly elliptic divergence form operators. In: Séminaire de Probabilités, XXII. Lecture Notes in Mathematics, vol. 1321, pp. 316–347. Springer, Berlin (1988). doi:10.1007/BFb0084145

Stroock, D.W., Varadhan, S.R.S.: Multidimensional diffusion processes. Classics in Mathematics. Springer, Berlin (2006). Reprint of the 1997 edition

Sznitman, A.S., Zeitouni, O.: An invariance principle for isotropic diffusions in random environment. Invent. Math. 164(3), 455–567 (2006). doi:10.1007/s00222-005-0477-5

Zeitouni, O.: Random walks in random environments. J. Phys. A 39(40), R433–R464 (2006). doi:10.1088/0305-4470/39/40/R01

Acknowledgments

The author is grateful to the Referee for a careful reading and for valuable comments and remarks, which have significantly improved the manuscript.


Cite this article

Offret, Y. Invariant distributions and scaling limits for some diffusions in time-varying random environments. Probab. Theory Relat. Fields 158, 1–38 (2014). https://doi.org/10.1007/s00440-012-0475-7


DOI: https://doi.org/10.1007/s00440-012-0475-7

Keywords

- Time-dependent random environment

- Time-inhomogeneous Brox’s diffusion

- Random dynamical system

- Foster–Lyapunov drift condition

- Fluctuating stationary distribution