Abstract

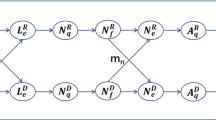

Ticks play a critical role as vectors in the transmission and spread of Lyme disease, an emerging infectious disease which can cause severe illness in humans or animals. To understand the transmission dynamics of Lyme disease and other tick-borne diseases, it is necessary to investigate the population dynamics of ticks. Here, we formulate a system of delay differential equations which models the stage structure of the tick population. Temperature can alter the length of time delays in each developmental stage, and so the time delays can vary geographically (and seasonally, which we do not consider). We define the basic reproduction number \({\mathcal R}_0\) of stage structured tick populations. The tick population is uniformly persistent if \({\mathcal R}_0>1\) and dies out if \({\mathcal R}_0<1\). We present sufficient conditions under which the unique positive equilibrium point is globally asymptotically stable. In general, the positive equilibrium can be unstable and the system can show oscillatory behavior. These oscillations are primarily due to negative feedback within the tick system, but can be enhanced by the time delays of the different developmental stages.

References

Awerbuch TE, Sandberg S (1995) Trends and oscillations in tick population dynamics. J Theor Biol 175:511–516

Awerbuch-Friedlander T, Levins R, Predescu M (2005) The role of seasonality in the dynamics of deer tick populations. Bull Math Biol 67:467–486

Busenberg SN, Cooke KL (1980) The effect of integral conditions in certain equations modelling epidemics and population growth. J Math Biol 10:13–32

Caraco T, Glavanakov S, Chen G, Flaherty JE, Ohsumi TK, Szymanski BK (2002) Stage-structured infection transmission and a spatial epidemic: a model for Lyme disease. Am Nat 160:348–359

Fan G, Lou Y, Thieme HR, Wu J (2014) Stability and persistence in ODE models for populations with many stages. Math Biosci Engin (to appear)

Ghosh M, Pugliese A (2004) Seasonal population dynamics of ticks, and its influence on infection transmission: a semi-discrete approach. Bull Math Biol 66:1659–1684. doi:10.1016/j.bulm.2004.03.007

Gourley SA, Thieme HR, van den Driessche P (2009) Stability and persistence in a model for bluetongue dynamics. SIAM J Appl Math 71:1280–1306

Hale JK, Verduyn Lunel SM (1993) Introduction to functional differential equations. Springer, New York

Hartemink NA, Randolph SE, Davis SA, Heesterbeek JAP (2008) The basic reproduction number for complex disease systems: defining \(R_0\) for tick-borne infections. Am Nat 171:743–754

Hirsch MW, Hanisch H, Gabriel JP (1985) Differential equation models for some parasitic infections; methods for the study of asymptotic behavior. Comm Pure Appl Math 38:733–753

McDonald JN, Weiss NA (1999) A course in real analysis. Academic Press, San Diego

Mwambi HG, Baumgartner J, Hadeler KP (2000) Ticks and tick-borne diseases: a vector-host interaction model for the brown ear tick. Stat Methods Med Res 9:279–301

Norman R, Bowers RG, Begon M, Hudson PJ (1999) Persistence of tick-borne virus in the presence of multiple host species: tick reservoirs and parasite mediated competition. J Theor Biol 200:111–118. doi:10.1006/jtbi.1999.0982

Ogden NH, Bigras-Poulin M, O’Callaghan CJ, Barker IK, Lindsay LR, Maarouf A, Smoyer-Tomic KE, Waltner-Toews D, Charron D (2005) A dynamic population model to investigate effects of climate on geographic range and seasonality of the tick Ixodes scapularis. Int J Parasit 35:375–389

Rosà R, Pugliese A, Norman R, Hudson PJ (2003) Thresholds for disease persistence in models for tick-borne infections including non-viraemic transmission, extended feeding and tick aggregation. J Theor Biol 224:359–376

Rosà R, Pugliese A (2007) Effects of tick population dynamics and host densities on the persistence of tick-borne infections. Math Biosci 208(1):216–240

Smith HL, Thieme HR (2011) Dynamical systems and population persistence. Graduate Studies in Mathematics, V118, American Mathematical Society, Providence, Rhode Island

Thieme HR (2003) Mathematics in population biology. Princeton Series in Theoretical and Computational Biology. Princeton University Press, Princeton and Oxford

Wu X, Duvvuri VR, Lou Y, Ogden NH, Pelcat Y, Wu J (2013) Developing a temperature-driven map of the basic reproductive number of the emerging tick vector of Lyme disease Ixodes scapularis in Canada. J Theor Biol 319:50–61

Zhao X-Q (2012) Global dynamics of a reaction and diffusion model for Lyme disease. J Math Biol 65:787–808

Acknowledgments

The work by Guihong Fan and Huaiping Zhu was partially supported by the Pilot Infectious Disease Impact and Response Systems Program of Public Health Agency of Canada (PHAC) and Natural Sciences and Engineering Research Council (NSERC) of Canada. Guihong Fan visited Arizona State University when the paper was mainly written. The authors thank the handling editor, Sebastian Schreiber, and two anonymous referees for their helpful comments.

Appendices

Appendix 1: Proofs for Sect. 4

Proof of Theorem 4.1

Consider system (3.3) with nonnegative initial data (3.8) and birth function \(G(s)\) in (3.4). Any solution of (3.3) is nonnegative, becomes strictly positive at some time, and remains positive thereafter.

Without loss of generality, we assume that the number of adult ticks \(x_{30}(\theta )\) is not identically zero for \(\theta \in [-\tau _3,\ 0]\). There exists \(\theta ^*\in (-\tau _3,\ 0)\) such that \(x_{30}(\theta ^*)>0\). By the continuity of the function, there exists a neighborhood \((\theta ^*-\delta ,\theta ^*+\delta )\) such that \(x_{30}(\theta )>0\), where \(\delta \) is a small positive number. For any \(t^*\in [0,\ \tau _3]\) and \(t^*>\theta ^*+\tau _3+\delta \),

After time \(t^*\), \(x_1(t)\) can never become zero again since

If \(\theta ^*=-\tau _3\) or \(0\), we modify the proof slightly and take the neighborhood \((-\tau _3,-\tau _3+\delta )\) or \((-\delta ,0)\); the argument still works. Similarly, we can prove that \(x_2(t)\) and \(x_3(t)\) are strictly positive at some \(\bar{t}>0\) and remain strictly positive thereafter.

Let \(T> 0\) be arbitrary. From the integral equation for \(x_1\), as in (8.1), we have that

After integrating the differential equation for \(x_2\),

We integrate the differential equation for \(x_3\),

with

Assume that \({\mathcal R}_\infty < 1\) and let \(x >0\) such that \({\mathcal R}(x) <1\). Since \(\psi \) is decreasing, from (3.4),

So

where \(c_x = \sup _{0\le s\le x} G(s)\). By (8.2), for some \(\tilde{c}_x>0\), which does not depend on the solution,

We reorganize,

Since the right hand side does not depend on \(T\), \(x_3\) is bounded on \([-\tau _3, \infty )\) and

Notice from (8.3) that this is a uniform bound for a bounded set of initial data. Now \(x_1\) and \(x_2\) are bounded as well with the bounds being uniform for bounded sets of initial data. \(\square \)

Note that the method we used to prove the positiveness of solutions is also used in (Gourley et al. 2009).

Next we show that there is a bounded attractor for all solutions of the model.

Proof of Theorem 4.2

Assume that \({\mathcal R}_\infty < 1\). Let \(x \ge 0\) and \({\mathcal R}(x)<1\). Then \(\limsup _{t \rightarrow \infty } x_3(t) \le x\) holds for any solution of (3.3).

By Theorem 4.1, any solution is bounded. By the fluctuation method [Hirsch et al. (1985); Smith and Thieme (2011), A.3; Thieme (2003), Prop. A.22], for each \(j \in \{1,2,3\}\), there exists a sequence \((t_k)\) with \(t_k \rightarrow \infty \),

From (3.3),

We substitute these inequalities into each other,

We can assume that \(x_3^\infty > 0\). Since \(x_3\) is bounded on \({ \mathbb {R}}_+\), after choosing a subsequence, \(x_3(t_k - \tau _3) \rightarrow s\) for some \( s \in [0, x_3^\infty ]\). Since \(G\) is continuous,

Since \(G(0) =0\), \(s \in (0, x_3^\infty ]\). By definition of \(G\),

Since we have assumed that \(x_3^\infty > 0\), this implies

Since \(0 < s \le x_3^\infty \) and \({\mathcal R}\) is decreasing and \({\mathcal R}(x) < 1\) we have \(x_3^\infty \le x\). By (8.4),

\(\square \)

Appendix 2: Proofs for Sect. 5

Proof of Theorem 5.1

At first, we will consider the equilibria of system (3.3). Suppose \((x_1^*,x_2^*,x_3^*)\) is an equilibrium, then

It is easy to see \(x_1^*=\frac{\eta _2}{\gamma _1p_1}x_2^*\), \(x_2^*=\frac{\eta _3}{\gamma _2p_2}x_3^*\) and \(x_3^*\) satisfies

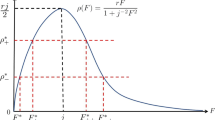

Solving (9.1), we have \(x_3^*=0\) if \(\mathcal {R}_0\le 1\). If \(\mathcal {R}_0> 1\), the solutions are \(x_3^*=0\) and any \(x_3^*>0\) with \(\psi (x_3^*)=\frac{\psi (0)}{\mathcal {R}_0}\), since \(\psi \) is (not necessarily strictly) monotone decreasing and continuous on \([0,\infty )\). If \(\psi '(x_3^*) < 0\), \(x_3^*\) is uniquely determined and the nonzero equilibrium is unique.
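As an illustration of the equilibrium condition \(\psi (x_3^*)=\psi (0)/\mathcal {R}_0\), the following minimal sketch locates a positive root by bisection. The function name `positive_equilibrium`, the bracketing bound `upper`, the exponential choice \(\psi (s)=\beta e^{-\alpha s}\) and the sample values of `alpha`, `beta` and `r0` are assumptions made only for this example; for that choice of \(\psi \) the root is \(\ln (\mathcal {R}_0)/\alpha \).

```python
import math

def positive_equilibrium(psi, r0, upper=1e6, tol=1e-12):
    """Bisection for x > 0 with psi(x) = psi(0) / r0.

    Assumes psi is continuous and decreasing on [0, upper] and that
    psi(upper) lies at or below the target value (hypothetical helper).
    """
    if r0 <= 1.0:
        return 0.0  # only the trivial equilibrium is returned when R0 <= 1
    target = psi(0.0) / r0
    lo, hi = 0.0, upper
    if psi(hi) > target:
        raise ValueError("psi(upper) is above psi(0)/R0; increase `upper`")
    while hi - lo > tol * max(1.0, hi):
        mid = 0.5 * (lo + hi)
        if psi(mid) > target:
            lo = mid  # psi still too large: the root lies to the right
        else:
            hi = mid
    return 0.5 * (lo + hi)

# Illustrative parameter values, not taken from the paper.
alpha, beta, r0 = 0.5, 3.0, 4.0
psi = lambda s: beta * math.exp(-alpha * s)
x3_star = positive_equilibrium(psi, r0)
print(x3_star, math.log(r0) / alpha)  # numerical root vs. closed form
```

If \(\psi \) has a flat piece at the level \(\psi (0)/\mathcal {R}_0\), the root is not unique and the sketch returns just one point of the interval of solutions.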

Conversely, if \({\mathcal R}_0 >1 > {\mathcal R}_\infty \), there exists a number \(x^*_3 >0\) satisfying \({\mathcal R}(x_3^*) = 1\), and defining \(x_1^*\) and \(x_2^*\) as above provides an equilibrium of system (3.3) in \((0,\infty )^3\).

If \(\mathcal {R}_0 < 1\), then \(\limsup _{t\rightarrow \infty } x_3(t) =0\) by Theorem 4.2. If \({\mathcal R}_0 =1\) and \(\psi (s) < \psi (0)\), for all \(s >0\), then \({\mathcal R}(s) < 1\) for all \(s>0\). By Theorem 4.2, \(\limsup _{t\rightarrow \infty } x_3(t)<s \) for all \(s >0\) and so equals 0. It follows from the proof of Theorem 4.2 that \(x_j(t) \rightarrow 0\) as \(t \rightarrow \infty \) for \(j=1,2\).

Local asymptotic stability of the trivial equilibrium if \({\mathcal R}_0< 1\) follows from a standard analysis of a characteristic equation which is similar to the one we will do for the positive equilibrium and is therefore omitted.

Let \(\mathcal {R}_0>1\). To study the local asymptotic stability of the positive equilibrium, we use the principle of linearized stability [see, e.g., Hale and Verduyn Lunel (1993)]. The linearization of system (3.3) at the positive equilibrium leads to the characteristic equation

By (4.1) and \({\mathcal R}(x_3^*)=1\),

Since \(\psi '(x_3^*) < 0\), there is no nonnegative root.

Suppose that there is a root \(\lambda \) with \(\mathfrak {R}\lambda \ge 0\) and \(\mathfrak {I}\lambda \ne 0\). Then, by taking absolute values,

This implies

By contraposition, the interior equilibrium is locally asymptotically stable if

\(\square \)

1.1 Persistence

Proof of Theorem 5.2

If \({\mathcal R}_0 > 1\), the questing adult ticks are uniformly weakly persistent: There exists some \(\epsilon >0\) such that \(x_3^{\infty }\geqslant \epsilon \) for any solution whose initial data satisfy (3.8).

Let \(\epsilon >0\). Assume that there exists a solution \((x_1(t),x_2(t),x_3(t))\) satisfying (3.8) and

Then we have \( x_3(t-\tau _3)< \epsilon \) for sufficiently large \(t\). Since \(\psi (x_3)\) is a decreasing function, we have \(\psi (x_3(t-\tau _3))\geqslant \psi (\epsilon )\). After a shift forward in time, we have the following inequality

Using the method in (Smith and Thieme 2011), we take the Laplace transform of both sides of the above inequality with \(\lambda > 0\),

We drop the term \(\int _{-\tau }^0e^{-\lambda s}x_3(s)\mathrm {d}s\) because \(x_3(s)\) is nonnegative. Rearranging gives

Similarly, we take the Laplace transform of both sides of the equations for \(x_2(t)\) and \(x_3(t)\) in (3.3). Simplification gives

and

We combine (9.8), (9.9) and (9.10),

Noting that \(\mathcal {L}(x_1(t))>0\) because \(x_1(t)\) is eventually positive, we divide by \(\mathcal {L}(x_1(t))\) on both sides and obtain

If the questing adult ticks are not uniformly weakly persistent as formulated in the theorem, the last inequality holds for all \(\lambda >0\) and \(\epsilon >0\). Letting \(\lambda \rightarrow 0\) and \(\epsilon \rightarrow 0\), we obtain

Recall (4.1) and (4.2). By contraposition, the questing adult ticks are uniformly weakly persistent if \(\mathcal {R}_0>1\). \(\square \)

Proof of Theorem 5.3

Consider system (3.3). If \(\mathcal {R}_0 >1> {\mathcal R}_\infty \), the tick population is uniformly persistent: There exists some \(\epsilon >0\) such that \(x_{1\infty }\geqslant \epsilon \), \(x_{2\infty }\geqslant \epsilon \) and \(x_{3\infty }\geqslant \epsilon \) for any solution whose initial data satisfy (3.8).

We will use Theorem 4.12 in (Smith and Thieme 2011) to prove the uniform persistence for questing adult ticks.

Choose the state space \(X\) as the cone of nonnegative functions in \(C [-\tau _1,0] \times C[-\tau _2,0] \times C[-\tau _3,0]\).

Assume that \(x(t)=(x_1(t),\ x_2(t),\ x_3(t))\) is a solution of (3.3) with nonnegative initial data \(\phi \in X\). Define a subset

where \(\tau = \max _{i=1}^3 \tau _i\), \(x_t^i(\phi )\) is the \(i\)-th component of \(x_t\) and the \(M_i\) have been chosen such that \(\limsup _{t \rightarrow \infty } x_i(t) < M_i\) (see Theorem 4.2).

It is easy to verify that \(D\) is a bounded subset of \(X\) and, by construction, attracts all \(x\in X\). It follows that \(|x'(t)|\) is uniformly bounded by a constant \(\bar{M}=\bar{M}(M_1,M_2,M_3)\) on \(\{t\geqslant \tau \}\) independent of the solution in \(D\). From the Ascoli-Arzela Theorem [Ch.8.3 in McDonald and Weiss (1999)], the subset \(D\) has compact closure because \(\{x_t(\phi )\in D, t\geqslant \tau \}\) is an equicontinuous and equibounded subset of \(C [-\tau _1,0] \times C[-\tau _2,0] \times C[-\tau _3,0]\).

So we have found a compact set that attracts all points in \(X\).

We define \(\rho :X\rightarrow \mathbb {R}_+\) by

Then \(\rho \) is continuous and \(\rho (x_t(\phi ))=x_3(t)\).

We check the three assumptions \(\hat{\heartsuit }_1-\hat{\heartsuit }_3\) in [Smith and Thieme (2011), Thm. 4.13]. Assumptions \(\hat{\heartsuit }_1\) and \(\hat{\heartsuit }_2\) hold because the set \(D\) attracts all \(x\in X\) and the closure of \(D\) is compact. By (3.3), \(\rho (x_0)>0\) implies \(\rho (x_t(\phi ))>0\) for all \(t\geqslant \tau \). This verifies \(\hat{\heartsuit }_3\). By Theorem 5.2, system (3.3) is uniformly weakly \(\rho \)-persistent.

By Theorem 4.12 in (Smith and Thieme 2011), the system (3.3) is uniformly \(\rho \)-persistent whenever it is uniformly weakly \(\rho \)-persistent. So there exists some \(\epsilon _3 >0 \) such that

for all solutions of (3.3) whose initial data satisfy (3.8).

We apply the fluctuation method to the differential equation for \(x_1\),

There exists a sequence \((t_k)\) with \(t_k \rightarrow \infty \), \(x_1(t_k) \rightarrow x_{1\infty }\), \(x_1'(t_k ) \rightarrow 0\) as \(k \rightarrow \infty \). So

Similarly, one finds some \(\epsilon _2 >0\) that does not depend on the initial conditions such that \(x_{2\infty } \ge \epsilon _2\).

By Theorem 4.1, any solution whose initial data satisfies (3.8) fulfills \(\rho (x_3(r)) >0\) for some \(r >0\) and so \(x_{j\infty } \ge \epsilon _j\) for \(j =1,2,3\). \(\square \)

1.2 Global stability of the positive equilibrium

To prove the global stability result for the interior equilibrium, we will rewrite (3.3) as a scalar integral equation for \(x_3\) and use Theorem B.40 in (Thieme 2003).

All equations of the system (3.3) are of the form

By the variation of constants formula,

Then \(u\) can be written as

where

and

Notice that \(u_0(t) \rightarrow 0\) as \(t \rightarrow \infty \) and

Now let \(v\) also be given in the form

with \(v_0(s) \rightarrow 0\) as \(s \rightarrow \infty \). Then

We change the order of integration

After a substitution,

with

Finally,

with

and

Notice that

We apply this procedure to (3.3),

with \(\tilde{x}_j(t) \rightarrow 0\) and

We substitute these integral equations into each other; by the procedure above we obtain the integral equation

with \(\bar{x}_3(t) \rightarrow 0\) as \(t \rightarrow \infty \) and

All solutions of (3.3) satisfying (3.8) are solutions of (9.17) that are bounded and bounded away from zero. The latter follows from Theorem 5.3.

To bring this equation into the form of Theorem B.40 in (Thieme 2003), we normalize \(K\) and set

By (4.1),

We see that a fixed point of \(f\), \(f(x_3) = x_3\), corresponds to the third coordinate of an interior equilibrium for which \({\mathcal R}(x_3)=1\). Since \({\mathcal R}_0 > 1\), \(f(x_3) > x_3\) if \(x_3 > 0\) is small and \(f(x_3) < x_3\) if \(x_3 >0\) is large. By Theorem B.40 in (Thieme 2003), all solutions of (9.17) that are bounded and bounded away from zero converge to \(x_3^*\) if \(x^*_3\) is the only \(z > 0\) with \(f(f(z)) = z\).

The latter condition is satisfied if all solutions of the difference equation \(z_{n+1} = f(z_n)\) with initial datum \(z_0 >0\) converge to \(x_3^*\).

Proof of Corollaries 5.5 and 5.4

If \(s^2 \psi (s)\) is a strictly increasing function of \(s\), i.e., \(s f(s)\) is a strictly increasing function of \(s \ge 0\), this follows from [Thieme (2003), Cor.9.9].
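A minimal numerical sketch of this case, under assumptions that are not stated in this appendix: we take \(f(s) = {\mathcal R}_0\, s\, \psi (s)/\psi (0)\), a normalization chosen to be consistent with \(s f(s)\) being proportional to \(s^2\psi (s)\) and with fixed points satisfying \({\mathcal R}(x_3)=1\), and we pick \(\psi (s) = \beta /(1+s)\), for which \(s^2\psi (s)\) is strictly increasing. The iteration then reads \(z_{n+1} = {\mathcal R}_0 z_n/(1+z_n)\) with positive fixed point \({\mathcal R}_0 - 1\). The helper `iterate_map` and all parameter values are hypothetical.

```python
def iterate_map(f, z0, steps):
    """Apply the map f to z0 `steps` times and return the final iterate."""
    z = z0
    for _ in range(steps):
        z = f(z)
    return z

# Illustrative values, not taken from the paper.
r0, beta = 3.0, 2.0
psi = lambda s: beta / (1.0 + s)            # assumed birth-rate factor
f = lambda s: r0 * s * psi(s) / psi(0.0)    # assumed normalization of f
z_star = r0 - 1.0                           # positive fixed point of f

# Start below, near and far above the fixed point.
limits = [iterate_map(f, z0, 200) for z0 in (0.01, 1.0, 50.0)]
print(limits, z_star)
```

In this example every positive starting value is drawn to the same fixed point, which is the behavior the corollary asserts for the difference equation \(z_{n+1}=f(z_n)\).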

If \(\psi (s) = \beta e^{-\alpha s}\), we rescale \(z_n = x_3^* y_n\). Notice that \({\mathcal R}_0 = e^{\alpha x_3^*}\). Then

By [Thieme (2003), Thm.9.16], all solutions \((y_n)\) of this difference equation converge to 1 for \(y_0 > 0\) if and only if \(2 \ge \alpha x_3^* = \ln {\mathcal R}_0\). This proves that the interior equilibrium attracts all solutions with nontrivial initial conditions.
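The threshold \(\ln {\mathcal R}_0 \le 2\) can be explored numerically. The sketch below assumes that the rescaled difference equation has the Ricker form \(y_{n+1} = y_n e^{\alpha x_3^*(1-y_n)}\), which is what \(f(s) = {\mathcal R}_0\, s\, \psi (s)/\psi (0)\) gives for exponential \(\psi \); this form, the helper names `ricker_orbit` and `tail_deviation`, and the two sample values of \({\mathcal R}_0\) are assumptions made for illustration.

```python
import math

def ricker_orbit(r, y0, steps):
    """Iterate y_{n+1} = y_n * exp(r * (1 - y_n)); r plays the role of ln(R0)."""
    orbit = [y0]
    for _ in range(steps):
        y = orbit[-1]
        orbit.append(y * math.exp(r * (1.0 - y)))
    return orbit

def tail_deviation(r, y0=0.1, steps=2000, tail=10):
    """Largest distance from the fixed point 1 over the last `tail` iterates."""
    orbit = ricker_orbit(r, y0, steps)
    return max(abs(y - 1.0) for y in orbit[-tail:])

# One value of R0 below e^2 and one above it (illustrative choices).
for r0 in (math.exp(1.5), math.exp(2.5)):
    r = math.log(r0)
    print(f"ln R0 = {r:.2f}: max |y_n - 1| over the tail = {tail_deviation(r):.3e}")
```

For the smaller value the iterates settle at 1, while for the larger value they keep oscillating around 1, in line with the stated criterion.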

For the local stability, notice that \(\psi '(x_3^*)=-\alpha \beta e^{-\alpha x_3^*}=-\alpha \psi (x_3^*)<0\). The stability condition in Theorem 5.1 becomes \(\alpha x_3^* \le 2\). Since \(\psi (x_3^*)= \frac{\psi (0)}{{\mathcal R}_0}\), \(e^{-\alpha x_3^*} = 1/{\mathcal R}_0\) and \(\alpha x_3^* = \ln {\mathcal R}_0\). This implies the assertion. \(\square \)

Cite this article

Fan, G., Thieme, H.R. & Zhu, H. Delay differential systems for tick population dynamics. J. Math. Biol. 71, 1017–1048 (2015). https://doi.org/10.1007/s00285-014-0845-0

DOI: https://doi.org/10.1007/s00285-014-0845-0

Keywords

- Tick populations

- Stage structure

- Delay differential systems

- Local stability

- Global stability

- Persistence

- Basic reproduction number

- Integral equations