Abstract

The time-evolution of continuous-time discrete-state biochemical processes is governed by the Chemical Master Equation (CME), which describes the probability of the molecular counts of each chemical species. As the corresponding number of discrete states is, for most processes, large, a direct numerical simulation of the CME is in general infeasible. In this paper we introduce the method of conditional moments (MCM), a novel approximation method for the solution of the CME. The MCM employs a discrete stochastic description for low-copy number species and a moment-based description for medium/high-copy number species. The moments of the medium/high-copy number species are conditioned on the state of the low abundance species, which allows us to capture complex correlation structures arising, e.g., for multi-attractor and oscillatory systems. We prove that the MCM provides a generalization of previous approximations of the CME based on hybrid modeling and moment-based methods. Furthermore, it improves upon these existing methods, as we illustrate using a model for the dynamics of stochastic single-gene expression. This application example shows that due to the more general structure, the MCM allows for the approximation of multi-modal distributions.

Similar content being viewed by others

References

Ascher UM, Petzold LR (1998) Computer methods for ordinary differential equations and differential-algebraic equations. SIAM, Philadelphia

Brown PN, Hindmarsh AC, Petzold LR (1994) Using Krylov methods in the solution of large-scale differential-algebraic systems. SIAM J Sci Comput 15(6):1467–1488. doi:10.1137/0915088

Brown PN, Hindmarsh AC, Petzold LR (1998) Consistent initial condition calculation for differential-algebraic systems. SIAM J Sci Comput 19(5):1495–1512. doi:10.1137/S1064827595289996

Byrne GD, Hindmarsh AC (1975) A polyalgorithm for the numerical solution of ordinary differential equations. ACM Trans Math Softw 1(1):71–96. doi:10.1145/355626.355636

Engblom S (2006) Computing the moments of high dimensional solutions of the master equation. Appl Math Comp 180:498–515. doi:10.1016/j.amc.2005.12.032

Feller W (1940) On the integro-differential equation of purely discontinous Markoff processes. Trans Am Math Soc 48:4885–4915

Friedman N, Cai L, Xie XS (2006) Linking stochastic dynamics to population distribution: an analytical framework of gene expression. Phys Rev Lett 97(16):168,302

Gandhi SJ, Zenklusen D, Lionnet T, Singer RH (2011) Transcription of functionally related constitutive genes is not coordinated. Natl Struct Mol Biol 18(1):27–35. doi:10.1038/nsmb.1934

Gardiner CW (2011) Handbook of stochastic methods: for physics, chemistry and natural sciences, 4th edn. Springer Series in Synergetics, Berlin

Gillespie DT (1977) Exact stochastic simulation of coupled chemical reactions. J Phys Chem 81(25):2340–2361. doi:10.1021/j100540a008

Gillespie DT (1992) A rigorous derivation of the chemical master equation. Phy A 188(1):404–425. doi:10.1016/0378-4371(92)90283-V

Golding I, Paulsson J, Zawilski SM, Cox EC (2005) Real-time kinetics of gene activity in individual bacteria. Cell 123(6):1025–1036. doi:10.1016/j.cell.2005.09.031

Hasenauer J, Löhning M, Khammash M, Allgöwer F (2012) Dynamical optimization using reduced order models: a method to guarantee performance. J Process Control 22(8):1490–1501. doi:10.1016/j.jprocont.2012.01.017

Hasenauer J, Waldherr S, Doszczak M, Radde N, Scheurich P, Allgöwer F (2011a) Analysis of heterogeneous cell populations: a density-based modeling and identification framework. J Process Control 21(10):1417–1425. doi:10.1016/j.jprocont.2011.06.020

Hasenauer J, Waldherr S, Doszczak M, Radde N, Scheurich P, Allgöwer F (2011b) Identification of models of heterogeneous cell populations from population snapshot data. BMC Bioinf 12(125). doi:10.1186/1471-2105-12-125

Hellander A, Lötstedt P (2007) Hybrid method for the Chemical Master Equation. J Comput Phys 227:100–122. doi:10.1016/j.jcp.2007.07.020

Henzinger TA, Mikeev L, Mateescu M, Wolf V (2010) Hybrid numerical solution of the chemical master equation. In: Proceedings of the 8th international conference on computational methods in systems biology. ACM, New York, pp 55–65. doi:10.1145/1839764.1839772

Hespanha J (2008) Moment closure for biochemical networks. In: Proceeding of international symposis on communications, control and, signal processing, pp. 42–147. doi:10.1109/ISCCSP.2008.4537208

Hespanha JP (2007) Modeling and analysis of stochastic hybrid systems. IEE Proc Control Theory Appl Spec Issue Hybrid Syst 153(5):520–535. doi:10.1049/ip-cta:20050088

Hindmarsh AC, Brown PN, Grant KE, Lee SL, Serban R, Shumaker DE, Woodward CS (2005) SUNDIALS: suite of nonlinear and differential/algebraic equation solvers. ACM Trans Math Softw 31(3):363–396

Hodgkin AL, Huxley AF (1952) A quantitative description of membrane current and its application to conduction and excitation in nerve. J Physiol 117(4):500–544

Jahnke T (2011) On reduced models for the Chemical Master Equation. Multiscale Model Simul 9(4):1646–1676

Jahnke T, Huisinga W (2007) Solving the chemical master equation for monomolecular reaction systems analytically. J Math Biol 54(1):1–26. doi:10.1007/s00285-006-0034-x

Kepler TB, Elston TC (2001) Stochasticity in transcriptional regulation: origins, consequences, and mathematical representations. Biophys J 81(6):3116–3136. doi:10.1016/S0006-3495(01)75949-8

Klipp E, Herwig R, Kowald A, Wierling C, Lehrach H (2005) Systems biology in practice. Wiley-VCH, Weinheim

Koeppl H, Zechner C, Ganguly A, Pelet S, Peter M (2012) Accounting for extrinsic variability in the estimation of stochastic rate constants. Int J Robust Nonlinear Control 22(10):1–21. doi:10.1002/rnc

Krishnarajah I, Cook A, Marion G, Gibson G (2005) Novel moment closure approximations in stochastic epidemics. Bull Math Biol 67(4):855–873. doi:10.1016/j.bulm.2004.11.002

Lee CH, Kim KH, Kim P (2009) A moment closure method for stochastic reaction networks. J Chem Phys 130(13):134107. doi:10.1063/1.3103264

Mateescu M, Wolf V, Didier F, Henzinger T (2010) Fast adaptive uniformisation of the chemical master equation. IET Syst Biol 4(6):441–452

Matis HJ, Kiffe TR (1999) Effects of immigration on some stochastic logistic models: a cumulant truncation analysis. Theor Popul Biol 56(2):139–161

Matis JH, Kiffe TR (2002) On interacting bee/mite populations: a stochastic model with analysis using cumulant truncation. Envirom Ecol Stat 9(3):237–258. doi:10.1023/A:1016288125991

McNaught AD, Wilkinson A (1997) IUPAC Compendium of chemical terminology, 2nd edn. Blackwell Sci. doi:10.1351/gooldbook

Menz S, Latorre JC, Schütte C, Huisinga W (2012) Hybrid stochastic deterministic solution of the Chemical Master Equation. SIAM J Multiscale Model Simul 10(4):1232–1262. doi: 10.1137/110825716

Mikeev L, Wolf V (2012) Parameter estimation for stochastic hybrid models of biochemical reaction networks. In: Proceeding of the 15th ACM international conference on hybrid systems: computation and control. ACM, New York, pp 155–166. doi:10.1145/2185632.2185657

Milner P, Gillespie CS, Wilkinson DJ (2012) Moment closure based parameter inference of stochastic kinetic models. Stat Comp. doi:10.1007/s11222-011-9310-8

Munsky B, Khammash M (2006) The finite state projection algorithm for the solution of the chemical master equation. J Chem Phys 124(4): 044,104. doi:10.1063/1.2145882

Munsky B, Khammash M (2008) The finite state projection approach for the analysis of stochastic noise in gene networks. IEEE Trans Autom Control 53:201–214. doi:10.1109/TAC.2007.911361

Munsky B, Neuert G, von Oudenaarden A (2012) Using gene expression noise to understand gene regulation. Science 336(6078):183–187. doi:10.1126/science.1216379

Munsky B, Trinh B, Khammash M (2009) Listening to the noise: random fluctuations reveal gene network parameters. Mol Syst Biol 5(318). doi:10.1038/msb.2009.75

Nedialkov NS, Pryce JD (2007) Solving differential-algebraic equations by Taylor series (III): the DAETS code. J Numer Anal Ind Appl Math 1(1):1–30

Peccoud J, Ycart B (1995) Markovian modelling of gene product synthesis. Theor Popul Biol 48(2):222–234. doi:10.1006/tpbi.1995.1027

Pryce JD (1998) Solving high-index DAEs by Taylor series. Num Alg 19(1–4):195–211. doi:10.1023/A:1019150322187

Raser JM, O’Shea EK (2004) Control of stochasticity in eukaryotic gene expression. Science 304(5678):1811–1814. doi:10.1126/science.1098641

Ruess J, Milias A, Summers S, Lygeros J (2011) Moment estimation for chemically reacting systems by extended Kalman filtering. J Chem Phys 135(165102). doi:10.1063/1.3654135

Shahrezaei V, Swain PS (2008) Analytical distributions for stochastic gene expression. Proc Natl Acad Sci U S A 105(45):17256–17261. doi:10.1073/pnas.0803850105

Sidje R, Burrage K, MacNamara S (2007) Inexact uniformization method for computing transient distributions of Markov chains. SIAM J Sci Comput 29(6):2562–2580

Singh A, Hespanha JP (2006) Lognormal moment closures for biochemical reactions. In: Proceeding IEEE Conference on Decision and Control (CDC), pp 2063–2068. doi:10.1109/CDC.2006.376994

Singh A, Hespanha JP (2011) Approximate moment dynamics for chemically reacting systems. IEEE Trans Autom Control 56(2):414–418. doi:10.1109/TAC.2010.2088631

Strasser M, Theis FJ, Marr C (2012) Stability and multiattractor dynamics of a toggle switch based on a two-stage model of stochastic gene expression. Biophys J 1(4):19–29. doi:10.1016/j.bpj.2011.11.4000

Taniguchi Y, Choi PJ, Li GW, Chen H, Babu M, Hearn J, Emili A, Xie X (2010) Quantifying E. coli proteome and transcriptome with single-molecule sensitivity in single cells. Science 329(5991):533–538

van Kampen NG (2007) Stochastic processes in physics and chemistry, 3rd revised edn. Amsterdam, North-Holland

Whittle P (1957) On the use of the normal approximation in the treatment of stochastic processes. J R Stat Soc B 19(2):268–281

Zechner C, Ruess J, Krenn P, Pelet S, Peter M, Lygeros J, Koeppl H (2012) Moment-based inference predicts bimodality in transient gene expression. Proc Natl Acad Sci U S A 109(21):8340–8345. doi:10.1073/pnas.1200161109

Acknowledgments

The authors would like to acknowledge financial support from the German Federal Ministry of Education and Research (BMBF) within the Virtual Liver project (Grant No. 0315766) and LungSys II (Grant No. 0316042G), and the European Union within the ERC grant “LatentCauses”.

Author information

Authors and Affiliations

Corresponding author

Appendices

Appendix A: Proof of Lemma 1

The differentiation of \(\mathbb E _{z}\left. \left[ T(Z,t)\right| y,t\right] p(y|t)\) results in

which we reformulate as

by substitution of \(\frac{\partial }{\partial t} p(y,z|t)\) with (9) and the use of the multiplication axiom (3). Next we change the order of summation and substituting in the first sum \(z \rightarrow z + \nu _{j,z}\), yielding

This equation can be reformulated further by exploiting the fact that the CME is proper, meaning that \(h_{j}(z) = 0\) whenever \(z \ngeq \nu _{j,z}^-\). Accordingly, the limit of the first summation over \(z, z \ge \nu _{j,z}^-\), can be set to zero, \(z \ge 0\). Using the definition of the conditional expectation (8) we obtain (10) which concludes the proof. \(\square \)

Note that the manipulations of infinite sums are allowed under absolute convergence, which holds for any test-function \(T(z,t)\) which is polynomial in \(z\) if for all \(t\) sufficiently many moments of \(p(y,z|t)\) with respect to \(z\) exist (Engblom 2006). Note that Lemma 1 is a generalization of a result by Engblom (2006, Lemma 2.1).

Appendix B: Proof of Proposition 2

We consider the conditional mean weighted by the corresponding probability, \(\mu _{i,z}(y,t) p(y|t) = \sum _{z \ge 0} z_i p(y,z|t)\). By differentiating this product with respect to \(t\) we readily obtain

The unknown derivative \(\frac{\partial }{\partial t} \left( \mu _{i,z}(y,t) p(y|t)\right) \) follows from Lemma 1 by choosing the test function \(T(Z,t) = Z_i\),

This derivative depends on \(\mathbb E _{z}\left. \left[ (Z_i+\nu _{ij,z}) h_{j}(Z)\right| y-\nu _{j,y},t\right] \) and \(\mathbb E _{z}\left. \left[ Z_i h_{j}(Z)\right| y,t\right] \). By adding and subtracting the conditional means we can reformulate these conditional expectations to

and

Substitution of these reformulated conditional expectations into (40) followed by the insertion of (40) into (39) yields the evolution equation for the conditional mean (16), which concludes the proof of Proposition 2. \(\square \)

Appendix C: Proof of Proposition 3

We consider the product \(C_{I,z}(y,t) p(y|t)\) and differentiate it with respect to time, which readily yields

Using Lemma 1 with \(T(Z,t) = (Z-\mu _{z}(y,t))^I\), we obtain

where the third sum corresponds to the term \(\mathbb E _{z}\left. \left[ \frac{\partial }{\partial t} T(Z,t)\right| y,t\right] p(y|t)\) in (10) and

After substituting (43) and (45) into (44), it remains for us to prove that (23) holds. Therefore, we add and subtract \(\mu _{z}(y-\nu _{j,y},t)\) in \((Z+\nu _{j,z}-\mu _{z}(y-\nu _{j,y},t))^I\) and apply the multinomial theorem, yielding

where the summation runs over all vectors \(k \in \mathbb N _0^{n_{s,z}}\) for which \( k_i \in [0,I_i]\) for all \(i\). By substituting (46) into \(\mathbb E _{z}\left. \left[ (Z+\nu _{j,z}-\mu _{z}(y,t))^I h_{j}(Z)\right| y-\nu _{j,y},t\right] \) and employing that the expectation of a sum is the sum of the expectations, we arrive at (23), which concludes the proof. \(\square \)

Appendix D: Proof of Proposition 4

For \(i \in \{1,{n_{s,y}}\}\) Eq. (29) states merely the definition of the mean. The result for \({n_{s,y}} < i \le {n_{s}}\) follows from

which concludes the proof of (29). The result for the centered moment \({\bar{C}}_{I}(t)\) is obtained by a reordering of the sums and the application of the multiplication axiom (3):

In order to arrive at the term \((z - \mu _{z}(y,t))^{I_z}\), we add and subtract \(\mu _{z}(y,t)\). This yields \((z - \mu _{z}(y,t) + \mu _{z}(y,t) - \bar{\mu }_{z}(t))^{I_z}\) and reformulation in terms of \((\mu _{z}(y,t)- \bar{\mu }_z(t))\) and \((z - \mu _{z}(y,t))\) using the multinomial theorem gives

Finally, we exchange the two inner sums and substitute \(\sum _{z\ge 0} (z - \mu _{z}(y,t))^{k} p(z|y,t)\) by \(C_{k,z}(y,t)\). The modified equation for \({\bar{C}}_{I}(t)\) becomes (30) which concludes the proof. \(\square \)

Appendix E: Initial conditions for states \(y\) with \(p(y|0) = 0\)

Proposition 5

Given an initial distribution \(p(y,z|0)\), a state \(y\) with \(p(y|0) = 0\), and the differentiation index \(K_y\) with \(\forall k \in \{1,\ldots ,K_y-1\}: \partial _{t}^{k}p(y|0) = 0\) and \(\partial _{t}^{K_y}p(y|0) \ne 0\), the initial conditional moments for (24) are

and

and

Proof

To prove Proposition 5, we consider a general test function \(T(Z,t)\) and its conditional expectation \(\mathbb E _{z}\left. \left[ T(Z,t)\right| y,t\right] \). It can be shown using Leibniz rule that for any \(L \in \mathbb N \),

Furthermore, by applying the differentiation operator \(\frac{\partial ^{L-1}}{\partial t^{L-1}}\) to (10) it follows from Lemma 1 that

Using the general Leibniz rule this equation can be reformulated to

By evaluating (51) and (52) at \(t = 0\) for \(L = K_y\) and employing that \(\forall k \in \{1,\ldots ,K_y-1\}: \partial _{t}^{k}p(y|0) = 0\) we obtain

As \(\partial _{t}^{K_y}p(y|t)\) is non-zero, (53) defines the initial values \(\mathbb E _{z}\left. \left[ T(Z,0)\right| y,0\right] \). The Eqs. (47) and (48) for the initial conditions \(\mu _{i,z}(y,0)\) and \(C_{I,z}(y,0)\) follow for \(T(Z,t) = Z_i\) and \(T(Z,t) = (Z - \mu _{z}(y,t))^I\), respectively.

To derive equations for the initial derivatives \(\dot{\mu }_{i,z}(y,0)\) and \(\dot{C}_{I,z}(y,0)\) we evaluate (51) and (52) at \(t = 0\) for \(L = K_y+1\). Employing \(\forall k \in \{1,\ldots ,K_y-1\}: \partial _{t}^{k}p(y|0) = 0\), this yields

As \(\partial _{t}^{K_y}p(y|t)\) is non-zero, (54) defines the initial derivative \(\dot{\mathbb{E }}_{z}\left. \left[ T(Z,0)\right| y,0\right] \). Thus, by selecting \(T(Z,t) = Z_i\) we obtain (49) which allows for the calculation of \(\dot{\mu }_{i,z}(y,0)\). To obtain (50), we finally choose \(T(Z,t) = (Z - \mu _{z}(y,t))^I\).

To determine the initial values using (47)-(50) we evaluate the \((K_y-1)\)-th derivatives of \(\mathbb E _{z}\left. \left[ (Z+\nu _{j,z})^{e_i} h_{j}(Z)\right| y-\nu _{j,y},t\right] p(y-\nu _{j,y}|t)\) and \(\mathbb E _{z}\left. \left[ (Z + \nu _{j,z}- \mu _{z}(y,t))^I h_{j}(Z)\right| y-\nu _{j,y},t\right] p(y-\nu _{j,y}|t)\) at \(t=0\). Therefore, we merely employ (52) with the appropriate test function \(T(Z,t), L = K_y-1\), and the substitution \(y \rightarrow y-\nu _{j,y}\). The resulting derivatives are replaced using the same approach and all other conditional expectations are expressed in terms of centered moments using a Taylor series representation similar to (17). While the resulting equation is extremely lengthy, and therefore not stated here, it is straight forward to construct them for any problem using a simple recursion. Employing the structure of (52), it can be shown that the derivatives merely depend on the marginal probabilities and the initial conditional moments of states \(\tilde{y}\) with \(p(\tilde{y}|0) > 0\). These conditional moments can be computed directly from \(p(y,z|0)\), hence, the right-hand sides of (47)–(50) can be evaluated which concludes the proof. \(\square \)

Some numerical schemes, e.g., DAE solvers based on Taylor series methods, might require higher-order derivatives at the initial time point. These higher-order derivatives can also be constructed using the results of Proposition 5. Therefore, one merely employs (52) with the required order \(L\).

Appendix F: Comparison of DAE and approximative ODE formulation of the conditional moment equation

The conditional moment equation is a DAE, \(M(\xi ) \dot{\xi } = F(\xi )\), with the state vector \(\xi \in \mathbb R ^{n_{\xi }}\) and mass matrix \(M(\xi ) \in \mathbb R ^{{n_{\xi }}\times {n_{\xi }}}\). The state vector contains the marginal probabilities, the conditional means and higher-order conditional moments (for \(m\ge 2\)). The class of DAEs is more general than the class of ODEs, \(\dot{\xi } = f(\xi )\). Only if \(M(\xi )\) is invertible for all \(\xi \) the DAE can be reformulated to an ODE, namely \(f(\xi ) = M^{-1}(\xi )F(\xi )\). This invertibility is not ensured for the conditional moment equation. Thus, the conditional moment equation is not an ODE and can also not be simply restated as one.

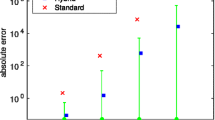

Different approaches exist to approximate DAEs with ODEs. The most common approximation is probably \(\dot{\xi } = (M(\xi ) + \delta I)^{-1}F(\xi )\) where \(I\) is the identity matrix. The constant \(\delta \in \mathbb R _+\) should be as small as possible to achieve a good approximation, but large enough to ensure invertibility. Clearly, even for small \(\delta \), the ODE solution is merely an approximation of the DAE solution. To illustrate this we depict in Fig. 13 the error of different methods for the three-stage gene expression model with \(y = ([{\hbox {D}}_{\mathrm{off}}],[\hbox {D}_{\mathrm{on}}],[\hbox {R}])\) and \(z = [\hbox {P}]\) (see Sect. 5.3). The error is evaluated with respect to the FSP solution which we consider as a gold standard. Figure 13a depicts the error between the FSP solution and the solution of the conditional moment equation computed using a DAE solver. Figure 13b, c depict the error between the FSP solution and the solution of the approximated conditional moment equation, \(\dot{\xi } = (M(\xi ) + \delta I)^{-1}F(\xi )\), computed using an ODE solver for \(\delta = 10^{-6}\) and \(\delta = 10^{-10}\), respectively. It can be seen that the error in the marginal probabilities is small for all three methods, but the error in the conditional moments is indeed very large for the ODE approximations. Interestingly, a smaller \(\delta \) results only in a shift of the error into large mRNA numbers, thus small marginal probabilities, but does not decrease the maximal error.

Error in the marginal probabilities (left) and in the conditional mean of protein number (right) for scenario 2, \(\theta ^{(2)}\). The subplots depict the error between the solution of the MCM with \(m=2\) computed using different numerical methods and the FSP solution of the CME. The individual lines represent the errors in the conditional means in the off-state (blue solid line), in the on-state (red solid line), and in the overall marginal probabilities (black solid line). (Remark The y-axis scales of the different plots are different.) a DAE solver. b ODE solver with \(\delta = 10^{-6}\). c ODE solver with \(\delta = 10^{-10}\). d DAE solver with approximated initial conditions (\(\delta = 10^{-6}\)) (color figure online)

Besides the error introduced by the approximation of the DAE with an ODE, we would like to mention that the reformulation in terms of an ODE might not always be numerically advantageous. DAEs can also be solved for \(p(y|t) = 0\), when the corresponding equations provide equality constraints for the dynamic variables. In case of \(p(y|t) \ll 1\), the DAE has the advantage that the multiplication by a small value is numerically more stable than the division by a small value. Beyond the simulation of the dynamics, also the treatment of the initial conditions might be critical. For small marginal probabilities \(p(y|t)\) the evaluation of (35) and (36) might become numerically unstable. In this situation it can be advantageous to accept a small error in the initial conditions and to use

As the states for which the error is introduced possess very low marginal probabilities, in our experience the error decays quickly. This is also shown in Fig. 13d, which shows the simulation results obtained using a DAE solver with an approximation of the initial conditions with \(\delta = 10^{-6}\).

To sum up, in our experience the simulation of the conditional moment equation using a DAE solver is superior to the approximation of the conditional moment equation by an ODE followed by the simulation of the ODE using ODE solvers. Furthermore, errors introduced in the initial conditional moments of states with lower marginal probabilities in general decay quickly.

Rights and permissions

About this article

Cite this article

Hasenauer, J., Wolf, V., Kazeroonian, A. et al. Method of conditional moments (MCM) for the Chemical Master Equation. J. Math. Biol. 69, 687–735 (2014). https://doi.org/10.1007/s00285-013-0711-5

Received:

Revised:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00285-013-0711-5