Abstract

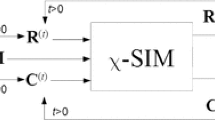

Clustering data is challenging especially for two reasons. The dimensionality of the data is often very high which makes the cluster interpretation hard. Moreover, with high-dimensional data the classic metrics fail in identifying the real similarities between objects. The second challenge is the evolving nature of the observed phenomena which makes the datasets accumulating over time. In this paper we show how we propose to solve these problems. To tackle the high-dimensionality problem, we propose to apply a co-clustering approach on the dataset that stores the occurrence of features in the observed objects. Co-clustering computes a partition of objects and a partition of features simultaneously. The novelty of our co-clustering solution is that it arranges the clusters in a hierarchical fashion, and it consists of two hierarchies: one on the objects and one on the features. The two hierarchies are coupled because the clusters at a certain level in one hierarchy are coupled with the clusters at the same level of the other hierarchy and form the co-clusters. Each cluster of one of the two hierarchies thus provides insights on the clusters of the other hierarchy. Another novelty of the proposed solution is that the number of clusters is possibly unlimited. Nevertheless, the produced hierarchies are still compact and therefore more readable because our method allows multiple splits of a cluster at the lower level. As regards the second challenge, the accumulating nature of the data makes the datasets intractably huge over time. In this case, an incremental solution relieves the issue because it partitions the problem. In this paper we introduce an incremental version of our algorithm of hierarchical co-clustering. It starts from an intermediate solution computed on the previous version of the data and it updates the co-clustering results considering only the added block of data. This solution has the merit of speeding up the computation with respect to the original approach that would recompute the result on the overall dataset. In addition, the incremental algorithm guarantees approximately the same answer than the original version, but it saves much computational load. We validate the incremental approach on several high-dimensional datasets and perform an accurate comparison with both the original version of our algorithm and with the state of the art competitors as well. The obtained results open the way to a novel usage of the co-clustering algorithms in which it is advantageous to partition the data into several blocks and process them incrementally thus “incorporating” data gradually into an on-going co-clustering solution.

Similar content being viewed by others

References

Anagnostopoulos A, Dasgupta A, Kumar R (2008) Approximation algorithms for co-clustering. In: Proceedings of PODS 2008. ACM Press, Vancouver, pp 201–210

Banerjee A, Dhillon I, Ghosh J, Merugu S, Modha D (2007) A generalized maximum entropy approach to Bregman co-clustering and matrix approximation. J Mach Learn Res 8: 1919–1986

Chakrabarti D, Papadimitriou S, Modha DS, Faloutsos C (2004) Fully automatic cross-associations. In: Proceedings of the ACM SIGKDD 2004. ACM Press, Seattle, pp 79–88

Cherukuri VS, Candan K (2008) Propagation-vectors for trees (PVT): concise yet effective summaries for hierarchical data and trees. In: Proceeding of LSDS-IR 2008. ACM Press, Napa Valley, pp 3–10

Cho H, Dhillon IS, Guan Y, Sra S (2004) Minimum sum-squared residue co-clustering of gene expression data. In: Proceedings of SIAM SDM 2004, Lake Buena Vista, USA

Costa G, Manco G, Ortale R (2008) A hierarchical model-based approach to co-clustering high-dimensional data. In: Proceedings of ACM SAC 2008. ACM Press, Fortaleza, pp 886–890

Deodhar M, Ghosh J (2010) SCOAL: a framework for simultaneous co-clustering and learning from complex data. Trans Knowl Discov Data 4(3): 11:1–11:31

Dhillon IS, Mallela S, Modha DS (2003) Information-theoretic co-clustering. In: Proceedings of ACM SIGKDD 2003. ACM Press, Washington, pp 89–98

Ester M, Kriegel HP, Sander J, Wimmer M, Xu X (1998) Incremental clustering for mining in a data warehousing environment. In: Proceedings of VLDB 1998. Morgan Kaufmann, New York, pp 323–333

Fisher D (1987) Knowledge acquisition via incremental conceptual clustering. Mach Learn 2(2): 139–172

Forman G (2003) An extensive empirical study of feature selection metrics for text classification. J Mach Learn Res 3: 1289–1305

George T, Merugu S (2005) A scalable collaborative filtering framework based on co-clustering. In: Proceedings of ICDM 2005. IEEE Computer Society, Houston, pp 625–628

Gong J, Oard D (2009) Selecting hierarchical clustering cut points for web person-name disambiguation. In: Proceedings of ACM SIGIR 2009. ACM Press, Boston, pp 778–779

Goodman LA, Kruskal WH (1954) Measures of association for cross classification. J Am Stat Assoc 49: 732–764

Goodman LA, Kruskal WH (1959) Measure of association for cross classification II: further discussion and references. J Am Stat Assoc 54: 123–163

Han J, Kamber M (2000) Data mining: concepts and techniques. The Morgan Kaufmann series in data management systems. Morgan Kaufmann, Burlington

Hartigan JA (1972) Direct clustering of a data matrix. J Am Stat Assoc 67(337): 123–129

Heard NA, Holmes CC, Stephens DA, Hand DJ, Dimopoulos G (2005) Bayesian coclustering of anopheles gene expression time series: study of immune defense response to multiple experimental challenges. Proc Natl Acad Sci 102(47): 16939–16944

Hosseini M, Abolhassani H (2007) Hierarchical co-clustering for web queries and selected urls. In: Proceedings of WISE 2007, LNCS, vol 4831. Springer, Nancy, pp 653–662

Hubert L, Arabie P (1985) Comparing partitions. J Classif 2(1): 193–218

Ienco D, Pensa R, Meo R (2009) Parameter-free hierarchical co-clustering by n-ary splits. In: Proceedings of ECML PKDD 2009, part I. Lecture notes in computer science, vol 5781. Springer, Bled, pp 580–595

Ienco D, Robardet C, Pensa R, Meo R (2012) Parameter-less co-clustering for star-structured heterogeneous data. Data Min Knowl Discov. doi:10.1007/s10618-012-0248-z

Jaszkiewicz A (2002) Genetic local search for multi-objective combinatorial optimization. Eur J Oper Res 137(1): 50–71

Khoshneshin M, Street W (2010) Incremental collaborative filtering via evolutionary co-clustering. In: Proceedings of ACM RecSys 2010. ACM Press, Barcelona, pp 325–328

Kim J, Candan K (2006) CP/CV: concept similarity mining without frequency information from domain describing taxonomies. In: Proceedings of ACM CIKM 2006. ACM Press, Arlington, pp 483–492

Kluger Y, Basri R, Chang J, Gerstein M (2003) Spectral biclustering of microarray data: coclustering genes and conditions. Genome Res 13: 703–716

Kriegel HP, Kröger P, Zimek A (2009) Clustering high-dimensional data: a survey on subspace clustering, pattern-based clustering, and correlation clustering. ACM Trans Knowl Discov Data 3(1): 1:1– 1:58

Paquete L, Stützle T (2006) A study of stochastic local search algorithms for the biobjective qap with correlated flow matrices. Eur J Oper Res 169(3): 943–959

Robardet C (2002) Contribution à à la classification non supervisée: proposition d’une methode de bi-partitionnement. Ph.D. thesis, Université Claude Bernard, Lyon 1

Robardet C, Feschet F (2001a) Comparison of three objective functions for conceptual clustering. In: Proceedings of PKDD 2001, LNCS, vol 2168. Springer, Freiburg, pp 399–410

Robardet C, Feschet F (2001b) Efficient local search in conceptual clustering. In: Proceedings of DS 2001, LNCS, vol 2226. Springer, Washington, DC, pp 323–335

Sahoo N, Callan J, Krishnan R, Duncan G, Padman R (2006) Incremental hierarchical clustering of text documents. In: Proceedings of ACM CIKM 2006. ACM Press, Arlington, pp 357–366

Slonim N, Tishby N (2000) Document clustering using word clusters via the information bottleneck method. In: Proceedings of ACM SIGIR 2000. ACM Press, Athens, pp 208–215

Strehl A, Ghosh J (2002) Cluster ensembles—a knowledge reuse framework for combining multiple partitions. J Mach Learn Res 3: 583–617

Zhang Y, Li T (2011) Extending consensus clustering to explore multiple clustering views. In: Proceedings of SIAM SDM 2011, pp 920–931

Author information

Authors and Affiliations

Corresponding author

Additional information

Responsible editor: Ian Davidson.

Rights and permissions

About this article

Cite this article

Pensa, R.G., Ienco, D. & Meo, R. Hierarchical co-clustering: off-line and incremental approaches. Data Min Knowl Disc 28, 31–64 (2014). https://doi.org/10.1007/s10618-012-0292-8

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10618-012-0292-8