Abstract

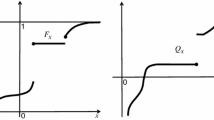

In this paper, we revisit the concentration inequalities for the supremum of the cumulative distribution function (CDF) of a real-valued continuous distribution, established by Dvoretzky, Kiefer and Wolfowitz and later revisited by Massart in two seminal papers. We focus on the concentration of the local supremum over a sub-interval rather than over the full domain. That is, denoting by \(U\) the CDF of the uniform distribution over \([0,1]\) and by \(U_{n}\) its empirical version built from \(n\) samples, we study \(\mathbb{P}\Big{(}\sup_{u\in[\underline{u},\overline{u}]}U_{n}(u)-U(u)>\varepsilon\Big{)}\) for different values of \(\underline{u},\overline{u}\in[0,1]\). Such local controls naturally appear, for instance, when studying the estimation error of spectral risk measures (such as the conditional value at risk), where \([\underline{u},\overline{u}]\) is typically \([0,\alpha]\) or \([1-\alpha,1]\) for a risk level \(\alpha\), after reshaping the CDF \(F\) of the considered distribution into \(U\) by the generalized inverse transform \(F^{-1}\). Extending a proof technique of Smirnov, we provide exact expressions of the local quantities \(\mathbb{P}\Big{(}\sup_{u\in[\underline{u},\overline{u}]}U_{n}(u)-U(u)>\varepsilon\Big{)}\) and \(\mathbb{P}\Big{(}\sup_{u\in[\underline{u},\overline{u}]}U(u)-U_{n}(u)>\varepsilon\Big{)}\) for each \(n,\varepsilon,\underline{u},\overline{u}\). Interestingly, these quantities, seen as functions of \(\varepsilon\), can easily be inverted numerically into functions of the probability level \(\delta\). Although not explicit, they can be computed and tabulated. We plot these expressions and compare them to the classical bound \(\sqrt{\frac{\ln(1/\delta)}{2n}}\) provided by the Massart inequality. We then apply this result to the control of generic functionals of the CDF, motivated by the case of the conditional value at risk.
Last, we extend the local concentration results, which hold individually for each \(n\), to time-uniform concentration inequalities holding simultaneously for all \(n\), revisiting a reflection inequality by James, which is of independent interest for the study of sequential decision-making strategies.
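The numerical inversion \(\varepsilon\mapsto\delta\) mentioned above can be illustrated on the full interval \([0,1]\), where a classical closed form is available (the Birnbaum–Tingey formula for the one-sided Smirnov statistic). This is a minimal sketch under that assumption: it does not use the local expressions of the paper. It evaluates \(\varepsilon\mapsto\mathbb{P}(\sup_{u\in[0,1]}U_{n}(u)-U(u)>\varepsilon)\), inverts it by bisection into a radius \(\varepsilon(\delta)\), and compares that radius with the Massart bound. The function names are hypothetical.

```python
from math import exp, floor, lgamma, log, sqrt


def log_comb(n: int, k: int) -> float:
    """Logarithm of the binomial coefficient C(n, k), stable for large n."""
    return lgamma(n + 1) - lgamma(k + 1) - lgamma(n - k + 1)


def tail_full(n: int, eps: float) -> float:
    """P(sup_{u in [0,1]} U_n(u) - u > eps) via the Birnbaum-Tingey formula (full interval only)."""
    if eps <= 0.0:
        return 1.0
    if eps >= 1.0:
        return 0.0
    total = 0.0
    for j in range(floor(n * (1.0 - eps)) + 1):
        base = 1.0 - eps - j / n
        if base <= 0.0:  # vanishing term, occurs when n(1 - eps) is an integer
            continue
        total += exp(log_comb(n, j) + (n - j) * log(base) + (j - 1) * log(eps + j / n))
    return eps * total


def radius_full(n: int, delta: float, tol: float = 1e-10) -> float:
    """Smallest eps (up to tol) with tail_full(n, eps) <= delta; bisection works since the tail decreases in eps."""
    lo, hi = 0.0, 1.0  # invariant: tail_full(lo) > delta >= tail_full(hi)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if tail_full(n, mid) > delta:
            lo = mid
        else:
            hi = mid
    return hi


def massart_radius(n: int, delta: float) -> float:
    """The bound sqrt(ln(1/delta) / (2n)) from the Massart inequality."""
    return sqrt(log(1.0 / delta) / (2.0 * n))
```

For \(\delta\leqslant 1/2\), the one-sided Massart inequality guarantees `radius_full(n, delta) <= massart_radius(n, delta)`, so the exact radius can only improve on the classical one. Working in log space avoids overflowing the binomial coefficients for large `n`.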

Notes

The superscript \(r\) stands for rewards, and \(\ell\) for losses.

REFERENCES

C. Acerbi, ‘‘Spectral measures of risk: A coherent representation of subjective risk aversion,’’ Journal of Banking and Finance 26 (7), 1505–1518 (2002).

P. Billingsley, Convergence of probability measures (John Wiley and Sons, 1968).

O. Cappé, A. Garivier, O.-A. Maillard, R. Munos, and G. Stoltz, ‘‘Kullback–Leibler Upper Confidence Bounds For Optimal Sequential Allocation,’’ Annals of Statistics 41 (3), 1516–1541 (2013).

M. D. Donsker, ‘‘Justification and Extension of Doob’s Heuristic Approach to the Kolmogorov-Smirnov Theorems,’’ The Annals of Mathematical Statistics 23 (2), 277–281 (1952).

R. M. Dudley, Uniform central limit theorems, Vol. 142 (Cambridge University Press, 1999).

A. Dvoretzky, J. Kiefer, and J. Wolfowitz, ‘‘Asymptotic minimax character of the sample distribution function and of the classical multinomial estimator,’’ The Annals of Mathematical Statistics, 642–669 (1956).

B. R. James, ‘‘A functional law of the iterated logarithm for weighted empirical distributions,’’ The Annals of Probability 3 (5), 762–772 (1975).

A. Kolmogorov, ‘‘Sulla determinazione empirica di una legge di distribuzione,’’ Giornale dell’Istituto Italiano degli Attuari 4, 83–91 (1933).

A. N. Kolmogorov, ‘‘On Skorokhod convergence,’’ Theory of Probability and Its Applications 1 (2), 215–222 (1956).

B. B. Mandelbrot, ‘‘The variation of certain speculative prices,’’ in Fractals and Scaling in Finance, pp. 371–418, Springer (1997).

P. Massart, ‘‘The tight constant in the Dvoretzky–Kiefer–Wolfowitz inequality,’’ The Annals of Probability, 1269–1283 (1990).

D. Pollard, Convergence of stochastic processes (Springer Science and Business Media, 1984).

R. T. Rockafellar and S. Uryasev, ‘‘Optimization of conditional value-at-risk,’’ Journal of Risk 2, 21–42 (2000).

G. R. Shorack and J. A. Wellner, Empirical processes with applications to statistics (Society for Industrial and Applied Mathematics, 2009).

N. V. Smirnov, ‘‘Approximate laws of distribution of random variables from empirical data,’’ Uspekhi Matematicheskikh Nauk 10, 179–206 (1944).

P. Thomas and E. Learned-Miller, ‘‘Concentration inequalities for conditional value at risk,’’ International Conference on Machine Learning, pp. 6225–6233 (2019).

ACKNOWLEDGMENTS

This work has been supported by CPER Nord-Pas-de-Calais/FEDER DATA advanced data science and technologies 2015-2020, the French Ministry of Higher Education and Research, Inria, the French Agence Nationale de la Recherche (ANR) under grant ANR-16-CE40-0002 (the BADASS project), the MEL, the I-Site ULNE regarding project R-PILOTE-19-004-APPRENF, and the Inria A.Ex. SR4SG project.

Appendices

PROOFS OF THE MAIN RESULTS

Proof of Lemma 2. We let \([n]=\{1,\dots,n\}\) for all \(n\in\mathbb{N}_{\star}\).

Left tail, step 1. Let us first recall the following remark by Smirnov [15, p. 10], showing that if \(u_{(1)},\dots,u_{(n)}\) denote the order statistics of \(n\) samples drawn from the uniform distribution, then

When restricting the supremum to \([\alpha,\beta]\), this equality needs to be modified. First of all, it holds that

Hence, we deduce that

where we introduced the term \(u_{(n+1)}=1\) in the last line and used that \(\varepsilon+\alpha\geqslant 0\) to exclude the term \(u_{(0)}=0\). Using the distribution of the order statistics, we deduce that

Left tail, step 2. Following [15], we introduce the notation \(t_{k}=u_{(n-k+1)}\), the constant \(\gamma_{k}=(n-k+1)/n-\varepsilon\) (non-negative for \(k\leqslant n-\lfloor\varepsilon n\rfloor\)), as well as \(\beta_{k}=\min(\gamma_{k},\beta)\). We thus have the following rewriting

which further reduces to \([0,1]\) when \(\gamma_{k}\leqslant\alpha\). We let \(n_{\alpha,\varepsilon}=n(1-\varepsilon-\alpha)\) and \(\overline{n}_{\alpha,\varepsilon}=\lceil n_{\alpha,\varepsilon}\rceil\), and remark that \(\gamma_{k}\leqslant\alpha\) iff \(k\geqslant n_{\alpha,\varepsilon}+1\). In particular, \(\gamma_{k}\leqslant\alpha\) as soon as \(k>\overline{n}_{\alpha,\varepsilon}\). Let us also note that \(n-k+1>n(\varepsilon+\alpha)\) iff \(k<n_{\alpha,\varepsilon}+1\), and that \(\overline{n}_{\alpha,\varepsilon}<n_{\alpha,\varepsilon}+1\). This means in particular that if \(k\geqslant\overline{n}_{\alpha,\varepsilon}+1\), then both conditions in the integral vanish (so that the corresponding indicators equal \(1\) in the integral)

where we integrated out all terms for \(k>\overline{n}_{\alpha,\varepsilon}\) into the shorthand notation \(J_{k}(x)=\frac{x^{k}}{k!}\). In the integral, we note that if \(t_{k}\leqslant\alpha\), then so are all the terms \(t_{k^{\prime}}\) for \(k^{\prime}\geqslant k\).
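Step 1 reduces the supremum to a maximum over finitely many candidates. A small sketch, assuming samples in \([0,1]\) and a right-continuous empirical CDF: on \([\alpha,\beta]\), \(U_{n}(u)-u\) decreases between jumps, so the supremum is reached either at \(u=\alpha\) or at an order statistic \(u_{(k)}\in(\alpha,\beta]\), where it equals \(k/n-u_{(k)}\). With \([\alpha,\beta]=[0,1]\), this recovers Smirnov's maximum over \(k\). The helper names are hypothetical, and the brute-force grid only serves as a check.

```python
import bisect
import random


def local_sup_left(samples, a, b):
    """sup over u in [a, b] of U_n(u) - u, computed exactly from the order statistics."""
    xs = sorted(samples)
    n = len(xs)
    best = bisect.bisect_right(xs, a) / n - a  # value at the left end u = a
    for k, x in enumerate(xs, start=1):
        if a < x <= b:
            best = max(best, k / n - x)  # value right at the jump u = u_(k)
    return best


def grid_sup_left(samples, a, b, grid=2001):
    """Brute-force lower approximation of the same supremum on a regular grid of [a, b]."""
    n = len(samples)
    best = float("-inf")
    for i in range(grid):
        u = a + (b - a) * i / (grid - 1)
        best = max(best, sum(x <= u for x in samples) / n - u)
    return best
```

The grid value can only underestimate the supremum, and by at most one grid step, since moving right from an optimal jump by less than a step loses at most that step.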

We now proceed with integration. Starting with \(t_{1}\), we see that if \(t_{1}\leqslant\alpha\), then this implies \(\alpha\in[t_{1},t_{0}]\). Hence, the corresponding terms are \(0\), and it remains to integrate \(t_{1}\) on \((\alpha,1]\), that is on \([\beta_{1},1]\).

Regarding \(t_{2}\), if \(t_{2}\leqslant\alpha<t_{1}\), this contradicts \(\alpha\notin[t_{2},t_{1}]\); hence it remains to integrate \(t_{2}\) on \((\alpha,1]\), that is, on \([\beta_{2},1]\). Proceeding similarly, for all \(k\leqslant\overline{n}_{\alpha,\varepsilon}\) we obtain that

In order to compute the multiple integral, similarly to [15], we make use of the following variant of the Taylor expansion

which, using the \(I_{k}\) notation, yields the following form

This is applied to the function \(f(x)=x^{n}\) with \(k=n-\overline{n}_{\alpha,\varepsilon}\). Indeed, we then get \(f^{(n-k)}(x)=\frac{n!x^{k}}{k!}=\frac{n!x^{n-\overline{n}_{\alpha,\varepsilon}}}{(n-\overline{n}_{\alpha,\varepsilon})!}=n!J_{n-\overline{n}_{\alpha,\varepsilon}}(x)\). This in turn yields

using the convention that \(I_{0}(x;\emptyset)=1\). This completes the proof regarding the left tail concentration.
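The Taylor-expansion step evaluates iterated integrals whose innermost factor is \(J_{r}(x)=x^{r}/r!\) and whose variables are ordered with lower limits such as \(\beta_{k}\). As a sketch, assuming the generic shape \(\int_{b_{1}}^{x}\mathrm{d}t_{1}\int_{b_{2}}^{t_{1}}\mathrm{d}t_{2}\cdots\int_{b_{m}}^{t_{m-1}}J_{r}(t_{m})\,\mathrm{d}t_{m}\) (not necessarily the exact form of the \(I_{k}\) notation), the code computes such integrals exactly with rational polynomial arithmetic. With all lower limits equal to \(0\), it returns \(J_{m+r}(x)\), which is the mechanism behind the shorthand \(J_{k}\). The function names are hypothetical.

```python
from fractions import Fraction
from math import factorial


def poly_eval(coeffs, x):
    """Evaluate sum_i coeffs[i] * x**i."""
    return sum(c * x**i for i, c in enumerate(coeffs))


def poly_integrate(coeffs, lower):
    """Coefficients of the polynomial t -> integral from `lower` to t of p(s) ds."""
    anti = [Fraction(0)] + [c / (i + 1) for i, c in enumerate(coeffs)]
    anti[0] -= poly_eval(anti, lower)  # enforce a zero value at t = lower
    return anti


def nested_integral(lower_bounds, x, r=0):
    """int_{b1}^{x} dt1 int_{b2}^{t1} dt2 ... int_{bm}^{t_{m-1}} J_r(t_m) dt_m, with J_r(t) = t^r / r!."""
    poly = [Fraction(0)] * r + [Fraction(1, factorial(r))]  # J_r as a polynomial
    for b in reversed(lower_bounds):  # integrate from the innermost variable outwards
        poly = poly_integrate(poly, Fraction(b))
    return poly_eval(poly, Fraction(x))
```

Exact fractions are used so that identities such as \(\int_{0}^{x}J_{r}(t)\,\mathrm{d}t=J_{r+1}(x)\) can be checked without rounding error.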

Right tail. We proceed similarly for the right tail. First, using our notation we note that

To be more precise, we let \((\eta_{k})_{k\in[n]}>0\) be arbitrarily small constants. We also let \(\eta_{0}<\min_{k\in[n]}\eta_{k}\) and define \(\overline{\eta}=\max_{k\in[n]}\eta_{k}\). We further introduce, for each \(k\in[n]\), \(u^{-}_{(k)}\) such that \(u_{(k)}-\eta_{k}=u^{-}_{(k)}<u_{(k)}\), and \(\beta^{-}\) such that \(\beta-\eta_{0}=\beta^{-}<\beta\). Then, we introduce the notation

Before proceeding, we note that \(\forall n\in\mathbb{N},\lim_{\overline{\eta}\to 0}\mathbb{P}(\min_{k\in[n]}u_{(k)}-u_{(k-1)}>\eta_{k})=1\). Indeed, it holds that

In the following, we use a construction similar to that of [9] for Skorokhod convergence. Note that on the event \(\Omega_{n}=\big{\{}\min_{k\in[n]}u_{(k)}-u_{(k-1)}>\eta_{k}\big{\}}\) (where \(u_{(0)}=0\)), we have the following rewriting

In the last line, we used that \(\beta^{-}=\beta-\eta_{0}\) and \(\eta_{0}<\min_{k\in[n]}\eta_{k}\) to rewrite \(\sum_{k=1}^{n}\mathbb{I}\{u_{(k)}\leqslant\beta^{-}\}\) in terms of the events \(u_{(k)}\leqslant\beta^{-}<u_{(k+1)}\). Then we shifted \(k\) by \(1\), and used the fact that \(\beta\leqslant 1\) implies \(1\geqslant\beta^{-}-\varepsilon\) in order to exclude the term \(u_{(n+1)}=1\).
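The claim \(\lim_{\overline{\eta}\to 0}\mathbb{P}(\Omega_{n})=1\) can be made quantitative with a classical fact on uniform spacings (a standard result, not taken from the paper): for \(n\) uniform samples with \(u_{(0)}=0\), \(\mathbb{P}(u_{(k)}-u_{(k-1)}>\eta_{k}\ \forall k\in[n])=(1-\sum_{k=1}^{n}\eta_{k})_{+}^{n}\), which tends to \(1\) as \(\overline{\eta}\to 0\). The sketch compares this closed form with a Monte Carlo estimate; the function names are hypothetical.

```python
import random


def prob_all_spacings_exceed(etas):
    """Closed form of P(u_(k) - u_(k-1) > eta_k for k = 1..n), with n = len(etas) and u_(0) = 0."""
    n = len(etas)
    return max(0.0, 1.0 - sum(etas)) ** n


def mc_prob_all_spacings_exceed(etas, trials=50_000, seed=0):
    """Monte Carlo estimate of the same probability from sorted uniform samples."""
    rng = random.Random(seed)
    n = len(etas)
    hits = 0
    for _ in range(trials):
        prev, ok = 0.0, True
        for x, eta in zip(sorted(rng.random() for _ in range(n)), etas):
            if x - prev <= eta:  # one spacing is too small: the event fails
                ok = False
                break
            prev = x
        hits += ok
    return hits / trials
```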

We let \(\tilde{\beta}=1-\beta\), \(\tilde{\alpha}=1-\alpha\), \(\tau_{k}=1-u_{(k)}\), and introduce for all \(k\) the constant \(\rho_{k}=1-\varepsilon-k/n\) (non-negative for all \(k\leqslant n(1-\varepsilon)\)) as well as \(\tilde{\alpha}_{k}=\min(\rho_{k-1},\tilde{\alpha})\). We also let \(\tilde{\beta}^{+}=1-\beta^{-}\). Finally, we write \(\tau_{k}<_{k}\tau_{k-1}\) if and only if \(\tau_{k}+\eta_{k}<\tau_{k-1}\). Using the distribution of the order statistics together with these notations, we then naturally study the quantity \(\lim_{\overline{\eta}\to 0}\mathbb{P}^{\eta}\Big{(}\sup_{u\in[\alpha,\beta]}U(u)-U_{n}(u)\leqslant\varepsilon\Big{)}\) where

Now, \([0,\tilde{\beta}]\cup[\min(\rho_{k-1},\tilde{\alpha}),1]\) reduces to \([0,1]\) when \(\rho_{k-1}\leqslant\tilde{\beta}\). We let \(n_{\beta,\varepsilon}=n(\beta-\varepsilon)\) and \(\overline{n}_{\beta,\varepsilon}=\lceil n_{\beta,\varepsilon}\rceil\), and remark that \(\rho_{k-1}\leqslant\tilde{\beta}\) iff \(k-1\geqslant n_{\beta,\varepsilon}\). We first deal with the case when \(n_{\beta,\varepsilon}\in\mathbb{N}\). In this situation, provided that \(\eta_{0}\) is sufficiently small, we have \(\overline{n}_{\beta^{-},\varepsilon}=\overline{n}_{\beta,\varepsilon}=n_{\beta,\varepsilon}\), and also \(k-1<n_{\beta^{-},\varepsilon}\) iff \(k\leqslant\overline{n}_{\beta^{-},\varepsilon}\). If \(k>\overline{n}_{\beta,\varepsilon}\), then \(\rho_{k-1}\leqslant\tilde{\beta}\) and both restrictions disappear in the integral. In the general situation when \(n_{\beta,\varepsilon}\notin\mathbb{N}\), we have \(\overline{n}_{\beta,\varepsilon}>n_{\beta,\varepsilon}\) and \(\overline{n}_{\beta^{-},\varepsilon}=\overline{n}_{\beta,\varepsilon}\). Also, \(k-1<n_{\beta^{-},\varepsilon}\) iff \(k\leqslant\overline{n}_{\beta^{-},\varepsilon}\). If \(k>\overline{n}_{\beta,\varepsilon}\), then \(\rho_{k-1}\leqslant\tilde{\beta}\) and again the restrictions disappear in the integral. We deduce that, provided that \(\overline{\eta}\) is sufficiently small,

where we integrated out all terms for \(k>\overline{n}_{\beta,\varepsilon}\) in the term \(J^{\eta}_{m}(x)\), that satisfies \(\lim_{\overline{\eta}\to 0}J^{\eta}_{m}(x)=\frac{x^{m}}{m!}\).

We now proceed with integration. Starting with \(\tau_{1}\), we see that if \(\tau_{1}\leqslant\tilde{\beta}\), then this implies \(\tilde{\beta}\in[\tau_{1},\tau_{0})\). The case when \(\tilde{\beta}^{+}\geqslant\tau_{0}=1\), that is \(\beta^{-}\leqslant 0\), is excluded by the assumption that \(\beta>0\). Hence, this in turn implies \(\tilde{\beta}^{+}\in[\tau_{1},\tau_{0})\), provided that \(\eta_{0}<\tau_{0}-\tilde{\beta}=\beta\). Since this event is excluded by the indicator function, the corresponding terms are \(0\), and it remains to integrate \(\tau_{1}\) on \((\tilde{\beta},1]\), that is, on \([\tilde{\alpha}_{1},1]\). Regarding \(\tau_{2}\), if \(\tau_{2}\leqslant\tilde{\beta}^{+}<\tau_{1}\), this contradicts \(\tilde{\beta}^{+}\notin[\tau_{2},\tau_{1})\); hence it remains to integrate \(\tau_{2}\) on \((\tilde{\beta}^{+},1]\), that is, on \([\tilde{\alpha}_{2},1]\). We proceed similarly for all \(k\leqslant\overline{n}_{\beta,\varepsilon}\). We obtain that, for \(\overline{\eta}\) sufficiently small,

Now, we remark that \(\lim_{\overline{\eta}\to 0}\mathbb{I}\bigg{\{}0\leqslant\tau_{\overline{n}_{\beta,\varepsilon}}<_{{\overline{n}_{\beta,\varepsilon}}}\dots\tau_{1}<_{1}1\bigg{\}}=\mathbb{I}\bigg{\{}0\leqslant\tau_{\overline{n}_{\beta,\varepsilon}}\leqslant\dots\tau_{1}\leqslant 1\bigg{\}}\), and so

In order to compute the multiple integral, we resort to a Taylor expansion as for the left tail, and deduce that

It remains to note that \(\lim_{\overline{\eta}\to 0}\mathbb{P}\Big{(}\sup^{\eta}_{u\in[\alpha,\beta]}U(u)-U_{n}(u)\leqslant\varepsilon\cap\Omega_{n}^{c}\Big{)}\leqslant\lim_{\overline{\eta}\to 0}\mathbb{P}\Big{(}\Omega_{n}^{c}\Big{)}=0\) and thus

This shows that the limit of \(\mathbb{P}\Big{(}\sup^{\eta}_{u\in[\alpha,\beta]}U(u)-U_{n}(u)\leqslant\varepsilon\Big{)}\) indeed exists, and hence gives the value of \(\mathbb{P}\Big{(}\sup_{u\in[\alpha,\beta]}U(u)-U_{n}(u)\leqslant\varepsilon\Big{)}\). \(\Box\)
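The \(\eta\)-construction above is needed because, for the right tail, the supremum is generally not attained. Indeed, \(u-U_{n}(u)\) increases between jumps, so on \([\alpha,\beta]\) it approaches \(u_{(k)}-(k-1)/n\) as \(u\uparrow u_{(k)}\), or is attained at \(u=\beta\). Evaluating exactly at \(u_{(k)}\) would give \(u_{(k)}-k/n\), which is \(1/n\) too small. The sketch below assumes samples without ties (the continuous case), and its names are hypothetical.

```python
import bisect
import random


def local_sup_right(samples, a, b):
    """sup over u in [a, b] of u - U_n(u): a left limit at some u_(k) in (a, b], or the value at b."""
    xs = sorted(samples)
    n = len(xs)
    best = b - bisect.bisect_right(xs, b) / n  # value at the right end u = b
    for k, x in enumerate(xs, start=1):
        if a < x <= b:
            best = max(best, x - (k - 1) / n)  # left limit as u increases to u_(k)
    return best


def grid_sup_right(samples, a, b, grid=2001):
    """Brute-force lower approximation of the same supremum on a regular grid of [a, b]."""
    n = len(samples)
    best = float("-inf")
    for i in range(grid):
        u = a + (b - a) * i / (grid - 1)
        best = max(best, u - sum(x <= u for x in samples) / n)
    return best
```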

Proof of Theorem 3. We now compute for \(\ell\geqslant 1\) the quantity

We further let \(n_{\beta}=n(1-\beta-\varepsilon)\), \(\underline{n}_{\beta}=\lfloor n_{\beta}\rfloor\) and note that \(\beta_{k}=\min((n-k+1)/n-\varepsilon,\beta)\) is equal to \(\beta\) iff \(k\leqslant n_{\beta}+1\). Also, \(\beta_{k}=\beta\) as soon as \(k\leqslant\underline{n}_{\beta}+1\). Last, \(\gamma_{k}=(n-k+1)/n-\varepsilon\).

Case 1. When \(n_{\beta}<0\), then \(\beta_{k}=\gamma_{k}\) for all \(k\geqslant 1\). In this case, since \(\gamma_{k}-\gamma_{k-1}=-1/n\), we deduce that

and hence since \(\gamma_{1}=1-\varepsilon\) and \(\gamma_{\ell+1}=1-\ell/n-\varepsilon\),

Case 2. We now consider the general case when \(\underline{n}_{\beta}\geqslant 0\). For instance, if \(n_{\beta}\geqslant 0\) but \(\underline{n}_{\beta}=0\) (that is, \(0\leqslant n_{\beta}<1\)), then \(\beta_{k}=\gamma_{k}\) for all \(k\geqslant 2\), while \(\beta_{1}=\beta\). Hence, we deduce that

Likewise, when \(\underline{n}_{\beta}=1\), then we deduce that \(\beta_{k}=\gamma_{k}\) for all \(k\geqslant 3\), while \(\beta_{1}=\beta_{2}=\beta\), and so

More generally, for a generic \(\underline{n}_{\beta}\geqslant 0\), we deduce (using the convention that \(t_{0}=1\)) that

Further, since \(\gamma_{\ell}-\gamma_{\ell-1}=-1/n\) for all \(\ell\), and introducing \(\ell_{\beta}=\ell-\underline{n}_{\beta}-1\) we also have (see [15])

where in the second line, we also introduced \(C_{\ell_{\beta}}=\beta-\gamma_{\underline{n}_{\beta}+2}+\ell_{\beta}/n=(\beta+\varepsilon)-(n-\ell)/n\). In particular, \(C_{\ell_{\beta}}=(\ell-n_{\beta})/n>0\) for \(\ell>\underline{n}_{\beta}+1\). From this expression, we deduce that if \(\ell>\underline{n}_{\beta}+1\), then

In order to compute both terms, we use the following inequality for given \(k,\ell,C\),

Hence, we deduce that

After reorganizing the terms, and remarking that \(C_{\ell_{\beta}}=\beta-1+\varepsilon+\ell/n=(\ell-n_{\beta})/n\), we obtain that if \(\ell>\underline{n}_{\beta}+1\), then

Combining all steps together, we deduce that if \(\underline{n}_{\beta}\geqslant 0\) and \(\lfloor n_{\beta}\rfloor+1\leqslant\overline{n}_{\alpha,\varepsilon}-1\), then

where \(\beta_{k}=\min((n-k+1)/n-\varepsilon,\beta)\), \(\overline{n}_{\alpha,\varepsilon}=\lceil n(1-\alpha-\varepsilon)\rceil\) and \(\underline{n}_{\beta}=\lfloor n(1-\beta-\varepsilon)\rfloor\). Introducing the term \(m_{\beta}=\min\{\lfloor n_{\beta}\rfloor+1,\overline{n}_{\alpha,\varepsilon}-1\}\), we get more generally, when \(n_{\beta}>0\),

\(\Box\)
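The proof of Theorem 3 relies on index bookkeeping: \(\beta_{k}=\beta\) exactly when \(k\leqslant n_{\beta}+1\), and \(C_{\ell_{\beta}}=\beta-\gamma_{\underline{n}_{\beta}+2}+\ell_{\beta}/n=(\ell-n_{\beta})/n>0\) for \(\ell>\underline{n}_{\beta}+1\). The sketch below checks these identities in exact rational arithmetic for a few parameter choices. It only verifies the stated equivalences and does not evaluate the final probability. The function names are hypothetical.

```python
from fractions import Fraction
from math import ceil, floor


def gamma(n, k, eps):
    """gamma_k = (n - k + 1)/n - eps."""
    return Fraction(n - k + 1, n) - eps


def beta_k(n, k, beta, eps):
    """beta_k = min(gamma_k, beta)."""
    return min(gamma(n, k, eps), beta)


def theorem3_indices(n, alpha, beta, eps):
    """Return n_beta, its floor, the ceiling bar n_{alpha,eps}, and m_beta."""
    n_beta = n * (1 - beta - eps)
    n_beta_low = floor(n_beta)
    n_alpha_bar = ceil(n * (1 - alpha - eps))
    m_beta = min(n_beta_low + 1, n_alpha_bar - 1)
    return n_beta, n_beta_low, n_alpha_bar, m_beta


def check_identities(n, alpha, beta, eps):
    """Assert the equivalences used in the proof; return True if they all hold."""
    n_beta, n_beta_low, _, _ = theorem3_indices(n, alpha, beta, eps)
    for k in range(1, n + 1):
        assert (beta_k(n, k, beta, eps) == beta) == (k <= n_beta + 1)
    for ell in range(n_beta_low + 2, n + 1):
        c = beta - gamma(n, n_beta_low + 2, eps) + Fraction(ell - n_beta_low - 1, n)
        assert c == (beta + eps) - Fraction(n - ell, n) == (ell - n_beta) / n
        assert c > 0
    return True
```

Fractions are used on purpose: the equivalence \(\beta_{k}=\beta\iff k\leqslant n_{\beta}+1\) is tight when \(n_{\beta}\) is an integer, and floating-point rounding could flip it.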

Proof of Lemma 4. We let \(\tilde{\beta}=1-\beta\), \(\tilde{\alpha}=1-\alpha\), \(\tau_{k}=1-u_{(k)}\), and consider for all \(k\) the constant \(\rho_{k}=1-\varepsilon-k/n\) (non-negative for all \(k\leqslant n(1-\varepsilon)\)). We recall that \(\tilde{\alpha}_{k}=\min(\rho_{k-1},\tilde{\alpha})\). We let \(n_{\beta,\varepsilon}=n(\beta-\varepsilon)\) and \(\overline{n}_{\beta,\varepsilon}=\lceil n_{\beta,\varepsilon}\rceil\), and remark that \(\rho_{k}\leqslant\tilde{\beta}\) iff \(k\geqslant n_{\beta,\varepsilon}\). Also, \(\rho_{k}\leqslant\tilde{\beta}\) as soon as \(k-1>\overline{n}_{\beta,\varepsilon}\).
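The change of variables \(\tau_{k}=1-u_{(k)}\), \(\tilde{\alpha}=1-\alpha\), \(\tilde{\beta}=1-\beta\) is what makes this proof mirror that of Theorem 3. Pathwise, and for samples without ties, \(\sup_{u\in[\alpha,\beta]}u-U_{n}(u)\) computed on \((u_{i})\) coincides with \(\sup_{v\in[\tilde{\beta},\tilde{\alpha}]}V_{n}(v)-v\) computed on the reflected sample \(v_{i}=1-u_{i}\). Since the reflected sample is again uniform, the two suprema have the same law. A minimal check, with hypothetical helper names:

```python
import bisect
import random


def sup_plus(xs, a, b):
    """sup over u in [a, b] of U_n(u) - u for sorted xs: value at a, or at a jump in (a, b]."""
    n = len(xs)
    vals = [bisect.bisect_right(xs, a) / n - a]
    vals += [k / n - x for k, x in enumerate(xs, start=1) if a < x <= b]
    return max(vals)


def sup_minus(xs, a, b):
    """sup over u in [a, b] of u - U_n(u) for sorted xs: value at b, or a left limit at a jump in (a, b]."""
    n = len(xs)
    vals = [b - bisect.bisect_right(xs, b) / n]
    vals += [x - (k - 1) / n for k, x in enumerate(xs, start=1) if a < x <= b]
    return max(vals)
```

The two candidate sets differ only when a sample falls exactly on \(\alpha\) or \(\beta\), an event of probability zero for continuous samples.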

We now compute the quantity

where \(\tilde{\alpha}_{k}=\min(\rho_{k-1},\tilde{\alpha})\). We further let \(n_{\tilde{\alpha}}=n(\alpha-\varepsilon)\) and \(\underline{n}_{\tilde{\alpha}}=\lfloor n_{\tilde{\alpha}}\rfloor\), and note that \(\tilde{\alpha}_{k}=\min(1-\varepsilon-(k-1)/n,\tilde{\alpha})\) is equal to \(\tilde{\alpha}\) iff \(k\leqslant n_{\tilde{\alpha}}+1\). Also, \(\tilde{\alpha}_{k}=\tilde{\alpha}\) as soon as \(k\leqslant\underline{n}_{\tilde{\alpha}}+1\).

Case 1. When \(n_{\tilde{\alpha}}<0\), then \(\tilde{\alpha}_{k}=\rho_{k-1}\) for all \(k\geqslant 1\). In this case, since \(\rho_{k}-\rho_{k-1}=-1/n\), we deduce that

and hence, since \(\rho_{0}=1-\varepsilon\) and \(\rho_{\ell}=1-\ell/n-\varepsilon\), we obtain

Case 2. We now consider the general case when \(\underline{n}_{\tilde{\alpha}}\geqslant 0\). For instance, if \(n_{\tilde{\alpha}}\geqslant 0\) but \(\underline{n}_{\tilde{\alpha}}=0\) (that is, \(0\leqslant n_{\tilde{\alpha}}<1\)), then \(\tilde{\alpha}_{k}=\rho_{k-1}\) for all \(k\geqslant 2\), while \(\tilde{\alpha}_{1}=\tilde{\alpha}\). Hence, we deduce that

Likewise, when \(\underline{n}_{\tilde{\alpha}}=1\), then we deduce that \(\tilde{\alpha}_{k}=\rho_{k-1}\) for all \(k\geqslant 3\), while \(\tilde{\alpha}_{1}=\tilde{\alpha}_{2}=\tilde{\alpha}\), and so

More generally, for a generic \(\underline{n}_{\tilde{\alpha}}\geqslant 0\), we deduce (using the convention that \(t_{0}=1\)) that

Further, since \(\rho_{\ell}-\rho_{\ell-1}=-1/n\) for all \(\ell\), and introducing \(\ell_{\tilde{\alpha}}=\ell-\underline{n}_{\tilde{\alpha}}-1\) we also have (see [15])

where in the second line, we also introduced \(C_{\ell_{\tilde{\alpha}}}={\tilde{\alpha}}-\rho_{\underline{n}_{\tilde{\alpha}}+1}+\ell_{\tilde{\alpha}}/n=(1-\alpha+\varepsilon)-(n-\ell)/n\).

In particular, it holds that \(C_{\ell_{\tilde{\alpha}}}=(\ell-n_{\tilde{\alpha}})/n>0\) for \(\ell>\underline{n}_{\tilde{\alpha}}+1\). From this expression, we deduce that if \(\ell>\underline{n}_{\tilde{\alpha}}+1\), then

Hence, we deduce that

where we used that \(\ell_{\tilde{\alpha}}+\underline{n}_{\tilde{\alpha}}+1=\ell\). After reorganizing the terms, and remarking that \(C_{\ell_{\tilde{\alpha}}}=\varepsilon-\alpha+\ell/n=(\ell-n_{\tilde{\alpha}})/n\), we obtain that if \(\ell>\underline{n}_{\tilde{\alpha}}+1\), then

Combining all steps together, we deduce that if \(\underline{n}_{\tilde{\alpha}}\geqslant 0\) and \(\lfloor n_{\tilde{\alpha}}\rfloor+1\leqslant\overline{n}_{\beta,\varepsilon}-1\), then

where \({\tilde{\alpha}}_{k}=\min((n-(k-1))/n-\varepsilon,\tilde{\alpha})\), \(\overline{n}_{\beta,\varepsilon}=\lceil n(\beta-\varepsilon)\rceil\) and \(\underline{n}_{\tilde{\alpha}}=\lfloor n(\alpha-\varepsilon)\rfloor\). Introducing the term \(m_{\beta}=\min\{\lfloor n_{\tilde{\alpha}}\rfloor+1,\overline{n}_{\beta,\varepsilon}-1\}\), we get more generally, when \(n_{\tilde{\alpha}}>0\),

\(\Box\)

MONTE CARLO SIMULATIONS OF THE CONFIDENCE BOUNDS
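As a hedged illustration of how such simulations can be organized (our assumption, not the protocol behind the original plots), the sketch below estimates the \((1-\delta)\)-quantile of \(\sup_{u\in[a,b]}U_{n}(u)-U(u)\) by Monte Carlo. It does so for a local interval and for the full interval on the same samples, and compares both with the Massart radius \(\sqrt{\ln(1/\delta)/(2n)}\). Names and default sizes are hypothetical.

```python
import bisect
import random
from math import log, sqrt


def sup_plus(xs, a, b):
    """sup over u in [a, b] of U_n(u) - u for sorted samples xs (value at a, or at a jump in (a, b])."""
    n = len(xs)
    vals = [bisect.bisect_right(xs, a) / n - a]
    vals += [k / n - x for k, x in enumerate(xs, start=1) if a < x <= b]
    return max(vals)


def massart_radius(n, delta):
    """The bound sqrt(ln(1/delta) / (2n))."""
    return sqrt(log(1.0 / delta) / (2.0 * n))


def mc_quantiles(n, a, b, delta, trials=3000, seed=0):
    """Empirical (1 - delta)-quantiles of the local sup on [a, b] and of the full sup on [0, 1]."""
    rng = random.Random(seed)
    local, full = [], []
    for _ in range(trials):
        xs = sorted(rng.random() for _ in range(n))
        local.append(sup_plus(xs, a, b))
        full.append(sup_plus(xs, 0.0, 1.0))  # same samples, so local <= full pathwise
    local.sort()
    full.sort()
    idx = min(trials - 1, int((1.0 - delta) * trials))
    return local[idx], full[idx]
```

Reusing the same samples for both suprema makes the local quantile pathwise comparable to the global one, so any gap between them reflects the sub-interval and not simulation noise.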

TECHNICAL DETAILS REGARDING THE CVAR

Proposition 7 is a consequence of the following more general results.

Proposition 17 (conditional value at risk). Any solution \(x^{\star}\) to the following problem

must satisfy \(1-\alpha\in[F(x^{\star})-\mathbb{P}(X=x^{\star}),F(x^{\star})]\) . Further, it holds

Proof of Proposition 17. Let us introduce the function \(H:x\mapsto x+\frac{1}{\alpha}\mathbb{E}[\max(X-x,0)]\). This is a convex function. Let \(\partial H(x)\) denote its subdifferential at point \(x\). In particular, for \(y\in\partial H(x)\), we must have \(\forall x^{\prime},H(x^{\prime})\geqslant H(x)+y(x^{\prime}-x)\), and \(x\) is a minimum of \(H\) if \(0\in\partial H(x)\). Using Minkowski set notation, we first have

hence we focus on computing \(\partial\mathbb{E}[(X-x)\mathbb{I}\{X>x\}]\). To this end, we look at the \(y\) such that

Remarking that if \(x>x^{\prime}\), then \(\mathbb{I}\{X>x^{\prime}\}-\mathbb{I}\{X>x\}=\mathbb{I}\{X\in(x^{\prime},x]\}\), while if \(x^{\prime}>x\) then \(\mathbb{I}\{X>x^{\prime}\}-\mathbb{I}\{X>x\}=-\mathbb{I}\{X\in(x,x^{\prime}]\}\), and reorganizing the terms, this means we must have

Further, note that if \(x^{\prime}>x\), then \(\frac{(X-x^{\prime})}{x^{\prime}-x}\mathbb{I}\{X\in(x,x^{\prime}]\}\in(-\mathbb{I}\{X\in(x,x^{\prime}]\},0]\), while if \(x>x^{\prime}\), then \(\frac{(X-x^{\prime})}{x^{\prime}-x}\mathbb{I}\{X\in(x^{\prime},x]\}\in[-\mathbb{I}\{X\in(x^{\prime},x]\},0)\). Hence, we deduce that such \(y\) must satisfy

Hence, \(-\mathbb{P}(X>x)\geqslant y\geqslant-\mathbb{P}(X\geqslant x)\), so that \(\partial\mathbb{E}[(X-x)\mathbb{I}\{X>x\}]\subset[-\mathbb{P}(X\geqslant x),-\mathbb{P}(X>x)]=[F(x)-1-\mathbb{P}(X=x),F(x)-1]\), from which we deduce that

This means that a minimum \(x^{\star}\) of \(H\) should at least satisfy that \(1-\alpha\in[F(x^{\star})-\mathbb{P}(X=x^{\star}),F(x^{\star})]\). Finally, the value of the optimization is given by

\(\Box\)
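Proposition 17 can be checked on an empirical distribution. Assuming \(n\) samples and \(\alpha n\) an integer, the sketch below minimizes \(H(x)=x+\frac{1}{\alpha}\mathbb{E}[\max(X-x,0)]\) over the sample points. This restriction is legitimate because \(H\) is convex and piecewise linear with kinks at the samples, so its minimum is attained at one of them. The code then compares the value with the average of the \(\alpha n\) largest samples and verifies the condition \(1-\alpha\in[F(x^{\star})-\mathbb{P}(X=x^{\star}),F(x^{\star})]\). The names are hypothetical.

```python
import random


def ru_objective(xs, x, alpha):
    """H(x) = x + E[max(X - x, 0)] / alpha under the empirical distribution of xs."""
    return x + sum(max(v - x, 0.0) for v in xs) / (alpha * len(xs))


def cvar_upper(xs, alpha):
    """Return (min H, a minimizer), searching only over the kinks of H (the sample points)."""
    x_star = min(xs, key=lambda x: ru_objective(xs, x, alpha))
    return ru_objective(xs, x_star, alpha), x_star
```

When \(\alpha n\) is an integer, \(H\) is flat between the two order statistics that bracket level \(1-\alpha\), so either one may be returned; both satisfy the first-order condition.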

Proposition 18 (expected shortfall). Any solution \(x^{\star}\) to the following problem

must satisfy \(\alpha\in[F(x^{\star})-\mathbb{P}(X=x^{\star}),F(x^{\star})]\) . Further, it holds

Proof of Proposition 18. Let us introduce the function \(H:x\mapsto\frac{1}{\alpha}\mathbb{E}[\min(X-x,0)]+x\). This is a concave function. Let \(\partial H(x)\) denote its superdifferential at point \(x\). In particular, for \(y\in\partial H(x)\), we must have \(\forall x^{\prime},H(x^{\prime})\leqslant H(x)+y(x^{\prime}-x)\), and \(x\) is a maximum of \(H\) if \(0\in\partial H(x)\). Using Minkowski set notation, we first have

hence we focus on computing \(\partial\mathbb{E}[(X-x)\mathbb{I}\{X<x\}]\). To this end, we look at the \(y\) such that

i.e.

Remarking that if \(x>x^{\prime}\), then \(\mathbb{I}\{X<x^{\prime}\}-\mathbb{I}\{X<x\}=-\mathbb{I}\{X\in[x^{\prime},x)\}\), while if \(x^{\prime}>x\) then \(\mathbb{I}\{X<x^{\prime}\}-\mathbb{I}\{X<x\}=\mathbb{I}\{X\in[x,x^{\prime})\}\), and reorganizing the terms, this means we must have

Further, note that if \(x^{\prime}>x\), then \(\frac{(X-x^{\prime})}{x^{\prime}-x}\mathbb{I}\{X\in[x,x^{\prime})\}\in[-\mathbb{I}\{X\in[x,x^{\prime})\},0)\), while if \(x>x^{\prime}\), then \(\frac{(X-x^{\prime})}{x^{\prime}-x}\mathbb{I}\{X\in[x^{\prime},x)\}\in(-\mathbb{I}\{X\in[x^{\prime},x)\},0]\). Hence, we deduce that such \(y\) must satisfy

Hence, \(-\mathbb{P}(X\leqslant x)\leqslant y\leqslant-\mathbb{P}(X<x)\), so that \(\partial\mathbb{E}[(X-x)\mathbb{I}\{X<x\}]\subset[-\mathbb{P}(X\leqslant x),-\mathbb{P}(X<x)]=[-F(x),-F(x)+\mathbb{P}(X=x)]\), from which we deduce that

This means that a maximum \(x^{\star}\) of \(H\) must at least satisfy \(\alpha\in[F(x^{\star})-\mathbb{P}(X=x^{\star}),F(x^{\star})]\). Finally, the value of the optimization is given by

\(\Box\)
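Proposition 18 also covers distributions with atoms, where the first-order condition only places \(\alpha\) inside the jump of \(F\). The sketch below assumes a finitely supported distribution with increasingly sorted atoms. It maximizes the concave function \(H(x)=x+\frac{1}{\alpha}\mathbb{E}[\min(X-x,0)]\) over the atoms, compares the value with the lower-tail average \(\frac{1}{\alpha}\int_{0}^{\alpha}F^{-1}(u)\,\mathrm{d}u\), and checks \(\alpha\in[F(x^{\star})-\mathbb{P}(X=x^{\star}),F(x^{\star})]\). The example distribution and the function names are hypothetical.

```python
from fractions import Fraction


def es_objective(values, probs, x, alpha):
    """H(x) = x + E[min(X - x, 0)] / alpha for a finitely supported X."""
    return x + sum(p * min(v - x, 0) for v, p in zip(values, probs)) / alpha


def lower_tail_mean(values, probs, alpha):
    """(1/alpha) * integral over [0, alpha] of the quantile function, for increasingly sorted atoms."""
    acc, mass = Fraction(0), Fraction(0)
    for v, p in zip(values, probs):
        take = min(p, alpha - mass)  # part of this atom lying below level alpha
        if take <= 0:
            break
        acc += take * v
        mass += take
    return acc / alpha
```

Exact fractions make the equality between the optimization form and the integrated form testable without tolerances.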

Proposition 19 (integrated and optimization forms). Let \(X\) be a real-valued random variable with distribution \(\nu\) and CDF \(F\). Let \(a,b\in\overline{\mathbb{R}}\) be such that \(\mathbb{P}_{\nu}(a\leqslant X\leqslant b)=1\). Let \(\alpha\in[0,1]\) and \(x^{\star}\) be any solution to the optimization problem \(\mathsf{CVaR}_{1-\alpha}(\nu)\). Let \((\chi_{i})_{i\in\mathbb{Z}}\) denote the discontinuity points of \(F\) (empty when \(F\) is continuous), and let \(\kappa=1-\alpha\). Then, if \(a\geqslant 0\), the following rewriting holds

In particular, if \(X\) is continuous, \(a\geqslant 0\) and \(b<\infty\), then

Proof of Proposition 19. Indeed, we first have that

Now, if \(a\geqslant 0\), then \(Y=X\mathbb{I}\{X>x^{\star}\}\) is a non-negative random variable, hence we can use the following rewriting \(\mathbb{E}[Y]=\int\limits_{0}^{b}\mathbb{P}(Y\geqslant y)dy=a+\int\limits_{a}^{b}\mathbb{P}(Y\geqslant y)dy\). Hence,

where the last line follows from the monotonicity of \(F\). We conclude by remarking that \(1-\max(F(x),F(x^{\star}))=\alpha-\max\big{(}F(x)-\kappa,F(x^{\star})-\kappa\big{)}\). \(\Box\)
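For a continuous distribution, one standard integrated form (a classical identity, not necessarily the exact expression stated in Proposition 19) follows from \(\mathbb{E}[\max(X-x^{\star},0)]=\int_{x^{\star}}^{b}(1-F(x))\,\mathrm{d}x\). It gives \(x^{\star}+\frac{1}{\alpha}\int_{x^{\star}}^{b}(1-F(x))\,\mathrm{d}x\) with \(F(x^{\star})=1-\alpha\). The sketch evaluates this by the trapezoid rule and checks it against two closed forms: \(1-\alpha/2\) for the uniform distribution on \([0,1]\), and \(\ln(1/\alpha)+1\) for the standard exponential (truncated at a large \(b\)). The names are hypothetical.

```python
from math import exp, log


def integrated_cvar(cdf, x_star, b, alpha, steps=100_000):
    """x* + (1/alpha) * integral from x* to b of (1 - F(x)) dx, via the trapezoid rule."""
    h = (b - x_star) / steps
    total = 0.5 * ((1.0 - cdf(x_star)) + (1.0 - cdf(b)))  # endpoint weights 1/2
    for i in range(1, steps):
        total += 1.0 - cdf(x_star + i * h)
    return x_star + h * total / alpha
```

For instance, `integrated_cvar(lambda x: 1 - exp(-x), log(20), 60.0, 0.05)` approximates \(\ln(20)+1\), the upper-tail CVaR of the standard exponential at level \(\alpha=0.05\).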

Proposition 20 (integrated and optimization forms). Let \(X\) be a real-valued random variable with distribution \(\nu\) and CDF \(F\). Let \(a,b\in\overline{\mathbb{R}}\) be such that \(\mathbb{P}_{\nu}(a\leqslant X\leqslant b)=1\). Let \(\alpha\in[0,1]\) and \(x^{\star}\) be any solution to the optimization problem \(\mathsf{CVaR}_{\alpha}(\nu)\). Let \((\chi_{i})_{i\in\mathbb{Z}}\) denote the discontinuity points of \(F\) (empty when \(F\) is continuous). Then, if \(a\geqslant 0\), the following rewriting holds

In particular, if \(X\) is continuous, \(a\geqslant 0\) and \(b<\infty\), then

Proof of Proposition 20. Indeed, we first have that

Now, if \(a\geqslant 0\), then \(Y=X\mathbb{I}\{X<x^{\star}\}\) is a non-negative random variable, hence we can use the following rewriting \(\mathbb{E}[Y]=\int_{0}^{b}\mathbb{P}(Y\geqslant y)dy=a+\int\limits_{a}^{b}\mathbb{P}(Y\geqslant y)dy\). Hence,

Hence, we deduce that

\(\Box\)

OTHER RESULT

We provide below for the interested reader some examples of functions \(g\) satisfying \(\sum\limits_{t=1}^{\infty}\frac{1}{g(t)}\leqslant 1\).

Lemma 21 (controlled sums). The following functions \(g\) satisfy \(\sum\limits_{t=1}^{\infty}\frac{1}{g(t)}\leqslant 1\).

- \(g(t)=3t^{3/2}\), \(g(t)=t(t+1)\), \(g(t)=\frac{(t+1)\ln^{2}(t+1)}{\ln(2)}\), \(g(t)=\frac{(t+2)\ln(t+2)(\ln\ln(t+2))^{2}}{\ln\ln(3)}\);
- for each \(m\in\mathbb{N}\), \(g_{m}(t)=C_{m}(\overline{\ln}^{\bigcirc{m}}(t))^{2}\prod_{i=0}^{m-1}\overline{\ln}^{\bigcirc{i}}(t)\), where \(f^{\bigcirc{m}}\) denotes the \(m\)-fold composition of the function \(f\), \(\overline{\ln}(t)=\max\{\ln(t),1\}\), and we introduced the constants \(C_{1}=2+\ln(2)+1/e\), \(C_{2}=2.03+\ln(e^{e}-1)\), as well as \(C_{m}=2+\ln\Big{(}\exp^{\bigcirc{m}}(1)\Big{)}\) for \(m\geqslant 3\).

Proof of Lemma 21. Note that \(\overline{\ln}^{\bigcirc{m}}(t)=\ln^{\bigcirc{m}}(t)\) for \(t\geqslant\exp^{\bigcirc{m}}(1)\), and equals \(1\) otherwise. Using that \(g(t)=C_{m}(\overline{\ln}^{\bigcirc{m}}(t))^{2}\times\prod_{i=0}^{m-1}\overline{\ln}^{\bigcirc{i}}(t)\), and that \(t\mapsto-\frac{1}{\ln^{\bigcirc{m}}(t)}\) has derivative \(t\mapsto\frac{1}{(\ln^{\bigcirc{m}}(t))^{2}\prod_{i=0}^{m-1}\ln^{\bigcirc{i}}(t)}\), we obtain

\(\Box\)
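The first two examples of Lemma 21 are easy to check numerically. For \(g(t)=t(t+1)\), the partial sums telescope to \(1-1/(N+1)\). For \(g(t)=3t^{3/2}\), a rigorous upper bound on the full series is the partial sum up to \(N\) plus the integral tail bound \(\sum_{t>N}\frac{1}{3t^{3/2}}\leqslant\frac{2}{3\sqrt{N}}\). Such functions are typically used to split a confidence level \(\delta\) into per-step levels \(\delta/g(t)\), so that a union bound over all \(t\) costs at most \(\delta\). The sketch below only checks the sums, and its names are hypothetical.

```python
from math import sqrt


def partial_sum(g, N):
    """sum_{t=1}^{N} 1/g(t)."""
    return sum(1.0 / g(t) for t in range(1, N + 1))


def series_upper_bound_3t32(N):
    """Upper bound on sum_{t>=1} 1/(3 t^{3/2}): partial sum plus the integral bound on the tail."""
    return partial_sum(lambda t: 3.0 * t**1.5, N) + 2.0 / (3.0 * sqrt(N))


def delta_schedule(delta, g, T):
    """Per-step levels delta/g(t), t = 1..T; their total stays below delta whenever sum 1/g <= 1."""
    return [delta / g(t) for t in range(1, T + 1)]
```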

About this article

Cite this article

Maillard, OA. Local Dvoretzky–Kiefer–Wolfowitz Confidence Bands. Math. Meth. Stat. 30, 16–46 (2021). https://doi.org/10.3103/S1066530721010038

DOI: https://doi.org/10.3103/S1066530721010038