Abstract

Objective

To use deep learning to segment the mandible and identify three-dimensional (3D) anatomical landmarks from cone-beam computed tomography (CBCT) images, and to compare and analyze the planes constructed from mandibular midline landmarks in order to find the best mandibular midsagittal plane (MMSP).

Methods

A total of 400 participants were randomly divided into a training group (n = 360) and a validation group (n = 40). Normal individuals formed the test group (n = 50). The PointRend deep learning algorithm segmented the mandible from CBCT images, and PoseNet accurately identified 27 anatomical landmarks. The 3D coordinates of 5 central landmarks and 2 pairs of bilateral landmarks were obtained for the test group. All 35 combinations of 3 midline landmarks (the 5 central landmarks plus the midpoints of the 2 bilateral pairs) were screened using the template mapping technique, and the asymmetry index (AI) was calculated for each of the 35 mirror planes. With the template mapping technique plane as the reference plane, the four planes with the smallest AIs were compared in terms of distance, volume difference, and similarity index to find the plane with the fewest errors.

Results

The mandible was segmented automatically in 10 ± 1.5 s with a Dice similarity coefficient of 0.98. The mean localization error for the 27 landmarks was 1.04 ± 0.28 mm. The plane formed by B (supramentale), Gn (gnathion), and F (mandibular foramen) should be used as the MMSP; its mean AI was 1.6 (min–max: 0.59–3.61). Compared with the reference plane, there was no significant difference in distance or volume (P > 0.05); however, the similarity index differed significantly (P < 0.01).

Conclusion

Deep learning can automatically segment the mandible and identify anatomical landmarks, meeting clinical demands in people without mandibular deformities. The most accurate MMSP was the B-Gn-F plane.


Introduction

Mandibular deviation is a common deformity in orthodontic clinics and may be caused by differences in the size and shape of the mandible or by positional deviation of the mandible [1, 2]. Because treatment differs according to the mechanism of deviation, accurate identification of that mechanism is crucial. Traditionally, the craniomaxillary median sagittal plane (CMSP) has been used as the sagittal plane for assessing mandibular symmetry; however, this plane has limitations when the position of the mandible changes, such as during rotation or translation [1, 3].

With the rapid development of computer and 3D reconstruction technology, 3D measurement methods have shown advantages over two-dimensional (2D) methods for asymmetry evaluation [2, 4]. Methods for craniofacial symmetry analysis are based mainly on anatomical landmarks, original-mirror alignment, or deep learning algorithms. Conventional anatomical landmark approaches were long the main option for evaluating craniofacial asymmetry. A reference plane is frequently constructed either as the plane bisecting the lines between bilateral landmarks or by connecting median landmarks, and it separates the two sides for 2D or 3D quantitative measurements. Several studies have used the plane constructed from B, G, and Me to explore the differences between the two sides of the mandible [5, 6], but the accuracy of this plane was not verified. A mandible-specific midsagittal reference plane has also been proposed for 3D mandibular analysis; however, that study was limited by its use of dental landmarks, which are unreliable at atrophic alveolar ridges [7].

In original-mirror alignment, the original and mirror models are overlapped and aligned through algorithmic 3D spatial coordinate transformations, and the best sagittal plane is then computed mathematically. The iterative closest point (ICP) approach is based on the least squares principle and iteratively matches closest points between the original and mirror images so as to minimize the distance between them [8, 9]. However, this method has several limitations: point clouds from asymmetric regions are included in the computation, which reduces the algorithm's accuracy [4, 8]. Like the ICP algorithm, the Procrustes analysis (PA) algorithm aligns the two models, but it selects the point cloud of the symmetric region and transforms the original model using the corresponding coordinates of the mirror model to achieve the best match [8, 9]. Nevertheless, both approaches still involve a subjective element of human intervention. Zhu et al. constructed a 3D facial median sagittal plane with the weighted Procrustes analysis (WPA) algorithm; the average angular error between the WPA plane and manually defined planes was 0.73° ± 0.50° [10]. The PRS-Net model, trained on the ShapeNet dataset, automatically generates symmetry planes for 3D point cloud data and has served as an important reference for the automated generation of the facial median sagittal plane [11].
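The mirror-and-align idea behind ICP and PA can be illustrated with a minimal NumPy sketch. It assumes bilateral point correspondences are already known (the PA setting); `pair_idx` is a hypothetical index array mapping each point to its contralateral counterpart, with midline points mapped to themselves. The cloud is mirrored across an arbitrary plane through its centroid, the least-squares rigid transform that superimposes the mirror onto the corresponding original points is found with the SVD-based Kabsch method, and the symmetry plane is fitted to the midpoints between each point and its aligned mirror image. This is an illustration, not the implementation used in any of the cited studies.

```python
import numpy as np


def kabsch(source, target):
    """Least-squares rotation R and translation t such that R @ s + t ~ target."""
    cs, ct = source.mean(axis=0), target.mean(axis=0)
    H = (source - cs).T @ (target - ct)          # 3x3 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))       # force a proper rotation (det = +1)
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    return R, ct - R @ cs


def mirror_align_plane(points, pair_idx):
    """Estimate a symmetry plane (unit normal, point on plane) by mirror-and-align.

    pair_idx[i] is the (hypothetical) index of the bilateral counterpart of
    point i; midline points map to themselves.
    """
    c = points.mean(axis=0)
    mirrored = points.copy()
    mirrored[:, 0] = 2.0 * c[0] - mirrored[:, 0]  # initial mirror across the plane x = c_x
    # The mirror image of point i should land on its counterpart pair_idx[i].
    R, t = kabsch(mirrored, points[pair_idx])
    aligned = mirrored @ R.T + t                   # aligned mirror image of every point
    midpoints = 0.5 * (points + aligned)           # these lie on the symmetry plane
    centroid = midpoints.mean(axis=0)
    _, _, Vt = np.linalg.svd(midpoints - centroid)
    return Vt[-1], centroid                        # least-variance direction = plane normal
```

Replacing the known `pair_idx` with a nearest-neighbour search, repeated until the alignment stops improving, would turn this single Procrustes step into a basic ICP loop; that is also where asymmetric regions can bias the match, as noted above.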

However, the use of deep learning techniques to construct sagittal planes is still in its infancy, and research on constructing the MMSP with deep learning algorithms is rare. The purpose of this study was to automatically determine the mandibular midsagittal plane by segmenting the mandible from CBCT images and accurately identifying its 3D landmarks with deep learning algorithms (Fig. 1), thus providing a simple and accurate method for the clinical assessment of mandibular symmetry.

Methods

Data collection

Four hundred subjects aged 18 to 45 years were enrolled from Anhui Medical University Stomatological Hospital between 2018 and 2022 and were randomly divided into a training group (n = 360) and a validation group (n = 40). Subjects with mandibular fractures or resorption, malformations, or incomplete CBCT images were excluded. The test group consisted of craniofacial 3D images of 50 morphologically normal individuals (28 females and 22 males; mean age 28.02 ± 8.03 years). Ethical approval was obtained from Anhui Medical University Stomatological Hospital (PJ: T2020010), and written informed consent was obtained from all participants.

The inclusion criteria for the test group were as follows: (1) age 18 to 45 years; (2) 0° < ANB angle < 4° with normal overjet and overbite; (3) no missing teeth except third molars; and (4) a complete CBCT scan covering the lower two-thirds of the maxillofacial region. The exclusion criteria were as follows: (1) systemic or genetic disorders that could influence mandibular growth; (2) cleft lip or palate, craniofacial syndromes, or deformities resulting from trauma or tumor; and (3) previous craniofacial surgery, facial fractures, or facial surgery.
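The random division into training and validation groups can be sketched as a seeded permutation of subject IDs. The paper does not report how the randomization was performed, so the function name `split_subjects` and the fixed seed are assumptions for illustration only.

```python
import numpy as np


def split_subjects(subject_ids, n_train=360, seed=0):
    """Randomly partition subject IDs into training and validation sets.

    Each subject ID ends up in exactly one of the two sets. The seed is an
    assumption chosen here for reproducibility.
    """
    ids = np.asarray(subject_ids)
    if not 0 < n_train < ids.size:
        raise ValueError("n_train must leave at least one validation subject")
    shuffled = np.random.default_rng(seed).permutation(ids)
    return shuffled[:n_train], shuffled[n_train:]


train_ids, val_ids = split_subjects(np.arange(400))
print(len(train_ids), len(val_ids))  # 360 40
```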

Image acquisition

All CBCT images were acquired with a Meyer CBCT unit (mDX-13STSP1A; Hefei, Anhui) at the following settings: 5 mA, 120 kV, exposure time of 20 s, scanning area of 23 × 18 cm, and nominal focal spot of 0.5 × 0.5 mm. The CBCT data were exported as Digital Imaging and Communications in Medicine (DICOM) files and imported into MyDentViewer (version 1.0; Meyer, Hefei, Anhui), dental image-processing software that supports image browsing, measurement annotation, and digital implant simulation, to reconstruct 3D images.

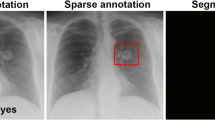

PointRend and PoseNet development

The PointRend architecture for automatic mandible segmentation from CBCT images is shown in Additional file 1: Figure S1. To avoid affecting mandibular measurements, the algorithm removes the crown and alveolar bone (see Additional file 1 for a detailed description). The 3D mandibular landmarks identified with the PoseNet algorithm are described in Additional file 2: Table S1. 3D images of the mandible and its landmarks are shown in Fig. 2.

Anatomical landmarks of the mandible. A. right view; B. front view ① “supramentale” ② “pogonion” ③ “gnathion” ④ “menton” ⑤ “genial tubercle” ⑥ “fossa of mandibular foramen” ⑦ “mental foramen” ⑧ “gonion” ⑨ “condylion superius” ⑩ “condylion medialis” ⑪ “condylion lateralis” ⑫ “coronoid superius” ⑬ “sigmoid notch” ⑭ “ramus point” ⑮ “Jlat” ⑯ “Jmed”

Template mapping

The template mapping technique automatically placed 17,415 uniformly sampled quasi-landmarks on the entire mandibular surface [12]. After robust Procrustes alignment, the pointwise surface-to-surface distances between the original and mirror models in 3D space were calculated and summarized as the overall asymmetry index (AI). The location and severity of mandibular asymmetry are displayed as a color map in millimeters [4, 13].
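For a given mirror plane, the pointwise distances and an overall index can be sketched as follows. This does not reproduce MeshMonk template mapping or the robust Procrustes step; it assumes the quasi-landmarks already have a known bilateral correspondence (`pair_idx`, hypothetical) and that the AI is the root-mean-square of the pointwise distances, in line with the Discussion's remark that the root-mean-square value is used.

```python
import numpy as np


def reflect(points, normal, point_on_plane):
    """Reflect 3D points across the plane through point_on_plane with the given normal."""
    n = normal / np.linalg.norm(normal)
    signed = (points - point_on_plane) @ n          # signed distance to the plane
    return points - 2.0 * signed[:, None] * n


def asymmetry_map(points, pair_idx, normal, point_on_plane):
    """Per-point mirror distances (for a colour map) and their RMS as an asymmetry index.

    pair_idx[i] is the hypothetical index of the bilateral counterpart of
    quasi-landmark i on the template (midline points map to themselves).
    """
    mirrored = reflect(points, normal, point_on_plane)
    dist = np.linalg.norm(mirrored - points[pair_idx], axis=1)   # mm per quasi-landmark
    return dist, float(np.sqrt(np.mean(dist ** 2)))
```

The per-point `dist` array is what a color map would display; a perfectly symmetric surface about the chosen plane gives an index of zero.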

Assessment of MMSP

The five central landmarks and the midpoints of the two bilateral landmark pairs (mandibular foramen and mental foramen) provided seven midline points; every combination of three of these points defined a candidate plane, giving C(7,3) = 35 planes. Each plane was used as a mirror plane, and the four planes with the lowest AIs were selected (Fig. 3). The ideal MMSP was then determined from three clinical indices, namely distance, volume difference, and similarity index, for each of the four sagittal planes. For the similarity index, the left and right sides of the mandible were mirrored across the sagittal plane and superimposed, and the overlapping and nonoverlapping volumes were computed. The similarity index measures mandibular symmetry by comparing the overlapping volume with the total volume [2] and was calculated as SI = 2 × V(A ∩ B) / (V(A) + V(B)), where A and B are the original and mirrored hemimandibles (Fig. 4a–d).
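Building the 35 candidate planes from seven midline points can be sketched as below. The landmark names and coordinates used with it are hypothetical, and `score` is a placeholder for whatever per-plane asymmetry measure is used (in the study, the template-mapping AI, which is not reproduced here).

```python
import itertools

import numpy as np


def plane_from_points(a, b, c):
    """Unit normal and anchor point of the plane through three landmarks."""
    normal = np.cross(b - a, c - a)
    norm = np.linalg.norm(normal)
    if norm < 1e-9:
        raise ValueError("landmarks are collinear")
    return normal / norm, a


def candidate_planes(central, bilateral_pairs):
    """All three-point planes from central landmarks plus bilateral midpoints.

    central: dict name -> (3,) coordinates; bilateral_pairs: dict name -> (left, right).
    """
    points = dict(central)
    for name, (left, right) in bilateral_pairs.items():
        points[name] = 0.5 * (np.asarray(left, float) + np.asarray(right, float))
    planes = {}
    for names in itertools.combinations(points, 3):
        a, b, c = (np.asarray(points[n], dtype=float) for n in names)
        try:
            planes["-".join(names)] = plane_from_points(a, b, c)
        except ValueError:
            continue  # skip degenerate (collinear) triplets
    return planes


def rank_planes(planes, score, top=4):
    """Names of the `top` planes with the smallest score (e.g. an asymmetry index)."""
    return sorted(planes, key=lambda k: score(*planes[k]))[:top]
```

With seven non-collinear points, `candidate_planes` returns exactly 35 planes, and `rank_planes` keeps the four with the lowest score, mirroring the screening step described above.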

Statistical analysis

All statistical analyses were performed with SPSS software (version 27.0; IBM, Armonk, NY), and P < 0.05 was considered statistically significant. A paired-sample t test was used to compare the measurements obtained with each candidate sagittal plane against those obtained with the reference plane.
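The study used SPSS; an equivalent paired-sample t test can be sketched in Python with `scipy.stats.ttest_rel` (two-sided by default). The measurement values below are hypothetical and serve only to show the call.

```python
import numpy as np
from scipy import stats


def paired_comparison(candidate, reference, alpha=0.05):
    """Two-sided paired-sample t test of candidate-plane vs reference-plane measurements."""
    candidate = np.asarray(candidate, dtype=float)
    reference = np.asarray(reference, dtype=float)
    result = stats.ttest_rel(candidate, reference)
    return {
        "mean_diff": float(np.mean(candidate - reference)),
        "t": float(result.statistic),
        "p": float(result.pvalue),
        "significant": bool(result.pvalue < alpha),
    }


# Hypothetical left-right distance measurements (mm) for five subjects.
print(paired_comparison([1.2, 0.9, 1.5, 1.1, 1.3], [1.0, 1.0, 1.4, 0.9, 1.2]))
```

The pairing matters: each subject contributes one measurement per plane, so the test works on the per-subject differences rather than on two independent groups.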

Results

Model evaluation

Automatic mandibular segmentation took 10 ± 1.5 s, whereas manual segmentation by the two operators took 2067.9 ± 425.91 s and 1987.9 ± 391 s, respectively (P = 0.183). The automated method outperformed the segmentations performed by the two radiologists, achieving a Dice similarity coefficient of 0.98.
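The Dice similarity coefficient compares two segmentations voxel by voxel as 2|A ∩ B| / (|A| + |B|); the similarity index in the Methods uses the same formula, with A and B the original and mirrored hemimandible volumes. A minimal NumPy sketch on binary voxel masks follows (voxel counts stand in for volumes because a constant voxel size cancels out; returning 1.0 for two empty masks is a convention assumed here).

```python
import numpy as np


def dice(mask_a, mask_b):
    """Dice coefficient 2 * |A ∩ B| / (|A| + |B|) for two binary voxel masks."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    if a.shape != b.shape:
        raise ValueError("masks must have the same shape")
    total = a.sum() + b.sum()
    if total == 0:
        return 1.0  # convention assumed here: two empty masks agree perfectly
    return float(2.0 * np.logical_and(a, b).sum() / total)
```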

Accuracy of landmarks identification

The PoseNet algorithm located the anatomical landmarks within 0.5 s. The mean error of the 27 landmarks was 1.04 ± 0.28 mm; the error was lowest for Me (0.61 ± 0.18 mm) and greatest for Conlat-R (1.52 ± 0.28 mm) (Table 1).
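Landmark localization error is typically measured as the Euclidean (radial) distance between predicted and reference coordinates. The sketch below assumes the mean ± SD is pooled over all cases and landmarks (the paper does not state how it was pooled) and uses the 2 mm clinical acceptability threshold cited in the Discussion; the function name is hypothetical.

```python
import numpy as np


def landmark_error_summary(pred, truth, threshold_mm=2.0):
    """Euclidean (radial) landmark errors in mm.

    pred, truth: arrays of shape (n_cases, n_landmarks, 3) in the same mm frame.
    """
    err = np.linalg.norm(np.asarray(pred, float) - np.asarray(truth, float), axis=-1)
    return {
        "per_landmark_mean": err.mean(axis=0),           # one value per landmark
        "overall_mean": float(err.mean()),               # pooled over cases and landmarks
        "overall_sd": float(err.std(ddof=1)),
        "within_threshold": float((err <= threshold_mm).mean()),
    }
```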

Plane evaluation

The four planes with the lowest AIs were B-Gn-F (1.6), B-Me-F (1.95), B-Pog-F (1.97), and B-G-F (2.15) (Table 2). The B-Gn-F plane had the smallest error among the four planes. Compared with the reference plane, its distances differed significantly only for Cor (P < 0.05), Go (P < 0.05), and RP (P < 0.01); the other seven landmarks showed no statistically significant differences (P > 0.05) (Table 3). The difference in volume between the two sides was not statistically significant (P = 0.671), but the similarity index differed significantly (P < 0.01). The other three planes differed significantly from the reference plane in all indices except the difference in mandibular volume between the two sides (Table 4).

Discussion

In recent years, artificial intelligence has been increasingly used in medicine, and in orthodontics, automatic cephalometric analysis is urgently needed [14]. We combined deep learning-based mandibular segmentation, automated landmark localization, and automated construction of the MMSP to enhance clinical efficiency and to reveal the location and extent of mandibular asymmetry more precisely and intuitively. Our results indicate that the B-Gn-F plane is closest to the midsagittal plane of the mandible.

Accurate segmentation of the mandible on CBCT is necessary for 3D analysis of the mandible. Semiautomatic segmentation methods based on threshold and region-growing algorithms are time-consuming and subjective and cannot be widely used in clinical practice [15,16,17]. Deep learning algorithms are more efficient and accurate than traditional segmentation methods. Artificial intelligence techniques have recently been adopted for medical image segmentation, and convolutional neural networks (CNNs), a class of deep learning algorithms, are commonly employed to analyze images [18]. CNNs learn task-specific features from data and are effective at image classification, object detection, and recognition [17, 19]. Verhelst et al. constructed a 3D model of the mandible using a layered 3D U-Net deep learning architecture for direct segmentation of high-resolution CBCT images in 17 s, a 71.3-fold reduction compared with semiautomated segmentation [17]. Ilesan et al. developed in-house segmentation software that increased segmentation accuracy to 94.2% while decreasing the segmentation time to 2 min 3 s; segmenting skull bones with Mimics software yielded Dice similarity coefficients of 0.924 and 0.949 for the maxilla and mandible, respectively, compared with the ground truth [20]. In the present study, the PointRend algorithm reduced the mandibular segmentation time to 10 ± 1.5 s (compared with 17 s in [17]), with a Dice similarity coefficient of 0.98. PointRend (point rendering), which is based on CNNs, is an iterative segmentation technique proposed for efficient object recognition and segmentation in images [21, 22]. Inspired by image rendering in computer graphics, this method treats image segmentation as a rendering problem, which addresses the over- and undersampling issues of pixel labeling tasks and generates high-resolution segmentation masks [21,22,23].
Applied to 3D CBCT data, the network processed the whole volume in a forward pass, so the overall features of the mandible were better recognized in 3D space; this is helpful because, in individual image slices, the mandible shows local features similar to those of some regions of the maxilla and teeth. Notably, a clearer and more complete mandibular boundary can be extracted by iteratively upsampling only the edge points at the end of the model, using the network's multilevel information, without affecting most of the foreground pixels.
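The "refine only the edge points" idea can be illustrated with a simplified PointRend-style inference step on a 3D probability volume: upsample the coarse prediction, pick the k voxels whose probabilities are closest to 0.5 (the most uncertain, typically on the boundary), and re-predict only those. This is a sketch under stated simplifications, not the network used in this study: nearest-neighbour upsampling replaces PointRend's bilinear interpolation, and `point_head` is a hypothetical callable standing in for PointRend's small MLP point head.

```python
import numpy as np


def upsample2x(prob):
    """Nearest-neighbour 2x upsampling along every axis (PointRend uses bilinear)."""
    for axis in range(prob.ndim):
        prob = np.repeat(prob, 2, axis=axis)
    return prob


def pointrend_refine_step(coarse_prob, point_head, k):
    """One simplified PointRend-style inference step on a 3D probability volume.

    point_head is a hypothetical callable mapping (k, 3) voxel coordinates to k
    new foreground probabilities; in PointRend it is a small MLP on point features.
    """
    prob = upsample2x(np.asarray(coarse_prob, dtype=float))
    uncertainty = -np.abs(prob - 0.5)                 # highest near the 0.5 boundary
    idx = np.argsort(uncertainty, axis=None)[-k:]     # k most uncertain voxels (flat indices)
    coords = np.column_stack(np.unravel_index(idx, prob.shape))
    refined = prob.copy()
    refined.flat[idx] = point_head(coords)            # re-predict only the selected points
    return refined, coords
```

Repeating this step until the target resolution is reached leaves confidently classified interior voxels untouched, which is why most foreground pixels are unaffected.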

3D anatomical landmarks reflect the morphological characteristics of the mandible and are the basis of 3D mandibular analysis. Manual landmark localization relies on clinicians' expertise and is tedious [24, 25]. The You Only Look Once version 3 (YOLOv3) algorithm was applied to 1028 cephalograms to automatically identify 80 cephalometric landmarks, with a mean error of 1.46 ± 2.97 mm relative to manual identification [25]. Zhang et al. proposed a joint bone segmentation and landmark digitization (JSD) framework based on context-guided fully convolutional networks (FCNs), achieving an average error of 1.1 mm for 15 anatomical landmarks [26]. In this study, we used the PoseNet algorithm [29], which is highly extensible and can accurately locate key points in 3D CBCT images without adding extra structures or computations to the model. The mean error of the 27 landmarks was 1.04 ± 0.28 mm, below the clinically acceptable threshold of 2 mm [24, 25, 27]. The mean error of the central landmarks (0.63 ± 0.29 mm) was smaller than that of the lateral landmarks (1.13 ± 0.28 mm), consistent with reports that central landmarks are identified more reliably [24]. Schlicher et al. also found that landmarks on distinct anatomical contours showed smaller errors than landmarks located on curves, which supports this result [28].

Previous studies have shown that central landmarks are more accurate than bilateral landmarks for constructing the facial midsagittal plane. For the mandible, however, the central bony landmarks lie very close together [29]. The MF and F points are highly reliable and stable, and these bilateral structures are symmetrical, with no significant difference between sides [7, 30, 31]. The F point is located in the posterior region of the mandible, and its presence in all four planes screened in this study provides further evidence that it contributes stably to the sagittal plane. Therefore, the midpoints of the bilateral MF and F points were added as candidate points for constructing the sagittal plane.

The template mapping approach based on the MeshMonk algorithm was used to automatically assess the AIs of the 35 planes. Template mapping and 3D surface-to-surface deviation analysis have become important scientific tools in orthodontics for studying changes in skeletal morphology [9, 32, 33]. In this study, the method was used to mirror and superimpose the mandibular model and display the result as a 3D color map, precisely identifying morphological asymmetries. This approach assesses the asymmetry of the whole mandibular surface and is more intuitive and accurate than linear and angular measurements [4]. ICP was not used to construct the reference plane: although both methods use the root-mean-square value, the ICP algorithm calculates the closest distance between the original and mirror models, whereas template mapping calculates the distance between the original model and the corresponding mirrored quasi-landmarks, which offers more points, automation, and good point correspondence [9]. Moreover, the precision of the ICP algorithm decreases when the mandible is severely asymmetric [4]. Many previous studies used the B-G-Me plane as the MMSP to study mandibular symmetry [5, 6]. In the present study, however, the AI of the B-G-Me plane was 7.49 (Table 2), considerably higher than that of the B-Gn-F plane (AI = 1.6). A plane defined by three points that form a large, broad triangle is more stable [34], whereas the three points of the B-G-Me plane all lie on the mandibular symphysis, so close together that the plane is unstable.
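Why a broader triangle gives a more stable plane can be illustrated with a small Monte Carlo simulation: each landmark is perturbed with isotropic Gaussian noise (1 mm here, an assumption of the same order as the landmark errors reported above), and the angle between the noisy and true plane normals is averaged. The coordinates are hypothetical and are not measurements from this study.

```python
import numpy as np


def unit_normal(tri):
    """Unit normal of the plane through the three rows of tri (shape (..., 3, 3))."""
    n = np.cross(tri[..., 1, :] - tri[..., 0, :], tri[..., 2, :] - tri[..., 0, :])
    return n / np.linalg.norm(n, axis=-1, keepdims=True)


def normal_error_deg(triangle, sigma_mm=1.0, trials=5000, seed=0):
    """Mean angle (degrees) between the true plane normal and normals from noisy landmarks."""
    tri = np.asarray(triangle, dtype=float)
    rng = np.random.default_rng(seed)
    n0 = unit_normal(tri)
    noisy = tri + rng.normal(0.0, sigma_mm, size=(trials, 3, 3))
    cos = np.clip(np.abs(unit_normal(noisy) @ n0), 0.0, 1.0)
    return float(np.degrees(np.arccos(cos)).mean())


# Hypothetical midline coordinates (mm): three points on the symphysis vs. a triangle
# that also includes a posterior point such as the F midpoint.
compact = [[0, 0, 0], [0, 6, -12], [0, -2, -20]]
broad = [[0, 0, 0], [0, -1, -16], [0, 60, 10]]
print(normal_error_deg(compact), normal_error_deg(broad))
```

With the same landmark noise, the compact symphyseal triangle produces a larger mean tilt of the plane than the broad triangle, which is the geometric reason a posterior point such as F stabilizes the plane.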

Mandibular asymmetry is a complicated condition that involves both morphological and spatial structural differences between the two sides [35]. For all four planes, the difference in volume between the two sides of the mandible did not differ significantly from that obtained with the reference plane (P > 0.05; Table 4). Although volumetric data are often used to compare the two sides of the mandible, they do not allow quantitative assessment of symmetric and asymmetric regions [36]. The similarity index and nonoverlapping volume, in contrast, reflect both morphological and structural differences in the mandible [2]. The similarity index of the B-Gn-F plane differed significantly from that of the reference plane (P < 0.01) (Table 4), probably because the algorithmic analysis examined the shape of the mandible, whereas the anatomical landmark method considers only its structure. Nevertheless, among the four planes, the B-Gn-F plane had the mean overall similarity index closest to that of the reference plane. Therefore, it is reasonable to use the B-Gn-F plane as the MMSP in the clinical analysis of the mandible.

In this study, landmark-based analysis was combined with distance, volumetric data, similarity index, surface-to-surface deviation analysis, and template mapping techniques to screen for a relatively accurate MMSP, facilitating the identification of regions affected by asymmetry. This study has some limitations. Only adults with symmetric mandibles were studied, so the accuracy of automated localization in patients with severe mandibular deformity was not evaluated, and other types of asymmetry were not examined. In addition, MyDentViewer is not open-source software and is currently used for collaborative research.

Conclusions

In this study, automated segmentation of the mandible and localization of anatomical landmarks were used to achieve automatic cephalometric analysis. Furthermore, because it best reflected the symmetry of the mandibular structure, the B-Gn-F plane is suitable for clinical application.

The benefits of artificial intelligence go beyond shortening the time needed to obtain asymmetry information. Combining deviation analysis with artificial intelligence provides a more efficient workflow, and accurate quantification of mandibular asymmetry will help orthodontists and surgeons better understand asymmetry and plan treatment. Color-coded maps are not only an important diagnostic tool but also help patients and parents understand asymmetry easily.

Availability of data and materials

The datasets used and analyzed during the current study are available from the corresponding author upon reasonable request.

Abbreviations

- CBCT: Cone-beam computed tomography
- MMSP: Mandibular midsagittal plane
- AI: Asymmetry index
- 3D: Three-dimensional
- 2D: Two-dimensional
- CMSP: Craniomaxillary median sagittal plane
- ICC: Intraclass correlation coefficient
- ICP: Iterative closest point
- WPA: Weighted Procrustes analysis

References

Fang JJ, Tu YH, Wong TY, Liu JK, Zhang YX, Leong IF, et al. Evaluation of mandibular contour in patients with significant facial asymmetry. Int J Oral Maxillofac Surg. 2016;45(7):922–31.

Kwon SM, Hwang JJ, Jung YH, Cho BH, Lee KJ, Hwang CJ, et al. Similarity index for intuitive assessment of three-dimensional facial asymmetry. Sci Rep. 2019;9(1):10959.

AlHadidi A, Cevidanes LH, Mol A, Ludlow J, Styner M. Comparison of two methods for quantitative assessment of mandibular asymmetry using cone beam computed tomography image volumes. Dentomaxillofac Radiol. 2011;40(6):351–7.

Fan Y, Zhang Y, Chen G, He W, Song G, Matthews H, et al. Automated assessment of mandibular shape asymmetry in 3-dimensions. Am J Orthod Dentofacial Orthop. 2022;161(5):698–707.

You KH, Lee KJ, Lee SH, Baik HS. Three-dimensional computed tomography analysis of mandibular morphology in patients with facial asymmetry and mandibular prognathism. Am J Orthod Dentofacial Orthop. 2010;138(5):540.e1–8.

Kim SJ, Lee KJ, Lee SH, Baik HS. Morphologic relationship between the cranial base and the mandible in patients with facial asymmetry and mandibular prognathism. Am J Orthod Dentofacial Orthop. 2013;144(3):330–40.

Pittayapat P, Jacobs R, Bornstein MM, Odri GA, Kwon MS, Lambrichts I, et al. A new mandible-specific landmark reference system for three-dimensional cephalometry using cone-beam computed tomography. Eur J Orthod. 2016;38(6):563–8.

Xiong Y, Zhao Y, Yang H, Sun Y, Wang Y. Comparison between interactive closest point and procrustes analysis for determining the median sagittal plane of three-dimensional facial data. J Craniofac Surg. 2016;27(2):441–4.

Zhu Y, Zhao Y, Wang Y. A review of three-dimensional facial asymmetry analysis methods. Symmetry. 2022;14(7):1414.

Zhu YJ, Zhao YJ, Zheng SW, Wen AN, Fu XL, Wang Y. A method for constructing three-dimensional face symmetry reference plane based on weighted shape analysis algorithm. Beijing da xue xue bao Yi xue ban = Journal of Peking University Health sciences. 2020;53(1):220–6.

Gao L, Zhang LX, Meng HY, Ren YH, Lai YK, Kobbelt L. PRS-Net: planar reflective symmetry detection net for 3D models. IEEE Trans Vis Comput Graph. 2021;27(6):3007–18.

Verhelst PJ, Matthews H, Verstraete L, Van der Cruyssen F, Mulier D, Croonenborghs TM, et al. Automatic 3D dense phenotyping provides reliable and accurate shape quantification of the human mandible. Sci Rep. 2021;11(1).

Fan Y, Schneider P, Matthews H, Roberts WE, Xu T, Wei R, et al. 3D assessment of mandibular skeletal effects produced by the Herbst appliance. BMC Oral Health. 2020;20(1):117.

Moon JH, Hwang HW, Yu Y, Kim MG, Donatelli RE, Lee SJ. How much deep learning is enough for automatic identification to be reliable? Angle Orthod. 2020;90(6):823–30.

Le C, Deleat-Besson R, Prieto J, Brosset S, Dumont M, Zhang W, et al. Automatic segmentation of mandibular ramus and condyles. Annu Int Conf IEEE Eng Med Biol Soc. 2021;2021:2952–5.

Qiu B, Guo J, Kraeima J, Glas HH, Borra RJH, Witjes MJH, et al. Automatic segmentation of the mandible from computed tomography scans for 3D virtual surgical planning using the convolutional neural network. Phys Med Biol. 2019;64(17):175020.

Vinayahalingam S, Berends B, Baan F, Moin DA, van Luijn R, Berge S, et al. Deep learning for automated segmentation of the temporomandibular joint. J Dent. 2023;132:104475.

Verhelst PJ, Smolders A, Beznik T, Meewis J, Vandemeulebroucke A, Shaheen E, et al. Layered deep learning for automatic mandibular segmentation in cone-beam computed tomography. J Dent. 2021;114:103786.

Lo Giudice A, Ronsivalle V, Spampinato C, Leonardi R. Fully automatic segmentation of the mandible based on convolutional neural networks (CNNs). Orthod Craniofac Res. 2021;24(Suppl 2):100–7.

Ilesan RR, Beyer M, Kunz C, Thieringer FM. Comparison of artificial intelligence-based applications for mandible segmentation: from established platforms to in-house-developed software. Bioengineering. 2023;10(5):604.

Hwang MJ, Dey B, Dehaerne E, Halder S, Shin YH. SEMI-PointRend: improved semiconductor wafer defect classification and segmentation as rendering. arXiv. 2023.

Kirillov A, Wu Y, He K, Girshick R. PointRend: image segmentation as rendering. arXiv. 2019.

Dong Y, Zhang Y, Hou Y, Tong X, Wu Q, Zhou Z, et al. Damage recognition of road auxiliary facilities based on deep convolution network for segmentation and image region correction. Adv Civ Eng. 2022;2022:1–10.

Blum FMS, Mohlhenrich SC, Raith S, Pankert T, Peters F, Wolf M, et al. Evaluation of an artificial intelligence-based algorithm for automated localization of craniofacial landmarks. Clin Oral Investig. 2023;27(5):2255–65.

Hwang HW, Park JH, Moon JH, Yu Y, Kim H, Her SB, et al. Automated identification of cephalometric landmarks: part 2-might it be better than human? Angle Orthod. 2020;90(1):69–76.

Zhang J, Liu M, Wang L, Chen S, Yuan P, Li J, et al. Context-guided fully convolutional networks for joint craniomaxillofacial bone segmentation and landmark digitization. Med Image Anal. 2020;60:101621.

Dot G, Schouman T, Chang S, Rafflenbeul F, Kerbrat A, Rouch P, et al. Automatic 3-dimensional cephalometric landmarking via deep learning. J Dent Res. 2022;101(11):1380–7.

Schlicher W, Nielsen I, Huang JC, Maki K, Hatcher DC, Miller AJ. Consistency and precision of landmark identification in three-dimensional cone beam computed tomography scans. Eur J Orthod. 2012;34(3):263–75.

Dobai A, Markella Z, Vízkelety T, Fouquet C, Rosta A, Barabás J. Landmark-based midsagittal plane analysis in patients with facial symmetry and asymmetry based on CBCT analysis tomography. J Orofac Orthop. 2018;79(6):371–9.

Gada SK. Assessment of position and bilateral symmetry of occurrence of mental foramen in dentate Asian population. J Clin Diagn Res. 2014;8(2):203–5.

Findik Y, Yildirim D, Baykul T. Three-dimensional anatomic analysis of the lingula and mandibular foramen. J Craniofac Surg. 2014;25(2):607–10.

Lo Giudice A, Ronsivalle V, Rustico L, Aboulazm K, Isola G, Palazzo G. Evaluation of the accuracy of orthodontic models prototyped with entry-level LCD-based 3D printers: a study using surface-based superimposition and deviation analysis. Clin Oral Investig. 2022;26(1):303–12.

Lo Giudice A, Ronsivalle V, Santonocito S, Lucchese A, Venezia P, Marzo G, et al. Digital analysis of the occlusal changes and palatal morphology using elastodontic devices. A prospective clinical study including Class II subjects in mixed dentition. Eur J Paediatr Dent. 2022;23(4):275–80.

Green MN, Bloom JM, Kulbersh R. A simple and accurate craniofacial midsagittal plane definition. Am J Orthod Dentofacial Orthop. 2017;152(3):355–63.

Duran GS, Dindaroglu F, Kutlu P. Hard- and soft-tissue symmetry comparison in patients with class III malocclusion. Am J Orthod Dentofacial Orthop. 2019;155(4):509–22.

Lo Giudice A, Ronsivalle V, Gastaldi G, Leonardi R. Assessment of the accuracy of imaging software for 3D rendering of the upper airway, usable in orthodontic and craniofacial clinical settings. Prog Orthod. 2022;23(1):22.

Acknowledgements

We thank Hefei Meyer Optoelectronic Technology Inc. for providing MyDentViewer software.

Funding

This work was supported by the Health Commission of Anhui Province Subjects (AHWJ2022b002) and the Discipline Construction “Feng Yuan” Cooperation Program of the Faculty of Dentistry of Anhui Medical University (2022xkfyts07).

Author information


Contributions

YW, WW, JX, YL, and HZ conceived and designed the study. YW, MC, and MS contributed to data collection and data labeling. WW and ZW performed data processing and data analysis. YW and WW drafted the manuscript. All authors read and approved the final manuscript.


Ethics declarations

Ethics approval and consent to participate

The studies involving human participants were reviewed and approved by the ethical committee of Anhui Medical University Stomatological Hospital. The patients/participants provided their written informed consent to participate in this study.

Consent for publication

All authors agree to publish.

Competing interests

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1: Figure S1.

Framework of PointRend deep learning segmentation of the mandible.

Additional file 2: Table S1.

Description of mandibular landmarks.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Wang, Y., Wu, W., Christelle, M. et al. Automated localization of mandibular landmarks in the construction of mandibular median sagittal plane. Eur J Med Res 29, 84 (2024). https://doi.org/10.1186/s40001-024-01681-2


DOI: https://doi.org/10.1186/s40001-024-01681-2