Abstract

Background

We aimed to develop a roadmap for conducting regular, sustainable, and strategic qualitative assessments of antibiotic use in medical institutions within the Republic of Korea.

Methods

A literature review on the current state of qualitative antibiotic assessments was conducted, followed by one open round to collect ideas, two scoring rounds to establish consensus, and one panel meeting between them. The expert panel comprised 20 experts in infectious disease or antibiotic stewardship.

Results

The response rate for all three surveys was 95% (19/20), and the panel meeting attendance rate was 90% (18/20). The long-term goal was defined as annual assessment of the use of antibacterial and antifungal agents in all medical institutions, including clinics. The panel agreed that random sampling of antibiotic prescriptions was the most suitable method of selecting antibiotics for qualitative assessment, with the additional possibility of evaluating specific antibiotics or infectious diseases that warrant closer evaluation for promoting appropriate antibiotic use. The plan for using the evaluation results involves providing feedback through anonymised disclosure, together with quantitative assessments of antibiotic prescriptions and resistance rates, so that results can be compared against institutional benchmarks. Furthermore, it was agreed to link the evaluation findings to the national antibiotic stewardship programme, enabling policy and institutional approaches to address frequently misused items identified during the evaluation.

Conclusion

This study provides a framework for establishing a qualitative assessment of antimicrobial use for medical institutions at a national level in the Republic of Korea.

Background

Qualitative assessments of antibiotic use play an essential role in antibiotic stewardship programs (ASPs), as they not only identify patterns of inappropriate antibiotic prescribing and establish intervention strategies but also evaluate the effectiveness of these interventions [1,2,3,4]. Given that conventional qualitative antibiotic assessments require significant human resources, it is crucial to develop strategies for effectiveness based on the specific resources available in each medical environment [5].

Since the 2000s, various countries and regions, including Australia, the United States, Europe, and the Republic of Korea (ROK), have conducted qualitative antibiotic assessments, with approximately 20–55% of antibiotic prescriptions deemed inappropriate [6,7,8,9,10,11]. Australia has been operating the National Antimicrobial Prescribing Survey since 2010 to evaluate antibiotic adequacy and has expanded the scope of diseases and medical institutions subject to qualitative antibiotic assessment [6]. In the United States, the Centers for Disease Control and Prevention (CDC) has been leading the surveillance of healthcare-associated infections and qualitative antibiotic assessment since 2009 [12]. In Europe, the European Surveillance of Antimicrobial Consumption Network has conducted similar studies on both quantitative and qualitative antibiotic assessments [7, 8]. In the ROK, a nationwide qualitative antibiotic assessment began in 2018 as a project of the Korea Disease Control and Prevention Agency (KDCA), and the scope of target diseases and institutions has been expanded [2, 9, 10]. However, there are currently no standardised methods for qualitative assessment in terms of target diseases, the scope of medical institutions, and evaluation methods [2, 13]. Furthermore, despite the Korean action plan on antimicrobial resistance, ASPs in most hospitals are not well established and are mostly limited to restrictive measures for designated antimicrobials [14].

To ensure systematic and sustainable qualitative antibiotic assessments for effective implementation in ASPs, it is essential to develop a framework outlining the current assessment method and scope, as well as long-term goals for the future. Therefore, this study aimed to create a comprehensive framework for conducting regular and strategic qualitative antibiotic assessments in Korean medical institutions.

Methods

Overview and assembly of panel

A modified Delphi study was conducted between June and August 2022, which included three rounds of online surveys and a virtual meeting with an expert panel. Panellists were selected to include a range of experts and policymakers involved in antibiotic use and stewardship [15]. The expert panel comprised 20 members, including five experts from the antibiotic resistance committee in the Korean Society of Infectious Diseases, four experts from the Korea National Antimicrobial Use Analysis System (KONAS), seven infectious disease specialists with experience in ASP-related research, three experts on policy regarding antibiotic resistance representing the government, and one pharmacist from the Korea Society of Health-System Pharmacists.

Development of survey items and the Delphi process

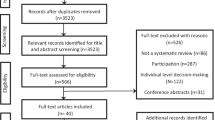

The study process is outlined in Fig. 1. An email questionnaire was sent to the expert panel members for each of the three rounds of the study, and responses were collected over a 10-day period. Reminders were sent on the 5th and 8th days of each survey to encourage participation. The survey questions were formulated following discussion among study team members (SYP, YCK, SMM, BK, RL, and HBK), drawing from a literature review of qualitative antibiotic assessment systems and research from Europe, the US, Australia, and the ROK, as well as the global point prevalence survey of antimicrobial consumption and resistance (Global-PPS) [2]. The literature review results were summarised in the first survey to provide basic information for the subsequent surveys.

The first round of the survey aimed to gather insights and ideas from the expert panel. A questionnaire consisting of open-ended questions and a summary of the literature review were distributed to the panel members via email (Additional file 1). The questionnaire for the second survey was developed based on the panel members’ responses to the first survey. The experts’ responses for each item were evaluated using a 5-point Likert scale. The scale ranged from 1, indicating strong disagreement, to 5, indicating strong agreement, with 4 and 5 considered to signify ‘agree’. The panel members were also invited to add their opinions on each question. A virtual meeting was held between the second and third surveys, and all panel members were invited to participate. The meeting's agenda focused on exchanging opinions regarding the questionnaire items present in the second survey. A report with the results of the second survey was sent to all participants before the meeting. The results of the second survey were displayed in the third questionnaire, with central tendency and dispersion shown as median and interquartile ranges. In the third round, the panel members’ opinions on each item were again evaluated using a 5-point Likert scale. Respondents were invited to comment if their opinions fell outside the majority of the other experts' estimates.
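The per-item summary described above (median, interquartile range, and the number of panellists rating an item 4 or 5) can be illustrated with a minimal Python sketch. The function name, the returned keys, and the example ratings are hypothetical, and the quartile method (`inclusive`) is an assumption, since the quartile convention used in the study is not stated.

```python
from statistics import median, quantiles

AGREE_THRESHOLD = 4  # ratings of 4 and 5 signify 'agree', as in the text


def summarise_item(ratings):
    """Summarise 5-point Likert ratings for one questionnaire item.

    Returns the median, the first and third quartiles, and the number of
    panellists whose rating counts as 'agree'.
    """
    if not ratings:
        raise ValueError("at least one rating is required")
    if any(r not in (1, 2, 3, 4, 5) for r in ratings):
        raise ValueError("ratings must be integers from 1 to 5")
    # Assumption: 'inclusive' quartiles; other conventions give other values.
    q1, _, q3 = quantiles(ratings, n=4, method="inclusive")
    return {
        "median": median(ratings),
        "q1": q1,
        "q3": q3,
        "n_agree": sum(r >= AGREE_THRESHOLD for r in ratings),
        "n_responses": len(ratings),
    }


# Hypothetical ratings from nine panellists for a single item.
example = summarise_item([5, 4, 4, 5, 3, 4, 5, 2, 4])
print(example)
```

The `n_agree` and `n_responses` counts are the inputs needed for the content validity ratio used in the validation step.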

Validation of items

We calculated content validity ratios (CVRs) to analyse the responses provided in the second and third surveys and select the items that showed the highest levels of agreement among the panel members. The formula used to calculate CVR was as follows: CVR = (ne − N/2)/(N/2), where ‘ne’ represents the number of panel experts who rated a given item as ‘agree’, and ‘N’ represents the total number of panellists. CVR values range from −1 to +1, with higher values indicating greater agreement among experts. Items with CVR values above the cut-off level (the minimum CVR value) were considered to have achieved a sufficient level of agreement. The minimum CVR values were determined based on the number of experts participating in each round, which were 0.44 for 18 participants and 0.47 for 19 participants [16, 17]. We used the minimum CVRs as consensus criteria. Consensus was assessed after a fixed three-round survey.
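As a worked illustration of the CVR formula, the following Python sketch computes CVR = (ne − N/2)/(N/2) and compares it with the minimum values quoted in the text (0.44 for 18 respondents, 0.47 for 19). The function names are hypothetical, and treating a CVR equal to the cut-off as reaching consensus is an assumption, not something the study specifies.

```python
# Minimum CVR values quoted in the text, keyed by number of respondents.
MIN_CVR = {18: 0.44, 19: 0.47}


def content_validity_ratio(n_agree, n_panel):
    """Return CVR = (ne - N/2) / (N/2) for one item."""
    if n_panel <= 0:
        raise ValueError("n_panel must be positive")
    if not 0 <= n_agree <= n_panel:
        raise ValueError("n_agree must be between 0 and n_panel")
    half = n_panel / 2
    return (n_agree - half) / half


def reaches_consensus(n_agree, n_panel):
    """Compare the item's CVR with the cut-off for that panel size.

    Assumption: a CVR equal to the cut-off counts as consensus.
    """
    if n_panel not in MIN_CVR:
        raise KeyError(f"no minimum CVR listed for {n_panel} respondents")
    return content_validity_ratio(n_agree, n_panel) >= MIN_CVR[n_panel]


# Agreement counts taken from the Results section.
print(round(content_validity_ratio(13, 19), 3))  # 0.368
print(reaches_consensus(13, 19))                 # False
print(round(content_validity_ratio(16, 19), 3))  # 0.684
print(reaches_consensus(16, 19))                 # True
```

With 19 respondents, 13 agreeing panellists give a CVR of 0.368, below the 0.47 cut-off, whereas 16 give 0.684, above it.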

Results

The response rates for the first, second, and third rounds were all 95% (19/20). Eighteen expert panellists (90%) participated in the virtual meeting (Additional file 2: Table S1). One panellist provided only partial answers; as a result, the minimum CVR applied to each item depended on the number of respondents for that item. The summarised results are presented in Fig. 2 and Additional file 2: Tables S2 and S3.

Practical challenges in conducting qualitative antibiotic assessments

The most frequently reported practical challenges in conducting qualitative antibiotic assessments included inadequate financial compensation (mean score 4.95), a shortage of qualified personnel, lack of institutional support (both scoring 4.84), limited awareness among management (4.79), the absence of computerised programs (4.42), inadequate long-term planning (4.05), and lack of agreed-upon evaluation criteria (3.84).

Current options and long-term goals for antibiotic quality assessments

Table 1 displays the Delphi survey results regarding antibiotic quality assessment operators, cycles, and target organisations. All experts agreed that the KDCA or its professional governmental organisations should manage antibiotic quality assessments. Most experts agreed that the current appropriate interval for antibiotic quality assessments is at least once a year (13/19); however, the agreement rate did not reach the consensus standard (CVR = 0.368). Conversely, out of 19 experts, 16 agreed that antibiotic quality assessments should be conducted at least once a year in the future, reaching a consensus (CVR = 0.684). As for the scope of target institutions, the experts agreed that tertiary care hospitals (19/19, CVR = 1.000), secondary care hospitals (18/19, CVR = 0.895), and hospitals with 500 or more beds (19/19, CVR = 1.000) are currently the most appropriate, whereas specialty hospitals (19/19, CVR = 1.000), clinics (16/19, CVR = 0.684), and hospitals with 300 or more beds (18/19, CVR = 0.895) should be included in the future.

Table 2 presents the Delphi survey results concerning the types of antibiotics to include in the quality assessments. Experts reached a consensus on systemic antibiotics (19/19, CVR = 1.000) and antibacterial agents (19/19, CVR = 1.000) as the appropriate range for the current quality assessments and agreed that antifungal agents (16/18, CVR = 0.778) should be included in the future. By contrast, antiviral, antitubercular, antiprotozoal, antimalarial, and locally administered antibiotics were not considered within the scope of the survey. Experts agreed that all types of antibiotics should be included in the assessment, regardless of prescription purpose. Regarding the method of extracting antibiotic use information for evaluation, the highest agreement rate was for the assessment of randomised samples rather than a full survey during a given period (19/19, CVR = 1.000). Combining an assessment of antibiotics prescribed within a specific period with an assessment of specific antibiotics or conditions as needed (18/19, CVR = 0.895) were identified as short-term and long-term plans, respectively.

Reporting/feedback on antibiotic quality assessment results

Regarding ways to encourage organisations to participate in antibiotic quality assessments, establishing payment/incentives (19/19, CVR = 1.000) and incorporating the assessment into medical quality assessment or accreditation evaluation (19/19, CVR = 1.000) were strongly recommended by the panellists. For reporting/feedback methods, there was a strong consensus for anonymised disclosure through reports with quantitative assessments of antibiotic use and resistance rates and for individual healthcare organisations to view their results through a private website (19/19, CVR = 1.000). By contrast, publishing quality assessment results to the public or disclosing regional and national results on sites that reveal the individual evaluation results of medical institutions, such as that of the Health Insurance Review and Assessment Service (HIRA), was not preferred (0/19, CVR = −1.000 and 5/19, CVR = −0.474, respectively).

Strategies for linkage between the antibiotic assessment results and antimicrobial stewardship programs

Regarding strategies for linkage to ASPs, there was a positive consensus on reporting quality assessment results to management and staff within healthcare organisations (19/19, CVR = 1.000). Additionally, the experts agreed on a policy and regulatory approach (such as providing incentives or inclusion in healthcare quality evaluations) and on healthcare professional education initiatives (such as mandatory education for medical associations) to address frequent misuse of antibiotics identified in qualitative evaluation results (19/19, CVR = 1.000). While there was positive consensus regarding public disclosure and the promotion of qualitative analysis results on antibiotic use among the general population, three experts remained neutral (16/19, CVR = 0.684; Table 3).

Discussion

The significance of this study lies in the establishment of goals and detailed methods for conducting qualitative assessment of antibiotics, both now and in the long term. Given the absence of standard recommendations for qualitative antibiotic assessments, the experts who participated in the survey were presented with various approaches currently being used in different countries. By identifying the strengths and weaknesses of these approaches, a more objective application of qualitative antibiotic assessments in the ROK can hopefully be achieved.

The consensus reached regarding the long-term goal for qualitative assessment of antibiotics involved conducting annual assessments by the KDCA for antibacterial and antifungal agents. Antibiotics prescribed for specific periods will be assessed through random sampling, and evaluations will be conducted for particular antibiotics or diseases as needed. The short-term goals largely align with the long-term goals, except that primary hospitals, clinics, specialised medical institutions, and hospitals with fewer than 300 beds will not be included in qualitative antibiotic assessments. These findings indicate that infectious disease or ASP experts in the ROK acknowledge the significance of qualitative assessments of antibiotics, even at present, and agree on the necessity of expanding the assessments to eventually include clinics. No consensus was reached on the appropriate frequency of antibiotic evaluations at present, although the experts agreed on annual evaluation as the long-term goal. In the short term, annual evaluations are deemed unfeasible owing to resource constraints. It may be necessary to start with a 2–5-year cycle, with the long-term goal of gradually increasing the frequency of evaluations to an annual basis, as is currently done in Australia [6].

Determining how to select the antibiotics to be evaluated is crucial, as it is connected to the required resources. In this study, there was consensus on random sampling of prescriptions within defined time periods as the long-term approach. Evaluating all patients would be labour-intensive; therefore, random sampling presents a sustainable assessment method in the long run. In the ROK, qualitative assessments of antibiotic prescriptions were conducted in 2018 and 2019 using a 1-day complete enumeration and a 2-day random sampling method, respectively. Despite including more institutions in the second survey, the rates of inappropriate antibiotic prescriptions were similar in both surveys [2, 9].
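For illustration only, drawing a simple random sample of prescriptions from a defined period could be sketched as follows in Python. The function name, the prescription identifiers, the sample size, and the seed are all hypothetical; the study did not specify how many cases should be sampled from each hospital or how sampling should be implemented.

```python
import random


def sample_prescriptions(prescription_ids, sample_size, seed):
    """Draw a simple random sample (without replacement) of prescriptions.

    A fixed seed makes the draw reproducible, so the same sample can be
    regenerated when an audit is reviewed.
    """
    ids = list(prescription_ids)
    if not 0 <= sample_size <= len(ids):
        raise ValueError("sample_size must be between 0 and the number of prescriptions")
    rng = random.Random(seed)  # independent generator; global state is untouched
    return rng.sample(ids, sample_size)


# Hypothetical identifiers for prescriptions issued during the survey period.
period_prescriptions = [f"RX-{i:04d}" for i in range(1, 501)]
selected = sample_prescriptions(period_prescriptions, sample_size=50, seed=2022)
print(len(selected))
```

Sampling without replacement ensures that no prescription is audited twice within one assessment round.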

Evaluating the duration of antibiotic use is an important aspect of qualitative assessment but is difficult to conduct using point surveillance methods [2]. In 2021 and 2022, disease-specific evaluations for urinary tract infections and bacteraemia were conducted, and the appropriate antibiotic prescription period was determined by evaluating the entire period. This approach overcame the challenge of assessing many quality indicators with other point surveillance methods [2]. It was agreed that specific quality indicators should be used for assessment, which cannot rely solely on expert judgement, and continuous updates are necessary [18].

The lack of expert consensus on using HIRA data for qualitative assessment of antibiotic prescriptions to minimise the labour input required from individual institutions is noteworthy, as only 47.4% of respondents agreed to its use. This could be due to concerns surrounding code shifting and the unavailability of clinical data in the HIRA database, as previously reported [19]. The survey results emphasised the need for significant human resources for qualitative assessments of antibiotics and the importance of actively seeking funding to recruit personnel for this purpose. However, since manpower alone would not cover all institutions, evaluation based on a national database method should be considered as a supplementary measure for some areas [20,21,22].

It was agreed that after assessments are conducted, the feedback method should involve anonymising and publicly disclosing the data, as well as including it in the antibiotic use/resistance report. In the ROK, KONAS conducts quantitative assessments of antibiotics, and annual antibiotic resistance reports are published through Kor-GLASS, the Korean national antimicrobial resistance surveillance system based on the GLASS platform [23,24,25]. The integration of reported data is expected to identify the impact of ASP activity on the antibiotic resistance rate through both quantitative and qualitative antibiotic assessments. If the same analysis is also performed on healthcare-associated infection rates, as is done in the US, it may serve as an indicator of the effect of the ASP [12].

It is noteworthy that the entire expert panel agreed on the utilization of the results of the qualitative assessment for the national ASP. The qualitative assessment results enable physicians to identify antibiotics that are being prescribed incorrectly and can be used as evidence to improve the use of those antibiotics. A qualitative assessment implemented at a national level provides an opportunity to reduce the amount of antibiotics used inappropriately, which may be related to a decrease in antibiotic resistance. It is expected that the integration of antibiotic qualitative assessment into healthcare quality assessment or the introduction of incentive systems will increase the interest and participation of medical institutions in ASPs. However, three experts in this Delphi survey took a neutral attitude towards public disclosure and publicizing the results of the qualitative assessment. Therefore, the strategy for linking the results of the qualitative assessment and the national ASP needs to be discussed further.

This study had some limitations. Firstly, there are several unanswered questions about conducting a large-scale, population-based assessment of antibiotic appropriateness. We did not discuss what criteria should be used to assess antibiotic appropriateness, how many cases should be sampled from each hospital, or who should actually perform the antibiotic appropriateness audits. Further research is warranted to address these questions. Secondly, the international applicability of this study is limited because the survey was only administered to experts in the ROK. However, the study framework itself could be used as a template in other countries, and the methodology presented could also be used to make country-specific adjustments. Lastly, the consensus/closing criteria applied in studies using the Delphi procedure vary because there is no standard for such criteria [26]. In this study, we used CVRs, which are widely used to quantify content validity, and tried to reflect the opinions of experts effectively by setting consensus criteria according to the number of experts.

Conclusions

This Delphi survey established an expert consensus regarding both short- and long-term roadmaps for conducting qualitative assessments of antibiotics in the ROK. The plan for quality assessment of antibiotic use included target institutions, evaluation interval, assessment tool, methods for data extraction, and target antibiotics. The plan for utilization of results from evaluation included engagement in qualitative antibiotic assessment, reporting/feedback methods, and ASP linkage. This practice has not yet been standardised worldwide. Its outcomes are expected to promote active qualitative assessments of antibiotics and to appropriately link evaluation results to individual medical institutions and the national ASP.

Availability of data and materials

The datasets analysed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- ASP: Antibiotic stewardship programs

- HIRA: Health Insurance Review and Assessment

- IRB: Institutional review board

- KDCA: Korea Disease Control and Prevention Agency

- KONAS: Korea National Antimicrobial Use Analysis System

- ROK: Republic of Korea

References

Barlam TF, Cosgrove SE, Abbo LM, MacDougall C, Schuetz AN, Septimus EJ, et al. Implementing an antibiotic stewardship program: guidelines by the infectious diseases society of America and the society for healthcare epidemiology of America. Clin Infect Dis. 2016;62:e51-77. https://doi.org/10.1093/cid/ciw118.

Park SY, Kim YC, Lee R, Kim B, Moon SM, Kim HB, et al. Current status and prospect of qualitative assessment of antibiotics prescriptions. Infect Chemother. 2022;54:599–609. https://doi.org/10.3947/ic.2022.0158.

Magill SS, O’Leary E, Janelle SJ, Thompson DL, Dumyati G, Nadle J, et al. Changes in prevalence of health care-associated infections in U.S. hospitals. N Engl J Med. 2018;379:1732–44. https://doi.org/10.1056/NEJMoa1801550.

Cheong HS, Park KH, Kim HB, Kim SW, Kim B, Moon C, et al. Core elements for implementing antimicrobial stewardship programs in Korean general hospitals. Infect Chemother. 2022;54:637–73. https://doi.org/10.3947/ic.2022.0171.

Park SY, Chang HH, Kim B, Moon C, Lee MS, Kim JY, et al. Human resources required for antimicrobial stewardship activities for hospitalized patients in Korea. Infect Control Hosp Epidemiol. 2020;41:1429–35. https://doi.org/10.1017/ice.2020.1234.

The National Centre for Antimicrobial Stewardship (NCAS). NCAS publications. https://www.ncas-australia.org/ncas-publications. Accessed 24 Jul 2023.

Zarb P, Amadeo B, Muller A, Drapier N, Vankerckhoven V, Davey P, et al. Identification of targets for quality improvement in antimicrobial prescribing: the web-based ESAC Point Prevalence survey 2009. J Antimicrob Chemother. 2011;66:443–9. https://doi.org/10.1093/jac/dkq430.

Zarb P, Amadeo B, Muller A, Drapier N, Vankerckhoven V, Davey P, et al. Antifungal therapy in European hospitals: data from the ESAC point-prevalence surveys 2008 and 2009. Clin Microbiol Infect. 2012;18:E389–95. https://doi.org/10.1111/j.1469-0691.2012.03973.x.

Park SY, Moon SM, Kim B, Lee MJ, Park JY, Hwang S, et al. Appropriateness of antibiotic prescriptions during hospitalization and ambulatory care: a multicentre prevalence survey in Korea. J Glob Antimicrob Resist. 2022;29:253–8. https://doi.org/10.1016/j.jgar.2022.03.021.

Kim YC, Park JY, Kim B, Kim ES, Ga H, Myung R, et al. Prescriptions patterns and appropriateness of usage of antibiotics in non-teaching community hospitals in South Korea: a multicentre retrospective study. Antimicrob Resist Infect Control. 2022;11:40. https://doi.org/10.1186/s13756-022-01082-2.

Magill SS, O’Leary E, Ray SM, Kainer MA, Evans C, Bamberg WM, et al. Assessment of the appropriateness of antimicrobial use in US hospitals. JAMA Netw Open. 2021;4:e212007. https://doi.org/10.1001/jamanetworkopen.2021.9526.

Magill SS, Hellinger W, Cohen J, Kay R, Bailey C, Boland B, et al. Prevalence of healthcare-associated infections in acute care hospitals in Jacksonville, Florida. Infect Control Hosp Epidemiol. 2012;33:283–91. https://doi.org/10.1086/664048.

Spivak ES, Cosgrove SE, Srinivasan A. Measuring appropriate antimicrobial use: attempts at opening the black box. Clin Infect Dis. 2016;63:1639–44. https://doi.org/10.1093/cid/ciw658.

Kim B, Lee MJ, Moon SM, Park SY, Song KH, Lee H, et al. Current status of antimicrobial stewardship programmes in Korean hospitals: results of a 2018 nationwide survey. J Hosp Infect. 2020;104:172–80. https://doi.org/10.1016/j.jhin.2019.09.003.

Nair R, Aggarwal R, Khanna D. Methods of formal consensus in classification/diagnostic criteria and guideline development. Semin Arthritis Rheum. 2011;41:95–105. https://doi.org/10.1016/j.semarthrit.2010.12.001.

Lawshe CH. A quantitative approach to content validity. Pers Psychol. 1975;28:563–75. https://doi.org/10.1111/j.1744-6570.1975.tb01393.x.

Ayre C, Scally AJ. Critical values for Lawshe’s content validity ratio. Meas Eval Couns Dev. 2014;47:79–86. https://doi.org/10.1177/0748175613513808.

Kim B, Lee MJ, Park SY, Moon SM, Song KH, Kim TH, et al. Development of key quality indicators for appropriate antibiotic use in the Republic of Korea: results of a modified Delphi survey. Antimicrob Resist Infect Control. 2021;10:48. https://doi.org/10.1186/s13756-021-00913-y.

Kim JA, Yoon S, Kim LY, Kim DS. Towards actualizing the value potential of Korea health insurance review and assessment (HIRA) data as a resource for Health Research: strengths, limitations, applications, and strategies for optimal use of HIRA data. J Korean Med Sci. 2017;32:718–28. https://doi.org/10.3346/jkms.2017.32.5.718.

Zhao H, Wei L, Li H, Zhang M, Cao B, Bian J, et al. Appropriateness of antibiotic prescriptions in ambulatory care in China: a nationwide descriptive database study. Lancet Infect Dis. 2021;21:847–57. https://doi.org/10.1016/S1473-3099(20)30596-X.

Kim ES, Park SW, Lee CS, Gyung Kwak Y, Moon C, Kim BN. Impact of a national hospital evaluation program using clinical performance indicators on the use of surgical antibiotic prophylaxis in Korea. Int J Infect Dis. 2012;16:e187–92. https://doi.org/10.1016/j.ijid.2011.11.010.

Health Insurance Review & Assessment Service. Results of quality assessment of prescriptions in 2021. https://bktimes.net/data/board_notice/1659074624-18.pdf. Accessed 23 Apr 2023.

Kim B, Ahn SV, Kim DS, Chae J, Jeong SJ, Uh Y, et al. Development of the Korean standardized antimicrobial administration ratio as a tool for benchmarking antimicrobial use in each hospital. J Korean Med Sci. 2022;37:e191. https://doi.org/10.3346/jkms.2022.37.e191.

Kim HS, Park SY, Choi H, Park JY, Lee MS, Eun BW, et al. Development of a roadmap for the antimicrobial usage monitoring system for medical institutions in Korea: a Delphi study. Infect Chemother. 2022;54:483–92. https://doi.org/10.3947/ic.2022.0107.

Liu C, Yoon EJ, Kim D, Shin JH, Shin JH, Shin KS, et al. Antimicrobial resistance in South Korea: a report from the Korean global antimicrobial resistance surveillance system (Kor-GLASS) for 2017. J Infect Chemother. 2019;25:845–59. https://doi.org/10.1016/j.jiac.2019.06.010.

Nasa P, Jain R, Juneja D. Delphi methodology in healthcare research: how to decide its appropriateness. World J Methodol. 2021;11:116–29. https://doi.org/10.5662/wjm.v11.i4.116.

Acknowledgements

The authors would like to acknowledge Eun Hwa Choi, Jin Yong Lee, Youngdae Cho, Hyung-Sook Kim, Soo Young Choo, Mi Suk Lee, Su-Mi Choi, Shin-Woo Kim, Jun Yong Choi, Sun Hee Park, Yoon Soo Park, Ki Tae Kown, Hyun-Ha Chang, and Ji Young Park for their participation in the survey. We would also like to express our gratitude to all researchers who have participated in the antibiotic prescription quality evaluation project from 2018 to present.

Funding

This work was supported by the Research Program funded by the Korea Disease Control and Prevention Agency (2021-10-027#).

Author information

Contributions

Conceptualisation: HBK; Data curation: SYP, SMM, YCK, RL, BK; Formal analysis: SYP, SMM, YCK, RL, BK; Funding acquisition: HBK; Investigation: SYP, SMM, YCK, RL, BK; Project administration: SYP, SMM, BK; Methodology: SYP, SMM, YCK, RL, BK, HBK; Supervision: HBK; Writing-original draft: SYP; Writing-review and editing: SYP, SMM, YCK, BK, HBK.

Ethics declarations

Ethics approval and consent to participate

The study protocol was reviewed and approved for ethical exemption by the institutional review board (IRB) of Seoul National University Bundang Hospital (Seoul, Republic of Korea; IRB number: X-2305-828-906).

Consent for publication

Not applicable.

Competing interests

The authors have no potential conflicts of interest to disclose.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1:

A questionnaire consisting of open-ended questions and a summary of the literature review.

Additional file 2:

Table S1: The response rate of the expert panel in each round. Table S2: Proposed plan for qualitative assessment of antibiotic use. Table S3: How to best use assessment data.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Park, S.Y., Kim, Y.C., Moon, S.M. et al. Developing a framework for regular and sustainable qualitative assessment of antibiotic use in Korean medical institutions: a Delphi study. Antimicrob Resist Infect Control 12, 114 (2023). https://doi.org/10.1186/s13756-023-01319-8

DOI: https://doi.org/10.1186/s13756-023-01319-8