Abstract

Background

AI-based software may improve the performance of radiologists when detecting clinically significant prostate cancer (csPCa). This study aims to compare the performance of radiologists in detecting MRI-visible csPCa on MRI with and without AI-based software.

Materials and methods

In total, 480 multiparametric MRI (mpMRI) images were retrospectively collected from eleven different MR devices, with 349 csPCa lesions in 180 (37.5%) cases. The csPCa areas were annotated based on pathology. Sixteen radiologists from four hospitals participated in reading. Each radiologist was randomly assigned 30 cases and read each case twice. Half of the cases were interpreted without AI, and the other half were interpreted with AI. After four weeks, the cases were read again in the switched mode. The mean diagnostic performance was compared using sensitivity and specificity on the lesion level and the patient level. The median reading time and diagnostic confidence were assessed.

Results

On the lesion level, AI assistance improved sensitivity from 40.1% to 59.0% (18.9% increase; 95% confidence interval (CI) [11.5, 26.1]; p < .001). On the patient level, AI assistance improved specificity from 57.7% to 71.7% (14.0% increase; 95% CI [6.4, 21.4]; p < .001) while preserving sensitivity (88.3% vs. 93.9%, p = 0.06). AI assistance reduced the median reading time per case by 56.3%, from 423 to 185 s (238-s decrease; 95% CI [219, 260]; p < .001), and increased the median diagnostic confidence score by 10.3%, from 3.9 to 4.3 (0.4-score increase; 95% CI [0.3, 0.5]; p < .001).

Conclusions

AI software improves the performance of radiologists by reducing false positive detection of prostate cancer patients and also improving reading times and diagnostic confidence.

Clinical relevance statement

This study involves the process of data collection, randomization and crossover reading procedure.

Key points

- The artificial intelligence (AI)-aided software improved radiologists' lesion-level sensitivity for detecting MRI-visible clinically significant prostate cancer (csPCa).
- The AI-aided software reduced the false positive detection of prostate cancer patients, improving specificity while preserving sensitivity.
- The AI-aided software reduced the median reading and reporting time and increased the diagnostic confidence score for MRI-visible csPCa diagnosis.

Introduction

Multiparametric MRI (mpMRI), as a noninvasive triage tool, can not only detect clinically significant prostate cancer (csPCa) lesions but also provide information on locoregional staging and biopsy [1,2,3]. Combined with the test of serum prostate-specific antigen (PSA), an “MRI diagnosis pathway” [4] may have the potential to mitigate excessive biopsy [5] and consequent overtreatment for indolent lesions [6]. Thus, the European Association of Urology has recommended mpMRI as the backbone for the primary prostate cancer diagnostic pipeline to properly identify candidates for image-guided biopsy [7].

The Prostate Imaging Reporting and Data System (PI-RADS) [8] was launched to guide standardized acquisition, interpretation and reporting procedures for prostate mpMRI [9]. A meta-analysis [10] reported a pooled sensitivity of 0.89 and a specificity of 0.73 for PI-RADS Version 2 in detecting visible csPCa. Since approximately half of the tumor foci are MRI-invisible, the sensitivity of detection at the lesion level is much lower [11, 12]. Even for visible lesions, PI-RADS performance is not optimal because of high inter-rater and intra-rater variability and the high degree of expertise required [13,14,15,16].

Recently, many computer-aided detection (CAD) systems on mpMRI have shown good performance in prostate cancer diagnosis [17]. CAD systems can enhance radiologists' diagnostic performance and reduce interpretation inconsistencies. Many studies [18,19,20,21] suggested that AI-based CAD systems have potential clinical utility in csPCa detection. However, the performance of CAD systems reported in these studies may be dataset-specific, and their generalization, that is, performance on outside datasets, has not been well studied. External validation in a multicenter, multivendor clinical setting is therefore necessary before CAD systems are applied to radiologists’ workflow.

In this study, previously trained AI algorithms were embedded into a proprietary structured reporting software, and radiologists simulated their real-life work scenarios to interpret and report the PI-RADS category of each case using this AI-based software. The purpose of this study is to compare the diagnostic performance, reading and reporting time, and diagnostic confidence of radiologists in detecting MRI-visible csPCa on MRI with and without AI-based software.

Materials and methods

Radiologists from four hospitals participated in this study. The mpMRI images were retrospectively gathered from three hospitals. No study data were used in the previous development of the AI models.

Study dataset

We collected mpMRI images from three hospitals (Peking University First Hospital, the Second Affiliated Hospital of Dalian Medical University and Fujian Medical University Union Hospital) between June 2017 and August 2018. All patients had clinical indications for prostate mpMRI examination, underwent both TRUS-guided systematic (12- or 6-core needles) and targeted biopsy after mpMRI examination, and received no prostate cancer-related treatment before the examination. Eleven different MR scanners were used in the three hospitals for the acquisition of prostate mpMRI. The detailed protocols of mpMRI are shown in Table 1. The correlated clinical information was also collected, including PSA value, pathological results and clinical follow-up results. Exclusion criteria were (a) ineligible image quality, (b) cases showing obvious extracapsular extension, diffuse pelvic lymphadenopathy and/or bone metastasis, and (c) mismatch between the mpMRI image and the pathology result, which includes cancer that is not visible on imaging and images that show cancer but are pathologically negative.

Reference standard

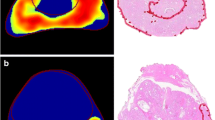

A combined pathology [22] was created from the systematic and targeted biopsy and used as the reference standard. A total of 12 dedicated urologists (10–35-year experience in prostate biopsies) from three hospitals performed the prostate biopsy using the same biopsy techniques with their own hardware, i.e., double-plane B-ultrasounds (LOGIQ E9, GE; EPIQ 7, Philips; Hivision Ascendus, Hitachi; RS80A, Samsung), transrectal probes and corresponding puncture needle guns. For the systematic biopsy, 12- or 6-core needle biopsies were adopted. For the targeted biopsy, based on structured reports prepared by dedicated urogenital radiologists during the clinical routine, lesions suspected of malignancy were marked on a prostate sector map [23]. At least one urologist and one urogenital radiologist reviewed the MR images before biopsy in a multidisciplinary meeting to ensure accurate localization of suspicious lesions. When performing biopsies, the urologists examined each suspicious lesion with additional needle cores (2 to 5 cores). A total of 9 dedicated genitourinary pathologists (8- to 30-year experience in prostate pathology interpretation) analyzed and recorded the histopathology of each specimen. The criteria for a negative case were negative biopsy and no prostate cancer at more than one year of clinical follow-up. The criteria for a positive case were positive pathology with a Gleason score ≥ 7 or a Gleason score of 3 + 3 with volume ≥ 0.5 cc. For positive cases, two uro-radiologists (Z.S. and X.W. with 4 and 30 years of experience in prostate MRI diagnosis) mapped the pathological ground truth of each csPCa focus to the diffusion-weighted imaging and annotated the foci by consensus. The open-source software ITK-SNAP [24] (version 3.8.0 2019; available at www.itksnap.org) was used to annotate the tumor foci.

AI software description

A proprietary deep learning-based AI software was used for the study. It consists of four AI models: (i) MRI sequence classification, (ii) prostate gland segmentation and measurement [25], (iii) prostate zonal anatomy segmentation and (iv) csPCa foci segmentation and measurement. Additional file 1 shows detailed information on the development and performance of the AI software. The models were sequentially executed, and the results were automatically input into the PI-RADS structured report [26].

Reporting with the structured reporting software

Sixteen radiologists (1–5-year experience in prostate mpMRI interpretation) from four hospitals (Peking University First Hospital, the Second Affiliated Hospital of Dalian Medical University, Fujian Medical University Union Hospital and Sichuan University West China Hospital) were invited as readers. They were familiar with the PI-RADS (version 2.1) guideline and followed it in their practical work. Six of them had experience with more than 100 cases, two had experience with 50–100 cases, and the other eight radiologists had experience with fewer than 50 cases. The readers were blinded to all patients’ clinical information. Structured reporting software was used to read the cases and record the results. Before the study, the readers were trained to use the reporting software with 80 practice cases outside the study data. Each reader had five practice cases, of which two were read with AI assistance and three were read without AI assistance. Figure 1 illustrates the process of Reader-only and Reader-AI using the structured reporting software.

The Reader-only mode included four steps. In the first step, the readers measured the transverse, anterior–posterior and cranio–caudal diameters of the prostate gland. In the second step, the readers detected and measured suspected lesions: they recorded up to the four largest PI-RADS ≥ 3 lesions, noting the location, measuring the maximum diameter and assigning a PI-RADS score to each lesion. In the third step, the readers recorded other findings as they did in their clinical practice, including invasion of surrounding structures and other benign findings. In the fourth step, the readers summarized all the findings and gave an overall impression. The readers also rated their diagnostic confidence for each case on a 5-point scale (1 ≤ 25%, 2 = 25–50%, 3 = 50–75%, 4 = 75–90%, 5 ≥ 90%) [27]. The reading and reporting time of each case was automatically recorded by the software.

The Reader-AI mode followed the same process; the only difference was that there was AI help in the first and second steps. When the readers opened the patient list, the prostate gland and the suspicious lesions were already annotated and highlighted by the AI software. The readers might approve, reject or amend the AI findings at their discretion.

Lesions with PI-RADS scores higher than or equal to 3 were considered positive for csPCa lesions. Patients with at least one positive csPCa lesion were considered positive, and patients with no csPCa lesion were considered negative.
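The positivity rule above can be written as a small sketch. The function names and the list-of-scores input are illustrative assumptions, not part of the reporting software described in this study.

```python
from typing import List

# Threshold taken from the text: lesions with PI-RADS >= 3 count as positive.
PI_RADS_THRESHOLD = 3


def lesion_is_positive(pi_rads_score: int) -> bool:
    """Return True when a single lesion is considered positive for csPCa."""
    return pi_rads_score >= PI_RADS_THRESHOLD


def patient_is_positive(lesion_scores: List[int]) -> bool:
    """Return True when at least one recorded lesion is positive.

    An empty list (no lesion recorded) yields a negative patient.
    """
    return any(lesion_is_positive(score) for score in lesion_scores)
```

For example, a case with recorded scores `[2, 4]` would be classified as positive, while a case with no recorded lesion would be classified as negative.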

Crossover reading method

The 480 cases were randomly divided into two groups: group A and group B. We assigned the two groups of cases to the 16 readers, i.e., each reader received 30 cases, with 15 in group A and 15 cases in group B.

The reading study was conducted in two reading sessions with an interval of four weeks. In the first reading session, cases in group A were read with Reader-AI mode, while cases in group B were read with Reader-only mode. In the second reading session, cases in group A were read with Reader-only mode, while cases in Group B were read with Reader-AI mode. The crossover reading procedure is shown in Fig. 2.
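A minimal sketch of this crossover assignment is given below. It assumes that the two groups are of equal size (240 cases each, as implied by 15 + 15 cases for each of 16 readers); the function name, the fixed seed and the slicing scheme are hypothetical, since the randomization tool actually used is not described.

```python
import random


def assign_crossover(case_ids, n_readers=16, seed=0):
    """Split cases into groups A and B and build a two-session reading plan."""
    rng = random.Random(seed)  # fixed seed only to make the sketch reproducible
    shuffled = list(case_ids)
    rng.shuffle(shuffled)

    half = len(shuffled) // 2
    group_a, group_b = shuffled[:half], shuffled[half:]
    per_reader = half // n_readers  # 15 cases per group per reader for 480 cases

    plan = {}
    for reader in range(n_readers):
        a = group_a[reader * per_reader:(reader + 1) * per_reader]
        b = group_b[reader * per_reader:(reader + 1) * per_reader]
        plan[reader] = {
            # Session 1: group A with AI, group B without AI.
            "session_1": {"Reader-AI": a, "Reader-only": b},
            # Session 2 (four weeks later): the modes are switched.
            "session_2": {"Reader-AI": b, "Reader-only": a},
        }
    return plan


plan = assign_crossover(range(480))
```

With this layout every case is read once in each mode by the same reader, which is what makes the design self-controlled.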

Statistical analysis

All statistical tests were performed using R 4.2.0 (Comprehensive R Archive Network, www.r-project.org). Quantitative variables were given as the mean (standard deviation) for normally distributed data and as the median [minimum, maximum] for non-normally distributed data. The categorical variables are given as absolute frequency (relative frequency). The mean lesion-level sensitivity, patient-level sensitivity and specificity with 95% confidence intervals (CIs) across all 16 radiologists of the two reading modes were computed and then compared by the Chi-square test. The median reading and reporting time and the diagnostic confidence were compared by the Wilcoxon rank-sum test. All statistical tests were two-tailed with a 5% level of statistical significance.
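To illustrate the kind of comparison involved, the sketch below applies a Pearson Chi-square test to a 2 × 2 table built from the pooled lesion counts reported in the Results (206 and 140 detected lesions out of 349). This is an assumption-laden illustration in Python rather than the R code used in the study: the exact contingency table and whether a continuity correction was applied are not stated in the text, and the sketch uses no correction (R's `chisq.test` applies Yates' correction to 2 × 2 tables by default).

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson Chi-square test (no continuity correction) for [[a, b], [c, d]].

    Returns the test statistic and the two-sided p value for 1 degree of freedom.
    """
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    chi2 = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # Survival function of the Chi-square distribution with 1 degree of freedom.
    p_value = math.erfc(math.sqrt(chi2 / 2.0))
    return chi2, p_value


# Pooled lesion counts from the Results; rows are reading modes,
# columns are detected vs. missed csPCa lesions.
total_lesions = 349
detected_ai, detected_only = 206, 140
chi2, p = chi_square_2x2(
    detected_ai, total_lesions - detected_ai,
    detected_only, total_lesions - detected_only,
)
print(f"chi2 = {chi2:.2f}, p < 0.001: {p < 0.001}")
```

Because the same lesions were read in both modes, a paired test would also be a defensible choice; the unpaired table here is used only because it mirrors the test named in the text.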

Results

Clinical characteristics

A total of 480 cases were included in this study with a serum total PSA value of 7.69 [0.150, 100] ng/ml. A total of 180 (37.5%) cases were proven to be csPCa, with 349 MRI-visible csPCa lesions. Of the 180 positive cases, 39 cases had lesions located only in the peripheral zone, 28 cases had lesions located only in the transition zone, and 113 cases had lesions located in both peripheral and transition zones. The lesion volumes were calculated by summing the voxel volumes within the annotated areas of the reference standard. The median volume of MRI-visible csPCa lesions was 2.0 [1.0, 4.8] cm³. The clinical and demographic characteristics of the eligible cases are shown in Table 2.

Performance of Reader-AI and Reader-only

Table 3 shows the performance of Reader-AI and Reader-only. Reader-AI detected 302 suspected lesions. Among them, 206 (68.2%) were proved to be true positive lesions. Reader-only detected 304 suspected lesions, and 140 (46.5%) of them were proved to be true positive lesions. In terms of patient diagnosis, Reader-AI and Reader-only detected 168 (66.1%) and 159 (55.8%) true positive patients, respectively.

Table 4 shows the comparison of readers' diagnostic performance under the two reading modes. On the lesion level, the mean sensitivity improved from 40.1% for Reader-only to 59.0% for Reader-AI (18.9% increase; 95% CI [11.5, 26.1]; p < 0.001). On the patient level, the use of AI improved the mean specificity of radiologists from 57.7% to 71.7% (14.0% increase; 95% CI [6.4, 21.4]; p < 0.001) while preserving the sensitivity (88.3% for Reader-only and 93.9% for Reader-AI, p = 0.06).
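As a worked check of the lesion-level figures, the sketch below recomputes sensitivity from the pooled counts given earlier (206 and 140 true positive lesions among 349 MRI-visible csPCa lesions). The reported values are means across the 16 readers, so treating them as pooled proportions is an assumption; for the lesion-level results the two agree to one decimal place.

```python
def sensitivity(true_positives: int, total_positives: int) -> float:
    """Fraction of reference-standard positives that were detected."""
    return true_positives / total_positives


TOTAL_CSPCA_LESIONS = 349  # MRI-visible csPCa lesions in the reference standard

sens_ai = sensitivity(206, TOTAL_CSPCA_LESIONS)    # Reader-AI
sens_only = sensitivity(140, TOTAL_CSPCA_LESIONS)  # Reader-only

sens_ai_pct = round(100 * sens_ai, 1)
sens_only_pct = round(100 * sens_only, 1)
# Absolute difference in percentage points.
difference_pct = round(100 * (sens_ai - sens_only), 1)

print(sens_only_pct, sens_ai_pct, difference_pct)
```

Note that the 18.9% increase is an absolute difference in percentage points, not a relative change.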

Reading and reporting times and diagnostic confidence

The time records of the readings were missing in two cases. The median reading and reporting time of one case was reduced by 56.3% from 423 to 185 s (238-s decrease, 95% CI [219, 260]; p < 0.001) with the AI-aided procedure (Fig. 3A). The median diagnostic confidence was increased by 10.3% from 3.9 to 4.3 (0.4-score increase, 95% CI [0.3, 0.5]; p < 0.001) with the AI-aided procedure (Fig. 3B).
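The relative changes quoted above follow directly from the reported medians. The short sketch below reproduces the arithmetic; the helper name is illustrative.

```python
def relative_change_pct(before: float, after: float) -> float:
    """Absolute relative change from `before` to `after`, in percent."""
    return round(100 * abs(after - before) / before, 1)


# Median reading and reporting time per case, in seconds.
time_decrease_s = 423 - 185
time_reduction_pct = relative_change_pct(423, 185)

# Median diagnostic confidence score on the 5-point scale.
confidence_increase_pct = relative_change_pct(3.9, 4.3)

print(time_decrease_s, time_reduction_pct, confidence_increase_pct)
```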

Discussion

In this multicenter external validation study, the results showed that AI software substantially improved the lesion-level sensitivity and patient-level specificity of the readers while preserving patient-level sensitivity in detecting MRI-visible csPCa. Meanwhile, with the help of AI, the radiologists reduced the median reading and reporting time and increased their diagnostic confidence.

Recently, many studies [28] have emphasized the promising stand-alone AI performance for csPCa detection in mpMRI. Some researchers have further attempted to investigate how AI-assisted reading contributes to radiologists' interpretations of prostate MRIs. Several studies [26, 29,30,31] found that CAD-assisted reading improved sensitivity on patient level and/or lesion level, but specificity was sacrificed or not altered among radiologists. However, Niaf et al.'s study [32] showed that CAD could improve the classification specificity of lesions in the peripheral zone but not the sensitivity. The main finding in our study is that radiologists were significantly more sensitive to detecting MRI-visible csPCa foci with AI software assistance than they were without it, and their patient-level specificity also increased while not impairing patient-level sensitivity. The outcome discrepancy may be due to differences in external validation datasets, numbers of cases/lesions and parameters of the scanners. Of note, most previous studies provide results based on homogeneous data and propose the necessity of multicenter and multivendor research with an external dataset.

Similar to our study, Winkel et al. [33] conducted a study to validate the value of their prostate cancer CAD system for seven radiologists’ interpretations using a publicly available dataset in the PROSTATEx Challenge [34]. Their study used an external dataset, but the data were essentially homogeneous data from two different types of Siemens 3 T MR scanners, i.e., the MAGNETOM Trio and Skyra. The strength of our study is that the external data were collected from three different medical institutions. The mpMRI images were acquired using a total of 11 different MR devices with some variation in scan parameters. Thus, the data are very heterogeneous, which is a challenging task for AI algorithms.

In this retrospective study, the prevalence of csPCa was 37.5%. Although a retrospective dataset is not as representative as a prospective study in a real-world scenario, the prevalence in our dataset is close to the literature average and is reflective of real-world datasets. However, data variations existed among the 3 hospitals. On the one hand, hospitals 2 and 3 had more advanced cases than hospital 1. On the other hand, most patients were examined on 3 T scanners, while approximately 5% of patients were examined on 1.5 T scanners. This may be related to different clinical protocols among these hospitals. Despite these imbalances, our dataset simulates a real-life clinical scenario, and the AI software can work with these imbalances, which lays a foundation for future prospective studies.

Several studies [30, 35] assessed the impact of a CAD system on less-experienced and experienced readers in mpMRI interpretation. The CAD system can significantly improve the performance of less-experienced readers to achieve expert-level performance. In our study, the 16 readers were from four hospitals, and their diagnostic experience varied widely (1- to 5-year experience). Each reader obtained reading cases from both their hospital and other hospitals. Therefore, for every reader, the data are heterogeneous and unfamiliar. Even so, the results of this study still show a significant improvement in diagnostic efficacy by using AI, indicating that the AI software used in this study has substantial generalization capability.

In this study, the patient-level sensitivity of Reader-only was 88.3%, and it increased to 93.9% in Reader-AI mode (p = 0.06). Even though the statistical significance of the difference in patient-level sensitivity was not achieved, we suppose it might be observed by increasing the sample size in a future study. Regarding the data, the sensitivity of Reader-only for MRI-visible csPCa lesion detection was 40.1%, and it was significantly increased to 59.0% (p < 0.001) with the Reader-AI procedure. At the same time, there is an improved patient-level specificity in the Reader-AI mode (57.7% vs. 71.7%, p < 0.001), which is beneficial to avoid unnecessary biopsies. Some studies indicate that the ability of humans to detect large lesions is usually sufficient on their own [36, 37], and AI software does not provide extra help for radiologists [26]. Thus, we excluded cases with prominent lesions as well as cases with advanced cancer, i.e., obvious extracapsular extension, diffuse pelvic lymph adenopathy and/or bone metastasis. The omission of these cases may lead to an underestimation of the diagnostic performance of Reader-only. Our findings suggest that the ability of radiologists to detect difficult lesions was significantly improved with the help of AI. Given the significant improvement in the detection of MRI-visible csPCa lesions by the Reader-AI mode, the AI-aided results have greater value in guiding the localization of biopsy.

In our study, a significant shortening in diagnostic time was observed in Reader-AI mode. Even so, our study's overall diagnostic time was longer than previously reported [29, 33]. In contrast to a previous study that only recorded the timing of prostate cancer detection, our reading procedure recorded the timing of the complete prostate interpretation report. The PI-RADS guidelines recommend a structured prostate report consisting of prostate volume measurement, detecting, measuring, characterizing and locating suspicious lesions, as well as other findings in the entire pelvis. Although our AI software only assisted in parts of the workflow, we can see that the efficiency of the overall report has been improved, indicating that embedding AI into structured reports is a good method to improve efficiency in clinical practice.

This study has limitations. First, this is a retrospective study, and a prospective study would be of greater value. Second, the reference standard is based on cognitive fusion biopsy, which is subject to a higher risk of targeting errors than software fusion; thus, the annotations of the reference standard are possibly biased. In addition, cases with a mismatch between MR images and pathology were not analyzed. Results obtained using whole-mount step-section pathology as the reference standard would be more credible. Third, readers' experience varied widely; thus, it was difficult to perform a stratification analysis. The consistency among readers was also not analyzed because each case was evaluated by only one reader. Although there were 16 radiologists, each radiologist read only 30 cases. It would be better if all radiologists read all cases. Fourth, there is a gross imbalance in the datasets. Last but not least, we excluded data with poor image quality, which are encountered in daily work and should also be analyzed.

To conclude, this multicenter, self-crossover-controlled study showed that AI software, when tested in high-quality, real-world datasets, improves the diagnostic performance of radiologists by reducing the detection of false positive patients and also improving reading and reporting times and diagnostic confidence.

Availability of data and materials

The datasets used and analyzed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- CAD: Computer-aided detection
- CI: Confidence interval
- csPCa: Clinically significant prostate cancer
- ISUP: International Society of Urological Pathology
- mpMRI: Multiparametric magnetic resonance imaging
- PI-RADS: Prostate Imaging Reporting and Data System
- PSA: Prostate-specific antigen
- TRUS: Transrectal ultrasound

References

Stabile A, Giganti F, Rosenkrantz AB et al (2020) Multiparametric MRI for prostate cancer diagnosis: current status and future directions. Nat Rev Urol 17:41–61

Ahmed HU, El-ShaterBosaily A, Brown LC et al (2017) Diagnostic accuracy of multi-parametric MRI and TRUS biopsy in prostate cancer (PROMIS): a paired validating confirmatory study. Lancet 389:815–822

Heidenreich A, Bellmunt J, Bolla M et al (2011) EAU guidelines on prostate cancer. Part 1: screening, diagnosis, and treatment of clinically localised disease. Eur Urol 59:61–71

Van der Leest M, Cornel E, Israël B et al (2019) Head-to-head comparison of transrectal ultrasound-guided prostate biopsy versus multiparametric prostate resonance imaging with subsequent magnetic resonance-guided biopsy in biopsy-Naïve men with elevated prostate-specific antigen: a large prospective multicenter clinical study. Eur Urol 75:570–578

Winkel DJ, Wetterauer C, Matthias MO et al (2020) Autonomous detection and classification of PI-RADS lesions in an MRI screening population incorporating multicenter-labeled deep learning and biparametric imaging: proof of concept. Diagnostics 10(11):951

Klotz L, Chin J, Black PC et al (2021) Comparison of multiparametric magnetic resonance imaging-targeted biopsy with systematic transrectal ultrasonography biopsy for biopsy-Naive men at risk for prostate cancer: a phase 3 randomized clinical trial. JAMA Oncol 7:534–542

Mottet N, van den Bergh RCN, Briers E et al (2021) EAU-EANM-ESTRO-ESUR-SIOG guidelines on prostate cancer-2020 update. Part 1: screening, diagnosis, and local treatment with curative intent. Eur Urol 79:243–262

Turkbey B, Rosenkrantz AB, Haider MA et al (2019) Prostate imaging reporting and data system version 2.1: 2019 update of prostate imaging reporting and data system version 2. Eur Urol 76:340–351

Gupta RT, Mehta KA, Turkbey B, Verma S (2020) PI-RADS: past, present, and future. J Magn Reson Imaging 52:33–53

Woo S, Suh CH, Kim SY, Cho JY, Kim SH (2017) Diagnostic performance of prostate imaging reporting and data system version 2 for detection of prostate cancer: a systematic review and diagnostic meta-analysis. Eur Urol 72:177–188

Lee MS, Moon MH, Kim YA et al (2018) Is prostate imaging reporting and data system version 2 sufficiently discovering clinically significant prostate cancer? Per-lesion radiology-pathology correlation study. AJR Am J Roentgenol 211:114–120

Johnson DC, Raman SS, Mirak SA et al (2019) Detection of individual prostate cancer foci via multiparametric magnetic resonance imaging. Eur Urol 75:712–720

Westphalen AC, McCulloch CE, Anaokar JM et al (2020) Variability of the positive predictive value of PI-RADS for prostate MRI across 26 centers: experience of the society of abdominal radiology prostate cancer disease-focused panel. Radiology 296:76–84

Smith CP, Harmon SA, Barrett T et al (2019) Intra- and interreader reproducibility of PI-RADSv2: a multireader study. J Magn Reson Imaging 49:1694–1703

Girometti R, Giannarini G, Greco F et al (2019) Interreader agreement of PI-RADS v. 2 in assessing prostate cancer with multiparametric MRI: a study using whole-mount histology as the standard of reference. J Magn Reson Imaging 49:546–555

Byun J, Park KJ, Kim MH, Kim JK (2020) Direct comparison of PI-RADS Version 2 and 2.1 in transition zone lesions for detection of prostate cancer: preliminary experience. J Magn Reson Imaging 52:577–586

Wildeboer RR, van Sloun RJG, Wijkstra H, Mischi M (2020) Artificial intelligence in multiparametric prostate cancer imaging with focus on deep-learning methods. Comput Methods Programs Biomed 189:105316

Twilt JJ, van Leeuwen KG, Huisman HJ, Fütterer JJ, de Rooij M (2021) Artificial intelligence based algorithms for prostate cancer classification and detection on magnetic resonance imaging: a narrative review. Diagnostics 11(6):959

Padhani AR, Turkbey B (2019) Detecting prostate cancer with deep learning for MRI: a small step forward. Radiology 293:618–619

Mehralivand S, Yang D, Harmon SA et al (2022) Deep learning-based artificial intelligence for prostate cancer detection at biparametric MRI. Abdom Radiol 47:1425–1434

Khosravi P, Lysandrou M, Eljalby M et al (2021) A deep learning approach to diagnostic classification of prostate cancer using pathology-radiology fusion. J Magn Reson Imaging 54:462–471

Radtke JP, Schwab C, Wolf MB et al (2016) Multiparametric magnetic resonance imaging (MRI) and MRI-transrectal ultrasound fusion biopsy for index tumor detection: correlation with radical prostatectomy specimen. Eur Urol 70:846–853

Weinreb JC, Barentsz JO, Choyke PL et al (2016) PI-RADS prostate imaging—reporting and data system: 2015, version 2. Eur Urol 69:16–40

Yushkevich PA, Piven J, Hazlett HC et al (2006) User-guided 3D active contour segmentation of anatomical structures: significantly improved efficiency and reliability. Neuroimage 31:1116–1128

Zhu Y, Wei R, Gao G et al (2019) Fully automatic segmentation on prostate MR images based on cascaded fully convolution network. J Magn Reson Imaging 49:1149–1156

Zhu L, Gao G, Liu Y et al (2020) Feasibility of integrating computer-aided diagnosis with structured reports of prostate multiparametric MRI. Clin Imaging 60:123–130

Garcia-Reyes K, Passoni NM, Palmeri ML et al (2015) Detection of prostate cancer with multiparametric MRI (mpMRI): effect of dedicated reader education on accuracy and confidence of index and anterior cancer diagnosis. Abdom Imaging 40:134–142

Twilt JJ, van Leeuwen KG, Huisman HJ, Fütterer JJ, de Rooij M (2021) Artificial intelligence based algorithms for prostate cancer classification and detection on magnetic resonance imaging: a narrative review. Diagnostics 11(6):959

Giannini V, Mazzetti S, Armando E et al (2017) Multiparametric magnetic resonance imaging of the prostate with computer-aided detection: experienced observer performance study. Eur Radiol 27:4200–4208

Hambrock T, Vos PC, Hulsbergen-van de Kaa CA, Barentsz JO, Huisman HJ (2013) Prostate cancer: computer-aided diagnosis with multiparametric 3-T MR imaging–effect on observer performance. Radiology 266:521–530

Greer MD, Lay N, Shih JH et al (2018) Computer-aided diagnosis prior to conventional interpretation of prostate mpMRI: an international multi-reader study. Eur Radiol 28:4407–4417

Niaf E, Lartizien C, Bratan F et al (2014) Prostate focal peripheral zone lesions: characterization at multiparametric MR imaging–influence of a computer-aided diagnosis system. Radiology 271:761–769

Winkel DJ, Tong A, Lou B et al (2021) A novel deep learning based computer-aided diagnosis system improves the accuracy and efficiency of radiologists in reading biparametric magnetic resonance images of the prostate: results of a multireader, multicase study. Invest Radiol 56:605–613

Litjens G, Debats O, Barentsz J, Karssemeijer N, Huisman H (2017) ProstateX challenge data. Cancer Imaging Arch 10:K9TCIA. https://doi.org/10.7937/K9TCIA.2017.MURS5CL

Labus S, Altmann MM, Huisman H et al (2022) A concurrent, deep learning-based computer-aided detection system for prostate multiparametric MRI: a performance study involving experienced and less-experienced radiologists. Eur Radiol. https://doi.org/10.1007/s00330-022-08978-y

Purysko AS, Bittencourt LK, Bullen JA, Mostardeiro TR, Herts BR, Klein EA (2017) Accuracy and interobserver agreement for prostate imaging reporting and data system, version 2, for the characterization of lesions identified on multiparametric MRI of the prostate. AJR Am J Roentgenol 209:339–349

Thai JN, Narayanan HA, George AK et al (2018) Validation of PI-RADS version 2 in transition zone lesions for the detection of prostate cancer. Radiology 288:485–491

Funding

This work was supported by Capital Health Research and Development of Special (Recipient: XYW; Award no. 2020-2-40710).

Author information

Contributions

ZS was involved in conceptualization, data curation, formal analysis, investigation, methodology, project administration, resources, software, validation, visualization and writing—original draft. KW contributed to software, supervision, validation and visualization. ZK, ZX, YC, NL, YY and BS were responsible for investigation and resources. PW and XPW participated in formal analysis and software. XZ was responsible for software. XYW took part in conceptualization, data curation, formal analysis, investigation, methodology, project administration, resources, software, validation, visualization and writing—reviewing and editing. All authors have read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

This study was conducted in accordance with the Declaration of Helsinki and was approved by the institutional review board of the Peking University First Hospital. The informed consent was waived.

Consent for publication

Not applicable.

Competing interests

PW and XPW are employees of Beijing Smart Tree Medical Technology Co. Ltd. (Beijing, China). The other authors declare that they have no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1:

Information on the development and performance of the AI software.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sun, Z., Wang, K., Kong, Z. et al. A multicenter study of artificial intelligence-aided software for detecting visible clinically significant prostate cancer on mpMRI. Insights Imaging 14, 72 (2023). https://doi.org/10.1186/s13244-023-01421-w

DOI: https://doi.org/10.1186/s13244-023-01421-w