Abstract

The rapid advancement and deployment of Artificial Intelligence (AI) poses significant regulatory challenges for societies. While it has the potential to bring many benefits, the risks of commercial exploitation or unknown technological dangers have led many jurisdictions to seek a legal response before measurable harm occurs. However, the lack of technical capabilities to regulate this sector, despite the urgency to do so, has resulted in regulatory inertia. Given the borderless nature of this issue, an internationally coordinated response is necessary. This article focuses on the theoretical framework being established in relation to the development of international law applicable to AI and the regulatory authority to create and monitor enforcement of said law. The authors argue that the road ahead remains full of obstacles that must be tackled before the above-mentioned elements see the light of day, despite the attempts currently being made to that end.

Introduction

Artificial Intelligence (AI) presents a unique challenge for the international community, requiring a flexible approach (Gurkaynak et al., 2016, Abulibdeh et al., 2024) as nations race for its development (Armstrong et al., 2016) despite the uncertainties it entails (Emery-Xu et al., 2023). Its rapid growth, the decision-making responsibilities given to it, and the significance of its outcomes lead regulators to seek its governance through human principles. These include human oversight, understanding, and ethical reasoning (Miailhe and Hodes, 2017). The end goal is building trust and ensuring its effective governance (Alalawi et al., 2024). Laws are needed primarily because its progress is highly unpredictable and uncontrollable, and numerous risks have been identified (Buiten, 2019). These include introducing AI systems capable of changing people’s behavior, exploiting vulnerabilities, and examining the trustworthiness of citizens (EU AI Act, 2024). However, a balance must be struck to ensure that rules do not impede progress. While national and local regulations are important, a global framework is better suited to address the limitations at the transnational level. Government legislation often focuses on the development of the local AI industry, its ethics, and challenges (Turner, 2019). Nonetheless, nations may be hesitant to address global governance and the need for rules and institutions. A universal regime could provide model laws, drawing on expertise from nations around the world. This would be a first step towards greater harmonization (DiMatteo et al., 2022).

Nations and companies are investing heavily in this field. However, this poses a potential risk for developing and Least Developed Countries (LDCs), which may find themselves left behind (Miailhe and Hodes, 2017). Governments with significant AI investments may view it as a state-controlled resource. Countries with limited technological development capacities may argue that it should be considered a common heritage of mankind. The regulation of this sector at the international level is complex due to competing interests. Some may prioritize innovation and economic growth over regulation, while others may argue for a more cautious approach. The problems identified require coordination via a new global AI regulatory authority to tackle current and future issues emerging in this field. The objective is to anticipate and respond to rapidly changing technological developments, balance the interests of different stakeholders, and provide the necessary technical expertise and resources to effectively regulate this sector. Doing so will help ensure that the benefits are shared and that the risks associated with its development are minimized.

This article discusses the global framework on the basis of which a regulatory authority would be established, with the mission of enacting international law and monitoring its enforcement while taking the relevant stakeholders into account. The authors argue that a new transnational legal architecture is eventually bound to be created for AI, similar to what happened with other topics and challenges. They also highlight current attempts in that direction, showing that further efforts are needed and that many factors and circumstances must be considered, including the current state of international law and organizations, their effectiveness, and state participation.

The article starts by presenting the theoretical framework, followed by a literature review. After that, the role of the different actors in this context is examined (states, natural persons, civil society organizations, companies, and international organizations). The role of the AI regulatory authority in establishing and monitoring international law is then discussed. Based on the above, the authors set out the road ahead for the regulation of this field.

AI risks for the international community

The use of AI presents various risks that directly or indirectly impact the international community. These risks depend on the level of AI development and human agency (Turchin and Denkenberger, 2020). They include potential job losses and social instability in sectors such as finance, healthcare, and entertainment; the use of AI as a weapon; lack of accountability and transparency; algorithmic bias; data privacy breaches; virtual threats and cyber conflicts (Taeihagh, 2021; Lin, 2019; Butcher and Beridze, 2019). Other risks involve dependence on technology, loss of human connection, fairness, interpretability, safety, robustness and security, lawfulness, misinformation and manipulation, lack of compliance, and economic inequality and taxation (Gov.UK, n.d.; Marr, 2023; Golbin et al., 2020; Calo, 2017). AI poses significant risks to peace and security, necessitating a coordinated response (Ovink, 2024). It can globally impact hardware through viruses, damage populations via military autonomous systems, be used by governments to control citizens and lead to a war-inducing arms race. Critical global infrastructure, such as power generation, food supply, and transport systems, may be hacked, destroying technological advancements. Biohacking viruses, ransomware, and autonomous weapons are also concerns. Regarding human society, risks include AI’s impact on the market economy, potential human replacement by robots, brain modification technologies, a transnational totalitarian computer system, dangerous scientific progress, global health issues, and the potential for AI to control the planet. These risks can emerge at various stages of AI development (Turchin and Denkenberger, 2020; Schwalbe and Wahl, 2020).

AI benefits for the international community

The use of AI brings a wide range of benefits, including economic growth, improved performance, increased comfort and efficiency, enhanced safety through crime detection, and sustainability improvements. It also fosters social development and better quality outcomes in fields like healthcare and education (Sharma, 2024; Alhosani and Alhashmi, 2024; AI for Good, n.d.). However, some argue that these benefits should not come at the expense of human rights (Marwala, 2024). There are calls for frameworks to guide AI development to benefit all humanity equally (Gibbons, 2021), with global governance playing a crucial role in ensuring this (Leslie et al., 2024) by prioritizing ethics, the rule of law, and addressing challenges (UN, 2023a, 2023b). International groups, such as the AI Alliance, have been established to ensure the responsible use of technology for everyone’s benefit (IBM, 2023; US Department of State, 2023). It’s worth noting that the benefits may vary between the North and South due to differing priorities and perspectives (Anthony et al., 2024). This is necessary because AI research is becoming increasingly complex and resource-intensive, with different approaches to innovation further complicating the situation (Kerry et al., 2021). The AI advisory body of the UN, in its report “Governing AI for Humanity,” established the first guiding principle that AI should be governed inclusively, by and for the benefit of all. It justified this by noting that most of the global population is not capable of using AI to improve their lives, necessitating multi-stakeholder cooperation (AI Advisory Body, 2023).

Why does AI need to be regulated internationally?

The risks posed by AI have the potential to create various social, economic, and ethical problems. Ignoring these risks could result in significant global negative effects and hinder technological progress. This is where international laws play a crucial role, setting goals and standards to reduce the likelihood of risks (Cha, 2024), depending on their level and potential damage (Strous, 2019), which can occur in any territory (Trager et al., 2023). Stakeholders, including national and international policymakers, lawyers, industry experts, and academics, must be involved (Pesapane et al., 2021). Key issues requiring regulation include human rights protection, questions of sovereignty, state responsibility, dispute settlement, and the North-South divide (Lee, 2022). The ultimate goal is to balance societal interests with safe innovation while considering corporate interests (Cha, 2024). Since the AI industry is global, with networks and computing resources spread across many countries, international cooperation is essential (Trager et al., 2023). As AI entities are deemed to be operating within legal bounds as long as they are subject to human control, they should not be left to the discretion of states and corporations as they may prioritize their interests over other considerations (Burri, 2018). Moreover, confusion and uncertainty will reign if we use solutions on a case-by-case basis (Andrés, 2021). As control issues become more prevalent, the accumulated experience of this field dealing with similar matters could provide valuable guidance (Boon, 2014). Questions such as responsibility and decision-making authority in AI-related incidents, and the delegation of functions to AI entities, will become increasingly common. Moreover, domestic rules apply only nationally (Cha, 2024), while their extraterritorial application is often seen as a form of legal imperialism (Wang, 2024).
Domestic regulations face criticism for failing to provide a unified definition of AI, adequately address key issues, and ensure compliance (Ratcliff, 2023). International organizations have begun recognizing the need for transnational AI regulations (Hars, 2022), including private ones (Veale et al., 2023), where limitations have been noticed (Nindler, 2019). Scholars emphasize the importance of such legislation, drawing parallels with fields like energy law (Huang et al., 2024), highlighting specific concerns (Erman and Furendal, 2020a), and stressing the need for global collaboration (Feijoo et al., 2020). Relying solely on national approaches could lead to fragmented global regulations, as nations compete for AI development and potentially implement inadequate rules (Cihon, 2019). Some cooperative efforts are already in place, such as transatlantic collaborations (Broadbent, 2021). However, reaching a consensus on the values to include in global regulations remains challenging (HEC Paris Insights, 2022), despite nations’ eagerness to lead these efforts (Meltzer, 2023) and call for global meetings (Baig, 2024). Overall, the extensive experience of international law can provide crucial support to a new field where these questions will arise (Burri, 2018).

Theoretical framework

The theoretical framework centers on the way an AI regulatory authority would balance and organize the role of the different actors globally, including their powers and duties. This is a complicated task that requires great effort to adopt laws that consider the interests and needs of all of them, mainly states, whose consent is crucial to establish and enforce AI rules domestically (Besson, 2016).

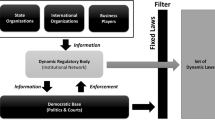

Figure 1 starts with the international organization responsible for regulating AI covering its various competences such as rulemaking, enforcement, and oversight. It also includes the representation of the different actors involved in the regulatory process mainly states, other organizations, private companies, and civil society. It is worth mentioning that some add other actors to this classification such as the market and the academic community. Those are considered as part of private companies and civil societies in this article (Chinen, 2023).

The question of who will regulate AI is closely tied to current global trends. As an emerging sector, it is already the subject of intense scholarly debate, with discussions about whether mechanisms similar to those currently tackling other fields should be used (Cogan, 2011; Losifidis, 2016). This also involves examining the form that international institutional governance would take considering other challenges such as those related to sustainable development goals (Neuwirth, 2024). The need for common standards and the creation of universal governance instruments is widely recognized (Cogan, 2011; Losifidis, 2016). Its effect on governance is also acknowledged to prevent its disruptive effects from being overlooked or understated (McGregor, 2018). This has been examined highlighting a multitude of issues and challenges particularly the way international law will be impacted and the legal disruption that may occur (Liu et al., 2020a).

This is happening in a context where the development of international law is a very complicated process that spans years or even decades, depending on the field and the sensitivity of the issue. This has been the case, for instance, for climate change (Peel, 2012), transboundary water resources (McCaffrey, 2019) and other fields. The complication stems from the fact that states dominate the global arena given the absence of authority over them. Based on this, laws cannot be adopted without their consent (Klabbers, 2013). Yet, nations have different interests they seek to either achieve or protect, affecting the adoption as well as the implementation of laws. This reality has led many to question the relevance of international law (Chinkin and Kaldor, 2017) and state responsibility under this framework, as many nations violate regulatory frameworks without suffering any consequences (Hakimi, 2020). This is because the international community and global governance mechanisms do not have the necessary legitimacy to force a shift in a state’s behavior (Franzie, 2018). Hence, compliance is often seen as the main challenge preventing the proper implementation of global rules (Howse and Teitel, 2010). Nonetheless, the noncompliance of specific nations does not mean that the system is completely inefficient. Many others do obey the laws because their interests are not hindered by them (Pickering, 2014), because rewards exist for doing so, such as cooperative gains and access to finance (Van Aaken and Simsek, 2021), or simply because they are concerned about losing their reputation through noncompliance (Brewster, 2009).

Scholars will disagree among themselves over the shape and functions of an international structure, and even over whether one should be created, to address the global AI governance regime. This is a recurring scenario that has occurred every time the international community has tried to develop regulatory frameworks. AI professionals and experts must learn from the mistakes made in other fields to avoid them.

Literature review

The regulation of AI has been the subject of discussion (Goh and Vinuesa, 2021) for several decades now (Jackson, 2019), and a race among nations to regulate it is taking place (Broadbent, 2021). Competition also occurs between countries and supra-national institutions such as the Council of Europe and the United Nations Educational, Scientific and Cultural Organization (Oprea et al., 2022). Regardless, the questions of the most appropriate way to regulate AI (Bensoussan, 2020) and whether laws are needed remain unanswered (Han et al., 2020). What is certain is that lawmakers must possess a deep understanding of AI issues to adopt appropriate rules (Vinuesa et al., 2020), as the traditional ones are being challenged (Du, 2023). The interplay between the law and AI as a subject is not novel if one considers the literature related to regulations and technology over the decades (Dellapenna, 2000), particularly on this topic (Governatori et al., 2022; Sartor et al., 2022; Villata et al., 2022), where governance represents one fundamental aspect tackled (Chinen, 2023). The role of international law in the progress of the AI field (Castel and Castel, 2016) is widely debated (Nash, 2019) in relation to its fundamental principles, as well as questions of ownership and liability (Tzimas, 2021).

AI rules are conceived as a means for the protection of public interests and ethics, as well as for avoiding and tackling existing inequalities resulting from such technologies (Gantzias, 2020). This is very much needed considering the ambiguity surrounding the rules associated with this field across continents (Walker-Osborn and Chan, 2017), despite the huge complexities related to this discussion (Dremliuga et al., 2019). The obstacles are the result of a lack of consensus over the definition of the term (Smuha, 2021) and the different regulatory theories that can apply, mainly those related to the public interest; conspiracy; organizational and capitalist state theories (Gantzias, 2020). This is in addition to the broad nature of this sector, which impacts other fields given the existence of various AI techniques and applications, and to the regulatory race in which various legal regimes are impacted (Smuha, 2021). These include international trade law; international criminal law (Borlini, 2023); international economic law (Peng et al., 2021); international human rights law (Roumate, 2021; Langford, 2020), where rights are discussed even for robots, for instance (Gellers, 2020); intellectual property rights (Hashiguchi, 2017); international humanitarian law (Hua, 2019); international nuclear security law (Anastassov, 2021); and the relation between international law and regional rules such as European Union (EU) law considering this issue (Committee on Legal Affairs 2021; The Editors of the EAPIL, 2021), even from a global private regulatory framework perspective (Poesen, 2022). All these norms and rules represent international law’s initial response to AI (Zekos, 2021), as the rules often follow technological developments (Payne, 2018), considering the multilateral context and the different institutions (Garcia, 2021).

The impact of technology on international law is relevant in terms of shaping and changing it (Deeks, 2020a), especially with regard to contributing to its establishment, enforcement (Deeks 2020a, 2020b) and the decision-making process (Arvidsson and Noll, 2023). This is in contrast to others who claim that AI may result in the displacement or even destruction of international law (Maas, 2019a) or the re-evaluation of the existing rules (Zekos, 2021), as the law reaches its limits in its regulatory attempts (Alschner, 2021). In short, some claim that reliance on public international law would not yield the expected results in terms of controlling and tackling AI developments (Liu, 2018), as structural changes to this framework are being noticed (Burchardt, 2023a). These include the empowerment and emergence of new public and private actors beyond the nation; changing power dynamics among states; increasing fragmentation and legal gaps; as well as challenging and reinforcing values simultaneously (Burchardt, 2023b). Moreover, questions with regard to AI’s potential personality (Hárs, 2022) and its limits (Chesterman, 2020), as well as its legal classification as a global commons, are being raised (Tzimas, 2018).

Given this reality, scholars are wondering whether more or fewer rules are needed (Woodcock, 2021), if a balance can be achieved in the short and long term (McCarty, 2018), and whether new concepts are required to tackle the disruption taking place in the legal field due to AI (Liu et al., 2020a), where current jurisprudence is perceived as inadequate (Paliwala, 2016). In this context, AI principles have been identified (Weber, 2021). Self-regulation initiatives by tech companies have emerged given the absence of a globally established framework and the private sector’s push towards minimal rules (Butcher and Beridze, 2019). A global agreement on AI is not currently in place (Mishalchenko et al., 2022), as the fragmentation of the global scene into multiple institutions and existing norms further complicates this situation (Schmitt, 2022). Overall, proposals range from existing norms and instruments to the creation of new rules and institutions (Koniakou, 2023). Some scholars have developed specific conceptual frameworks to that end, stating the rationale for doing so (de Almeida et al., 2021). Others called for the establishment of a global standard for AI regulation (Wu and Liu, 2023), while some consider regional rules such as the EU AI Act as a potential global regulation (Musch et al., 2023). This is part of a larger debate where the focus is on the best way to regulate this field (de Almeida et al., 2020). Approaches such as pragmatism to this matter are being considered (Ellul et al., 2021), in addition to whether regulations should target specific sectors or individual areas (Finocchiaro, 2023). Additionally, conversations concerning the adoption of strict or soft rules (Papyshev and Yarime, 2022) and the use of regulatory sandboxes and risk-based approaches are underway (Díaz-Rodríguez, 2023).

Overall, scholars are debating the efficiency of regulations to tackle AI (O’Halloran and Nowaczyk, 2019), and their impact on its subjects: states, natural persons, and corporations (Lee, 2022), claiming that smart regulations are the way forward (Hillman, 2023). Others claim that the approach of wait and see is the most effective one (Villarino and Vijeyarasa, 2022). AI affects various topics such as human rights, sovereignty, state responsibility, dispute settlement, war, and the North-South divide (Lee, 2022); the rule of law (Greenstein, 2022); democracy (Leslie et al., 2021); corporate responsibility (Lane, 2023); autonomous weapons (Piccone, 2018); the judicial system overall (Said et al., 2023); weapons of mass destruction (Chesterman, 2021a); discrimination (Packin and Aretz, 2018); autonomous shipping (Soyer and Tettenborn, 2021); robots (Humble, 2023); space (Martin and Freeland, 2021); health (Murdoch, 2021); law enforcement (Alzou’bi et al., 2014); liability (Kingston, 2018); justice (Bex et al., 2017) and environmental concerns (Qerimi and Sergi, 2022). Overall challenges are also noted (Abashidze et al., 2021), especially regarding the application of laws to AI, such as its constant development, its lack of transparency, its ability to avoid legal limitations (Hoffman-Riem 2020), and its lack of accountability (McGregor, 2018).

It is worth mentioning that, for the time being, international law lags behind when it comes to the adoption of appropriate rules for governing AI (Chache et al., 2023), given the complexity surrounding the adoption of a suitable regulatory design, especially concerning the question of the exact time to make a regulatory intervention (Walz and Firth-Butterfield, 2019). This has resulted in suggestions to adopt a hybrid governance perspective via public-private partnerships (Radu, 2021). This gains further importance when one considers the calls for ensuring that humans govern through laws and not AI (Gantzias, 2021), and the need for flexibility (Johnson, 2022). The topic is becoming increasingly political (Tinnirello, 2022), particularly given the diverging public views associated with its regulation (Bartneck et al., 2023), society’s responsibility (Hilton, 2019), the limits of law and emerging dilemmas (Chesterman, 2021b).

Overview of most pertinent legal initiatives and regulations

The European Parliament and Council passed a regulation in 2024, establishing harmonized rules for AI. This legislation encompasses general provisions and identifies prohibited AI practices. It classifies AI systems by risk, specifying stringent requirements for those deemed high-risk. It outlines responsibilities for providers, users, and other stakeholders involved with high-risk AI systems. The act details the roles of notifying authorities and notified bodies, and sets standards for assessments, certifications, and registrations. It mandates transparency for certain AI systems and supports innovative measures. Governance structures are put in place, including the European AI Board, national competent authorities, and an EU database for standalone high-risk AI systems. The regulation provides for post-market monitoring, facilitates the sharing of information on incidents and malfunctions, and ensures market surveillance. It also introduces codes of conduct, confidentiality requirements, and penalties, and outlines procedures for delegation of power and committee operations (EU AI Act, 2024).

The Council of Europe established a Committee on Artificial Intelligence (CAI) to lead international negotiations aimed at creating a comprehensive legal framework. This involves facilitating discussions among nations in line with the UN sustainable development agenda (CAI, 2021). In 2023, the Committee published a Revised Zero Draft [Framework] Convention on Artificial Intelligence, Human Rights, Democracy, and the Rule of Law (Council of Europe, 2024). This document, which became a binding treaty, covers general provisions and obligations; principles related to activities within the lifecycle of artificial intelligence systems; remedies; assessment and mitigation of risks and adverse impacts; convention implementation; follow-up mechanism and cooperation; and final clauses (Council of Europe, 2024).

The UN Educational, Scientific and Cultural Organization (UNESCO) adopted recommendations on the ethics of AI, covering various systems primarily related to education, science, culture, communication, and information. These aim to establish a global framework comprising values, principles, and actions to guide stakeholders in achieving multiple goals. The core values emphasized are respect, protection, and promotion of human rights, fundamental freedoms, and human dignity; environmental and ecosystem health; diversity and inclusiveness; and fostering peaceful, just, and interconnected societies. The principles include proportionality and non-harm; safety and security; fairness and non-discrimination; sustainability; privacy and data protection; human oversight and determination; transparency and explainability; responsibility and accountability; awareness and literacy; and multi-stakeholder, adaptive governance and collaboration. The policy action areas include ethical impact assessments, ethical governance and stewardship, data policies, development and international cooperation, environmental and ecosystem considerations, gender issues, cultural impacts, education and research, communication and information, economic and labor considerations, and health and social well-being (UNESCO, 2021).

The People’s Republic of China has enacted several laws concerning AI, particularly since 2021 (Kachra, 2024). These include the 2023 Deep Synthesis Provisions, which are expected to regulate AI-generated content at every stage until its publication (Cyberspace Administration of China, 2023a). The Internet Information Service Algorithmic Recommendation Management Provisions, enforced in 2022, aim to protect user rights in mobile applications by prohibiting fake accounts and the manipulation of traffic numbers (Creemers et al., 2022). Additionally, China adopted the Interim Measures for Generative Artificial Intelligence Service Management, through which legislators seek to balance innovation with legal governance based on specific principles (Cyberspace Administration of China, 2023b).

The United States adopted several federal regulations concerning AI. These include the White House Executive Order on AI, which covers numerous sectors, and the White House AI Bill of Rights, which lays down specific principles (White & Case, 2024). Additionally, various state legislations have been adopted concerning safety, data privacy, transparency, accountability, and other topics. Overall, the number of federal laws and state rules on this topic has tremendously increased over the years, with some states, such as California, being more ambitious than others (Lerude, 2023).

Artificial intelligence, international law, and global actors

This section will examine the role of the various relevant stakeholders in the creation of international law applicable to AI and a regulatory authority. The purpose is to highlight their role in the process that has already been initiated.

States

States represent the most relevant rule-makers in the global arena (Chesterman 2022). They are the main actors under international law, with the authority to agree on global rules and implement them domestically. Treaties established by nations are used to organize relations among them concerning specific issues (Chinen, 2023), considering each one’s sovereignty, rights, and duties (Pagallo et al., 2023). Nations are held accountable by other nations for their actions or lack thereof, while current rapid changes, especially from a technological perspective, are complicating the ability to regulate the evolving landscape (Weiss 2011). This includes AI (Langford, 2020), which is being used by nations to impact global developments (Hwang and Rosen, 2017) towards a more desirable future, considering various factors and values (Gabriel, 2020) and the potential changes affecting the shaping of the legal regime (Maas, 2019b). In fact, governments are debating whether such a framework is needed, the approach to use and the actors to be involved (Cath, 2018a), as political legitimacy plays an important role (Erman and Furendal, 2022a) in addition to balancing innovation with risks (Miailhe and Lannquist, 2022).

Given the absence of an actual dialog among nations, even though collaboration is seen as the way forward, the regulation of AI remains difficult despite the need for it given the increasing sophistication and interconnectedness of AI systems (Butcher and Beridze, 2019; Sari and Celik, 2021), as well as its growing impact on the global economy (Saidakhrarovich and Sokhibjonovich, 2022). States are invited to provide suggestions on how to move forward (Roumate, 2021), while simultaneously using the new technological tools to implement international law (Deeks, 2020a), and address whether regulations necessitate amendments (Zekos, 2021). Existing regulatory frameworks require an accelerated adaptation and expansion to address the constantly evolving digitalization activities posing new questions for humanity and affecting old ones (Abashidze et al., 2021). This is of utmost importance as AI will affect sovereignty as well as social, cultural, economic and political norms, with effects that differ between developed and developing nations (Usman, Nawaz and Naseer n.d.). This has resulted in calls for a precautionary approach concerning this topic to balance opportunities and uncertainties (Leslie et al., 2021) when governments attempt to establish rules (Tóth et al., 2022) with which they themselves need to comply (Yara et al., 2021a).

The regime to be installed, the values and principles, and the decision-making process have not yet been discussed collectively among states (Erman and Furendal, 2022a, 2022b). These are expected to affect many domestic legal aspects of AI development (Maas, 2019b). Factors to be considered include institutional barriers, fragmentation of international law, geopolitical realities (Sepasspour, 2023), human rights, economic considerations (Abashidze et al., 2021), and the slow decision-making process globally (Hars, 2022). Doing so is challenging given the push to assume leadership at the domestic level (Ala-Pietilä and Smuha, 2021). The EU proposed through its various groups different suggestions for the regulation of this field. Meanwhile, Beijing suggested specific principles considering the priorities of the Chinese government, such as international competition and economic development. The US initiated a similar process while seeking to maintain its leadership. Meanwhile, many nations lack the technological and financial capacity to participate in this debate (Carrillo, 2020). Besides the ones mentioned, those active include Canada, France, Germany, India, Israel, Japan, Russia, South Korea, and the United Kingdom. Questions as to whether all these nations can collaborate through legal mechanisms creating trust and confidence and assigning responsibility remain unanswered (Gill, 2019; Boutin, 2022), due to the existence of diverging interests (Blodgett-Ford, 2018), as these nations are making huge investments in the various aspects related to this field (Winter, 2022). Overall, states at this stage are seeking to adopt a holistic regulatory approach to this topic and attempting to reflect such a strategy in the global sphere (Roberts et al., 2021). The difference in the various visions among governments is complicating the dialog and the attempt to adopt a proper AI regime (Cath et al., 2018b), as national administrations are themselves experimenting with AI systems (Surden, 2019). This is not to say that it is an easy task for nations to address, given the numerous aspects that must be dealt with (Akkus, 2023).

Given all the above, little progress has been made on this front (Haner and Garcia, 2019), signaling the need to further develop domestic rules (Gerke et al., 2020). It is in this context that some call on the scientific community to guide states in their endeavor to prevent harm and establish adequate responsibility and accountability mechanisms (Garcia, 2016). This is because neither China nor the US appears to favor establishing a regulatory authority, preferring instead to give their companies a competitive edge in the global race to develop AI. Without their support, such an authority would be ineffective in assessing the human rights, ethical, and societal implications of cutting-edge AI technology (Whyman, 2023; Sullivan, 2023). However, neither nation can afford to be excluded globally. Therefore, they are participating in international discussions on AI regulation to influence its development (Smuha, 2023; Lee, 2024).

Natural persons

In public international law, individuals are usually not considered subjects that can influence the shaping of regimes, as this field organizes state-to-state relations via agreements and nonbinding instruments (Von Bogdandy et al., 2017). This is why citizens were not added to the conceptual framework established by the authors. Yet, AI technologies affect the lives of citizens (Ala-Pietilä and Smuha, 2021; Strange and Tucker, 2023). The law applicable to natural persons in this context is expected to be affected (Liu and Lin, 2020b) at various levels, especially considering the regimes in place, such as democracies and dictatorships (Wright, 2018). The relevance of this field was seen during the Covid-19 pandemic, as people’s vaccination status was tracked digitally across borders (Zeng, 2020), while AI is currently being used to predict behavioral patterns (Saurae et al., 2022). Simultaneously, AI has further enhanced citizens’ participation in the decision-making process through citizen science (Dauvergne, 2021) and other means, as calls for their involvement are being made (Büthe et al., 2022), given their contribution to human survival as witnessed during Covid-19 (Mavridis et al., 2021).

Hence, citizens’ protection is needed in the various strategies used, as awareness of AI risks is increasing and as their behavior is affected by new technologies, which requires legal clarity. Citizens expect transnational cooperation to guarantee benefits and reduce risks (Ala-Pietilä and Smuha, 2021), as global governance via law is affecting their lives and communities (Benvenisti, 2018), and as they are progressively assuming the role of responsible global actors (How, 2021) via their growing engagement (Sharma et al., 2020) at the different levels (Medaglia et al., 2021).

This is not to say that citizens’ participation in global law-making is an easy task, as the complexity of the issues discussed, including AI, may in many instances be beyond the capacity of many people to assess (Benvenisti, 2018). Indeed, this technology’s value and relevance within different sectors remain poorly understood (Wang et al., 2021), since many lack proper knowledge of how it functions (Robinson, 2020). Still, gaining the population’s approval lends great legitimacy to the adoption and implementation of international rules in this regard (Gesk and Leyer, 2022), as trust in such technology is lacking given the existing risks (Konig, 2023a). To tackle this issue, regulatory intervention is conceived as the way forward to calm concerns (Konig et al., 2023b) and ensure the population’s overall satisfaction (Chatterjee et al., 2022), considering their perception of the rules in place for governing AI (Starke and Lunich, 2020) and their overall expectations (Wirtz et al., 2019) that these rules be responsive to their needs (Valle-Cruz et al., 2020), based on different benchmarks such as ethics (Kleizen et al., 2023).

This raises the question of whether it is time for citizens to play an active role in lawmaking globally. This issue has been the subject of debate for a while now, concerning not only AI regulation but also other fields being tackled transnationally (Sohn, 1983; Hollis, 2002). One can argue that this role is being played indirectly via civil society organizations that are increasingly shaping the international discourse, since citizens’ actions tend to be unorganized (Buhmann and Fieseler, 2021). One may also argue that states assume a similar responsibility when participating in international lawmaking due to their responsibility to protect their own citizens (Feijoo et al., 2020). It is worth mentioning that a similar situation is encountered domestically, where a clash occurs since many states de facto take decisions in the name of collective national interests. The overall message is the need to earn citizens’ trust via responsible and trustworthy governance frameworks (Isom, 2022) that serve their interests (Yigitcanlar, 2020). Otherwise, such trust can be reduced and even lost for a variety of reasons, such as lack of fairness, absence of transparency (Zuiderwijk et al., 2021), and lack of freedom (Al Shebli et al., 2021). This is very important when one considers the negative consequences of AI witnessed so far (Wirtz et al., 2020).

Civil society organizations

Social institutions outside of the state that are self-governed, voluntary, and not-for-profit have been pointing out shortcomings and failures of policies at the national and international levels. These include those related to culture and recreation; education and research; health; social services; environment; development and housing; law, advocacy, and politics; religion, etc. (Lynn et al., 2022). Activists and social movements are accepted as part of the international community affecting lawmaking (Chinen, 2023), given their importance to the advancement of human well-being (Mohammed et al., 2022). Various terms have been coined to refer to them, such as international civil society, transnational social movements, global social-change organizations, world civic politics, and others (O’Brien, 2005). Such organizations have expanded vastly in recent decades (Jacobs, 2018). Over the years, they have expressed concerns about AI and conducted multiple studies in relation to this field (Zhang et al., 2021), where the law is needed to address any potential shortcomings (Fay, 2019). The debate concerning this matter is polarized due to the pros and cons of digitalization (Sundberg, 2023). Still, the involvement of this specific actor is crucial given its unique position (Jones, 2022), which is why many are addressing the relevance of such participation (Furendal, 2023).

The main aim of civil society organizations is the protection of human rights from breaches that may occur because of the use of AI (Ozdan, 2023), where the implications of the use of such technologies are being examined (Maxwell and Tomlinson, 2020). The end goal is to avoid the potential negative consequences for populations and communities through transnational efforts (WHO, 2021). They are seen as a means to critically assess technological progress and as important public stakeholders with whom to collaborate. Their role lies in agenda setting, consultation, and discussions affecting the adoption of transnational laws and their implementation by state authorities domestically (Buiten et al., 2023; Hoffman-Riem 2020). For instance, these entities take part in the AI for Good summit platform (Jelinek et al., 2021; Butcher and Beridze, 2019). They also played a role regionally in the negotiation and formulation of AI policies, most recently the EU AI Act (Tallberg et al., 2023). Notably, there are initiatives to bring together states, companies, academia, and NGOs, such as the Athens Roundtable by the Future Society, despite the high costs associated with participation (The Future Society, n.d.). It remains true that even on such platforms, only a few states, companies, NGOs, and academics can participate, although broader cooperation is needed.

Globally, civil society organizations call strongly for the regulation of this field (Martin and Freeland, 2021), while highlighting the dilemmas to decision-makers and stakeholders with the objective of effectively governing this sector (Chinen, 2023), and opposing its use in situations that might entail risks (Lewis, 2019). For instance, they called for the adoption of a binding instrument prohibiting the use of AI in lethal autonomous weapons systems (Schmitt, 2022). Additionally, they developed ethical principles and guidelines paving the road ahead for legislators domestically and internationally (Koniakou, 2023). Examples include non-legally binding instruments called “Internet Bills of Rights” that set out different principles and standards (Celeste et al., 2023). These instruments may try to develop a specific set of values that are ethically acceptable (Von Ingersleben-Seip 2023). They have been criticized by some entities as a means to escape or delay regulation in the name of ethics (van Dijk et al., 2021). Some scholars established a framework based on which AI can be used to empower civil society (Savaget et al., 2019). AI may also lead to a shift in power from states towards these non-state actors (Maas, 2019a), although states will always remain the more powerful. In fact, it has been noticed that it is not always possible for this actor to influence the development of rules in this regard. On the contrary, this technology will disrupt the traditional role of civil society (Williams, 2018), which nonetheless remains a partner in the development of the global AI legal architecture (Walz and Firth-Butterfield, 2019), to ensure the effectiveness of the rules to be adopted (Korinek and Stiglitz, 2021). In some instances, civil society was not up to date concerning innovations (Kostantinova et al., 2020). Meanwhile, the high costs of participation may affect such entities’ presence in the debate (Cihon et al., 2020a). Teaming up with tech companies is taking place to address some of the abovementioned issues (Latonero, 2018).

Corporate entities and business enterprise

Multinational corporations conduct business everywhere, rendering borders obsolete (Kelly and Moreno-Ocampo, 2016). They are increasingly influencing the creation, implementation, and enforcement of international law (Butler, 2020). Simultaneously, they are subjects of this regulatory framework due to their transboundary impact, which raises questions such as those related to liability and compensation (Kinley and Tadaki, 2004) for damages, including human rights violations (Kinley and Chambers, 2006). The nature of their obligations is widely debated, and suggestions are made to ensure they are held accountable. Their conduct is addressed indirectly by asking states to adopt rules applicable to the corporate sector, with few exceptions, such as war crimes and forced labor, where international norms are in place (Vazquez, 2005).

Companies are using AI in various sectors, such as finance and insurance, retail, healthcare, and information services, where huge investments are made (Jablonowska et al., 2018). Companies play a vital role in the development of AI nationally and internationally, as their products cross national borders where rules vary (Latonero, 2018). They assume a great responsibility when establishing and using such technology (Kriebitz and Lutge, 2020). This includes traditional technology companies and emerging ones (Lin, 2019). The costs of moving a company’s AI resources globally, be it talent, capital, or infrastructure, are high, especially given the legal obstacles that arise when entering new jurisdictions (Smuha, 2021). The latter raise concerns regarding the possible oversight of these stakeholders given their great technical infrastructures and know-how (Stahl et al., 2021), and the overall influence of this technology on society if left unchecked (Leslie et al., 2021). Therefore, regulating AI activities seems the way forward, with companies subject to these rules (Bennett, 2023).

Paving the road in this sector means that corporations pay great attention to regulations (Cath, 2018a), given their interest in profits and in protecting their investments (Hars, 2022). This is why it is said that corporations are not scared of regulations as such but of their type and content (Chou, 2023). A corporation’s products need to comply with laws applicable to AI, such as data protection and intellectual property. They also need to adapt to new laws associated with this field. In both cases, the goal is to reduce or avoid risks emerging from non-compliance and to strengthen trust and reputation among stakeholders. Companies’ involvement is also needed to ensure that innovation and competitiveness are not hampered by laws, which should rather facilitate them. This can happen via the creation of standards and guidelines on AI use (Holitsche 2023). Already, for instance, some companies have raised concerns that the EU’s AI law proposal could affect competitiveness and result in investments fleeing the European market (Wodecki, 2023). Finally, corporations have a leadership role to play by applying ethical and responsible AI practices and endorsing global instruments that provide frameworks for addressing this technology’s activities (Holitsche 2023). Companies like Google and Amazon have made commitments to ensure that AI products and systems are safe and trustworthy (Diamond, 2023).

The private sector is calling for the adoption of soft law mechanisms to protect its interests. Many businesses created principles and standards. In contrast, hard laws consider the interests of various stakeholders, including governments and the public, in an unpredictable process (Gutierrez et al., 2020). For instance, IBM and Microsoft made a commitment to the Vatican to safeguard humankind in the development of AI in the Rome Call for AI Ethics (Hickman and Petrin, 2021). Various mechanisms have been proposed to tackle transnational companies’ use of AI. These include conditional support from international organizations such as the World Bank, and the adoption of a global corporation treaty stipulating the responsibilities of AI use, creating obligations for companies having a state’s nationality, and providing for periodic monitoring (Schwarz, 2019). More concretely, there are calls for governments to establish global regulatory frameworks where private sector entities compete to achieve specific legal objectives at both local and international levels (Clark and Hadfield, 2019a). These markets are designed to address uncertainty more efficiently than direct regulations (Bova et al., 2023), as legislators often lack the necessary technical knowledge, whereas market forces and industry are better equipped to drive the adoption of appropriate regulations (Hadfield and Clark, 2023). It is worth mentioning that some urge caution regarding companies that already hold a monopoly in the digital technology business and have great infrastructural importance (Peng et al., 2021). Meanwhile, organizations like the UN are calling on corporations to live up to their responsibilities concerning this field (Turk, 2023). The challenge is to ensure that companies still move fast despite the regulations being drafted (Thomson Reuters 2023).

International organizations

Transnational entities such as the UN and the World Trade Organization produce international laws in various forms, including binding, customary, and soft rules (Dung and Sartor, 2011). Initially, especially after World War II, these were heavily trusted by the different stakeholders as the gatekeepers of peace, security, and prosperity. Only in later years did criticisms start emerging concerning their impact (Benvenisti, 2018). Still, their role has become increasingly important since then and after the end of the Cold War (Alvarez, 2006).

Various global organizations have been creating AI frameworks, especially in the last decade (Johnson and Bowman, 2021), or working on rules associated with this field (Roumate, 2021). These include the Organization for Economic Cooperation and Development; the International Organization for Standardization (Martin and Freeland, 2021); the Institute of Electrical and Electronics Engineers; the Centre for the Study of Existential Risk, the Future of Humanity Institute, the Future of Life Institute, the Future Society, and the Leverhulme Centre for the Future of Intelligence; the AI Now Institute; the Machine Intelligence Research Institute; and the Partnership on AI. Many UN organizations are also dealing with this topic, such as the High-Level Panel on Digital Cooperation; the UN Interregional Crime and Justice Research Institute; the International Telecommunication Union; the UN University Center for Policy Research; the International Labour Organization; and the UN Office for Disarmament Affairs (Butcher and Beridze, 2019). Only major international organizations with a distinct legal personality and a founding international treaty (such as UNESCO, the EU, and the Council of Europe) will serve as platforms for international cooperation.

All these deal with one or several aspects of AI governance and regulation, asking questions such as what the best approach to regulating this sector is. Issues to address include, inter alia, risks, challenges, deployment, harmonization, and national implementation (Butcher and Beridze, 2019; Finocchiaro, 2023). Their initiatives and efforts often make an impact on the law-making process globally (Velasco, 2022), especially with the adoption of nonbinding instruments and declarations containing, for instance, ethical principles (Taddeo et al., 2021). The goal is to create consensus among the nations that are parties to these organizations on regulatory AI elements without, for the time being, imposing binding obligations upon states or being able to intervene in domestic decisions in relation to this topic (Smuha, 2021). Doing so is very challenging due to the different views held by the members concerning regulations (Deeks, 2020b). Yet, cooperation is the way forward considering the global reach of the digital world (Algorithms, Artificial Intelligence and the Law, 2019), where transnational entities are seen as a means to strengthen collaboration (Kerry et al., 2021). International organizations create a space for states and other stakeholders to adopt AI norms and attempt to facilitate agreement among the parties (Chinen, 2023).

Given that the work of the various entities is not connected, suggestions are made for the creation of instruments for the coordination of activities of international organizations for regulating AI (Talimonchik, 2021a). Some proposed to make AI regulation and governance centralized within one organization to avoid having a fragmented landscape (Cihon et al., 2020a). This can take the form of a regulatory agency for AI (Erdélyi and Goldsmith, 2022), an International Artificial Intelligence Organization; an International Academy for AI Law and Regulation (Turner, 2019); or an international coordinating mechanism under the Group of 20 (Jelinek et al., 2021). Meanwhile, others argue for a decentralized system where multiple fragmented institutions play a role stating that forum shopping is beneficial as these entities self-organize (Cihon et al., 2020b).

Organizations such as the UN have the capacity to establish framework conventions, including on AI, laying down general principles based on which more specific ones are adopted (Johnson and Bowman, 2021). Most recently, the UN adopted a resolution on seizing the opportunities of safe, secure, and trustworthy artificial intelligence systems for sustainable development. The resolution advocates using AI to promote the 2030 agenda, supports developing countries in its adoption, and emphasizes the importance of domestic implementation through regulations, among many other points (United Nations General Assembly 2024). The UN also adopted an interim report entitled “Governing AI for Humanity”, citing five guiding principles and seven institutional functions for the governance of AI (UN 2023a). This is occurring within the general framework of initiatives such as the 2024 Summit of the Future, the UN Common Agenda, and the Global Digital Compact. All these address global challenges, including AI governance (UN 2024; UN Common Agenda n.d.a.; UN n.d.b). Limitations concerning the work of such entities have been noticed, mainly the lack of representation from lower-income countries, the absence of specificity in the AI instruments and initiatives established, and the lack of incentives for state compliance with norms (Johnson and Bowman, 2021). This affects the legitimacy of their decisions and initiatives, in this case regarding AI (Chinen, 2023). Yet, their leadership is very much needed (Fournier-Tombs, 2021), as highlighted through their extensive focus on this topic (Giannini, 2022). It is worth mentioning that these international organizations have already enlisted several NGOs and academic institutions while compiling the regulation drafts (recommendations, framework conventions, and acts) (United Nations General Assembly 2024; UN Common Agenda n.d.a.; UN n.d.b).

AI regulatory authority

This section will examine the two main functions of a global AI organization: adopting international law and monitoring its enforcement domestically. It is worth mentioning that other functions will certainly be included and be subject to rigorous analysis.

Establish law

AI entities are deemed to be operating within legal bounds if they are subject to human control. This should not be left to the discretion of states and corporations, as they may prioritize their interests over other considerations (Burri, 2018). Moreover, confusion and uncertainty will reign if solutions are applied on a case-by-case basis (Andrés, 2021). Given that AI is a nascent field, the type of regulation to be adopted, be it hard or soft law, is the subject of a huge debate. Examples of nonbinding suggestions include the AI focus standards (Villasenor, 2020); principles for AI research and applications (Marchant, 2019); voluntary safety commitments for AI (Han et al., 2022); the EU Ethics Guidelines for Trustworthy AI; the Organization for Economic Co-Operation and Development Principles for responsible stewardship of trustworthy AI (OECD, 2019); and the UN Educational, Scientific and Cultural Organization Recommendation on the Ethics of Artificial Intelligence (UNESCO 2021). Hard law proposals cover the calls for the UN to establish a regulatory framework for AI (Fournier-Tombs, 2021) and the use of global commons as a structure (Tzimas, 2018). Meanwhile, some call for considering the EU AI Act as global AI law (Musch et al., 2023), while similar proposals are expected with regard to the Council of Europe Framework Convention on Artificial Intelligence (Council of Europe, 2024). Many stakeholders are making suggestions, such as the G20, standards organizations, NGOs, and research institutes (Schmitt, 2022).

A push towards a soft approach is noticeable for various reasons. These are the need for flexibility due to the uncertainties surrounding this field; states’ reluctance to relinquish their sovereignty and control of information (Erdélyi and Goldsmith, 2018); the fast development of this technology, which cannot be matched through rules (Marchant, 2021); and geopolitical and economic competition as well as national security (Whyman, 2023). Yet, as seen earlier, two binding instruments have already been adopted (EU AI Act, 2024; Council of Europe, 2024). Therefore, a global AI authority should start by using nonbinding instruments such as recommendations, guidelines, and standards (Erdélyi and Goldsmith, 2018), while considering the provisions established within AI treaties. This responds to governments’ need to balance innovation with protection (Smuha, 2021), as AI covers numerous technologies across a variety of sectors (Schiff, 2020). Elements to be considered by the future regulatory authority include self-determination, transparency, discrimination, and the rule of law (Wishmeyer and Rademacher, 2020). Other factors that play an important role in the lawmaking process are the political control over the entity, its independence, the quality of regulations, and accountability (Maggetti et al., 2022). For the time being, the global community is far from creating such an authority, as different regulatory initiatives and actors are in competition (Cheng and Zeng, 2023), while the appropriate type of regulatory authority and its powers are constantly debated (Anderljung et al., 2023). Lawyers have not yet fully grasped all the aspects related to it, and the probability of reaching a global consensus on this issue is unclear. This is why a high degree of pragmatism is needed given the complicated task ahead, where suggestions on the way to move forward are seriously considered (Google n.d.).

Monitor enforcement

International organizations do not have the capacity to enforce a treaty domestically, as only nations can do so. States, based on their consent, commit to implementing the obligations stated within agreements by signing and ratifying them. In many cases, states either fail or decide not to comply with their obligations for various reasons. Global entities establish mechanisms to monitor whether a government is indeed implementing its commitments (Follesdal, 2022; Krisch, 2014; Besson, 2016; Lister, 2011; Bodansky and Watson, 1992). Examples include the Paris Agreement Compliance Committee (UN n.d.) and the Universal Periodic Review of the Human Rights Council (UN HRC n.d.).

States have different capacities and willingness to implement transnational obligations, which will be applied differently by each government (Djeffal et al., 2022), particularly in the context of the North/South divide, where developing countries need international support to comply with agreements (Cihon et al., 2020a). The regulatory authority would provide guidance, opinions, and expertise (Stahl et al., 2022). A similar suggestion is also being made domestically with national AI authorities (Salgado-Criado and Fernandez-Aller, 2021). These same authorities would have the task of implementing global agreements (Anzini, 2021). They have faced difficulties in recent years in implementing digital regulations adopted by supranational institutions (Stuurman and Lachaud, 2022).

Monitoring is needed due to the high degree of complexity and uncertainty surrounding AI activities affecting the implementation of sound domestic rules due to the nature of this technology (Taeihagh, 2021). The latter requires the existence of appropriate conditions (Hadzovic et al., 2023), while considering that rules have limitations especially when applied to novel challenging sectors (Gervais, 2023). Monitoring of AI activities is highly challenging given the complex nature of the operations that happen (Truby et al., 2020), resulting in many problems for the entity conducting oversight (Busuioc, 2022), and as enforcement of international rules domestically is generally not an easy task (Brown and Tarca, 2005).

Time and effort are necessary components in this process to ensure effective implementation (Engler, 2023). In the case of reckless application, social and political instability may occur, affecting all kinds of values (De Almeida et al., 2021), especially given the existence of numerous high-risk AI systems (Hadzovic et al., 2023). Some even expect the monitoring to be carried out by a separate structure within the global regulatory authority (Wallach and Marchant, 2018), on a continuous basis in the various fields (Pesapane et al., 2021). Questions are raised concerning the type of monitoring and its functions (Pagallo et al., 2019), how to carefully implement international principles domestically (Truby et al., 2020), and how to ensure that enforcement is not only effective but also legitimate and fair (Hacker, 2018).

It is worth mentioning that the monitoring of developments taking place globally should also occur to avoid conflicts and ensure coordination (Cihon et al., 2020b). Meanwhile, some suggest that monitoring takes place via a collaborative action among several international bodies (De Almeida et al., 2021).

AI and international law: the road ahead

Despite the importance of international law in the regulation of AI (Carrillo, 2020), the field is nascent, resulting in numerous legal questions, among others. These include, for instance, the role and responsibilities of the stakeholders examined above (states, natural persons, civil society organizations, companies, and international organizations); the classification and status of this technology; the means to control it (Castel and Castel, 2016); the possible need to rethink international law (Roumate, 2021) given the paradigm shift happening (Martino and Merenda, 2021); the potentially incorrect scope of existing laws (Lane, 2022); legal obsolescence (Lane, 2022); as well as the traditional and novel challenges facing the regulation of this field (Čerka et al., 2015; Abashidze et al., 2021). One challenge that is unique to AI is its progressive autonomy, resulting in actual AI personhood and requiring the attribution of a similar status by law (Tzimas, 2018). AI is to be deployed domestically, which requires the implementation and enforcement of transnational rules (Deeks, 2020b) that have been agreed upon by states consenting to new obligations created via treaties and other binding mechanisms (Dulka, 2023). Another challenge arises from the belief that law alone is insufficient to fully address this issue due to the complex dynamics between social and technological frameworks (Powers et al., 2023).

Such issues have been discussed briefly since the 1970s but in more detail in recent years and decades (Bench-Capon et al 2012; Rissland et al., 2003), resulting in very heated debates concerning transnational regulations (Diaz-Rodriguez et al 2023) with the end goal of achieving global security and stability in the long term (Gill, 2019; Garcia, 2018). Indeed, international relations may be affected by the absence of AI regulatory frameworks globally (Khalaileh, 2023), which is why a push towards its regulation at that level is happening (Li, 2023). So far, concerned stakeholders have not reached an understanding of how international law would regulate AI developments (Anastassov, 2021) or of the aspects and matters to be regulated (Sari and Celik, 2021). International lawyers are extremely relevant in guiding technological developments by creating a new regulatory framework via binding and nonbinding instruments, addressing transnational disputes, and strengthening international law overall (Deeks, 2020a). For the time being, the international community has not provided an appropriate regulatory response to the fast AI developments. Yet the potential is there in terms of establishing a framework and a regulatory authority for overseeing, lawmaking, and advancing AI technologies (Nash, 2019), building on the two successful attempts of the EU and the Council of Europe, while using specific or general approaches depending on the consensus reached at that point (Krausová, 2017). This will ultimately lead to changes in the structure of international law and organizations (Burchardt, 2023a). The nature of the changes remains unclear: whether amending existing regulations, adopting new ones, or even potentially the decline of the global legal order (Hars, 2022). This is because technological developments often test the limits of law, which struggles to make the necessary adjustments (Gellers and Gunkel, 2023), even in terms of legal reasoning (Paliwala, 2016), given the great complexity of AI technologies (Villaronga et al., 2018). Still, international law is expected to provide much-needed clarifications concerning expectations and the agreed behavior over this matter (Garcia, 2016), considering the shifts and developments taking place in this regime over the decades (Zekos, 2021), and existing obligations (Shang and Du, 2021).

The universal and general scope of AI, together with its technical and practical nature, makes international law the main framework for regulating it, given the inability to address such elements on a territorial basis. The same argument is made from an economic perspective: for instance, registering an AI patent solely at the national level is impractical in an interconnected world. Finally, doing so would provide protection and legal certainty to all stakeholders via general and comprehensive global rules (Carrillo, 2020). These arguments gain further relevance as nations adopt different paths for AI regulation, focusing on specific priorities such as tackling risks and keeping societal control (Hutson, 2023). Such laws require international harmonization as technological differences concerning AI persist among nations (Galceran-Vercher, 2023) and as the number of nations adopting national AI laws increases (Rai and Murali, 2020). This harmonization would rest on cooperation to prevent jurisdictional conflicts (Whyman, 2023; Black and Murry, 2019) and to achieve the best possible result in terms of the actual rules and their domestic implementation (Guerreiro et al., 2023), which would also reduce the influence of the race currently taking place among nations competing for AI leadership (Villarino et al., 2023b). Such a suggestion is already supported by some nations seeking to establish global norms for specific aspects of AI technologies (Goitom, 2019), where international lawyers have the task of guiding the legal process thus initiated (Van Benthem, 2021).

International law has dealt, and continues to deal, with other challenges such as poverty and global warming (Yusuf, 2020). Nonetheless, one has to keep in mind that the complexities surrounding AI would affect the implementation, compliance and enforcement of global rules related to it (Yudi and Berlian, 2023), considering the current struggle to agree on basic concepts to apply in the AI context such as trust and risk (Villarino, 2023a), the actual implications of AI activities for conventional legal areas (George and Walsh, 2022), and old concepts such as digital self-determination (Hervé, 2021). One also has to consider that not all important questions in relation to this topic have received the same attention from the international community and the relevant stakeholders (Norodom et al., 2023). At the same time, state behavior will have the greatest impact on future legal developments in this sphere (Tallberg et al., 2023). Meanwhile, new theoretical ideas are already emerging and will continue to appear in relation to this topic (Talimonchik, 2021b), across the various branches of international law (Vihul, 2020), as technological developments increasingly influence the legal sphere (DoCarmo et al., 2021). Moreover, one may also argue that international AI law is starting to emerge as a field, so that nations have to clarify their positions on this matter globally. On this basis, many nonbinding instruments such as declarations and recommendations will be issued before the adoption of a treaty (Smuha, 2023) and the creation of a regulatory authority, despite the progress witnessed with the EU and the Council of Europe Framework Convention.
Some see it as the responsibility of states to regulate this progress (Schwamborn, 2023) through various means, including non-traditional international law-making processes and fora (Tallberg et al., 2023), while moving progressively towards hard rules for the field (McIntosh, 2020) and taking into account all the relevant concerns of the different stakeholders (WHO, 2023).

Conclusion

This article attempted to address a very complex issue: the governance and regulation of AI. The authors laid down the theoretical framework, highlighting that the governance of this field is very complicated and is likely to remain so in the near and long term. One must also stress the efforts made by various scholars and experts to propose ways and means of regulating this sector, as these accumulated efforts would likely lead eventually to a global regulatory framework for AI. The question is one of timing rather than of whether this will take place, given the importance of this technology and the huge investments made in its development at the national level. This is why governments are pushing towards AI regulation domestically, especially given the need to balance innovation and societal protection via laws. Meanwhile, leaders from the UN, academia and industry are setting the stage globally for what is coming: an extremely difficult conversation on the governance of AI leading to the establishment of binding law and a regulatory authority. This debate is in its infancy and is expected to grow further over time, as the regulation of any emerging technology goes through several processes.

The study is limited by its focus on global developments related to AI law. It did not consider the interplay between international and national laws, although some domestic rules are mentioned. The field is slowly but progressively developing, hence only existing instruments could be used to assess the future of international law in this area, which impacts the ability to make long-term assessments and conclusions. The theoretical framework used may change as more actors play a role internationally, either positively or negatively. The focus of the study was more on Western instruments, creating a potentially Western-centric approach to the topic due to the lack of international documents from the Global South, excluding the Chinese example. The emphasis is more on the law, while other fields such as politics, economics, and so on, also play a role in the creation of global AI frameworks.

Based on the analysis, several recommendations can be made. Experts from law, engineering, computer science and other fields must cooperate to find common ground. It is imperative that any proposed legal framework and global authority be examined by experts from all the concerned fields to ensure that all technical matters are handled appropriately. Further communication is required among all stakeholders involved, given their roles and influence in the global debate on this topic. Additionally, existing instruments such as the EU AI Act and the Council of Europe AI Convention must be further assessed and used as models for adopting international treaties. Finally, further studies are needed to assess the feasibility and potential impact of a future AI regulatory authority.

Data availability

Data sharing does not apply to this article, as no new data were created or analyzed in this study.

References

Abashidze AKH, Inshakova AO, Dementev AA (2021) New challenges of international law in the digital age. In: Popkova EG, Sergi BS (eds) Modern global economic system: evolutional development vs. revolutionary leap. Springer, Dordrecht, pp 1125–1132

Abulibdeh A, Zaidan E, Abulibdeh R (2024) Navigating the confluence of artificial intelligence and education for sustainable development in the era of industry 4.0: Challenges, opportunities, and ethical dimensions. Journal of Cleaner Production, 140527

AI Advisory Body (2023) Interim report: Governing AI for humanity. https://www.un.org/techenvoy/sites/www.un.org.techenvoy/files/ai_advisory_body_interim_report.pdf. Accessed 20 November 2024

Ala-Pietilä P, Smuha NA (2021) A framework for global cooperation on artificial intelligence and its governance. In: Reflections on artificial intelligence for humanity. Springer, Dordrecht

Alalawi Z et al. (2024) Trust AI regulation? Discerning users are vital to build trust and effective AI regulation. Artificial Intelligence. 1–18. https://doi.org/10.48550/arXiv.2403.09510

Algorithms, Artificial Intelligence and the Law (2019) The Sir Henry Brooke Lecture for BAILII Freshfields Bruckhaus Deringer, London; Lord Sales, Justice of the UK Supreme Court 12 November 2019

Alhosani K, Alhashmi SM (2024) Opportunities, challenges, and benefits of AI innovation in government services: A review. Discov Artif Intell 4(8):1–19

Anthony A, Sharma L, Noor E (2024) Advancing a more global agenda for trustworthy artificial intelligence. Carnegie Endowment for International Peace. https://carnegieendowment.org/research/2024/04/advancing-a-more-global-agenda-for-trustworthy-artificial-intelligence?lang=en. Accessed 20 November 2024

Alschner W (2021) The computational analysis of international law. In: Deplano R, Tsagourias N (eds) Research methods in international law: a handbook. Edward Elgar Publishing, Cheltenham, pp 203–227

Al Shebli K et al. (2021) RTA’s employees perceptions toward the efficiency of artificial intelligence and big data utilization in providing smart services to the residents of Dubai. In: Hassanien AE, et al., (eds) Proceedings of the international conference on artificial intelligence and computer vision. Springer, Dordrecht

Anderljung M et al. (2023) Frontier AI regulation: managing emerging risks to public safety. Cornell University

Andrés MB (2021) Towards legal regulation of artificial intelligence. Rev IUS 15:35–53. https://doi.org/10.35487/rius.v15i48.2021.661

Alvarez JE (2006) International organizations as law-makers. Oxford University Press, Oxford

Alzou’bi S, Alshibly H, Al-Ma’aitah (2014) Artificial intelligence in law enforcement, a review. IJAIT 4:1–9. https://doi.org/10.5121/ijait.2014.4401

Akkus B (2023) An assessment of the acceptance of meaningful human control as a norm of international law in armed conflicts using artificial intelligence. J Ank Bar Assoc 81:49–102. https://doi.org/10.30915/abd.1143722

Anastassov A (2021) Artificial intelligence and its possible use in international nuclear security law. Papers of BAS. Hum Soc Sci 8:92–103

Anzini M (2021) The artificial intelligence act proposal and its implications for member states. European Institute of Public Administration. https://www.eipa.eu/publications/briefing/the-artificial-intelligence-act-proposal-and-its-implications-for-member-states/. Accessed 20 November 2024

Armstrong S, Bostrom N, Shulman C (2016) Racing to the precipice: a model of artificial intelligence development. AI Soc 31:201–206. https://doi.org/10.1007/s00146-015-0590-y

Arvidsson M, Noll G (2023) Artificial intelligence, decision making and international law. Nord J Int Law 92:1–8

Baig A (2024) An overview of emerging global AI regulations. https://securiti.ai/ai-regulations-around-the-world/ Accessed 20 November 2024

Bartneck C, Yogeeswaran K, Sibley CG (2023) Personality and demographic correlates of support for regulating artificial intelligence. AI and Ethics. 1–8. https://doi.org/10.1007/s43681-023-00279-4

Bench-Capon T et al. (2012) A history of AI and law in 50 papers: 25 years of the international conference on AI and law. Artif Intell Law 20:215–319. https://doi.org/10.1007/s10506-012-9131-x

Bennett M (2023) AI regulation: What businesses need to know. TechTarget. https://www.techtarget.com/searchenterpriseai/feature/AI-regulation-What-businesses-need-to-know. Accessed 20 November 2024

Bensousan A (2020) How to legally regulate artificial intelligence? Ann Mines 12:1–3

Benvenisti E (2018) Upholding democracy amid the challenges of new technology: What role for the law of global governance? EJIL 29:9–82. https://doi.org/10.1093/ejil/chy013

Besson S (2016) State consent and disagreement in international law-making. Dissolving the paradox. LJIL 29:289–316. https://doi.org/10.1017/S0922156516000030

Bex F, Prakken H, Van Engers T, Bart V (2017) Introduction to the special issue on Artificial Intelligence for Justice (AI4J). Artif Intell Law 25:1–3. https://doi.org/10.1007/s10506-017-9198-5

Black J, Murry AD (2019) Regulating AI and machine learning: Setting the regulatory agenda. EJLT 10:1–21

Blodgett-Ford SJ (2018) Future privacy: a real right to privacy for artificial intelligence. In: Research handbook on the law of artificial intelligence. Edward Elgar Publishing, Cheltenham, pp 307–352

Bodansky D, Watson JS (1992) State consent and the sources of international obligation. Proceedings of the annual meeting. 86:108–113. https://www.jstor.org/stable/25658621

Boon K (2014) Are control tests fit for the future? The slippage problem in attribution doctrines. MJIL 15:1–46

Borlini L (2023) Economic interventionism and international trade law in the covid era. GLJ 24:1–16. https://doi.org/10.1017/glj.2023.13

Boutin B (2022) State responsibility in relation to military applications of artificial intelligence. LJIL 36:133–150. https://doi.org/10.1017/S0922156522000607

Bova P, Di Stefano A, Han TH (2023) Both eyes open: Vigilant incentives help regulatory markets improve AI safety. Artif Intell. 1–42. https://doi.org/10.48550/arXiv.2303.03174

Brewster R (2009) Unpacking the state’s reputation. Harv Int’l L J 50:231–269

Broadbent M (2021) What’s ahead for a cooperative regulatory agenda on artificial intelligence? Center for Strategic & International Studies. https://www.csis.org/analysis/whats-ahead-cooperative-regulatory-agenda-artificial-intelligence#:~:text=The%20Commission%20hopes%20that%20the,in%20which%20AI%20is%20adopted. Accessed 20 November 2024