Abstract

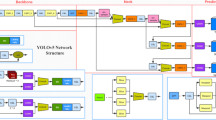

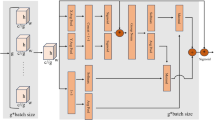

End-to-end self-driving is a method that directly maps raw visual images to vehicle control signals using a deep convolutional neural network (CNN). Although steering-angle prediction has achieved good results as a single task, current approaches do not effectively predict the steering angle and the speed simultaneously. In this paper, several end-to-end multi-task deep learning networks that combine a deep CNN with a long short-term memory recurrent neural network (CNN-LSTM) are designed and compared. These networks capture both the visual spatial information and the dynamic temporal information in driving scenarios, which improves steering-angle and speed predictions. Furthermore, two auxiliary tasks based on semantic segmentation and object detection are proposed to improve the network's understanding of driving scenarios. Experiments are conducted on the public Udacity dataset and on a newly collected Guangzhou Automotive Cooperate dataset. The results show that the proposed network architecture can predict steering angles and vehicle speed accurately. In addition, the impact of the multiple auxiliary tasks on network performance is analyzed with a visualization method that shows the network's saliency maps. Finally, the proposed network architecture has been validated on the autonomous driving simulation platform Grand Theft Auto V (GTAV) and on an experimental road, with an average takeover rate of two takeovers per 10 km.
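The abstract describes a network that learns two control outputs (steering angle and speed) together with two auxiliary tasks, but it does not state how the task losses are combined. A common formulation is a weighted sum of per-task losses, sketched below under that assumption. The names `TaskWeights`, `multitask_loss`, and `takeovers_per_10km`, the weight values, and the choice of mean squared error for the control outputs are all hypothetical illustrations, not the authors' method. The segmentation and detection losses are assumed to arrive as scalars from auxiliary heads on a shared CNN-LSTM backbone, which is not shown. The last helper simply normalizes a takeover count to the "per 10 km" rate quoted above.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class TaskWeights:
    """Hypothetical per-task loss weights (assumed, not taken from the paper)."""
    steering: float = 1.0
    speed: float = 1.0
    segmentation: float = 0.5  # auxiliary task
    detection: float = 0.5     # auxiliary task


def mse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean squared error between two equal-length, non-empty sequences."""
    if len(pred) != len(target) or len(pred) == 0:
        raise ValueError("pred and target must be non-empty and of equal length")
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def multitask_loss(
    steer_pred: Sequence[float],
    steer_true: Sequence[float],
    speed_pred: Sequence[float],
    speed_true: Sequence[float],
    seg_loss: float,
    det_loss: float,
    weights: Optional[TaskWeights] = None,
) -> float:
    """Weighted sum of the two control losses and two auxiliary losses.

    seg_loss and det_loss are assumed to be scalars already computed by
    hypothetical segmentation and detection heads on a shared backbone.
    """
    w = weights if weights is not None else TaskWeights()
    return (
        w.steering * mse(steer_pred, steer_true)
        + w.speed * mse(speed_pred, speed_true)
        + w.segmentation * seg_loss
        + w.detection * det_loss
    )


def takeovers_per_10km(takeovers: int, distance_km: float) -> float:
    """Normalize a takeover count to a rate per 10 km of driving."""
    if distance_km <= 0:
        raise ValueError("distance_km must be positive")
    return takeovers * 10.0 / distance_km


if __name__ == "__main__":
    loss = multitask_loss([0.1, -0.2], [0.0, 0.0], [10.0, 12.0], [11.0, 12.0],
                          seg_loss=0.4, det_loss=0.2)
    print(f"total loss: {loss:.3f}")   # total loss: 0.825
    print(takeovers_per_10km(6, 30.0))  # 2.0
```

Setting the auxiliary weights to zero reduces the sketch to a plain two-output regression loss, which is one simple way to compare training with and without the auxiliary tasks.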

Acknowledgements

This work is funded by the Youth Talent Lifting Project of China Society of Automotive Engineers.

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

About this article

Cite this article

Wang, D., Wen, J., Wang, Y. et al. End-to-End Self-Driving Using Deep Neural Networks with Multi-auxiliary Tasks. Automot. Innov. 2, 127–136 (2019). https://doi.org/10.1007/s42154-019-00057-1

DOI: https://doi.org/10.1007/s42154-019-00057-1