Abstract

We propose a nonparametric estimator of the jump activity index \(\beta \) of a pure-jump semimartingale X driven by a \(\beta \)-stable process when X is observed at high frequency at irregular times. The proposed estimator is based on an empirical characteristic function of rescaled increments of X, whose limit depends in a complicated way on \(\beta \) and on the distribution of the sampling scheme. Utilising an asymptotic expansion, we derive a consistent estimator for \(\beta \) and prove an associated central limit theorem.


1 Introduction

Recent years have seen notable developments in the statistical analysis of time-continuous stochastic processes beyond the somewhat classical case of an Itô semimartingale driven by a Brownian motion. Generalisations of that class of processes are in fact manifold: one can mention, for example, the analysis of integrals with respect to fractional Brownian motion (e.g. Bibinger 2020; Brouste and Fukasawa 2018), the discussion of Lévy-driven moving averages (e.g. Basse-O’Connor et al. 2017, 2018), inference on the solution of stochastic PDEs (e.g. Bibinger and Trabs 2020; Chong 2020; Kaino and Uchida 2021) and the behaviour of integrals with respect to stable pure-jump processes (e.g. Heiny and Podolskij 2021; Todorov 2015). All of the above results are concerned with high-frequency observations of the respective processes, always in the case of regularly spaced observations in time.

On the other hand, it is well understood that the assumption of regular spacing constitutes an ideal setting which simplifies the theoretical statistical analysis but is typically not met in practical applications. For this reason, there has long been considerable interest in understanding the impact of irregular sampling schemes on the proposed statistical methods. For semimartingales driven by Brownian motion one can mention Hayashi et al. (2011) and Mykland and Zhang (2012) among others, with an almost complete treatment in Chapter 14 of Jacod and Protter (2012). The case of Brownian semimartingales with jumps is treated, for example, in Bibinger and Vetter (2015) and Martin and Vetter (2019). All of the aforementioned papers deal with exogenous sampling schemes, i.e. with observation times that are essentially independent of the underlying processes. Research on endogenous observation times, mostly modelled via hitting times, is more limited; see for example Fukasawa and Rosenbaum (2012) in the continuous case or Vetter and Zwingmann (2017) when additional jumps are present.

In this paper, we discuss the case of a near-stable pure-jump semimartingale observed at irregular times, i.e. the underlying process is given by

and the observation times follow a version of the restricted discretisation scheme from Jacod and Protter (2012), essentially providing observation times independent of X. Loosely speaking (this is made precise below), L is driven by a \(\beta \)-stable process, Y comprises the residual jumps, and \(\alpha \) and \(\sigma \) are appropriately chosen adapted processes. Our goal in this work is to provide a consistent estimator for \(\beta \) and to establish an associated central limit theorem. Statistical inference on \(\beta \) has already been conducted in Todorov (2015, 2017) for regular observations, while Jacod and Todorov (2018) provide the theory in a general model where microstructure noise is present and dominates the statistical analysis.

At first glance, our strategy to estimate \(\beta \) resembles the procedure from Todorov (2015), but there are some notable challenges that might occur in other situations as well. First, we compute an empirical characteristic function \(\widetilde{L}^n(p,u)\) which is constructed from local increments of X (and with an auxiliary parameter p), but it is important here to rescale each of these increments relative to the length of the underlying time interval. Secondly, one can show convergence of this empirical characteristic function to a function \(L(p,u,\beta )\) which is not only a function of u, p and the unknown \(\beta \) but also depends specifically on the distribution of the discretisation scheme. Unlike in Todorov (2015), where a consistent estimator for \(\beta \) is obtained via a suitable functional of empirical characteristic functions computed at arbitrary values u and v, we have to use sequences \(u_n\) and \(v_n\) converging to zero plus an asymptotic expansion to obtain a consistent estimator. This procedure also leads to a drop in the rate of convergence in the associated central limit theorem.

The remainder of this work is organised as follows: Sect. 2 deals with the assumptions on X as well as on the discretisation scheme. In Sect. 3 we establish our statistical method and present the main results on the asymptotic properties of both \(\widetilde{L}^n(p,u)\) and \(\hat{\beta }(p,u_n,v_n)\). A thorough simulation study is provided in Sect. 4, where we also discuss issues connected with the estimation of the asymptotic variance in the normal approximation. All proofs are gathered in Sect. 5.

2 Setting

Throughout this work, we adopt the setting from Todorov (2015) and assume that we are given a univariate pure-jump semimartingale as defined in (1.1), i.e. that we observe

where L and Y are pure-jump Itô semimartingales and \(\alpha \) and \(\sigma \) are càdlàg. All processes are defined on some filtered probability space \((\Omega ,\mathcal {F},(\mathcal {F}_t)_{t\ge 0},\mathbb {P})\).

Specific assumptions on these processes will be given below, and we start with conditions on the jump processes L and Y. Below, \(\kappa (x)\) denotes a truncation function, i.e. it is the identity in a neighbourhood around zero, odd, bounded and equals zero for large values of |x|. We also set \(\kappa '(x)=x-\kappa (x)\), and whenever we discuss the characteristic triplet of a Lévy process it is to be understood as with respect to this choice of the truncation function.
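For concreteness, the following sketch gives one admissible truncation function. It is our own illustrative choice (the text above only requires the listed properties), and the names `kappa` and `kappa_prime` are hypothetical. The function is the identity on [-1, 1], decays linearly to zero on 1 < |x| ≤ 2 and vanishes beyond, so it is odd, bounded and continuous.

```python
import math


def kappa(x: float) -> float:
    """Illustrative truncation function: identity on [-1, 1], linear decay to 0
    on 1 < |x| <= 2, zero for |x| > 2. It is odd and bounded by 1."""
    ax = abs(x)
    if ax <= 1.0:
        return x
    if ax <= 2.0:
        return math.copysign(2.0 - ax, x)
    return 0.0


def kappa_prime(x: float) -> float:
    """kappa'(x) = x - kappa(x): zero near the origin, equal to x for |x| > 2."""
    return x - kappa(x)
```

With this choice, \(\kappa '\) only picks up the "large" part of a jump, which is the role it plays in the characteristic triplets above.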

Condition 2.1

We impose the following conditions on the processes L and Y:

(a) L is a Lévy process with characteristic triplet (0, 0, F) where the Lebesgue density of the Lévy measure \(F(\textrm{d}x)\) is given by

$$\begin{aligned} h(x)=\frac{A}{|x|^{1+\beta }}+\tilde{h}(x) \end{aligned}$$

for some \(\beta \in (1,2)\) and some \(A > 0\). The function \(\tilde{h}(x)\) satisfies

$$\begin{aligned} |\tilde{h}(x)|\le \frac{C}{|x|^{1+\beta '}} \end{aligned}$$

for some \(\beta ' < 1\) and all \(|x|\le x_0\), for some \(x_0 > 0\).

(b) Y is a finite variation jump process of the form

$$\begin{aligned} Y_t=\int _0^t \int _{\mathbb {R}} x \mu ^Y(\textrm{d}s,\textrm{d}x) \end{aligned}$$

where \(\mu ^Y(\textrm{d}s,\textrm{d}x)\) denotes the jump measure of Y and its compensator is given by \(\textrm{d}s \otimes \nu _s^Y(\textrm{d}x)\). The process

$$\begin{aligned} \left( \int _{\mathbb {R}}\left( |x|^{\beta '}\wedge 1\right) \nu _t^Y(\textrm{d}x)\right) _{t\ge 0} \end{aligned}$$

is locally bounded for the parameter \(\beta '\) from (a).

Condition 2.1 should be read in such a way that the pure-jump Lévy process L is essentially \(\beta \)-stable, while all other jumps (both in L and in Y) are of much smaller activity and are dominated by the \(\beta \)-stable part at high frequency. Note that L and Y may be dependent, and such dependence is also allowed for the jump parts of \(\alpha \) and \(\sigma \).

Condition 2.2

The processes \(\alpha \) and \(\sigma \) are Itô semimartingales of the form

where

(a) \(|\sigma _t|\) and \(|\sigma _{t-}|\) are strictly positive;

(b) W, \(\widetilde{W}\) and \({\overline{W}}\) are independent Brownian motions, \(\underline{\mu }\) is a Poisson random measure on \(\mathbb {R}_+\times E\) with compensator \(\textrm{d}t\otimes \lambda (\textrm{d}x)\) for some \(\sigma \)-finite measure \(\lambda \) on a Polish space E, and \(\underline{\widetilde{\mu }}\) is the associated compensated measure;

(c) \(\delta ^\alpha (t,x)\) and \(\delta ^\sigma (t,x)\) are predictable with \(|\delta ^\alpha (t,x)|+|\delta ^\sigma (t,x)|\le \gamma _k(x)\) for all \(t\le T_k\), where \(\gamma _k\) is a deterministic function on E with \(\int _E\left( |\gamma _k(x)|^r\wedge 1\right) \lambda (\textrm{d}x)<\infty \) for some \(0\le r < 2 \), and \((T_k)\) is a sequence of stopping times increasing to \(+\infty \);

(d) \(b^\alpha , b^\sigma \) are locally bounded while \(\eta ^\alpha , \eta ^\sigma , \widetilde{\eta }^\alpha , \widetilde{\eta }^\sigma , \overline{\eta }^\alpha \) and \(\overline{\eta }^\sigma \) are càdlàg.

These assumptions on \(\alpha \) and \(\sigma \) are extremely mild and are satisfied by most processes used in the literature.

Our goal in the following is to estimate \(\beta \) based on irregular observations over the finite time interval [0, 1], say, and we will work in a setting where the observation times are typically random. To incorporate this additional randomness into the model, we assume that the probability space carries a larger \(\sigma \)-field \(\mathcal {G}\), and we keep using \(\mathcal {F}\) to denote the \(\sigma \)-field with respect to which X is measurable. The following condition is loosely connected with the restricted discretisation schemes introduced in Chapter 14.1 of Jacod and Protter (2012), but with a slightly different predictability assumption and additional moment conditions. It allows for some dependence between the observation times and X (via \(\lambda \)), while the observation times typically also depend on external randomness through the \(\phi _i^n\).

Condition 2.3

For each \(n\in \mathbb {N}\) we observe the process X at stopping times \(0=\tau ^n_0<\tau _1^n<\tau _2^n<\cdots \) with \(\tau _1^n=\Delta _n\phi _1^n\) and

$$\begin{aligned} \tau _i^n=\tau _{i-1}^n+\Delta _n\phi _i^n\lambda _{\tau _{i-2}^n}, \qquad i\ge 2, \end{aligned}$$

where \(\Delta _n \rightarrow 0\) and

(a) \(\lambda _t\) is a strictly positive Itô semimartingale w.r.t. the filtration \((\mathcal {F}_t)_{t\ge 0}\) and fulfills the same conditions as \(\sigma _t\) stated in Condition 2.2;

(b) \((\phi _i^n)_{i\ge 1}\) is a family of i.i.d. \(\mathcal {G}\)-measurable random variables which is independent of \(\mathcal {F}\);

(c) \(\phi _i^n\sim \phi \) for a strictly positive random variable \(\phi \) with \(\mathbb {E}[\phi ]=1\), and the moments \(\mathbb {E}\left[ \phi ^p\right] \) are finite for all \(p > -2\).

We define \(({\mathcal {F}}_t^n)_{t \ge 0}\) to be the smallest filtration containing \(({\mathcal {F}}_t)_{t \ge 0}\) with respect to which all \(\tau _i^n\) are stopping times. We also let \(N_n(t)\) denote the number of observation times up to time t, i.e.

and of particular importance for us is the case \(t=1\) because \(N_n(1)\) is the (random) number of observations over the trading day [0, 1] from which we construct the relevant statistics later on. Note that due to \(\Delta _n \rightarrow 0\) we are in a high-frequency situation where the time between two observations converges to zero while \(N_n(1)\) diverges to infinity (both in a probabilistic sense).
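The sampling mechanism can be simulated directly from the recursion \(\tau _i^n-\tau _{i-1}^n=\Delta _n\phi _i^n\lambda _{\tau _{i-2}^n}\) for \(i\ge 2\) (the form of the increments also used in Sect. 4.2), together with \(\tau _1^n=\Delta _n\phi _1^n\). In the sketch below, \(\lambda \) is a deterministic function and \(\phi \) a user-supplied sampler; both are hypothetical simplifications of Condition 2.3, as are the function names, and \(N_n(t)\) is taken to count the \(\tau _i^n\le t\) with \(i\ge 1\).

```python
import random
from typing import Callable, List


def sampling_times(delta_n: float,
                   lam: Callable[[float], float],
                   draw_phi: Callable[[], float],
                   horizon: float = 1.0) -> List[float]:
    """Observation times 0 = tau_0 < tau_1 < ... <= horizon with
    tau_1 = delta_n * phi_1 and tau_i - tau_{i-1} = delta_n * phi_i * lam(tau_{i-2})."""
    taus = [0.0, delta_n * draw_phi()]
    while taus[-1] <= horizon:
        prev2 = taus[-2]  # tau_{i-2}: lambda is evaluated two steps back
        taus.append(taus[-1] + delta_n * draw_phi() * lam(prev2))
    return taus[:-1]  # drop the single time beyond the horizon


def n_obs(taus: List[float], t: float) -> int:
    """N_n(t): number of observation times tau_i, i >= 1, with tau_i <= t."""
    return sum(1 for s in taus[1:] if s <= t)


if __name__ == "__main__":
    rng = random.Random(1)
    # Hypothetical phi = 0.5 + Exp(rate 2): mean 1 and bounded away from 0,
    # so all negative moments are finite, as required by Condition 2.3 (c).
    phi = lambda: 0.5 + rng.expovariate(2.0)
    taus = sampling_times(1e-3, lambda t: 1.0 + t, phi)  # hypothetical lambda_t = 1 + t
    print(n_obs(taus, 1.0))
```

With \(\lambda \equiv 1\) and \(\phi \equiv 1\) the scheme is regular, and \(N_n(1)=\Delta _n^{-1}\) when \(\Delta _n^{-1}\) is an integer and the times are computed exactly. Note that \(\phi \sim \text {Exp}(1)\) itself would not be admissible, since \(\mathbb {E}[\phi ^{p}]=\infty \) for \(p\le -1\).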

3 Results

The essential idea from Todorov (2015) is to base the estimation of the unknown activity index on the estimation of the characteristic function of a certain stable distribution. We proceed in a similar way, but with some subtle changes because the underlying sampling scheme is no longer regular. On the one hand, we have to account for the fact that the time between successive observations is not constant; on the other hand, the characteristic function involves not only this particular stable distribution but also the unknown distribution of \(\phi \) from Condition 2.3.

To be more specific, we assume that the probability space is large enough to allow for a representation as in Todorov and Tauchen (2012), namely that the pure-jump Lévy process L can be decomposed as

$$\begin{aligned} L_t=S_t+\acute{S}_t-\grave{S}_t, \end{aligned}\tag{3.1}$$

where all processes on the right-hand side are (possibly dependent) Lévy processes with a characteristic triplet of the form (0, 0, F) for a Lévy measure of the form \(F(\textrm{d}x) = F(x) \textrm{d}x\). For S the Lévy density satisfies \(F(x) = {A}|x|^{-(1+\beta )}\), while the Lévy densities of \(\acute{S}\) and \(\grave{S}\) are \(F(x)=|\tilde{h}(x)|\) and \(F(x) = 2|\tilde{h}(x)|\mathbbm {1}_{\{\tilde{h}(x)<0\}}\), respectively. Then S is strictly \(\beta \)-stable, and its characteristic function satisfies

$$\begin{aligned} \mathbb {E}\left[ e^{iuS_t}\right] =e^{-tA_\beta u^\beta } \end{aligned}$$

for some constant \(A_\beta >0\) and any \(u,t > 0.\)
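For reference, the constant \(A_\beta \) can be made explicit by a standard computation. Since the Lévy density of S is symmetric and \(\kappa \) is odd, the imaginary part of the Lévy-Khintchine exponent vanishes, and the substitution \(y=ux\) gives

$$\begin{aligned} \log \mathbb {E}\left[ e^{iuS_t}\right] &= t\int _{\mathbb {R}}\left( e^{iux}-1-iu\kappa (x)\right) \frac{A}{|x|^{1+\beta }}\,\textrm{d}x = -2tA\int _0^\infty \frac{1-\cos (ux)}{x^{1+\beta }}\,\textrm{d}x\\ &= -2tA\,u^{\beta }\int _0^\infty \frac{1-\cos y}{y^{1+\beta }}\,\textrm{d}y = -t\,u^{\beta }\,\frac{A\pi }{\Gamma (\beta +1)\sin (\pi \beta /2)}, \end{aligned}$$

where we used the classical identity \(\int _0^\infty (1-\cos y)\,y^{-1-\beta }\,\textrm{d}y=\pi /\left( 2\Gamma (\beta +1)\sin (\pi \beta /2)\right) \), valid for \(\beta \in (0,2)\). Hence \(A_\beta =A\pi /\left( \Gamma (\beta +1)\sin (\pi \beta /2)\right) \), which is positive for \(\beta \in (1,2)\).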

As a result of the previous decomposition (3.1) and since

for some \(\beta ' < \beta \) and all \(|x|\le x_0\) holds due to Condition 2.1, it is clear that the jump behaviour of L and thus of X is governed by the \(\beta \)-stable process S over small time intervals. This observation is the key to our estimation procedure: based on the high-frequency observations of X we will first estimate a function \(L(p,u,\beta )\) which, as noted before, is related to the characteristic function of S but involves the unknown distribution of \(\phi \) as well. Here, p and u are additional parameters that can be chosen by the statistician. In the second step we will essentially use a Taylor expansion of L (as a function of u) around zero to arrive at an estimator for \(\beta \).

In the following, we denote by

the i-th increment of the process X, where an additional rescaling is included to account for the different lengths of the intervals in an irregular sampling scheme, and we occasionally also use \(\widetilde{\Delta _i^n S}\) for the rescaled increment of the \(\beta \)-stable S. For any \(p > 0\) and \(u > 0\) we then set

where the auxiliary sequence \(k_n\) satisfies \(k_n \rightarrow \infty \) and \(k_n \Delta _n \rightarrow 0\) and where

is used to estimate the unknown local volatility \(\sigma \).

At first sight, it may seem odd to include \(\Delta _n\) in the definition of \(\widetilde{\Delta _i^nX}\) because this quantity cannot be observed in practice. Our statistical procedure, however, is based on \(\widetilde{L}^n(p,u)\), and it is obvious from its definition that this statistic does not depend on \(\Delta _n\), as the latter appears as a factor both in the numerator and in the denominator. Thus we are safe to work with \(\widetilde{\Delta _i^nX}\), and this definition makes it easier to compare the rescaled increments with the standard increments \(\Delta _i^n X = X_{\tau _i^n}-X_{\tau _{i-1}^{n}}\); the two obviously coincide in the case of a regular sampling scheme. Note also that, even though the asymptotic condition on \(k_n\) is stated in terms of \(\Delta _n\), the choice of \(k_n\) can in practice be based on the size of \(N_n(1)\), which essentially grows like \(\Delta _n^{-1}\).

The main part of the upcoming analysis is devoted to the study of the asymptotic behaviour of \(\widetilde{L}^n(p,u)\). Its definition together with the previous discussion suggests that its limit should involve the characteristic function of S, but it also becomes apparent that the limit cannot be independent of \(\phi \). We will prove in the following that the first-order limit is

with the constant \(C_{p,\beta }\) being defined via

and where \(\phi ^{(1)}\) and \(\phi ^{(2)}\) denote two independent copies with the same distribution as \(\phi \), defined on an appropriate probability space. For simplicity, we still use \(\mathbb {E}[\cdot ]\) to denote the expectation on this generic space. The first main theorem then reads as follows:

Theorem 3.1

Suppose that Conditions 2.1–2.3 are in place and let \(k_n\sim C_1 \Delta _n^{-\varpi }\) for some \(C_1> 0\) and some \(\varpi \in (0,1)\). Then we have

for any fixed \(u > 0\) and any choice of \(0< p < \beta /2\).

While this result is interesting in itself, at first glance it does not help much with the estimation of \(\beta \) because \(L(p,u,\beta )\) depends in a complicated way on the unknown distribution of \(\phi \). If we use the familiar approximation \(\exp (y) = 1+y+ o(y)\) for \(y \rightarrow 0\), however, it seems reasonable to hope that the approximation

holds for small \(u > 0\), and the approximating expression is much easier to handle. Namely, an estimator for \(\beta \) can then be based on an appropriate combination of the two statistics \(\widetilde{L}^n(p,u_n)\) and \(\widetilde{L}^n(p,v_n)\) with \(u_n \rightarrow 0\) and \(v_n = \rho u_n\) for some \(\rho > 0\), \(\rho \ne 1\). Precisely, we set

which obviously is symmetric upon exchanging \(u_n\) and \(v_n\).
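A heuristic reading of this construction: suppose that the approximation (3.3) takes the form \(1-L(p,u,\beta )\approx u^{\beta }\kappa _{\beta ,\beta }C_{p,\beta }\) for small u, which is consistent with the use of \(1-\widetilde{L}^n(p,u_n)\) as an estimator of \(u_n^{\beta }\kappa _{\beta ,\beta }C_{p,\beta }\) in Sect. 4.2 (this form is an assumption of the present illustration). The unknown factor \(\kappa _{\beta ,\beta }C_{p,\beta }\), which carries the dependence on \(\phi \), then cancels in a ratio:

$$\begin{aligned} \frac{1-L(p,u_n,\beta )}{1-L(p,v_n,\beta )}\approx \frac{u_n^{\beta }\kappa _{\beta ,\beta }C_{p,\beta }}{(\rho u_n)^{\beta }\kappa _{\beta ,\beta }C_{p,\beta }}=\rho ^{-\beta } \quad \Longrightarrow \quad \beta \approx \frac{\log \left( \frac{1-L(p,u_n,\beta )}{1-L(p,v_n,\beta )}\right) }{\log (u_n/v_n)}. \end{aligned}$$

Replacing L by \(\widetilde{L}^n\) suggests a statistic of this ratio type, and the right-hand side is unchanged when \(u_n\) and \(v_n\) are exchanged, since numerator and denominator both change sign.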

Remark 3.2

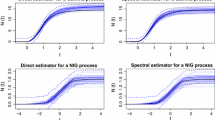

We will often choose \(\rho = 1/2\), in which case one can show that \(\hat{\beta }(p,u_n,v_n) \le 2\). This is of course a desirable property, as it mirrors the bound for the stability index \(\beta \) itself, but it also limits the quality of a limiting normal approximation for values of \(\beta \) close to 2. See Figs. 1 and 2 below.
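Remark 3.2 can be illustrated under an explicit assumption. In the sketch below we take \(\widetilde{L}^n(p,u)\) to be an average of cosines \(\cos (u y_i)\) of normalised increments \(y_i\), in the spirit of Todorov (2015), and use the ratio-type estimator \(\log [(1-\widetilde{L}^n(p,u))/(1-\widetilde{L}^n(p,v))]/\log (u/v)\). Both choices are assumptions of this sketch, since the defining displays are not reproduced here, and `ecf_cos` and `beta_ratio` are hypothetical names. For \(v=u/2\) and \(a=uy_i/2\), each summand satisfies \(1-\cos (2a)=4\cos ^2(a/2)(1-\cos a)\le 4(1-\cos a)\), so the ratio is at most 4 and the estimate at most \(\log 4/\log 2=2\); since the factor \(\cos ^2(a/2)\) can be arbitrarily small, no comparable lower bound arises.

```python
import math
from typing import Sequence


def ecf_cos(u: float, y: Sequence[float]) -> float:
    """Average of cos(u * y_i). Hypothetical stand-in for L~^n(p, u): the y_i play
    the role of self-normalised rescaled increments (their construction is not shown)."""
    return sum(math.cos(u * yi) for yi in y) / len(y)


def beta_ratio(u: float, v: float, y: Sequence[float]) -> float:
    """Ratio-type estimator log[(1 - L(u)) / (1 - L(v))] / log(u / v), for u != v."""
    return math.log((1.0 - ecf_cos(u, y)) / (1.0 - ecf_cos(v, y))) / math.log(u / v)
```

For example, `beta_ratio(u, u / 2, y)` never exceeds 2 for data `y` with \(1-\widetilde{L}^n(p,u/2)>0\), and exchanging the two arguments `u` and `v` leaves the value unchanged.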

Before we discuss the asymptotic behaviour of the estimator \(\hat{\beta }(p,u_n,v_n)\) we will state a bivariate central limit theorem for \(\widetilde{L}^n(p,u_n) - L(p,u_n,\beta )\) and \(\widetilde{L}^n(p,v_n) - L(p,v_n,\beta )\) with \(u_n\) and \(v_n\) chosen as above.

Theorem 3.3

Suppose that Conditions 2.1–2.3 are in place and let \(k_n\sim C_1 \Delta _n^{-\varpi }\) for some \(C_1> 0\) and some \(\varpi \in (0,1)\) as well as \(u_n\sim C_2 \Delta _n^{\varrho }\) for some \(C_2 > 0\) and \(\varrho \in (0,1)\). Suppose further that

hold. Then

converges \({\mathcal {F}}\)-stably in law to a limit \((X',Y')\) which is jointly normally distributed (independent of \({\mathcal {F}}\)) with mean 0 and covariance matrix \(\mathcal {C'}\) given by

Remark 3.4

The above choice of the parameters \(\varrho \), \(\varpi \) and p is feasible even if \(\beta \) is unknown. It can easily be seen that, e.g., \(\varrho =\frac{1}{3}, \varpi =\frac{2}{3}\) and any \(p \in (\frac{3}{8},\frac{1}{2})\) satisfy the conditions in Theorem 3.3.

The following result is the main theorem of this work and it provides the central limit theorem for the estimator \(\hat{\beta }(p,u_n,v_n)\). Its proof builds heavily on Theorem 3.3.

Theorem 3.5

Under the conditions of Theorem 3.3 we have the \({\mathcal {F}}\)-stable convergence in law

where X is a normally distributed random variable (independent of \({\mathcal {F}}\)) with mean 0 and variance

A simple corollary is the consistency of \(\hat{\beta }(p,u_n,v_n)\) as an estimator for \(\beta \).

Corollary 3.6

Under the conditions of Theorem 3.3 we have

For a feasible application of Theorem 3.5 we need a consistent estimator for the variance of the limiting normal distribution which essentially boils down to the estimation of \(\kappa _{\beta , \beta }\). This problem will be discussed in the next section, alongside a thorough analysis of the finite sample properties of \(\hat{\beta }(p,u_n,v_n)\).

4 Simulation study

This section deals with the numerical assessment of the finite sample properties of \(\hat{\beta }(p,u_n,v_n)\), and we also discuss the estimation of the variance in the central limit theorem in order to obtain a feasible result. In the following, let W be a standard Brownian motion and L a symmetric stable process with Lévy density

for some \(\beta \in (1,2)\). We then set

and assume that we observe

which clearly satisfies Conditions 2.1 and 2.2. For the observation scheme we choose

with \(\phi ' \sim \text {Exp}(1)\) and with starting values \(\alpha _0 = \sigma _0 = X_0 = \lambda _0 = 1\). We assume that \(\widetilde{W}\) is a standard Brownian motion as well, independent of W. The purpose of the minimum in the definition of \(\phi \) in (4.1) is to ensure that the (negative) moment condition from Condition 2.3 (c) holds, which (as additional simulations suggest) seems to be relevant not only in theory but also in practice. Note also that the choice of \(\lambda _0=1\), combined with the mean reversion of \(\lambda \) to 5, leads to pronounced changes in the distribution of the \(\tau _i^n\) over time. For the simulation of X we use a standard Euler scheme, utilising Proposition 1.7.1 in Samorodnitsky and Taqqu (1994) to generate symmetric stable random variables.
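The stable ingredient of such a simulation can be sketched as follows. The Chambers-Mallows-Stuck method, i.e. the representation given in Proposition 1.7.1 of Samorodnitsky and Taqqu (1994) in the symmetric case, turns a uniform and an independent exponential variable into a standard symmetric \(\beta \)-stable draw with characteristic function \(\exp (-|u|^\beta )\); self-similarity then yields increments over an irregular grid. The model coefficients from (4.1) are not reproduced in this sketch, a Lévy density \(A|x|^{-1-\beta }\) would require an additional factor \(A_\beta ^{1/\beta }\) in this parameterisation, and the function names are hypothetical.

```python
import math
import random
from typing import List


def sym_stable(beta: float, rng: random.Random) -> float:
    """One draw with characteristic function exp(-|u|**beta), 0 < beta <= 2, beta != 1,
    via the Chambers-Mallows-Stuck representation."""
    v = rng.uniform(-math.pi / 2.0, math.pi / 2.0)  # V ~ Uniform(-pi/2, pi/2)
    w = rng.expovariate(1.0)                         # W ~ Exp(1), independent of V
    return (math.sin(beta * v) / math.cos(v) ** (1.0 / beta)
            * (math.cos((1.0 - beta) * v) / w) ** ((1.0 - beta) / beta))


def stable_increments(beta: float, times: List[float], rng: random.Random) -> List[float]:
    """Increments of a standard symmetric beta-stable Levy process over a (possibly
    irregular) grid: S_t - S_s has the law of (t - s)**(1/beta) * S_1."""
    return [(t1 - t0) ** (1.0 / beta) * sym_stable(beta, rng)
            for t0, t1 in zip(times, times[1:])]
```

In an Euler scheme these increments would be multiplied by the value of the volatility process at the left end point of each interval; that step depends on the model specification in (4.1) and is omitted here.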

4.1 Consistency and normal approximation in finite samples

We begin the assessment of the finite sample properties with a discussion of the consistency result \(\hat{\beta }(p,u_n,v_n)-\beta {\mathop {\longrightarrow }\limits ^{\mathbb {P}}}0\) and the quality of the associated normal approximation. For this we set \(u_n = N_n(1)^{-1/3}\), \(k_n = N_n(1)^{2/3}\), \(p=1/2\), essentially in accordance with Remark 3.4. Below, we present the results for \(\beta \in \{1.1,1.3,1.5,1.7,1.9\}\) and \(\rho \in \{1/2,2\}\), generating \(N = 1000\) samples, and we discuss both \(\Delta _n^{-1} = 1000\) and \(\Delta _n^{-1} = 10{,}000\). Note that our choice of \(\lambda \) in (4.1) yields about \(N_n(1)\approx 520\) observations in the first case, whereas \(N_n(1)\approx 5200\) in the second one.

Table 1 shows mixed results regarding consistency but nevertheless allows us to draw some conclusions. First, for \(\rho =1/2\) the estimator for \(\beta \) behaves correctly on average, whereas the (relative) difference between the theoretical and the empirical variances grows with \(\beta \). For \(\rho =2\), note first that \(\hat{\beta }(p,u_n,v_n)\) is symmetric in \(u_n\) and \(v_n\). Thus, choosing \(\rho =2\) and keeping \(u_n\) fixed is the same as choosing \(u_n\) twice as large and keeping \(\rho =1/2\). Hence Table 1 confirms empirically what is already known from the construction of the estimator: it relies on \(u_n \rightarrow 0\), so a larger choice of \(\rho \) induces an additional bias. On the other hand, the rate of convergence to the normal distribution improves as \(u_n\) becomes larger, and this may explain why the empirical variance is much closer to the theoretical one for the larger \(\rho = 2\).

Table 2, which is constructed in the same manner for \(\Delta _n^{-1}=10{,}000\), shows improvements across the board. The bias for both \(\rho =1/2\) and \(\rho =2\) is now very small, even for large values of \(\beta \), and the empirical variance, specifically for \(\beta \in \{1.5,1.7,1.9\}\), is much closer to the theoretical one than before.

Next, we present QQ-plots to visualise the quality of the approximating normal distribution from Theorem 3.5, using the same configuration of parameters as above. Since \(\hat{\beta }(p,u_n,v_n)\) is symmetric in \(u_n\) and \(v_n\), the bound from Remark 3.2 applies to both \(\rho =1/2\) and \(\rho =2\). As noted before, this bound prevents the normal approximation from working well in the right tail, and as expected Fig. 1 shows that this effect becomes more pronounced as \(\beta \) approaches the upper bound. For \(\Delta _n^{-1}=1000\), this poor tail behaviour already appears for \(\beta =1.5\). Nevertheless, the quality of the distributional approximation improves visibly with the larger sample size \(\Delta _n^{-1}=10{,}000\) for both choices of \(\rho \). Figure 2 shows, for instance, that \(\beta =1.5\) is no longer critical, i.e. an increasing sample size allows for an accurate approximation for larger values of \(\beta \). Also, a slight improvement from \(\rho =1/2\) to \(\rho =2\) can be noted, in line with the previous discussion regarding the rate of convergence.

Since \(\hat{\beta }(p,u_n,v_n)\) is bounded above by 2 but has no obvious lower bound, a natural way to circumvent the problem in the upper tail is to apply a transformation such as \(x \mapsto \log (2-x)\), which maps \((-\infty ,2)\) onto the entire real line. From Theorem 3.5 and the delta method one obtains

with \(v^2\) as above, and Fig. 3 shows a clear improvement compared with the original normal approximation. Here and in the section below, we focus specifically on the case \(\beta =1.7\) to illustrate the benefits and shortcomings of the proposed methods in a situation where the original estimator does not behave too well.
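The transformation is typically used to build confidence intervals that respect the upper bound 2. The sketch below assumes that a standard error `se` for \(\hat{\beta }(p,u_n,v_n)\) is available (for instance from one of the variance estimators in Sect. 4.2, combined with the rate in Theorem 3.5); by the delta method with \(g(x)=\log (2-x)\) and \(|g'(x)|=1/(2-x)\), the interval is formed on the log scale and mapped back. The function name is hypothetical.

```python
import math
from statistics import NormalDist


def log_transform_ci(beta_hat: float, se: float, level: float = 0.95) -> tuple:
    """Confidence interval for beta based on g(x) = log(2 - x) and the delta method.

    `se` is a standard error for beta_hat; by the delta method the standard error
    of g(beta_hat) is |g'(beta_hat)| * se = se / (2 - beta_hat)."""
    if not beta_hat < 2.0:
        raise ValueError("the transformation requires beta_hat < 2")
    z = NormalDist().inv_cdf(0.5 + level / 2.0)  # two-sided normal quantile
    g_hat = math.log(2.0 - beta_hat)
    half_width = z * se / (2.0 - beta_hat)
    # g is decreasing, so the endpoints swap when mapping back to the beta scale.
    lower = 2.0 - math.exp(g_hat + half_width)
    upper = 2.0 - math.exp(g_hat - half_width)
    return lower, upper
```

Unlike a plain interval \(\hat{\beta }\pm z\,\texttt{se}\), the upper endpoint always stays below 2, at the price of an asymmetric interval.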

4.2 Bias correction and variance estimation

A natural way to further improve the finite sample properties is a bias correction for the higher-order terms. Our current approach to estimate \(\beta \) relies on the approximation (3.3), while a more precise one would be, e.g., a third-order expansion of the form

An estimator for \(\beta \) is then given by

where e.g. \(\widehat{deb}(u_n)\) estimates

Ideally, such a correction would allow for a larger choice of \(u_n\) in finite samples, thus leading to a better rate of convergence.

To define a consistent estimator for \(\kappa _{\zeta ,\beta }^{\zeta /\beta }=\mathbb {E}\left[ \left( (\phi ^{(1)})^{1-\beta }+(\phi ^{(2)})^{1-\beta }\right) ^{\zeta /\beta }\right] \), \(\zeta > 0\), we use \(\tau _i^n-\tau _{i-1}^n = \Delta _n \phi _{i}^n\lambda _{\tau _{i-2}^n}\) and the càdlàg property of \(\lambda \). A natural way to construct an estimator for \(\kappa _{\zeta , \beta }\) is then to build it from sums of adjacent increments of the \(\tau _i^n\), rescaled by the length of the total time interval relative to the number of increments, and using an appropriate power. Whenever \(\beta \) needs to be included, it is replaced by its consistent estimator \(\hat{\beta }_n = {\hat{\beta }}(p, u_n, v_n)\). The consistency of such an estimator for \(\kappa _{\zeta , \beta }^{\zeta /\beta }\) is formally given in the following lemma. Its proof is rather straightforward but lengthy and therefore omitted.
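The estimator from Lemma 4.1 is not reproduced in this extraction, so the following is only one possible construction matching the verbal description above, with hypothetical names. It divides each spacing \(\tau _i^n-\tau _{i-1}^n\approx \Delta _n\lambda \phi _i^n\) by the local mean spacing over a window of r spacings (a proxy for \(\Delta _n\lambda \), using \(\mathbb {E}[\phi ]=1\) and the càdlàg property of \(\lambda \)) and averages \(\left( a_i^{1-\beta }+a_{i+1}^{1-\beta }\right) ^{\zeta /\beta }\) over adjacent proxies \(a_i\). For \(\zeta =\beta \) the target is \(\kappa _{\beta ,\beta }=2\mathbb {E}[\phi ^{1-\beta }]\). In practice \(\beta \) would be replaced by \(\hat{\beta }_n\), and r plays the role of \(r_n\) in Lemma 4.1.

```python
from typing import Sequence


def kappa_power_hat(taus: Sequence[float], zeta: float, beta: float, r: int) -> float:
    """Hypothetical estimator of kappa_{zeta,beta}^{zeta/beta}
    = E[(phi1**(1 - beta) + phi2**(1 - beta))**(zeta / beta)].

    Each spacing tau_i - tau_{i-1} ~ Delta_n * lambda * phi_i is divided by the local
    mean spacing over r spacings (~ Delta_n * lambda), giving proxies for phi_i;
    adjacent proxies are then combined as in the definition of kappa."""
    d = [t1 - t0 for t0, t1 in zip(taus, taus[1:])]  # spacings tau_i - tau_{i-1}
    m = len(d) - r                                    # number of usable windows
    if r < 1 or m < 1:
        raise ValueError("need r >= 1 and more than r spacings")
    total = 0.0
    for i in range(m):
        local_mean = sum(d[i:i + r]) / r             # ~ Delta_n * lambda near tau_i
        a, b = d[i] / local_mean, d[i + 1] / local_mean
        total += (a ** (1.0 - beta) + b ** (1.0 - beta)) ** (zeta / beta)
    return total / m
```

For a regular grid all proxies equal 1, so the sketch returns \(2^{\zeta /\beta }\), which is the value of \(\kappa _{\zeta ,\beta }^{\zeta /\beta }\) when \(\phi \equiv 1\).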

Lemma 4.1

Let \(r_n \sim C_3 \Delta _n^{-\psi }\) for some \(C_3 > 0\) and some \(\psi \in (0,1)\). Then, for \(\zeta >0\), we have

where

As an example, we discuss the case \(\Delta _n^{-1}=1000\) with \(\beta = 1.7\), \(\rho = 0.5\) and \(r_n = N_n(1)^{4/5}\), but we now choose \(u_n = N_n(1)^{-0.28}\) and keep all other parameters unchanged. In this case, the mean and the empirical variance become 1.7042 and 1.7970, both improving on the corresponding values from Table 1. Also, the corresponding QQ-plot in Fig. 4 clearly shows a better approximation of the limiting normal distribution, still with the original problems in the right tail.

So far, we have used the theoretical variance to draw the QQ-plots, and we have seen that the boundedness of \(\hat{\beta }(p,u_n,v_n)\) prevents the empirical distribution from matching the limiting normal distribution in the upper tail. In practice, however, the true limiting variance

in Theorem 3.5 is unknown and needs to be estimated. We present two ways to do so. For a full plug-in estimator, note from eq. (3.3) in Todorov (2015) that

holds. Hence, the only unknown quantities in the variance are \(\beta \) and \(\kappa _{\zeta , \beta }\) (with \(\zeta =p, \beta \)), so Corollary 3.6 and Lemma 4.1 provide us with a consistent plug-in estimator for the limiting variance.

An alternative estimator can be obtained without directly estimating \(\kappa _{\zeta , \beta }\). To this end, note that by Theorem 3.5 the variance of \(\hat{\beta }(p,u_n,v_n)-\beta \) is proportional to the reciprocal of \(u_n^{\beta } \kappa _{\beta , \beta } C_{p, \beta }\), and we see from (3.3) and Theorem 3.3 that the latter can be consistently estimated by \(1-\widetilde{L}^n(p,u_n)\). Plugging in \(\hat{\beta }(p,u_n,v_n)\) for the unknown \(\beta \) then gives a second estimator for the unknown limiting variance.

Figure 5 contains the QQ-plots for the bias-corrected estimator \(\overline{\beta }(p,u_n,v_n)\) when standardised with the two different estimators for the limiting variance. For a direct comparison we have chosen the same setting as in Fig. 4, i.e. \(\Delta _n^{-1}=1000\), \(\beta = 1.7\), \(\rho = 0.5\) and \(u_n = N_n(1)^{-0.28}\), with the only difference being that we have run \(N=10{,}000\) simulations. We have further chosen \(r_n = N_n(1)^{4/5}\) for the full plug-in estimator with a variance estimation using Lemma 4.1. Both normal approximations work well, with a slight edge for the estimator based on \(1-\widetilde{L}^n(p,u_n)\), which performs better in the lower tail. Note that the clear improvement in comparison to Fig. 4 can be explained by the fact that for values of \(\hat{\beta }(p,u_n,v_n)\) very close to the upper bound of 2 the estimated variance takes values very close to 0.

Fig. 5 QQ-plots in the case \(N=10{,}000\), \(\Delta _n^{-1}=1000\), \(\beta =1.7\) and \(\rho = 0.5\). On the left: the empirical distribution of the bias-corrected \(\overline{\beta }(p,u_n,v_n)- \beta \), standardised using the full plug-in estimator from Lemma 4.1. On the right: the empirical distribution of the bias-corrected \(\overline{\beta }(p,u_n,v_n)- \beta \), with a variance estimation based on \(1-\widetilde{L}^n(p,u_n)\).

5 Proofs

5.1 Prerequisites on localisation

As usual, we start with localisation results, i.e. with results that allow us to prove the main theorems under conditions which are slightly stronger than Conditions 2.1 and 2.2 for the processes involved and also stronger than Condition 2.3 on the sampling scheme. We begin with the additional assumptions on the processes.

Condition 5.1

In addition to Conditions 2.1 and 2.2 we assume that

(a) \(|\sigma _t|\) and \(|\sigma _t|^{-1}\) are uniformly bounded;

(b) \(|\delta ^\alpha (t,x)|+|\delta ^\sigma (t,x)|\le \gamma (x)\) for all \(t>0\), where \(\gamma \) is a deterministic bounded function on E with \(\int _E|\gamma (x)|^r\lambda (\textrm{d}x)<\infty \) for some \(0\le r<2\);

(c) \(b^\alpha , b^\sigma , \eta ^\alpha , \eta ^\sigma , \widetilde{\eta }^\alpha , \widetilde{\eta }^\sigma , \overline{\eta }^\alpha \) and \(\overline{\eta }^\sigma \) are bounded;

(d) the process \(\left( \int _{\mathbb {R}}\left( |x|^{\beta '}\wedge 1\right) \nu _t^Y(\textrm{d}x)\right) _{t\ge 0}\) is bounded and the jumps of Y are bounded;

(e) the jumps of \(\acute{S}\) and \(\grave{S}\) are bounded.

Similar properties are assumed to hold for \(\lambda \) from Condition 2.3.

The following lemma formally justifies why we may assume in the following that the strengthened Condition 5.1 holds, namely because we are interested in X on the bounded interval [0, 1] only and eventually \(E_p > 1\) for a localising sequence, at least with a probability converging to 1. Its proof closely resembles that of Lemma 4.4.9 in Jacod and Protter (2012), which is why we refer the reader to part 3) of their proof.

Lemma 5.2

Let X be a process fulfilling Conditions 2.1 and 2.2. Then, for each \(p>0\) there exists a stopping time \(E_p\) and a process X(p) such that X(p) and its components, \(\alpha (p)\), \(\sigma {(p)}\) and Y(p), fulfill Condition 5.1, and \(X(p)_t = X_t\) holds for all \(t<E_p\). The sequence of stopping times can be chosen such that \(E_p\nearrow \infty \) almost surely as \(p\rightarrow \infty \).

For all proofs concerning the asymptotics of \(\widetilde{L}^n(p,u)\) and \(\hat{\beta }(p,u_n,v_n)\) it becomes important that the process \(\lambda _t\) driving the observation times \(\tau _i^n\) is bounded from above and below. This means that we need a stronger assumption than just Condition 2.3 as well, and we also need to assume that for a given n the number of observations until any fixed T is bounded by a constant times \(\Delta _n^{-1} T\).

Condition 5.3

In addition to Condition 2.3 there exists some \(C>1\) such that

(a) the process \(\lambda \) fulfills the same assumptions as \(\sigma \) in Condition 5.1, and in particular we have for all \(t>0\)

$$\begin{aligned} \frac{1}{C} \le \lambda _t \le C. \end{aligned}$$

(b) for any given n and any \(T > 0\) we have

$$\begin{aligned} N_n(T) \le C \Delta _n^{-1} T. \end{aligned}$$

Strengthening Condition 2.3 ultimately changes the entire observation scheme, which makes it somewhat harder to prove formally that such an assumption is indeed adequate. We begin with a result on the boundedness of \(\lambda \) as in part (a) above. For every n, let \(F_n\) be a random variable which depends not just on n but also on the process X and on the discretisation scheme via \(\lambda \) and the variables \(\phi _i^n\). Likewise, a possible stable limit F of \(F_n\) is assumed to depend on the same factors and is realised on an extension \((\widetilde{\Omega },\widetilde{\mathcal {G}},\widetilde{\mathbb {P}})\) of the original probability space \((\Omega ,\mathcal {G},\mathbb {P})\). Furthermore, for each \(C > 1\) we define \(\lambda ^{(C)}_t\) in such a way that \(\frac{1}{C} \le \lambda ^{(C)}_t \le C\) holds. We then set \(E_C\) to be the stopping time from Lemma 5.2 with C replacing p; this lemma can be applied because, by Condition 2.3, \(\lambda \) is assumed to satisfy the same structural properties as \(\sigma \).

Lemma 5.4

Assume that Condition 2.3 holds and construct, for each \(C>1\) with associated stopping time \(E_C\) and process \(\lambda ^{(C)}\), a new discretisation scheme, i.e. new stopping times \(\{\tau _i^{n,C}:i \ge 0\}\) and a new \(N_n^C(T)\) as in Condition 2.3 but with the process \(\lambda ^{(C)}\) instead of \(\lambda \). Define associated random variables \(F_n(C)\) in the same way as \(F_n\), but with \(\lambda ^{(C)}\) replacing \(\lambda \), \(\{\tau _i^{n,C}:i \ge 0\}\) replacing \(\{\tau _i^{n}:i \ge 0\}\) and \({N}_n^C(T)\) replacing \(N_n(T)\), and define F(C) on \((\widetilde{\Omega },\widetilde{\mathcal {G}},\widetilde{\mathbb {P}})\) analogously. If for each \(C>1\) it holds that

and if furthermore

then we have \(F_n~{\mathop {\longrightarrow }\limits ^{{\mathcal {L}}-(s)}}~F\).

Proof

Let \(\widetilde{\mathbb {E}}\) be the expectation w.r.t. \(\widetilde{\mathbb {P}}\). We need to prove

where Y is any bounded random variable on \((\Omega ,\mathcal {G})\) and f is any bounded continuous function, and using

it is sufficient to prove that each of the three summands vanishes. For the first one, by boundedness of Y and f and using (5.2), it is obvious that

Here and below, K always denotes a generic positive constant. Thus,

and the same proof applies to the third term. Finally, note that

for each fixed C is an immediate consequence of (5.1). \(\square \)

By construction, \(\lambda _t\) and \(\lambda ^{(C)}_t\) coincide for all \(0\le t \le T\) on the set \(\{E_C> T\}\). As our estimators only use observations up to a fixed time horizon T (in our specific case the convenient but arbitrary \(T=1\)), and as \(E_C\nearrow \infty \) almost surely implies \(\mathbb {P}(E_C\le T)\rightarrow 0\) for \(C\rightarrow \infty \), condition (5.2) is indeed met. Therefore we may assume in the following proofs that part (a) of Condition 5.3 is in force, and we only need to prove (5.1) under this strengthened assumption.

Finally, we need to explain why we can assume that part (b) of Condition 5.3 holds as well. Here we refer to part 2) of the proof of Lemma 9 in Jacod and Todorov (2018), where a family of discretisation schemes with the desired properties is constructed, each member of which coincides with the original sampling scheme up to some random time \(S^n_{\ell _n}\). Since these times are shown there to converge to infinity almost surely, the same argument as before allows us to assume part (b) of Condition 5.3 without loss of generality.

For further information on random discretisation schemes, one can consult Section 14.1 in Jacod and Protter (2012), where a slightly different version of Lemma 5.4 and other important properties of objects connected to these schemes are proven. We single out one of those properties because we use it repeatedly in the following subsections: (14.1.10) in Jacod and Protter (2012) shows that for all \(t\ge 0\) we have

which essentially allows us to treat the random \(N_n(t)\) like a deterministic quantity of order \(\Delta _n^{-1}\) in most asymptotic considerations.
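To make this property tangible, the following Python sketch simulates a toy version of the sampling scheme. Everything in it is a hypothetical special case chosen for illustration: gaps of the form \(\tau _i^n-\tau _{i-1}^n=\Delta _n\phi _i^n\lambda _{\tau _{i-2}^n}\) as in the proof of Lemma 5.10, i.i.d. \(\phi _i^n\) with a Gamma(3, 1/3) law (mean one, and negative moments of every order above \(-3\)), and the deterministic intensity \(\lambda _t = 1+\frac{1}{2}\sin (2\pi t)\), which satisfies part (a) of Condition 5.3 with \(C=2\). In this setting a renewal-type argument suggests \(\Delta _n N_n(1)\approx \int _0^1\lambda _s^{-1}\textrm{d}s = 1/\sqrt{0.75}\approx 1.155\); we do not claim that this reproduces the general form of (14.1.10) in Jacod and Protter (2012).

```python
import math
import random


def count_observations(delta_n, lam, t=1.0, seed=0):
    """Return N_n(t) = #{i >= 1 : tau_i <= t} for a hypothetical toy scheme.

    Assumed model: tau_i - tau_{i-1} = delta_n * phi_i * lam(tau_{i-2}),
    phi_i i.i.d. Gamma(shape=3, scale=1/3) (mean 1), tau_{-1} = tau_0 = 0.
    """
    rng = random.Random(seed)
    tau_prev2 = 0.0  # tau_{i-2}
    tau_prev1 = 0.0  # tau_{i-1}
    count = 0
    while True:
        phi = rng.gammavariate(3.0, 1.0 / 3.0)
        tau_new = tau_prev1 + delta_n * phi * lam(tau_prev2)
        if tau_new > t:
            return count
        count += 1
        tau_prev2, tau_prev1 = tau_prev1, tau_new


def lam(s):
    # Deterministic intensity with 1/2 <= lam <= 3/2, so Condition 5.3(a) holds with C = 2.
    return 1.0 + 0.5 * math.sin(2.0 * math.pi * s)


delta_n = 1e-4
n_obs = count_observations(delta_n, lam)
# Closed form: int_0^1 ds / (1 + 0.5 sin(2 pi s)) = 1 / sqrt(1 - 0.5**2)
limit = 1.0 / math.sqrt(0.75)
print(delta_n * n_obs, limit)
```

The helper `count_observations` and the Gamma law are assumptions of this sketch, not part of the paper's setup; the count also stays well below \(2\Delta _n^{-1}\), in line with part (b) of Condition 5.3 for \(C=2\).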

5.2 A crucial decomposition

The proofs of Theorems 3.1 and 3.3 rely on a simple decomposition which allows us to identify the terms that play a dominant role in the asymptotic treatment. Precisely, we have

where

drives the asymptotics while the residual terms are given by

Here we have set

and we use the shorthand notation \(\mathbb {E}_i^n[\cdot ]\) in place of \(\mathbb {E}[\cdot |{\mathcal {F}}^n_{\tau _i^n}]\). We also introduce the notation

and set

We start with a discussion of the asymptotic orders of the residuals, throughout which we assume Conditions 5.1 and 5.3 as well as \(k_n\sim C_1 \Delta _n^{-\varpi }\) for some \(C_1> 0\) and some \(\varpi \in (0,1)\). Naturally, we need some preparation to obtain asymptotic negligibility, and we begin with a lemma containing a series of bounds for moments of certain increments of (often integrated and rescaled) processes. We do not give a proof of this result but refer to Todorov (2015, 2017); for the most part, the techniques needed resemble the ones given therein. The main difference is that our arguments often involve the additional process \(\lambda \), which sometimes complicates matters considerably.

Recall the decomposition (3.1), and we further write

with \(S_t^{(1)}=\int _{0}^{t}\int _\mathbb {R}\kappa (x)\widetilde{\mu }(\textrm{d}s,\textrm{d}x)\), where \(\widetilde{\mu }(\textrm{d}s,\textrm{d}x)\) denotes the compensated jump measure of S, and \(S_t^{(2)}=\int _{0}^{t}\int _\mathbb {R}\kappa '(x){\mu }(\textrm{d}s,\textrm{d}x)\).

Lemma 5.5

Let \(i \ge 2\) be arbitrary.

(a) For every \(p \in (-1,\beta )\) we have

$$\begin{aligned}&\mathbb {E}_{i-2}^n\left[ \left| \Delta _n^{-1/\beta }(\widetilde{\Delta _{i}^nS}-\widetilde{\Delta _{i-1}^nS})\right| ^p\right] \le K, \\&\mathbb {E}_{i-2}^n\left[ \left| \Delta _n^{-1/\beta }(\lambda _{\tau _{i-2}^n}^{1-1/\beta }\widetilde{\Delta _{i}^nS} -\lambda _{\tau _{i-3}^n}^{1-1/\beta }\widetilde{\Delta _{i-1}^nS})\right| ^p\right] = \kappa _{p,\beta }^{p/\beta }\mu _{p,\beta }^{p/\beta }, \end{aligned}$$

with the constants from (3.2).

(b) For every \(p\in (0,\beta )\) we have

$$\begin{aligned} \left| \mathbb {E}_{i-2}^n\left[ \left| \Delta _n^{-1/\beta }(\widetilde{\Delta _{i}^nS}-\widetilde{\Delta _{i-1}^nS})\right| ^p\right] - \lambda _{\tau _{i-2}^n}^{p/\beta - p}\kappa _{p,\beta }^{p/\beta }\mu _{p,\beta }^{p/\beta } \right| \le K \Delta _n^{1/2}. \end{aligned}$$

(c) For every \(q\in (0,2]\), every \(\iota > 0\) and every \(l \in \{0,1\}\) we have

$$\begin{aligned} \mathbb {E}_{i-2}^n\left[ \left| \frac{\Delta _n}{\tau _{i-1+l}^n-\tau _{i-2+l}^n}\int _{\tau _{i-2+l}^n}^{\tau _{i-1+l}^n}(\sigma _{u-} -\sigma _{\tau _{i-2}^n})\textrm{d}S_u^{(1)}\right| ^q\right] \le K \Delta _n^{q/2+(q/\beta )\wedge 1-\iota }. \end{aligned}$$

(d) For every \(q\in (0,2]\) and every \(l \in \{0,1\}\) we have

$$\begin{aligned} \mathbb {E}_{i-2}^n\left[ \left| \frac{\Delta _n}{\tau _{i-1+l}^n-\tau _{i-2+l}^n}\int _{\tau _{i-2+l}^n}^{\tau _{i-1+l}^n}(\sigma _{u-} -\sigma _{\tau _{i-2}^n})\textrm{d}S_u^{(2)}\right| ^q\right] \le K \Delta _n^{q/2+1}. \end{aligned}$$

(e) For every \(q> 0\), every \(\iota > 0\) and every \(l \in \{0,1\}\) we have

$$\begin{aligned} \mathbb {E}_{i-2}^n\left[ \left| \frac{\Delta _n}{\tau _{i-1+l}^n-\tau _{i-2+l}^n}\int _{\tau _{i-2+l}^n}^{\tau _{i-1+l}^n}(\sigma _{u-} -\sigma _{\tau _{i-2}^n}) \textrm{d}\acute{S}_u\right| ^q\right] \le K\Delta _n^{q/2+(q/\beta ')\wedge 1-\iota }, \end{aligned}$$

and the same relation holds with \(\grave{S}\) instead of \(\acute{S}\).

(f) For every \(q > 0\) and every \(l \in \{0,1\}\) we have

$$\begin{aligned} \mathbb {E}_{i-2}^n\left[ \left| \frac{\Delta _n}{\tau _{i-1+l}^n-\tau _{i-2+l}^n}\Delta _{i-1+l}^nY\right| ^q\right] \le K\Delta _n^{(q/\beta ')\wedge 1}. \end{aligned}$$

(g) For every \(q\in (0,2]\) we have

$$\begin{aligned} \mathbb {E}_{i-2}^n\left[ \left| \frac{\Delta _n}{\tau _{i}^n-\tau _{i-1}^n}\int _{\tau _{i-1}^n}^{\tau _{i}^n}\alpha _{u}\textrm{d}u-\frac{\Delta _n}{\tau _{i-1}^n-\tau _{i-2}^n}\int _{\tau _{i-2}^n}^{\tau _{i-1}^n}\alpha _{u}\textrm{d}u\right| ^q\right] \le K\Delta _n^{3q/2}. \end{aligned}$$

A second lemma, again stated without proof, provides bounds for moments of increments of semimartingales. It resembles results in Todorov (2015, 2017), but its proof is slightly more involved due to the random observation scheme.

Lemma 5.6

Let A be a semimartingale satisfying the same properties as \(\sigma \) in Assumption 5.1. Then, for any \(-1<p<1\) and any \(y>0\) and with K possibly depending on y we have

After the presentation of these auxiliary claims, we focus on results that directly simplify the discussion of the asymptotic negligibility of the residual terms. We begin with a result that helps in the treatment of \(R_1^n\).

Lemma 5.7

Let \(\iota >0\) and \(0<p<\frac{\beta }{2}\) be arbitrary. Then, for any \(i \ge 2\), we have

with \(\alpha _n=\Delta _n^{\frac{\beta }{2}\frac{p+1}{\beta +1} \wedge ((\frac{p}{\beta '}\wedge 1)-\frac{p}{\beta })\wedge \frac{1}{2}-\iota }\).

Proof

The proof relies on bounds for moments of several stochastic integrals, mostly connected with the jump process \(L_t\) and its parts. Using (3.1) and (5.5) we may write \(\widetilde{\Delta _i^nX} -\widetilde{\Delta _{i-1}^nX} = \chi _i^{(n,1)} + \chi _i^{(n,2)} + \chi _i^{(n,3)}\) with

We obviously have

and since \(p< \beta /2 < 1\) holds, the inequality

which is an easy consequence of parts (d), (e) and (f) of Lemma 5.5, is enough to fully treat the first term on the right-hand side. For the second term, we use parts (a), (c) and (g) of Lemma 5.5 plus the algebraic inequality

which holds for any \(\epsilon >0\) and \(p\in (0,1]\) with a constant K that does not depend on \(\epsilon \), and which we apply with \(a = \Delta _n^{-1/\beta } \chi _i^{(n,1)}\) and \(b=\Delta _n^{-1/\beta } \chi _i^{(n,2)}\). We start with the latter two terms and let \(0< \epsilon < 1\) be arbitrary. Then Markov's inequality in combination with Hölder's inequality first gives

(with a slight abuse of notation, as \(\iota > 0\) can be chosen arbitrarily) and then

as well. Setting \(\epsilon =\Delta _n^{\frac{1}{2}\frac{\beta }{\beta +1}}\) gives \(K \Delta _n^{\frac{\beta }{2}\frac{p+1}{\beta +1}-\iota }\) as the upper bound in both terms.

Finally, we have to distinguish between the cases \(p > 1/\beta \) and \(p \le 1/\beta \). In the first case, a simple application of Hölder's inequality gives

for our specific choice of \(\iota > 0\). Note that part (a) of Lemma 5.5 is indeed applicable, as \(p > 1/\beta \) ensures \((p-1)\beta /(\beta -1) > -1\). In the second case, we set \(\epsilon \) as above and use Markov's inequality with \(r = \frac{1+\iota }{\beta } - p\). Then

and since now \(p+r > 1/\beta \) by construction, the same argument as in the first case shows this term to be of order \(\Delta _n^{1/2-\iota } \epsilon ^{-r}\). Then

(with the same abuse of notation) ends the proof. \(\square \)

We also need to control the denominators in \(R_1^n\) and \(R_2^n\) to make sure that they are bounded away from zero with high probability. To this end, we need two auxiliary results, and in both cases we let \(i \ge k_n + 3\) and \(0<p<\frac{\beta }{2}\) be arbitrary. The first result deals with the variables \(\overline{V}_i^n(p)\) which we introduced before, and it will be used for the treatment of \(R_3^n\) later on as well. Its proof is omitted as it is essentially the same as the one for equation (9.4) in Todorov (2015) and exploits standard inequalities for discrete martingales.

Lemma 5.8

Let \(k_n \sim C_1 \Delta _n^{-\varpi }\) for some \(C_1 > 0\) and \(\varpi \in (0,1)\). Then for all \(1\le x<\frac{\beta }{p}\) we have

where the constant \(K_x\) might depend on x.

The second result deals with the set

and bounds its probability.

Lemma 5.9

For every fixed \(\iota > 0\) we have

where the constant \(K_\iota \) might depend on \(\iota \).

Proof

so

with \(|\overline{\sigma }|^p_i\) being defined as \(|\overline{\sigma \lambda }|^p_i\) but with \(\lambda = 1\). Now, it is a simple consequence of Conditions 5.1 and 5.3 that both \(|\overline{\sigma \lambda }|^p_i\) and \(|\overline{\sigma }|^p_i\) are uniformly bounded from above and below. Using \(\alpha _n\rightarrow 0\) and \(\Delta _n^{1/2}\rightarrow 0\) there then exists some \(n_0\in \mathbb {N}\) such that

for all \(n\ge n_0\). For these n,

from Lemma 5.8, with a choice of x arbitrarily close to \(\frac{\beta }{p}\). The claim follows. \(\square \)

Finally, we provide two lemmas that simplify the discussion of \(R_4^n\). The first one gives an alternative representation for the limiting variable \(L(p,u,\beta )\).

Lemma 5.10

It holds that

Proof

For the sake of simplicity, let us assume that the probability space can be enlarged even further to accommodate three independent random variables \(S^{(1)}\), \(S^{(2)}\) and \(S^{(3)}\), independent of \({\mathcal {G}}\), each with the same distribution as \(S_1\), i.e. the distribution at time 1 of a Lévy process with characteristic triplet (0, 0, F), where \(F(\textrm{d}x) = F(x) \textrm{d}x\) with \(F(x) = {A}|x|^{-(1+\beta )}\). Using standard properties of stable processes (see e.g. Section 1.2 in Samorodnitsky and Taqqu 1994), for constants \(\sigma _1, \sigma _2 \in \mathbb {R}\) we have

and for our original process \(S_t\) the stability relation

holds as well. Because the increments of the process \((S_t)_{t\ge \tau _{i-2}^n}\) are independent of \(\tau _{i}^n-\tau _{i-1}^n= \Delta _n \phi _i^n \lambda _{\tau _{i-2}^n}\), the conditional distribution of \(\Delta _{i}^nS\) given \(\tau _{i}^n-\tau _{i-1}^n=a\) equals that of \(a^{1/\beta }S^{(1)}\). Thus, for all Borel sets M we obtain, e.g.,

using our assumption that all moments of \((\phi _i^n)^{q}\) for \(q\in (-2,0)\) exist, as well as \(1/\beta -1>-1\). Put differently,

where \(\phi _{i-1}^n\), \(\phi _i^n\), \(S^{(1)}\), \(S^{(2)}\) are all independent of \(\mathcal {F}_{\tau _{i-2}^n}\) and of each other. Thus

after successive conditioning. \(\square \)
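The conditioning argument in this proof (fix the gaps, then use that an increment over a gap of length a is distributed as \(a^{1/\beta }S^{(1)}\)) can be checked by Monte Carlo. The Python sketch below is a hypothetical special case: \(\lambda \equiv 1\), no normalisation by \(\widetilde{V}_i^n(p)\), i.i.d. Gamma(3, 1/3) variables \(\phi _i^n\), and S normalised so that \(\mathbb {E}[\exp (\textrm{i}uS_1)]=\exp (-|u|^\beta )\), which amounts to a particular choice of A. The constant \(C_{p,\beta }\) in the representation of \(L(p,u,\beta )\) used in the proof of Theorem 3.5 is then replaced by 1. Stable draws use the Chambers–Mallows–Stuck method for the symmetric case; the function names are ours.

```python
import math
import random


def sym_stable(beta, rng):
    """Symmetric beta-stable draw with E[exp(iuS)] = exp(-|u|**beta),
    generated by the Chambers-Mallows-Stuck method (symmetric case)."""
    v = rng.uniform(-math.pi / 2, math.pi / 2)
    w = rng.expovariate(1.0)
    return (math.sin(beta * v) / math.cos(v) ** (1.0 / beta)
            * (math.cos((1.0 - beta) * v) / w) ** ((1.0 - beta) / beta))


def lemma_5_10_check(beta=1.5, u=0.7, n=50_000, seed=1):
    """Return (empirical E[cos(u*Y)], Monte Carlo mixture value) in a toy setting."""
    rng = random.Random(seed)
    emp_cf = 0.0
    mixture = 0.0
    for _ in range(n):
        phi1 = rng.gammavariate(3.0, 1.0 / 3.0)
        phi2 = rng.gammavariate(3.0, 1.0 / 3.0)
        # Given a gap Delta_n*phi, the increment is (Delta_n*phi)^(1/beta) * S; rescaling
        # by Delta_n/gap and dividing by Delta_n^(1/beta) leaves phi^(1/beta - 1) * S.
        y = (phi1 ** (1.0 / beta - 1.0) * sym_stable(beta, rng)
             - phi2 ** (1.0 / beta - 1.0) * sym_stable(beta, rng))
        emp_cf += math.cos(u * y)
        # Conditional characteristic function given (phi1, phi2), by stability.
        mixture += math.exp(-u ** beta * (phi1 ** (1.0 - beta) + phi2 ** (1.0 - beta)))
    return emp_cf / n, mixture / n


emp, mix = lemma_5_10_check()
print(f"empirical {emp:.4f}  vs  mixture {mix:.4f}")
```

Both numbers estimate \(\mathbb {E}[\exp (-u^\beta ((\phi ^{(1)})^{1-\beta }+(\phi ^{(2)})^{1-\beta }))]\); their agreement reflects the mixture structure of the limit.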

Using Lemma 5.10, it is clear that the treatment of \(R_4^n\) hinges on how well \(|\overline{\sigma \lambda }|^p_i\) can be approximated by \(|\sigma _{\tau _{i-2}^n}|^p |\lambda _{\tau _{i-2}^n}|^{\frac{p}{\beta }-p}\).

Lemma 5.11

Let \(k_n \sim C_1 \Delta _n^{-\varpi }\) for some \(C_1 > 0\) and \(\varpi \in (0,1)\). Then for all \(y>1\) we have

Proof

We start with a proof of

and

For the first claim, note that the decomposition

holds. We can now apply Lemma 5.6 to each of the three terms (to the third one in combination with the Cauchy–Schwarz inequality), using that \(\sigma \) and \(\lambda \) are bounded from below and above and that \(1< \beta < 2\) and \(p< \beta /2 < 1\) guarantee that the exponents lie between \(-1\) and 1. A similar reasoning works for the second claim.

We then obtain

easily, and the convexity of \(x\mapsto x^y\) on \(\mathbb {R}_+\) in the form

gives the claim. \(\square \)

5.3 Bounding the residual terms

Throughout this subsection, let \(u > 0\), \(0<p<\frac{\beta }{2}\) and \(\iota > 0\) be arbitrary but fixed, assume that \(k_n\sim C_1 \Delta _n^{-\varpi }\) for some \(C_1 > 0\) and \(\varpi \in (0,1)\), and write \(n = \Delta _n^{-1}\) for convenience.

Lemma 5.12

We have

where the constant K might depend on p, \(\beta \) and \(\iota \) but not on u.

Proof

We decompose \(r_i^1(u_n)=r_i^1(u_n) \mathbbm {1}_{\mathcal {C}_i^n}+r_i^1(u_n)\mathbbm {1}_{({\mathcal {C}_i^n})^C}\), and since the cosine is bounded, Lemma 5.9 gives for any \(i \ge k_n+3\)

Thus, using Assumption 5.3 we obtain

On the other hand, on \(({\mathcal {C}_i^n})^C\) the relation

holds. Since \(|\overline{\sigma \lambda }|^p_i\) is bounded from above and below by Assumption 5.1, \(\Delta _n^{-p/\beta }\widetilde{V}_i^n(p)\) is then bounded from above and below on \(({\mathcal {C}_i^n})^C\) as well, with constants possibly depending on p and \(\beta \). Let us use the notation from the proof of Lemma 5.7 and write

Using the boundedness of \(\Delta _n^{-p/\beta }\widetilde{V}_i^n(p)\) on \(({\mathcal {C}_i^n})^C\) and the inequality \(|\cos (x)-\cos (y)|\le 2|x-y|^p\) for all \(x,y\in \mathbb {R}\) and \(p \in (0,1]\) we have

We then get

using parts (c) and (g) of Lemma 5.5 as well as (5.6), which holds for any \(0< p < 2\). The claim now follows from the same reasoning as in (5.11), with an additional step of successive conditioning. \(\square \)
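The elementary inequality \(|\cos (x)-\cos (y)|\le 2|x-y|^p\), \(p\in (0,1]\), used in this proof follows from \(|\cos (x)-\cos (y)|\le \min (2,|x-y|)\): if \(|x-y|\le 1\) then \(|x-y|\le |x-y|^p\), and otherwise \(2\le 2|x-y|^p\). A randomised sanity check in Python (not a proof; the sampling ranges and the helper name are arbitrary choices of ours):

```python
import math
import random


def worst_cos_ratio(p, trials=20_000, seed=2):
    """Largest observed |cos x - cos y| / |x - y|**p over random pairs (x, y)."""
    rng = random.Random(seed)
    worst = 0.0
    for _ in range(trials):
        x = rng.uniform(-20.0, 20.0)
        # Gaps spread over several orders of magnitude, from 1e-6 to about 30.
        y = x + rng.choice((-1.0, 1.0)) * 10.0 ** rng.uniform(-6.0, 1.5)
        worst = max(worst, abs(math.cos(x) - math.cos(y)) / abs(x - y) ** p)
    return worst


for p in (0.1, 0.5, 1.0):
    print(p, worst_cos_ratio(p))  # each value should stay below 2
```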

Lemma 5.13

We have

where the constant K might depend on p, \(\beta \) and \(\iota \) but not on u.

Proof

We get

with the same arguments that led to (5.11). Arguing as in the previous proof, and using Assumption 5.1, the boundedness of \(\Delta _n^{-p/\beta }\widetilde{V}_i^n(p)\) on \(({\mathcal {C}_i^n})^C\) and \(\beta > 1\) (which ensures the existence of the relevant moments), we obtain

The expectation of the right hand side is bounded by \(K u \Delta _n^{1/2}\), using (5.10) and successive conditioning. We then obtain

as in the previous proof. \(\square \)

Lemma 5.14

We have

where the constant K might depend on p, \(\beta \) and \(\iota \) but not on u.

Proof

Again we obtain

as in (5.11), this time using the boundedness of \(x \mapsto \exp (-x)\) on the positive half-line. We then use a first-order Taylor expansion of the (random) function

(defined for \(x > 0\)) and get

for some \({\mathcal {F}}_{\tau _{i-2}^n}\)-measurable \(\epsilon _i^n\) between \(\Delta _n^{-p/\beta } \widetilde{V}_i^n(p)\) and \(|\overline{\sigma \lambda }|^p_i\mu _{p,\beta }^{p/\beta }\kappa _{p,\beta }^{p/\beta }\). An easy computation proves

for any positive \({\mathcal {F}}_{\tau _{i-2}^n}\)-measurable random variable X where the constant K does not depend on u. Using the boundedness of \(\Delta _n^{-p/\beta }\widetilde{V}_i^n(p)\) and \(|\overline{\sigma \lambda }|^p_i\mu _{p,\beta }^{p/\beta }\kappa _{p,\beta }^{p/\beta }\) again we get

where the last line holds by Lemma 5.8 and (5.8) plus the definition of \(\alpha _n\). \(\square \)

Lemma 5.15

We have

where the constant K might depend on p and \(\beta \) but not on u.

Proof

Recall the function \(f^n_{i,u}\) from (5.12) and set \(\widetilde{r}_{i,n}=(|\overline{\sigma \lambda }|^p_i-|\sigma _{\tau _{i-2}^n}|^p|\lambda _{\tau _{i-2}^n}|^{\frac{p}{\beta }-p})\). Then

In the sequel, we prove the same rate of convergence for all three terms on the right-hand side. Starting with (5.14), from the definition of \(r_i^4(u)\) we have

using the independence of \(\phi _{i-1}^n\) and \(\phi _{i}^n\) from \({\mathcal {F}}_{\tau _{i-2}^n}\). A second-order Taylor expansion, possible by the usual boundedness assumptions, now gives

for some \(\delta _{i,n}\) between \(\mu _{p,\beta }^{p/\beta }\kappa _{p,\beta }^{p/\beta }|\overline{\sigma \lambda }|^p_i\) and \(\mu _{p,\beta }^{p/\beta }\kappa _{p,\beta }^{p/\beta }| \sigma _{\tau _{i-2}^n}|^p|\lambda _{\tau _{i-2}^n}|^{\frac{p}{\beta }-p}\). Now it is an easy consequence of (5.13) and Lemma 5.11 together with the reasoning from (5.11) that the expectation in (5.14) is bounded by \(K u^\beta k_n\) with K as in the statement of the lemma.

For (5.16), boundedness of all processes involved gives

by (5.13) whereas Lemma 5.11 proves

Thus

Finally, for the treatment of (5.15) we have to be a little more specific. A simple computation proves

for some K as above. Thus, setting \(\Xi _i=\widetilde{r}_{i,n}-\mathbb {E}_{i-k_n-3} \left[ \widetilde{r}_{i,n}\right] \) we can bound (5.15) by

An application of the Cauchy–Schwarz inequality bounds (5.18) by the product of

and

Lemma 5.11 together with (5.17) proves

and from Lemma 5.6 we have

with the same reasoning as when establishing (5.10). Boundedness of \(L(p,u,\beta )\) and \(N_n(1)\le C \Delta _n^{-1}\) now prove that (5.18) is bounded by \(K u^\beta k_n\).

For (5.19) we use an argument involving discrete martingales. We first change the upper summation bound from \(N_n(1)\) to \(N_n(1)+ 2k_n +5\), as the corresponding error term is of order \(K u^\beta k_n\) by boundedness of \(\sigma \) and \(\lambda \), similar to the one from (5.18). The martingale argument is easiest to explain if we first pretend that the factors \(K u^\beta L(p,u,\beta ) |\sigma _{\tau _{i-k_n-3}^n}|^{-p}|\lambda _{\tau _{i-k_n-3}^n}|^{p-\frac{p}{\beta }}\) are not present. We write

with

\(j=1, \ldots , k_n+3.\) It can be shown that

holds for every \(1 \le \ell < i\), using the fact that by construction one knows at time \(\tau _{(i-1)(k_n+3)+(j-1)}^n\) whether the event \(\{\tau _{(i-1)(k_n+3)}^n \le 1 \}\) has happened or not. The latter event is equivalent to \(\{i-1 \le \lfloor N_n(1)/(k_n+3)\rfloor \}\), so after conditioning on \({\mathcal {F}}_{(i-1)(k_n+3)+(j-1)}^n\) the claim follows from \(\mathbb {E}_{r-k_n-3}^n[\Xi _{r}] = 0\) for every r.

Thus, by (5.20), the Cauchy–Schwarz inequality and \(\lfloor N_n(1)/(k_n+3)\rfloor +1 \le Kn/k_n\), we obtain

As the sum over the residual terms in (5.21) has at most \(k_n+2\) elements we obtain

as desired. If we now include \(K u^\beta L(p,u,\beta ) |\sigma _{\tau _{i-k_n-3}^n}|^{-p}|\lambda _{\tau _{i-k_n-3}^n}|^{p-\frac{p}{\beta }}\), we just get an additional factor \(u^{\beta }\) as usual. This is due to boundedness of \(\sigma \) and \(\lambda \) again (and of the function L) plus the fact that measurability w.r.t. \({\mathcal {F}}_{\tau _{i-k_n-3}^n}\) keeps the martingale property from above intact. \(\square \)

Lemma 5.16

We have

where the constant K might depend on p, \(\beta \) and \(\iota \) but not on u.

Proof

As usual we have

and for the analogous sum involving \(\mathbbm {1}_{({\mathcal {C}_i^n})^C}\), as in the previous proof, we may change the upper summation index to \(N_n(1)+2\) without loss of generality. Now, note that by Lemma 5.10 and using the same arguments for \(z_i(u_n)\) we have

Thus for all \(i,j \ge k_n +3\) with \(j-i \ge 2\) we have

where we have used that \(\mathbbm {1}_{\{N_n(1)+2\ge j\}},\mathbbm {1}_{\{N_n(1)+2\ge i\}},\mathbbm {1}_{({\mathcal {C}_i^n})^C}\) and \(\mathbbm {1}_{(\mathcal {C}_j^n)^C}\) are all \(\mathcal {F}_{\tau _{j-2}^n}^n\)-measurable. Using \(2|xy|\le x^2+y^2\) and \(N_n(1)\le C \Delta _n^{-1}\) we then get

Now, with (5.9) plus the standard inequalities \(|\cos (x)-\cos (y)|^2\le 4|x-y|^p\) for all \(x,y\in \mathbb {R}\) and \(|\exp (-x)-\exp (-y)|^2\le |x-y|^p\) for all \(x,y\ge 0\), both valid for every \(p \in (0,2]\), we obtain

and part (a) of Lemma 5.5 together with \(\mathbb {E}\left| (\phi _{i}^n)^{1-\beta }+(\phi _{i-1}^n)^{1-\beta }\right| <\infty \) and the \({\mathcal {F}}_{i-2}^n\)-measurability of the other terms prove that the term above is bounded by

Let us for a moment only discuss the first term. On \(({\mathcal {C}_i^n})^C\), and using Condition 5.1, \(\Delta _n^{-p/\beta }\widetilde{V}_i^n(p)\) as well as all quantities involving \(\sigma \) and \(\lambda \) are bounded from above and below by positive constants. Thus, together with \(|x^q-y^q| \le q|\max (x,y)^{q-1}||x-y|\) for \(x,y\ge 0\) and \(q\ge 1\), we get

With a similar argument for the second term we then obtain

Now, we have

using Lemma 5.8, (5.7) and Lemma 5.11. A similar result holds with the exponent being replaced by \(\beta - \iota > 1\). The claim now follows easily. \(\square \)
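In the same spirit, the squared inequalities used in this proof follow from \(|\cos (x)-\cos (y)|\le \min (2,|x-y|)\) and, for \(x,y\ge 0\), \(|\exp (-x)-\exp (-y)|\le \min (1,|x-y|)\), combined with \(\min (1,t)^2\le t^p\) for \(t\ge 0\) and \(p\in (0,2]\); the exponential bound needs nonnegative arguments. A quick randomised check in Python, again with an arbitrary hypothetical helper:

```python
import math
import random


def worst_squared_ratios(p, trials=20_000, seed=3):
    """Largest observed (cos x - cos y)^2 / |x-y|^p and (e^-x - e^-y)^2 / |x-y|^p, x, y >= 0."""
    rng = random.Random(seed)
    worst_cos = 0.0
    worst_exp = 0.0
    for _ in range(trials):
        x = rng.uniform(0.0, 20.0)
        y = x + 10.0 ** rng.uniform(-6.0, 1.5)  # y > x >= 0
        gap_p = abs(x - y) ** p
        worst_cos = max(worst_cos, (math.cos(x) - math.cos(y)) ** 2 / gap_p)
        worst_exp = max(worst_exp, (math.exp(-x) - math.exp(-y)) ** 2 / gap_p)
    return worst_cos, worst_exp


for p in (0.25, 1.0, 2.0):
    print(p, worst_squared_ratios(p))  # expect first entry <= 4, second <= 1
```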

5.4 Proof of the main theorems

5.4.1 Proof of Theorem 3.1

As discussed before, we may assume Conditions 5.1 and 5.3 to hold. First, we have

and it is a simple consequence of (5.3), the decomposition in (5.4) and Lemmas 5.12 to 5.16 that

holds. Note that all bounds in Lemmas 5.12 to 5.16 converge to zero, since \(\Delta _n \rightarrow 0\), \(k_n \rightarrow \infty \) and \(k_n \Delta _n \rightarrow 0\) by assumption.

The proof of

follows along the lines of Lemma 5.16. We may first change the upper summation index to \(N_n(1)+ 2\) which does not change anything asymptotically because of (5.3), and we then have

for all \(i,j \ge k_n +3\) with \(j-i \ge 2\) using Lemma 5.10. Thus, we obtain

and the claim follows from (5.3) again. \(\square \)

5.4.2 Proof of Remark 3.2

From the definition of \({\hat{\beta }}(p, u_n, v_n)\) it follows easily that the claim \({\hat{\beta }}(p, u_n, v_n) \le 2\) is equivalent to

where we have used the shorthand notation \(a_i=\frac{\widetilde{\Delta _i^n X}-\widetilde{\Delta _{i-1}^nX} }{(\widetilde{V}_i^n(p))^{1/p}}\). A sufficient condition for (5.22) is \(\rho ^2(1-\cos (x)) \le 1-\cos (\rho x)\) for all \(x\in \mathbb {R}\) which itself is equivalent to

Using properties of the cosine and inserting \(\rho =1/2\) we note \(g_{\frac{1}{2}}(x)=g_{\frac{1}{2}}(-x)\) and \(g_{\frac{1}{2}}(x) = g_{\frac{1}{2}}(x+4\pi )\). For (5.23) to hold it then suffices to show \(g_{\frac{1}{2}}(x)\ge 0\) for all \(x\in [0,2\pi ]\). So let \(x\in [0,2\pi ]\). Then

by properties of the trigonometric functions. The claim follows from \(g_{\frac{1}{2}}(0) = 0\). \(\square \)
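For completeness, here is a direct verification of \(g_{\frac{1}{2}}\ge 0\) on all of \(\mathbb {R}\), offered as an alternative to the argument above. It assumes, as the sufficient condition stated above indicates, that \(g_\rho (x) = 1-\cos (\rho x)-\rho ^2(1-\cos (x))\), so that (5.23) reads \(g_\rho \ge 0\). Using \(1-\cos (z) = 2\sin ^2(z/2)\) and \(\sin (x/2) = 2\sin (x/4)\cos (x/4)\),

$$\begin{aligned} g_{\frac{1}{2}}(x) = 2\sin ^2\left( \tfrac{x}{4}\right) - \tfrac{1}{2}\sin ^2\left( \tfrac{x}{2}\right) = 2\sin ^2\left( \tfrac{x}{4}\right) \left( 1-\cos ^2\left( \tfrac{x}{4}\right) \right) = 2\sin ^4\left( \tfrac{x}{4}\right) \ge 0, \end{aligned}$$

which also makes the symmetry \(g_{\frac{1}{2}}(x)=g_{\frac{1}{2}}(-x)\) and the period \(4\pi \) apparent.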

5.4.3 Proof of Theorem 3.3

We will assume throughout that Assumptions 5.1 and 5.3 are in place, and we set \(k_n\sim C_1 \Delta _n^{-\varpi }\) for some \(C_1> 0\) and some \(\varpi \in (0,1)\) as well as \(u_n\sim C_2 \Delta _n^{\varrho }\) for some \(C_2 > 0\) and \(\varrho \in (0,1)\).

Lemma 5.17

Under the conditions

we have

Proof

The claim follows from a tedious but straightforward computation, using (5.3) and Lemmas 5.12 to 5.16 as well as the conditions on \(k_n\) and \(u_n\). \(\square \)

Lemma 5.18

Let \(u_n\) be as above and set \(v_n=\rho u_n\) for some \(\rho > 0\). Then, for every fixed \(i > 2\) we have

and the same result holds with interchanged roles of \(u_n\) and \(v_n\).

Proof

Using the shorthand notation \(\widehat{\Delta _i^nS} = \lambda _{\tau _{i-2}^n}^{-1/\beta +1}\widetilde{\Delta _{i}^nS}\) and the equality \(\cos (x)\cos (y)=\frac{1}{2}\left( \cos (x-y)+\cos (x+y)\right) \) we have

With the same notation as in the proof of Lemma 5.10 we obtain

as the (\(\mathcal {F}_{\tau _{i-2}^n}\)-conditional) distribution, and in the same manner

We see in particular that exchanging the roles of \(u_n\) and \(v_n\) is irrelevant to the distributions. Then, with Lemma 5.10 and its proof,

We now have for example

for some \(\epsilon _{i}^n\in [0,u_n^\beta |1-\rho |^\beta C_{p,\beta } ((\phi _i^n)^{1-\beta }+(\phi _{i-1}^n)^{1-\beta })]\), and since \(u_n \rightarrow 0\) and \(\mathbb {E}[(\phi _i^n)^{1-\beta }] = M < \infty \) hold, dominated convergence gives

The same argument for the other terms, including the usage of \(u_n \rightarrow 0\) when dealing with the product of the two expectations, gives

Similar arguments lead to

\(\square \)

Lemma 5.19

Let \(u_n\) be as above and set \(v_n=\rho u_n\) for some \(\rho > 0\). Then the \({\mathcal {F}}\)-stable convergence in law

holds, where (X, Y) is mixed normally distributed with mean 0 and covariance matrix \(\mathcal {C}\) consisting of the elements \(\mathcal {C}_{ij}(1)\) given by

Proof

We set

and the uniform boundedness of \(\overline{z}_i(\cdot )\) together with \(\Delta _nu_n^{-\beta } \rightarrow 0\) shows that

are asymptotically equivalent. We note that \(N_n(t)+1\) is an \((\mathcal {F}_{\tau _{i}^n})_{i\ge 1}\)-stopping time for each \(t > 0\), and, therefore, to apply Theorem 2.2.15 in Jacod and Protter (2012) it is sufficient to show that for \(q=\frac{2}{1-\varrho \beta }+2>2\), \(\eta _i^n=\zeta _i^n-\mathbb {E}^n_{i-1}\left[ \zeta _i^n\right] +\mathbb {E}^n_{i}\left[ \zeta _{i+1}^n\right] \) and any fixed \(t > 0\)

hold, where M is either one of the Brownian motions W, \(\widetilde{W}\) or \({\overline{W}}\) or a bounded martingale orthogonal to all of these Brownian motions. Note that Theorem 2.2.15 in Jacod and Protter (2012) is stated as a functional result; our claim then simply follows by choosing \(t=1\).

First note that Lemma 5.10 gives \(\mathbb {E}^n_{i-1}\left[ \zeta _{i+1}^n\right] =(0,0)\) and therefore, by definition, \(\mathbb {E}^n_{i-1}\left[ \eta _i^n\right] = (0,0)\) as well. Hence (5.24) holds. Also, \(\varrho <\frac{1}{\beta }\) gives

Thus, the uniform boundedness of \(\overline{z}_i(\cdot )\) and Assumption 5.3 give

which proves (5.26). To show (5.25) we first recall \(\mathbb {E}^n_{i-1}\left[ \eta _i^n\right] =(0,0)\) and then a simple calculation yields

using iterated expectations, \(\mathbb {E}^n_{i-1}\left[ \zeta _{i+1}^n\right] =(0,0)\) and the fact that the distribution of \(\mathbb {E}^n_{i}\left[ \zeta _{i+1}^{n,j}\right] \mathbb {E}^n_{i}\left[ \zeta _{i+1}^{n,k}\right] \) is independent of \(\mathcal {F}_{\tau _{i-1}^n}\). We then prove

and that the limits on the right-hand side exist and are the same, irrespective of the choice of m. This would result in

in each case for an arbitrary m.

We give the arguments for the first convergence result above in detail; the other ones can be treated in exactly the same way. We set \(X_i^{n,1}=\mathbb {E}_{i}^n\left[ ({\zeta _{i+1}^n})^T({\zeta _{i+1}^n})\right] \) and then prove

as well as

Lemma 2.2.12 in Jacod and Protter (2012) (plus the usual asymptotic negligibility when adding finitely many summands) finally gives the claim.

Now, note first that the distribution of \(X_i^{n,1}\) is independent of \(\mathcal {F}_{\tau _{i-1}^n}\). Therefore

(5.29) is then an easy consequence of

where we used (5.3) and Lemma 5.18 to prove that the limit on the right-hand side exists. To show (5.30) we use Jensen's inequality to obtain

and uniform boundedness of \(\overline{z}_i(\cdot )\) as well as \(N_n(t) \le C \Delta _n^{-1}\) give

using \(\Delta _nu_n^{-\beta }\rightarrow 0\) along with Lemma 5.18 in the last step. The proof of (5.25) can then be finished by a tedious but straightforward computation, combining (5.28) with Lemma 5.18 again.

Finally, to prove (5.27) we use Theorem 4.34 in Chapter III of Jacod and Shiryaev (2003). We set for \(k_n+3\le i \le N_n(t)\) and \(u\ge \tau _{i-2}^n\):

i.e. \((\mathcal {H}_u)_{u\ge \tau _{i-2}^n }\) is the filtration generated by \(\mathcal {H}\) and \(\sigma \left( S_r: u \ge r \ge \tau _{i-2}^n \right) \). Now \((S_u)_{u\ge \tau _{i-2}^n}\) is a process with independent increments w.r.t. \(\sigma \left( S_r: r\ge \tau _{i-2}^n \right) \). For all \(u\ge \tau _{i-2}^n\) we set \(K_u= \mathbb {E}\left[ \zeta _i|\mathcal {H}_u\right] \) and note that \(K_{\tau _{i}^n} = \zeta _i\) because \(\zeta _i\) is \(\mathcal {H}_{\tau _i^n}\)-measurable. Then with the aforementioned Theorem 4.34 we have

where \((H_u)_{u\ge \tau _{i-2}^n}\) is a predictable process. Then

where we used that the martingale \((S_u)_{u\ge 0}\) is orthogonal to M in all cases. \(\square \)

Corollary 5.20

Suppose that the conditions in Lemma 5.17 hold and choose \(v_n=\rho u_n\) for some \(\rho > 0\). Then we have the \({\mathcal {F}}\)-stable convergence in law

where \((X',Y')\) is jointly normally distributed with mean 0 and covariance matrix \(\mathcal {C'}\) consisting of

Proof

Using Lemma 5.17 we have

and similarly for \(v_n\), and from several applications of (5.3) together with \(k_n \Delta _n \rightarrow 0\) we also get

Then Lemma 5.19 together with the properties of stable convergence in law yields the claim. \(\square \)

5.4.4 Proof of Theorem 3.5

Using \(L(p,u_n,\beta ) = \mathbb {E}\left[ \exp \left( -u_n^\beta C_{p,\beta }((\phi ^{(1)})^{1-\beta }+(\phi ^{(2)})^{1-\beta })\right) \right] \), a Taylor expansion of the function

with gradient

around \((L(p,u_n,\beta ),L(p,v_n,\beta ))\) gives

for some \(\eta _1^n\) between \(\widetilde{L}^n(p,u_n)\) and \(L(p,u_n,\beta )\) and some \(\eta _2^n\) between \(\widetilde{L}^n(p,v_n)\) and \(L(p,v_n,\beta )\).

As before, we have

for some \(\varepsilon _1^n\) between 0 and \(C_{p,\beta }u_n^\beta ((\phi ^{(1)})^{1-\beta }+(\phi ^{(2)})^{1-\beta })\). Obviously, \(\varepsilon _1^n \rightarrow 0\) almost surely, so by dominated convergence

We now prove

from which, together with Corollary 5.20 and Slutsky’s lemma, the asymptotic negligibility of (5.34) follows. A similar result obviously holds for the term involving \(\eta _2^n\) and \(v_n\). Using (5.35), we get (5.36) from

To prove the latter claim, we use \(1-\eta _1^n = (1-L(p,u_n,\beta )) + (L(p,u_n,\beta )-\eta _1^n)\). Here \(1-L(p,u_n,\beta )\) is of order \(u_n^\beta \) by (5.35), while \(|L(p,u_n,\beta )-\eta _1^n| \le |L(p,u_n,\beta )-\widetilde{L}^n(p,u_n)|\) is at most of order \(\Delta _n^{1/2} u_n^{\beta /2}\) by Corollary 5.20. Since \(\Delta _n^{1/2} u_n^{\beta /2}/u_n^{\beta } = (\Delta _nu_n^{-\beta })^{1/2} \rightarrow 0\), the second summand is negligible compared to the first, and Slutsky’s lemma then yields the claim.

In order to prove the convergence of the bias term (5.31) towards zero, we use that for \(\varepsilon _1^n\) as above there exists some \(\varepsilon _{2}^n\) between \(\mathbb {E}[\exp (-\varepsilon _{1}^n)((\phi ^{(1)})^{1-\beta }+(\phi ^{(2)})^{1-\beta })]\) and \(\kappa _{\beta ,\beta }\) such that

Clearly \(\varepsilon _2^n \rightarrow \kappa _{\beta , \beta }\), and with \(\varepsilon _3^n\) between 0 and \(\varepsilon _1^n\) we obtain for an arbitrary \(\iota >0\)

where we used \(\varepsilon _1^n ((\phi ^{(1)})^{1-\beta }+(\phi ^{(2)})^{1-\beta }) \le u_n^\beta C_{p,\beta } ((\phi ^{(1)})^{1-\beta }+(\phi ^{(2)})^{1-\beta })^2\) and dominated convergence via part (c) of Assumption 2.3. The same arguments hold for \(\log (-(L(p,v_n,\beta )-1))\), so

Now, \(\frac{1}{3\beta }<\varrho \) and \(N_n(1)\le C \Delta _n^{-1}\) yield \(u_n^{\frac{3}{2}\beta -\iota }\sqrt{N_n(1)}\rightarrow 0\) almost surely for \(\iota > 0\) small enough, and therefore (5.31) converges in probability to zero.
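The exponent bookkeeping behind this step can be checked with a few lines of Python. The sketch assumes, hypothetically, that \(u_n=\Delta _n^{\varrho }\) exactly (this parametrisation is not stated in this part of the text), in which case \(N_n(1)\le C\Delta _n^{-1}\) gives \(u_n^{\frac{3}{2}\beta -\iota }\sqrt{N_n(1)}\le \sqrt{C}\,\Delta _n^{\varrho (\frac{3}{2}\beta -\iota )-\frac{1}{2}}\), and an admissible \(\iota >0\) exists exactly when \(\varrho >\frac{1}{3\beta }\). Under the same assumption, \(\Delta _nu_n^{-\beta }=\Delta _n^{1-\varrho \beta }\rightarrow 0\) additionally requires \(\varrho <1/\beta \). The names `max_iota` and `upper_bound` are illustrative.

```python
import math


def max_iota(beta: float, rho: float) -> float:
    """Supremum of admissible iota under the hypothetical choice u_n = Delta_n**rho.

    With N_n(1) <= C / Delta_n,
        u_n**(1.5*beta - iota) * sqrt(N_n(1)) <= sqrt(C) * Delta_n**(rho*(1.5*beta - iota) - 0.5),
    which tends to 0 as Delta_n -> 0 iff the exponent is positive,
    i.e. iff iota < 1.5*beta - 1/(2*rho).
    """
    return 1.5 * beta - 1.0 / (2.0 * rho)


def upper_bound(delta: float, beta: float, rho: float, iota: float, c: float = 1.0) -> float:
    """Right-hand side sqrt(C) * Delta_n**(rho*(1.5*beta - iota) - 0.5) of the bound above."""
    return math.sqrt(c) * delta ** (rho * (1.5 * beta - iota) - 0.5)


if __name__ == "__main__":
    beta = 1.0
    for rho in (0.3, 0.5):  # 1/(3*beta) = 0.333... separates the two cases
        sup = max_iota(beta, rho)
        if sup > 0:
            print(f"rho={rho}: admissible iota < {sup:.4f}")
        else:
            print(f"rho={rho}: no admissible iota > 0")
    for delta in (1e-2, 1e-4, 1e-8):
        print(f"Delta_n={delta:.0e}: bound={upper_bound(delta, beta, 0.5, 0.25):.4g}")
```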

The claim then follows from the asymptotics of (5.32) and (5.33), which we derive using (5.35) and Corollary 5.20. The form of the limiting variance is then obtained by a direct computation. \(\square \)

5.4.5 Proof of Corollary 3.6

The result follows immediately from Theorem 3.5 by Slutsky’s lemma, since \(u_n^{\beta /2}\sqrt{N_n(1)} \rightarrow \infty \) almost surely; the latter is a consequence of (5.3) and \(\Delta _n u_n^{-\beta } \rightarrow 0\). \(\square \)
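The divergence of \(u_n^{\beta /2}\sqrt{N_n(1)}\) can be read off from the factorisation below. The first factor equals \((\Delta _nu_n^{-\beta })^{-1/2}\) and therefore tends to infinity; for the second factor we assume, as a reading of (5.3) that is not reproduced here, that \(\Delta _nN_n(1)\) stays almost surely bounded away from zero.

```latex
u_n^{\beta/2}\sqrt{N_n(1)}
  = \left(\frac{u_n^{\beta}}{\Delta_n}\right)^{1/2}\bigl(\Delta_n N_n(1)\bigr)^{1/2}
  = \bigl(\Delta_n u_n^{-\beta}\bigr)^{-1/2}\bigl(\Delta_n N_n(1)\bigr)^{1/2}.
```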

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

References

Basse-O’Connor, A., Heinrich, C., & Podolskij, M. (2018). On limit theory for Lévy semi-stationary processes. Bernoulli, 24(4A), 3117–3146.

Basse-O’Connor, A., Lachièze-Rey, R., & Podolskij, M. (2017). Power variation for a class of stationary increments Lévy driven moving averages. Annals of Probability, 45(6B), 4477–4528.

Bibinger, M. (2020). Cusum tests for changes in the Hurst exponent and volatility of fractional Brownian motion. Statistics and Probability Letters, 161(108725), 9.

Bibinger, M., & Trabs, M. (2020). Volatility estimation for stochastic PDEs using high-frequency observations. Stochastic Processes and their Applications, 130(5), 3005–3052.

Bibinger, M., & Vetter, M. (2015). Estimating the quadratic covariation of an asynchronously observed semimartingale with jumps. Annals of the Institute of Statistical Mathematics, 67(4), 707–743.

Brouste, A., & Fukasawa, M. (2018). Local asymptotic normality property for fractional Gaussian noise under high-frequency observations. Annals of Statistics, 46(5), 2045–2061.

Chong, C. (2020). High-frequency analysis of parabolic stochastic PDEs. Annals of Statistics, 48(2), 1143–1167.

Fukasawa, M., & Rosenbaum, M. (2012). Central limit theorems for realized volatility under hitting times of an irregular grid. Stochastic Processes and their Applications, 122(12), 3901–3920.

Hayashi, T., Jacod, J., & Yoshida, N. (2011). Irregular sampling and central limit theorems for power variations: The continuous case. Annales de l’Institut Henri Poincaré, Probabilités et Statistiques, 47(4), 1197–1218.

Heiny, J., & Podolskij, M. (2021). On estimation of quadratic variation for multivariate pure jump semimartingales. Stochastic Processes and their Applications, 138, 234–254.

Jacod, J., & Protter, P. E. (2012). Discretization of processes. Stochastic modelling and applied probability. Springer.

Jacod, J., & Shiryaev, A. N. (2003). Limit theorems for stochastic processes, 2nd edn. Grundlehren der Mathematischen Wissenschaften [Fundamental Principles of Mathematical Sciences] (Vol. 288). Berlin: Springer.

Jacod, J., & Todorov, V. (2018). Limit theorems for integrated local empirical characteristic exponents from noisy high-frequency data with application to volatility and jump activity estimation. The Annals of Applied Probability, 28, 511–576.

Kaino, Y., & Uchida, M. (2021). Parametric estimation for a parabolic linear SPDE model based on discrete observations. Journal of Statistical Planning and Inference, 211, 190–220.

Martin, O., & Vetter, M. (2019). Laws of large numbers for Hayashi–Yoshida-type functionals. Finance and Stochastics, 23(3), 451–500.

Mykland, P. A., & Zhang, L. (2012). The econometrics of high-frequency data. Statistical methods for stochastic differential equations, Monogr. Statist. Appl. Probab. (Vol. 124, pp. 109–190). CRC Press.

Samorodnitsky, G., & Taqqu, M. S. (1994). Stable non-Gaussian random processes. Stochastic modeling. Stochastic models with infinite variance. Chapman & Hall.

Todorov, V. (2015). Jump activity estimation for pure-jump semimartingales via self-normalized statistics. Annals of Statistics, 43(4), 1831–1864.

Todorov, V. (2017). Testing for time-varying jump activity for pure jump semimartingales. Annals of Statistics, 45(3), 1284–1311.

Todorov, V., & Tauchen, G. (2012). Realized Laplace transforms for pure-jump semimartingales. Annals of Statistics, 40(2), 1233–1262.

Vetter, M., & Zwingmann, T. (2017). A note on central limit theorems for quadratic variation in case of endogenous observation times. Electronic Journal of Statistics, 11(1), 963–980.

Acknowledgements

The authors would like to thank two anonymous referees for their very valuable reports, which helped to improve this work considerably.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Rights and permissions

This article is published under an open access license.

About this article

Cite this article

Theopold, A., Vetter, M. On the estimation of the jump activity index in the case of random observation times. Jpn J Stat Data Sci 6, 457–503 (2023). https://doi.org/10.1007/s42081-023-00187-1
