Abstract

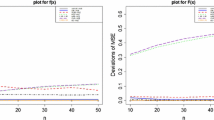

This article compares methods for estimating the probability density function and the cumulative distribution function of the Gompertz distribution. The following estimation methods are considered: maximum likelihood estimators, uniformly minimum variance unbiased estimators, least squares estimators, weighted least squares estimators, percentile estimators, maximum product of spacings estimators, Cramér–von-Mises estimators, and Anderson–Darling estimators. Monte Carlo simulations are performed to compare the behavior of these estimators for different sample sizes. Finally, one real data set and one simulated data set are analyzed for illustrative purposes.

Acknowledgements

The authors would like to thank the Editor and the referees for their careful reading and for providing constructive comments that greatly improved the presentation of the article.

Appendix

Suppose that \(X_1, X_2, \ldots , X_n\) denote a random sample of size \(n\) drawn from the Gompertz distribution as defined in (1.1). We then estimate the unknown parameters of the \(G(c, \theta )\) distribution using the following estimation methods.

1.1 Maximum Likelihood Estimation

The log-likelihood function of \((c, \theta )\) is given by

The corresponding MLEs \((\hat{c},\hat{\theta })\) of \((c , \theta )\) are obtained by simultaneously solving the following non-linear equations
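As a minimal sketch of this step, assume the Gompertz CDF \(F(x)=1-e^{-\frac{\theta }{c}(e^{cx}-1)}\), which is the form implied by the \(\Delta \) expressions in Sect. 1.5 (definition (1.1) is not reproduced here). Under that assumption the log-likelihood is \(n\log \theta +c\sum x_i-\frac{\theta }{c}\sum (e^{cx_i}-1)\), the score equation in \(\theta \) has the closed-form solution \(\theta =nc/\sum (e^{cx_i}-1)\) for fixed \(c\), and the remaining one-dimensional problem in \(c\) can be handled numerically. The function names, the search bracket, and the golden-section search are illustrative choices, not the authors' procedure.

```python
import math
import random

def gompertz_loglik(c, theta, xs):
    """Log-likelihood under the ASSUMED density
    f(x) = theta * exp(c*x) * exp(-(theta/c) * (exp(c*x) - 1)), x > 0."""
    n = len(xs)
    s = sum(math.expm1(c * x) for x in xs)  # sum of exp(c*x) - 1
    return n * math.log(theta) + c * sum(xs) - (theta / c) * s

def theta_given_c(c, xs):
    """Root of the theta-score equation for fixed c."""
    return len(xs) * c / sum(math.expm1(c * x) for x in xs)

def gompertz_mle(xs, c_lo=1e-6, c_hi=10.0, tol=1e-10):
    """Maximize the profile log-likelihood over c in [c_lo, c_hi]
    (hypothetical bracket) by golden-section search."""
    def profile(c):
        return gompertz_loglik(c, theta_given_c(c, xs), xs)
    g = (math.sqrt(5.0) - 1.0) / 2.0
    a, b = c_lo, c_hi
    while b - a > tol:
        c1, c2 = b - g * (b - a), a + g * (b - a)
        if profile(c1) < profile(c2):
            a = c1  # maximizer lies to the right of c1
        else:
            b = c2  # maximizer lies to the left of c2
    c_hat = 0.5 * (a + b)
    return c_hat, theta_given_c(c_hat, xs)

def gompertz_sample(n, c, theta, rng):
    """Inverse-CDF sampling: x = log(1 - (c/theta) * log(1 - u)) / c."""
    return [math.log(1.0 - (c / theta) * math.log(1.0 - rng.random())) / c
            for _ in range(n)]

rng = random.Random(0)
data = gompertz_sample(500, c=1.0, theta=0.5, rng=rng)
c_hat, theta_hat = gompertz_mle(data)
print(c_hat, theta_hat)
```

By the invariance property of maximum likelihood, plugging \((\hat{c},\hat{\theta })\) into the assumed PDF and CDF gives the corresponding MLEs of those functions.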

1.2 Least Squares Estimation

The LSEs \((\tilde{c}_{ls},\tilde{\theta }_{ls})\) of \((c , \theta )\) can be obtained by minimizing

with respect to c and \(\theta \).
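A minimal sketch of such a criterion, assuming it takes the usual least squares form \(\sum _{i=1}^{n}\left[ F(x_{(i:n)}\mid c,\theta )-\frac{i}{n+1}\right] ^2\) and that the Gompertz CDF is \(F(x)=1-e^{-\frac{\theta }{c}(e^{cx}-1)}\) (the form implied by the \(\Delta \) expressions in Sect. 1.5). The function names are hypothetical, and any numerical optimizer could be used for the minimization.

```python
import math

def gompertz_cdf(x, c, theta):
    """ASSUMED CDF: F(x) = 1 - exp(-(theta/c) * (exp(c*x) - 1))."""
    return -math.expm1(-(theta / c) * math.expm1(c * x))

def gompertz_quantile(p, c, theta):
    """Inverse of the assumed CDF."""
    return math.log1p(-(c / theta) * math.log1p(-p)) / c

def lse_objective(c, theta, xs):
    """Sum of squared gaps between F(x_(i)) and i / (n + 1)."""
    n = len(xs)
    return sum((gompertz_cdf(x, c, theta) - i / (n + 1)) ** 2
               for i, x in enumerate(sorted(xs), start=1))

# Idealized data placed exactly at the i/(n+1) quantiles of G(0.8, 0.3):
n, c0, theta0 = 9, 0.8, 0.3
xs = [gompertz_quantile(i / (n + 1), c0, theta0) for i in range(1, n + 1)]
lse_at_truth = lse_objective(c0, theta0, xs)        # essentially zero
lse_elsewhere = lse_objective(c0, 2 * theta0, xs)   # strictly positive
print(lse_at_truth, lse_elsewhere)
```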

1.3 Weighted Least Squares Estimation

The WLSEs \((\tilde{c}_{wls},\tilde{\theta }_{wls})\) of \((c , \theta )\) can be obtained by minimizing

with respect to c and \(\theta \).
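A minimal sketch, assuming the weighted criterion uses the customary weights \(w_i=\frac{(n+1)^2(n+2)}{i(n-i+1)}\), the reciprocal variances of the uniform order statistics, and the Gompertz CDF \(F(x)=1-e^{-\frac{\theta }{c}(e^{cx}-1)}\) implied by the \(\Delta \) expressions in Sect. 1.5. The function names are hypothetical.

```python
import math

def gompertz_cdf(x, c, theta):
    """ASSUMED CDF: F(x) = 1 - exp(-(theta/c) * (exp(c*x) - 1))."""
    return -math.expm1(-(theta / c) * math.expm1(c * x))

def gompertz_quantile(p, c, theta):
    """Inverse of the assumed CDF."""
    return math.log1p(-(c / theta) * math.log1p(-p)) / c

def wlse_objective(c, theta, xs):
    """Weighted sum of squared gaps between F(x_(i)) and i / (n + 1)."""
    n = len(xs)
    total = 0.0
    for i, x in enumerate(sorted(xs), start=1):
        weight = (n + 1) ** 2 * (n + 2) / (i * (n - i + 1))
        total += weight * (gompertz_cdf(x, c, theta) - i / (n + 1)) ** 2
    return total

# Idealized data at the i/(n+1) quantiles of G(0.8, 0.3):
n, c0, theta0 = 9, 0.8, 0.3
xs = [gompertz_quantile(i / (n + 1), c0, theta0) for i in range(1, n + 1)]
wlse_at_truth = wlse_objective(c0, theta0, xs)
wlse_elsewhere = wlse_objective(c0, 2 * theta0, xs)
print(wlse_at_truth, wlse_elsewhere)
```

The weights are largest for the extreme order statistics, so this criterion penalizes tail misfit more heavily than the unweighted version.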

1.4 Percentile Estimation

Let \((\tilde{c},\tilde{\theta })\) be the PCEs of \((c , \theta )\); then \((\tilde{c},\tilde{\theta })\) can be obtained by minimizing

with respect to c and \(\theta \).
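A minimal sketch, assuming the percentile criterion compares each order statistic with the model quantile at \(p_i=i/(n+1)\), that is \(\sum _{i=1}^{n}\left[ x_{(i:n)}-F^{-1}(p_i\mid c,\theta )\right] ^2\). Both the choice of \(p_i\) and the quantile function \(F^{-1}(p)=\frac{1}{c}\log \left( 1-\frac{c}{\theta }\log (1-p)\right) \), obtained by inverting the CDF implied by the \(\Delta \) expressions in Sect. 1.5, are assumptions. The function names are hypothetical.

```python
import math

def gompertz_quantile(p, c, theta):
    """ASSUMED quantile function: log(1 - (c/theta) * log(1 - p)) / c."""
    return math.log1p(-(c / theta) * math.log1p(-p)) / c

def pce_objective(c, theta, xs):
    """Sum of squared gaps between x_(i) and the model quantile at i / (n + 1)."""
    n = len(xs)
    return sum((x - gompertz_quantile(i / (n + 1), c, theta)) ** 2
               for i, x in enumerate(sorted(xs), start=1))

# Idealized data at the i/(n+1) quantiles of G(0.8, 0.3):
n, c0, theta0 = 9, 0.8, 0.3
xs = [gompertz_quantile(i / (n + 1), c0, theta0) for i in range(1, n + 1)]
pce_at_truth = pce_objective(c0, theta0, xs)        # exactly zero by construction
pce_elsewhere = pce_objective(c0, 2 * theta0, xs)   # strictly positive
print(pce_at_truth, pce_elsewhere)
```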

1.5 Method of Maximum Product Spacing

The MPS estimates \((\tilde{c},\tilde{\theta })\) of \((c, \theta )\) can be obtained by solving

where \(H(c , \theta )= \frac{1}{n+1}\sum _{i=1}^{n+1} \log D_i(c, \theta )\) and \(D_i(c, \theta )=F(x_{(i:n)}\mid c, \theta )-F(x_{(i-1:n)}\mid c, \theta ).\) Also \(\Delta _1 \left( x_{(i:n)}\mid \theta , c \right) =\frac{\theta }{c}e^{-\frac{\theta }{c} (e^{cx_{(i:n)}}-1)}\left[ x_{(i:n)}e^{cx_{(i:n)}}-\frac{1}{c}(e^{cx_{(i:n)}}-1)\right] \) and \(\Delta \left( x_{(i:n)}\mid \theta , c \right) =- \frac{1}{c}(e^{c x_{(i:n)}}-1)e^{-\frac{\theta }{c}(e^{c x_{(i:n)}}-1)}. \)
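The quantity \(H(c,\theta )\) can be sketched directly from its definition, with the usual conventions \(F(x_{(0:n)})=0\) and \(F(x_{(n+1:n)})=1\). The sketch assumes the Gompertz CDF \(F(x)=1-e^{-\frac{\theta }{c}(e^{cx}-1)}\), which is the form consistent with \(\Delta _1\) being \(\partial F/\partial c\). Under the same assumption, \(\Delta \) as printed equals \(\partial (1-F)/\partial \theta \), that is, minus \(\partial F/\partial \theta \). The function names are hypothetical.

```python
import math

def gompertz_cdf(x, c, theta):
    """ASSUMED CDF: F(x) = 1 - exp(-(theta/c) * (exp(c*x) - 1))."""
    return -math.expm1(-(theta / c) * math.expm1(c * x))

def gompertz_quantile(p, c, theta):
    """Inverse of the assumed CDF."""
    return math.log1p(-(c / theta) * math.log1p(-p)) / c

def mps_objective(c, theta, xs):
    """H(c, theta): mean log-spacing over the n + 1 spacings.
    Tied observations give a zero spacing and are not handled here."""
    cdf = [0.0] + [gompertz_cdf(x, c, theta) for x in sorted(xs)] + [1.0]
    spacings = [hi - lo for lo, hi in zip(cdf, cdf[1:])]
    return sum(math.log(d) for d in spacings) / len(spacings)

def delta_1(x, c, theta):
    """Delta_1 from the text; equals dF/dc under the assumed CDF."""
    e = math.expm1(c * x)
    return (theta / c) * math.exp(-(theta / c) * e) * (x * math.exp(c * x) - e / c)

def delta(x, c, theta):
    """Delta from the text; equals -dF/dtheta under the assumed CDF."""
    e = math.expm1(c * x)
    return -(e / c) * math.exp(-(theta / c) * e)

# Idealized data at the i/(n+1) quantiles of G(0.8, 0.3): all spacings are
# equal, so H attains its upper bound -log(n + 1) up to rounding.
n, c0, theta0 = 9, 0.8, 0.3
xs = [gompertz_quantile(i / (n + 1), c0, theta0) for i in range(1, n + 1)]
h_at_truth = mps_objective(c0, theta0, xs)
h_elsewhere = mps_objective(c0, 2 * theta0, xs)
print(h_at_truth, h_elsewhere)
```

Because the spacings sum to one, \(H\) is bounded above by \(-\log (n+1)\), which makes maximization well-posed even in cases where the likelihood is unbounded.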

1.6 Method of Cramér–von-Mises

The CVM estimates of c and \(\theta \), say \(\tilde{c}_{cvm}\) and \(\tilde{\theta }_{cvm}\), can be obtained by solving

where \(\Delta _1\) and \(\Delta \) are specified previously.
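A minimal sketch of the underlying criterion, assuming the usual Cramér–von-Mises form \(C(c,\theta )=\frac{1}{12n}+\sum _{i=1}^{n}\left[ F(x_{(i:n)}\mid c,\theta )-\frac{2i-1}{2n}\right] ^2\) and the Gompertz CDF \(F(x)=1-e^{-\frac{\theta }{c}(e^{cx}-1)}\) implied by the \(\Delta \) expressions. Setting its partial derivatives to zero gives estimating equations in terms of \(\partial F/\partial c\) and \(\partial F/\partial \theta \); here the criterion is simply evaluated. The function names are hypothetical.

```python
import math

def gompertz_cdf(x, c, theta):
    """ASSUMED CDF: F(x) = 1 - exp(-(theta/c) * (exp(c*x) - 1))."""
    return -math.expm1(-(theta / c) * math.expm1(c * x))

def gompertz_quantile(p, c, theta):
    """Inverse of the assumed CDF."""
    return math.log1p(-(c / theta) * math.log1p(-p)) / c

def cvm_objective(c, theta, xs):
    """1/(12n) plus squared gaps between F(x_(i)) and (2i - 1) / (2n)."""
    n = len(xs)
    return 1.0 / (12 * n) + sum(
        (gompertz_cdf(x, c, theta) - (2 * i - 1) / (2 * n)) ** 2
        for i, x in enumerate(sorted(xs), start=1))

# Idealized data at the (2i-1)/(2n) quantiles of G(0.8, 0.3): the sum
# vanishes and the criterion reduces to its lower bound 1/(12n).
n, c0, theta0 = 10, 0.8, 0.3
xs = [gompertz_quantile((2 * i - 1) / (2 * n), c0, theta0) for i in range(1, n + 1)]
cvm_at_truth = cvm_objective(c0, theta0, xs)
cvm_elsewhere = cvm_objective(c0, 2 * theta0, xs)
print(cvm_at_truth, cvm_elsewhere)
```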

1.7 Method of Anderson–Darling and Modified Anderson–Darling

The AD estimates of c and \(\theta \), say \(\tilde{c}_{AD}\) and \(\tilde{\theta }_{AD}\), can be obtained by solving
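A minimal sketch of the underlying criterion, assuming the standard Anderson–Darling form \(A(c,\theta )=-n-\frac{1}{n}\sum _{i=1}^{n}(2i-1)\left[ \log F(x_{(i:n)}\mid c,\theta )+\log \bar{F}(x_{(n+1-i:n)}\mid c,\theta )\right] \) with \(\bar{F}=1-F\), and the Gompertz CDF \(F(x)=1-e^{-\frac{\theta }{c}(e^{cx}-1)}\) implied by the \(\Delta \) expressions in Sect. 1.5. Only the unmodified criterion is sketched, and the function names are hypothetical.

```python
import math

def gompertz_cdf(x, c, theta):
    """ASSUMED CDF: F(x) = 1 - exp(-(theta/c) * (exp(c*x) - 1))."""
    return -math.expm1(-(theta / c) * math.expm1(c * x))

def gompertz_log_sf(x, c, theta):
    """Log of the assumed survival function, computed without cancellation."""
    return -(theta / c) * math.expm1(c * x)

def gompertz_quantile(p, c, theta):
    """Inverse of the assumed CDF."""
    return math.log1p(-(c / theta) * math.log1p(-p)) / c

def ad_objective(c, theta, xs):
    """Anderson-Darling distance between the sample and G(c, theta)."""
    xs = sorted(xs)
    n = len(xs)
    total = 0.0
    for i in range(1, n + 1):
        log_f = math.log(gompertz_cdf(xs[i - 1], c, theta))  # x_(i)
        log_s = gompertz_log_sf(xs[n - i], c, theta)         # x_(n+1-i)
        total += (2 * i - 1) * (log_f + log_s)
    return -n - total / n

# Idealized data at the i/(n+1) quantiles of G(0.8, 0.3):
n, c0, theta0 = 20, 0.8, 0.3
xs = [gompertz_quantile(i / (n + 1), c0, theta0) for i in range(1, n + 1)]
ad_at_truth = ad_objective(c0, theta0, xs)
ad_elsewhere = ad_objective(c0, 2 * theta0, xs)
print(ad_at_truth, ad_elsewhere)
```

The logarithmic terms give extra weight to both tails, which is the main practical difference from the Cramér–von-Mises criterion.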

Cite this article

Dey, S., Kayal, T. & Tripathi, Y.M. Evaluation and Comparison of Estimators in the Gompertz Distribution. Ann. Data. Sci. 5, 235–258 (2018). https://doi.org/10.1007/s40745-017-0126-z

DOI: https://doi.org/10.1007/s40745-017-0126-z

Keywords

- Gompertz distribution

- Maximum likelihood estimator

- Uniformly minimum variance unbiased estimator

- Least square estimator

- Percentile estimator

- Cramér–von-Mises estimator