Abstract

Objectives

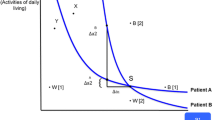

The objective of this study was to investigate the degree of inconsistency in quality-of-life attribute preference orderings generated via successive best–worst scaling and conventional ranking methods. In successive best–worst scaling, a form of ranking, the respondent chooses the best and worst attributes from a choice set; these attributes are then eliminated, the best and worst attributes are chosen from the reduced choice set, and the process continues until all presented attributes are eliminated. In conventional ranking, the respondent chooses the best, then the second best, then the third best attribute, and so on, until all presented attributes are eliminated.
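The two elicitation procedures can be sketched in a few lines of Python. This is an illustrative sketch only, not the survey instrument used in the study: the attribute names, the utility values and the `pick_best`/`pick_worst` callbacks are hypothetical stand-ins for a respondent's answers.

```python
from typing import Callable, Dict, List, Sequence

Picker = Callable[[Sequence[str]], str]


def successive_best_worst(attributes: Sequence[str],
                          pick_best: Picker,
                          pick_worst: Picker) -> List[str]:
    """Build a full ordering by repeatedly removing the best and worst items."""
    remaining = list(attributes)
    top: List[str] = []      # items chosen as best, in order of choice
    bottom: List[str] = []   # items chosen as worst, in order of choice
    while remaining:
        best = pick_best(remaining)
        remaining.remove(best)
        top.append(best)
        if remaining:        # a single leftover item has no separate "worst"
            worst = pick_worst(remaining)
            remaining.remove(worst)
            bottom.append(worst)
    # The first "worst" choice is the overall last place, so reverse that list.
    return top + bottom[::-1]


def conventional_ranking(attributes: Sequence[str],
                         pick_best: Picker) -> List[str]:
    """Build a full ordering by repeatedly removing the best remaining item."""
    remaining = list(attributes)
    order: List[str] = []
    while remaining:
        best = pick_best(remaining)
        remaining.remove(best)
        order.append(best)
    return order


# Hypothetical respondent who always answers according to fixed utilities.
utilities: Dict[str, float] = {
    "independence": 3.0, "mobility": 2.5, "pain": 2.0, "sleep": 1.0, "mood": 0.5,
}
attributes = list(utilities)


def best(items: Sequence[str]) -> str:
    return max(items, key=utilities.__getitem__)


def worst(items: Sequence[str]) -> str:
    return min(items, key=utilities.__getitem__)


bws_order = successive_best_worst(attributes, best, worst)
rank_order = conventional_ranking(attributes, best)
print(bws_order)
print(rank_order)
```

For a perfectly consistent respondent such as this one, both procedures recover the same ordering; the study's question is how far real responses depart from that ideal under each method.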

Methods

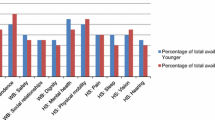

An online survey was developed for administration to two general population samples: younger people (aged 18–64 years) and older people (aged 65 years and above). Data were analysed in Stata. For each sample, the relative level of choice inconsistency (randomness in responses, or the variability in choice outcomes not explained by attributes and their associated preference weights) was examined empirically for successive best–worst scaling in comparison with the conventional ranking method.
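The notion of choice inconsistency can be illustrated with a generic multinomial logit in which a scale parameter multiplies the utilities. The sketch below is an assumption-laden illustration, not the econometric model estimated in the study: the function name, the utility values and the scale values are all hypothetical. A smaller scale corresponds to a larger error variance, so choices look more random for the same underlying preferences.

```python
import math
from typing import List, Sequence


def logit_probabilities(utilities: Sequence[float], scale: float = 1.0) -> List[float]:
    """Multinomial logit choice probabilities with a multiplicative scale.

    The scale is inversely related to the error variance: as it shrinks,
    the probabilities move towards a uniform distribution.
    """
    scaled = [scale * u for u in utilities]
    shift = max(scaled)  # subtract the maximum for numerical stability
    weights = [math.exp(v - shift) for v in scaled]
    total = sum(weights)
    return [w / total for w in weights]


# Hypothetical utilities for three attributes, most preferred first.
example_utilities = [1.0, 0.5, 0.0]

consistent = logit_probabilities(example_utilities, scale=2.0)    # low error variance
inconsistent = logit_probabilities(example_utilities, scale=0.5)  # high error variance

print([round(p, 3) for p in consistent])
print([round(p, 3) for p in inconsistent])
```

Under the higher scale the most preferred attribute is chosen with a clearly higher probability; under the lower scale the same preferences produce choices closer to random, which is the sense in which one elicitation method can be "less consistent" than another.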

Results

For the younger person sample, both methods were found to be similarly consistent. In contrast, for the older person sample, ranking performed relatively worse than best–worst scaling with more inconsistent responses (tau = 0.515, p < 0.01).

Conclusions

These findings lend some support to the hypothesis, originally advanced by the developers of best–worst scaling, that it is a comparatively easier choice task for respondents than a traditional ranking task.

Data Availability

The dataset, software code and econometric model/s underpinning this research are available upon request from the study authors.

Notes

The three cases are also described as best–worst object scaling, best–worst attribute scaling and best–worst discrete choice experiments [12].

References

Soekhai V, de Bekker-Grob E, Ellis A, Vass C. Discrete choice experiments in health economics: past, present and future. Pharmacoeconomics. 2019;37(2):201–26.

Brazier J, Ratcliffe J, Salomon J, Tsuchiya A. Measuring and valuing health benefits for economic evaluation. 2nd ed. Oxford: Oxford University Press; 2017.

Whitty J, Lancsar E, Rixon K, Golenko X, Ratcliffe J. A systematic review of stated preference studies reporting public preferences for healthcare priority setting. Patient. 2014;7(4):365–86.

Gu Y, Lancsar E, Ghijben P, Butler J, Donaldson C. Attributes and weights in health care priority setting: a systematic review of what counts and to what extent. Soc Sci Med. 2015;146:41–52.

Fryback D, Dasbach E, Klein R, et al. The Beaver Dam Health Outcomes Study: initial catalog of health state quality factors. Med Decis Making. 1993;13(2):89–102.

Brazier J, Roberts J, Deverill M. The estimation of a preference-based measure of health from the SF-36. J Health Econ. 2002;21(2):271–92.

Craig B, Busschbach J, Salomon J. Keep it simple: ranking health states yields values similar to cardinal measurement approaches. J Clin Epidemiol. 2009;62(3):296–305.

Farmakas A, Theodorou M, Galanis P, et al. Public engagement in setting healthcare priorities: a ranking exercise in Cyprus. Cost Eff Resour Alloc. 2017;9(15):16.

Wiseman V, Mooney G, Berry G, Tang KC. Involving the general public in priority setting: experiences from Australia. Soc Sci Med. 2003;56(5):1001–12.

Kaplan G, Baron-Epel O. The public’s priorities in health services. Health Expect. 2015;18(5):904–17.

Finn A, Louviere J. Determining the appropriate response to evidence of public concern: the case of food safety. J Public Policy Marketing. 1992;11(2):12–25.

Lancsar E, Louviere J, Donaldson C, Currie G, Burgess L. Best worst discrete choice experiments in health: methods and an application. Soc Sci Med. 2013;76(1):74–82.

Louviere JJ, Flynn TN. Using best-worst scaling choice experiments to measure public perceptions and preferences for healthcare reform in Australia. Patient. 2010;3(4):275–83.

Uy E, Bautista D, Xin X, et al. Using best-worst scaling choice experiments to elicit the most important domains of health for health-related quality of life in Singapore. PLoS One. 2018;13(2):e0189687.

Hauber A, Mohamed A, Johnson R, et al. Understanding the relative importance of preserving functional abilities in Alzheimer’s disease in the United States and Germany. Qual Life Res. 2014;23(6):1813–21.

Louviere J, Street D, Burgess L, et al. Modelling the choices of individual decision makers by combining efficient choice experiment designs with extra preference information. J Choice Modelling. 2008;1:126–63.

Louviere J, Flynn T, Marley A. Best-worst scaling: theory, methods and applications. Cambridge: Cambridge University Press; 2015.

Wright S, Vass C, Sim G, et al. Accounting for scale heterogeneity in health care related discrete choice experiments when comparing stated preferences: a systematic review. Patient. 2018;11:475–88.

Vass C, Wright S, Burton M, Payne K. Scale heterogeneity in healthcare discrete choice experiments: a primer. Patient. 2018;11:167–73.

Ratcliffe J, Lancsar E, Flint T, et al. Does one size fit all? Assessing the preferences of older and younger people for attributes of quality of life. Qual Life Res. 2017;26(2):299–309.

Hawthorne G, Richardson J, Osborne R. The Assessment of Quality of Life (AQoL) instrument: a psychometric measure of health-related quality of life. Qual Life Res. 1999;8(3):209–24.

Netten A, Burge P, Malley J, et al. Outcomes of social care for adults: developing a preference-weighted measure. Health Technol Assess. 2012;16:1–165.

Pink B. Information paper: an introduction to Socio-Economic Indexes for Areas (SEIFA). Cat no. 2039.0. Canberra (ACT): Australian Bureau of Statistics; 2006.

Pink B. Socio-Economic Indexes for Areas (SEIFA)-Technical Paper. Cat no. 2039.0.55.001. Canberra (ACT): Australian Bureau of Statistics; 2006A.

Greene W, Hensher D. Does scale heterogeneity across individuals matter? An empirical assessment of alternative logit models. Transportation. 2010;37(3):413–28.

Lancsar E, Fiebig D, Hole A. Discrete choice experiments: a guide to model specification, estimation and software. Pharmacoeconomics. 2017;35(7):697–716.

Harada C, Natelson Love M, Triebel K. Normal cognitive aging. Clin Geriatr Med. 2013;29(4):737–52.

Kumar S, Kant S. Exploded logit modelling of stakeholders’ preferences for multiple forest values. For Policy Econ. 2007;9(5):515–26.

Swait J, Louviere J. The role of the scale parameter in the estimation and comparison of multinomial logit models. J Mark Res. 1993;30(3):305–14.

Greene W. Econometric analysis. 7th ed. Upper Saddle River: Prentice Hall; 2007.

Fiebig D, Keane M, Louviere J, et al. The generalized multinomial logit: accounting for scale and coefficient heterogeneity. Mark Sci. 2010;29(3):393–421.

Akaike H. A new look at the statistical model identification. IEEE Trans Autom Control. 1974;19(6):716–23.

Australian Bureau of Statistics. Household use of information technology, Australia, 2016–17. Canberra (ACT): Australian Bureau of Statistics; 2018.

Milte R, Ratcliffe J, Chen G, et al. Cognitive overload? An exploration of the potential impact of cognitive functioning in discrete choice experiments with older people in health care. Value Health. 2014;17(5):655–9.

Acknowledgements

The authors thank Dr. Nicolas Krucien, Dr. Yuanyuan Gu and Associate Prof. Gang Chen for their helpful comments on a previous version of this paper.

Author information

Contributions

JR, BK and EL conceived the study. All authors designed the study. JR and EL oversaw the data analysis. BK and CH performed the data analysis. JR led and BK, EL and CH contributed to drafting the article. All authors read and approved the final article.

Ethics declarations

Funding

This study was funded in part by an Australian Research Council Linkage Grant (LP110200079).

Conflict of interest

Julie Ratcliffe, Billingsley Kaambwa, Claire Hutchinson and Emily Lancsar have no conflicts of interest that are directly relevant to the content of this article.

Ethics approval

This study was approved by the Flinders University Social and Behavioural Research Ethics Committee (Project No.: 6682).

About this article

Cite this article

Ratcliffe, J., Kaambwa, B., Hutchinson, C. et al. Empirical Investigation of Ranking vs Best–Worst Scaling Generated Preferences for Attributes of Quality of Life: One and the Same or Differentiable?. Patient 13, 307–315 (2020). https://doi.org/10.1007/s40271-019-00406-6

DOI: https://doi.org/10.1007/s40271-019-00406-6