Abstract

To investigate how to accurately identify bee species using their sounds, we conducted acoustic analysis to identify three pollinating bee species (Apis mellifera, Bombus ardens, Tetralonia nipponensis) and a hornet (Vespa simillima xanthoptera) by their flight sounds. Sounds of the insects and their environment (background noises and birdsong) were recorded in the field. The use of fundamental frequency and mel-frequency cepstral coefficients to describe feature values of the sounds, and support vector machines to classify the sounds, correctly distinguished insect flight sounds from environmental sounds with high recall and precision (0.96–1.00). At the species level, our approach could classify the insect species with relatively high recall and precision (0.7–1.0). The flight sounds of V. s. xanthoptera, in particular, were perfectly identified (precision and recall 1.0). Our results suggest that insect flight sounds are potentially useful for detecting bees and quantifying their activity.

1 Introduction

Monitoring insect activity is useful for many purposes, such as pest control and monitoring beneficial insects. For pest control, it is important to spray pesticides at the right time, but scheduling pesticide application is difficult for farmers since the occurrence of pest species is hard to predict. For pollination in greenhouses, monitoring the activity of bees is useful in managing their activity and knowing when to replace nest boxes (Fisher and Pomeroy 1989; Morandin et al. 2001). Detection of insects can also be used to better understand the biodiversity of pollinators and their habitat use (Miller-Struttmann et al. 2017; Hill et al. 2018). Monitoring insect activity and detecting insects are thus useful in both agricultural production and ecological research.

Several methods for monitoring insects automatically have been developed to date. For example, image processing and analysis techniques are used to identify orchard insects automatically (Wen and Guyer 2012), and Zhu et al. (2017) developed a method to detect Lepidoptera species in digital images using a cascade architecture which combines deep convolutional neural networks and support vector machines.

Another way to monitor insect activity is to use acoustic or vibrational information. Such analysis can be used at night or in places where it is impractical to use digital cameras, such as underground or in dense grass. For example, Celis-Murillo et al. (2009) studied birdsong to investigate bird species and density in a range of places, and reported that acoustic analysis performed better than the human ear. In addition, acoustic analysis was used in postharvest management for monitoring insects such as rice weevils, Sitophilus oryzae, in grain storage (Fleurat-Lessard et al. 2006; Njoroge et al. 2017). Towsey et al. (2014) demonstrated that the use of acoustic indices could identify the cicada chorus in the natural environment, and Lampson et al. (2013) developed automatic identification methods for stink bugs (Euschistus servus and Nezara viridula) using acoustic analysis of intraspecific substrate-borne vibrational signals. Recently, Gradišek et al. (2017) tried to discriminate bumblebee species using the acoustic features of their flight sounds, and found that the different species differed in their flight sounds. In this way, acoustic/vibrational-based monitoring technology is becoming popular, but previous studies have focused on the Cicadae and Orthoptera (Obrist et al. 2010) or specific bee species such as bumble bees (De Luca et al. 2014; Gradišek et al. 2017; Miller-Struttmann et al. 2017), and, to our knowledge, there are still few studies that focus on identifying different types of bees by their sounds. In particular, from a practical standpoint, distinguishing predators from pollinators is important for beekeepers and ecologists, so it is informative to investigate whether acoustic analysis can classify bee species into functional groups.

The objective of our study was to develop methods to distinguish bee species from environmental sounds recorded under natural field conditions. Here, we analyzed the flight sounds of three bee species which are popular pollinators in Japan, including western honey bees, Apis mellifera (Apidae: Apinae), Bombus ardens (Apidae: Bombus), Tetralonia nipponensis (Apidae: Eucerini), and one hornet species, the Japanese yellow hornet, Vespa simillima xanthoptera (Vespidae: Vespa), which is a predator of honeybees in Japan. We expect that technology that can identify such insects against background noise will be useful for the evaluation of pollination services, and the study of behavioral ecology. Bees produce specific flight sounds, and some insect species, such as hornets, produce particularly distinctive sounds. As such, we expected that flight sounds of some bees could be identified automatically using acoustic features. Monitoring predator-prey relationships is particularly important in ecological surveys, and we expect that the methods developed in this study will contribute to the monitoring of hornet and bee activities in an ecological context.

2 Materials and methods

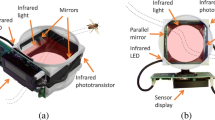

Sounds were sampled using a microphone (AT9905, Audio-Technica, Tokyo, Japan) connected to a portable linear PCM recorder (R-05 WAVE/MP3 Recorder, Roland, Shizuoka, Japan). The microphone was attached to the end of a metal stick, and we gently approached the flying bees and hornets with the microphone. The sounds were sampled at 44.1 kHz with a resolution of 16 bits. The raw sound data were processed in Adobe Audition CC sound analysis software (Adobe Systems Incorporated, CA, USA).

The experiments were conducted in rural areas or remote forests in Fukuyama and Kyoto, western Japan. We collected the flight sounds of A. mellifera, B. ardens, T. nipponensis, and V. simillima xanthoptera. We chose these species since they are commonly observed in the countryside in Japan (especially B. ardens, A. mellifera, and V. simillima xanthoptera). In terms of body size, V. simillima xanthoptera was the largest of the four species, and B. ardens was slightly larger than the other two pollinator species (unpublished data). The bees were all female and their sounds were recorded when they approached flowering herbs. The flight sounds of V. s. xanthoptera were recorded when they hovered close to honey bee nest boxes. In Adobe Audition CC, we extracted 200 samples each of A. mellifera and B. ardens sounds, 160 samples of T. nipponensis sounds, and 120 samples of V. s. xanthoptera sounds in wav file format. Most recordings were 0.3 to 1.0 s long. We also collected 200 recordings each of background sounds and unspecified birdsong (mostly from sparrows). Most of the background sounds we heard were wind sounds, and sounds made by leaves swaying in the wind. To understand the sound features of the four insect species, we investigated the fundamental frequency of each species by inspecting the spectra of their flight sounds using Adobe Audition CC.
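The fundamental frequencies in this study were read from spectra by inspection. As a rough programmatic cross-check, the sketch below estimates the fundamental frequency of a short clip by autocorrelation. This is a swapped-in technique for illustration, not the procedure used in the study; the function name `estimate_f0`, the 50 to 1000 Hz search range, and the synthetic test signal are all assumptions.

```python
import math

def estimate_f0(samples, sample_rate=44100, f_min=50.0, f_max=1000.0):
    """Return the frequency (Hz) whose lag maximises the autocorrelation.

    f_min and f_max bound the search range and are illustrative choices.
    """
    mean = sum(samples) / len(samples)
    x = [s - mean for s in samples]  # remove any DC offset
    min_lag = int(sample_rate / f_max)
    max_lag = min(int(sample_rate / f_min), len(x) - 1)
    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        # Unnormalised autocorrelation at this lag
        score = sum(x[i] * x[i + lag] for i in range(len(x) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag

# Synthetic "buzz": a 250 Hz tone plus a weaker harmonic at 500 Hz
sr = 44100
buzz = [math.sin(2 * math.pi * 250 * t / sr)
        + 0.5 * math.sin(2 * math.pi * 500 * t / sr)
        for t in range(2048)]
print(f"estimated f0: {estimate_f0(buzz, sr):.1f} Hz")
```

On real field recordings, wind noise and overlapping sources would likely make such an estimate less stable than on this clean synthetic tone, so manual inspection of the spectrum remains a useful check.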

We used machine learning techniques to classify sound recordings as the sounds made by the three bee species, the hornet species, birdsong, and background sounds. We split the sample data into training data (80% of total samples) for calibration of the classification model, and test data (20% of total samples) for evaluation of the model. There were clear differences in the frequency spectra and the harmonic components between the insect flight sounds and the background sounds (Figure 1). Therefore, we used mel-frequency cepstral coefficients (MFCC) to describe the acoustic features of the different types of sounds, because MFCC was one of the most frequently used feature values for identifying sounds from different insects in previous studies, such as Orthoptera (Chaves et al. 2012; Zhang et al. 2012), Cicadae (Zilli et al. 2014), and some bumble bees (Gradišek et al. 2017). In essence, MFCC describes the timbre of sounds and is calculated using the following steps: (1) slicing the original sound into frames, (2) applying a window function to each frame, (3) applying a Fourier transformation to each frame and obtaining its power spectrum, (4) applying mel-scale filter banks to the power spectra, and (5) applying a discrete cosine transformation (DCT) to the logarithm of the filter-bank energies. MFCC was originally used for human voice identification, and it is more capable of discriminating sounds at lower frequencies, and less capable of discriminating sounds at higher frequencies. In our study, a 12 kHz low-pass filter was applied to eliminate unspecified high-frequency sounds such as machinery noise, and the original sounds were sliced into frames of 1024 sample points. A Hamming window was applied to each frame, followed by a fast Fourier transformation (FFT), before the mel-scale filter banks were applied. Furthermore, we also used the fundamental frequency of each sample as a feature value describing the pitch of the sound. Since the background sounds and birdsong had no harmonic structure, we extracted the fundamental frequency of those sounds using the “fund” function in package “seewave” (Sueur et al. 2008) in R v. 3.2.4.
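The MFCC steps listed above can be sketched for a single 1024-sample frame in plain Python. This is a minimal illustration, not the library implementation used in the study: the 26 filters and 13 coefficients are assumed values that the text does not report, the 12 kHz cutoff is used here only as the upper edge of the filter bank rather than as a separate low-pass filter, and all function names are hypothetical.

```python
import cmath
import math

def hamming(n):
    """Hamming window of length n."""
    return [0.54 - 0.46 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]

def fft(x):
    """Recursive radix-2 FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return list(x)
    even, odd = fft(x[0::2]), fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out

def hz_to_mel(f):
    return 2595.0 * math.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10 ** (m / 2595.0) - 1.0)

def mfcc_frame(frame, sample_rate=44100, n_filters=26, n_ceps=13, f_max=12000.0):
    """MFCCs of one frame (n_filters, n_ceps and f_max are assumptions)."""
    n = len(frame)
    # Step 2: window the frame; step 3: FFT and power spectrum
    windowed = [s * w for s, w in zip(frame, hamming(n))]
    power = [abs(c) ** 2 / n for c in fft(windowed)[: n // 2 + 1]]
    # Step 4: triangular filters spaced evenly on the mel scale up to f_max
    top = hz_to_mel(f_max)
    edges = [mel_to_hz(top * i / (n_filters + 1)) for i in range(n_filters + 2)]
    log_energies = []
    for j in range(n_filters):
        lo, centre, hi = edges[j], edges[j + 1], edges[j + 2]
        energy = 0.0
        for k, p in enumerate(power):
            f = k * sample_rate / n
            weight = min((f - lo) / (centre - lo), (hi - f) / (hi - centre))
            if weight > 0:
                energy += weight * p
        log_energies.append(math.log(energy + 1e-12))  # floor avoids log(0)
    # Step 5: DCT-II of the log filter-bank energies
    return [sum(e * math.cos(math.pi * c * (j + 0.5) / n_filters)
                for j, e in enumerate(log_energies))
            for c in range(n_ceps)]

# Step 1 (framing) is represented here by one synthetic 1024-sample frame
sr = 44100
frame = [math.sin(2 * math.pi * 250 * t / sr)
         + 0.5 * math.sin(2 * math.pi * 500 * t / sr)
         for t in range(1024)]
coeffs = mfcc_frame(frame, sr)
print(len(coeffs), "coefficients for one frame")
```

For a whole recording, one would typically compute such coefficients for every frame and then summarize them (for example by averaging) into one feature vector per sample; how the frames were aggregated in this study is not stated in the text.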

For classification, we used a support vector machine (SVM), since previous studies reported that SVM performed as well as other classification techniques, such as decision tree or linear discriminant analysis, in classifying bird or amphibian species (Acevedo et al. 2009). SVM is a supervised machine learning algorithm based on finding a hyperplane that divides a dataset into different classes. The essence of SVM is that it maximizes the margin separating the classes, and it can transform a non-linear problem into a linear one by using kernel functions (Chapelle et al. 2002). All analyses were conducted in Python v. 3.6 and R v. 3.2.4 software. For calculation of MFCC, we used the “python speech features” library, and for SVM, we used the “ksvm” function of R v. 3.2.4 in the “kernlab” package (Karatzoglou et al. 2004). We evaluated the performance of the model using “recall” and “precision” for each species. Precision is the ratio of the number of true positives to the total number of predicted positives (Raghavan et al. 1989). Recall is the ratio of the number of true positives to the total number of actual positives (Raghavan et al. 1989). Precision and recall were calculated following Eqs. (1) and (2).
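The equations themselves are not reproduced in this text. Based on the verbal definitions above, they presumably take the standard form below, where TP, FP, and FN (notation assumed here) denote the numbers of true positives, false positives, and false negatives for a given class; the assignment of the numbers (1) and (2) follows the order in which the text names the two measures.

```latex
\begin{align}
\text{Precision} &= \frac{TP}{TP + FP} \tag{1} \\
\text{Recall} &= \frac{TP}{TP + FN} \tag{2}
\end{align}
```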

3 Results

The mean fundamental frequency of the sounds was 251.19 ± 45.04 Hz (mean ± SD, N = 200) for A. mellifera, 203.06 ± 51.79 Hz (N = 200) for B. ardens, 224.08 ± 49.22 Hz (N = 160) for T. nipponensis, and 107.13 ± 15.91 Hz (N = 120) for V. s. xanthoptera. The classifier produced by SVM correctly distinguished 136 out of 136 samples of flight sounds from environmental sounds (Table I). On the other hand, 77 out of 80 samples of environmental sounds were correctly classified (Table I). Precisions and recalls of both types of sounds were above 0.95.

The model correctly classified 34 out of 40 samples of A. mellifera, 37 out of 40 samples of B. ardens, 21 out of 32 samples of T. nipponensis, and 24 out of 24 samples of V. s. xanthoptera (Table II). Both precision (1.00) and recall (1.00) in classifying V. s. xanthoptera were higher than for any other species. The results indicate that T. nipponensis had the lowest recall (0.66) among the bee and hornet species, while B. ardens had the lowest precision (0.73). The samples of B. ardens and T. nipponensis were mutually misclassified (Table II). The samples of A. mellifera were more often misclassified as B. ardens than vice versa (Table II). Among environmental sounds, 38 out of 40 samples of background sounds, and 34 out of 40 samples of birdsong were correctly classified. Three samples of birdsong were misclassified as the sounds of A. mellifera (Table II).
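The reported counts can be turned into precision and recall values with a few lines of Python. The sketch below uses only the numbers stated in the text (Tables I and II themselves are not reproduced here); it assumes that the three misclassified environmental samples were all labelled as flight sounds, which follows if the first comparison is a two-class problem. Per-class precision at the species level is not computed because the full confusion matrix is not available in the text.

```python
def precision(tp, fp):
    """True positives divided by all predicted positives."""
    return tp / (tp + fp)

def recall(tp, fn):
    """True positives divided by all actual positives."""
    return tp / (tp + fn)

# Flight vs. environmental sounds: 136/136 flight samples and
# 77/80 environmental samples were correctly classified, so
# 3 environmental samples count as false positives for "flight".
flight_precision = precision(tp=136, fp=3)
flight_recall = recall(tp=136, fn=0)
env_precision = precision(tp=77, fp=0)
env_recall = recall(tp=77, fn=3)

# Per-class recall from (correct, total) test counts given in the text
counts = {
    "A. mellifera": (34, 40),
    "B. ardens": (37, 40),
    "T. nipponensis": (21, 32),
    "V. s. xanthoptera": (24, 24),
    "background": (38, 40),
    "birdsong": (34, 40),
}
class_recall = {name: correct / total for name, (correct, total) in counts.items()}

print(f"flight: precision {flight_precision:.2f}, recall {flight_recall:.2f}")
print(f"environment: precision {env_precision:.2f}, recall {env_recall:.2f}")
for name, value in class_recall.items():
    print(f"{name}: recall {value:.2f}")
```

Under these assumptions, all four values for the two-class comparison fall above 0.95, in line with the statement in the text, and the recall for T. nipponensis rounds to the reported 0.66.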

4 Discussion

Our results suggest that it is possible to discriminate insect flight sounds from environmental sounds at a high accuracy (≥ 0.95 in precision and recall), which indicates that this method can be used to discriminate insect sounds from background sounds. However, in terms of species identification, bee species were classified with relatively low accuracy (0.7–0.9 in precision and recall), although the hornet species (V. s. xanthoptera) could be accurately classified (1.00 in precision and recall). Regarding bee species discrimination, Gradišek et al. (2017) tried to identify 12 species of bumblebees using acoustic analysis, and found that the accuracy of identification varied between species (0.0–1.00 in precision and recall) (calculated from Table II in Gradišek et al. 2017). In their study, a few species (such as brown-banded carder bee, B. humilis, queens or early bumble bee, B. pratorum, workers) were more accurately identified (precision and recall both > 0.9), and most of the species were identified with precision and recall between 0.50 and 0.85 in their validation of the model using the training dataset (calculated from Table II in Gradišek et al. 2017). In other insect species, Ganchev et al. (2007) could correctly classify more than 95% of the sounds of crickets, cicadas, and grasshoppers to the family level, and 86% to the species level. The results of our study cannot be directly compared with this previous study, but these results support the use of acoustic analysis for family or species classification.

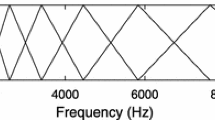

In this study, the sounds of V. s. xanthoptera were correctly classified more often than those of the three bee species. The former had a lower fundamental frequency (around 100 Hz) than the latter (more than 200 Hz for each bee species), which can be advantageous in distinguishing sounds. The sounds of B. ardens and T. nipponensis were mutually misclassified. These results indicate that the sound features of these species are relatively similar (Figure 2), and the fundamental frequencies of the sounds of these two species (B. ardens 203.06 ± 51.79 Hz and T. nipponensis 224.08 ± 49.22 Hz) further support this. The sounds of T. nipponensis were most often misclassified as other bee species (eight samples were misclassified as B. ardens, and three samples were misclassified as A. mellifera). The fundamental frequency of the sounds of T. nipponensis was slightly higher than that of B. ardens, and lower than that of A. mellifera, which may result in relatively high rates of misclassification.

The distinct difference in the accuracy with which the hornet species and the three bee species were identified may be due to differences in morphological features such as body shape or wing size, as these can determine flight sounds. Byrne et al. (1988) showed that smaller homopterous insects have higher wingbeat frequencies, and Burkart et al. (2011) demonstrated that the wingbeat frequency of bees falls within a certain range that is anatomically determined and correlated with the size of the bees. Miller-Struttmann et al. (2017) investigated the relationship between the sound characteristics of flight sounds and wing length of bumble bees, and found a negative relationship between wing length and the fundamental frequency of flight sounds. The wing lengths of V. simillima xanthoptera and A. mellifera are 31.76 mm (Byun et al. 2009) and 9.3 mm (Ruttner 1988), respectively. Our results indicate that the fundamental frequency of V. s. xanthoptera sounds is lower than that of A. mellifera, which supports the idea that wing length correlates with flight sounds in bees and hornets. In general, the body and wing size of hornets, which are the main predators of pollinator bees, are larger than those of pollinator bees. For example, Byun et al. (2009) reported that the wing lengths of Vespa dybowskii and red wasps, Vespula rufa schrenckii, were 18.66 mm and 47.00 mm, respectively, while Ruttner (1988) reported that the wing lengths of other honeybees were comparatively smaller (dwarf honey bee, A. florea 6.8 mm, giant honey bee, A. dorsata 14.2 mm). Bumble bees also have relatively small wing lengths (B. diversus diversus 13.36 mm, B. ignitus 15.01 mm (Tsuyuki and Sudo 2004), buff-tailed bumble bee, B. terrestris 9.0 to 13.0 mm (Free 1955)). In the case of B. ardens, we were not able to find data on the wing length of this species in the literature, but its body size/wing length is likely smaller than that of B. terrestris, considering the comparative morphological research conducted by Nagamitsu et al. (2007). In terms of fundamental frequency, Gradišek et al. (2017) investigated the fundamental frequency of different bumblebee species (garden bumble bee, B. hortorum 153 ± 16 Hz, B. humilis 193 ± 13 Hz, tree bumble bee, B. hypnorum 186 ± 5.6 Hz, heath bumble bee, B. jonellus 206 ± 4 Hz, red-tailed bumble bee, B. lapidarius 160 ± 11 Hz, white-tailed bumble bee, B. lucorum 161 ± 9 Hz, common carder bee, B. pascuorum 180 ± 20 Hz, B. pratorum 211 ± 17 Hz, red-shanked carder bee, B. ruderarius 180 ± 5 Hz, shrill carder bee, B. sylvarum 252 ± 16 Hz). Regarding hornets or wasps, the fundamental frequency of median wasps, Dolichovespula media, was around 150 Hz (Tautz and Markl 1978), and Ishay (1975) also reported that Oriental hornets, Vespa orientalis, produce sounds with peaks between 80 and 125 Hz. Considering our results and the abovementioned previous studies, it is possible that acoustical analysis of the flight sound of bees can be used to differentiate pollinators from predators.

Our results indicate that MFCC and fundamental frequency were useful for differentiating the sounds of the three bee species and the hornet species. MFCC are used to extract features of human voices and have proved useful for obtaining feature values of the sounds made by insects. In our study, some samples of the three bee species, but not of the hornet, were mutually misclassified, but we expect that the accuracy could be improved by using additional feature values or new classification methods. In particular, owing to the development of information technology, classification of sounds using deep-learning techniques is becoming widely used in several areas. Although deep learning-based classification usually requires a large dataset, it can discriminate between objects without hand-calculated feature values such as MFCC or fundamental frequency, and can capture more subtle differences in the sound data, so it could be used for discriminating flight sounds with high precision. For example, Kiskin et al. (2017) found that the use of a convolutional neural network to analyze and detect the buzz sounds of mosquitos performed better than SVM or random forest methods.

Sound or vibrational information offers a useful tool for quantitatively monitoring insect activities. Image-processing–based analysis is already widely used, and sound- or vibration-based analysis also has potential. Sound information can complement image-based information, which is influenced by weather and light. So far, acoustic/vibrational analysis has not been extensively used to detect insects, but our results point to various applications. For example, acoustic/vibrational analysis could be used to replicate the studies of Miller-Struttmann et al. (2017), who analyzed the buzzing of bumble bees visiting two alpine forbs to evaluate pollination services, and of Potamitis et al. (2015), who analyzed wing beats of insect pests to predict the arrival of the pests. We used only a single microphone, but placing multiple microphones in a wide range of places would enable us to study animal movements in the field, and evaluate how they use their habitat over a wide range of areas and time periods (Blumstein et al. 2011). For example, microphone arrays can be used to locate birds in the air, and to understand signal interactions among the calls of many animals (Mennill et al. 2006; Mennill and Vehrencamp 2008).

The higher sampling frequency is one of the improvements of our method, but it must be noted that the sounds of insects are not loud, and there are limits to the ability to detect and analyze these sounds. As described above, the acoustic features of flight sounds are thought to depend on the morphological features of insects (especially wing shape), and, as such, sound-based identification would be limited to discriminating relatively distant taxa and would not be suitable for discriminating species in closely related taxa. It is therefore likely that our method can be used to classify bees into functional groups, such as pollinator and predator, rather than to accurately identify species. Furthermore, some insects, such as butterflies, make very little sound when they fly and should be monitored using images rather than sound. We expect that combining multiple techniques and choosing optimal monitoring instruments is important for monitoring insect activity, and our study suggests that acoustic analysis of insect flight sounds could be a potential tool to help understand the occurrence patterns of several bee species.

References

Acevedo, M.A., Corrada-Bravo, C.J., Corrada-Bravo, H., Villanueva-Rivera, L.J., Aide, T.M. (2009) Automated classification of bird and amphibian calls using machine learning: A comparison of methods. Ecol. Inform. 4, 206–214. https://doi.org/10.1016/J.ECOINF.2009.06.005

Blumstein, D.T., Mennill, D.J., Clemins, P., Girod, L., Yao, K., Patricelli, G., Deppe, J.L., Krakauer, A.H., Clark, C., Cortopassi, K.A., Hanser, S.F., McCowan, B., Ali, A.M., Kirschel, A.N.G. (2011) Acoustic monitoring in terrestrial environments using microphone arrays: applications, technological considerations and prospectus. J. Appl. Ecol. https://doi.org/10.1111/j.1365-2664.2011.01993.x

Burkart, A., Lunau, K., Schlindwein, C. (2011) Comparative bioacoustical studies on flight and buzzing of neotropical bees. J. Poll. Ecol. 6, 118–124

Byrne, D.N., Buchmann, S.L., Spangler, H.G. (1988) Relationship between wing loading, wing stroke frequency and body mass in homopterous insects. J. Exp. Biol. 135, 9–23

Byun, D., Hong, J., Saputra, Ko, J.H., Lee, Y.J., Park, H.C., Byun, B.K., Lukes, J.R. (2009) Wetting characteristics of insect wing surfaces. J. Bionic Eng. https://doi.org/10.1016/S1672-6529(08)60092-X

Celis-Murillo, A., Deppe, J.L., Allen, M.F. (2009) Using soundscape recordings to estimate bird species abundance, richness, and composition. J. Field Ornithol. https://doi.org/10.1111/j.1557-9263.2009.00206.x

Chapelle, O., Vapnik, V., Bousquet, O., Mukherjee, S. (2002) Choosing multiple parameters for support vector machines. Mach. Learn. https://doi.org/10.1023/A:1012450327387

Chaves, V.A.E., Travieso, C.M., Camacho, A., Alonso, J.B. (2012) Katydids acoustic classification on verification approach based on MFCC and HMM, in: INES 2012 - IEEE 16th International Conference on Intelligent Engineering Systems, Proceedings. https://doi.org/10.1109/INES.2012.6249897

De Luca, P.A., Cox, D.A., Vallejo-Marín, M. (2014) Comparison of pollination and defensive buzzes in bumblebees indicates species-specific and context-dependent vibrations. Naturwissenschaften 101 (4), 331–338

Fisher, R.M., Pomeroy, N. (1989) Pollination of greenhouse muskmelons by bumble bees (Hymenoptera: Apidae). J. Econ. Entomol. https://doi.org/10.1093/jee/82.4.1061

Fleurat-Lessard, F., Tomasini, B., Kostine, L., Fuzeau, B. (2006) Acoustic detection and automatic identification of insect stages activity in grain bulks by noise spectra processing through classification algorithms. Proc. 9th Int. Work. Conf. Stored-Product Prot. ABRAPOS, Passo Fundo, RS, Brazil, 15–18 Oct. 2006, 476–486

Free, J.B. (1955) The division of labour within bumblebee colonies. Insectes Soc. https://doi.org/10.1007/BF02224381

Ganchev, T., Potamitis, I., Fakotakis, N. (2007) Acoustic monitoring of singing insects, in: ICASSP, IEEE International Conference on Acoustics, Speech and Signal Processing - Proceedings. IEEE, p. IV-721-IV-724. https://doi.org/10.1109/ICASSP.2007.367014

Gradišek, A., Slapničar, G., Šorn, J., Luštrek, M., Gams, M., Grad, J. (2017) Predicting species identity of bumblebees through analysis of flight buzzing sounds. Bioacoustics https://doi.org/10.1080/09524622.2016.1190946

Hill, A.P., Prince, P., Piña Covarrubias, E., Doncaster, C.P., Snaddon, J.L., Rogers, A. (2018) AudioMoth: Evaluation of a smart open acoustic device for monitoring biodiversity and the environment. Methods Ecol. Evol. https://doi.org/10.1111/2041-210X.12955

Ishay, J. (1975) Frequencies of the sounds produced by the oriental hornet, Vespa orientalis. J. Insect Physiol. https://doi.org/10.1016/0022-1910(75)90233-4

Karatzoglou, A., Smola, A., Hornik, K., Zeileis, A. (2004) Kernlab – An S4 package for kernel methods in R. J. Stat. Softw. https://doi.org/10.1016/j.csda.2009.09.023

Kiskin, I., Orozco, B.P., Windebank, T., Zilli, D., Sinka, M., Willis, K., Roberts, S. (2017) Mosquito detection with neural networks: the buzz of deep learning. eprint arXiv:1705.05180.

Lampson, B.D., Han, Y.J., Khalilian, A., Greene, J., Mankin, R.W., Foreman, E.G. (2013) Automatic detection and identification of brown stink bug, Euschistus servus, and southern green stink bug, Nezara viridula, (Heteroptera: Pentatomidae) using intraspecific substrate-borne vibrational signals. Comput. Electron. Agric. https://doi.org/10.1016/j.compag.2012.12.010

Mennill, D.J., Vehrencamp, S.L. (2008) Context-dependent functions of avian duets revealed by microphone-array recordings and multispeaker playback. Curr. Biol. https://doi.org/10.1016/J.CUB.2008.07.073

Mennill, D.J., Burt, J.M., Fristrup, K.M., Vehrencamp, S.L. (2006) Accuracy of an acoustic location system for monitoring the position of duetting songbirds in tropical forest. J. Acoust. Soc. Am. https://doi.org/10.1121/1.2184988

Miller-Struttmann, N.E., Heise, D., Schul, J., Geib, J.C., Galen, C. (2017) Flight of the bumble bee: Buzzes predict pollination services. PLoS One https://doi.org/10.1371/journal.pone.0179273

Morandin, L.A., Laverty, T.M., Kevan, P.G. (2001) Bumble bee (Hymenoptera: Apidae) activity and pollination levels in commercial tomato greenhouses. J. Econ. Entomol. https://doi.org/10.1603/0022-0493-94.2.462

Nagamitsu, T., Kenta, T., Inari, N., Kato, E., Hiura, T. (2007) Abundance, body size, and morphology of bumblebees in an area where an exotic species, Bombus terrestris, has colonized in Japan. Ecol. Res. 22, 331–341

Njoroge, A.W., Mankin, R.W., Smith, B.W., Baributsa, D. (2017) Effects of hermetic storage on adult Sitophilus oryzae L. (Coleoptera: Curculionidae) acoustic activity patterns and mortality. J. Econ. Entomol. https://doi.org/10.1093/jee/tox260

Obrist, M.K., Pavan, G., Sueur, J., Riede, K., Llusia, D., Márquez, R. (2010) Bioacoustics approaches in biodiversity inventories. ABC Taxa 8, 68–99.

Potamitis, I., Rigakis, I., Fysarakis, K. (2015) Insect biometrics: optoacoustic signal processing and its applications to remote monitoring of McPhail Type Traps. PLoS One https://doi.org/10.1371/journal.pone.0140474

Raghavan, V., Bollmann, P., Jung, G.S. (1989) A critical investigation of recall and precision as measures of retrieval system performance. ACM Trans. Inf. Syst. https://doi.org/10.1145/65943.65945

Ruttner, F. (1988) Biogeography and taxonomy of honeybees, Springer-Verlag Berlin Heidelberg GmbH. https://doi.org/10.1016/0169-5347(89)90176-6

Sueur, J., Aubin, T., Simonis, C. (2008) Equipment review: Seewave, a free modular tool for sound analysis and synthesis. Bioacoustics https://doi.org/10.1080/09524622.2008.9753600

Tautz, J., Markl, H. (1978) Caterpillars detect flying wasps by hairs sensitive to airborne vibration. Behav. Ecol. Sociobiol. https://doi.org/10.1007/BF00302564

Towsey, M., Wimmer, J., Williamson, I., Roe, P. (2014) The use of acoustic indices to determine avian species richness in audio-recordings of the environment. Ecol. Inform. https://doi.org/10.1016/j.ecoinf.2013.11.007

Tsuyuki, K., Sudo, S. (2004) The properties of wing and airflow of flying insects, in: 24th International Congress of the Aeronautical Sciences.

Wen, C., Guyer, D. (2012) Image-based orchard insect automated identification and classification method. Comput. Electron. Agric. https://doi.org/10.1016/J.COMPAG.2012.08.008

Zhang, B., Cheng, J., Han, Y., Ji, L., Shi, F. (2012) An acoustic system for the individual recognition of insects. J. Acoust. Soc. Am. https://doi.org/10.1121/1.3692236

Zhu, L.-Q., Ma, M.-Y., Zhang, Z., Zhang, P.-Y., Wu, W., Wang, D.-D., Zhang, D.-X., Wang, X., Wang, H.-Y. (2017) Hybrid deep learning for automated lepidopteran insect image classification. Orient. Insects. https://doi.org/10.1080/00305316.2016.1252805

Zilli, D., Parson, O., Merrett, G.V., Rogers, A. (2014) A hidden Markov model-based acoustic cicada detector for crowdsourced smartphone biodiversity monitoring. J. Artif. Intell. Res. https://doi.org/10.1613/jair.4434

Acknowledgments

We thank Dr. Fumio Sakamoto and Dr. Junichiro Abe for supporting our experiments and giving us the opportunity to record the flight sounds of insects.

Author information

Contributions

SK conceived the research; KI participated in the design and interpretation of the data; SK performed experiments and analysis. Both authors wrote the paper and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Manuscript editor: Monique Gauthier

Classification automatisée des abeilles et des frelons à l'aide d'une analyse acoustique de leurs sons de vol

Classification des espèces / Hyménoptères / apprentissage automatique / analyse acoustique

Automatisierte Klassifizierung von Bienen und Hornissen mittels akustischer Analyse der Fluggeräusche.

Artenklassifizierung / Hymenoptera / Maschinenlernen / akustische Analyse

Electronic supplementary material

Flight sound sample of A. mellifera

(WAV 140 kb)

Flight sound sample of B. ardens

(WAV 48 kb)

Flight sound sample of T. nipponensis

(WAV 56 kb)

Flight sound sample of V. s. xanthoptera

(WAV 90 kb)

About this article

Cite this article

Kawakita, S., Ichikawa, K. Automated classification of bees and hornet using acoustic analysis of their flight sounds. Apidologie 50, 71–79 (2019). https://doi.org/10.1007/s13592-018-0619-6

DOI: https://doi.org/10.1007/s13592-018-0619-6