Abstract

There is a widely shared view that planning actors require planning support systems (PSS) that are packaged in easy-to-understand formats and can be easily adapted to changing project demands. Recent studies confirm this and show that PSS are increasingly user-friendly. Still, little is known about the conditions under which they add value in practice. This paper tests three hypotheses about PSS performance and usability in an experimental study. A total of 133 students were exposed to different conditions of PSS facilitation flexibility and visualization hardware (tablets versus maptable). They performed identical strategy-making tasks consisting of divergence and convergence. In addition to measuring the quantity and quality of ideas, we assessed perceived process quality and usability of the PSS. Tablet groups performed better on idea generation and evaluated their solution to the planning problem more positively. In contrast, maptable groups performed better on ideational quality and evaluated their collaboration experiences more positively. Groups under indicator flexibility performed best in idea generation, while groups under no flexibility received the highest scores for ideational quality. Process quality scores were highest under no flexibility, followed by indicator-only flexibility. Findings suggest that tablets may be more effective for idea generation, an outcome of divergence, while maptables better support group communication, a key aspect of convergence. The study confirms the need for tools and methods that fit both individual and group work. Findings also indicate that identifying structured ways of applying adaptive PSS to the complex world of planning practice may be key to contextualizing such tools.


Introduction

This introduction is partly adapted from Pelzer et al. (2015) Usefulness of Planning Support Systems: Conceptual Perspectives and Practitioners’ Experiences. In Planning, Groningen. Reprinted with permission of the author.

The question of supporting planning and policy making with dedicated information is an old and important one (e.g. Geertman 2006; Gudmundsson 2011; Harris 1965; Innes 1998; Weiss 1977). However, the question is as pressing as ever. The challenges planners face are vast and complex. Yet, contrary to traditional, modernist beliefs about planning, these challenges likely cannot be addressed by simply conducting more research and providing more scientific information (Hajer et al. 2010; Rydin 2007). Research on the relationship between science and policy has revealed that the application of scientific insights in policy making is far from straightforward (e.g. Amara et al. 2004; Weiss 1977). For information to be applied fruitfully, it has to connect to the interactive, participatory and fuzzy nature of planning (cf. Klosterman 1997). Consensus about what knowledge is used is at least as important as its scientific validity (van de Riet 2003).

Dedicated tools to support planners in their tasks are increasingly available, captured under the heading of Planning Support Systems (PSS). These can be defined as: ‘... geo-information technology-based instruments that incorporate a suite of components that collectively support some specific parts of a unique professional planning task’ (Geertman 2008, p.217 - emphasis in original). To do so, they actively feed explicit/codified information (often provided by computer models) into planning processes. PSS, especially those designed to support the more strategic planning phases, are increasingly designed as visually attractive and interactive platforms that aim to structure the mutual exchange of knowledge among a diverse group of actors.

However, although many waves of excitement about such instruments have been observed, they play a modest role in planning practice at best (Klosterman 1997; Vonk et al. 2007). A persistent mismatch between the information models and architectures of PSS (Vonk and Ligtenberg 2010) and the information needs of strategy-making processes seems to stifle this long-anticipated progress. Planners keep seeing PSS as overly detailed and precise, mathematically complex, rigid, slow, unintelligible and not transparent enough to be compatible with the unpredictable and dynamic nature of strategy-making processes (Vonk et al. 2005). Vonk et al. (2007), for instance, show that Dutch provinces have a rather low uptake of geo-information based systems, whereas Goodspeed (2013a) emphasizes that there are vast differences in the extent to which American metropolitan planning organizations have embedded support tools in their organization. Beyond the frequency of use, little is known about the quality of use and the added value of PSS for planning practice.

Recent scholarship has begun to address this gap by paying dedicated attention to the performance of PSS in planning (Goodspeed 2016; Pelzer 2017; te Brömmelstroet 2013; te Brömmelstroet 2016). This includes, for instance, the extent to which tools influence the learning that takes place (Goodspeed 2016). One important measure is the usability of a PSS, for instance operationalized as the transparency or understanding of the underlying model (Pelzer 2017).

In this paper we build on this conceptual work to understand the performance effects of two recent trends in PSS applications in the Netherlands. First, PSS are increasingly used not as desktop applications but as part of a dynamic workshop or process in which a range of stakeholders engage. Both te Brömmelstroet and Schrijnen (2010) and Vonk and Ligtenberg (2010) suggest that the process of developing the underlying model should be both flexible and facilitated, allowing for input from these stakeholders, for instance in selecting indicators and customizing workspaces. Following Pelzer et al. (2015), such flexible facilitation strategies can have profound effects on PSS performance. Second, planning includes a range of tasks that influence the role of the PSS. This is also cast as the task-technology fit (Goodhue and Thompson 1995). New visualization technologies entering the PSS domain include maptables and tablets. They aim to make knowledge more interactive and can be used in a dynamic workshop setting. Since most PSS studies were done before the advent of such hardware, we know little about how these innovative techniques change PSS performance.

Existing hypotheses about PSS performance are typically the result of testing ‘one-off’ technologies or academic prototypes used within a specific planning context of a single-case study (Brail 2008; Geertman and Stillwell 2003, 2009; Geertman et al. 2013; Klosterman 2001; Biermann 2011; Demetriou et al. 2012; Marsden 2015; Pettit et al. 2015). Although we acknowledge the merit of this, we argue that the value of such studies increases when they are combined with control-rich studies in which the performance of PSS is systematically measured (te Brömmelstroet 2009, 2013). Levy (2008) similarly states that hypothesis-generating case studies (i.e. typical PSS case studies), ‘examine one or more cases for the purpose of developing more general theoretical propositions, which can then be tested through other methods, including large-N methods’ (p.5). Here, we aim to test the two abovementioned PSS trends in an experimental study.

The Operationalization of Key Terms section introduces the key terms and concepts that informed the design of the study. In the Research Design section the study is introduced. After presenting the results, the paper closes with a Discussion of the Findings followed by Conclusions and Reflections.

Operationalization of Key Terms

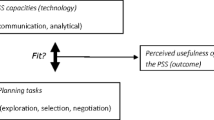

According to several authors, the incompatibility of rigid, technology-oriented PSS with the flexible and unpredictable nature of planning tasks and needs is rooted in social aspects that cannot be overcome by just improving the computational capabilities of these tools (e.g. te Brömmelstroet 2012). Strategic urban problems do not have an optimal solution and are increasingly political and contested. Improving the strategic capacity and ability of planning actors requires a process of shared ‘enlightenment’ and the creation of ‘negotiated knowledge’ (Amara et al. 2004; Gudmundsson 2011; Healey 2007). Consequently, performance measures of PSS have shifted from a strict focus on their technical functionality to their performance with respect to specific planning tasks. PSS performance comprises two interrelated concepts, utility and usability (for further discussion see Pelzer 2017). According to Nielsen (1993), ‘utility is the question of whether the functionality of the system in principle can do what is needed, and usability is the question of how well users can use that [utility] functionality’ (p.25). Increased attention to usability has contributed to the growing use of PSS applications on tablets and the development of specialized collaborative planning hardware, for example the maptable.

In addition to these technological advancements, it is necessary to approach the use of PSS holistically, by both looking at the instrumental characteristics and the process in which the application should be embedded. The effort to embed these technologies in planning practice is part of a socio-technical discussion surrounding the contextualization of PSS (e.g. te Brömmelstroet and Schrijnen 2010; Vonk and Ligtenberg 2010). This discussion shows that assessing PSS performance solely on the fit between the technical functionality of a system and the planning task is inadequate. The PSS contextualization debate is broadening the performance discussion to include the conditions of use. To the authors’ knowledge, the causal relationship between PSS performance and the conditions of PSS use has yet to be explored in an experimental setting. In this section we introduce the process and outcome measures that we use to assess PSS performance during strategy making. We then formulate two hypotheses about how different conditions of use will impact PSS performance and one hypothesis about the usability of the PSS and the session in general.

Dependent Variables: PSS Performance of Strategy-Making Tasks

In collaborative planning settings, since outcome and process are tightly intertwined (e.g. Innes and Booher 1999), the application of PSS could (or should) add value to both. Following earlier scholarship (Pelzer 2017; te Brömmelstroet 2013), PSS performance can be measured along two outcome dimensions (variety and quality of ideas) and at two process levels (individual and group).

During strategy making, actors engage in tasks that encourage shared learning and knowledge exchange for the purpose of identifying and structuring a planning problem. The aim is to reach consensus about the planning problem to steer decision making (Carsjens 2009; Friend and Hickling 2005). It is crucial that individual issues and interests have been fully explored and differences have been resolved creatively (Innes and Booher 1999). Following the Group Support Systems (GSS) literature, this can also be couched in terms of ‘divergence’ (opening up to different ideas or knowledge claims) and ‘convergence’ (closing down on different ideas or knowledge claims). Dennis and Wixom (2002), for example, explain that actors are encouraged to diverge to generate a variety of ideas and then to reach consensus by converging on the best alternative(s). The assumption is that the quality of ideas selected during convergence is influenced by the generation of a large, diverse set of ideas from which to select.

In planning, the rational, linear and expert-driven approach to producing ‘better’ decisions has been replaced by a process orientation (de Roo and Rauws 2012; Healey 2003; Salet and Faludi 2000). From this orientation it is generally accepted that improving divergence and convergence tasks can benefit outcomes (Dennis and Wixom 2002; Phillips and Phillips 1993). The strategic change that accompanies such tasks often occurs in spurts and is evoked by available resources (Mintzberg 1978). In collaborative planning contexts, these spurts often take place in group settings. Workshops not only provide a group setting for actors to engage in strategy making, but also serve to test tools and to obtain indications of the potential benefits of tool use (Janssen et al. 2006). This is why numerous studies have explored the influence of planning and decision support technologies in socio-technical workshop settings that involve collaboration between PSS developers and users (see Carsjens 2009; Goodspeed 2013b; Pelzer et al. 2016; te Brömmelstroet and Bertolini 2008; te Brömmelstroet and Schrijnen 2010; Vonk and Ligtenberg 2010). The added value of these technologies for group work is well documented, yet little is known about their influence on individual work. Evidence shows that divergent thinking should be performed by ‘nominal’ groups of noninteracting individuals rather than in an open forum of discussion and idea exchange (Lamm and Trommsdorff 1973; Vennix 1992). Thus, the performance of workshops should be analyzed at both the group level and the individual level (cf. Pelzer et al. 2014).

Dennis and Wixom (2002) suggest measuring GSS performance based on factors such as the number of ideas generated, decision quality and participant satisfaction. While counting the number of generated non-redundant ideas is straightforward, measuring decision quality is more difficult. This is because convergence on a decision requires participants to reach consensus on a course of action (Dennis and Wixom 2002). If consensus is absent, external quality assessment based on ratings can be used (Lamm and Trommsdorff 1973). In their metastudy on measuring ideational quality, Dean et al. (2006) suggest using a multidimensional framework to capture the different aspects of decision quality, such as innovativeness, implementability, completeness and effectiveness (see p. 663). When assessing process quality, particularly in the context of PSS use, explanatory insights can be gained from exploring participant perceptions of the strategy-making process at the group and individual level (see e.g. Rouwette et al. 2009).

Independent Variables: Facilitation Flexibility and Visualization Hardware

For optimal performance in planning, PSS would ideally be flexible and user-friendly systems that help the user (1) to select an appropriate method from a toolbox of analysis and forecasting tools, (2) to link these tools to relevant data and (3) to run these models in different graphical and interactive formats (Klosterman 2001). In addition to these specifications, two significant factors influence PSS performance. These are the degree of flexibility permitted by PSS facilitation (the conditions of use) and the type of hardware on which interaction with the tool is organized.

Facilitation Flexibility

Hypothesis 1

Less facilitation flexibility leads to more generated ideas, higher scores on idea quality and higher process satisfaction scores both at the group level (communication, shared language and consensus) and at the individual level (insight) (based on Pelzer et al. 2015).

In PSS literature, the term ‘flexibility’ refers either to the extent to which the information model or tool can be influenced by the user (Geertman et al. 2013) or to limitations placed by facilitation on when a PSS is used and which data or visualization method will be used (Pelzer et al. 2015). The process of model building is to a large extent about making choices (Meadows and Robinson 2002; Vennix and Gubbels 1992), and in most PSS many of these choices are made long before the PSS is applied in practice (Petch and Reeve 1999; Vonk and Ligtenberg 2010). Newer, more flexible information models solicit user involvement to build queries, select from options and work with individual characteristics (for examples, see Geertman et al. 2013). In this case, ‘flexibility means that PSS should leave room for assumptions and outcomes to be adjusted in such a way that they can address a (specified) range of planning issues. This can create more room for a real and realistic mutual learning process between PSS developers and planners’ (te Brömmelstroet 2012, p.103).

In this study, we are primarily interested in the effects of facilitation on PSS performance. Since most stakeholders do not have the technical knowledge to use PSS (Vonk 2006), interaction with a PSS should be facilitated. In a workshop setting, facilitation mainly involves encouraging PSS use while preventing the PSS from dominating group discussion (Pelzer et al. 2015). The facilitator can determine the conditions of PSS use (facilitation flexibility), for instance, deciding when to engage with the PSS and what indicators to use in a workshop. Khalifa et al. (2002) similarly examined the effects of content and process facilitation restrictiveness on GSS performance. Though facilitation is acknowledged as an integral part of a successful PSS workshop, little empirical research has been conducted into the effects of restrictions on facilitation flexibility. Restrictions on flexibility in this study included both process and indicator (content) flexibility. See The Facilitation Flexibility and Visualization Hardware Conditions section.

Visualization Hardware

Hypothesis 2

Maptable use leads to higher process satisfaction at both the group level and the individual level compared to tablet use (Pelzer et al. 2014).

Whereas initial PSS applications involved single-user desktop computers and projectors, a whole gamut of visualization techniques is now available. These techniques have been developed based on principles of usability and collaboration. Recent additions are tablets and maptables. Tablets provide user-friendly touch interfaces and display applications, often for single-user activities. After Apple brought tablets to the consumer market with the 2010 launch of the iPad, tablets found their way into the domain of planning to help professionals engage with spatial information. A recent survey of 133 planners across California found that respondents most often used email, search engines and GIS/mapping on their mobile devices, i.e. smartphones and tablets (Riggs and Gordon 2015). Empirical evidence is still lacking concerning the performance of PSS applications on tablets, for example for idea generation, individual and group learning or gaining insight into the problem. Maptables, on the other hand, were explicitly developed to support collaborative planning situations (for an early application, see Hopkins et al. 2004). Maptables are map-based touch tables ‘particularly tailored to support collaborative planning processes’ (Pelzer et al. 2014, p.18). Experienced users indicate that maptables facilitate dialogue and shared learning and also benefit learning at the individual level (Pelzer et al. 2014).

Usability

Hypothesis 3

Perceived PSS usability is positively correlated with process satisfaction (see te Brömmelstroet 2016).

Participant perceptions of usability are relevant when assessing PSS workshop performance. Usability measures how well users can use the [utility] functionality of a system (Nielsen 1993). The quality of the interaction between the user and a product is in part determined by the context of use (van der Bijl-Brouwer 2012). The emphasis on context means that the user experience of a product is in part influenced by the circumstances surrounding its use. In the socio-technical application of PSS, as described by Vonk and Ligtenberg (2010), usability serves as a measure of both process and tool. It is therefore reasonable to expect that, across the different conditions of visualization hardware and facilitation flexibility, the usability of the PSS correlates with process satisfaction. A recent study into the links between usability and perceived process quality supports the view that these are positively correlated (te Brömmelstroet 2016).

Research Design

In this study we randomly assigned groups of planning students to perform a typical strategy-making exercise that involved the tasks of divergence and convergence. All groups performed these tasks using an identical setup and were fully supported by state-of-the-art PSS software, a facilitator and a chauffeur (see The Procedure section). Following the three hypotheses, we again randomly applied different use conditions that were expected to influence the performance of the PSS: three levels of process and indicator flexibility and two types of visualization hardware (see The Facilitation Flexibility and Visualization Hardware Conditions section). We tested whether these different conditions had systematic effects on the performance of the groups during the divergence and convergence tasks (see Performance Variables section).
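The paper does not describe the mechanics of the randomization. As a minimal sketch, assuming each group is placed in one cell of the three-by-two (flexibility by hardware) design and that the number of groups per cell is fixed in advance (which preserves an unequal split such as fewer tablet groups), random assignment could look like this. The group labels, cell counts and the function name `assign_conditions` are hypothetical.

```python
import random

# Factor levels follow the study design; counts and labels below are hypothetical.
FLEXIBILITY = ("none", "indicator", "indicator_and_process")
HARDWARE = ("tablet", "maptable")


def assign_conditions(groups, cell_counts, seed=None):
    """Randomly map each group to a (flexibility, hardware) cell.

    `cell_counts` fixes how many groups each cell receives, so unequal
    allocations (e.g. fewer tablet groups) are preserved exactly.
    """
    # One slot per group place in each cell, e.g. 3 slots for a 3-group cell.
    slots = [cell for cell, n in cell_counts.items() for _ in range(n)]
    if len(slots) != len(groups):
        raise ValueError("cell counts must sum to the number of groups")
    rng = random.Random(seed)
    rng.shuffle(slots)  # the random step: which group lands in which slot
    return dict(zip(groups, slots))


# Hypothetical example: 12 groups, one tablet group per flexibility level
# (a quarter of all groups) and three maptable groups per level.
counts = {(f, "tablet"): 1 for f in FLEXIBILITY}
counts.update({(f, "maptable"): 3 for f in FLEXIBILITY})
assignment = assign_conditions([f"group_{i:02d}" for i in range(1, 13)], counts, seed=42)
```

Fixing the cell sizes first and then shuffling the slots guarantees the intended allocation, which independent coin-flip assignment per group would not.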

The study population consisted of 133 Urban Planning Bachelor students from the University of Amsterdam (68) and Utrecht University (65). These numbers gave us the repetition needed to control for many contextual variables and to detect systematic differences. We are aware that the research design is not ‘representative’ of the real world (for a discussion, see Araujo et al. 2007). Although the students are future planning participants, they do not yet have a (good or bad) history with PSS, power relations or real stakes.

The Procedure

Preparation

The research team consisted of two of the authors accompanied by a chauffeur (operating the PSS) and a facilitator (facilitating the group process), the latter two from the Dutch knowledge institute TNO. In both universities, the experiment was part of a regular course in an undergraduate program in urban planning. The students were briefed in a plenary session. They were informed that they were going to take part in a design competition to develop solutions for a typical urban planning problem: an existing road is causing a number of negative externalities that hamper the development potential of an urban infill location in Utrecht. The PSS was introduced by showing an online video. The students were told that their performance was going to be measured based on the number of ideas they could generate and on the best solution (judged by external experts).

The PSS

Urban Strategy, a state-of-the-art software package recently developed by TNO, was used as the PSS. It offers a wide range of computer models covering urban dynamics ranging from traffic to air quality and groundwater levels. These models are linked in an architecture that allows the PSS chauffeur to access and run the models from a remote location over a Wi-Fi connection. Each model is simplified to the extent that a complete model run takes less than a minute, so that the effect of an intervention can be tested quickly. The output is presented in tables, graphs, and 2D and 3D maps. The instrument aims to support complex urban strategy-making sessions in practice. The following indicators were the most important during the workshop:

- Noise (40 dB): the extent to which traffic and industry cause noise nuisance. This was depicted in a categorized ‘contour map’, showing how different areas experience different amounts of noise measured in decibels (dB).
- Air quality (PM10): the extent to which traffic and industry affect air quality. This was depicted in a categorized ‘contour map’, showing how different areas experience different amounts of air pollution as measured in particulate matter (PM).

In some sessions these indicators were discussed step by step (low facilitation flexibility); in other sessions the participants could choose for themselves how to use these indicators (high facilitation flexibility). In this experiment the TNO facilitator, also considered part of the PSS, took responsibility for the interaction among the participants and between them and Urban Strategy. The facilitator followed a strict script for this interaction, which was developed by the researchers. This script was altered per treatment (see The Facilitation Flexibility and Visualization Hardware Conditions section for details). The TNO chauffeur operated the models.

The Session

Each group was invited to the session room for a specific timeslot of 40 min. The PSS facilitator welcomed them and then repeated the abovementioned challenge of the design competition. Participants were told to develop as many solutions as possible and to select the three solutions that seemed most effective, innovative and realistic. After this, the facilitator mainly supervised the group process and kept the group on schedule.

The Facilitation Flexibility and Visualization Hardware Conditions

Three Degrees of Flexibility

In GSS literature, Khalifa et al. (2002) examined the effects of content and process facilitation restrictiveness on GSS performance. Similarly, we are interested in how restrictions on facilitation flexibility influence PSS performance. In the sessions, we simulated three different levels of process and indicator (content) facilitation flexibility:

- None: the participants were informed that Urban Strategy could perform many analyses, but that they were going to work with the PM10 contour map and the 40 dB noise contour map. The process structure of the session was also given to them (10 min for brainstorming, 10 min for calculation, 10 min to extend/refine the ideas).
- Indicator: the participants were informed that Urban Strategy could perform many analyses. They were presented with more detail on maps showing different indicators, such as noise quality, air quality and traffic flows, and asked to self-select two to work with. The same process structure of the session was again given.
- Indicator and process: the participants were informed that Urban Strategy could perform many analyses. They were presented with more detail on maps showing different indicators, such as noise quality, air quality and traffic flows, and asked to self-select two to work with. They were also asked to design their own process structure for the session (especially when they wanted to interact with the PSS).

Visualization Hardware

One quarter of the groups were supplied with tablets to conduct the exercise; the other groups worked with a maptable (see Table 1). The software was exactly the same for both groups, and in both groups the chauffeur helped the students conduct impact analyses with Urban Strategy. In the tablet groups the students typically answered a specific question independently, whereas in the maptable groups these questions were answered collectively (as visualized on the maptable).

Performance Variables

In this study we measured three variables of performance during the divergence and convergence tasks. They are operationalized based on a study on the performance of brainstorming groups (Rietzschel et al. 2006) and a paper on performance during Group Support Systems use (Dennis and Wixom 2002): productivity or number of ideas generated, ideational quality and the satisfaction of the participants (quality of process). We then measured the usability of the PSS and the session in general.

Idea Generation

During divergence, each group listed as many ideas as they could on a blank form provided to record the group ideas. The ideas were counted after redundant ideas had been discarded (see Lamm and Trommsdorff 1973).
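In the study, redundancy was judged by the researchers. The sketch below only approximates the mechanical part of that step, assuming ideas are transcribed as short text strings: it normalizes case, punctuation and whitespace and counts the distinct remainder. The function names are hypothetical, and differently worded ideas that mean the same thing would still require human judgment.

```python
import re


def normalize(idea: str) -> str:
    """Lower-case, replace punctuation with spaces and collapse whitespace."""
    text = re.sub(r"[^\w\s]", " ", idea.lower())
    return " ".join(text.split())


def count_unique_ideas(ideas):
    """Count ideas after discarding literal repeats (after normalization).

    Only exact repeats are caught; semantically redundant ideas phrased
    differently are not detected by this sketch.
    """
    seen = set()
    for idea in ideas:
        key = normalize(idea)
        if key:  # skip empty lines on the form
            seen.add(key)
    return len(seen)
```

For example, `count_unique_ideas(["Move the road underground", "move the road underground!", "Add a noise barrier", "  "])` counts two ideas.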

Ideational Quality

The quality of an idea is operationalized as a combination of effectiveness, implementability, innovativeness and completeness (Dean et al. 2006). During the convergence task, the students were asked to select their three best ideas. These three ideas were rated by two field experts with extensive experience in the domain on these four qualities using a five-point Likert scale. The raters were blind to both the treatment conditions and our hypotheses.
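The group-level quality score described above can be sketched as a per-dimension mean over the three selected ideas, assuming each rater's scores are stored as one dictionary per idea (a hypothetical data layout). The paper does not state which agreement statistic was used for the second rater; a Pearson correlation between the two raters' scores is shown here as one simple option, while intraclass correlation or weighted kappa are common alternatives.

```python
from statistics import mean

# Dimensions from Dean et al. (2006), each rated on a five-point Likert scale.
DIMENSIONS = ("effectiveness", "implementability", "innovativeness", "completeness")


def group_quality(ratings):
    """Mean score per dimension over a group's three selected ideas.

    `ratings` is a list of dicts (one per idea) from a single rater,
    mapping each dimension to a 1-5 score.
    """
    return {d: mean(idea[d] for idea in ratings) for d in DIMENSIONS}


def pearson(x, y):
    """Pearson correlation, e.g. between two raters' scores for the same items."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5
```

To check agreement, the scores of both raters would be listed in the same order (for instance every group and dimension) and passed to `pearson`.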

Quality of the Process

We evaluated perceived process quality at the group and individual level based on 20 statements (see Appendix 1) using a seven-point Likert scale. Following studies on the quality of group processes (mainly Rouwette et al. 2009) and PSS performance (mainly te Brömmelstroet 2013), these statements reflected the process quality dimensions: general reaction, insight, communication, shared language, consensus and efficiency gains.

Usability of PSS

Finally, we measured perceived PSS usability with 14 statements (based on Pelzer 2017; Pelzer et al. 2015; te Brömmelstroet 2016; see Appendix 2) using a seven-point Likert scale. These statements related to aspects of the tool and tool facilitation such as transparency, output clarity, credibility, focus, level of detail, process organization and chaperoning. Process organization and chaperoning of the tool are also related to the process quality variables, since they measure the usability of the PSS workshop setup and process support.

Analytical Approach

We applied a two-way ANOVA in SPSS Statistics to compare PSS performance across the different conditions of use. This procedure also detects interaction effects between the independent variables on the dependent variable. The mean output values are provided in the Results section. To analyze the divergence task, we compared the mean number of ideas generated by groups according to their treatment. For the convergence task, the four ideational quality factors were analyzed by comparing the mean score of the top three ideas selected by each group. We analyzed the ideational quality scores given by one external rater and used the scores given by the second rater to check interrater reliability. We also analyzed student responses to each of the perceived quality of process and usability statements. In the paper we report the statements that scored significantly differently for quality of process and usability, and we provide comprehensive lists of mean values in Appendix 3. Additionally, we looked for interactions between the flexibility and hardware conditions on each of the dependent variables. Finally, we conducted a bivariate correlation test to assess whether there were correlations between the measures of perceived PSS usability and quality of the process. We provide a comprehensive list of the correlation values in Appendix 4.
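To make the two-way ANOVA concrete, the sketch below computes the F ratios for two factors (here, flexibility and hardware) and their interaction. It assumes a complete, balanced design with the same number of observations in every cell; the study itself was unbalanced (a quarter of the groups used tablets), for which SPSS by default uses Type III sums of squares rather than the simple decomposition shown here. The function name and data layout are hypothetical, and p-values would come from the F distribution (e.g. `scipy.stats.f.sf(F, df_effect, df_within)`).

```python
from itertools import product
from statistics import mean


def two_way_anova(cells):
    """Two-way ANOVA with interaction for a balanced design.

    `cells` maps (level_a, level_b) to a list of observations; every cell must
    hold the same number (>= 2) of observations. Returns (F, df) for factor A,
    factor B and the A x B interaction, plus the within-cell df.
    """
    levels_a = sorted({a for a, _ in cells})
    levels_b = sorted({b for _, b in cells})
    n = len(next(iter(cells.values())))
    if n < 2 or any(len(cells.get((a, b), [])) != n for a, b in product(levels_a, levels_b)):
        raise ValueError("design must be complete and balanced with n >= 2 per cell")

    grand = mean(x for obs in cells.values() for x in obs)
    cell_mean = {k: mean(v) for k, v in cells.items()}
    # In a balanced design, marginal means equal the means of the cell means.
    mean_a = {a: mean(cell_mean[(a, b)] for b in levels_b) for a in levels_a}
    mean_b = {b: mean(cell_mean[(a, b)] for a in levels_a) for b in levels_b}

    ka, kb = len(levels_a), len(levels_b)
    ss_a = kb * n * sum((m - grand) ** 2 for m in mean_a.values())
    ss_b = ka * n * sum((m - grand) ** 2 for m in mean_b.values())
    ss_cells = n * sum((m - grand) ** 2 for m in cell_mean.values())
    ss_ab = ss_cells - ss_a - ss_b  # interaction: cell variation not explained by A or B
    ss_within = sum((x - cell_mean[k]) ** 2 for k, v in cells.items() for x in v)

    df_a, df_b = ka - 1, kb - 1
    df_ab, df_within = df_a * df_b, ka * kb * (n - 1)
    ms_within = ss_within / df_within
    return {
        "A": (ss_a / df_a / ms_within, df_a),
        "B": (ss_b / df_b / ms_within, df_b),
        "AxB": (ss_ab / df_ab / ms_within, df_ab),
        "df_within": df_within,
    }
```

With three flexibility levels and two hardware types, factor A would have 2 degrees of freedom, factor B 1 and the interaction 2.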

Results

Divergence

The analysis of the divergence task showed little effect of PSS flexibility and visualization hardware conditions on the number of ideas generated. Though differences among the flexibility conditions are negligible, the findings indicate some impact of the visualization hardware conditions. Groups that used tablets generated on average more ideas than those that used the maptable (see Table 2). None of these effects was statistically significant, in part because of the limited number of groups in each condition.

Convergence

The analysis of the convergence task showed scores on the four qualities of the selected ideas that were around, and mostly slightly below, the midpoint of the five-point Likert scale. For the criteria Implementability, Innovativeness and Completeness, quality scores increased slightly as flexibility decreased (see Table 3). Scores for visualization hardware use were split: tablets performed better on Effectiveness and Innovativeness, and maptables performed better on Implementability and Completeness. None of these effects was statistically significant, again in part because of the limited number of groups in each condition.

Quality of Process

The students were generally positive about the PSS-supported strategy-making workshop. Almost all process quality statements for the 133 participants show a positive score, significantly higher than 4. Four statements show statistically significant differences among the flexibility conditions or between the hardware conditions, and one statement shows an interaction between the hardware and flexibility conditions. Findings indicate that the conditions had varying influence at the two levels of analysis. The no flexibility condition was perceived more positively, as evidenced at the individual level by the statement ‘the result offers a [real solution] to the problem’ and at the group level by the statement ‘the other participants understand my view of the problem [my vision clear]’. Although other differences were not statistically significant, they pointed in the same direction. At the group level, maptable use outperformed tablet use on the statements ‘I now understand how the other participants view the problem [problems of others]’ and ‘during the session a [platform] emerged that supported the sharing of ideas’ (see Table 4). The statement ‘the result of the session is based on [correct assumptions] about the urban system’ shows an interaction effect between the flexibility and hardware conditions (interaction values not displayed in table), whereby tablet use under the no flexibility condition resulted in the highest score (M = 5.69).
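The footnote specifying the test behind ‘significantly higher than 4’ is not reproduced here. One common choice is a one-sample t-test of each statement's scores against the neutral midpoint of the seven-point scale, sketched below under that assumption (the function name is hypothetical). Because Likert responses are ordinal, a Wilcoxon signed-rank test is a frequently used alternative; SciPy's `scipy.stats.ttest_1samp` offers the same t-test with p-values.

```python
from math import sqrt
from statistics import mean, stdev

NEUTRAL = 4  # midpoint of the seven-point Likert scale


def one_sample_t(scores, mu=NEUTRAL):
    """t statistic and degrees of freedom for H0: population mean == mu.

    A positive t means the statement's mean score lies above the neutral
    midpoint; the p-value follows from the t distribution with n - 1 df.
    """
    n = len(scores)
    t = (mean(scores) - mu) / (stdev(scores) / sqrt(n))
    return t, n - 1
```

For instance, the scores `[5, 6, 5, 6]` give a mean of 5.5 and a t statistic of about 5.2 with 3 degrees of freedom.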

The findings indicate an inverse relationship: decreased facilitation flexibility corresponded to higher average scores on three of the five statistically significant statements (see Fig. 1). This inverse relationship held for 14 of the 20 statements. The highest agreement was given to the group-level statement ‘I was able to [share my ideas] and opinion’ under the no flexibility condition, while the lowest agreement was given to the group-level statement ‘during the session we developed a [shared professional language]’ under the indicator and process flexibility condition (see Appendix 3).
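One way to operationalize this inverse relationship is to check, statement by statement, whether mean scores rise monotonically as facilitation flexibility decreases. The sketch below assumes that definition (the paper does not state its exact criterion); only the condition labels follow the text, and the mean scores are invented for illustration.

```python
# Facilitation flexibility conditions, ordered from most to least flexible
# (labels follow the text; the strict ordering is an assumption of this sketch).
CONDITIONS = ("indicator and process", "indicator only", "none")

# Hypothetical mean scores per statement, one value per condition above.
# These numbers are invented and are NOT the study's results.
statement_means = {
    "share my ideas": (5.6, 5.9, 6.2),
    "shared professional language": (4.1, 4.4, 4.3),
    "real solution": (4.8, 5.0, 5.4),
    "my vision clear": (5.0, 5.1, 5.5),
}


def is_inverse(means):
    """Return True if scores strictly increase as flexibility decreases."""
    if len(means) != len(CONDITIONS):
        raise ValueError("expected one mean per flexibility condition")
    return all(a < b for a, b in zip(means, means[1:]))


inverse = [name for name, means in statement_means.items() if is_inverse(means)]
print(f"{len(inverse)} of {len(statement_means)} statements show the inverse pattern")
```

A looser criterion, such as requiring only that the no flexibility condition score highest, would count more statements; the choice should be reported alongside the count.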

Usability

Almost all of the 14 usability statements for the 133 participants show a positive score, significantly higher than 4 (see Note 4). One statement, ‘the [level of detail] of the maps was sufficient’, shows statistically significant differences between the visualization hardware conditions (see Table 5). Here, maptable use outperformed tablet use, while differences among the three facilitation flexibility conditions were not significant.
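The hardware comparison on a single statement can be sketched as a two-sample test. The paper does not say which test it used; the example below assumes Welch's t-test, which does not require equal variances in the two groups, with a normal approximation for the two-sided p-value. The scores are invented for illustration and are not the study's data.

```python
from math import sqrt
from statistics import NormalDist, mean, variance

# Hypothetical scores on 'the level of detail of the maps was sufficient'
# (invented values, 60 respondents per hardware condition, NOT the study's data).
maptable = [6, 5, 6, 7, 5, 6, 6, 5, 7, 6] * 6
tablet = [5, 4, 5, 6, 4, 5, 5, 6, 4, 5] * 6


def welch_t(a, b):
    """Welch's t statistic for the difference in means of two samples."""
    standard_error = sqrt(variance(a) / len(a) + variance(b) / len(b))
    return (mean(a) - mean(b)) / standard_error


t = welch_t(maptable, tablet)
# Two-sided p-value via a normal approximation (adequate for ~60 per group).
p = 2 * (1 - NormalDist().cdf(abs(t)))
print(f"maptable={mean(maptable):.2f}, tablet={mean(tablet):.2f}, t={t:.2f}, p={p:.4f}")
```

With the three-level flexibility factor added, a two-way ANOVA would be the natural extension, since it also tests the hardware × flexibility interaction reported above.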

A bivariate correlation test of usability and quality of process yielded numerous significant correlations between the two datasets (see Appendix 4). Roughly three-quarters (72.9%) of individual-level quality of process responses (strongly) correlated with usability responses, compared to 57.9% of group-level quality of process responses. In most instances, the five statistically significant quality of process statements (strongly) correlated with the usability statements (see Table 6). ‘The [level of detail] of the maps was sufficient’, the only significant usability statement, and eight other usability statements (strongly) correlated with all five significant quality of process statements. By contrast, ‘engagement with the tool was well supported [tool support]’ correlated only with the statements ‘the result of the session is based on [correct assumptions] about the urban system’, ‘the other participants understand my view of the problem [my vision clear]’ and ‘during the session a [platform] emerged that supported the sharing of ideas’.
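The share of (strongly) correlated statement pairs can be computed from the pairwise correlations between the two sets of statements. The sketch below assumes Pearson correlations and a cut-off of |r| ≥ 0.5 for a 'strong' correlation, a common rule of thumb; the paper does not report its threshold, and a rank-based coefficient such as Spearman's may suit Likert data better. The item names echo the bracketed labels in the text, but the six respondents' answers are hypothetical.

```python
from itertools import product
from math import sqrt


def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length score lists."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Hypothetical answers from six respondents (NOT the study's data).
process_items = {
    "trust outcome": [5, 6, 4, 7, 5, 6],
    "insight increased": [4, 6, 5, 7, 4, 6],
}
usability_items = {
    "level of detail": [5, 6, 4, 7, 4, 6],
    "tool support": [6, 4, 5, 5, 6, 4],
}

STRONG = 0.5  # assumed cut-off for a "strong" correlation

# Every (process statement, usability statement) combination.
pairs = list(product(process_items.items(), usability_items.items()))
strong = [
    (p_name, u_name)
    for (p_name, p_scores), (u_name, u_scores) in pairs
    if abs(pearson_r(p_scores, u_scores)) >= STRONG
]
share = len(strong) / len(pairs)
print(f"{share:.1%} of statement pairs correlate strongly: {strong}")
```

Because the check uses |r|, a strong negative correlation (here between the hypothetical 'insight increased' and 'tool support' items) also counts; whether it should depends on how 'strongly correlated' is defined.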

Discussion of Findings

Building on theoretical expectations and empirical results from case studies of PSS use, this study formulated three hypotheses about the expected effect of facilitation flexibility and visualization hardware on two important tasks of strategy making: divergence and convergence. We designed a procedure in which groups of students, supported by a state-of-the-art PSS, were asked first to diverge by generating as many planning solutions as possible and then to converge by selecting among those solutions. We randomly varied the conditions of PSS use, enabling us to test the effect of these differences on the quality of divergence and convergence and on perceptions of process quality and of the usability of both tool and process.

Hypothesis 1

Less facilitation flexibility leads to more generated ideas, higher scores on idea quality and higher process satisfaction scores both at the group level (communication, shared language and consensus) and at the individual level (insight) (based on Pelzer et al. 2015).

Contrary to expectations, the measured effects of facilitation flexibility on idea generation and idea quality were not statistically significant. The different conditions of facilitation flexibility did, however, significantly influence perceived quality of process. Here, we found numerous instances where higher process quality was linked to limited facilitation flexibility; in most instances, the no flexibility condition outscored the other flexibility conditions. Three of the four ideational qualities showed a similar trend. The no flexibility condition consistently outscored the other facilitation flexibility conditions across the different measures of PSS performance in this study, from process quality at both the group and individual levels to the outcomes, both in terms of the number of ideas generated (quantitative) and ideational quality (qualitative). A caveat here is that we did not apply the full range of facilitation treatments, which might include no facilitation at all or deliberate interventions triggered by the flow of the process (cf. Pelzer et al. 2015).

Hypothesis 2

Maptable use leads to higher process satisfaction at both the group level and the individual level compared to tablet use (Pelzer et al. 2014).

The visualization hardware results show that while quality of process at the group level was higher for maptable use, tablet scores were higher at the individual level. This only partly confirms the expected better performance of maptables. Maptables performed better on statements that evaluated understanding, e.g. ‘I now understand how the other participants view the problem [problems of others]’ and ‘during the session a [platform] emerged that supported the sharing of ideas’. However, there was some indication that maptable users may trust their outputs less, as seen in the higher scores tablet users gave on the individual-level statement ‘I [trust that the outcome] is good’. Although the differences were not statistically significant, tablet use also outscored maptable use on related statements such as ‘the result offers a [real solution] to the problem’ and ‘the session produced [usable results]’.

Interestingly, more ideas were generated by the tablet groups, and ideational quality scores were mixed between the two hardware types. Taken together, these findings are compelling: they point to a potential causal relationship between the outcomes of divergence and convergence. The higher ideational quality scores of tablet groups for Effectiveness and Innovativeness may be explained by their having generated more ideas from which to select, while tablet use may also have contributed to more ‘radical’ individual thought. By comparison, the maptable may have fostered a stronger atmosphere of shared learning, resulting in more Complete and Implementable ideas. While these findings substantiate the performance of maptables at the group level for communication and shared learning, they also indicate a need to investigate further alternatives that support divergence tasks and individual needs during strategy making. The findings also suggest that the hardware on which PSS software runs may influence user perceptions of process quality as well as process outcomes.

Hypothesis 3

Perceived PSS usability is positively correlated with process satisfaction (see te Brömmelstroet 2016).

Finally, we found many (strong) correlations between participants’ perceptions of quality of process and usability. These correlations were more prevalent at the individual level than at the group level, indicating that perceptions of tool and process usability may be more closely linked to individual-level process quality factors, such as gaining insight (‘my [insight into the problem] has increased’) and trusting outcomes (‘I [trust that the outcome] is good’), than to communication at the group level.

Furthermore, the findings underscore the claim by Pelzer et al. (2015) that a delicate balance must be struck between PSS use and group discussion. This means that interaction with the PSS, whether to learn the technology or to set up and explore the data, should not dominate shared learning and communication about the problem at hand. Structuring the conditions of PSS use by limiting indicator options and steering both the process and the interaction with the tool is important. Doing so seemed to allow participants to focus on communicating and jointly learning about their ideas rather than on learning to use the tool or managing group work.

The confirmation of this hypothesis could be interpreted as a sign that the emphasis in the literature on improving PSS usability to overcome implementation barriers was justified (e.g. Vonk et al. 2005). However, while higher usability correlates with higher process satisfaction, this relationship should not be interpreted too linearly. One reason for the relatively high usability scores is arguably that the instrument and the process were carefully tailored to each other. The PSS is not an ‘external’ tool that is brought in, but part of the planning process and the tasks therein. While this synthesis is positive, it also makes causality more complex: assessments of usability become, in a sense, similar to assessments of process quality. Future research could further unpack this blurring of lines as instrumental interpretations of PSS give way to the view that PSS belong to an integrated support process.

Conclusions and Reflections

The findings do not provide conclusive evidence that different conditions of PSS use have significant effects on strategy making. This study produced few significant results, perhaps because of the small sample size for the analyses conducted at the group level and because the students’ overwhelmingly positive responses showed little variation in the quality of process and usability scores. The less positive scores the external rater gave for the quality of the ideas indicate that running a similar experiment with planning experts might yield more variation in the results; however, it would be difficult to recruit enough experts for a statistically meaningful analysis. A higher N in the current study would have allowed a more sophisticated quantitative analysis, such as a regression model, which could shed more light on the relative weight of the different variables.

Moreover, there are evident problems with the representativeness of these types of experiments with planning students (te Brömmelstroet 2015). Compared with planning experts, these students lack field-specific knowledge and experience. This likely explains the rather poor scores the external rater gave for ideational quality across all of the solutions the students produced. However, we considered their lack of experience, and thus their lack of bias or (positive/negative) feelings about planning practice, to further support the validity of the findings. Working with these ‘blank slates’ in a controlled setting allows us to look at relative differences in scores instead of absolute scores, making the internal and external validity of the findings less problematic. Additionally, we were able to carry out our analysis under the assumption that the exercise was properly designed and executed. The overwhelmingly positive responses of the students to the process quality and usability statements indicate that they understood the tasks at hand, that sufficient time was provided to complete the tasks and that they had sufficient support in using the visualization hardware. Therefore, we assume that the exercise setup itself, which was not part of the study, affected results either minimally or consistently across the conditions of use.

The PSS under scrutiny in this paper, Urban Strategy, is a sophisticated and innovative instrument that allows real-time impact analysis of proposed changes in the urban environment (i.e. answering What if? questions). Since our chief interest lies in PSS performance, we have decided not to discuss the technical capabilities of Urban Strategy in detail, but to focus on participant experience instead. It would, however, also be valuable if a more technical paper on Urban Strategy were to appear in the literature, since such papers about PSS like LEAM, What if? and SLEUTH have inspired developers and researchers to develop technology to support planning.

The analysis in this study was primarily concerned with the main effects of two condition-of-use variables on strategy making. Our analysis revealed only one interaction between the conditions. This lack of interaction is partly due to our measuring divergence and convergence at the group level, which resulted in a low N. Future research that involves multiple factors affecting the planning process should, however, be aware of potential interaction effects during analysis. The many strong correlations between quality of process and usability reflect findings from a recent study that looked at correlations between user-friendliness and usefulness (see te Brömmelstroet 2016). Combined, these studies highlight the need to better understand user perceptions of PSS. At the same time, we acknowledge that the correlations we found can be explained in part by the overlap between the frameworks for these terms, which we borrowed from previous studies. The ambiguity of the terms that describe user perceptions of PSS, including usefulness, usability, user-friendliness, performance and added value, seems to be a pitfall of the young debate surrounding socio-technical PSS development.

This study of PSS performance adds to a growing body of studies that address PSS from a socio-technical perspective using rigorous social science methods (e.g. Goodspeed 2013b; Pelzer et al. 2015; Vonk and Ligtenberg 2010; te Brömmelstroet and Schrijnen 2010). This strand focuses on the way in which a PSS is contextualized in a particular socio-technical setting. The contribution of this paper to this emerging strand is that it has (a) defined and categorized the relevant dependent and independent variables, (b) illustrated how an experiment could be set up and analyzed, and (c) provided preliminary insight into how the variables affect each other. Future research should not wait for final evidence before advancing; rather, we need to keep better track of the innovations that are used. Our findings indicate that process facilitation that provides both individualized support for idea generation and collaborative support for evaluating ideas could be beneficial. Such support interventions would, further, take both individual and group learning and communication needs into account when introducing visualization hardware. Monitoring the effects of these interventions on the quality of planning in a more structured way can lead to proofs of concept. This requires PSS academics and developers to become more explicit about what they aim to improve, in which context and how this can be measured.

Although continuously changing technology and context-dependency prevent cookbook solutions (even if hypotheses are confirmed), being explicit and reflective about the relevant dimensions of a PSS application is critical for researchers, developers and practitioners. While we acknowledge the demand for more flexible information models, our findings indicate that the use of such models benefits from less process and content flexibility, achieved through careful facilitation. Facilitation has arguably always played an important role when PSS are applied in group settings, but it has only recently emerged as a dimension that deserves conceptual and empirical attention (e.g. this paper; Pelzer et al. 2015). Moreover, since the PSS field is never static, new relevant aspects will inevitably arise. Such aspects need to be spotted by reflective practitioners and researchers and subsequently scrutinized in rigorous empirical studies.

Notes

3. The only statement that did not score above 4 was considered to have a potentially negative sentiment, meaning a low score implies high perceived quality of process.

4. The only statement that did not score above 4 was considered to have a potentially negative sentiment, meaning a low score implies high perceived usability.

References

Amara, N., Ouimet, M., & Landry, R. (2004). New evidence on instrumental, conceptual, and symbolic utilization of university research in government agencies. Science Communication, 26, 75–106.

Araujo, D., Davids, K., & Passos, P. (2007). Ecological validity, representative design, and correspondence between experimental task constraints and behavioral setting: Comment on Rogers, Kadar, and Costall (2005). Ecological Psychology, 19, 69–78.

Biermann, S. (2011). Planning support systems in a multi-dualistic spatial planning context. Journal of Urban Technology, 18, 5–37.

Brail, R. K. (2008). Planning support systems for cities and regions. Cambridge: Lincoln Institute of Land Policy.

Carsjens, G. J. (2009). Supporting strategic spatial planning: Planning support systems for the spatial planning of metropolitan landscapes. Dissertation. Wageningen: Wageningen University.

de Roo, G., & Rauws, W. S. (2012). Positioning planning in the world of order, chaos and complexity: On perspectives, behaviour and interventions in a non-linear environment. In J. Portugali, H. Meyer, E. Stolk, & E. Tan (Eds.), Complexity theories of cities have come of age: An overview with implications to urban planning and design. Berlin: Springer.

Dean, D. L., Hender, J. M., Rodgers, T. L., & Santanen, E. L. (2006). Identifying quality, novel, and creative ideas: Constructs and scales for idea evaluation. Journal of the Association for Information Systems, 7, 646.

Demetriou, D., Stillwell, J., & See, L. (2012). An integrated planning and decision support system (IPDSS) for land consolidation: Theoretical framework and application of the land-redistribution modules. Environment and Planning B, 39, 609–628.

Dennis, A. R., & Wixom, B. H. (2002). Investigating the moderators of the group support systems use with meta-analysis. Journal of Management Information Systems, 18, 235–257.

Friend, J., & Hickling, A. (2005). Planning under pressure: the strategic choice approach. Oxford: Elsevier Butterworth-Heinemann.

Geertman, S. (2006). Potentials for planning support: A planning-conceptual approach. Environment and Planning. B, Planning & Design, 33, 863–880.

Geertman, S. (2008). Planning support systems: A planner's perspective. In R. K. Brail (Ed.), Planning support systems for cities and regions (pp. 213–230). Cambridge: Lincoln Institute of Land Policy.

Geertman, S., & Stillwell, J. (2003). Planning support systems in practice. Advances in spatial science. Berlin: Springer Verlag.

Geertman, S., & Stillwell, J. (2009). Planning support systems best practices and new methods. Dordrecht: Springer/GeoJournal Library 95.

Geertman, S., Toppen, F., & Stillwell, J. (2013). Planning support systems for sustainable urban development. Berlin: Springer-Verlag.

Goodhue, D. L., & Thompson, R. L. (1995). Task-technology fit and individual performance. MIS Quarterly, 19, 213–236.

Goodspeed, R. (2013a). US metropolitan planning infrastructures: Institutions, models and tools. In Computers in urban planning and urban management (CUPUM) conference, Utrecht.

Goodspeed, R. (2013b). Planning support systems for spatial planning through social learning. Dissertation, Department of Urban Studies and Planning, Massachusetts Institute of Technology.

Goodspeed, R. (2016). Digital knowledge technologies in planning practice: from black boxes to media for collaborative inquiry. Planning Theory and Practice, 17, 577–600.

Gudmundsson, H. (2011). Analysing models as a knowledge technology in transport planning. Transport Reviews, 31, 145–159.

Hajer, M., van ’t Klooster, S., & Grijzen, J. (2010). Strong stories: How the Dutch are reinventing spatial planning. Rotterdam: 010 Publishers.

Harris, B. (1965). New tools for planning. Journal of the American Institute of Planners, 31, 90–95.

Healey, P. (2003). The communicative turn in planning theory and its implications for spatial strategy formation. In S. Campbell & S. S. Fainstein (Eds.), Readings in planning theory (pp. 237–255). Oxford: Blackwell.

Healey, P. (2007). Urban complexity and spatial strategies: Towards a relational planning for our times. London and New York: Routledge.

Hopkins, L. D., Ramanathan, R., & Pallathucheril, V. G. (2004). Interface for a sketch-planning workbench. Computers, Environment and Urban Systems, 28, 653–666.

Innes, J. E. (1998). Information in communicative planning. Journal of the American Planning Association, 64, 52–63.

Innes, J. E., & Booher, D. E. (1999). Consensus building and complex adaptive systems: A framework for evaluating collaborative planning. Journal of the American Planning Association, 65, 412–423.

Janssen, M. A., Goosen, H., & Omtzigt, N. (2006). A simple mediation and negotiation support tool for water management in the Netherlands. Landscape and Urban Planning, 78, 71–84.

Khalifa, M., Kwok, R.-W., & Davison, R. (2002). The effects of process and content facilitation restrictiveness on GSS-mediated collaborative learning. Group Decision and Negotiation, 11, 345–361.

Klosterman, R. E. (1997). Planning support systems: A new perspective on computer-aided planning. Journal of Planning Education and Research, 17, 45–54.

Klosterman, R. E. (2001). Planning support systems: a new perspective on computer-aided planning. In R. K. Brail, & R. E. Klosterman (Eds.), Planning support systems: integrating geographic information systems, models and visualization tools. Redlands: ESRI Press.

Lamm, H., & Trommsdorff, G. (1973). Group versus individual performance on tasks requiring ideational proficiency (brainstorming): A review. European Journal of Social Psychology, 3, 361–388.

Levy, J. S. (2008). Case studies: Types, designs, and logics of inference. Conflict Management and Peace Science, 25, 1–18.

Marsden, R. (2015). A web based information system for planning support in Barnsley. Applied Spatial Analysis and Policy, 8, 131–153.

Meadows, D. H., & Robinson, J. M. (2002). The electronic Oracle: Computer models and social decisions. System Dynamics Review, 18, 271–308.

Mintzberg, H. (1978). Patterns in strategy formation. Management Science, 24, 934–948.

Nielsen, J. (1993). Usability engineering. London: Academic Press.

Pelzer, P. (2017). Usefulness of planning support systems: A conceptual framework and an empirical illustration. Transportation Research Part A: Policy and Practice, 104, 84–95.

Pelzer, P., Geertman, S., van der Heijden, R., & Rouwette, E. (2014). The added value of planning support systems: A practitioner's perspective. Computers, Environment and Urban Systems, 48, 16–27.

Pelzer, P., Goodspeed, R., & te Brömmelstroet, M. (2015). Facilitating PSS workshops: A conceptual framework and findings from interviews with facilitators. In S. Geertman, J. Ferreira, R. Goodspeed, & J. Stillwell (Eds.), Planning Support Systems and Smart Cities (pp. 355–369). Switzerland: Springer International Publishing.

Pelzer, P., Geertman, S., & van der Heijden, R. (2016). A comparison of the perceived added value of PSS applications in group settings. Computers, Environment and Urban Systems, 56, 25–35.

Petch, J., & Reeve, D. E. (1999). GIS, organisations and people. A socio-technical approach. Padstow: Taylor & Francis.

Pettit, C. J., Klosterman, R. E., Delaney, P., Whitehead, A. L., Kujala, H., Bromage, A., & Nino-Ruiz, M. (2015). The online what if? Planning support system: A land suitability application in Western Australia. Applied Spatial Analysis and Policy, 8, 93–112.

Phillips, L. D., & Phillips, M. C. (1993). Facilitated work groups: Theory and practice. The Journal of the Operational Research Society, 44, 533–540.

Rietzschel, E. F., Nijstad, B. A., & Stroebe, W. (2006). Productivity is not enough: A comparison of interactive and nominal brainstorming groups on idea generation and selection. Journal of Experimental Social Psychology, 42, 244–251.

Riggs, W., & Gordon, K. (2015). How is mobile technology changing City planning? Developing a taxonomy for the future. Environment and Planning B, 0, 1–20.

Rouwette, E., Vennix, J. A. M., & Felling, A. J. A. (2009). On evaluating the performance of problem structuring methods: An attempt at formulating a conceptual model. Group Decision and Negotiation, 18, 567–587.

Rydin, Y. (2007). Re-examining the role of knowledge within planning theory. Planning Theory, 6, 52–68.

Salet, W., & Faludi, A. (2000). Three approaches to strategic spatial planning. In W. Salet & A. Faludi (Eds.), The revival of strategic spatial planning (pp. 1–10). Amsterdam: Royal Netherlands Academy of Arts and Sciences.

te Brömmelstroet, M. (2009). Commentary: The relevance of research in planning support systems. Environment and Planning. B, Planning & Design, 36, 4–7.

te Brömmelstroet, M. (2012). Transparency, flexibility, simplicity: From buzzwords to strategies for real PSS improvement. Computers, Environment and Urban Systems, 36, 96–104.

te Brömmelstroet, M. (2013). Performance of planning support systems: What is it, and how do we report on it? Computers, Environment and Urban Systems, 41, 299–308.

te Brömmelstroet, M. (2015). A critical reflection on the experimental method for planning research: Testing the added value of PSS in a controlled environment. Planning Practice and Research, 30, 179–201.

te Brömmelstroet, M. (2016). PSS are more user-friendly, but are they also increasingly useful? Transportation Research Part A: Policy and Practice, 104, 96–107.

te Brömmelstroet, M., & Bertolini, L. (2008). Developing land use and transport PSS: Meaningful information through a dialogue between modelers and planners. Transport Policy, 15, 251–259.

te Brömmelstroet, M., & Schrijnen, P. M. (2010). From planning support systems to mediated planning support: A structured dialogue to overcome the implementation gap. Environment and Planning. B, Planning & Design, 37, 3–20.

van de Riet, O. (2003). Policy analysis in multi-actor policy settings: Navigating between negotiated nonsense and superfluous knowledge. Delft: Delft University of Technology.

van der Bijl-Brouwer, M. (2012). Exploring usability: Design for dynamic and diverse use situations. Enschede: University of Twente.

Vennix, J. A. M. (1992). Model-building for group decision support: Issues and alternatives in knowledge elicitation. European Journal of Operational Research, 59, 28–41.

Vennix, J. A. M., & Gubbels, J. W. (1992). Knowledge elicitation in conceptual model building: A case study in modeling a regional Dutch health care system. European Journal of Operational Research, 59, 85–101.

Vonk, G. A. (2006). Improving planning support: The use of planning support systems for spatial planning. Utrecht: Faculty of Geosciences Utrecht University.

Vonk, G., & Ligtenberg, A. (2010). Socio-technical PSS development to improve functionality and usability—Sketch planning using a Maptable. Landscape and Urban Planning, 94, 166–174.

Vonk, G., Geertman, S., & Schot, P. P. (2005). Bottlenecks blocking widespread usage of planning support systems. Environment and Planning A, 37, 909–924.

Vonk, G., Geertman, S., & Schot, P. P. (2007). New technologies stuck in old hierarchies: The diffusion of geoinformation technologies in Dutch public organizations. Public Administration Review, 67, 745–756.

Weiss, C. H. (1977). Using social research in public policy making. Lexington: Lexington Books.

Acknowledgements

The authors would like to thank Ralph Klerkx and Basten Kolthof from TNO for their help in setting up and facilitating the experiments. We also thank the experts from the Municipality of Utrecht and TNO for rating the students’ plans, and all the students for their participation and feedback. Thanks also to Professors Geert Dewulf and Timo Hartmann, who provided comments on earlier versions of this paper.


Appendices

Appendix 1

| Quality of process statements | N |
|---|---|
| Individual | |
| I have a positive feeling about the session | 132 |
| The session produced useful results | 132 |
| I trust that the outcome is good | 133 |
| The result offers a real solution to the problem | 133 |
| The result of the session is based on correct assumptions about the urban system | 133 |
| My insight into the problem has increased | 133 |
| The cause of the problem is clear to me | 133 |
| For me, the session has led to new insights | 132 |
| I now better understand the suggested solutions from the other participants | 119 |
| The session was successful | 132 |
| Group | |
| The other participants understand my view of the problem | 127 |
| I now understand how the other participants view the problem | 125 |
| During the session we developed a shared professional language | 121 |
| We have achieved a shared vision about possible solutions | 131 |
| There was conflict about the task we were to conduct | 127 |
| Alternative perspectives were taken seriously | 130 |
| I was able to share my ideas and opinion | 130 |
| We used our time efficiently | 130 |
| During the session a platform emerged that supported the sharing of ideas | 132 |
| There was a strong sense of group during the session | 132 |

Appendix 2

| Usability statements | N |
|---|---|
| The instrument was transparent | 132 |
| The communicative value of the output was high | 133 |
| The output was clearly displayed | 133 |
| The process was well organized | 133 |
| Engagement with the tool was well supported | 130 |
| The output was credible/believable | 133 |
| The instrument was comprehensive enough | 133 |
| The instrument helped us to reach consensus | 130 |
| The instrument helped me to imagine which developments could be realized | 133 |
| The focus of the instrument was sufficient | 130 |
| The level of detail of the maps was sufficient | 133 |
| The instrument was easy to understand | 133 |
| Through use of the instrument, sense and nonsense could be distinguished | 130 |
| Through use of the instrument, our creativity was limited | 132 |

Appendix 3

Appendix 4

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Champlin, C., te Brömmelstroet, M. & Pelzer, P. Tables, Tablets and Flexibility: Evaluating Planning Support System Performance under Different Conditions of Use. Appl. Spatial Analysis 12, 467–491 (2019). https://doi.org/10.1007/s12061-018-9251-0
