Abstract

Purpose

Accurately locating and analysing surgical instruments in laparoscopic surgical videos can assist doctors in postoperative quality assessment, which in turn can give patients more scientific and rational solutions for treating surgical complications. We therefore propose an end-to-end algorithm for surgical instrument detection.

Methods

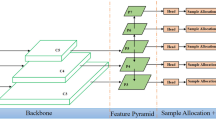

A Dual-Branched Head (DBH) and an Overall Intersection over Union Loss (OIoU Loss) are introduced to address inaccurate surgical instrument detection in terms of both localization and classification. An effective method (DBH-YOLO) for detecting surgical instruments in complex laparoscopic surgery scenarios is proposed. In addition, a new laparoscopic gastric cancer resection surgical instrument location dataset, LGIL, was manually annotated for this study; it provides a better validation platform for surgical instrument detection methods.
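The abstract does not define the OIoU Loss itself. For orientation only, the plain Intersection over Union that IoU-based box regression losses build on can be sketched as below. The corner-format boxes `(x1, y1, x2, y2)` and the function names `iou` and `iou_loss` are assumptions made for this illustration, and the `1 - IoU` loss shown is the basic form, not the OIoU Loss of DBH-YOLO.

```python
def iou(box_a, box_b):
    """Plain IoU of two axis-aligned boxes given as (x1, y1, x2, y2).

    Illustrative helper (hypothetical name); not the paper's OIoU.
    """
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Width and height of the overlap region, clamped at zero when disjoint.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h

    # Union = sum of both areas minus the overlap counted twice.
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def iou_loss(pred, target):
    """Basic IoU loss: 0 for a perfect match, 1 for no overlap."""
    return 1.0 - iou(pred, target)
```

With this basic form, every pair of non-overlapping boxes gets the same loss of 1 regardless of how far apart the boxes are, which is one reason extended IoU losses add further terms.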

Results

The proposed method was evaluated on the m2cai16-tool-locations, LGIL, and Onyeogulu datasets, where it obtained mean Average Precision (mAP) values of 96.8%, 95.6%, and 98.4%, respectively, higher than those of the classical models it was compared with. Compared with the benchmark network, the improved model is more effective at distinguishing between highly similar surgical instrument classes and produces fewer missed detections.
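As a reminder of what the mAP figures summarise, the sketch below computes Average Precision per class from ranked detections and averages it over classes. The abstract does not state the IoU matching threshold or the interpolation scheme used, so the all-point interpolation here is an assumption, as are the function names and the input layout (each detection already labelled as a true or false positive).

```python
def average_precision(scored_hits, num_gt):
    """AP for one class with all-point interpolation (assumed scheme).

    scored_hits: list of (confidence, is_true_positive), one per detection.
    num_gt: number of ground-truth boxes of this class.
    """
    if num_gt == 0:
        return 0.0

    # Rank detections from most to least confident.
    ranked = sorted(scored_hits, key=lambda d: d[0], reverse=True)

    tp = 0
    precisions, recalls = [], []
    for rank, (_, hit) in enumerate(ranked, start=1):
        tp += 1 if hit else 0
        precisions.append(tp / rank)
        recalls.append(tp / num_gt)

    # Replace each precision by the best precision at any higher recall.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])

    # Area under the resulting step-shaped precision-recall curve.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(per_class):
    """per_class maps a class name to (scored_hits, num_gt)."""
    aps = [average_precision(hits, n) for hits, n in per_class.values()]
    return sum(aps) / len(aps) if aps else 0.0
```

A missed instrument lowers recall without adding a detection, so it caps the AP of its class; a confusion between two similar classes adds a false positive to one class and a miss to the other.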

Conclusions

This paper addresses the inaccurate detection of surgical instruments from two perspectives: classification and localization. Experimental results on three representative datasets verify the performance of DBH-YOLO and show that the method generalizes well.

Funding

The Key Industry Innovation Chain of Shaanxi (2022ZDLSF04-05).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

For this type of research, formal consent is not required.

Informed consent

This paper contains patient data from two publicly available datasets and one self-constructed dataset. Informed consent was obtained from patients for non-public records.

About this article

Cite this article

Pan, X., Bi, M., Wang, H. et al. DBH-YOLO: a surgical instrument detection method based on feature separation in laparoscopic surgery. Int J CARS (2024). https://doi.org/10.1007/s11548-024-03115-0

DOI: https://doi.org/10.1007/s11548-024-03115-0