Abstract

A chatbot is artificial intelligence software that converses with a user in natural language. It can help reduce teaching workloads by coaching students or answering their inquiries. This study examines student-chatbot interactions with the aim of improving students’ learning experience and instructional design. We developed a chatbot that supplemented disciplinary writing instruction to enhance peer reviewers’ feedback on draft essays. With 23 participants from a lower-division post-secondary education course, we examined characteristics of student-chatbot interactions. Our analysis revealed students were often overconfident about their learning and comprehension. Drawing on these findings, we propose a new methodology to identify where conversation patterns in educational chatbots can be improved. The methodology includes analyzing logs of interaction patterns to progressively redesign chatbot scripts so that they improve discussions and learning. It offers insights for designing more effective instructional chatbots that engage students and enhance their learning experience through improved peer feedback.

Introduction

There has been much interest recently in chatbots as a medium for education. These software technologies are designed to simulate human conversations, answer questions, support users, and tutor learners (Lin & Chang, 2023; Lin et al., 2023). Studies (Clarizia et al., 2018; Mekni et al., 2020) suggest three benefits when effective chatbots are included in educational contexts. Chatbots can (a) create a one-to-one interactive learning opportunity for each student, (b) enhance learning by tracking and analyzing the student’s accounts of behaviour and performance, and (c) contribute to an authentic learning environment (Lin & Chang, 2023; Reiners et al., 2014). However, only a few studies have charted how the instructional design underlying a chatbot might coordinate with a plan for evaluating student-chatbot interactions relative to that design (e.g., Chang et al., 2023; Fryer & Carpenter, 2006; Kerly et al., 2007; Wang & Petrina, 2013).

Most research about chatbots in learning has evaluated capabilities for managing novel natural language input and students’ overall satisfaction with chatbots. Methods used in these studies range widely. Managing language input has been evaluated using keyword-matching techniques (Abbasi & Kazi, 2014; Ahmad et al., 2020; Clarizia et al., 2018; Sarosa et al., 2020; Weizenbaum, 1966), machine learning (Mekni et al., 2020; Ranavare & Kamath, 2020; Serban et al., 2017; Sinha et al., 2020), and measures of the efficiency of conversational exchange (Lin et al., 2023; Pham et al., 2018). Users’ satisfaction with a chatbot has been assessed mainly in terms of how well a chatbot helped in solving problems, ease of use (Clarizia et al., 2018; Følstad & Brandtzaeg, 2020; Goli et al., 2023), contributions to a conversation/task and engagement (Goda et al., 2014; Schuetzler et al., 2020; Song et al., 2017; Wang & Petrina, 2013), and how students’ interests developed in a course (Fryer et al., 2017; Sarosa et al., 2022).

While quite broad, these methods do not develop clear guidance about how to tune a chatbot’s features and functions to an instructional design. How students engage with a chatbot is underexplored (Abbasi & Kazi, 2014; Clarizia et al., 2018; Fryer et al., 2017; Goda et al., 2014; Kerly et al., 2007). In this context, we collected data describing undergraduates’ interactions with a purpose-designed chatbot, DD. DD was engineered to guide writers in generating effective peer review feedback about composing thesis statements. A successful chatbot supporting this task could supplement writing instruction, particularly in large classes where instructors may not be available when students seek help (Smutny & Schreiberova, 2020; Song et al., 2017). Our goal was to trial a new methodology for examining how well design goals for DD’s conversational moves were realized when students engaged with the chatbot. In other words, how well did exchanges between students and DD match reference schemas for those conversations?

The study was situated in a first-year educational psychology course in higher education. One of the graded components of the course required students to develop an essay outline before drafting the final essay. In prior literature, peer review activities have been found to help students improve their writing and create better reviews. Researchers interpret these effects as arising because reviewing another student’s drafts stimulates the reviewer’s critical thinking and evaluation of their own drafts (e.g., Cho & Cho, 2011; Cho & Schunn, 2007; Woodhouse & Wood, 2020). However, peer review is a complex task, and students often have difficulties generating useful feedback for peer authors (Macdonald, 2001; Patchan et al., 2016). Some research has investigated ways to support students in developing better reviews, including training (Min, 2005), peer review guidance (Cho & Schunn, 2007), and an online support system (Kulkarni et al., 2016). If peer review can build students’ writing skills, this raises questions about how to tailor chatbots to instruction, in our case, helping students to become better reviewers of peers’ draft outlines.

To fill gaps in prior research, our design goal was that DD would guide peer reviewers to give more fruitful written feedback, specifically about the author’s development of well-formed thesis statements. Chang’s (2021) study indicates that qualities of thesis statements are good predictors of the quality of an essay’s introduction and its overall quality. DD focused on simplifying instructions about creating peer reviews and enhancing student reviewers’ understanding of how to give effective peer feedback. The study analyzes student-chatbot interactions as a vehicle for proposing a new methodology for examining patterns of student-chatbot interaction. Eight possible interaction patterns were identified and analyzed qualitatively. The new methodology offers insights about exchanges between students and the chatbot that can be useful in coordinating the instructional design for a task with the chatbot’s “role” in that instructional design.

Theoretical background & literature review

Cognitive apprenticeship model for developing chatbot

The Cognitive Apprenticeship Model (CAM) has been utilized as a theoretical basis for both planning and designing learning environments (Woolley & Jarvis, 2007) and analyzing teaching and learning processes (e.g., Poitras et al., 2024; Saucier et al., 2012; Stalmeijer et al., 2009). CAM is based on theories of situated learning and cognition. According to these theories, knowledge is socially constructed (Clancey, 2008; Wilson et al., 1993). Thus, knowledge acquired is inherent within the activity, the environment, and the culture (Brown et al., 1989).

Collins et al. (1988) described a set of CAM approaches to teaching with an emphasis on two critical dimensions. First, CAM can consist of pedagogical strategies instructors use to guide the process of completing complex tasks. Secondly, CAM may consist of students engaging in deeper cognitive and metacognitive processes and skills required for developing expertise. Collins et al. (1988) also argued that school-based education should expand conventional apprenticeship models to assist cognitive learning because of limited resources for developing students’ conceptual and problem-solving abilities and transferring skills learned in school into an authentic context. This is especially true in writing instruction. A conventional way for students to develop writing skills is to maximize their exposure to a variety of readings and genres. However, utilizing CAM in developing writing skills allows students to develop strategies for solving the problems they encounter in the writing process. Therefore, implementing CAM as a framework in instructional and technology-induced learning contexts might foster problem-based and experiential learning opportunities for students.

We developed the chatbot based on the four components of CAM: content, methods, sequence, and sociology (Collins et al., 1991). First, in terms of content, the chatbot covered what students needed to know before they provided peer feedback: the four types of feedback (positive, constructive, negative and suggestions) and the rhetorical locations where they should place the feedback (i.e., thesis statement, argument, and counterarguments). Second, in terms of methods, after interacting with the chatbot, students needed to apply what they had learned to identify the corresponding feedback sentences on a fabricated feedback sheet and revise them. Third, the sequence set up in the chatbot is a major aspect of designing a writing lesson around it. In the proposed sequence, students first master the feedback knowledge (acquisition of content knowledge and strategic knowledge), then check their understanding of the four types of feedback through comprehension checking questions (CCQs), and finally apply what they have learned in a task (i.e., revising the fabricated feedback). Lastly, the learning environment (sociology) could involve three types of interaction: (a) teacher-student interaction, in which teachers give instructions to students about the chatbot and clarify the task requirements, (b) student-to-student interaction, in which students review their peers’ feedback, and (c) student-to-chatbot interaction, in which the chatbot works with students on a virtual platform to develop their knowledge of the types of peer feedback. In this study, we focus on the last of these, student-to-chatbot interaction. Studies (e.g., Chang et al., 2023; Lin & Chang, 2023; Reiners et al., 2014) suggested a chatbot acts as a mentor, oversees learners achieving learning objectives, and initiates a discussion about how to approach an objective rather than dictating how to proceed.
Thus, we hope the chatbot can act as a virtual teacher who guides students through the process of providing peer feedback and implementing the feedback on a pre-defined feedback sheet of an essay outline. The chatbot created for this study, DD, offers options to guide students to develop peer feedback on draft essay outlines. Among these options are concepts about how to give feedback from which a peer can learn. Therefore, drawing on CAM, we investigate student interaction patterns using a new methodology that provides indicators about how to refine and improve the chatbot’s navigation and learning effects.

Peer review & self-assessment

Self-assessment and peer review can help students become aware of their performance. Self-assessment asks students to evaluate their own performance against predetermined internal or external criteria, while peer review compares another person’s performance to a predetermined standard (Nicol, 2021). Existing approaches to support peer review include rubric guidance (e.g., Cho & Schunn, 2007; Patchan et al., 2016; Strijbos et al., 2010) and training by giving a model criterion before an actual review task (e.g., Min, 2005; Sluijsmans et al., 2002; Wu & Schunn, 2023).

Peer review has often been adopted as part of writing instruction. Instructors use peer review as a way for students to learn from each other (Iglesias Perez et al., 2020; Reinholz, 2016; Wu & Schunn, 2023). Additionally, studies indicate that exposing students to peers’ work through peer review might also improve self-regulated learning, as students can learn from their peers and use peers’ work as a model to improve their own work (Bellhäuser et al., 2022; Panadero, 2016). This self-regulatory process is evident when self and peer evaluation are integrated, allowing students to become constructive feedback givers and users. Several studies investigated the benefits of either peer review (Iglesias Perez et al., 2020; Wu & Schunn, 2023) or self-assessment (Sadler & Good, 2006; Zhang & Zhang, 2022). Panadero and Lipnevich (2022) and Dochy et al. (1999) argued that the two instructional approaches could be complementary and that using both has the potential to develop self-regulation. Accordingly, we integrate both peer review strategies (i.e., rubrics and training) and self-assessment into a chatbot to assist students in giving effective peer feedback and potentially increase engagement.

Type of chatbot and input processing techniques

A chatbot is software that engages in conversation with humans. One class of chatbots is non-task-oriented (e.g., chitchat or casual dialogue). Non-task-oriented chatbots can be traced back to ELIZA, a chatbot developed by MIT which used natural language to coax a personal conversation with a human (Weizenbaum, 1966). ELIZA used keyword-matching to search a backend template for replies to human input. If a response could not be found in the backend template, ELIZA used clever natural language computational methods to reorganize keywords in a user’s input and prompt the user to continue the conversation. ELIZA was designed to engage a user in a personal conversation rather than support the user in solving a problem (Serban et al., 2017; Weizenbaum, 1966). Recent advances continue to build on this foundation, with modern non-task-oriented chatbots incorporating machine learning algorithms, large language models, and embedding approaches to enhance conversation quality and personalization (Ait Baha et al., 2023; Lin & Chang, 2023; Lin et al., 2024).

The second class of chatbots is task-oriented. A task-oriented chatbot is designed to instruct or guide a user with prompts or nudges about how to complete an activity (Chang et al., 2023; Reiners et al., 2014). This type of chatbot works best in simple scenarios, where it guides a user to stay “on track.” Tasks explored using task-oriented chatbots include developing peer review skills and providing writing assistance about a particular topic (Reiners et al., 2014).

Users’ interaction pathways can also be classified depending on backend programming techniques and task orientation. Programming a chatbot usually leverages one of two text-processing techniques: keyword matching (Clarizia et al., 2018; Weizenbaum, 1966) or natural language modelling (Kerly et al., 2007; Serban et al., 2017). These methods set the stage for the chatbot to understand input and deliver appropriate conversational invitations and replies. Keyword matching associates pre-defined phrases/words with responses. The most common applications of this technique are FAQ-based task-oriented chatbots (Abbasi & Kazi, 2014; Ahmad et al., 2020; Sarosa et al., 2020), in which multiple pre-defined pathways have been programmed for the chatbot’s interactions with users. A non-task-oriented, keyword-matching chatbot only provides casual conversational exchange in which users are provided buttons to select (Jain et al., 2018; Pham et al., 2018). Natural language modelling techniques incorporate unsupervised machine learning techniques, creating a large set of discipline-specific pathways (Kerly et al., 2007; Stöhr, 2024). Input is pre-modelled, and the chatbot “learns” from the corpus to search for optimal responses based on the users’ input. A notable application is Jill Watson, which uses advanced machine learning to categorize information and provide accurate answers (Goel & Polepeddi, 2019; Kakar et al., 2024; Wang et al., 2020).
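To make the keyword-matching idea concrete, the following is a minimal sketch in Python. It is not the implementation of any chatbot cited above; the keyword table, the reply texts, and the function name `reply` are hypothetical and chosen only for illustration.

```python
import re

# Hypothetical keyword-to-reply table; the entries are illustrative and are
# not taken from any chatbot described in the text.
RESPONSES = {
    "thesis": "A thesis statement states the main claim of an essay.",
    "feedback": "Effective feedback explains why and how to improve.",
}
# Reply used when no known keyword appears in the input.
FALLBACK = "Sorry, I did not understand. Could you rephrase?"


def reply(user_input: str) -> str:
    """Return the reply for the first known keyword found in the input."""
    words = re.findall(r"[a-z']+", user_input.lower())
    for word in words:
        if word in RESPONSES:
            return RESPONSES[word]
    return FALLBACK
```

For example, `reply("What is a THESIS?")` returns the reply stored under `"thesis"`, while input containing no known keyword returns the fallback reply. A natural language modelling approach would replace the table lookup with a trained model.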

Design features and effectiveness of chatbots used in classrooms

Prior research investigated how chatbots help learners solve problems (Følstad & Brandtzaeg, 2020; Kerly et al., 2007), chatbots’ contributions to a task (Goda et al., 2014; Song et al., 2017; Wang & Petrina, 2013), and how students’ perceptions of chatbots affect learning (Fryer & Carpenter, 2006; Fryer et al., 2017; Pérez et al., 2020). Lin and Chang (2020) observed student writing improved after students worked with the chatbot. Goda et al. (2014) found English language learners produced more conversation on a discussion board after engaging with the Eliza chatbot than after only searching for information. These results suggest that interacting with a chatbot can increase student engagement. Not all findings are positive, however. A few studies found some students negatively evaluated the chatbot because of irrelevant conversation pathways (e.g., Goda et al., 2014; Wang & Petrina, 2013).

Kerly et al. (2007) identified design features vital for a chatbot interacting with students. They proposed a chatbot should: (1) connect to a database to store and update data, (2) be able to handle user requests alongside small talk to fashion a productive conversation, (3) guide the user to stay on topic, (4) improve its understanding of natural language based on analyses of prior conversations, and (5) provide easy access to users, e.g., through web integration. Recent studies (e.g., Chang et al., 2023; Lin & Chang, 2023; Pérez et al., 2020) emphasize additional features such as personalized feedback, adaptive learning pathways, and multi-platform integration to enhance user engagement and learning outcomes.

Student perception of chatbots

Fryer and Carpenter (2006) explored the benefits of using two chatbots to assist undergraduates in learning a foreign language. Students felt more motivated to learn a language when interacting with chatbots than with a human partner. Fryer et al. (2017) contrasted Japanese students’ task interest when working with a chatbot or a human partner in first- and second-year compulsory English as a Foreign Language (EFL) classes. Interest in interacting with the chatbot partner while practicing English decreased, while it remained high for a human partner. Practicing speaking with the chatbot might not have been an authentic experience for students, so they viewed it as a poor learning experience. This suggests properties of a task can be important when integrating a chatbot into the student learning experience.

Reiners et al. (2014) conducted semi-structured interviews with six educators to gather opinions about using chatbots. These educators identified several reasons they were reluctant to use chatbots in their educational settings. First, building a chatbot is complex and error-prone. Secondly, tools for developing a chatbot lack flexibility and require expertise beyond that of an “average” educator. Thirdly, the Natural Language Understanding (NLU) on which chatbots rely does not meet users’ standards for natural language interactions. Lastly, there are limited pedagogical models to guide integrating a chatbot within a broader e-learning platform. These obstacles must be overcome so educators can easily incorporate a chatbot into pedagogy. Pérez et al. (2020) highlighted that while students generally have a positive perception of chatbots, the effectiveness and acceptance of these tools significantly depend on their design and integration into the learning process, suggesting that advancements in chatbot technology continue to evolve and improve student engagement and learning outcomes.

Overall, the literature suggests important guidelines for designing an effective educational chatbot. The chatbot should (a) converse meaningfully, (b) guide students to stay on topic, and (c) use dialogue data to continuously upgrade the quality of language exchanges.

Absent from needs identified in prior research is a careful study of interaction patterns when learners and the chatbot interact. Analyzing student-chatbot interaction patterns can provide process insights into how the structure of exchanges meshes with an instructional design to support learning and meet educational objectives. A typical question arising from research about chatbots concerns their effectiveness and its link to achievement. As learners engage in a conversation with a chatbot at an instructor’s request, the decisions they make and the paths they take during the conversation can offer insight into their psychological states over time. Capturing these interaction patterns can provide a deeper understanding of the proximal psychological processes that drive learning (Winne & Nesbit, 2010). The case we explore is a chatbot’s role in guiding peer feedback and the implications of using a chatbot in writing instruction. This study aims to introduce a new methodology for researchers, chatbot developers and instructional designers that can reveal important properties of student-chatbot interactions regarding students’ learning trajectories and processes.

Design of the chatbot DD

Backend design

The chatbot DD was developed using Rasa (version: rasa_core 0.11.12, rasa_core_sdk: 0.11.5, rasa_nlu 0.13.7), an open-source conversational artificial intelligence (AI) framework (Bocklisch et al., 2017). Rasa was designed to meet the needs of non-specialist software developers (Bocklisch et al., 2017), providing dialogue management and machine learning to “remember” contextual interactions with users, thus continuously adding to initial training data. Rasa also offers options to deploy on a cloud or a local server. The design of the chatbot can be customized, for example, by integrating custom code into the Rasa framework.

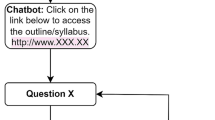

There are three components in developing a Rasa-based chatbot (Fig. 1). (1) Rasa core consists of Stories (customizable dialogues) and a Domain (the universe in which the bot lives). (2) Rasa core SDK (software development kit) includes customizable actions, such as data storage or database access. (3) Rasa NLU (natural language understanding) analyzes training data so the chatbot can understand utterances.

A web-based server, a chatroom, bridges Rasa and users’ conversation with DD (left of Fig. 2). There are two ways to interact in this chatroom: typing text and clicking “SUBMIT”, or clicking one of the options shown on the screen. Students in this study mainly interacted with DD by clicking buttons (Ahmad et al., 2020; Clarizia et al., 2018; Jain et al., 2018; Luger & Sellen, 2016; Pham et al., 2018).

Research (Clarizia et al., 2018; Reiners et al., 2014) emphasizes the integration of pedagogical perspectives in designing a productive, interactive and successful conversational flow. Studies (Chang et al., 2023; Lin & Chang, 2023; Wang & Petrina, 2013) suggest a chatbot should be designed for specific purposes. DD focuses on guiding peers to provide more effective feedback about classmates’ essays via human-like natural language (e.g., praise and casual language). We incorporated several pictures of a dog to promote positive engagement (Jain et al., 2018; Luger & Sellen, 2016), and experienced teaching staff modified DD’s natural language (scripts) to make it more enjoyable.

One key issue in chatbot design is the fallback action triggered when the chatbot cannot process a user’s input or when a chatbot action fails to be triggered because of a user error, such as irrelevant input (Jain et al., 2018). The left of Fig. 2 shows how DD guides a user to recover from a conversation error. All conversations with the chatbot were automatically stored on Rasa’s backend server.

DD’s design was participatory by including consultations with the course instructor, a former teaching assistant, and members of a research lab. All provided feedback to refine the chatbot and tested it before release.

Instructional materials in DD

We operationalize four types of feedback based on Gielen et al.’s (2010) recommendations: constructive suggestions, positive comments, negative comments, and questions for improvement, as shown on the right of Fig. 2. Combining these four types of feedback can improve writing, enhance critical thinking, and produce a better essay (e.g., Cho & Cho, 2011; Kulkarni et al., 2016; Sluijsmans et al., 2002). Effective peer feedback that offers constructive suggestions and negative feedback should identify specifics about the problem needing attention and provide an explicit correction or an explanation (Cho & Cho, 2011; Gielen et al., 2010; Nelson & Schunn, 2009; Topping et al., 2000). Constructive suggestions about writing differ from negative comments by giving the author information about why and how an idea unit or rhetorical structure could be improved. Positive comments should highlight why or how an idea is high quality instead of simply praising. Questions for improvement stimulate appropriate student reflection (Gielen et al., 2010; Lan & Lin, 2011; Prins et al., 2006). Together, these four types of feedback identify aspects of quality and informativeness in an essay and help a writer profit from reviews to improve a draft essay. Each type of feedback was included in DD.

DD’s interaction pattern for providing types of feedback followed a sequential plan. After a student was given the definition of a type of feedback, DD tested the student’s understanding by asking one or two comprehension checking questions (CCQs) about it. To acknowledge students’ work and boost motivation in this learning activity, DD praised students’ correct answers to CCQs (Song et al., 2017). In real classroom settings, studies showed CCQs create opportunities for teachers to check whether students understand content and for students to recall content (Chen et al., 2009; King, 1994; Redfield & Rousseau, 1981).

After students were introduced to and answered CCQs about each of the four types of feedback, they were invited to give feedback on a peer’s draft outline. As shown in Fig. 3, with guidance from DD, students could choose to provide any type of feedback on a thesis statement and accompanying arguments/counterarguments. Student interactions with DD (e.g., button clicks and texts submitted) were stored on the backend Rasa server.

Methods

Participants

Participants who agreed to take part in our study (N = 23) were recruited from one of 10 tutorial sections in a first-year educational psychology course at a university in Western Canada. The course had slightly over 200 undergraduate students enrolled. Participants were in their first (23%), second (26%), third (26%), or fourth (17%) year of study, or in their fifth year or beyond (4%). Psychology and Criminology were the most popular majors, each representing 22% of the group. Additionally, 30% of the participants had interdisciplinary academic interests. The group was also linguistically diverse, with 22% being English as an Additional Language (EAL) speakers.

Instruments

This study used three main instruments: pre-made sample outlines, a review sheet with fabricated feedback, and the chatbot DD. Appendix A shows an example of the pre-made sample outlines and a review sheet with fabricated feedback. Unlike most studies of chatbots that investigate effects on essay writing, our goal was to develop a new method for analyzing how students engage with a chatbot.

We fabricated five outlines based on analyses of a random selection of 190 actual outlines students created in the preceding year of the educational psychology course (Fall 2018). For each fabricated outline, we created a corresponding peer review sheet illustrating substandard feedback for each of the four types. Participants in our study were provided one randomly chosen outline and its fabricated feedback. Their task was to improve that feedback with assistance from DD, as illustrated for one conversation in Figs. 2, 3, 5, and 6.

Procedure

Figure 4 illustrates the study procedure. In a regularly scheduled 50-min tutorial period, participants gathered in a computer lab to work on improving “peer” feedback about a “peer’s” outline while interacting with DD. Each participant was randomly assigned one of the five review sheets containing fabricated feedback and introduced to the purposes of the session and the chatbot. Then, students interacted with DD in two segments: (a) learning how to give effective peer feedback, and (b) reviewing the fabricated peer feedback to improve it.

The chatbot guided participants through self-evaluation and the four types of peer feedback identified in Fig. 2. First, the chatbot asked the participant whether they knew how to provide positive comments. If the participant judged they were familiar with a type of feedback, the chatbot tested their understanding by posing two CCQs. If a participant indicated they did not know how to provide feedback of that type, the chatbot offered instruction followed by two CCQs. Whenever the participant answered both CCQs correctly, the chatbot offered praise and progressed to the next type of feedback. However, if the participant answered one or both CCQs incorrectly, the chatbot provided the correct answer with an instructional explanation about that type of feedback and then restarted the cycle for the next type of feedback. This protocol is shown in Fig. 5.
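The protocol for a single type of feedback can be sketched as a small function. This is only an illustration of the branching described above, not DD's Rasa stories; the function name and the move labels are hypothetical, and the sketch assumes the chatbot responds to the CCQs only after all of them have been answered.

```python
from typing import List


def run_feedback_cycle(knows_type: bool, ccq_correct: List[bool]) -> List[str]:
    """Return the chatbot moves for one feedback type, in order.

    knows_type  -- the participant's answer to "Do you know how to give ...?"
    ccq_correct -- whether each comprehension checking question was answered
                   correctly
    """
    moves = ["ask_self_judgement"]
    if not knows_type:
        # Instruction is offered only when the participant answers "no".
        moves.append("give_instruction")
    moves.extend(f"pose_ccq_{i}" for i in range(1, len(ccq_correct) + 1))
    if all(ccq_correct):
        moves.append("praise")
    else:
        # At least one wrong answer: give the answer and explain again.
        moves.append("give_correct_answer_and_explanation")
    moves.append("next_feedback_type")
    return moves
```

Calling `run_feedback_cycle(False, [True, False])`, for instance, yields a sequence that includes instruction, two CCQs, and the corrective explanation before moving on.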

Upon completing the first session, the participant decided whether they were ready to review the outline with the chatbot’s guidance. If they accepted the invitation, the chatbot provided in-depth guidance about reviewing the thesis statement on the sample outline. As shown on the left of Fig. 6, a student indicated they wanted to review the thesis statement. The chatbot then provided prompts. Meanwhile, the student reviewed constructive suggestions on the thesis statement of the sample outline and considered how to improve the fabricated sample feedback on the review sheet (right of Fig. 6). Revisions were made using a word processor. After participants finished polishing the feedback sheet, they ended the conversation with the chatbot, automatically causing all conversational exchanges to be saved. If the participant rejected guidance from the chatbot at any invitation, the chatbot program terminated after all entries in the preceding conversation were automatically saved. The participant then uploaded their improved peer review sheet to the course learning management system.

Data analysis

Data collected in this study included (1) participants’ recommendations for improving fabricated feedback on the review sheet and (2) a transcript of each participant’s chat history with DD. One effective approach to analyzing students’ interaction patterns is content analysis. This method uncovers and explores student data to generate inferences about student interaction patterns (Chen et al., 2011; Patton, 1990; Weber, 1990; Yang, 2010).

The chat history was stored in JSON format. An online tool (https://jsonformatter.org/) was used to format the chat history; each participant’s choices were converted to an Excel spreadsheet. As described in the Procedure section, participants could take multiple pathways depending on their judgements and their needs for guidance. When DD asked a participant, “Do you know how to give [a type of feedback]?”, the participant had two choices: yes or no. Self-judgements were coded 0 when participants indicated they did not know how to give the feedback and 1 when they judged they did know how to give it.
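The 0/1 coding step could also be scripted rather than done by hand. The sketch below is an illustration only: the JSON layout (a list of turns with `feedback_type` and `answer` fields), the sample data, and both function names are assumptions, since the structure of DD's stored chat history is not described here. It writes CSV, which a spreadsheet program can open.

```python
import csv
import io
import json

# Hypothetical chat-log layout: a list of turns, each recording the feedback
# type asked about and the button the participant clicked ("yes" or "no").
sample_log = json.dumps([
    {"feedback_type": "positive comments", "answer": "yes"},
    {"feedback_type": "negative comments", "answer": "no"},
])


def code_self_judgements(raw_json: str) -> dict:
    """Map each feedback type to 1 (judged they knew) or 0 (did not)."""
    codes = {}
    for turn in json.loads(raw_json):
        answered_yes = turn["answer"].strip().lower() == "yes"
        codes[turn["feedback_type"]] = 1 if answered_yes else 0
    return codes


def to_csv(participant_id: str, codes: dict) -> str:
    """Write one participant's codes as a header row plus one data row."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["participant", *codes.keys()])
    writer.writerow([participant_id, *codes.values()])
    return buffer.getvalue()
```

Scripting this step keeps the coding rule explicit and repeatable across participants, at the cost of needing the log layout to be known in advance.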

Then, the second author, an experienced teaching assistant for this course, and the first author met to categorize participants’ feedback. Both were well-acquainted with the course content and its objectives. This coding process produced a 2 × 2 matrix for each participant as shown in Table 1. If the participant rejected guidance from DD and correctly revised fabricated feedback, it was coded as CR (correct response, rejected guidance). If the participant accepted guidance from the chatbot and correctly revised fabricated feedback, it was coded as CA (correct response, accepted guidance). Coders discussed and resolved the few discrepancies between their codes to produce a final matrix for each participant. After coding, we operationally defined and investigated relationships between chatbot interactions and participants’ skills in revising fabricated feedback. Correctness of feedback reflected whether a student participant correctly (1) identified the feedback sentences on the fabricated feedback sheet and (2) proposed the corresponding improvement on the feedback. No in-depth examination of improved feedback is needed at this stage as it will be another level of analysis (i.e., content analysis). If a student identified the target feedback sentence on the fabricated feedback sheet and they successfully implemented changes on the target feedback sentence, we call this session effective, as it meets two task-related requirements—identification and revision.
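The coding rule can be summarized in a short sketch. The labels CR and CA come from the description above; the labels "IR" and "IA" for the two incorrect-response cells are hypothetical placeholders, as are the function names. The sketch assumes a response counts as correct only when both task requirements (identification and revision) are met.

```python
from collections import Counter
from typing import Iterable, Tuple


def is_correct(identified_sentence: bool, revised_sentence: bool) -> bool:
    """A revision counts as correct only when both requirements are met."""
    return identified_sentence and revised_sentence


def code_session(correct: bool, accepted_guidance: bool) -> str:
    """Return a two-letter cell label for the 2 x 2 matrix.

    "CR" and "CA" follow the text; "IR" and "IA" (incorrect response,
    rejected or accepted guidance) are hypothetical labels.
    """
    return ("C" if correct else "I") + ("A" if accepted_guidance else "R")


def tally(sessions: Iterable[Tuple[bool, bool, bool]]) -> Counter:
    """Count cells; each session is (identified, revised, accepted_guidance)."""
    return Counter(
        code_session(is_correct(identified, revised), accepted)
        for identified, revised, accepted in sessions
    )
```

For example, `code_session(True, False)` returns `"CR"`, matching a participant who rejected guidance and still revised the fabricated feedback correctly.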

Results

The first session: participant self-judgement and CCQs on learning four types of feedback

The results in Table 2 show that two paths, D and E, provide valuable insights into participants’ perceived ability to provide feedback. Path D points towards an encouraging trend of chatbot effectiveness in enhancing participants’ confidence and knowledge about giving feedback. Conversely, path E reveals a disturbing inclination towards overconfidence among participants, highlighting the need for caution when interpreting results. Furthermore, paths B and G might suggest that the chatbot conversation could effectively improve participants’ knowledge about providing feedback. However, path A indicates that the chatbot was less effective for participants who required additional instruction, while path H suggests that participants with prior knowledge did not depend on the chatbot. Finally, paths C and F offer limited insights as they could be attributed to lucky guesses on the comprehension questions and contribute little to the evaluation of chatbot effectiveness.

Posing questions for improvement generated four possible paths, I to L, as shown in Table 3. Two paths in particular, J and K, offer valuable insights regarding participants’ self-judged ability to pose questions for improvement. Path J suggests that the chatbot instruction effectively guided participants with insufficient prior knowledge to pose questions for improvement. Conversely, path K again highlights a tendency toward overconfidence among participants with sufficient prior knowledge. Path L indicates the participant already knew how to provide helpful questions to improve an essay and may not have needed help from the chatbot. Overall, the data presented in Tables 2 and 3 emphasize the importance of considering participants’ self-judged ability to provide feedback and how it may impact their interactions with educational chatbots.

Tables 4 and 5 show counts of participants whose data match the possible paths just described, along with possible interpretations. In Table 4, data in path D suggest that 4, 5, and 7 participants benefited from the chatbot instruction for constructive suggestions, positive comments, and negative comments, respectively. One participant each for constructive suggestions and negative comments showed overconfidence when learning with the chatbot. When learning how to pose questions for improvement (Table 5), more than half of the participants (n = 16) followed path J, exhibiting improved performance after receiving chatbot instruction.

The second session: participant revision choices with/without guidance from the chatbot

Students choosing to revise thesis statements

Table 6 describes profiles of participants’ interactions with the chatbot in terms of their success revising the fabricated feedback. More than 60% of the participants rejected guidance from the chatbot when revising four types of feedback on a thesis statement. Many participants rejected guidance from the chatbot and proposed incorrect revisions (IR). In this category, 18 of 23 participants judged they knew how to pose questions for improvement but did not succeed in realizing that kind of feedback. Also, 11, 5, and 13 participants, respectively, incorrectly revised constructive suggestions, positive comments, and negative comments on a thesis statement without guidance from the chatbot. Not many participants judged they needed guidance, sought it and succeeded in revising the fabricated feedback on a thesis statement. For instance, only 2 out of 23 participants chatted with the chatbot about revising negative comments and posing questions for improvement.

Students choosing to revise arguments and counterarguments

Table 7 summarizes the numbers of participants revising the four types of feedback on arguments and counterarguments. As with feedback on a thesis statement, more than 50% of participants rejected the chatbot’s guidance when revising feedback on arguments and counterarguments. This result is consistent with the previous one; for instance, 11 participants misjudged their learning from the prior session and incorrectly revised feedback on arguments and counterarguments without the chatbot’s guidance. The category CA reveals that a few participants chose to accept guidance from the chatbot and thus correctly revised the feedback on arguments and counterarguments.

Examining participants’ review ability and interaction pathways

Sections “The first session: participant self-judgement and CCQs on learning four types of feedback” and “The second session: participant revision choices with/without guidance from the chatbot” show effects of the first and second sessions of the chatbot, respectively. Figure 7 combines findings from both sections about participants’ interaction pathways when interacting with the chatbot about four types of feedback revisions. All other pathways are presented in Appendix B. The blue and green squares represent the patterns of guidance in the second chatbot session for revisions on thesis statement and on arguments and counterarguments, respectively, from Sect. “The second session: participant revision choices with/without guidance from the chatbot”. The basic question investigated is this: If chatbot instruction was effective and thus participants felt confident (paths D and J), or participants were overconfident (path E) in the first session, did participants still accept the guidance from the chatbot? We highlight the interesting findings below. The numbers describe the events participants travelled through.

Constructive suggestions

In the first session, 4 participants followed path D. For these participants, the second session included 8 total events (4 on thesis statement and 4 on arguments and counterarguments). Across these events, the rate of rejecting chatbot guidance was 87%, and the rate of incorrect revisions was high at 75%.

The participant in path E did not accept chatbot guidance and only correctly revised the feedback once. These results from constructive suggestions indicate participants were overconfident.
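The rejection and incorrect revision rates reported for each path are proportions over events. A minimal sketch of that arithmetic follows, assuming each event carries one of the two-letter codes (first letter C or I for correct or incorrect, second letter A or R for accepted or rejected guidance). The function names and the example codes are hypothetical and are not the study's data.

```python
from typing import Sequence


def rejection_rate(codes: Sequence[str]) -> float:
    """Share of events whose code ends in 'R' (guidance rejected)."""
    if not codes:
        raise ValueError("At least one event is required")
    return sum(code.endswith("R") for code in codes) / len(codes)


def incorrect_rate(codes: Sequence[str]) -> float:
    """Share of events whose code starts with 'I' (incorrect revision)."""
    if not codes:
        raise ValueError("At least one event is required")
    return sum(code.startswith("I") for code in codes) / len(codes)


# Illustrative codes only; these are not the study's data.
example_codes = ["CR", "IR", "IR", "CA"]
print(f"rejection rate: {rejection_rate(example_codes):.0%}")
print(f"incorrect rate: {incorrect_rate(example_codes):.0%}")
```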

Positive comments

For positive comments, five participants (10 events) followed path D. They rejected the chatbot’s guidance in 70% of events yet correctly revised positive comments in 90% of events across thesis statement and arguments and counterarguments. This result may indicate prior chatbot instructions were effective.

Negative comments

Path D illustrates a high rate of rejecting the chatbot’s guidance (71%), and more than 50% of revision events were incorrect on both thesis statement and arguments and counterarguments. There were two events in path E, with rejection and incorrect revision rates of 50% each. This is further evidence that participants were overconfident after the first session.

Posing questions for improvement

Interestingly, path J shows that most participants (n = 15) incorrectly revised fabricated feedback when they rejected the chatbot’s guidance on thesis statement. The correct revision rate in posing questions for improvement is less than 50%, with a very high guidance rejection rate of 94%. This evidence of overconfidence is consistent with the results for constructive suggestions and negative comments. In the final section, we discuss how these interaction pathways provide guidance for future instructional design.

Discussion and recommendation

Very little research uncovers students’ learning traces and conversational trajectories with instructional chatbots in relation to developing students’ writing skills (Malik et al., 2023; Reiners et al., 2014; Wang & Petrina, 2013). Filling this gap, this study introduced a methodological framework depicting student-chatbot interaction pathways. Winne and Nesbit’s (2010) problematization of the snapshot approach to research guided the development of the methodology proposed in this paper. Lin and Chang’s study (2020) reported a positive effect of using the same chatbot in writing instruction. Their finding is an instance of what Winne and Nesbit (2010) called SBBG, the “snapshot, bookend, between-group paradigm” (p. 669). In this study, we mapped out the trajectories of each participant’s conversation sessions with the chatbot and indicated the specific decisions and pathways each participant travelled. Mapping out the interaction patterns allows us (the researchers, teachers, or instructional designers) to trace learners’ psychological state of learning (i.e., the judgement of learning) and understand the pathways of learning that lead to the actions they take for the task at hand. The specific case we investigated concerned learning to give effective feedback (peer review) about a peer’s writing (Cho & Cho, 2011; Cho & Schunn, 2007; Min, 2005; Wu & Schunn, 2023). We suggest the new methodology in this study can help trace and better understand student-chatbot interaction pathways.

Possible paths were visualized to describe how participants interact with a chatbot when offered choices for learning about four forms of feedback in peer review: constructive suggestions, positive comments, negative comments, and posing questions for improvement. It is difficult to judge the effectiveness of a chatbot’s instruction just by examining the results presented in Tables 2 and 3. For instance, a participant who is instructed about a form of feedback and successfully completes the CCQs appears to show the effectiveness of chatbot instruction (path D). However, as our findings show, this participant may not correctly identify and revise the corresponding feedback in the second session. An example appears in Fig. 7, where only 43% of participants on path D correctly identified and revised the fabricated feedback on the thesis statement or arguments and counterarguments. Our findings underscore the challenge of relying solely on CCQs to gauge understanding. This directly informs our first recommendation, emphasizing the need for a more comprehensive approach to CCQs. Our preliminary findings suggest participants were overconfident in their judgement as they often misjudged their understanding of how to provide feedback. If such participants are given options to engage with a chatbot, they may choose ineffectively.

Schwartz (1994) described judgements like those learners make about optional engagements with a chatbot as a “process of making a prospective judgment at the time of retrieval” (p. 364). Consider Fig. 7 as an example of participants’ interaction pathways regarding constructive suggestions. Four participants who travelled path D indicated they knew how to provide constructive suggestions. However, only one participant correctly identified and revised constructive suggestions on thesis statement and arguments and counterarguments. Studies have found that students typically struggle to make accurate judgements about their learning (Glenberg et al., 1982; Pashler et al., 2007).

Thus, consistent with Winne and Nesbit’s revised research paradigm for research and practice (2010), this pilot study has proposed a new way that helps researchers to collect data that trace learners’ psychological states over time when they interact with the chatbot. This proposed methodology also helps to conceptualize the learners’ pathways of learning and how they process knowledge and make decisions at each stage to have a more grounded explanation for what learners are doing in self-regulatory processes. Given our findings about students’ overconfidence and misjudgments, designers should strive to identify students’ decision-making processes when engineering a chatbot for educational purposes. This insight directly leads to our recommendation about considering students’ potential inaccuracies or overconfidence in their decisions.

However, our findings also raise another question: Is an instructional design for a chatbot validly guided by using true/false CCQs? Is asking a student to judge whether they know how to develop a particular form of feedback a sufficient indication of what they know? The purpose of CCQs in the chatbot was to check prior learning and whether students understood content (Chen et al., 2009; King, 1994). Roediger and Karpicke (2006) point out that testing comprehension through CCQs can engage students with learning materials. Similarly, O’Dowd (2018) reported that students perceived quizzes as a formative tool for checking understanding and learning. The more quizzes they attempted, the better their online engagement as measured by task completion. Based on our findings and the interaction patterns, designers should ensure students can complete a given task successfully rather than relying on just asking them to judge their achievement. CCQs might be better designed as actual tasks students complete rather than judgments about their ability to complete tasks.

Lastly, we have developed a novel methodology to examine interaction pathways relating to two chatbot sessions: learning how to provide peer feedback and revising peer feedback. The former session focuses on chatbot-to-student interaction, whereas the latter session focuses on examining whether students can successfully apply what they learned in the first session to giving feedback. As developed in Sect. “Examining participants’ review ability and interaction pathways”, even when the chatbot appeared effective in the first session (path D), some participants did not successfully revise fabricated feedback in the second session. To successfully revise the fabricated feedback, participants must give four types of effective feedback on the outlines. However, not all the participants were successful. It is possible the instruction was not explicit: neither the fabricated feedback review sheet nor the chatbot told participants to provide feedback on both thesis statements and arguments and counterarguments. Therefore, we recommend incorporating explicit instruction on peer review tasks in future chatbot designs.

On the technical side, we also want to share some experiences of building the chatbot. To avoid failures in student-to-chatbot conversation, we structured the flow of conversation using buttons in place of natural language. Studies (e.g., Kerly et al., 2007; Reiners et al., 2014) have suggested that an effective NLU may facilitate better learning outcomes. Jain et al. (2018) further suggested that a chatbot must proactively ask effective questions to reduce the search space of the NLU and engage users in a meaningful conversation. Consequently, future chatbot designers might give greater attention to improving the NLU mechanism.
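A button-driven conversation can be modelled as a small graph in which every student action is one of a fixed set of labels, so no free-text interpretation is needed. The sketch below illustrates that idea only; the node names, prompts, and transitions are hypothetical and do not reproduce the chatbot's actual script.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(frozen=True)
class Node:
    """One chatbot turn: a prompt plus the buttons offered to the student."""
    prompt: str
    buttons: Dict[str, str] = field(default_factory=dict)  # label -> next node id


# Hypothetical flow; node ids, prompts, and transitions are assumptions.
FLOW: Dict[str, Node] = {
    "ask": Node(
        "Do you know how to give constructive suggestions?",
        {"yes": "check", "no": "teach"},
    ),
    "teach": Node("(instruction text goes here)", {"continue": "check"}),
    "check": Node("(comprehension check question)", {"true": "done", "false": "done"}),
    "done": Node("Session complete."),
}


def press(node_id: str, label: str) -> str:
    """Follow one button press; reject labels the current node does not offer."""
    node = FLOW[node_id]
    if label not in node.buttons:
        raise ValueError(f"{label!r} is not a button on node {node_id!r}")
    return node.buttons[label]


def walk(start: str, presses: List[str]) -> List[str]:
    """Return the sequence of node ids visited for a list of button presses."""
    path = [start]
    for label in presses:
        path.append(press(path[-1], label))
    return path


print(walk("ask", ["no", "continue", "true"]))
```

Because every transition is explicit, the visited path doubles as the interaction log that later pathway analysis relies on.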

Furthermore, the design of a chatbot needs progressive refinement that leverages users’ data. Prior studies have illustrated ways to examine users’ input to understand and improve student-to-chatbot interaction (Pereira et al., 2018; Picciano, 2012; Wang & Petrina, 2013). Our preliminary study adds a new approach to those lines of work, emphasizing the importance of iterative design based on user interactions.

In summary, we recommend that future chatbot designers consider these guidelines to improve methodology when designing a chatbot for an educational setting:

(1) Redesign CCQs to be more informed about students’ knowledge beyond what can be revealed by true/false questions. Our interaction patterns showed students often misjudged their understanding, suggesting a need for more comprehensive CCQs.

(2) Improve the NLU to manage a wider range of conversational forms (Reiners et al., 2014; Wang & Petrina, 2013).

(3) Enhance a chatbot’s ability to detect errors students make and lend support to correct errors.

(4) Ensure easy access to lend assistance with a task, for example, as Kerly et al. (2007) suggested, embed the chatbot window or sidebar within a webpage or application window.

(5) Progressive refinement from user input can successively improve chatbot performance and enhance student learning and interaction (Pereira et al., 2018; Wang & Petrina, 2013). As our study showed, understanding nuances of student-chatbot interactions can provide valuable insights for refining chatbot design.

While we explored one task, improving an essay’s thesis statement, arguments and counterarguments, we recommend generalizing to other genres, such as lab reports or expository essays. Future research also should explore generalizations of the methodology developed here to investigate data-driven decision-making that can further enhance the effects of a chatbot by utilizing learning analytics (Picciano, 2012).

Limitations

This study took an initial step toward developing a methodology to expand understanding of innovative chatbot educational technology. There are several limitations. First, the small sample size constrains understanding of specific student interaction pathways in relation to the chatbot used in this study. Second, the small training set of thesis statements (about 200) limited the NLU’s accuracy. Because of this limitation, the NLU may have misclassified similar sentences, which can confuse a chatbot (Clarizia et al., 2018).

An example is:

Intent question: “What is a thesis statement?”

Intent thesis_statement: “My thesis statement is positive reinforcement is beneficial to student learning”

Intent thesis_statement_clarification: “Is my thesis statement positive reinforcement may beneficial learning a well-structured thesis statement?”

Such an example may confuse the chatbot because the sentences are similar: the intent thesis_statement_clarification might be misidentified as the intent question or the intent thesis_statement. As suggested by the Rasa team, the more training data provided, the fewer errors occur. We provided about 200 thesis statements as training data. However, after we trained the NLU model, the chatbot still sometimes failed to identify the intent.
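One rough way to see why these sentences are hard to separate is to measure how many words they share. The sketch below computes Jaccard word overlap between the three example utterances. It is only a proxy chosen for illustration, not how Rasa or the study's NLU model classifies intents, and the function names are hypothetical.

```python
import re
from typing import Set

# The three example utterances quoted above, keyed by intent.
EXAMPLES = {
    "question": "What is a thesis statement?",
    "thesis_statement": (
        "My thesis statement is positive reinforcement is beneficial "
        "to student learning"
    ),
    "thesis_statement_clarification": (
        "Is my thesis statement positive reinforcement may beneficial "
        "learning a well-structured thesis statement?"
    ),
}


def tokens(text: str) -> Set[str]:
    """Lowercase the text and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def jaccard(a: str, b: str) -> float:
    """Shared words divided by all distinct words across both sentences."""
    words_a, words_b = tokens(a), tokens(b)
    return len(words_a & words_b) / len(words_a | words_b)


target = EXAMPLES["thesis_statement_clarification"]
for intent in ("question", "thesis_statement"):
    overlap = jaccard(target, EXAMPLES[intent])
    print(f"clarification vs {intent}: {overlap:.2f}")
```

The clarification utterance shares most of its words with the thesis_statement example, which is consistent with the confusion described above.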

This study did not examine reasons for judgements or decisions students made. This information could be beneficial for understanding whether and how student judgement contributes to performance (Bol & Hacker, 2012). Future research might include methods to explore students’ reasons for their judgements and choices.

Future work

Some students misjudged their understanding of how to give feedback in this study. First, future research could investigate whether increasing the focus of CCQs, increasing the frequency of testing, or re-engaging students in learning from a chatbot might repair this problem. Second, future work is recommended on improving a chatbot’s accuracy to better identify and classify intents. Improving the NLU may require (a) assembling a large-scale repository of student writing to mark issues and solutions in essays (Kerly et al., 2007; Lin & Chang, 2020; Wang & Petrina, 2013), (b) applying semantic processing based on conceptual representations of knowledge (Goel & Polepeddi, 2019), (c) using a self-repair model with native/non-native speaker chat data (Höhn, 2017), and (d) incorporating a linguistic discourse tree (Galitsky & Ilvovsky, 2017). Third, future research may explore how students learn with guidance provided by a chatbot about other sections of an essay (e.g., body paragraphs) and other essay types (e.g., expository or narrative).

References

Abbasi, S., & Kazi, H. (2014). Measuring effectiveness of learning chatbot systems on student’s learning outcome and memory retention. Asian Journal of Applied Science and Engineering, 3(2), 251–260.

Ahmad, N. A., Baharum, Z., Hamid, M. H. C., & Zainal, A. (2020). UNISEL Bot: Designing simple chatbot system for university FAQS. International Journal of Innovative Technology and Exploring Engineering, 9(2), 4689–4693. https://doi.org/10.35940/ijitee.B9067.129219

Ait Baha, T., El Hajji, M., Es-Saady, Y., & Fadili, H. (2023). The power of personalization: A systematic review of personality-adaptive chatbots. SN Computer Science, 4(5), 661. https://doi.org/10.1007/s42979-023-02092-6

Bellhäuser, H., Liborius, P., & Schmitz, B. (2022). Fostering self-regulated learning in online environments: Positive effects of a web-based training with peer feedback on learning behavior. Frontiers in Psychology. https://doi.org/10.3389/fpsyg.2022.813381

Bocklisch, T., Faulkner, J., Pawlowski, N., & Nichol, A. (2017). Rasa: Open source language understanding and dialogue management. arXiv preprint arXiv:1712.05181. https://doi.org/10.48550/arXiv.1712.05181

Bol, L., & Hacker, D. J. (2012). Calibration research: Where do we go from here? Frontiers in Psychology, 3, 229. https://doi.org/10.3389/fpsyg.2012.00229

Brown, J. S., Collins, A., & Duguid, P. (1989). Situated cognition and the culture of learning. Educational Researcher, 18(1), 32–42.

Chang, D. (2021). What’s within a thesis statement? Exploring features of argumentative thesis statements (unpublished doctoral dissertation). Simon Fraser University.

Chang, D. H., Lin, M.P.-C., Hajian, S., & Wang, Q. Q. (2023). Educational design principles of using AI chatbot that supports self-regulated learning in education: Goal setting, feedback, and personalization. Sustainability, 15(17), 12921. https://doi.org/10.3390/su151712921

Chen, N. S., Wei, C. W., Wu, K. T., & Uden, L. (2009). Effects of high level prompts and peer assessment on online learner’s reflection levels. Computers & Education, 52(2), 283–291. https://doi.org/10.1016/j.compedu.2008.08.007

Chen, Y. L., Liu, E. Z. F., Shih, R. C., Wu, C. T., & Yuan, S. M. (2011). Use of peer feedback to enhance elementary students’ writing through blogging. British Journal of Educational Technology, 42(1), E1–E4. https://doi.org/10.1111/j.1467-8535.2010.01139.x

Cho, K., & Schunn, C. D. (2007). Scaffolded writing and rewriting in the discipline: A web-based reciprocal peer review system. Computers & Education, 48(3), 409–426. https://doi.org/10.1016/j.compedu.2005.02.004

Cho, Y. H., & Cho, K. (2011). Peer reviewers learn from giving comments. Instructional Science, 39(5), 629–643.

Clancey, W. J. (2008). Scientific antecedents of situated cognition. Cambridge Handbook of Situated Cognition. https://doi.org/10.1017/CBO9780511816826.002

Clarizia, F., Colace, F., Lombardi, M., Pascale, F., & Santaniello, D. (2018). Chatbot: An education support system for student. In A. Castiglione, F. Pop, M. Ficco, & F. Palmieri (Eds.), International symposium on cyberspace safety and security. Springer.

Collins, A., Brown, J. S., & Holum, A. (1991). Cognitive apprenticeship: Making thinking visible. American Educator, 15(3), 6–11. https://www.psy.lmu.de/isls-naples/intro/all-webinars/collins/cognitive-apprenticeship.pdf

Collins, A., Brown, J. S., & Newman, S. E. (1988). Cognitive apprenticeship: Teaching the craft of reading, writing and mathematics. Thinking: the Journal of Philosophy for Children, 8(1), 2–10.

Dick, W. (1991). An instructional designer’s view of constructivism. Educational Technology, 31(5), 41–44.

Dochy, F. J. R. C., Segers, M., & Sluijsmans, D. (1999). The use of self-, peer- and co-assessment in higher education: A review. Studies in Higher Education, 24(3), 331–350. https://doi.org/10.1080/03075079912331379935

Følstad, A., & Brandtzaeg, P. B. (2020). User’s experiences with Chatbots: Findings from a questionnaire study. Quality and User Experience, 5(1), 3. https://doi.org/10.1007/s41233-020-00033-2

Fryer, L. K., Ainley, M., Thompson, A., Gibson, A., & Sherlock, Z. (2017). Stimulating and sustaining interest in a language course: An experimental comparison of Chatbot and human task partners. Computers in Human Behavior, 75, 461–468. https://doi.org/10.1016/j.chb.2017.05.045

Fryer, L., & Carpenter, R. (2006). Bots as language learning tools. Language Learning & Technology, 10(3), 8–14.

Gielen, S., Peeters, E., Dochy, F., Onghena, P., & Struyven, K. (2010). Improving the effectiveness of peer feedback for learning. Learning and Instruction, 20(4), 304–315. https://doi.org/10.1016/j.learninstruc.2009.08.007

Glenberg, A. M., Wilkinson, A. C., & Epstein, W. (1982). The illusion of knowing: Failure in the self-assessment of comprehension. Memory & Cognition, 10(6), 597–602. https://doi.org/10.3758/BF03202442

Goda, Y., Yamada, M., Matsukawa, H., Hata, K., & Yasunami, S. (2014). Conversation with a chatbot before an online EFL group discussion and the effects on critical thinking. The Journal of Information and Systems in Education, 13(1), 1–7. https://doi.org/10.12937/ejsise.13.1

Goel, A. K., & Polepeddi, L. (2019). Jill Watson. In C. Dede, J. Richards, & B. Saxberg (Eds.), Learning engineering for online education (pp. 120–143). Routledge.

Goli, M., Sahu, A. K., Bag, S., & Dhamija, P. (2023). User’s acceptance of artificial intelligence-based chatbots: An empirical study. International Journal of Technology and Human Interaction (IJTHI), 19(1), 1–18. https://doi.org/10.4018/IJTHI.318481

Hsu, Y. H., & Cho, K. (2011). Peer reviewers learn from giving comments. Instructional Science, 39(5), 629–643.

Iglesias Pérez, M. C., Vidal-Puga, J., & Pino Juste, M. R. (2020). The role of self and peer assessment in higher education. Studies in Higher Education. https://doi.org/10.1080/03075079.2020.1783526

Kakar, S., Maiti, P., Taneja, K., Nandula, A., Nguyen, G., Zhao, A., Nandan, V., & Goel, A. (2024). Jill Watson: scaling and deploying an AI conversational agent in online classrooms. In A. Sifaleras & F. Lin (Eds.), Generative intelligence and intelligent tutoring systems (pp. 78–90). Springer.

Kerly, A., Hall, P., & Bull, S. (2007). Bringing chatbots into education: Towards natural language negotiation of open learner models. Knowledge-Based Systems, 20(2), 177–185. https://doi.org/10.1016/j.knosys.2006.11.014

King, A. (1994). Guiding knowledge construction in the classroom: Effects of teaching children how to question and how to explain. American Educational Research Journal, 31(2), 338–368. https://doi.org/10.3102/00028312031002338

Kulkarni, C., Kotturi, Y., Bernstein, M. S., & Klemmer, S. (2016). Designing scalable and sustainable peer interactions online. Design thinking research. Springer.

Lan, Y. F., & Lin, P. C. (2011). Evaluation and improvement of student’s question-posing ability in a web-based learning environment. Australasian Journal of Educational Technology, 27(4), 581–599. https://doi.org/10.14742/ajet.939

Lin, C.-C., Huang, A. Y. Q., & Yang, S. J. H. (2023). A review of AI-driven conversational chatbots implementation methodologies and challenges (1999–2022). Sustainability. https://doi.org/10.3390/su15054012

Lin, M. P. C., & Chang, D. (2020). Enhancing post-secondary writers’ writing skills with a chatbot: A mixed-method classroom study. Journal of Educational Technology & Society, 23(1), 78–92.

Lin, M. P. C., & Chang, D. (2023). CHAT-ACTS: A pedagogical framework for personalized chatbot to enhance active learning and self-regulated learning. Computers and Education: Artificial Intelligence. https://doi.org/10.1016/j.caeai.2023.100167

Lin, M. P. C., Chang, D., Hall, S., & Jhajj, G. (2024). Preliminary systematic review of open-source large language models in education. In A. Sifaleras & F. Lin (Eds.), Generative intelligence and intelligent tutoring systems (pp. 68–77). Springer.

Ma, W., Adesope, O. O., Nesbit, J. C., & Liu, Q. (2014). Intelligent tutoring systems and learning outcomes: A meta-analysis. Journal of Educational Psychology, 106(4), 901–908. https://doi.org/10.1037/a0037123

Macdonald, J. (2001). Exploiting online interactivity to enhance assignment development and feedback in distance education. Open Learning: The Journal of Open, Distance and e-Learning, 16(2), 179–189. https://doi.org/10.1080/02680510120050334

Malik, A. R., Pratiwi, Y., Andajani, K., Numertayasa, I. W., Suharti, S., & Darwis, A. (2023). Exploring artificial intelligence in academic essay: Higher education student’s perspective. International Journal of Educational Research Open, 5, 100296. https://doi.org/10.1016/j.ijedro.2023.100296

Min, H. T. (2005). Training students to become successful peer reviewers. System, 33(2), 293–308. https://doi.org/10.1016/j.system.2004.11.003

Murray, T. (1999). Authoring intelligent tutoring systems: An analysis of the state of the art. International Journal of Artificial Intelligence in Education, 10, 98–129.

Nelson, M. M., & Schunn, C. D. (2009). The nature of feedback: How different types of peer feedback affect writing performance. Instructional Science, 37(4), 375–401. https://doi.org/10.1007/s11251-008-9053-x

Nicol, D. (2021). The power of internal feedback: Exploiting natural comparison processes. Assessment & Evaluation in Higher Education, 46(5), 756–778.

O’Dowd, I. (2018). Using learning analytics to improve online formative quiz engagement. Irish Journal of Technology Enhanced Learning, 3(1), 30–43. https://doi.org/10.22554/ijtel.v3i1.25

Panadero, E. (2016). Is it safe? Social, interpersonal, and human effects of peer assessment. Handbook of human and social conditions in assessment (pp. 247–266). Routledge.

Panadero, E., & Lipnevich, A. A. (2022). A review of feedback models and typologies: Towards an integrative model of feedback elements. Educational Research Review, 35, 100416. https://doi.org/10.1016/j.edurev.2021.100416

Patchan, M., Schunn, C., & Correnti, R. (2016). The nature of feedback: How peer feedback features affect student’s implementation rate and quality of revisions. Journal of Educational Psychology, 108, 1098–1120. https://doi.org/10.1037/EDU0000103

Patton, M. Q. (1990). Qualitative evaluation and research methods (2nd ed.). Sage.

Pereira, J., & Díaz, Ó. (2018). Chatbot dimensions that matter: Lessons from the trenches. International conference on web engineering (pp. 129–135). Springer.

Pérez, J. Q., Daradoumis, T., & Puig, J. M. M. (2020). Rediscovering the use of chatbots in education: A systematic literature review. Computer Applications in Engineering Education, 28(6), 1549–1565. https://doi.org/10.1002/cae.22326

Picciano, A. G. (2012). The evolution of big data and learning analytics in American higher education. Journal of Asynchronous Learning Networks, 16(3), 9–20.

Poitras, E., Crane, B., Dempsey, D., Bragg, T., Siegel, A., & Lin, M. P. C. (2024). Cognitive apprenticeship and artificial intelligence coding assistants. In C. Bosch, L. Goosen, & J. Chetty (Eds.), Navigating computer science education in the 21st century. IGI Global.

Prins, F. J., Sluijsmans, D. M., & Kirschner, P. A. (2006). Feedback for general practitioners in training: Quality, styles, and preferences. Advances in Health Sciences Education, 11(3), 289–303. https://doi.org/10.1007/s10459-005-3250-z

Ranavare, S. S., & Kamath, R. S. (2020). Artificial intelligence based chatbot for placement activity at college using dialogflow. Our Heritage, 68(30), 4806–4814.

Redfield, D., & Rousseau, E. (1981). A meta-analysis of experimental research on teacher questioning behavior. Review of Educational Research, 51(2), 237–245. https://doi.org/10.3102/00346543051002237

Reiners, T., Wood, L., & Bastiaens, T. (2014). Design perspective on the role of advanced bots for self-guided learning. The International Journal of Technology, Knowledge, and Society, 9(4), 187–199.

Reinholz, D. (2016). The assessment cycle: A model for learning through peer assessment. Assessment & Evaluation in Higher Education, 41(2), 301–315. https://doi.org/10.1080/02602938.2015.1008982

Roediger, H. L., III., & Karpicke, J. D. (2006). The power of testing memory: Basic research and implications for educational practice. Perspectives on Psychological Science, 1(3), 181–210. https://doi.org/10.1111/j.1745-6916.2006.00012.x

Sadler, P. M., & Good, E. (2006). The impact of self-and peer-grading on student learning. Educational Assessment, 11(1), 1–31.

Sarosa, M., Kusumawardani, M., Suyono, A., & Wijaya, M. H. (2020). Developing a social media-based Chatbot for English learning. IOP Conference Series: Materials Science and Engineering, 732(1), 012074. https://doi.org/10.1088/1757-899X/732/1/012074

Sarosa, M., Wijaya, M. H., Tolle, H., & Rakhmania, A. E. (2022). Implementation of Chatbot in online classes using Google Classroom. International Journal of Computing, 21(1), 42–51. https://doi.org/10.47839/ijc.21.1.2516

Saucier, D., Paré, L., Côté, L., & Baillargeon, L. (2012). How core competencies are taught during clinical supervision: Participatory action research in family medicine. Medical Education, 46(12), 1194–1205.

Schuetzler, R., Grimes, G., & Giboney, J. (2020). The impact of chatbot conversational skill on engagement and perceived humanness. Journal of Management Information Systems, 37, 875–900. https://doi.org/10.1080/07421222.2020.1790204

Schwartz, B. L. (1994). Sources of information in metamemory: Judgments of learning and feelings of knowing. Psychonomic Bulletin & Review, 1(3), 357–375. https://doi.org/10.3758/BF03213977

Sinha, S., Basak, S., Dey, Y., & Mondal, A. (2020). An educational chatbot for answering queries. In J. Kumar Mandal & D. Bhattacharya (Eds.), Emerging technology in modelling and graphics. Springer.

Sluijsmans, D. M., Brand-Gruwel, S., & van Merriënboer, J. J. (2002). Peer assessment training in teacher education: Effects on performance and perceptions. Assessment & Evaluation in Higher Education, 27(5), 443–454. https://doi.org/10.1080/0260293022000009311

Smutny, P., & Schreiberova, P. (2020). Chatbots for learning: A review of educational chatbots for the Facebook Messenger. Computers & Education, 151, 103862. https://doi.org/10.1016/j.compedu.2020.103862

Stalmeijer, R. E., Dolmans, D. H., Wolfhagen, I. H., & Scherpbier, A. J. (2009). Cognitive apprenticeship in clinical practice: Can it stimulate learning in the opinion of students? Advances in Health Sciences Education, 14(4), 535–554.

Stöhr, F. (2024). Advancing language models through domain knowledge integration: A comprehensive approach to training, evaluation, and optimization of social scientific neural word embeddings. Journal of Computational Social Science. https://doi.org/10.1007/s42001-024-00286-3

Strijbos, J. W., Narciss, S., & Dünnebier, K. (2010). Peer feedback content and sender’s competence level in academic writing revision tasks: Are they critical for feedback perceptions and efficiency? Learning and Instruction, 20(4), 291–303. https://doi.org/10.1016/j.learninstruc.2009.08.008

Topping, K. J., Smith, E. F., Swanson, I., & Elliot, A. (2000). Formative peer assessment of academic writing between postgraduate students. Assessment & Evaluation in Higher Education, 25(2), 149–169. https://doi.org/10.1080/713611428

Wang, Y. F., & Petrina, S. (2013). Using learning analytics to understand the design of an intelligent language tutor–Chatbot Lucy. International Journal of Advanced Computer Science and Applications, 4(11), 124–134. https://doi.org/10.14569/IJACSA.2013.041117

Weber, R. P. (1990). Basic content analysis (2nd ed.). Sage.

Weizenbaum, J. (1966). ELIZA-a computer program for the study of natural language communication between man and machine. Communications of the ACM, 9(1), 36–45. https://doi.org/10.1145/365153.365168

Wilson, B. G., Jonassen, D. H., & Cole, P. (1993). Cognitive approaches to instructional design. The ASTD Handbook of Instructional Technology, 4, 21–21.

Winne, P. H., & Nesbit, J. C. (2010). The psychology of academic achievement. Annual Review of Psychology, 61, 653–678.

Woodhouse, J., & Wood, P. (2020). Creating dialogic spaces: Developing doctoral students’ critical writing skills through peer assessment and review. Studies in Higher Education, 47, 643–655. https://doi.org/10.1080/03075079.2020.1779686

Woolley, N. N., & Jarvis, Y. (2007). Situated cognition and cognitive apprenticeship: A model for teaching and learning clinical skills in a technologically rich and authentic learning environment. Nurse Education Today, 27(1), 73–79.

Wu, Y., & Schunn, C. D. (2023). Passive, active, and constructive engagement with peer feedback: A revised model of learning from peer feedback. Contemporary Educational Psychology, 73, 102160. https://doi.org/10.1016/j.cedpsych.2023.102160

Yang, Y. F. (2010). Student’s reflection on online self-correction and peer review to improve writing. Computers & Education, 55(3), 1202–1210. https://doi.org/10.1016/j.compedu.2010.05.017

Zhang, X. S., & Zhang, L. J. (2022). Sustaining learner’s writing development: Effects of using self-assessment on their foreign language writing performance and rating accuracy. Sustainability. https://doi.org/10.3390/su142214686

Galitsky, B., & Ilvovsky, D. (2017). Chatbot with a discourse structure-driven dialogue management. In Proceedings of the Software Demonstrations of the 15th Conference of the European Chapter of the Association for Computational Linguistics (pp. 87–90). Association for Computational Linguistics.

Höhn, S. (2017). A data-driven model of explanations for a chatbot that helps to practice conversation in a foreign language. In Proceedings of the 18th annual SIGdial meeting on discourse and dialogue. Association for Computational Linguistics.

Jain, M., Kumar, P., Kota, R., & Patel, S. N. (2018). Evaluating and informing the design of chatbots. In Proceedings of the 2018 designing interactive systems conference (pp. 895–906). ACM. https://doi.org/10.1145/3196709.3196735

Luger, E., & Sellen, A. (2016). Like having a really bad PA: The gulf between user expectation and experience of conversational agents. In Proceedings of the 2016 CHI conference on human factors in computing systems (pp. 5286–5297). ACM. https://doi.org/10.1145/2858036.2858288

Mekni, M., Baani, Z., & Sulieman, D. (2020). A smart virtual assistant for students. In Proceedings of the 3rd international conference on applications of intelligent systems (pp. 1–6). Association for Computing Machinery. https://doi.org/10.1145/3378184.3378199

Pashler, H., Bain, P. M., Bottge, B. A., Graesser, A., Koedinger, K., McDaniel, M., & Metcalfe, J. (2007). Organizing instruction and study to improve student learning. IES Practice Guide. NCER 2007–2004. National Center for Education Research.

Pham, X. L., Pham, T., Nguyen, Q. M., Nguyen, T. H., & Cao, T. T. H. (2018). Chatbot as an intelligent personal assistant for mobile language learning. In Proceedings of the 2018 2nd international conference on education and E-learning (pp. 16–21). ACM. https://doi.org/10.1145/3291078.3291115

Serban, I. V., Sankar, C., Germain, M., Zhang, S., Lin, Z., Subramanian, S., Kim, T., Pieper, M., Chandar, S., Ke, N. R., Rajeshwar, S., de Brebisson, A., Sotelo, J. M. R., Suhubdy, D., Michalski, V., Nguyen, A., Pineau, J., & Bengio, Y. (2017). A deep reinforcement learning chatbot. arXiv preprint arXiv:1709.02349.

Song, D., Oh, E. Y., & Rice, M. (2017). Interacting with a conversational agent system for educational purposes in online courses. In 2017 10th international conference on human system interactions (HSI) (pp. 78–82). IEEE. https://doi.org/10.1109/HSI.2017.8005002

Wang, Q., Jing, S., Camacho, I., Joyner, D., & Goel, A. (2020). Jill Watson SA: Design and evaluation of a virtual agent to build communities among online learners. In Extended abstracts of the 2020 CHI conference on human factors in computing systems (pp. 1–8). https://doi.org/10.1145/3334480.3382878

Acknowledgements

This research was supported by the Social Sciences and Humanities Research Council of Canada (SSHRC), grant numbers 435-2015-0273 (Dr. Philip Winne), 430-2024-00269 (Dr. Michael Lin), and 31-28679 (Dr. Daniel Chang). The APC was funded by the SSHRC Insight Development Grant (Dr. Michael Lin) and SFU’s Small Explore Grant (Dr. Daniel Chang). We also thank the journal editor and the anonymous reviewers for their feedback. All personal, course, and institution names have been replaced with pseudonyms to ensure confidentiality.

Funding

Social Sciences and Humanities Research Council: 435-2015-0273 (Philip Winne); 430-2024-00269 (Michael Pin-Chuan Lin); 31-28679 (Daniel Chang).

Ethics declarations

Conflict of interest

The main parts of this research were incorporated into the authors’ doctoral dissertations. The authors have no conflict of interest to disclose.

Ethical approval

This research involved human participants. Ethical approval (#20180734) for this study was granted by the Office of Research Ethics, Simon Fraser University.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A

Dialectical map sample A

THESIS STATEMENT: Reinforcement is the teaching practice that uses consequences to strengthen a desired behaviour. Although there are different types of reinforcements, they all lead to the same conclusion of either creating a new and desired behaviour or correcting it. The Reinforcement teaching practice relates to Behaviourism as it promotes modified behaviour. Behaviourism emphasizes the role that environmental factors have when it comes to influencing behaviour. Reinforcement relates to Behaviourism through the connection that behaviours can either be made or modified by one’s surroundings. Reinforcement allows the teacher to be able to provide the necessary feedback one needs in regards to changing or creating new behaviour. Through the teaching practice of Reinforcement, students are able to modify or create appropriate behaviour, improve and increase motivation, as well as helping to establish the student’s autonomy and self efficacy.

ARGUMENT: The use of Reinforcement entices new responses and behaviours from the student. Behaviourism mentions that one’s behaviours are often influenced by their peers, culture, and society. Reinforcement helps shape that intuitive behaviour learned from their surrounding to make it appropriate. Positive Reinforcement occurs when the appropriate behaviour is strengthened by presenting an advantageous or desired result for the student.

EVIDENCE 1: An example of this may be a student wearing a new outfit which may produce compliments by those around them. Another example is a child constantly scoring well on their exams and receiving a toy of their choice from their parents.

WARRANT 1: Another example is a child constantly scoring well on their exams and receiving a toy of their choice from their parents.

EVIDENCE 2: Another example is a child constantly scoring well on their exams and receiving a toy of their choice from their parents.

COUNTERARGUMENT: Reinforcement is ineffective in teaching because students may depend on the reinforcer in order to achieve the appropriate behaviour. The students may rely on the teaching practice rather than understanding that this is a way for them to learn situational behaviours. The student may not completely understand that the purpose of the feedbacks that they receive are for them to understand their actions and the way they think. Students may rely on the teacher to always prompt them for the appropriate behaviour and this reliance does not help the child develop their sense of autonomy.

EVIDENCE 1: A student who always received positive reinforcements might think that acting appropriately in the situation means that they deserve a prize afterward. To clarify, if a student who is always gifted a prize after producing the desired behaviour will expect a prize in return when reacting the way they are supposed to.

WARRANT 1: If a student were to expect this as they grow, they end up using the reinforcement as an extrinsic motivator rather than intrinsic motivation to establish the appropriate behaviour.

Peer feedback sample sheet A

Improving Feedback on Outline

A. Positive Comments: On which part did the writer do a good job? and Why?

The thesis statement is clear. Good job.

B. Negative Comments: On which part did the writer need to improve? and Why?

Although you provide several bullet points in your argument, your supporting evidence is unclear.

You don’t have three arguments.

No supporting evidence.

C. Constructive suggestions: What should the writer have done in order to improve the map?

There are three same sentences in your evidence and warrant under argument: Another example is a child constantly scoring well on their exams and receiving a toy of their choice from their parents.

D. Pose a question: Provide one question to the writer to improve this map.

Do you think your argument will be stronger if you provide supporting evidence to each of your bullet point?

Appendix B

Interaction pathways of all other students as they worked with the chatbot on constructive suggestions, positive comments, negative comments, and posing questions for revision.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.
