Abstract

Comparison is an important mechanism for learning in general, and comparing two worked examples has garnered support over the last 15 years as an effective tool for learning algebra in mainstream classrooms. This study aimed to improve our understanding of how Modified for Language Support-Worked Example Pairs (MLS-WEPs) contribute to effective mathematics learning in an ESOL (English for Speakers of Other Languages) context. It investigated a novel instructional approach to help English Learners (ELs) develop better understanding in mathematical reasoning, problem solving, and literacy skills (listening, reading, writing, and speaking). Findings suggest that MLS-WEPs not only enhanced ELs’ ability to solve algebra problems but also improved their written explanation skills and enabled them to transfer such skills to different mathematical concepts. Moreover, when controlling for ELs’ prior knowledge, the effectiveness of the MLS-WEPs intervention for performing and explaining calculations did not vary by their English proficiency.


According to the National Center for Education Statistics (NCES), English Learners (ELs) make up 9.6% of students in United States public schools, or about 4.9 million students. An EL is defined as any student in a U.S. school setting whose native language is not English (Kersaint et al., 2008). ELs have been a historically underrepresented group in STEM (Science, Technology, Engineering, and Math) (The National Clearinghouse for English Language Acquisition & Language Instruction Educational Programs, 2010). Prior research has found that teachers tend to use computational tasks and fail to provide opportunities for students to explain solutions and rationales when teaching math to ELs and other marginalized groups (e.g., de Araujo, 2017). However, it is recommended that teachers of ELs provide effective instructional tasks that are centered on math-rich activities, promote dialogue, and help ELs perform well on content-based assessments while also supporting their developing language proficiency (Abedi et al., 2005; Moschkovich, 2015). The current study examined whether using the supports of Modified for Language Support-Worked Example Pairs (MLS-WEPs) could help ELs improve mathematical performance, which includes mathematical literacy (i.e., listening, reading, writing, and speaking) and problem-solving skills. MLS-WEPs is a supplemental curriculum for ELs that requires them to compare worked examples in order to deepen their understanding of mathematics through rich and structured mathematical discourse and writing.

Literature review

To situate the study, we describe two perspectives that guide the current research: cognitive load theory and mathematical learning in a second language context. Situated in these broad perspectives, we then elaborate on how instructional strategies can help English learners continue to develop proficiency in an additional language and learn mathematics more successfully. In particular, we present the research on how comparing worked examples can benefit mathematics learning. Next, we evaluate research on second language acquisition specific to mathematics content for ELs, to show how to create an effective second language acquisition environment that will help students develop English language proficiency and learn mathematics well. (Note: In this paper, we define English language proficiency as a student’s ability to use the English language to express and communicate in oral and written contexts while studying content subjects.) To intertwine comparison with mathematics learning in a second language context, we also analyze how the previous work on comparing worked examples in mathematics aligns with research recommendations for ELs.

Cognitive load theory and ELs

Mathematics is considered a “universal language” by many educators because the organization of symbols and formulas is the same for most countries in the world. They assume that mathematics is about numbers, symbols, and formulas, not language (Brown et al., 2009). They believe prior mathematical background is sufficient for understanding math concepts in second languages. However, mathematics requires more language proficiency than we would expect. As early as the 1990s, various researchers found that language components heavily affect mathematical learning and teaching (Clarkson & Galbraith, 1992; Ellerton & Clarkson, 1996; Jarrett, 1999). Moreover, research suggests that mathematical learning becomes more difficult when mathematics is learned in a second language context (Heng & Tang, 2003). One explanation of this issue is grounded in cognitive load theory (CLT), which refers to the amount of working memory used as resources during learning and problem solving (Sweller, 1988, 1989).

Working memory (WM) is involved in the learning of novel tasks, but it can handle only a very limited number (possibly fewer than four) of novel interacting elements, which is far fewer than the number of interacting elements required for most human intellectual activities (Paas et al., 2004). Additionally, information can only be retained in WM for about 30 s (Paas & Ayres, 2014). In sum, WM provides short-term, temporary memory storage. While ELs engage in mathematical learning, they use their working memory not only to handle mathematical content difficulties but also to deal with language challenges. For instance, ELs often translate their educational materials from English to their native languages (Kazima, 2007). This means that in addition to using working memory to master mathematical concepts, they must sometimes use their working memory to translate or code-switch between L1 (native language) and L2 (second language). In such cases, compared to their native English-speaking peers, without appropriate modifications and/or accommodations ELs might need to process more novel information at any given time, which takes up more space in the already limited capacity of working memory. Although translation may cost working memory, it allows ELs to retrieve appropriate cues from their long-term memory (LTM) and then process the information. Thus, learning mathematics in a foreign language is more likely to result in a heavier cognitive load on learners, and implementing an instructional method that reduces ELs’ cognitive load in WM is imperative. The goal is not to reduce the cognitive demands of the task, but to help ELs fully utilize their bilingual strengths to learn mathematics.

Cognitive load theory states that “effective instructional material facilitates learning by directing cognitive resources toward activities that are relevant to learning rather than toward preliminaries to learning” (Chandler & Sweller, 1991, p. 293). It aims at optimizing the learning of complex novel tasks by efficiently using the relation between the limited WM and unlimited LTM (Paas & Ayres, 2014). Cooper and Sweller (1987) found that worked examples (i.e., a step-by-step demonstration showing all the steps needed to complete a task or solve a problem) accelerate the automation process compared to a problem-solving approach. More importantly, Sweller (2011) claimed that worked examples productively provide learners with problem-solving schemas that are yet to be stored in LTM. Recently, substantial research on worked examples from laboratory studies has been extended to real-world classrooms, where the use of both correct and incorrect worked examples has been shown to positively support algebra learning (e.g., Barbieri, 2021; Booth et al., 2015). Yet to our knowledge and despite its benefit for WM, this work has not been extended to ELs.

In addition to asking students to study a single example, worked examples can be presented side-by-side for the purpose of comparison. Rittle-Johnson and Star (2007) found that comparing two worked examples facilitates learning in algebra. A practice guide from the US Department of Education indicates that comparison is one of the five recommendations for enhancing mathematical problem-solving skills in the middle grades (Star et al., 2015a, 2015b; Woodward et al., 2012). Next, we elaborate on the use of comparison in mathematics classrooms, with a particular focus on comparing worked examples.

Comparing worked examples during mathematics learning

Incorporating an analogical approach into instruction promotes learning and helps learners to be more flexible and effective in applying strategies (Große & Renkl, 2006). More importantly, the analogical approach allows learners to integrate and apply what they have learned to new contexts. However, this approach is prone to cognitive overload because analogical learning requires learners to synthesize different solution methods in their minds in order to extract valid information and gain a coherent understanding of the learning materials (Große & Renkl, 2006). Research over the past 30 years has shown that studying worked examples helps to reduce cognitive load on students (Cooper & Sweller, 1987; Sweller, 1988, 1989; Sweller & Cooper, 1985). However, worked examples are limited in developing insight into far-reaching mathematical interrelationships (Große & Renkl, 2006). Students studying a single worked example may focus on unimportant features of the problem, whereas comparing two worked examples can promote analogical reasoning and help students to notice important underlying mathematical structures (Rittle-Johnson et al., 2020). In this case, using worked examples alongside analogical learning may mitigate the cognitive overload that might arise from solely using analogical learning, thereby facilitating learning (Große & Renkl, 2006) and transfer of what has been learned to new contexts (Gentner et al., 2003; Rittle-Johnson et al., 2009, 2020; Schwartz & Bransford, 1998).

In mathematics instruction, comparing worked examples is powerful and promising for enhancing mathematics learning (Rittle-Johnson, Star, & Durkin, 2017). Students can benefit from comparing two correct methods for the same problem, a correct and an incorrect method, or two easily confused problem types (Rittle-Johnson et al., 2020). Research found that comparing worked examples can lead to greater procedural flexibility, conceptual knowledge, and procedural knowledge because it facilitates mathematical exploration and promotes the use of alternative solutions (Rittle-Johnson & Star, 2007). Comparing multiple correct methods promotes attention to and adoption of unconventional methods by directing attention to accuracy and efficiency of problem solving (Große & Renkl, 2006; Rittle-Johnson et al., 2009). When comparing a correct and an incorrect method, conflicting ideas and relevant concepts are highlighted, and comparing confusable problem types (e.g., \(2(x+5)\) and \((x+5)^2\)) can help students focus on important structural features rather than shallow ones (Rittle-Johnson et al., 2020).

As noted, studying worked examples presented side-by-side can ease cognitive load during comparison. Other supports include prompts for students to explain important ideas in the comparison and providing a summary at the end of the discussion. It can also be helpful to have some knowledge of one method being compared, particularly when comparing two correct methods to the same problem (Rittle-Johnson et al., 2020). However, research found that with appropriate instructional pacing and scaffolding, learners with limited prior knowledge had improved flexibility from comparing correct but unfamiliar worked examples (Rittle-Johnson et al., 2012). In a study exploring the feasibility of classroom implementation, Newton et al. (2010) demonstrated that high school students with gaps in their prior algebraic knowledge were able to learn multiple problem-solving strategies by comparing worked examples. However, in some cases those students chose to use familiar, general methods that were “most clear or understandable to them” (p. 34), even after expressing a preference for a more efficient method. It appears that flexibility takes time to develop, and prior knowledge plays a prominent role (Newton et al., 2010). In short, comparison takes mental effort and requires instructional support, but it can be an effective tool for deepening mathematical knowledge (Rittle-Johnson et al., 2020).

Although comparison is a powerful learning tool in cognitive science and comparing worked examples has shown promising results in mathematics classrooms (Rittle-Johnson et al., 2009, 2012; Star et al., 2015a, 2015b), comparing worked examples has not been experimented with in ESOL classrooms. Based on research on mathematical content for English learners (Kersaint et al., 2008), we hypothesize that the comparing worked examples approach to learning mathematics can also work in an ESOL context, with appropriate supports. In this section, we analyze the potential benefits of the comparing worked examples approach for ELs in terms of mathematics learning.

Mathematics literacy development in the ESOL context

Over four decades ago, researchers found that mathematics performance is highly correlated to language skills (Aiken, 1972; Cossio, 1978). Purpura and colleagues (2017) had two important findings: (1) math and reading achievement were highly correlated (r = 0.70), and (2) early math ability predicted later reading ability. The latter phenomenon was found to be due to the mediating role of children’s mathematical language, which again implicates the role of language competence in mathematics learning. Therefore, it is necessary to emphasize the importance of focusing on the language skills required for achievement in the mathematics curriculum (Cuevas, 1984).

The literature from the ESOL context of mathematics learning suggests that English learners should be given many opportunities to read, write, listen to, and discuss oral and written English and mathematical texts expressed in a variety of ways (Kersaint et al., 2008). Thus, it is necessary to provide explicit instruction that enables ELs to practice these four language domains. Teachers should provide ELs opportunities to discuss and share mathematical concepts in order to help them develop mathematics literacy and mathematics problem-solving skills (Boscolo & Mason; Kersaint et al., 2008). Helping ELs engage in discussions on mathematical texts will help them to develop, become familiar with, and recognize mathematical content knowledge. In addition, academic literacy can be developed through enhancing reading comprehension via the following: providing explicit instruction in context; offering instruction to assist learners with reading and writing in mathematics; designing mathematics assignments that require learners to read and write; providing more time for ELs to apply mathematical language as they process and/or develop mathematics knowledge; and facilitating the development of reasoning and critical thinking skills (Kersaint et al., 2008; Meltzer & Hamann, 2005).

Worked example comparison in the ESOL context

Research on mathematics content for ELs shows that an effective second language acquisition environment includes the following two general characteristics: 1) providing sufficient and various opportunities for reading, listening, speaking and writing, and 2) supporting learners to take risks, negotiate, and construct meaning, and seek content knowledge reinterpretation (Garcia & Gonzalez, 1995; Moschkovich, 2012). Research also shows that math instruction in an ESOL context should treat language as a resource (Moschkovich, 2000) and address much more than vocabulary as ELs learn English (Moschkovich, 1999, 2002). Therefore, to best support ELs’ development of written and oral communication skills, instruction should provide opportunities for students to apply mathematical language and negotiate meaning.

WEPs align well with research recommendations for ELs. Worked examples present solution methods step-by-step, which enables ELs to describe the problem-solving procedures and compare and contrast the two methods presented even if they do not know how to solve the problems. In the previous WEPs studies (Rittle-Johnson & Star, 2007, 2009; Rittle-Johnson et al., 2009, 2012; Rittle-Johnson, Star, & Durkin, 2017; Star et al., 2015a, 2015b), the researchers provided the detailed descriptions of problem-solving procedures that aligned with the solution steps. Such design not only provides learners opportunity to engage in mathematical reading but also facilitates the understanding of solution steps. Students are asked to describe each method, compare them, and then draw conclusions based on the comparison. In conclusion, WEPs have the potential to help with creating an effective, acquisition-rich second language learning environment because they provide various opportunities for speaking, listening, writing, and reading. Such an environment allows for meaning construction and knowledge reinterpretations (Garcia & Gonzalez, 1995).

One consideration is that ELs are often confused about what the questions ask and how to organize their responses in sentences (Sonoma County Office of Education, 2006), and the previous WEPs model has not implemented structured language practice to provide scaffolding for ELs to communicate using disciplinary language. Donnelly and Roe (2010) suggested using sentence frames (e.g., The similarity between the two methods is ___________.) to help ELs organize their responses. In general, sentence frames help support students’ explanations, scaffold class discussions, and develop students’ disciplinary language by providing grammatical and syntactic structures for mathematical writing and discourse (Buffington et al., 2017). Sentence frames enable ELs to focus more on the academic content, because they do not need to work out how to form answers on their own. A sentence frame can also clarify what a question is asking.

Additionally, research has shown that students’ home languages positively affect their math and science learning, regardless of whether or not the teacher speaks these languages. When students are allowed to use their native language in the classroom, both their subject content performance and their English-language development improve (Kang & Pham, 1995). Lems (2017) also argued that “educators need to consider children’s home languages and dialects as assets” (p. 19). Crawford (1995) revealed that students can transfer skills in content areas that they learned in their first language to the second language. Previous models of WEPs have not directly provided opportunities for ELs to explore new concepts through their native language. However, it is easy to incorporate this strategy into WEPs, for example by providing opportunities to look up unfamiliar terms in their native language, having students write down mathematical reasoning or problem-solving procedures in their native language, and allowing students to discuss mathematical problems in their native language in small groups.

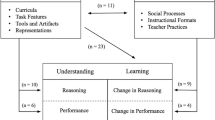

To summarize, research has demonstrated that comparison is a best practice in mathematics education (Rittle-Johnson et al., 2017). However, research is lacking on the potential for worked example comparisons to improve learning in ESOL context classrooms. The current study seeks to fill this gap. As a result, the current study aims at examining how the use of comparing worked examples affects English learners’ mathematical learning outcomes (Table 1).

Research questions

In the current study, we asked the following two research questions: (1) To what extent does the use of MLS-WEPs impact ELs’ mathematical knowledge? Specifically, we explored the effects of using MLS-WEPs on ELs’ calculation and mathematical explanation scores. Additionally, we examined how ELs’ prior knowledge affected their mathematical performance. (2) Is the effectiveness of the MLS-WEPs intervention related to ELs’ English language proficiency?

Methodology

Participants and settings

Data for this study were collected in two schools from a large K-12 urban school district. This study received research ethics committee approval. Before the study began, we sent out a recruitment letter to all the math teachers in the district who teach sheltered algebra classes (i.e., an approach to teaching ELs that incorporates English language development and mathematics content instruction). As stated in the district’s EL Rostering Policy and Guidance, students with English language proficiency levels 1.0–1.9 should ideally be together in their own Sheltered math courses (i.e., classes composed of only ELs to help transition them to general education courses), and students at levels 2.0 to 3.5 should be together in their own Sheltered or ESL-friendly math courses (i.e., classes composed of up to 50% ELs alongside students whose first language is English). Two sheltered math teachers agreed to participate in the current study. There were 49 participants from Teacher 1’s three sheltered algebra classes, and 31 participants from Teacher 2’s four sheltered algebra classes. Two of the participants from Teacher 1’s classes missed more than 50% of the instructional time when the intervention or control materials were taught, so their data were excluded from the analysis. In the final sample, there were 47 students from Teacher 1’s three sheltered algebra classes and 31 students from Teacher 2’s four sheltered algebra classes. All classes were mixed English language proficiency classes (see Table 1 for details) and mixed grade level classes for grades 9 through 11 (see Table 2 for details). However, students in all three grades were at similar levels of math skills. The percentage of students who speak each native language and a comparison with school districts are shown in Table 3.
See Appendix E for the description of each proficiency level (Level 1 to Level 5), which is a measurement of the extent to which a person has mastered the English language (ranging from 1 to 5). Appendix E briefly describes what students can do under each proficiency level. Teachers agreed with students’ self-ratings most of the time; thus, only slight adjustments were made. When disagreements arose, we adjusted the data by taking the average of the teacher-reported and student-reported scores. Both teachers were very experienced. See Table 4 for teacher demographics. The study was conducted during COVID-19; therefore, all lectures in this study were delivered in a virtual environment.

Materials

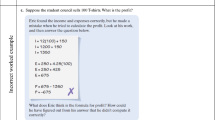

We adapted the Worked Example Pair (WEP) curriculum from Durkin and colleagues (Durkin et al., 2023) for use with ELs. The intervention materials contain four types of worked example comparisons: (1) Which is better? (2) Why does it work? (3) Which is correct? (4) How do they differ? (Newton & Star, 2013; Star et al., 2015a, 2015b). First, Which is better? WEPs (see Appendix A) demonstrate two correct but different approaches to solving a problem, where one method is more efficient or easier than the other under certain circumstances. Similarly, Why does it work? WEPs (see Appendix B) illustrate two different correct methods of solving the same problem but with one of the two methods illuminating the conceptual rationale. Next, Which is correct? WEPs (see Appendix C) present a problem solved in two different ways, where one method illustrates a common error. Finally, How do they differ? WEPs (see Appendix D) show two different problems solved in similar ways, which illuminates a mathematical concept or feature.

Given the dearth of research on the potential for using WEPs in an ESOL context, we conducted a pilot study to obtain ELs’ input on their experience of using WEPs in one-on-one after-school tutorials, and we subsequently modified the previous WEPs model to MLS-WEPs. As a result, two adjustments were made to the implementation and three modifications were made to the materials in order to engage ELs in mathematical learning while they are still developing proficiency in English. The first adjustment: the MLS-WEPs provide learners opportunities to engage in discussion or writing using native languages and present the final product in English to the whole group. The second adjustment: ELs have freedom to choose their preferred language to describe their problem-solving procedures and answer the prompt questions. The first modification: to improve ELs’ mathematical writing skills, we implemented sentence frames. The goal was for sentence frames to help ELs express themselves clearly and confidently. The second modification: we rephrased the prompt questions to adjust their language complexity. The final modification: we asked students to provide specific mathematical examples before answering the related conceptual understanding questions. For example, before asking ELs to describe the definition of like terms, we first asked students to provide mathematical examples of like terms (e.g., 3x and 5x).

Design

The intervention materials were supplements to the existing curriculum. Therefore, they were implemented after the corresponding topics were introduced. Teachers were asked to select at least two units from the intervention bank, which comprises: Unit 1 Linear Equations, Unit 2 Functions, Unit 3 System Equations, Unit 4 Polynomials, and Unit 5 Radicals. The participating teachers decided to implement the Linear Equation and Function units. To ensure credibility and reliability, both the control and treatment groups received the intervention materials (Elliott & Brown, 2002). After discussion, Teacher 1 implemented the intervention material of the Linear Equation unit in her classes, while Teacher 2 taught the same unit without using the MLS-WEPs. As they moved to the Function unit, Teacher 2 implemented the intervention material in her classes, while Teacher 1 taught the same unit without the use of the MLS-WEPs. At the beginning of each topic, both groups were given a researcher-designed math content knowledge pretest. The treatment group was then taught using the MLS-WEPs curriculum, and the control group was taught the same topics using business-as-usual instruction (i.e., the teacher demonstrated the step-by-step problem-solving procedures) and was provided with the same language support as the treatment group. The examples used in the control condition were the same as those in the MLS-WEPs curriculum. At the end of the intervention, both groups were given a content-based posttest, which was the same as the pretest.

Teachers were asked to submit the lesson videos. However, only the assessments were coded and analyzed in this study. The purpose of the lesson videos was to verify that teachers were implementing the same language supports as intended and that teachers who used intervention materials in Unit 1 did not continue to use the comparison method when teaching Unit 2.

Data

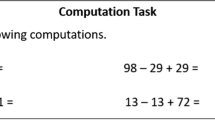

To measure student learning and effectiveness of the intervention materials, we developed unit assessments that were consistent with the selected topics. Thus, the teaching and assessments were aligned. Specifically, the Unit 1 assessment focused only on linear equations, and the Unit 2 assessment focused on functions. For each unit, the pretest and posttest were the same. Each item in the assessments contained two parts: (1) calculation and (2) explanation of how to get the answer (e.g., “show your work”, “explain your reasoning”). The Unit 1 assessment contained seven items. Of these, one involved solving one-step equations, one involved simplifying expressions, and five involved solving multi-step equations. All were designed to assess procedural knowledge. Procedural knowledge is the ability to perform a series of actions to solve a problem, including transferring a known procedure to a new problem (Rittle-Johnson & Star, 2007). The Unit 2 assessment contained eight items. Of these, two examined conceptual understanding of functions (e.g., students needed to determine and explain whether a given relation is a function or not). Conceptual knowledge is the ability to explain a certain understanding of concepts in a field and the interconnections of the concepts or ideas in that field (Star et al., 2015a, 2015b). The other six items were designed to assess procedural knowledge of functions: one involved evaluating a function, two involved finding the y-intercept of an equation when given two points, and three were about graphs of linear functions.

The unit assessments were designed to tap two components of mathematics performance: calculation skill and verbal explanation skill. The essential question in Unit 1 is how to solve linear equations, so students were introduced to the problem-solving procedures in the MLS-WEPs and in the control examples. In Unit 2, students needed to determine whether a given representation (e.g., ordered pairs, tables, graphs, and equations) is a linear function, and students needed to use their conceptual understanding to explain how they made their judgment. Students also learned how to evaluate and graph functions in the intervention and control materials. Consistent with the intervention and control materials, the Unit 1 assessment required students to describe problem-solving procedures after solving different types of linear equations. Similarly, on the Unit 2 assessment, students were asked to demonstrate their understanding of the concepts by explaining how they knew whether each representation was a function or not, and they were asked to show their procedural understanding by describing how they evaluated and graphed the function.

At the beginning of the intervention, the participating teachers reviewed the students’ assessment questions, and both teachers found the questions to be clear, understandable, and solvable. The internal consistency was satisfactory for the Unit 1 overall assessment (\(\alpha = 0.80\)) and sub-components (\(\alpha = 0.84\) and \(\alpha = 0.78\) for the explanation and calculation parts, respectively). The internal consistency was acceptable for the Unit 2 overall assessment (\(\alpha = 0.70\)) and sub-components (\(\alpha = 0.65\) and \(\alpha = 0.74\) for the explanation and calculation parts, respectively). See Fig. 1 for the amount of time between each assessment.
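For readers who want to see how an internal consistency coefficient of this kind can be computed, the following is a minimal sketch. It assumes that the reported \(\alpha\) is Cronbach’s alpha, which the text does not state explicitly. The function name `cronbach_alpha` and the score matrix are hypothetical and are not the study’s data.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a list of per-student rows, one score per item.

    Assumption: this is the internal-consistency statistic meant by alpha
    in the text; the study does not spell out the formula it used.
    """
    n_items = len(scores[0])
    # Sample variance of each item across students.
    item_variances = [
        variance([row[j] for row in scores]) for j in range(n_items)
    ]
    # Sample variance of the students' total scores.
    total_variance = variance([sum(row) for row in scores])
    return (n_items / (n_items - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical scores: 5 students x 3 explanation items (0, 0.5, or 1 point).
hypothetical_scores = [
    [1, 1, 0.5],
    [0, 0.5, 0],
    [1, 1, 1],
    [0, 0, 0.5],
    [1, 0.5, 1],
]
print(round(cronbach_alpha(hypothetical_scores), 2))
```

Values closer to 1 indicate that the items rank students more consistently.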

Implementation

Prior to the start of the study, we met with the teachers individually for three hours to discuss the project and how to implement MLS-WEPs. During the one-on-one meetings, participating teachers were taught how to teach using MLS-WEPs materials as if they were the students. Participating teachers were informed that this was one of the ways to incorporate MLS-WEPs, and they had flexibility to make adjustments according to their teaching styles and the learning abilities and styles of their students. The participating teachers were not given specific instructions on when to implement the MLS-WEPs. Therefore, the MLS-WEPs were implemented at different times (e.g., as an opening activity, as an example, and as a closing activity). Neither teacher was comfortable with having students work in small groups in a virtual environment. Therefore, students did not work in pairs or small groups. In addition to the three-hour meeting, we also communicated with both teachers at least once a week. The participating teachers were blinded to the hypotheses of the study to avoid potential bias.

Coding and analyses

Both the calculation and explanation parts on the pretest and posttest were scored for answer accuracy. Students received one point for correctly solving each calculation part. Each explanation item was scored on a 1-point scale (1 point for a fully correct explanation, 0.5 point for a partially correct explanation, 0 points for an incorrect explanation). After further examination, we found that among incorrect responses some were of higher quality than others (e.g., concept-relevant but incorrect responses were of higher quality than concept-irrelevant responses). Thus, we decided to explore the explanation items further. Through several rounds of coding, we found that students’ responses fell into six categories: fully correct explanation, partially correct explanation, concept relevant but incorrect, concept irrelevant explanation, uninterpretable explanation, and blank. A second coder independently coded one-third of the data, and inter-rater reliability exceeded 85%. After a round of individual coding, the coders worked together to discuss and resolve their discrepancies. Examples of coding will be presented in the Results section.
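The scoring scheme described above can be sketched in a few lines. This is an illustration only: it assumes that the four non-correct categories all receive 0 points and that inter-rater reliability was computed as simple percent agreement, neither of which the text states explicitly. The names `EXPLANATION_POINTS`, `explanation_score`, and `percent_agreement` are hypothetical.

```python
# Points per explanation category. Assumption: every category other than
# fully or partially correct scores 0, matching the 1 / 0.5 / 0 scale.
EXPLANATION_POINTS = {
    "fully correct": 1.0,
    "partially correct": 0.5,
    "concept relevant but incorrect": 0.0,
    "concept irrelevant": 0.0,
    "uninterpretable": 0.0,
    "blank": 0.0,
}


def explanation_score(codes):
    """Sum the explanation points for one student's coded responses."""
    return sum(EXPLANATION_POINTS[code] for code in codes)


def percent_agreement(coder_a, coder_b):
    """Percentage of responses that two coders placed in the same category."""
    if len(coder_a) != len(coder_b):
        raise ValueError("Both coders must code the same responses.")
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return 100.0 * matches / len(coder_a)


# Hypothetical codes for one student's three explanation items.
student_codes = ["fully correct", "partially correct", "blank"]
print(explanation_score(student_codes))
```

Keeping the six categories separate from the point values makes it possible to analyze response quality, as the text describes, without changing the scores.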

To determine the correlations between ELs’ mathematical performance (i.e., calculation score and explanation score) and their English language proficiency, we computed Pearson’s r (Pearson’s correlation coefficient). To examine whether the MLS-WEPs intervention effects were related to ELs’ English language proficiency and to what extent the use of MLS-WEPs impacted ELs’ mathematical performance, multiple linear regressions were conducted, controlling for prior knowledge. In the regression analysis, we used students’ overall scores from the pre- and post-assessments.
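For readers less familiar with Pearson’s r, the coefficient is the covariance of two variables divided by the product of their standard deviations. The sketch below computes it from paired scores using only the standard library; the function name and any data passed to it are illustrative and are not taken from the study.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient for two equal-length score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two lists of equal length with at least 2 values")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sums of cross-products and squared deviations from the means.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return sxy / math.sqrt(sxx * syy)
```

Values near 1 or -1 indicate a strong linear association, and values near 0 indicate little or none.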

Results

Knowledge at the beginning of the unit 1 intervention

At the beginning of the intervention (i.e., during Unit 1 pretest), there was no significant difference between treatment and control groups for the calculation part, t (76) = -0.879, p = 0.382, Cohen’s d = 0.203, power = 0.407, although the control group (Mcalculation = 5.45, SDcalculation = 1.93) attained slightly higher scores than those of the treatment group (Mcalculation = 5.06, SDcalculation = 1.89). Yet for the explanation part, compared to students in the treatment group (Mexplanation = 1.21, SDexplanation = 1.89), students in the control group (Mexplanation = 2.47, SDexplanation = 2.49) demonstrated significantly higher scores, t (52.2) = -2.392, p = 0.020, Cohen’s d = 0.585, power = 0.999. In other words, the control group students performed better than those in the treatment group on both the calculation part (not significant) and the explanation part (significant). Thus, it was necessary to control for this initial difference in the subsequent analyses of performance at the posttest. Should a significant difference emerge favoring the treatment group, such a finding would imply that the treatment was able to not only overcome this initial difference, but also improve performance thereafter.
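To illustrate how an effect size of this kind relates to the reported group means and standard deviations, the sketch below computes Cohen’s d with a pooled standard deviation. It assumes equal group sizes because the per-group sample sizes are not given in this passage, so it only approximates the reported values.

```python
import math


def cohens_d_equal_n(mean_1, sd_1, mean_2, sd_2):
    """Cohen's d with a pooled SD, assuming equally sized groups (assumption)."""
    pooled_sd = math.sqrt((sd_1 ** 2 + sd_2 ** 2) / 2.0)
    return (mean_1 - mean_2) / pooled_sd


# Unit 1 pretest, calculation part: control vs. treatment, as reported above.
d_calculation = cohens_d_equal_n(5.45, 1.93, 5.06, 1.89)
```

Under the equal-size assumption, `d_calculation` comes out at roughly 0.20, in line with the reported value of 0.203.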

Knowledge gains from unit 1 pretest to posttest

In the Unit 1 posttest, the control group’s superior performance vanished. There was no significant effect of condition in either the calculation part, t (48.9) = 1.552, p = 0.127, Cohen’s d = 0.386, power = 0.906, or the explanation part, t (76) = 1.348, p = 0.182, Cohen’s d = 0.312, power = 0.754, of the Unit 1 posttest. It may be argued that the control group had a higher starting point, so it had less room to grow. In fact, the treatment group (Mcalculation = 6.03, SDcalculation = 1.49; Mexplanation = 3.40, SDexplanation = 2.74) outperformed the control group (Mcalculation = 5.61, SDcalculation = 2.14; Mexplanation = 2.48, SDexplanation = 2.71) in the Unit 1 posttest.

Next, we conducted statistical analyses to explore what affected ELs’ mathematical knowledge (i.e., calculation and explanation). Given the nested nature of the data, where students (level-1) were nested within classrooms (level-2), we considered a hierarchical linear model (HLM) to analyze the data. The unrestricted (null) model was performed to examine whether the variability in the outcome variable (mathematics performance), by level-2 group, was significantly different from zero. However, the results (\(\chi_{U1Post\_PS}^{2}=0.10, p=.75; \chi_{U1Post\_R}^{2}=0, p=1; \chi_{U2Post\_PS}^{2}=0, p=1; \chi_{U2Post\_R}^{2}=0.93, p=.33\)) confirmed that the differences at the group level on the outcome variables were not significant, and the intraclass correlation was 0.06, indicating that there were no nested effects to consider in this study and confirming that HLM was not necessary. Therefore, we proceeded with the multiple regression analysis to see whether English language proficiency, prior knowledge, and whether students received the intervention predicted their mathematical calculation and reasoning skills. Assumption checks were performed to determine the appropriateness of the multiple regression. The collinearity statistics indicated that multicollinearity was not a concern (Unit 1 Calculation: Proficiency, VIF = 1.33, Tolerance = 0.750; Prior Knowledge, VIF = 1.34, Tolerance = 0.748; Intervention, VIF = 1.03, Tolerance = 0.968. Unit 1 Explanation: Proficiency, VIF = 1.62, Tolerance = 0.617; Prior Knowledge, VIF = 1.75, Tolerance = 0.573; Intervention, VIF = 1.19, Tolerance = 0.839). The data also met the assumption of independent errors (Unit 1 Calculation: Durbin-Watson value = 1.62; Unit 1 Explanation: Durbin-Watson value = 2.04). According to the Central Limit Theorem, the sampling distribution will approximate a normal distribution for large sample sizes (usually for n ≥ 30), regardless of the shape of the population (Starnes & Tabor, 2019).
Therefore, based on the assumption check results and Central Limit Theorem (CLT), we decided to use the multiple regression.
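Two of the diagnostics reported above have simple definitions that can be sketched directly. Tolerance is the reciprocal of the variance inflation factor (VIF), and the Durbin-Watson statistic is the sum of squared differences between successive residuals divided by the sum of squared residuals. The helper names below are hypothetical and the code is an illustration, not the software used in the study.

```python
def tolerance_from_vif(vif):
    """Tolerance is defined as 1 / VIF."""
    if vif <= 0:
        raise ValueError("VIF must be positive")
    return 1.0 / vif


def durbin_watson(residuals):
    """Durbin-Watson statistic for an ordered sequence of regression residuals."""
    if len(residuals) < 2:
        raise ValueError("need at least two residuals")
    denominator = sum(e * e for e in residuals)
    if denominator == 0:
        raise ValueError("residuals are all zero")
    numerator = sum(
        (residuals[i] - residuals[i - 1]) ** 2 for i in range(1, len(residuals))
    )
    return numerator / denominator
```

The statistic ranges from 0 to 4, and values near 2 suggest little first-order autocorrelation in the residuals. Small gaps between `tolerance_from_vif` and the reported tolerances (for example, for VIF = 1.03) reflect rounding of the published VIF values.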

We first estimated the impact of the intervention on ELs’ Unit 1 algebraic knowledge (calculation (Eq. 1) and explanation (Eq. 2) skills):
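The equations cited as Eq. 1 and Eq. 2 do not appear in this version of the text. Based on the three predictors named above (English language proficiency, prior knowledge, and intervention condition), a plausible form of the two models is sketched below. The variable names and subscripts are assumptions made for illustration and are not the authors' notation.

```latex
\begin{align}
\text{Calculation}^{U1}_{post,i} &= \beta_{0} + \beta_{1}\,\text{Proficiency}_{i}
  + \beta_{2}\,\text{PriorKnowledge}_{i} + \beta_{3}\,\text{Intervention}_{i} + \varepsilon_{i} \\
\text{Explanation}^{U1}_{post,i} &= \gamma_{0} + \gamma_{1}\,\text{Proficiency}_{i}
  + \gamma_{2}\,\text{PriorKnowledge}_{i} + \gamma_{3}\,\text{Intervention}_{i} + \epsilon_{i}
\end{align}
```

Here \(i\) indexes students, \(\text{Intervention}_{i}\) is taken to be 1 for the treatment condition and 0 for the control condition, and \(\varepsilon_{i}\) and \(\epsilon_{i}\) are error terms.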

The results showed that the predictors explained a large amount of the variance in the Unit 1 calculation (F(3, 74) = 24.1, \(p<.001\), \(R^{2}=.49\), \(R_{adjusted}^{2}=.47\), Cohen’s \(f^{2}=0.96\), power = 1) and explanation (F(3, 74) = 50.9, \(p<.001\), \(R^{2}=.67\), \(R_{adjusted}^{2}=.66\), Cohen’s \(f^{2}=2.03\), power = 1) scores.
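The effect sizes reported here follow from the standard relationship between Cohen’s \(f^{2}\) and \(R^{2}\) for a regression model, \(f^{2} = R^{2} / (1 - R^{2})\). A short sketch, with a hypothetical function name, shows the calculation:

```python
def cohens_f2(r_squared):
    """Cohen's f^2 for a regression model, computed as R^2 / (1 - R^2)."""
    if not 0.0 <= r_squared < 1.0:
        raise ValueError("R^2 must be in the interval [0, 1)")
    return r_squared / (1.0 - r_squared)


# Unit 1 models, using the R^2 values reported above.
f2_calculation = cohens_f2(0.49)
f2_explanation = cohens_f2(0.67)
```

Rounded to two decimals, these reproduce the reported values of 0.96 and 2.03. By Cohen’s conventional benchmarks, an \(f^{2}\) of 0.35 or more is considered a large effect.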

The analysis showed that, holding all else constant, for each standard deviation increase in ELs’ prior knowledge, their calculation scores increased by 0.601 (t(77) = 6.28, p < 0.001) standard deviations and their explanation score increased by 0.735 (t(77) = 8.38, p < 0.001) standard deviations. In addition, ELs who received the intervention scored 0.481 (t(77) = 2.82, p = 0.01) standard deviations higher on the calculation component and 0.702 (t(77) = 4.77, p < 0.001) standard deviations higher on the explanation component than those in the control condition. Moreover, the analysis showed that ELs’ English language proficiency did not have a significant effect on ELs’ Unit 1 calculation (\(\beta =.140, t\left(77\right)=1.47, p=.15\)) or explanation (\(\beta =.149, t\left(77\right)=1.77, p=.08)\) scores.

Knowledge at the beginning of the unit 2 intervention

When Unit 2 was introduced at a later date, the wait-listed participants (Teacher 2 and her students), who were in the Unit 1 control condition, received the Unit 2 intervention. This time, Teacher 1 and her students were assigned as the control group. At the beginning of the Unit 2 intervention (i.e., Unit 2 pretest), there was no significant effect by condition in the calculation part, t (76) = 1.88, p = 0.064, Cohen’s d = 0.434, power = 0.447. However, compared to students in the treatment group (M = 0.58, SD = 0.88), the students in the control group scored significantly higher in the explanation part (M = 1.11, SD = 1.43) of the Unit 2 pretest, t (75.7) = 2.01, p = 0.048, Cohen’s d = 0.424, power = 0.430. Note that the control group was the treatment group for Unit 1 and thus received the Unit 1 intervention materials. Therefore, this finding may be due to the transfer of explanatory skills learned from the Unit 1 intervention materials to different mathematical concepts.

Knowledge gains from unit 2 pretest to posttest

Similar to Unit 1, we began by estimating the effects of English language proficiency, prior knowledge, and whether ELs received the intervention on their Unit 2 mathematical performance (calculation (Eq. 3) and explanation (Eq. 4) skills). Assumption checks were performed to determine the appropriateness of the multiple regression. The collinearity statistics indicated that multicollinearity was not a concern (Unit 2 Calculation: Proficiency, VIF = 1.10, Tolerance = 0.912; Prior Knowledge, VIF = 1.14, Tolerance = 0.877; Intervention, VIF = 1.05, Tolerance = 0.955. Unit 2 Explanation: Proficiency, VIF = 1.28, Tolerance = 0.783; Prior Knowledge, VIF = 1.32, Tolerance = 0.755; Intervention, VIF = 1.04, Tolerance = 0.957). The data also met the assumption of independent errors (Unit 2 Calculation: Durbin-Watson value = 1.97; Unit 2 Explanation: Durbin-Watson value = 2.09). Based on the assumption check results and CLT, we decided to use the multiple regression.

The results showed that the predictors explained a large amount of the variance in the Unit 2 calculation (F(3, 74) = 24.4, \(p<.001\), \(R^{2}=.50\), \(R_{adjusted}^{2}=.48\), Cohen’s \(f^{2}=1\), power = 1) and explanation (F(3, 74) = 35.8, \(p<.001\), \(R^{2}=.59\), \(R_{adjusted}^{2}=.58\), Cohen’s \(f^{2}=1.44\), power = 1) scores.

The analysis revealed that for every standard deviation increase in ELs’ prior knowledge, their calculation scores increased by 0.549 (t(77) = 6.24, p < 0.001) standard deviations and their explanation scores increased by 0.384 (t(77) = 4.5, p < 0.001) standard deviations. In addition, ELs who received the intervention scored 0.498 (t(77) = 2.91, p = 0.01) standard deviations higher on the calculation component and 0.730 (t(77) = 4.74, p < 0.001) standard deviations higher in the explanation component than those in the control condition. Unlike Unit 1, the analysis showed that ELs’ English language proficiency had a significant effect on ELs’ calculation and explanation scores in Unit 2. Specifically, holding all else constant, a one standard deviation increase in English language proficiency level resulted in a 0.319 (t(77) = 3.70, p < 0.001) standard deviation increase in Unit 2 calculation scores and a 0.478 (t(77) = 5.70, p < 0.001) standard deviation increase in explanation scores.

Quality of explanation

To examine the quality of written explanations, we coded the explanation part of the assessments. The coding scheme focused on six general types of responses: fully correct explanations, partially correct explanations, conceptually relevant but incorrect explanations, conceptually irrelevant explanations, uninterpretable explanations, and blank. Examples of each code and the results of the analysis are presented in Tables 5 and 6. The results of this analysis showed that the number of fully correct explanations increased in the post-assessments in both control and intervention conditions. However, the intervention condition had a greater gain in terms of fully correct explanations. In addition, at the beginning of the intervention, many students did not know how to do mathematical reasoning in writing. A representative explanation at the beginning of the intervention was, “I used my pen and pencil calculate.” The intervention materials appeared to help ELs understand how to reason in writing better than the control materials did. The number of concept-irrelevant explanations decreased in the Unit 1 intervention condition, and this advantage was retained until the end of the Unit 2 intervention, even though these students did not receive the intervention materials in Unit 2. Yet, for those who received control material in Unit 1, the number of concept-irrelevant explanations remained almost the same. Uninterpretable explanations are the responses that the coder could not understand. This issue may be caused by a lack of mathematical concept knowledge and mathematical language, or by limited English language proficiency. In both Unit 1 and Unit 2, there was an unexpected increase in the uninterpretable explanation category in the treatment group and a slight decrease in the control group. Furthermore, the number of blank explanations decreased substantially in the treatment group, while there was almost no change in the control group.
Overall, the intervention materials improved the quality of English learners’ written explanations.

Is the effectiveness of the MLS-WEPs intervention related to ELs’ English language proficiency?

The correlation matrix (Table 7) showed that ELs’ mathematical performance was positively correlated with their English language proficiency, and this association was stronger in the explanation part than in the calculation part. These findings are consistent with the existing literature (Clarkson & Galbraith, 1992; Ellerton & Clarkson, 1996; Jarrett, 1999). In addition, the scores on each part of the posttest were highly correlated with those on the corresponding part of the pretest, implying that ELs’ performance in mathematics was highly dependent on prior knowledge. This is consistent with our multiple regression analysis. To investigate the effect of English language proficiency on the MLS-WEPs intervention, we conducted regression analyses for the Unit 1 and 2 training groups respectively, controlling for prior knowledge. Assumption checks were performed to determine the appropriateness of the multiple regression. The collinearity statistics indicated that multicollinearity was not a concern (Unit 1: Proficiency, VIF = 1.09, Tolerance = 0.919; Prior Knowledge-Calculation, VIF = 1.14, Tolerance = 0.874; Prior Knowledge-Explanation, VIF = 1.27, Tolerance = 0.786. Unit 2: Proficiency, VIF = 1.10, Tolerance = 0.906; Prior Knowledge-Calculation, VIF = 1.21, Tolerance = 0.827; Prior Knowledge-Explanation, VIF = 1.32, Tolerance = 0.760). The data met the assumption of independent errors (Unit 1 Calculation: Durbin-Watson value = 1.36; Unit 1 Explanation: Durbin-Watson value = 2.15; Unit 2 Calculation: Durbin-Watson value = 1.46; Unit 2 Explanation: Durbin-Watson value = 1.19). Shapiro–Wilk tests were performed to check normality and did not show evidence of non-normality except for Unit 1 calculation (Unit 1 Calculation: W = 0.826, p < 0.001; Unit 1 Explanation: W = 0.957, p = 0.08; Unit 2 Calculation: W = 0.972, p = 0.575; Unit 2 Explanation: W = 0.973, p = 0.600). Based on the assumption check results and CLT, we decided to use the multiple regression.
The results (see Table 7) showed that, holding prior knowledge constant, ELs in the Unit 1 treatment group did not differ significantly in their math outcomes by their English proficiency in the calculation (Cohen’s \(f^{2}=0.41\), power = 0.81) and explanation (Cohen’s \(f^{2}=1.04\), power = 1) parts. Similarly, the analysis (see Table 8) showed that ELs’ calculation scores in the Unit 2 treatment group also did not vary significantly by their English language proficiency (Cohen’s \(f^{2}=1.01\), power = 0.95). Unlike the previous three results, ELs in the Unit 2 treatment group with proficiency level 5 scored significantly higher on the explanation part than those with proficiency levels 1 and 3 (Cohen’s \(f^{2}=1.58\), power = 1). Overall, these results suggest that the effectiveness of the MLS-WEPs intervention generally did not vary by English language proficiency after controlling for prior knowledge, implying that ELs of all language proficiency levels benefited from the MLS-WEPs intervention.

Discussion and conclusion

The purpose of this study was to investigate how Modified for Language Support-Worked Example Pairs (MLS-WEPs), adapted based on best practices described in the research on learning for ELs, could be used to help English learners learn mathematics. The results indicated that worked example comparison not only enhanced ELs’ ability to solve mathematical problems, but also improved their written explanation skills and enabled them to transfer such skills to different mathematical concepts. More importantly, the data suggested that, when controlling for ELs’ prior knowledge, the effectiveness of the MLS-WEPs intervention generally did not vary by their English language proficiency. This information is important given that all other comparable studies have been conducted in mainstream, native English-speaking classrooms. In this section, we present some of the implications of this study for practice and opportunities for future research.

Comparing worked examples appeared to support ELs’ progress in mathematical problem-solving skills, which is consistent with prior literature demonstrating that worked example comparison enhances students’ procedural knowledge (Pashler et al., 2007; Rittle-Johnson & Star, 2007, 2009; Rittle-Johnson et al., 2009) but extends those findings to ELs. Based on the results, the comparison of worked examples with detailed descriptions of the problem-solving procedures led to a significant improvement in the quality of the explanations. This design treats language as a resource that provides ample and varied opportunities to read, write, listen, and speak in mathematics, which best supports the development of English learners’ written and oral communication skills (Moschkovich, 2000). In addition, worked examples provide ELs with solutions to problems, which helps to reduce their cognitive load (Sweller, 2011). When students learn mathematics in a language that they are not proficient in, without appropriate instructional modifications and/or accommodations, they might experience an increased likelihood of heavier cognitive load due to having to use more working memory to decipher different parts of their non-native language. Comparing worked examples enables students to develop relational thinking and to align prior knowledge with new concepts (Richland et al., 2012). Once they have acquired sufficient knowledge, their cognitive load will be reduced, thus contributing to learning. An unexpected finding was that the number of uninterpretable explanations increased in the treatment group, while the number of such explanations decreased slightly in the control group. One explanation may be that the intervention materials motivated ELs to perform mathematical explanations even though they may not have the ability to do so. More research is needed to confirm this conjecture.

Another interesting finding was that English language proficiency did not appear to have a significant effect on mathematical knowledge (i.e., calculation and explanation scores) in Unit 1 (Solving Equations), while it had a significant effect on mathematical explanation in Unit 2 (Functions). Recall that Unit 1 assessed only procedural knowledge, whereas Unit 2 assessed both procedural and conceptual knowledge. Perhaps understanding/expressing conceptual knowledge requires a higher level of verbal ability than procedural knowledge. It is also possible that solving equations does not require as high a level of language proficiency as learning functions. More research is needed to confirm these conjectures.

More importantly, this study showed that ELs who received the Unit 1 intervention materials showed significantly higher scores on the explanation part of the Unit 2 pretest compared to students who did not previously receive the intervention materials. Unit 1 and Unit 2 covered two completely different mathematical concepts. It appears that once ELs knew how to perform written explanations, this skill was maintained. The findings suggest that the written explanation skills were transferable across mathematical concepts. However, why and how these skills can be transferred to different concepts remain unexplained. Indeed, it also remains unknown whether such skills are transferable to any mathematical concept. Therefore, more research is needed on written explanation in the mathematical domain. Importantly, the results revealed that although ELs’ mathematical performance was positively correlated with their English language proficiency (see Table 7), the effectiveness of the MLS-WEPs intervention for performing calculations and explaining problem-solving procedures generally did not vary by ELs’ English language proficiency after controlling for prior knowledge (see Tables 8 and 9), implying that students of all language proficiency levels benefited from the MLS-WEPs intervention. In other words, the MLS-WEPs intervention enabled ELs to learn mathematical concepts and describe mathematical procedures in writing while they were still developing their English language proficiency. It seems the design of the MLS-WEPs intervention can reduce the interference of language limitations and help ELs with low English proficiency benefit as much as those with high English language proficiency.
The MLS-WEPs intervention materials productively support ELs’ engagement in mathematical reading, writing, speaking, and reasoning, regardless of their proficiency in English, which allows mathematics instruction to focus on rigorous concepts and reasoning; such focus is one of the most important goals of mathematics instruction in an ESOL context (Moschkovich, 2012).

The MLS-WEPs materials benefited English learners of all English language levels perhaps because these materials not only aligned well with the principles from the literature on mathematics learning in an ESOL context, but they also provided instructional strategies for scaffolding instruction and accommodating linguistic needs. First, MLS-WEPs provided a variety of opportunities for students to explore math concepts through listening, reading, writing, and speaking. The worked examples to be compared were presented with detailed problem-solving descriptions and students were prompted to read each description before comparing them. MLS-WEPs also provided learners with various opportunities to apply mathematical language in writing and speaking. In order to guide students to focus on specific aspects of comparison, different types of discussion prompts were implemented. For example, after studying problem solving descriptions and steps, students may be prompted to discuss the similarities and differences between the two methods, identify how each method works, or determine the effectiveness of each method and so forth. Open-ended writing prompts were also included to facilitate writing skills and develop reasoning and critical thinking skills. For instance, students may be asked to answer in what case one method is better than the other. In addition, students were exposed to mathematical listening since the comparison process included multiple interactions (teacher-student, student–student, student–teacher etc.). Second, the MLS-WEPs curriculum gave ELs sufficient time to use their English productively and negotiate meaning through both writing and speaking. Moschkovich (2012) mentioned that ELs “need time and support for moving from expressing their reasoning and arguments in imperfect form” (p. 22). 
Throughout the intervention, students had multiple opportunities to revise their written responses. Such processes enable ELs to negotiate meaning, modify their language, and comprehend the text (Kersaint et al., 2008), and they help ELs develop mathematics literacy and problem-solving skills (Boscolo & Mason, 2001).

Limitations

There are several major limitations to this study that can be addressed in future research. The main limitation of these results is the small sample size of teacher and student participants. Our findings are preliminary; the results should be replicated with a larger and more diverse group of teachers and students with different teaching and learning styles. Second, we were unable to obtain official ACCESS scores (i.e., ELs’ English language proficiency level scores) for the participant students because the staff member who prepared the data was out of the office during COVID-19. Therefore, the English language proficiency levels used in this study were derived from students’ self-reports, so their accuracy may be compromised. However, we incorporated their level of comfort with English materials and the level of English classes they were taking to ensure that the self-reported English proficiency levels in this study were as accurate as possible. Moreover, the teachers reviewed the self-reported levels and provided professional input. More importantly, although official English proficiency levels were not obtained, for the purpose of this analysis, we just needed to know ELs’ relative English proficiency levels (i.e., who had a higher English proficiency level than the other). Both participating teachers have more than ten years of experience teaching math to English learners and know their students’ relative English language proficiency levels very well. Furthermore, the control and treatment materials covering the same topic were taught by different teachers (e.g., the first unit of intervention material was taught by Teacher 1, whereas its control material was taught by Teacher 2), so that this analysis included two variables: the teacher and the intervention material. Therefore, variations due to differences in teachers should also be taken into account when analyzing the impact of the intervention materials.
Future studies should enhance the design of inter-condition comparison. However, the two teachers had similar professional backgrounds. For example, both teachers were certified in secondary mathematics, had attended two QTEL trainings, and had many years of teaching experience (i.e., 30 and 19 years). More importantly, both teachers were instructed to implement interventions and control materials in the same manner. Therefore, the differences caused by the teachers were likely to be minor. Additionally, one might argue that it is possible that the control teacher in Unit 2 still used some of the intervention techniques she obtained from Unit 1. However, according to the lesson recordings, the control teacher in Unit 2 did not implement any of the intervention techniques when teaching the Unit 2 control materials. This study was conducted during COVID-19, so all learning took place in a virtual environment. We lack data on which students chose to complete the questions in other languages, as all students responded in English during the assessment, although some may have chosen to translate the questions into their native language. Therefore, further research is needed to examine the impact of different languages on mathematical explanations.

Conclusion

Although there is significant support in the literature for the use of comparison, and more specifically comparison of worked examples, as an effective learning tool in algebra, it has been unclear whether ELs could experience a similar benefit. The results of this study suggest that Modified for Language Support-Worked Example Pairs (MLS-WEPs) can help ELs learn mathematics effectively in several ways. MLS-WEPs enhanced ELs’ ability to solve mathematical problems, improved their written explanatory skills, and enabled them to transfer these skills to different mathematical concepts. More importantly, the general effectiveness of the MLS-WEPs intervention did not appear to vary by ELs’ English language proficiency. This is an exciting finding because it demonstrates that learners with low English language proficiency can also benefit from comparison in mathematics before they develop their English proficiency.

References

Abedi, J., Courtney, M., Leon, S., & Goldberg, J. (2005). Language accommodation for English language learners in large-scale assessments: Bilingual dictionaries and linguistic modification (CSE Tech. Rep. No. 666). Los Angeles: University of California, National Center for Research on Evaluation, Standards, and Student Testing.

Aiken, L. R., Jr. (1972). Language factors in learning mathematics. Review of Educational Research, 42, 359–385.

Barbieri, C. A., Booth, J. L., Begolli, K. N., & McCann, N. (2021). The effect of worked examples on student learning and error anticipation in algebra. Instructional Science, 49, 419–439. https://doi.org/10.1007/s11251-021-09545-6

Booth, J. L., Oyer, M. H., Paré-Blagoev, J., Elliot, A. J., Barbieri, C., Augustine, A., & Koedinger, K. R. (2015). Learning algebra by example in real-world classrooms. Journal of Research on Educational Effectiveness, 8(4), 530–551. https://doi.org/10.1080/19345747.2015.1055636

Boscolo, P., & Mason, L. (2001). Writing to learn, writing to transfer. In P. Tynjälä, L. Mason, & K. Lonka (Eds.), Writing as a learning tool: Integrating theory and practice (pp. 83–104). Kluwer Academic Publishers.

Brown, C. L., Cady, J. A., & Taylor, M. (2009). Problem solving and the English language learner. Mathematics Teaching in the Middle School, 14(9), 533–539.

Buffington, P., Knight, T., & Tierny-Fife, P. (2017). Supporting mathematics discourse with sentence starters and sentence frames. EDC.

Chandler, P., & Sweller, J. (1991). Cognitive load theory and the format of instruction. Cognition and Instruction, 8(4), 293–332.

Clarkson, P., & Galbraith, P. (1992). Bilingualism and mathematics learning: Another perspective. Journal for Research in Mathematics Education, 23(1), 34–44.

Cooper, C., & Sweller, J. (1987). Effects of schema acquisition and rule automation on mathematical problem-solving transfer. Journal of Educational Psychology, 79(4), 347–362.

Cossio, M. G. (1978). The effects of language on mathematics placement scores in metropolitan colleges. Dissertation Abstracts International, 38, 4002A-4003A.

Crawford, J. (1995). Bilingual education: History, politics, theory, and practice (3rd ed.). Bilingual Educational Services.

Cuevas, G. (1984). Mathematics learning in English as a second language. Journal for Research in Mathematics Education, 15(2), 134–144.

de Araujo, Z. (2017). Connections between secondary mathematics teachers’ beliefs and their selection of tasks for English language learners. Curriculum Inquiry, 47, 363–389.

Donnelly, W., & Roe, C. (2010). Using sentence frames to develop academic vocabulary for English learners. The Reading Teacher, 64(2), 131–136.

Durkin, K., Rittle-Johnson, B., Star, J. R., & Loehr, A. (2023). Comparing and discussing multiple strategies: An approach to improving algebra instruction. The Journal of Experimental Education, 91(1), 1–19.

Ellerton, N. F., & Clarkson, P. C. (1996). Language factors in mathematics teaching and learning. In A. J. Bishop, K. Clements, C. Keitel, J. Kilpatrick, & C. Laborde (Eds.), International handbook of mathematics education part 2 (pp. 987–1033). Kluwer Academic Publishers.

Elliott, S. A., & Brown, J. S. (2002). What are we doing to waiting list controls? Behaviour Research and Therapy, 40(9), 1047–1052.

Garcia, E., & Gonzalez, R. (1995). Issues in systemic reform for culturally and linguistically diverse students. Teachers College Record, 96(3), 418–431.

Gentner, D., Loewenstein, J., & Thompson, L. (2003). Learning and transfer: A general role for analogical encoding. Journal of Educational Psychology, 95(2), 393–408.

Große, C. S., & Renkl, A. (2006). Effects of multiple solution methods in mathematics learning. Learning and Instruction, 16(2), 122–138. https://doi.org/10.1016/j.learninstruc.2006.02.001

Heng, C., & Tan, H. (2003). Teaching mathematics and science in English: A perspective from University of Putra in Malaysia. ELTC ETEMS Conference (pp. 1-16) Malaysia: N/A

Jarrett, D. (1999). The inclusive classroom: Teaching mathematics and science to English-language learners. Portland: Northwest Regional Educational Laboratory.

Kang, H. & Pham, K. (1995). From 1 to Z: Integrating math and language learning. Annual Meeting of the Teachers of English to Speakers of Other Languages.

Kazima, M. (2007). Malawian students’ meanings for probability vocabulary. Educational Studies in Mathematics, 64, 169–189.

Kersaint, G., Petkova, M., & Thompson, D. R. (2008). Teaching mathematics to English language learners. Routledge.

Lems, K., Miller, L. D., & Soro, T. (2017). Building literacy with English language learners: Insights from linguistics. The Guilford Press.

Meltzer, J., & Hamann, E. T. (2005). Meeting the literacy development needs of adolescent English Language learners through content-area learning. Part Two: Focus on classroom teaching strategies. Education Alliance at Brown University.

Moschkovich, J. N. (1999). Supporting the participation of English language learners in mathematical discussions. For the Learning of Mathematics, 19(1), 11–19.

Moschkovich, J. N. (2000). Learning mathematics in two languages: Moving from obstacles to resources. Changing the faces of mathematics. Perspectives on multiculturalism and Gender Equity, 1, 85–93.

Moschkovich, J. N. (2002). A situated and sociocultural perspective on bilingual mathematics learners. Mathematical Thinking and Learning, 4(2 & 3), 189–212.

Moschkovich, J. (2012). Mathematics, the Common Core, and language: Recommendations for mathematics instruction for ELs aligned with the Common Core. Commissioned Papers on Language and Literacy Issues in the Common Core State Standards and Next Generation Science Standards, 94, 17.

Moschkovich, J. N. (2015). Academic literacy in mathematics for English learners. Journal of Mathematical Behavior, 40, 43–62.

Newton, K., & Star, J. (2013). Exploring the nature and impact of model teaching with worked examples pairs. Mathematics Teacher Educator, 2(1), 86–102.

Newton, K. J., Star, J. R., & Lynch, K. (2010). Understanding the development of flexibility in struggling algebra students. Mathematical Thinking & Learning, 12, 282–305.

Paas, F., & Ayres, P. (2014). Cognitive load theory: A broader view on the role of memory in learning and education. Educational Psychologist, 26(2), 191–195.

Paas, F., Renkl, A., & Sweller, J. (2004). Cognitive load theory: Instructional implications of the interaction between information structures and cognitive architecture. Journal of Instructional Science, 32, 1–8.

Pashler, H., Rohrer, D., Cepeda, N. J., & Carpenter, S. K. (2007). Enhancing learning and retarding forgetting: Choices and consequences. Psychonomic Bulletin & Review, 14, 187–193. https://doi.org/10.3758/BF03194050

Purpura, D., Logan, J. A. R., Hassinger-Das, B., & Napoli, A. R. (2017). Why do early mathematics skills predict later reading? The role of mathematical language. Developmental Psychology, 53(9), 1633–1642.

Richland, L. E., Stigler, J. W., & Holyoak, K. J. (2012). Teaching the conceptual structure of mathematics. Educational Psychologist, 47(3), 189–203. https://doi.org/10.1080/00461520.2012.667065

Rittle-Johnson, B., & Star, J. R. (2007). Does comparing solution methods facilitate conceptual and procedural knowledge? An experimental study on learning to solve equations. Journal of Educational Psychology, 99(3), 561–574.

Rittle-Johnson, B., & Star, J. R. (2009). Compared with what? The effects of different comparisons on conceptual knowledge and procedural flexibility for equation solving. Journal of Educational Psychology, 101, 529–544. https://doi.org/10.1037/a0014224

Rittle-Johnson, B., Star, J. R., & Durkin, K. (2009). The importance of prior knowledge when comparing examples: Influences on conceptual and procedural knowledge on equation solving. Journal of Educational Psychology, 101, 836–852. https://doi.org/10.1037/a0016026

Rittle-Johnson, B., Star, J. R., & Durkin, K. (2012). Developing procedural flexibility: Are novices prepared to learn from comparing procedures? British Journal of Educational Psychology, 82, 436–455. https://doi.org/10.1111/j.2044-8279.2011.02037.x

Rittle-Johnson, B., Star, J. R., & Durkin, K. (2017). The power of comparison in mathematics instruction: Experimental evidence from classrooms. In D. Geary, D. Berch, R. Ochsendorf, & K. Mann Koepke (Eds.), Mathematical cognition and learning. Acquisition of complex arithmetic skills and higher-order mathematics concepts (pp. 271–295). Elsevier. https://doi.org/10.1016/B978-0-12-805086-6.00012-6

Rittle-Johnson, B., Star, J. R., & Durkin, K. (2020). How can cognitive-science research help improve education? The case of comparing multiple strategies to improve mathematics learning and teaching. Current Directions in Psychological Science, 29(6), 599–609. https://doi.org/10.1177/0963721420969365

Schwartz, D. L., & Bransford, J. D. (1998). A time for telling. Cognition and Instruction, 16, 475–522. https://doi.org/10.1207/s1532690xci1604_4

Sonoma County Office of Education. (2006, January). Providing language instruction. Aiming high [Aspirando a lo mejor].

Star, J. R., Caronongan, P., Foegen, A., Furgeson, J., Keating, B., Larson, M. R., Lyskawa, J., McCallum, W. G., Porath, J., & Zbiek, R. M. (2015). Teaching strategies for improving algebra knowledge in middle and high school students (NCEE 2014-4333). Washington, DC: National Center for Education Evaluation and Regional Assistance (NCEE), Institute of Education Sciences, U.S. Department of Education. Retrieved from the NCEE website.

Star, J. R., Pollack, C., Durkin, K., Rittle-Johnson, B., Lynch, K., Newton, K., & Gogolen, C. (2015b). Learning from comparison in algebra. Contemporary Educational Psychology, 40, 41–54.

Starnes, D. S., & Tabor, J. (2019). The practice of statistics: For the AP exam. Freeman.

Sweller, J. (1988). Cognitive load during problem solving: Effects on learning. Cognitive Science, 12(2), 257–285.

Sweller, J. (1989). Cognitive technology: Some procedures for facilitating learning and problem solving in mathematics and science. Journal of Educational Psychology, 81(4), 457–466.

Sweller, J., Ayres, P., & Kalyuga, S. (2011). Cognitive load theory (Vol. 1). Springer.

Sweller, J., & Cooper, G. (1985). The use of worked examples as a substitute for problem solving in learning algebra. Cognition and Instruction, 2(1), 59–89.

The National Clearinghouse for English Language Acquisition and Language Instruction Educational Programs (2010). The Growing Number of English Learners Students: 1997/8-2007/8. Washington, DC, U.S.

WIDA. (2020). WIDA English language development standards framework, 2020 edition: Kindergarten–grade 12. Board of Regents of the University of Wisconsin System.

Woodward, J., Beckmann, S., Driscoll, M., Franke, M., Herzig, P., & Jitendra, A. (2012). Improving mathematical problem solving in grades 4 through 8: A practice guide (NCEE 2012-4055).

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A

Which is better? WEP

Appendix B

Why does it work? WEP

Appendix C

Which is correct? WEP

Appendix D

How do they differ? WEP

Appendix E

At the given level of English language proficiency, English learners will (WIDA, 2020):

| Level | Descriptors |
| --- | --- |
| 1 | Understand visual representations of content area languages; not comprehend one-step commands, directions, multiple choice, or yes/no questions, or statements with sensory, graphic, or interactive support; barely use words or phrases with phonological, syntactic, or semantic errors |
| 2 | Understand visual representations of content area languages; use words, phrases, or chunks of language when presented with one-step commands, directions, multiple choice, or yes/no questions, or statements with sensory, graphic, or interactive support; use words and/or phrases with phonological, syntactic, or semantic errors to respond when presented with basic verbal commands, direct questions, or simple statements with sensory, graphic, or interactive support |
| 3 | Understand general language associated with the content areas; use phrases or short sentences to respond; use words and/or phrases with phonological, syntactic, or semantic errors to respond when presented with basic verbal commands, direct questions, or simple statements with sensory, graphic, or interactive support |
| 4 | Understand general and some specific language of the content areas; use expanded sentences in oral communication or written paragraphs; use words and/or phrases with phonological, syntactic, or semantic errors to respond when presented with basic verbal commands, direct questions, or simple statements |
| 5 | Understand specific and some technical language of the content areas; use sentences of varying lengths and linguistic complexity in oral discourse; use oral or written language with minimal phonological, syntactic, or semantic errors |

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ke, X., Newton, K.J. English learners learn from worked example comparison in algebra. Instr Sci (2024). https://doi.org/10.1007/s11251-024-09668-6

DOI: https://doi.org/10.1007/s11251-024-09668-6