Abstract

Many model inversion problems occur in industry. These problems consist in finding the set of parameter values such that a certain quantity of interest respects a constraint, for example remains below a threshold. In general, the quantity of interest is the output of a simulator, costly in computation time. An effective way to solve this problem is to replace the simulator by a Gaussian process regression, with an experimental design enriched sequentially by a well chosen acquisition criterion. Different inversion-adapted criteria exist such as the Bichon criterion (also known as expected feasibility function) and deviation number . There also exist a class of enrichment strategies (stepwise uncertainty reduction—SUR) which select the next point by measuring the expected uncertainty reduction induced by its selection. In this paper we propose a SUR version of the Bichon criterion. An explicit formulation of the criterion is given and test comparisons show good performances on classical test functions.

Similar content being viewed by others

References

Bect, J., Ginsbourger, D., Li, L., Picheny, V., Vazquez, E.: Sequential design of computer experiments for the estimation of a probability of failure. Stat. Comput. 22(3), 773–793 (2012)

Bichon, B.J., Eldred, M.S., Swiler, L.P., Mahadevan, S., McFarland, J.M.: Efficient global reliability analysis for nonlinear implicit performance functions. AIAA J. 46(10), 2459–2468 (2008)

Chevalier, C.: Fast Uncertainty Reduction Strategies Relying on Gaussian Process Models. PhD Thesis, Universität Bern (2013)

Chevalier, C., Picheny, V., Ginsbourger, D.: Kriginv: an efficient and user-friendly implementation of batch-sequential inversion strategies based on kriging. Comput. Stat. Data Anal. 71, 1021–1034 (2014)

Dagnelie, P.: Statistique théorique et appliquée. Presses agronomiques de Gembloux Belgium (1992)

Dupuy, D., Helbert, C., Franco, J.: DiceDesign and DiceEval: two R packages for design and analysis of computer experiments. J. Stat. Softw. 65(11), 1 (2015)

Dutang, C., Savicky, P.: randtoolbox: generating and testing random numbers. R Package 67, 1 (2013)

Echard, B., Gayton, N., Lemaire, M.: AK-MCS: an active learning reliability method combining kriging and Monte Carlo simulation. Struct. Saf. 33(2), 145–154 (2011)

El Amri, M. R.: Analyse d’incertitudes et de robustesse pour les modèles à entrées et sorties fonctionnelles. Theses, Université Grenoble Alpes (2019). https://tel.archives-ouvertes.fr/tel-02433324

El Amri, M.R., Helbert, C., Lepreux, O., Zuniga, M.M., Prieur, C., Sinoquet, D.: Data-driven stochastic inversion via functional quantization. Stat. Comput. 30(3), 525–541 (2020)

Ginsbourger, D.: Sequential design of computer experiments. Wiley StatsRef 99, 1–11 (2017)

Helbert, C., Dupuy, D., Carraro, L.: Assessment of uncertainty in computer experiments from universal to Bayesian kriging. Appl. Stoch. Model. Bus. Ind. 25(2), 99–113 (2009)

Jin, R., Chen, W., Sudjianto, A.: On sequential sampling for global metamodeling in engineering design. In: International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, vol. 36223, pp. 539–548 (2002)

Jones, D.R., Schonlau, M., Welch, W.J.: Efficient global optimization of expensive black-box functions. J. Global Optim. 13(4), 455–492 (1998)

Lemieux, C.: Quasi–Monte Carlo constructions. In: Monte Carlo and Quasi-Monte Carlo sampling, Springer, pp. 1–61 (2009)

Mebane, W.R., Jr., Sekhon, J.S.: Genetic optimization using derivatives: the rgenoud package for R. J. Stat. Softw. 42(1), 1–26 (2011)

Molchanov, I. S.: Theory of Random Sets, vol. 87, Springer (2005)

Moustapha, M., Marelli, S., Sudret, B.: A generalized framework for active learning reliability: survey and benchmark. arXiv preprint arXiv:2106.01713 (2021)

O’Hagan, A.: Curve fitting and optimal design for prediction. J. R. Stat. Soc. Ser. B (Methodol.) 40(1), 1–24 (1978)

Paciorek, C. J.: Nonstationary Gaussian Processes for Regression and Spatial Modelling. Ph.D Thesis, Carnegie Mellon University (2003)

Picheny, V., Ginsbourger, D., Roustant, O., Haftka, R. T., Kim, N.-H.: Adaptive designs of experiments for accurate approximation of a target region (2010)

Picheny, V., Wagner, T., Ginsbourger, D.: A benchmark of kriging-based infill criteria for noisy optimization. Struct. Multidiscip. Optim. 48(3), 607–626 (2013)

Ranjan, P., Bingham, D., Michailidis, G.: Sequential experiment design for contour estimation from complex computer codes. Technometrics 50(4), 527–541 (2008)

Roustant, O., Ginsbourger, D., Deville, Y.: Dicekriging, Diceoptim: two R packages for the analysis of computer experiments by kriging-based metamodeling and optimization (2012)

Acknowledgements

The authors are very grateful to the reviewers for their relevant and helpful comments. The authors would like to express their gratitude to Morgane Menz from IFP Énergies Nouvelles for her valuable help on numerical aspects and in particular for the study of the robustness of estimators with respect to the GP stationarity assumption. All the computations presented in this paper were performed using the GRICAD infrastructure (https://gricad.univ-grenoble-alpes.fr), which is supported by Grenoble research communities. We are also grateful of the useful feedback from Julien Bect and the CIROQUO consortium partners. CIROQUO is an Applied Mathematics consortium, gathering partners in technological and academia in the development of advanced methods for Computer Experiments (https://doi.org/10.5281/zenodo.6581216). This work was carried out as part of the “HPC/AI/HPDA Convergence for the Energy Transition” Inria/IFPEN joint research laboratory (High-performance computation/Artificial Intelligence/High-Performance Data Analysis).

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

A Basics on Vorob’ev theory and corresponding SUR strategy

1.1 A.1 Vorob’ev expectation

In this part, the notion of expectation for random closed sets in the sense of Vorob’ev is defined from Molchanov (2005). The framework is a compact set \({\mathbb {X}}\subset {\mathbb {R}}^d\) and a random closed set \(\Gamma \) of \({\mathbb {X}}\). It is recalled that \(\Gamma :\Omega \rightarrow {\mathcal {C}}\) is a random closed set if it is a measurable function on the probability space \((\Omega , {\mathcal {F}}, {\mathbb {P}})\) with values in the set of all compacts of \({\mathbb {X}}\) in the sense that:

Let’s define the parametric family \(\big \{Q_\alpha \big \}_{\alpha \in [0,1]}\) of Vorob’ev quantiles is defined by:

The elements of \(\{Q_\alpha \}_{\alpha \in [0,1]}\) are called the Vorob’ev quantiles of the random closed set \(\Gamma \) and the function p is called the coverage function of \(\Gamma \).

To define the expectation of the random closed set \(\Gamma \), Molchanov (2005) comes back to the expectation of a real random variable: the measure of the \(\Gamma \) set \({\mathbb {P}}_{\mathbb {X}}(\Gamma )\). From the parametric family of Vorob’ev quantiles (see (25)), the expectation of \(\Gamma \) in the sense of Vorob’ev is then defined as the Vorob’ev quantile of measure equal (or the closest one higher) to the expectation of the measure of \(\Gamma \). More precisely, the Vorob’ev expectation of a random closed set \(\Gamma \) is the set \(Q_{\alpha ^\star }\), where \(\alpha ^\star \) is defined as the Vorob’ev threshold by

where \({\mathbb {P}}_{\mathbb {X}}\) denotes the Lebesgue measure on \({\mathbb {X}}\). \(\alpha ^\star \) is called the Vorob’ev threshold.

Remark 1

\(\bullet \) Based on Eq. (25)), the function \(\alpha \mapsto {\mathbb {P}}_{\mathbb {X}}(Q_\alpha )\) is decreasing on [0, 1].

\(\bullet \) The uniqueness of \(\alpha ^\star \) in the definition is easily checked. The existence of such \(\alpha ^\star \) in the definition of Vorob’ev expectation is based on the decreasing and continuity to the left of the function \(\alpha \mapsto {\mathbb {P}}_{\mathbb {X}}(Q_\alpha )\) which is itself guaranteed by the superior semi-continuity of the coverage function p (see Molchanov (2005) p. 23).

\(\bullet \) The continuity of the function \(\alpha \mapsto {\mathbb {P}}_{\mathbb {X}}(Q_\alpha )\) ensures equality \({\mathbb {P}}_{\mathbb {X}}(Q_{\alpha ^\star })={\mathbb {E}}[{\mathbb {P}}_{\mathbb {X}}(\Gamma )]\) in the definition of Vorob’ev expectation.

In the particular case where \(\Gamma \) is given by \(\Gamma := \{{\textbf{x}} \in {\textbf{X}},\, \xi ({\textbf{x}}) \le T\}\) with \(\xi \) a stochastic process indexed by \({\mathbb {X}}\) with continuous trajectories conditioned on the event \({\mathcal {E}}_n\) corresponding to n evaluations of \(\xi \) and T a fixed threshold, \(\Gamma \) is a random closed set (Molchanov 2005, p. 3). A sufficient condition to obtain a stochastic process with continuous trajectories is to consider a separable Gaussian process with continuous mean and covariance kernel of type Matérn 3/2 or 5/2 (Paciorek 2003, pp. 35 and 44). Moreover, in this case, the function \(\alpha \mapsto {\mathbb {P}}_{\mathbb {X}}(Q_\alpha )\) is continuous and so the equality \({\mathbb {P}}_{\mathbb {X}}(Q_{\alpha ^\star })={\mathbb {E}}[{\mathbb {P}}_{\mathbb {X}}(\Gamma )| \, {\mathcal {E}}_n]\) is verified. It is also important to notice that naive estimator \({\hat{\Gamma }}_1\) is almost surely equal to the median of Vorob’ev (quantile of order 1/2). Indeed, by noting \(\phi \) the distribution function of the standard normal distribution,

where \(p_n\) is the coverage function \(p_n({\textbf{x}}):={\mathbb {P}}(\xi ({\textbf{x}})\le T |\, {\mathcal {E}}_n )\).

Repeating the previous calculation and replacing 1/2 by the Vorob’ev threshold \(\alpha ^\star \), we obtain :

1.2 A.2 Vorob’ev deviation

The introduction of the concept of Vorob’ev deviation is used to define residual uncertainty \({\mathcal {H}}_n({\textbf{x}})\) in a SUR strategy. Let’s start by introducing the notion of distance between two random closed sets.

The average distance \(\textrm{d}_{{\mathbb {P}}_{\mathbb {X}}}\) with respect to a measure \({\mathbb {P}}_{\mathbb {X}}\) on all pairs of random closed sets included in \({\mathbb {X}}\) is defined by: for all random closed sets \( \Gamma _1, \Gamma _2\) defined on \({\mathbb {X}}\),

where \(\Delta \) is the random symmetric difference: \(\forall \, \omega \in \Omega , \, \Gamma _1 \Delta \Gamma _2(\omega ) := (\Gamma _1 \backslash \Gamma _2)(\omega ) \cup (\Gamma _2 \backslash \Gamma _1)(\omega ).\) In addition, the function \(\textrm{d}_{{\mathbb {P}}_{\mathbb {X}}}\) checks the properties of a distance.

The following proposition Molchanov (2005) justifies the choice of the Vorob’ev quantile family to define the Vorob’ev expectation and also allows to define the Vorob’ev deviation. Moreover, when \({\mathbb {E}}[{\mathbb {P}}_{\mathbb {X}}(\Gamma )]={\mathbb {P}}_{\mathbb {X}}(Q_{\alpha ^\star })\) (especially in the case of \(\Gamma :=\left\{ {\textbf{x}} \in {\textbf{X}},\, \xi ({\textbf{x}}) \le T\right\} \) with \(\xi \) a stochastic process indexed by \({\mathbb {X}}\) with continuous trajectories), the condition \(\alpha ^\star \ge \frac{1}{2}\) is no longer necessary (see (El Amri 2019, p. 28) for the proof)

Proposition 2

Noting \(Q_{\alpha ^\star }\) the Vorob’ev expectation of the random closed set \(\Gamma \) and assuming that \(\alpha ^\star \ge \frac{1}{2}\), it results that: for any measurable set \(\textrm{M}\) included in \({\mathbb {X}}\) such that \({\mathbb {P}}_{\mathbb {X}}(\textrm{M})={\mathbb {E}}[{\mathbb {P}}_{\mathbb {X}}(\Gamma )]\),

The Vorob’ev deviation of the random set \(\Gamma \) is defined as the quantity \(\textrm{d}_{{\mathbb {P}}_{\mathbb {X}}}(\Gamma , Q_{\alpha ^\star })\). The Vorob’ev deviation quantifies the variability of the random closed set \(\Gamma \) relative to its Vorob’ev expectation.

1.3 A.3 SUR Vorob’ev criterion

Once the basic elements of Vorob’ev theory are introduced, the associated SUR strategy is simply defined from the definition of SUR strategies via Eq. (15) by taking:

where \(Q_{n, \alpha _n^\star }\) denotes the Vorob’ev expectation conditioned on \({\mathcal {E}}_n\) and \(Q_{n+1, \alpha _{n+1}^\star }\) the Vorob’ev expectation conditioned on \({\mathcal {E}}_n\) and the addition of the point \(({\textbf{x}}, \xi ({\textbf{x}}))\) to the DoE. The idea behind (31) is to take as residual uncertainty , the variation with respect to the Vorob’ev expectation of the random closed set \(\Gamma \), with \( \Gamma :=\{{\textbf{x}} \in {\textbf{X}},\, \xi ({{\textbf{x}}}) \le T\}\). With the assumption that \(\alpha _{n+1}^\star =\alpha _n^\star \) and by re-injecting the quantity \({\mathcal {H}}_{n+1}({\textbf{x}})\) of (31) in the \({\mathcal {J}}_n\) criterion (15), it is possible to find a simplified formulation involving only an integral of a simple quantity (Chevalier 2013). This quantity is dependent on the cumulative distribution functions of the standard normal distribution and the bivariate centered normal distribution with given covariance matrix. Such a formulation then allows less time consuming computations and therefore is implemented in the package KrigInv (Chevalier et al. 2014).

B Kriging update formulas

In the context of SUR strategies, the quantity \({\mathcal {J}}_n({\textbf{x}})\) in Eq. (15) for a fixed \({\textbf{x}}\) is usually simplified thanks to formulas of kriging and conditionally on the point \(({\textbf{x}}, \xi ({\textbf{x}}))\) added to the DoE, and more particularly using the kriging standard deviation. Indeed, contrary to the trend, the kriging standard deviation does not depend on surrogate model observations. For instance the recurrent formula, used in Chevalier (2013) is efficient for calculating kriging model in the context of universal kriging and when the kriging parameters \(\beta \) and \(\theta \) do not need to be re-estimated. These kriging update formulas are given for all \({\textbf{y}}, {\textbf{y}}'\) in \({\mathbb {X}}^2\) by

with \({\textbf{x}}^{(n+1)}\) the \({n+1}\)th observation point.

As for SUR strategies, it is possible to generalize these formulas in the case of simultaneous additions of q points (Chevalier 2013). The advantage of these formulas is that the expressions of \(m_n\), \(\sigma _n\), and \(k_n\) are reused to reduce computational time. It is particularly useful in SUR strategies where many evaluations of the kriging formulas may be required for the numerous evaluations of the sampling criterion (in the context of its minimization (15)). Finally, it can be shown that these kriging formulas still coincide with the Gaussian process conditional formulas in the context of universal kriging (see Appendix A of Chevalier (2013) for a proof).

C Proof of the explicit formula for SUR Bichon

The interest of this appendix is to propose a demonstration of Proposition 1, allowing to give an explicit expression of SUR Bichon. Let’s start by stating and proving an intermediate lemma.

Lemma 2

Let N be a standard Gaussian random variable and \((a,b) \in {\mathbb {R}}^2\) such that \(a<b\), then:

where \(\varphi \) is the probability density function of the standard normal distribution.

Proof

\(\square \)

Proposition 1. For all \({\textbf{x}}, \,{\textbf{z}}\) belonging to \({\mathbb {X}}^2\), we have:

where \(\epsilon _{\textbf{x}}({\textbf{y}}):=\kappa \sigma _{n+1}({\textbf{z}})\), \(T^\pm :=T \pm \,\epsilon _{\textbf{x}}({\textbf{z}})\), \(\varphi \) and \(\phi \) denote the probability density and cumulative distribution functions of the standard normal distribution, respectively.

Proof

For the proof only and for the sake of lightening the notations, we note \({\mathbb {E}}_{n}\) the conditional expectation \({\mathbb {E}}\; [\, .\, |\, {\mathcal {E}}_n]\). In addition, the expression to be calculated is separated into three terms that are calculated separately. Specifically:

The calculation of the three terms separately is as follows:

For the calculation of \(\textcircled {3}\), the use of the Lemma 2 is necessary, using the notations of it.

The expected result is then obtained by re-injecting the expressions of \(\textcircled {1}\), \(\textcircled {2}\), and \(\textcircled {3}\) obtained in Eqs. (39)–(41) in Eq. (38). \(\square \)

Remark 2

\(\bullet \) In this proof of the proposition 1, the fact that \(m_n({\textbf{z}})\), \(\sigma _n({\textbf{z}})\), \(\epsilon _{\textbf{x}}({\textbf{z}})\) and \(T^\pm \) are constant with respect to \(\xi ({\textbf{z}})\) is implicitly used, in particular to output \(m_n({\textbf{z}})\), \(\sigma _n({\textbf{z}})\) and \(\epsilon _{\textbf{x}}({\textbf{z}})\) of the conditional expectation \({\mathbb {E}}_{n}\), but also for the renormalization of \(\xi ({\textbf{z}})\) and the transition to the density probability and cumulative distribution functions of the standard normal distribution.

At left and center, representation of the two \({\hat{\Gamma }}_1\) and \({\hat{\Gamma }}_2\) estimators after 100 iterations (first line) and 200 iterations (second line) for SUR Vorob’ev, and with the contour lines of \(p_n\) (first column) and \(m_n\) (second column), in comparison with the true excursion set (top right), for Loggruy function inversion (\(d=2\)) with \(T=10\,log_{10}(3.6)\), for a particular initial DoE of size 10

D Robustness of estimators with respect to the GP stationarity assumption

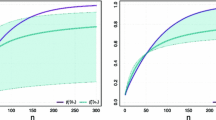

A certain instability of the approximation error was observed at the beginning of the enrichment in Figs. 4 and 6 in the case of estimator \({\hat{\Gamma }}_2\) corresponding to Vorob’ev expectation, in comparison to \({\hat{\Gamma }}_1\) naive estimator. This robustness of naive estimator \({\hat{\Gamma }}_1\) compared to \({\hat{\Gamma }}_2\) can be explained by the fact that naive estimator corresponds to the median of Vorob’ev (Appendix A, Eq. 27). Indeed, even if the notions of expectation and median of Vorob’ev are not similar to the classical ones, the property of minimizing the first absolute central moment of the median is preserved when extending the notion of median to the framework of Vorob’ev random sets (Molchanov 2005 p. 178). It is also possible to “read” the lack of robustness on Eq. (28) of Appendix A which is recalled below:

Knowing the strong dependence of the \(\sigma _n\) term on the stationarity condition of the process, it is straightforward that the term \(\phi ^{-1}(\alpha ^\star )\,\sigma _n({\textbf{x}})\) plays an important role in the non-robustness of \({\hat{\Gamma }}_2=Q_{\alpha ^\star }\) estimator, compared to naive estimator \({\hat{\Gamma }}_1\) where this term \(\phi ^{-1}(\alpha ^\star )\,\sigma _n({\textbf{x}})\) does not appear.

To illustrate this robustness issue, we define the Loggruy function in dimension 2 as follows:

with \(({\textbf{a}}_i)_i=\begin{pmatrix} 0.153 \\ 0.939 \\ \end{pmatrix}\); \(({\textbf{b}}_i)_i=\begin{pmatrix} 0.854 \\ 0.814 \\ \end{pmatrix}\); \(({\textbf{c}}_i)_i=\begin{pmatrix} 0.510 \\ 0.621 \\ \end{pmatrix}\); \(({\textbf{d}}_i)_i=\begin{pmatrix} 0.207 \\ 0.386 \\ \end{pmatrix}\); \(({\textbf{e}}_i)_i=\begin{pmatrix} 0.815 \\ 0.146 \\ \end{pmatrix}\) and \(r:=0.07\).

The threshold chosen is \(T=10\log _{10}(3.6)\) and the corresponding \(\Gamma ^\star \) excursion set (Eq. 1) is composed of 5 disconnected components. This function is particularly interesting in our context, since it has strong gradients at the edges of the domain and weaker gradients in the middle, where the different zones of the \(\Gamma ^\star \) excursion set are located (Fig. 8c). This means that the stationarity assumption of the kriging meta-model cannot be verified.

Figure 8 represents in the case of an enrichment of 100 and 200 points of SUR Vorob’ev, from an initial DoE of size 10, the contour lines of the coverage probability \(p_n\) and the kriging mean \(m_n\). Estimators \({\hat{\Gamma }}_1\) and \({\hat{\Gamma }}_2\) are also represented and compared to \(\Gamma ^\star \). An irregularity is observed for the contour lines of \(p_n\) either after 100 or 200 iterations. But, \(m_n\) is relatively accurate even after 100 iterations. \({\hat{\Gamma }}_2\) estimator is then less efficient than \({\hat{\Gamma }}_1\) estimator.

In summary, \({\hat{\Gamma }}_2\) estimator related to Vorob’ev theory is more dependent on the stationarity hypothesis than \({\hat{\Gamma }}_1\) naive estimator, and this is essentially explained by the construction of Vorob’ev expectation which is more sensitive to the kriging standard deviation (Eq. 28). Consequently, the approximation error \(\textrm{Err}\big ({\hat{\Gamma }}_2(\chi _{j,n}) \big )\) is more dependent on the stationarity hypothesis than the naive approximation error \(\textrm{Err}\big ({\hat{\Gamma }}_1(\chi _{j,n}) \big )\).

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Duhamel, C., Helbert, C., Munoz Zuniga, M. et al. A SUR version of the Bichon criterion for excursion set estimation. Stat Comput 33, 41 (2023). https://doi.org/10.1007/s11222-023-10208-4

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s11222-023-10208-4