Abstract

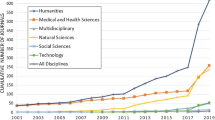

In recent decades China has witnessed an impressive improvement in science, and its scientific output has become the second largest in the world. From both quantitative and qualitative perspectives, this paper explores China’s comparative advantages in different academic disciplines. It employs two datasets: publications in all journals and publications in the top 5% of journals by discipline. With the former we investigate the comparative advantages of each academic discipline in terms of absolute output volume, and with the latter we evaluate the scientific output published in prestigious sources. Contrary to the criticism voiced in previous literature, this paper finds that the quality of China’s research (represented by papers published in high-impact journals) is promising. Since 2006 the growth of scientific publications in China has been driven by papers published in English-language journals. The increasing visibility of Chinese science seems to be paving the way for its wider recognition and higher citation rates.

Notes

These primary goals are from the official guideline of the “Medium- and Long-Term Programme for Science and Technology Development” (in Chinese). The whole document is available on the website of MSTC (Ministry of Science and Technology of the People’s Republic of China) http://www.most.gov.cn/kjgh/kjghzcq/.

The growth rate is calculated based on the R&D expenditure data from China Statistical Yearbooks on S&T, various issues.

See more details at http://www.gov.cn/gongbao/content/2006/content_240246.htm.

Data were collected from Scopus-SciVerse Elsevier. Detailed information on data is provided in “Data and methodology” section.

Data are collected from Scopus-SciVerse Elsevier. Detailed information on data is provided in “Data and methodology” section.

Citation impact and the journal impact factor are related indicators: the journal impact factor goes one step further and is calculated from citation counts.

A researcher has an h-index of h when h of their publications have each received at least h citations and the remaining publications have no more than h citations each (Hirsch 2005).

SJR (the SCImago journal rank indicator) is regarded as an extension of the journal impact factor (JIF). Therefore, in the remainder of the paper, impact factor also refers to SJR.

The more citation counts can be manipulated, the less accurately they reflect research quality. Some example tips for increasing citation counts can be seen at https://www.aje.com/en/education/other-resources/articles/10-easy-ways-increase-your-citation-count-checklist.

As of May 1997.

See more at http://www.natureindex.com/.

For instance, Conroy et al. (1995) focus on a core set of eight “Blue Ribbon” journals to evaluate the performance of economics departments, while Nature Publishing Group (NPG) selects 68 journals to form a high-quality science dataset.

The impact factor values for journals in different disciplines were downloaded as an Excel file from the Scopus “Journal Metrics” website, http://www.journalmetrics.com/values.php. This analysis uses the 2013 version of the journal dataset.

In order to keep the scientific output comparable in different years, we select only the high-impact journals that have existed through the whole 2000–2012 period.

This is calculated as the worldwide total minus China’s output.

See footnote 15.

Data are collected from Scopus-SciVerse Elsevier (as of May 1997).

EU27 includes Austria, Belgium, Bulgaria, Cyprus, Czech Republic, Denmark, Estonia, Finland, France, Germany, Greece, Hungary, Ireland, Italy, Latvia, Lithuania, Luxembourg, Malta, Netherlands, Poland, Portugal, Romania, Slovakia, Slovenia, Spain, Sweden and United Kingdom.

Examples are Business & management, Decision sciences, Economics, Arts & humanities, Veterinary, Nursing, Psychology, Health and Dentistry.

We choose 2005 and 2013 as the two comparison years in the RCA figures. As will be explained in a later section, 2005 is the turning point after which the language structure of China’s publications changed greatly. Therefore, we take 2005 as the reference year for the RCA quality index. To be consistent, we use 2005 for the RCA quantity index as well. Data and figures for other years are available upon request.

This is based on the data collected from Scopus-SciVerse Elsevier (as of May 1997).
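The h-index described in the notes above can be illustrated with a short calculation. The following is a minimal sketch, not code from the paper: the function name `h_index` and the sample citation counts are assumptions made purely for illustration.

```python
def h_index(citations):
    """Return the largest h such that h publications have at least h citations each.

    `citations` is a list of per-publication citation counts (hypothetical data).
    """
    # Rank publications from most cited to least cited.
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            # The top `rank` publications all have at least `rank` citations.
            h = rank
        else:
            # Counts only decrease from here, so no larger h is possible.
            break
    return h


# Illustrative citation counts, not taken from Scopus or the paper.
sample = [10, 8, 5, 4, 3]
print(h_index(sample))
```

For the sample list, four publications have at least four citations each but there are not five with at least five, so the result is 4.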

References

Balassa, B. (1965). Trade liberalization and “revealed” comparative advantage. The Manchester School, 33(2), 99–123.

Balassa, B. (1977). “Revealed” comparative advantage revisited: An analysis of relative export shares of the industrial countries, 1953–1971. The Manchester School, 45(4), 327–344.

Bensman, S. J. (1996). The structure of the library market for scientific journals: The case of Chemistry. Library Resources & Technical Services, 40, 145–170.

Braun, T., Glänzel, W., & Schubert, A. (2006). A Hirsch-type index for journals. Scientometrics, 69, 169–173.

Castillo, C., Donato, D., & Gionis, A. (2007). Estimating number of citations using author reputation. String Processing and Information Retrieval-LNCS, 4726, 107–117.

Chuang, Y., Lee, L., Hung, W., & Lin, P. (2010). Forging into the innovation lead—A comparative analysis of scientific capacity. International Journal of Innovation Management, 14(3), 511–529.

Conroy, M., Dusansky, R., Drukker, D., & Kildegaard, A. (1995). The productivity of economics departments in the U.S.: Publications in the core journals. Journal of Economic Literature, 33, 1966–1971.

Ding, Z., Ge, J., Wu, X., & Zheng, X. (2013). Bibliometrics evaluation of research performance in pharmacology/pharmacy: China relative to ten representative countries. Scientometrics, 96, 829–844.

Eysenbach, G. (2011). Can Tweets predict citations? Metrics of social impact based on Twitter and correlation with traditional metrics of scientific impact. Journal of Medical Internet Research, 13(4), e123. doi:10.2196/jmir.2012.

Falagas, M., Kouranos, V., Arencibia-Jorge, R., & Karageorgopoulos, D. (2008). Comparison of SCImago journal rank indicator with journal impact factor. The FASEB Journal, 22, 2623–2628.

Feist, G. (1997). Quantity, quality, and depth of research as influences on scientific eminence: Is quantity most important? Creativity Research Journal, 10, 325–335.

Garfield, E. (1955). Citation indexes for science—A new dimension in documentation through association of ideas. Science, 122, 108–111.

Garfield, E. (1972). Citation analysis as a tool in journal evaluation. Science, 178, 471–479.

Garfield, E. (2003). The meaning of the impact factor. International Journal of Clinical and Health Psychology, 3, 363–369.

Guan, J., & Gao, X. (2008). Comparison and evaluation of Chinese research performance in the field of bioinformatics. Scientometrics, 75(2), 357–379.

Hayati, Z., & Ebrahimy, S. (2009). Correlation between quality and quantity in scientific production: A case study of Iranian organizations from 1997 to 2006. Scientometrics, 80, 625–636.

Hirsch, J. E. (2005). An index to quantify an individual’s scientific research output. Proceedings of the National Academy of Sciences of the USA, 102(46), 16569–16572.

Hoeffel, C. (1998). Journal impact factor. Allergy, 53, 1225.

Jiang, J. (2011). Chinese science ministry increases funding. Nature. doi:10.1038/news.2011.515.

Jin, B., & Rousseau, R. (2004). Evaluation of research performance and scientometric indicators in China. In H. F. Moed, W. Glänzel, & U. Schmoch (Eds.), Handbook of Quantitative Science and Technology Research (pp. 497–514). Dordrecht: Kluwer Academic Publishers.

Kostoff, R. N. (2008). Comparison of China/USA science and technology performance. Journal of Informetrics, 2, 354–363.

Kostoff, R. N., Briggs, M. B., Rushenberg, R. L., Bowles, C. A., Icenhour, A. S., Nikodym, K. F., et al. (2007). Chinese science and technology—Structure and infrastructure. Technological Forecasting and Social Change, 74, 1539–1573.

Leydesdorff, L. (2009). How are new citation-based journal indicators adding to the bibliometric toolbox? Journal of the American Society for Information Science and Technology, 60(7), 1327–1336.

Leydesdorff, L. (2012). World shares of publications of the USA, EU-27, and China compared and predicted using the new interface of the Web-of-Science versus Scopus. El profesional de la información, 21(1), 43–49.

May, R. M. (1997). The scientific wealth of nations. Science, 275, 793–796.

Moed, H. (2008). UK Research Assessment Exercises: Informed judgements on research quality or quantity? Scientometrics, 74, 153–161.

Moiwo, J., & Tao, F. (2013). The changing dynamics in citation index publication position China in a race with the USA for global leadership. Scientometrics, 95, 1031–1051.

Nature Publishing Group. (2015). Nature journals offer double-blind review. Nature, 518, 274.

Nomaler, O., Frenken, K., & Heimeriks, G. (2013). Do more distance collaborations have more citation impact. Journal of Informetrics, 7, 966–971.

OECD. (2008). OECD reviews of innovation policy: China. Paris: OECD.

Saha, S., Saint, S., & Christakis, D. (2003). Impact factor: A valid measure of journal quality? Journal of the Medical Library Association, 91, 42–46.

Schubert, A., & Braun, T. (1986). Relative indicators and relational charts for comparative assessment of publication output and citation impact. Scientometrics, 9, 281–291.

Seglen, P. (1997). Why the impact factor of journals should not be used for evaluating research. British Medical Journal, 314, 498–502.

Shuai, X., Pepe, A., & Bollen, J. (2012). How the scientific community reacts to newly submitted preprints: Article downloads, twitter mentions, and citations. PLoS One, 7(11), e47523. doi:10.1371/journal.pone.0047523.

Snodgrass, R. (2006). Single- versus double-blind reviewing: An analysis of the literature. SIGMOD Record, 35(3), 8–21.

The State Council of the People’s Republic of China (SC-PRC). (2006). The National Medium- and Long-Term Programme for Science and Technology Development (2006–2020). http://www.gov.cn/jrzg/2006-02/09/content_183787.htm (in Chinese)

Van Leeuwen, T. N., Moed, H. F., Tijssen, R. J. W., Visser, M. S., & Van Raan, A. F. J. (2001). Language biases in the coverage of the Science Citation Index and its consequences for international comparisons of national research performance. Scientometrics, 51, 335–346.

Wang, L., Meijers, H., & Szirmai, A. (2013b). Technological spillovers and industrial growth in Chinese regions. UNU-MERIT Working Papers 2014-044.

Wang, L., Notten, A., & Surpatean, A. (2013a). Interdisciplinarity of nano research fields: A keyword mining approach. Scientometrics, 94(3), 877–892.

Zhou, P., & Leydesdorff, L. (2006). The emergence of China as a leading nation in science. Research Policy, 35, 83–104.

Acknowledgments

The author of this study is grateful for the valuable comments from Richard Deiss (policy officer in DG Research and Innovation), members of the consortium and the anonymous referees.

Additional information

This paper is a follow-up to an earlier research project, “Science Technology and Innovation Performance of China”, which was funded by the European Commission and implemented by a consortium of Sociedade Portuguesa de Inovação (SPI), the United Nations University–Maastricht Economic and Social Research and Training Centre on Innovation and Technology (UNU-MERIT), and the Austrian Institute of Technology (AIT).

Cite this article

Wang, L. The structure and comparative advantages of China’s scientific research: quantitative and qualitative perspectives. Scientometrics 106, 435–452 (2016). https://doi.org/10.1007/s11192-015-1650-2

DOI: https://doi.org/10.1007/s11192-015-1650-2