Abstract

Consumers’ feedback helps firms, yet most requests for feedback go unanswered. Research on question–behavior effects suggests that providing feedback on prior experiences might influence subsequent consumption behavior, but provides little insight regarding users who decline requests (e.g., by clicking “No, Thanks”). Accordingly, we investigate whether exposure to a request to rate a consumption experience influences users’ future conversion regardless of their compliance. We carried out two large-scale field studies in collaboration with a leading international website that offers a basic service for free and additional desirable features for a fee (“freemium”). We exposed users to a rating request and measured their subsequent likelihood of converting to the paid service. Users exposed to a rating request were more likely to convert than users who were not exposed; this effect persisted over 90 days. Notably, users who complied with the request were no more likely to convert than non-compliers.

1 Introduction

Consumer-generated product reviews are a powerful source of influence on consumer behavior (see, e.g., King et al., 2014 and Rosario et al., 2016). Accordingly, websites and apps are increasingly focused on soliciting reviews from consumers, asking users to “take a moment to rate” their experiences.

Numerous studies have investigated how reviews affect consumers who read them, and have identified various factors that moderate these effects (e.g., Lee & Youn, 2009; Rosario et al., 2016; Sen & Lerman, 2007). Yet, little is known about how users’ consumption behavior is affected by the act of producing an online review, or by merely being asked to do so (King et al., 2014). Research in the offline domain suggests that eliciting feedback from an individual about a product (e.g., a review or a statement of purchase intentions) influences that individual’s subsequent consumption behavior (Chandon et al., 2004; Spangenberg et al., 2006). These observations are examples of question–behavior effects (QBEs), broadly defined as phenomena in which questions about intentions, predictions of future behavior, or measures of satisfaction affect subsequent performance (Sprott et al., 2006). Notably, thus far, studies examining QBEs have effectively suffered from a selection bias: They considered only individuals who complied with experimenters’ requests, comparing them against individuals who were not exposed to such requests. Accordingly, these studies cannot provide insight regarding what happens when a participant declines to respond to a request. This lack of information substantially impairs our capacity to understand how consumption behavior is influenced by requests to provide online reviews, given that, in practice, 90% of users who are presented with a request to review a product or service online do not comply (Arthur, 2006; Nielsen, 2006).

The current study addresses these gaps by exploring how individuals’ consumption behavior is affected by exposure to a request to review their experiences online—as well as by their compliance with the request. We draw from research in marketing communications, integrated with current knowledge on QBEs, to propose that mere exposure to a rating request can affect a user’s consumption behavior, regardless of compliance. We test this proposition in two field experiments with over 80,000 users from around the world. These experiments were performed in collaboration with a leading international website with tens of millions of users. The website, “Webservice.com,” asked to remain anonymous, and we have taken steps to ensure confidentiality of business outcomes. Webservice.com employs a “freemium” business model, offering a basic service package for free, and additional desirable features for a fee. We exposed some users to a request to rate their experiences with the website, and we evaluated all users’ likelihood of conversion from the free version of the service to the paid version.

We found that users who were exposed to a rating request were more likely than users who were not exposed (i.e., the control group) to convert to the paid service. Notably, this effect was not contingent on compliance with the request: users who were exposed to a request and did not comply (clicking a “no thanks” button or the “x” at the upper right corner of the pop-up window) were significantly more likely than control-group members to convert to a paid version of the service, and were no less likely to convert compared with those who complied with the request. We use the term “No Thanks Effect” to refer to the potential positive effect of exposure to rating requests on the conversion likelihood of non-compliers.

2 Theory Development and Hypothesis

Given the lack of research on how review requests affect users’ consumption behavior, we approach this question through the lens of QBEs, a category of phenomena that includes self-prophesy, mere measurement, self-erasing errors of prediction, and self-generated validity (Sprott et al., 2006). In general, the notion that approaching an individual for feedback regarding a behavior subsequently affects that behavior has been shown to be robust across different domains, experimental procedures, types of products, and time durations (Chandon et al., 2004; Sherman, 1980; Spangenberg, 1997). Yet, thus far, most studies providing evidence for QBEs on consumer behavior elicited those effects using elaborate intention or satisfaction surveys, comprising multiple questions and often requiring a significant time investment on the respondent’s part (e.g., Gollwitzer & Oettingen, 2008; Morwitz & Fitzsimons, 2004). Accordingly, it is unclear to what extent QBEs are applicable to online environments, in which rating requests are commonly embedded in prompts that users are exposed to only briefly and can—and typically do—ignore (Nielsen, 2006).

Notably, some QBE studies suggest that a brief or simple intervention is sufficient to affect behavior. For example, Ofir and Simonson (2001) and Ofir et al. (2009) showed that it is possible to (negatively) affect consumers’ evaluations of a consumption experience simply by informing them prior to consumption that they will be asked to provide such evaluations—yet those studies did not measure the consumption behavior itself. In turn, a study by Spangenberg et al. (2003) revealed that posing a question can influence behavior even among individuals who do not verbally respond. For example, posting a sign reading “Ask Yourself… Will You Recycle?” in an area highly trafficked by students elicited an uptick in student recycling behavior. Yet, in contrast to the typical prompt requesting an online review, that study did not offer participants an explicit opportunity to choose whether to answer or ignore the question.

We suggest that the effect identified by Spangenberg et al. (2003) resembles observations in the domain of marketing communications, in which exposure to a brief ad for a product is well known to enhance consumption. Indeed, prior studies have identified additional common threads between QBEs and consumers’ responses to advertising, suggesting that the two may be driven by similar mechanisms. An example of such a mechanism is attitude accessibility, defined as the speed at which an attitude can be activated from memory (Fazio, 1995). Notably, this mechanism—which can be manipulated (e.g., Powell & Fazio, 1984) and can be triggered even by exposure to brief communications (Berger & Mitchell, 1989; Fazio et al., 1989)—has been shown to drive enhanced consumption following exposure (and response) to questions regarding consumption intentions and satisfaction (Morwitz & Fitzsimons, 2004; see the General Discussion for further discussion). On the basis of the commonalities between QBEs and responses to brief advertising communications, coupled with the findings of Spangenberg et al. (2003), we suggest that prompting users with a request to rate a product or service can enhance their consumption behavior—even if they do not comply with the request. Formally:

H1(a): Exposure to a rating request will positively influence consumption behavior.

H1(b): Non-compliance with a rating request is associated with enhanced consumption behavior compared with non-exposure to a rating request (control group).

3 Field Experiments

3.1 Research Context—Webservice.com

We conducted our experiments in collaboration with Webservice.com. Webservice.com offers a virtual marketplace platform from which users can market products to the public. The company provides a basic marketplace service free of charge, where users can display their products (including pictures, prices, and descriptions). Webservice.com also offers several for-fee premium service packages, starting from $12.95 per month, which offer additional features (e.g., unlimited bandwidth, VIP helpdesk support). Most importantly, paying users can carry out sales transactions through the platform itself, whereas non-paying users must rely on alternative channels (e.g., email or an affiliate website). According to Webservice.com, the onsite transaction feature is the most significant functional difference between the free and the premium service packages.

Most users of Webservice.com use the free version of the service. Webservice.com regards conversion rate—the percentage of users who adopt premium services—as a key metric of its success.

Our collaboration with Webservice.com included two studies: a short-term (one-week) study comprising only US-based English-speaking users; and a large-scale experiment, executed over 3 months, with participants from 190 countries. None of the participants in the first experiment was included in the second.

3.2 Study 1: Short-Term Effect of a Rating Request on Conversion

Design and participant recruitment procedure

This study focused on English-speaking, US-based Webservice.com users who had joined on a specific date that did not overlap with any bank or religious holidays in the USA. On that day, a random set of new Webservice.com users (n = 4102)—i.e., users with no prior experience with the platform—was assigned to our sample. Each user was randomly assigned to the treatment group (2038 users) or the control group (2064 users). Users in the treatment group were asked to rate the website at some point in their usage of the service, whereas users in the control group were not (see below). After 8 days, we calculated the number of users who had converted from free to premium in both conditions (conversion rate).

Treatment

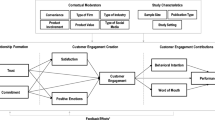

During the eight days following the recruitment day, each member of the treatment group was presented with a request to rate their experience using Webservice.com (1–5 stars) and to add an optional comment. Both the rate request and the comment box were included in the same pop-up window (see Fig. 1). Users who did not wish to comply with the request were given an option to remove the prompt (by clicking a “no thanks” button or the “x” at the upper right corner of the pop-up window). The design of the prompt, in terms of graphics and placement, was determined by Webservice.com.

A key challenge was to determine when to display the rating request to users, so as to create maximal treatment consistency. After careful deliberation with Webservice.com’s management, we decided to time the rating request such that a given user would only view the request after adding at least one product to the website. This approach, in line with the behavior model for persuasive design (Fogg, 2009), ensured that a user would view the rating request only after expressing both motivation (having products to sell) and ability (adding products to the marketplace). The treatment and control groups did not differ in terms of the number of individuals who added at least one product to the website (93.45% and 93.46% of users, respectively, i.e., the vast majority of users).

Results

For each group, we computed the conversion rate using all conversion decisions that occurred in the week after assignment to our sample. A binary logit regression, in which the independent variable was experimental condition (1 = treatment group; 0 = control group) and the dependent variable was the conversion rate, showed that conversion rate was positively affected by treatment (β = 0.27, wald = 2.931, p = 0.087).

We also used a crosstabs analysis to compare the two groups. The conversion rate for the control group was 3.7% (76 out of 2064 users), whereas the conversion rate for the treatment group was 4.8% (97 out of 2038 users, χ2 = 2.95; likelihood ratio = 2.953, p = 0.086). Thus, the conversion rate of treatment-group participants was 29.3% higher than the control-group conversion rate.
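The crosstabs comparison above can be checked from the reported counts alone. The following is a minimal sketch, assuming the reported χ2 is an uncorrected Pearson chi-square on the 2×2 table of condition by conversion; the function and variable names are illustrative and do not come from the study. With the counts given above, it yields a χ2 of about 2.95, a p-value of about 0.086, and a relative lift of about 29.3%.

```python
import math


def pearson_chi2_2x2(conv_a, n_a, conv_b, n_b):
    """Pearson chi-square (no continuity correction) for a 2x2 table.

    conv_a / n_a: converters and group size for group A
    conv_b / n_b: converters and group size for group B
    Returns the statistic and its p-value (1 degree of freedom).
    """
    a, b = conv_a, n_a - conv_a      # group A: converted, not converted
    c, d = conv_b, n_b - conv_b      # group B: converted, not converted
    n = n_a + n_b
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # With 1 degree of freedom, the upper-tail probability is erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Counts reported for study 1.
treat_conv, treat_n = 97, 2038
ctrl_conv, ctrl_n = 76, 2064

chi2, p_value = pearson_chi2_2x2(treat_conv, treat_n, ctrl_conv, ctrl_n)
# Relative lift: how much higher the treatment conversion rate is than the control rate.
relative_lift = (treat_conv / treat_n) / (ctrl_conv / ctrl_n) - 1

print(f"chi2 = {chi2:.3f}, p = {p_value:.3f}, lift = {relative_lift:.1%}")
```

The closed-form expression is used here only to keep the sketch dependency-free; a statistics package would normally be used for such a test.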

In this experiment, we had no information about compliance with the rating request. The results suggest that, in line with H1(a), the overall effect on conversion was positive. While these effects are only significant at the p < 0.1 level, the effect size is clearly large and holds economic significance. Hence, Webservice.com invited us to carry out a second, larger-scale experiment.

3.3 Study 2: A Large-Scale Longitudinal Field Experiment

The goals of study 2 were threefold: (1) lending robustness to study 1’s findings through the use of a much larger population from diverse locations; (2) testing H1(b), by distinguishing between users who complied with the request and those who did not; (3) eliminating time duration as a possible alternative explanation. That is, one might argue that our treatment in study 1 merely expedited the process of users who intended to convert, and that the effect might diminish over time. To rule out this explanation, we observed users in study 2 for a longer period (90 days).

Participants and procedure

The design of this experiment was similar to that of study 1, but with a few modifications to the recruitment procedure. First, the participant pool was expanded to users from all 190 countries the website serves and the prompt was translated into 46 languages. Second, the recruitment period was extended to 70 days. During this period, Webservice.com randomly assigned users who joined its platform to our study (n = 91,424). Each of these users was randomly assigned to either the treatment group (n = 45,430) or the control group (n = 45,994). Third, we tracked participants for 90 days after the recruitment period. We made note of the usage package (basic/free, premium/paid) of each user at three time points: 30, 60, and 90 days following their specific date of exposure to treatment. For each user in the control group, we defined these time points on the basis of the “hypothetical” date on which the user would have seen the prompt had he or she been assigned to our treatment group.

With this information, at each focal time point, we calculated the conversion rate as the percentage of participants who had upgraded their free accounts to one of the available (for-fee) premium options.

In this experiment, 6,429 users (around 7%; see Note 1) converted to a premium subscription before adding a product to the platform, i.e., prior to the date when they would have been exposed to our treatment. Those were removed from the data. Thus, our final sample comprised 84,995 users (treatment group: 42,258 users; control group: 42,737 users).

4 Results

Conversion rate at each time point

First, to evaluate the effect of treatment on conversion rate, we used a binary logistic analysis with experimental condition as the independent variable (1 = treatment group; 0 = control group) and conversion rate at the 30-day mark as the dependent variable. We observed that the conversion rate at the 30-day mark was positively and significantly affected by the rating request (β = 0.043, wald = 4.169, p = 0.041). We also used crosstabs analysis to compare the conversion rates between experimental conditions at each time point considered. At 30 days after treatment (or hypothetical treatment for the control group), the conversion rate for the control group was 11.79% (5,040 out of 42,737 users), and the treatment group’s conversion rate was 12.25% (5,176 out of 42,258 users); these two conversion rates were significantly different (χ2 = 4.169, likelihood ratio = 4.169, p = 0.041). Similarly, at the 60-day and 90-day time points, the conversion rates for the treatment group were significantly higher than those of the control group. Specifically, at 60 days, conversion rates were 12.27% vs. 12.79% for the control and treatment groups, respectively (χ2 = 5.245, likelihood ratio = 5.245, p = 0.022); and at 90 days the conversion rates were 13.28% vs. 13.84%, respectively (χ2 = 5.780, likelihood ratio = 5.780, p = 0.016). Taken together, these results support H1(a) (Table 1; see Note 2). Moreover, the fact that the differences between experimental conditions persisted over time suggests that exposure to the prompt elicited an actual increase in conversion rate, as opposed to merely expediting the conversions of users who would have converted in any case.
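For readers who wish to relate the logistic coefficient to the raw counts: in a logistic regression with a single binary predictor, the fitted slope equals the log odds ratio of the corresponding 2×2 table, and its Wald statistic follows from the usual standard error of a log odds ratio. The sketch below applies this to the 30-day counts reported above. It is an illustration under that assumption, not the authors' analysis code, and the function name is hypothetical. It gives β of about 0.043 and a Wald statistic of about 4.17, in line with the reported values.

```python
import math


def logit_slope_from_counts(conv_t, n_t, conv_c, n_c):
    """Slope, Wald statistic, and p-value for a logit model with one binary predictor.

    conv_t / n_t: converters and group size in the treatment group
    conv_c / n_c: converters and group size in the control group
    """
    non_t = n_t - conv_t
    non_c = n_c - conv_c
    # The maximum-likelihood slope is the log of the odds ratio.
    beta = math.log((conv_t / non_t) / (conv_c / non_c))
    # Standard error of a log odds ratio: sqrt of the summed reciprocal cell counts.
    se = math.sqrt(1 / conv_t + 1 / non_t + 1 / conv_c + 1 / non_c)
    wald = (beta / se) ** 2
    # The Wald statistic is referred to a chi-square distribution with 1 df.
    p_value = math.erfc(math.sqrt(wald / 2))
    return beta, wald, p_value


# 30-day counts reported for study 2 (treatment vs. control).
beta, wald, p_value = logit_slope_from_counts(5176, 42258, 5040, 42737)
print(f"beta = {beta:.3f}, wald = {wald:.3f}, p = {p_value:.3f}")
```

The same function can be applied to any of the other two-group comparisons for which raw counts are reported.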

The association between rating request and conversion among non-compliers

Of the 42,258 users who were exposed to the rating request, 34,494 (81.63%) chose not to rate their experience on Webservice.com; that is, they clicked either the “no thanks” button or the “x” at the top right corner of the pop-up window. We compared the conversion rate of these (treated) non-compliers to the conversion rate of the control group, as well as to that of the (treated) compliers.

When distinguishing between non-compliers and compliers, it is important to acknowledge that the two groups are not randomly assigned, and thus may be biased by selection. We had almost no information about users that we could use to check for systematic differences. Nevertheless, for robustness, we reran our analyses using a sample balanced according to users’ country—one of the only two covariates available for each user (the other was language, which was highly correlated with country and thus could not be used separately). In what follows, we first report our results for the unbalanced data set, and then report results for the balanced sample.

4.1 Comparison of non-compliers to the control group

We carried out a binary logistic regression in which we compared the subsample of (treated) non-compliers to the control group, with experimental condition as the independent variable (1—treated non-compliers; 0—control group) and conversion rate at the 30-day mark as the dependent variable. This analysis showed that exposure to the rating request was significantly associated with the conversion rate, despite non-compliance (β = 0.046, wald = 4.239, p = 0.040). The conversion rate for the control group was 11.79% (5,040 out of 42,737 users), whereas the conversion rate for the non-compliers in the treatment group was 12.28% (4,235 out of 34,494 users). A crosstabs analysis (Table 2) showed that this difference was significant (χ2 = 4.239, likelihood ratio = 4.234, p = 0.040). We subsequently compared the various conversion rates at 60 days and at 90 days. As shown in Table 2, the conversion rate of treated non-compliers was significantly higher than that of the control group at both time points (60 days: χ2 = 5.184, likelihood ratio = 5.178, p = 0.023; 90 days: χ2 = 6.362, likelihood ratio = 6.353, p = 0.012). Notably, though its magnitude is not large, the effect is significant and consistent over time, and is considered substantial in freemium-based industries.

Our robustness analysis with the balanced sample (weighted according to country) produced similar results. For example, when focusing on the conversion rate at the 30-day mark as the dependent variable, we find that exposure to the rating request was positively associated with the conversion rate of the non-compliers, despite non-compliance (β = 0.046, wald = 14.406, p < 0.001).

4.2 Comparison of non-compliers to compliers

We carried out a binary logistic regression in which we compared the subsample of (treated) non-compliers to the (treated) compliers (1 = non-compliers; 2 = compliers), with conversion rate at the 30-day mark as the dependent variable. This analysis showed that compliance with the rating request was not significantly associated with the conversion rate (β = -0.015, wald = 0.146, p = 0.702). We subsequently used crosstabs analysis to compare the conversion rate of non-compliers and the conversion rate of compliers at the 30-day mark. We observed no significant differences between the conversion rates (χ2 = 0.146, likelihood ratio = 0.147, p = 0.702). Similarly, the difference between non-compliers and compliers was not significant at 60 days (χ2 = 0.107, likelihood ratio = 0.107, p = 0.744) or at 90 days (χ2 = 0.573, likelihood ratio = 0.575, p = 0.449). Our robustness analysis using the balanced data set produced similar results. For example, when focusing on the conversion rate at the 30-day mark as the dependent variable, we observe that compliance had no significant effect on the conversion rate (β = -0.009, wald = 0.011, p = 0.917).

Comparing the compliers to the control group, we find no statistically significant difference in conversion rate between the two (see Table 2). However, the 7,764 compliers exhibit two different compliance behaviors: 1,380 users provided both a written comment and a star rating (labeled “comment compliers”), whereas the others provided only a star rating (labeled “rate compliers”). When looking at the rate compliers and comment compliers separately, we find that the rate compliers exhibit a higher conversion rate than the control group (p = 0.076 after 30 days, p = 0.060 after 60 days, p = 0.058 after 90 days; see Table 3). These effects are similar to those documented in the literature (Wood et al., 2016), and thus their marginal significance may be attributable to the size of the compliers group. Comment compliers exhibit lower conversion rates compared with the control group, though this difference is not significant (p > 0.1). We further observe a marginally significant difference when comparing the conversion rates of comment compliers with those of rate compliers (see Table 3). One possible reason is that comment compliers differ in their traits or in their experience of the website. Indeed, a t test in which we compared the average ratings of rate compliers and of comment compliers revealed a significant difference. Specifically, the average rating (out of five) given by the rate compliers (M = 4.67, SD = 0.69) was significantly higher than the average rating given by the comment compliers (M = 4.53, SD = 0.91; t = 6.193, p < 0.001). Since the experiment design enabled comments in 46 languages from more than a hundred cultural contexts, and since our focus is the non-compliers, we do not analyze the comments themselves in this study.

5 General Discussion

The current research reveals that, in line with our hypothesis, a single exposure to a request to rate one’s experience with an online service has a positive effect on users’ likelihood of converting from a free version of the service to a paid version, and that exposure is positively associated with conversion rate even among users who do not comply with the request. In two field studies involving tens of thousands of participants from all over the world, we observed that the effect of exposure to a rating request on conversion persisted for one week (study 1), and even one, two, and three months after exposure to the rating request (study 2). Moreover, study 2 revealed that the behavior of users who complied with the request was no different from that of users who were exposed to the request but did not comply, alluding to the existence of a “No Thanks Effect.”

Our work makes several theoretical contributions. First, it contributes to research on the influence of online reviews on consumer behavior by focusing on the consumption patterns of individuals who have been asked to provide a review, rather than on consumers who read reviews (the dominant focus of this stream of literature thus far; King et al., 2014). Our findings suggest that a review request can, in fact, influence consumption behavior, and that mere exposure to the request may be just as powerful as the act of reviewing. Moreover, our results expand the literature on QBEs, by exploring these effects in the online realm and, more importantly, by suggesting that even when users decide not to comply with a feedback request from a website, their subsequent behavior is similar to what it would have been if they had, in fact, complied.

Our hypothesis was built on the idea that QBEs may, in certain ways, resemble individuals’ responses to advertising, and may be driven by similar mechanisms. In our view, attitude accessibility, discussed briefly in the Theory Development section, is a particularly plausible mechanism for the effects we observed. Attitude accessibility—which can be manipulated by exposure to questions about one’s own preferences (Morwitz & Fitzsimons, 2004) or to advertising communications (Berger & Mitchell, 1989; Fazio et al., 1989)—is well known to affect behavior (e.g., Glasman & Albarracin, 2006). Specifically, when an attitude is more accessible, less thinking is required to act in accordance with that attitude. This reduction in effort has been shown to facilitate decision making (Blascovich et al., 1993; Fazio & Powell, 1997; Fazio et al., 1989; Holland et al., 2003), as well as to enhance consumption (Morwitz & Fitzsimons, 2004). In our studies, exposure to rating requests may have increased the accessibility of users’ attitudes regarding Webservice.com, encouraging these users to convert, even if they did not comply with the requests. We note that this proposition implies that the attitudes that consumers accessed were generally positive. And indeed, 91.1% of the users in our sample who provided feedback rated the website positively (4–5 out of 5 stars). These ratings are in line with Webservice.com’s reputation as a high-quality website, receiving favorable reviews in high-profile industry outlets.

Numerous alternative mechanisms have been proposed to underlie QBEs, and these mechanisms may also have contributed to the effects observed. In general, many of the prominent mechanisms proposed—including cognitive dissonance (e.g., Spangenberg et al., 2003), behavioral simulation, and processing fluency (e.g., Janiszewski & Chandon, 2007)—are typically considered to capture cognitive changes triggered by the answering process (even if the answer is not verbalized; Spangenberg et al., 2003), and thus are unlikely to explain the behavior of users who did not comply with the rating request. In contrast to these response-contingent mechanisms, theories of attitude accessibility (e.g., Fazio, 1995) suggest that mere exposure to a question can activate attitudes associated with that question, regardless of whether one responds. Moreover, attitude accessibility is the only QBE mechanism that has been shown to produce long-term effects such as those we observed (Fitzsimons & Morwitz, 1996; Godin et al., 2008; Morwitz et al., 1993). Together, these arguments support our conjecture that attitude accessibility underlies the effects we identified.

Yet, our research design did not enable us to directly investigate the role of attitude accessibility—or any other mechanism—in the effects observed. Indeed, this is one of the limitations of our research. Future studies should explore potential underlying mechanisms in a controlled environment—including, for example, the possibility that the rating request itself influenced participants’ positive perceptions of the website (e.g., by showing that the company cares about the user experience) and thus increased their likelihood of conversion.

Our data were also limited in their capacity to reveal differences across user types and segments. The few details at our disposal regarding user characteristics—specifically, users’ country and language—suggest that such differences are likely to exist. A detailed analysis of the effect of such differences on conversion is beyond the scope of this research but constitutes an interesting direction for future work.

Our findings have practical implications for website owners and providers of digital services. In particular, freemium-based websites—which may have large user bases, yet struggle to elicit payment from these users—might be able to boost their conversion rates merely by prompting their users to rate their experiences, and they should not be discouraged by the fact that the vast majority of users are likely to ignore these prompts.

Notes

1. For users in the control group, we omitted users who converted before their respective “hypothetical” treatment dates. We omitted 3,172 users from the treatment group and 3,257 users from the control group.

2. For additional robustness, we reran our analysis while controlling for valence of review (for those who complied) and found no differences.

References

Arthur, C. (2006). What is the 1% rule? The Guardian. Retrieved July 1, 2019, from http://www.theguardian.com/technology/2006/jul/20/guardianweeklytechnologysection2.

Berger, I. E., & Mitchell, A. A. (1989). The effect of advertising on attitude accessibility, attitude confidence, and the attitude-behavior relationship. Journal of Consumer Research, 16(3), 269. https://doi.org/10.1086/209213

Blascovich, J., Ernst, J. M., Tomaka, J., Kelsey, R. M., Salomon, K. L., & Fazio, R. H. (1993). Attitude accessibility as a moderator of autonomic reactivity during decision making. Journal of Personality and Social Psychology, 64(2), 165–176. https://doi.org/10.1037/0022-3514.64.2.165

Chandon, P., Morwitz, V., & Reinartz, W. (2004). The short- and long-term effects of measuring intent to repurchase. Journal of Consumer Research, 31(3), 566–572. https://doi.org/10.1086/425091

Fazio, R. H. (1995). Attitudes as object-evaluation associations: Determinants, consequences, and correlates of attitude accessibility. In R. E. Petty & J. A. Krosnick (Eds.), Attitude strength: Antecedents and consequences (Ohio State University series on attitudes and persuasion, Vol. 4, pp. 247–282). Lawrence Erlbaum Associates Inc.

Fazio, R. H., & Powell, M. C. (1997). On the value of knowing one’s likes and dislikes: Attitude accessibility, stress, and health in college. Psychological Science, 8(6), 430–436. https://doi.org/10.1111/j.1467-9280.1997.tb00456.x

Fazio, R. H., Powell, M. C., & Williams, C. J. (1989). The role of attitude accessibility in the attitude-to-behavior process. Journal of Consumer Research, 16(3), 280. https://doi.org/10.1086/209214

Fitzsimons, G. J., & Morwitz, V. G. (1996). The effect of measuring intent on brand-level purchase behavior. Journal of Consumer Research, 23(1), 1–11.

Fogg, B. (2009). A behavior model for persuasive design. Proceedings of the 4th International Conference on Persuasive Technology. https://doi.org/10.1145/1541948.1541999

Glasman, L. R., & Albarracin, D. (2006). Forming attitudes that predict future behavior: A meta-analysis of the attitude-behavior relation. Psychological Bulletin, 132(5), 778. https://doi.org/10.1037/0033-2909.132.5.778

Godin, G., Sheeran, P., Conner, M., & Germain, M. (2008). Asking questions changes behavior: Mere measurement effects on frequency of blood donation. Health Psychology, 27(2), 179. https://doi.org/10.1037/0278-6133.27.2.179

Gollwitzer, P. M., & Oettingen, G. (2008). The question-behavior effect from an action control perspective. Journal of Consumer Psychology, 18(2), 107–110. https://doi.org/10.1016/j.jcps.2008.01.004

Holland, R. W., Verplanken, B., & Knippenberg, A. V. (2003). From repetition to conviction: Attitude accessibility as a determinant of attitude certainty. Journal of Experimental Social Psychology, 39(6), 594–601. https://doi.org/10.1016/s0022-1031(03)00038-6

Janiszewski, C., & Chandon, E. (2007). Transfer-appropriate processing, response fluency, and the mere measurement effect. Journal of Marketing Research, 44(2), 309–323. https://doi.org/10.1509/jmkr.44.2.309

King, R. A., Racherla, P., & Bush, V. D. (2014). What we know and don’t know about online word-of-mouth: A review and synthesis of the literature. Journal of Interactive Marketing, 28(3), 167–183. https://doi.org/10.1016/j.intmar.2014.02.001

Lee, M., & Youn, S. (2009). Electronic word of mouth (eWOM). International Journal of Advertising, 28(3), 473–499. https://doi.org/10.2501/s0265048709200709

Morwitz, V. G., Johnson, E., & Schmittlein, D. (1993). Does measuring intent change behavior? Journal of Consumer Research, 20(1), 46. https://doi.org/10.1086/209332

Morwitz, V. G., & Fitzsimons, G. J. (2004). The mere-measurement effect: Why does measuring intentions change actual behavior? Journal of Consumer Psychology, 14(1–2), 64–74. https://doi.org/10.1207/s15327663jcp1401and2_8

Nielsen, J. (2006). The 90–9–1 rule for participation inequality in social media and online communities. Nielsen Norman Group. Retrieved July 1, 2019, from http://www.nngroup.com/articles/participation-inequality/.

Ofir, C., & Simonson, I. (2001). In search of negative customer feedback: The effect of expecting to evaluate on satisfaction evaluations. Journal of Marketing Research, 38(2), 170–182. https://doi.org/10.1509/jmkr.38.2.170.18841

Ofir, C., Simonson, I., & Yoon, S. O. (2009). The robustness of the effects of consumers’ participation in market research: The case of service quality evaluations. Journal of Marketing, 73(6), 105–114. https://doi.org/10.1509/jmkg.73.6.105

Powell, M. C., & Fazio, R. H. (1984). Attitude accessibility as a function of repeated attitudinal expression. Personality and Social Psychology Bulletin, 10, 139–148. https://doi.org/10.1177/0146167284101016

Rosario, A. B., Sotgiu, F., Valck, K. D., & Bijmolt, T. H. (2016). The effect of electronic word of mouth on sales: A meta-analytic review of platform, product, and metric factors. Journal of Marketing Research, 53(3), 297–318. https://doi.org/10.1509/jmr.14.0380

Sen, S., & Lerman, D. (2007). Why are you telling me this? An examination into negative consumer reviews on the Web. Journal of Interactive Marketing, 21(4), 76–94. https://doi.org/10.1002/dir.20090

Sherman, S. J. (1980). On the self-erasing nature of errors of prediction. Journal of Personality and Social Psychology, 39(2), 211–221. https://doi.org/10.1037//0022-3514.39.2.211

Spangenberg, E. (1997). Increasing health club attendance through self-prophecy. Marketing Letters, 8(1), 23–31. https://doi.org/10.1023/A:1007977025902

Spangenberg, E. R., Block, L. G., Fitzsimons, G. J., Morwitz, V. G., & Williams, P. (2006). The question–behavior effect: What we know and where we go from here. Social Influence, 1(2), 128–137. https://doi.org/10.1080/15534510600685409

Spangenberg, E. R., Sprott, D. E., Grohmann, B., & Smith, R. J. (2003). Mass-communicated prediction requests: Practical application and a cognitive dissonance explanation for self-prophecy. Journal of Marketing, 67(3), 47–62. https://doi.org/10.1509/jmkg.67.3.47.18659

Sprott, D. E., Spangenberg, E. R., Knuff, D. C., & Devezer, B. (2006). Self-prediction and patient health: Influencing health-related behaviors through self-prophecy. Medical Science Monitor, 12(5), RA85–RA91.

Wood, C., Conner, M., Miles, E., Sandberg, T., Taylor, N., Godin, G., & Sheeran, P. (2016). The impact of asking intention or self-prediction questions on subsequent behavior: A meta-analysis. Personality and Social Psychology Review, 20(3), 245–268.

Funding

European Research Council Award Number: 759540 | Recipient: Gal Oestreicher-Singer, PhD.

Ethics declarations

Ethical Approval

Approved by the Tel Aviv University Ethics Committee on June 19, 2018.

Conflict of Interest

The authors declare no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The authors are grateful for the helpful comments made by Yael Steinhart, as well as seminar participants at Bar-Ilan University, and session participants at the CBSIG conference in Bern 2019.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Perez, D., Oestreicher-Singer, G., Zalmanson, L. et al. “No, Thanks”: How Do Requests for Feedback Affect the Consumption Behavior of Non-Compliers? Mark Lett 34, 83–97 (2023). https://doi.org/10.1007/s11002-022-09631-w

DOI: https://doi.org/10.1007/s11002-022-09631-w