Abstract

In this paper, we present an approach in which ultrasonic testing (UT) data is linked to the spatial coordinates and direction vector of the probe on the examined specimen. This allows the processed nondestructive testing (NDT) results to be visualized directly on the sample in real time using augmented or virtual reality. A 3D tracking system establishes the link between the NDT data and the physical object, and the spatial coordinates are stored together with the NDT sensor data. For visualization, texture mapping is applied to a 3D model. The testing process consists of data recording, processing and visualization, all three of which are performed in real time. The data is recorded by a UT-USB interface, processed on a PC workstation and displayed using a mixed reality (MR) system. Our system allows real-time 3D visualization of ultrasonic NDT data, which is drawn directly into the virtual representation of the object. This makes it possible to assist the operator during the manual testing process. The new approach results in a much more intuitive testing process and in a data set that is well prepared for storage in a digital twin environment. The approach is not limited to laboratory-scale samples but also works for larger objects, e.g., a helicopter fuselage. It is inspired by concepts of NDE 4.0 that aim to create a new kind of smart inspection system.


1 Introduction

Digital representations of non-destructive testing and evaluation (NDT/NDE) results are commonly displayed as two-dimensional images that disregard the actual three-dimensional surface of the examined objects. Non-destructive ultrasonic scans (UT) covering a curved area suffer from this flattening effect: to correctly reassign the collected data to their measurement points, the inspector has to bear an additional cognitive load. A second disadvantage is the lack of a permanent link to the examined area on the aircraft structure, which may be important for future analyses and damage comparison. Because of this missing link to the real structure or its digital representation, temporary marks are still drawn onto large objects such as aircraft structures to record and discuss damage. In aeronautics, NDT investigations are carried out on a regular basis or when indicated by incidents. Non-destructive UT data is commonly displayed as flattened lateral C- and D-scans, which are typically created during the measurement following approved engineering guidelines. Other evaluation techniques use advanced reconstruction methods to achieve 3D imaging, e.g., as proposed by Ewert et al. [1] or Vrana et al. [2].

New technologies, and especially new methods to compute and visualize data, are addressed by numerous research efforts under the concept of NDE 4.0. This concept envisions a completely new kind of smart inspection systems and digital data ecosystems [3, 4]. It is a multidisciplinary approach that aims to implement Industry 4.0 technologies as well as new process structures to create and improve value chains. To achieve this goal, underlying concepts such as digital twins are used [5]. A digital twin is a virtual representation of a real-world object. It includes all information and algorithms relevant to describing the real object with high accuracy, which makes it possible to simulate, and hence predict, the system's behavior under given conditions. As structural damage may significantly affect system operation, NDT data and results are an important part of a digital twin [6, 7].

2 Related Research

Augmented and virtual reality technologies are active fields of research. Numerous projects have investigated the potential benefits of integrating these technologies into various processes. Frequently mentioned industrial applications are training, maintenance and inspection [8, 9].

Several of these projects focus on identifying the potential benefits of combining NDT methods with augmented and virtual reality (AR/VR) technologies. They come from different research areas such as medicine, civil engineering and materials science.

Medical diagnostics and industrial NDT use the same sensor principles and therefore often overlap and inspire each other. Integrating AR/VR technologies into medical applications to display additional virtual content offers promising advantages [10]. Garcia-Vázquez et al. show one example of how AR can be used to visualize UT results: they use AR glasses (Microsoft HoloLens) to project the current ultrasound image directly into the user’s field of view. The virtual projection is aligned to an “augmented reality marker” (comparable to a QR code, QR: Quick Response) mounted on the probe. Their approach reduces the need to constantly switch the view between the patient and the device display [11].

Research in civil engineering also shows a growing interest in integrating AR/VR technologies into NDT processes. Schickert et al. store the CAD (Computer Aided Design) and NDT data in a building information modelling (BIM) system, which we interpret as a subset of a digital twin. The results of their UT and RADAR investigations of a test specimen can be overlaid in an AR view on an Android tablet. Optical markers are used to track the specimen, and the NDT and CAD data are aligned manually [12].

Several approaches in materials science integrate AR/VR into NDT processes. In 2015, Meyer et al. published an overview of possible applications that use AR to visualize NDT results [13]. Deppe et al. use a system setup comparable to our demonstrator. They focus on inspecting flat metal surfaces and therefore use the tracking system to determine the position of their sensor in two-dimensional space. The inspection path is visualized with an extended reality (XR) head-mounted display (HMD) [14].

The 3D-SmartInspect system presented by Fraunhofer IZFP is designed to assist manual ultrasonic or eddy current (ET) inspections. Its two-dimensional tracking relies on a colored marker placed on the sensor, and the system focuses on automatic real-time signal processing. The results can be visualized instantly, either on a computer screen or through a Microsoft HoloLens [3, 15].

Prado et al. from Embraer S.A. present a concept to display structural health monitoring (SHM) data on an aircraft structure. With their AR system, they can selectively overlay the camera image with the inner aircraft structure, SHM sensors and the SHM sensor data. A tablet PC is used as display hardware and the object tracking is done with fiducial markers [16].

The combination of NDT with AR/VR can be considered part of NDE 4.0. The overall aim of the projects mentioned is to improve individual processes by providing additional visual content.

3 Demonstrator Development

3.1 Technological Concept

In our project, we aimed to improve NDT of aircraft structures made of carbon fiber reinforced polymers. The three main technologies used in this work are mixed reality for visualization, spatial tracking for linking the physical object with the NDT data, and elements of digital twins for data assignment.

Instead of creating an entire digital twin, we drew on related concepts to realize the technology demonstrator described here. Accordingly, it is limited to the necessary functionality. In the future, our approach could be adopted in a complete digital twin implementation.

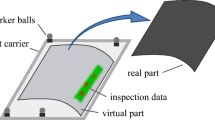

The main idea for addressing the deficiencies mentioned in the introduction is to take advantage of the known 3D geometry of the evaluated object and to draw the UT data directly into the texture of this virtual object. The required 3D data can be obtained from CAD data or, if unavailable, created using reverse engineering technologies such as 3D scanning. Our 3D model was obtained using a commercially available handheld 3D scanner. The virtual 3D model needs to be aligned with the real-world object so that the two overlap geometrically. This creates the link between the physical sample and its virtual representation, so the system implicitly knows the position of the tracked sensor in both the real world and the virtual space. Accordingly, the data can be drawn directly into the texture of the virtual object as a C- or D-scan fitted to the curvature of the tested object. Our approach therefore does not provide a complete UT tomography but a 3D-mapped representation of the inspection results.

To simulate a realistic testing environment, a helicopter tail structure made of carbon fiber reinforced plastics (CFRP) is used as the sample object. Depending on the investigated area, the structure consists of monolithic or sandwich CFRP.

3.2 Implementation

A prerequisite is that the 3D model has been UV unwrapped beforehand. UV unwrapping computes texture coordinates (UV coordinates) for the surface of the model so that a 2D texture can be applied [17]. Using these UV coordinates, every point on the 3D model surface can be assigned to a 2D point on the texture and, within the mapped regions, vice versa.
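The lookup from a surface point to a texture position can be illustrated with barycentric interpolation. In the demonstrator, the game engine supplies the UV coordinates through its collision detection [24]; the sketch below is only a minimal stand-in, assuming a triangle mesh with one UV pair per vertex, and all function names are hypothetical.

```python
"""Map a point on a triangle of a UV-unwrapped mesh to texture coordinates.

Sketch: express the surface point in barycentric coordinates of its
triangle, then use the same weights to interpolate the per-vertex UVs.
"""

from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
Vec2 = Tuple[float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def barycentric(p: Vec3, a: Vec3, b: Vec3, c: Vec3) -> Tuple[float, float, float]:
    """Barycentric weights (wa, wb, wc) of point p in triangle abc."""
    v0, v1, v2 = _sub(b, a), _sub(c, a), _sub(p, a)
    d00, d01, d11 = _dot(v0, v0), _dot(v0, v1), _dot(v1, v1)
    d20, d21 = _dot(v2, v0), _dot(v2, v1)
    denom = d00 * d11 - d01 * d01  # zero only for degenerate triangles
    wb = (d11 * d20 - d01 * d21) / denom
    wc = (d00 * d21 - d01 * d20) / denom
    return 1.0 - wb - wc, wb, wc


def surface_point_to_uv(p: Vec3, tri_xyz: Sequence[Vec3],
                        tri_uv: Sequence[Vec2]) -> Vec2:
    """Interpolate the UVs of the triangle's three vertices at surface point p."""
    wa, wb, wc = barycentric(p, *tri_xyz)
    (ua, va), (ub, vb), (uc, vc) = tri_uv
    return (wa * ua + wb * ub + wc * uc, wa * va + wb * vb + wc * vc)
```

Because the weights are shared between 3D and UV space, the same point lands at the corresponding position on the texture regardless of how the island is scaled or placed in the UV layout.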

The demonstrator consists of a spatial tracking system, UT equipment, a mixed reality headset (Fig. 1) and a PC running a game engine with MR capabilities [18]. Its modular design allows each software component to run on a separate machine. This helps with load balancing, which may be beneficial when integrating more complex sensor technologies such as phased array probes. Additionally, all software and hardware components (e.g., human–machine interfaces, UT hardware) can quickly be exchanged or reimplemented. In our experiment, the different software programs run on the same computer to simplify data transfer.

The first part of the demonstrator is a spatial tracking system. It is used to determine the positions and orientations of the UT sensor and the MR HMD with respect to a global coordinate system. We implemented two different tracking systems. As a proof of concept, we started with the consumer-grade HTC Vive Lighthouse system, which is used for home entertainment applications [19, 20]. After successfully testing this setup, we integrated a more capable system, the camera-based optical ARTTRACK5 tracking system [21]. It offers higher accuracy and more flexibility in terms of the number and placement of cameras, the number of tracked objects and data distribution. Both systems use infrared radiation emitted by base stations (e.g., cameras). Although their mechanisms for position calculation are fundamentally different, both require a continuous line of sight from the base station to the tracked object.

The second part of the demonstrator is a mixed reality headset. We use the Varjo XR-1 head-mounted display, which is suitable for both augmented and virtual reality applications [22]. Two front-facing cameras capture the surroundings, and their images can be integrated into the VR environment. This makes it possible to selectively hide parts of reality and to add virtual three-dimensional content.

To record NDT data, we used a UT-USB interface box (PCUS pro-Single) [23]. A 10 MHz UT sensor is directly attached to this device and operated in pulse-echo mode. The device can be configured with the provided .NET software development kit (SDK). The measurement data can be read as an array of float values of configurable length for further processing.

Prior to any NDT activities, the digital 3D model needs to be aligned with its real counterpart using the tracking system. This is currently done with the analog sticks of an Xbox controller. A more precise alignment can be achieved by entering the coordinates directly into the game engine. In our approach, the tail structure stays at a fixed position, which eliminates the need to track the sample and to continuously realign the real and virtual objects. Once the 3D model has been aligned and the UT hardware settings have been adjusted, the system can be switched to recording mode, in which it saves all measured UT data for further evaluation.
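Because the tail structure stays fixed, the alignment amounts to one constant rigid transform between the tracker frame and the model frame. The following sketch shows how a tracked probe pose could be expressed in model coordinates. The matrix convention (p_world = R p_local + t), the fixed tip offset in the marker frame and all names are illustrative assumptions; the demonstrator performs this step inside the game engine.

```python
"""Express the tracked UT sensor tip in the local frame of the aligned model.

Assumptions (hypothetical): the alignment is a rotation R (3x3, row-major)
and translation t with p_world = R @ p_local + t, and the tracker reports
the marker pose on the probe, with the tip at a fixed offset in the
marker's own frame.
"""

import math
from typing import List, Sequence

Mat3 = List[List[float]]


def mat_vec(m: Mat3, v: Sequence[float]) -> List[float]:
    return [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]


def transpose(m: Mat3) -> Mat3:
    return [[m[j][i] for j in range(3)] for i in range(3)]


def rot_z(angle_rad: float) -> Mat3:
    """Rotation about the z axis, used here only to build test poses."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def sensor_tip_world(marker_pos: Sequence[float], marker_rot: Mat3,
                     tip_offset: Sequence[float]) -> List[float]:
    """Tip position = marker position + marker rotation applied to the offset."""
    off = mat_vec(marker_rot, tip_offset)
    return [marker_pos[i] + off[i] for i in range(3)]


def world_to_model(p_world: Sequence[float], model_rot: Mat3,
                   model_pos: Sequence[float]) -> List[float]:
    """Invert p_world = R p_local + t; for a rotation matrix, R^-1 = R^T."""
    d = [p_world[i] - model_pos[i] for i in range(3)]
    return mat_vec(transpose(model_rot), d)
```

Using the transpose instead of a general matrix inverse is valid only because R is a pure rotation; a scaled or sheared alignment would need a full inverse.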

Signal processing runs in the UT front-end software (see Fig. 2, left), so the performance-critical part (the game engine) does not have to handle it. Optionally, the signal processing can be transferred to a separate machine, which can be useful for more complex data reconstruction methods such as 3D-SAFT (Synthetic Aperture Focusing Technique). First, the raw UT signal is acquired and processed using a Hilbert transform to generate the analytic signal, whose envelope is easy to evaluate. After that, triggers for configurable time gates can be defined. A particular trigger/time gate setup tagging the entry echo is used for coupling detection: it enables the system to recognize the dip in the entry echo amplitude caused by the couplant on the sensor tip.

The triggers/time gates can also be used to color-code the signal. In line with C- and D-scan image generation, this can be done in two ways: amplitude encoding for C-scans and time encoding for D-scans. The assigned false color is calculated using the HSV color model. The maximum signal value (C-scan) or the position of the maximum signal value (D-scan) within the time gate is normalized and then mapped linearly onto the hue H, where a normalized value of 0 corresponds to 120° (green) and a value of 1 to 360° (red). If multiple time gates are defined for color assignment, the first time gate that is triggered determines the color of the whole UT signal. The time gates are ordered by their start values and must not overlap. If no time gate is triggered, the signal color stays at its default value (120°, green).

The tagged and color-assigned signal is then serialized for transmission via the network interface. The compiled UDP (User Datagram Protocol) packet containing five time gates and 8192 sample values has a size of approx. 33 kB. One packet is generated for each UT pulse and sent to the game engine.
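The front-end chain described above (envelope via the Hilbert transform, first triggered time gate, hue between 120° and 360°) can be sketched as follows. This is an illustration under stated assumptions, not the UT front-end software: gate thresholds are taken as absolute envelope levels, C-scan values are normalized by a hypothetical `full_scale` amplitude, D-scan values by the gate length, and a plain DFT replaces an FFT to keep the example dependency-free.

```python
"""Sketch of the UT front-end steps: envelope, time gates and false color."""

import cmath
import colorsys
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple


def analytic_envelope(x: Sequence[float]) -> List[float]:
    """Magnitude of the analytic signal x + i*H{x} (frequency-domain method).

    A plain O(N^2) DFT keeps the sketch dependency-free; real code would
    use an FFT, e.g. scipy.signal.hilbert.
    """
    n = len(x)
    spec = [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]
    # Keep DC (and Nyquist for even n), double positive, drop negative frequencies.
    h = [0.0] * n
    h[0] = 1.0
    half = n // 2 if n % 2 == 0 else (n + 1) // 2
    for k in range(1, half):
        h[k] = 2.0
    if n % 2 == 0:
        h[n // 2] = 1.0
    z = [sum(spec[k] * h[k] * cmath.exp(2j * cmath.pi * k * t / n)
             for k in range(n)) / n for t in range(n)]
    return [abs(v) for v in z]


@dataclass
class TimeGate:
    start: int        # first sample index inside the gate
    end: int          # first sample index after the gate
    threshold: float  # envelope level that counts as "triggered"


def evaluate_gates(env: Sequence[float], gates: Sequence[TimeGate],
                   mode: str = "C", full_scale: float = 1.0) -> Optional[float]:
    """Normalized value of the first triggered gate, or None if none fires.

    mode "C": peak amplitude inside the gate (amplitude encoding).
    mode "D": position of the peak inside the gate (time encoding).
    """
    ordered = sorted(gates, key=lambda g: g.start)
    for prev, nxt in zip(ordered, ordered[1:]):
        if nxt.start < prev.end:
            raise ValueError("time gates must not overlap")
    for gate in ordered:
        window = list(env[gate.start:gate.end])
        if not window:
            continue  # gate lies outside the recorded signal
        peak = max(window)
        if peak < gate.threshold:
            continue
        if mode == "C":
            return min(peak / full_scale, 1.0)
        return window.index(peak) / max(len(window) - 1, 1)
    return None


def signal_color(value: Optional[float]) -> Tuple[float, float, float]:
    """RGB for hue 120 deg (green, value 0) up to 360 deg (red, value 1)."""
    if value is None:
        value = 0.0  # no gate triggered: keep the default green
    hue_deg = 120.0 + 240.0 * value
    return colorsys.hsv_to_rgb((hue_deg / 360.0) % 1.0, 1.0, 1.0)
```

Because the hue runs from 120° through blue and violet to 360°, intermediate values appear in blue and violet tones. The O(N²) DFT is only practical for short test signals; for 8192-sample A-scans at pulse rate an FFT-based implementation would be needed.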

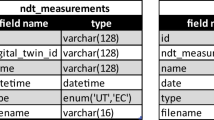

The format used to serialize the UT data for transmission to the game engine is described in Tables 1 and 2.
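Tables 1 and 2 define the actual packet format. Since they are not reproduced here, the following sketch uses a hypothetical little-endian layout (header, per-gate records, float32 samples) only to show how such a packet can be packed with Python's `struct` module and why 8192 samples lead to roughly 33 kB: under the float32 assumption, the samples alone occupy 8192 × 4 B = 32,768 B.

```python
"""Pack/unpack a UT packet in a HYPOTHETICAL layout (not Tables 1 and 2)."""

import struct
from typing import List, Sequence, Tuple

HEADER = struct.Struct("<IBB3f")  # n_samples, n_gates, coupled flag, RGB color
GATE = struct.Struct("<3fB")      # start, end, threshold, triggered flag
MAX_UDP_PAYLOAD = 65_507          # payload limit of one IPv4 UDP datagram

Gate = Tuple[float, float, float, bool]


def pack_ut_packet(samples: Sequence[float], gates: Sequence[Gate],
                   coupled: bool, rgb: Tuple[float, float, float]) -> bytes:
    parts = [HEADER.pack(len(samples), len(gates), int(coupled), *rgb)]
    for start, end, threshold, triggered in gates:
        parts.append(GATE.pack(start, end, threshold, int(triggered)))
    parts.append(struct.pack(f"<{len(samples)}f", *samples))  # float32 samples
    packet = b"".join(parts)
    if len(packet) > MAX_UDP_PAYLOAD:
        raise ValueError("packet does not fit into a single UDP datagram")
    return packet


def unpack_ut_packet(packet: bytes):
    n_samples, n_gates, coupled, r, g, b = HEADER.unpack_from(packet, 0)
    offset = HEADER.size
    gates: List[Gate] = []
    for _ in range(n_gates):
        start, end, threshold, triggered = GATE.unpack_from(packet, offset)
        gates.append((start, end, threshold, bool(triggered)))
        offset += GATE.size
    samples = list(struct.unpack_from(f"<{n_samples}f", packet, offset))
    return samples, gates, bool(coupled), (r, g, b)
```

A packet built this way could be sent with `socket.sendto(packet, (host, port))` on a `SOCK_DGRAM` socket. With 8192 samples and five gates, this layout yields 18 + 5 × 13 + 32,768 = 32,851 bytes, dominated by the samples.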

In the game engine, the virtual representation of the UT tool is colored in the assigned signal color. This happens regardless of the coupling detection tag, so that the operator gets visual feedback that the network connection is working (e.g., for troubleshooting).

Next, the coupling detection trigger is evaluated. If the corresponding field in the data packet does not indicate successful coupling, the signal is discarded. To limit the number of recorded data points per sensor position, the system then calculates the distance to the closest data point that has already been recorded. The signal passes this proximity test, and is evaluated further, only if the distance exceeds an adjustable threshold. Finally, the game engine runs a collision detection test: the collision criterion is satisfied if the UT sensor tip is close to a point on the surface of the virtual 3D model; otherwise, the signal is discarded as well. This test filters out false positives that passed the previous steps. Such false positives may occur if couplant remaining on the UT sensor damps the entry echo.
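The three acceptance tests can be summarized as a small filter chain. In the sketch below, `closest_surface_point` is a hypothetical stand-in for the game engine's collision query, distances are in metres, and the order of the checks follows the text.

```python
"""Hypothetical filter chain: coupling -> proximity -> collision."""

import math
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

Point = Tuple[float, float, float]


@dataclass
class SpotRecorder:
    min_spacing: float                               # proximity threshold (m)
    max_surface_dist: float                          # collision tolerance (m)
    closest_surface_point: Callable[[Point], Point]  # stand-in for engine query
    recorded: List[Point] = field(default_factory=list)

    def accept(self, tip: Point, coupled: bool) -> bool:
        """Return True (and store the spot) if the signal passes all tests."""
        # 1) Coupling detection: the entry-echo gate must report coupling.
        if not coupled:
            return False
        # 2) Proximity test: reject if a recorded spot lies within min_spacing.
        if any(math.dist(tip, p) <= self.min_spacing for p in self.recorded):
            return False
        # 3) Collision test: the tip must be close to the model surface.
        if math.dist(tip, self.closest_surface_point(tip)) > self.max_surface_dist:
            return False
        self.recorded.append(tip)
        return True
```

The linear scan over recorded spots keeps the sketch short; with many thousands of spots, a spatial hash or k-d tree would make the proximity test cheaper.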

Once the signal has passed these three tests, the assigned color is drawn into the texture of the 3D model using the UV texture coordinates, which are provided by the collision detection data structures of the game engine [24]. The base element for drawing into the texture is a sprite shaped as a symmetric 2D Gaussian, i.e., the color intensity/opacity of the drawn spot decreases radially [25]. The base color of the 3D model texture is fully transparent, so that in an AR view only the UT measurement data is shown. An additional background layer may be applied to achieve a semi-transparent rendering of the whole sample.
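Drawing a measurement spot can be sketched as alpha compositing of a radially decaying Gaussian into an RGBA texture that starts fully transparent. Instead of stamping a sprite image as the demonstrator does [25], this hypothetical helper evaluates the Gaussian per texel; it assumes straight (non-premultiplied) alpha and that texture row 0 corresponds to v = 0, a convention that differs between engines.

```python
"""Alpha-blend a Gaussian measurement spot into an RGBA texture (sketch)."""

import math
from typing import List, Tuple

Texture = List[List[List[float]]]  # rows of [r, g, b, a] texels


def new_texture(width: int, height: int) -> Texture:
    """Fully transparent texture (alpha = 0), as used for the AR view."""
    return [[[0.0, 0.0, 0.0, 0.0] for _ in range(width)] for _ in range(height)]


def draw_gaussian_spot(tex: Texture, u: float, v: float,
                       rgb: Tuple[float, float, float], sigma_px: float) -> None:
    """Composite a spot centred at UV (u, v) in [0, 1]^2 over the texture."""
    height, width = len(tex), len(tex[0])
    cx, cy = u * (width - 1), v * (height - 1)
    radius = int(math.ceil(3 * sigma_px))  # ignore the tail beyond 3 sigma
    for y in range(max(0, int(cy) - radius), min(height, int(cy) + radius + 2)):
        for x in range(max(0, int(cx) - radius), min(width, int(cx) + radius + 2)):
            r2 = (x - cx) ** 2 + (y - cy) ** 2
            alpha = math.exp(-r2 / (2 * sigma_px ** 2))  # opacity falls off radially
            px = tex[y][x]
            # Porter-Duff "over": spot color over the existing texel.
            out_a = alpha + px[3] * (1 - alpha)
            if out_a > 0:
                for c in range(3):
                    px[c] = (rgb[c] * alpha + px[c] * px[3] * (1 - alpha)) / out_a
            px[3] = out_a
```

Compositing with "over" means that repeated spots in the same area converge to the newest color while the edges of isolated spots stay semi-transparent.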

All signal processing is done in real time, so the inspector gets immediate feedback.

4 Results

4.1 Overview

We have built a system that is capable of drawing UT measurement data onto a 3D model in real time. Inspired by the concept of a digital twin, we established a relation between the NDT/UT data and the underlying 3D model. The recorded results contain the UT data and the corresponding positions with respect to the virtual representation of the CFRP structure.

Fig. 3 shows an example result on the CFRP tail structure of a helicopter. While large parts of the fuselage are made of a CFRP sandwich structure, the inner stringers and frames are monolithic.

The color assignment is based on depth, similar to D-scan image generation. In the right part of Fig. 3, the thin monolithic areas are drawn in violet, whereas the thicker sandwich areas are drawn in green.

With the described system setup, viewing the results is no longer limited to two-dimensional images. Because the NDT data is combined with spatial information, three-dimensional representations can be created. They can be viewed from any angle, including from the back side, which makes the NDT results much more intuitive to interpret.

Figure 5 shows the inspection process in a VR view, including the coloring of the virtual UT sensor tool described in Sect. 3.2.

4.2 System Performance

The workstation laptop used is equipped with an Intel Core i9 CPU, 64 GB of RAM and an Nvidia RTX 2080 SUPER GPU. The UDP stream is sent over the loopback network interface of this machine.

The 3D model is colored in real time without any noticeable lag. UT signal recording and time gate processing, without transfer to the game engine, take approx. 2.5 ms (2048 sample values, 3 time gates). For the whole data path from recording to drawing a colored spot into the 3D model texture, the delay varies stochastically within a time window defined by the frame rate of the game engine. This is caused by the current implementation: the collision detection step (see Fig. 2) is performed during the frame calculation and is therefore synchronized with the actual frame rate, whereas the UT signal measurement runs unsynchronized in a separate process. The resulting delay thus depends on the exact moment at which a UT signal arrives at the game engine relative to the frame calculation. This can also be seen in Fig. 6: the delays are almost evenly distributed between a few milliseconds and approx. 30 ms. This 30 ms value corresponds to the average frame rate of slightly above 30 frames per second during this specific experiment. This flat distribution only applies if the frame rate is higher than the signal measurement rate; otherwise, short delays dominate because multiple signals arrive per frame. If the UT measurement is done on a separate machine, an additional network delay is added.
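The flat delay distribution follows from the UT measurement being unsynchronized with the frame clock. The simulation below is a simplified model, not a measurement: it assumes a constant front-end time of 2.5 ms (the value quoted above for 2048 samples), at most one signal per frame, and random signal phases relative to the frame clock. The function name and parameters are hypothetical.

```python
"""Simulate measurement-to-drawing delays when drawing waits for the next frame."""

import random
from typing import List


def simulate_delays(frame_rate_hz: float, n_signals: int,
                    processing_s: float = 0.0025, seed: int = 0) -> List[float]:
    """Delay from UT measurement until the next frame processes the signal.

    Simplifying assumptions: constant front-end processing time, random
    arrival phase relative to the frame clock, at most one signal per frame.
    """
    rng = random.Random(seed)
    period = 1.0 / frame_rate_hz
    delays = []
    for _ in range(n_signals):
        measured_at = rng.uniform(0.0, 1000.0)           # random phase vs. frames
        ready_at = measured_at + processing_s            # packet reaches the engine
        next_frame = (ready_at // period + 1.0) * period  # next collision/draw step
        delays.append(next_frame - measured_at)
    return delays
```

Under these assumptions, each delay lies between the processing time and the processing time plus one frame period, spread roughly uniformly; at 30 fps this reproduces the flat shape described for Fig. 6, although not its exact bounds.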

In the current configuration (2048 sample values, 3 time gates), the UT front-end software needs a bandwidth of 2.1 Mbit/s to send the UT UDP stream to the game engine. Table 3 shows the measured data rates of the software as a function of the signal length:

Although the UT data sent via UDP is not yet optimized (e.g., by compression), an average internet connection is sufficient for real-time data transfer with the configuration of 2048 sample values mentioned above.
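As a rough plausibility check, assuming 32-bit float samples (4 bytes each) and neglecting header overhead, the packet sizes and the stated bandwidth relate as follows. The implied packet rate in the last line is an inference from these numbers, not a value measured in this work:

```latex
\begin{aligned}
S_{8192} &\approx 8192 \times 4\,\mathrm{B} = 32\,768\,\mathrm{B} \approx 33\,\mathrm{kB},\\
S_{2048} &\approx 2048 \times 4\,\mathrm{B} = 8192\,\mathrm{B} = 65\,536\,\mathrm{bit},\\
f_{\mathrm{packet}} &\approx \frac{2.1 \times 10^{6}\,\mathrm{bit/s}}{65\,536\,\mathrm{bit}} \approx 32\,\mathrm{s}^{-1}.
\end{aligned}
```

The first line agrees with the approx. 33 kB quoted for 8192 samples; under the same assumption, the required bandwidth grows linearly with the number of samples per A-scan.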

During the measurement, the game engine runs at a constant refresh rate of 60 frames per second, which results in a smooth display of the virtual content. The frame rate is capped at 60 fps because vertical synchronization is enabled. It remains stable when additional calculations are performed to draw new measurement spots. We ran performance tests drawing up to 11,000 measurement spots into an underlying 3D model consisting of 482,764 triangles.

The manufacturer of the tracking system states an accuracy of at least 0.75 mm/m with a standard deviation of 0.22 mm for a setup comparable to ours. For the whole tail structure volume this results in a calculated accuracy of approx. 4.8 mm (structure volume approx. 5 m × 2.6 m × 2.2 m) [26].
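One reading that reproduces the stated figure assumes that the per-metre accuracy is applied to the space diagonal of the bounding volume and that the standard deviation is added on top; the exact procedure in [26] may differ:

```latex
\begin{aligned}
d &= \sqrt{(5\,\mathrm{m})^2 + (2.6\,\mathrm{m})^2 + (2.2\,\mathrm{m})^2} = \sqrt{36.6}\,\mathrm{m} \approx 6.05\,\mathrm{m},\\
\varepsilon &\approx 0.75\,\tfrac{\mathrm{mm}}{\mathrm{m}} \times 6.05\,\mathrm{m} + 0.22\,\mathrm{mm} \approx 4.54\,\mathrm{mm} + 0.22\,\mathrm{mm} \approx 4.8\,\mathrm{mm}.
\end{aligned}
```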

5 Discussion

The overall goal of our project is to improve NDT workflows and the explanatory power of NDT by using new digital technologies that are part of Industry 4.0. This is necessary to create the smart inspection systems proposed by NDE 4.0. The presented demonstrator shows many ways in which NDT processes can be changed and improved, not only from the inspector’s point of view but also with regard to how the data is processed and used.

The potential benefits can be divided into two main areas:

The first area concerns the inspector and the execution of the testing itself. Currently, only a few technology prototypes, such as the 3D-SmartInspect system by Fraunhofer IZFP, dynamically assist the inspector during their work [3]. In traditional inspection procedures, areas that have already been evaluated need to be marked manually, e.g., by drawing lines or dots with a permanent marker. The described setup instead gives the inspector clear visual feedback, with the NDT result displayed at the correct position on the sample. This reduces the cognitive load mentioned in the introduction. Additionally, areas that have not yet been inspected are easy to identify. To further simplify the interpretation of the results, the inner structure can be displayed as a semi-transparent overlay during the actual inspection. The evaluation during and after the inspection is considerably simplified because the underlying geometrical structures and composites can be taken into account. Together, these points make the testing process considerably more intuitive, both for performing the inspection and for interpreting the results.

Beyond the benefits for the inspection itself, the approach has further potential. Because the NDT data is already combined with the digital representation of the physical object, it provides a basis for integration into Industry 4.0 processes, e.g., life cycle management (LCM) or maintenance, repair and overhaul (MRO). All data is available in digital form and can easily be accessed for various purposes not yet associated with NDT applications. In one potential use case, the spatial coordinates of the defects could be used to automatically design a milling pattern and the corresponding patch geometry for the repair of CFRP structures [27].

5.1 Requirements

In order to realize the described demonstrator, some basic requirements have to be fulfilled.

The presented approach requires a 1:1 3D model of the object to be evaluated. Such 3D models from CAD data may not always be available. Alternatively, reverse engineering technologies such as 3D scanning allow the required 3D data to be generated on-site, and the necessary UV unwrapping may be automated. Depending on the size of the real sample and the scanning device, generating the 3D model may take a considerable amount of time.

Besides the 3D model data, the presented approach requires accurate spatial tracking of the UT sensor and the evaluated object. For inspection sites without a permanently installed tracking system, mobile solutions for ad-hoc use that do not require complex installation are available today as commercial off-the-shelf (COTS) products. Outside a laboratory environment, additional challenges may arise depending on the tracking technology, e.g., interfering infrared radiation, dirt or climatic conditions.

5.2 Limitations

In the following, we discuss some of the limitations we found while working with the demonstrator.

5.2.1 HMD

To cover both AR and VR environments, the demonstrator uses the Varjo XR-1 mixed reality headset. While working with this setup, we found that MR is in general the most suitable visualization technology for NDT, although the MR HMD used is not ideal for inspection. This assessment is based on the following issues: the range and movement area are limited because a permanent cable connection to the workstation is required, and the display unit is too bulky and too heavy for longer NDT inspections.

As the operator has to interact with the real test sample, pure virtual reality is not suitable, because the surrounding reality is cut out. We expect augmented reality headsets to be the better alternative for displaying the NDT result data during the inspection.

VR HMDs are suitable for evaluating the NDT data after the inspection, which is also beneficial when the examiner does not have access to the physical object. The evaluation usually takes less time than the inspection, so the ergonomic drawbacks that matter during inspection can be neglected.

5.2.2 Edge Effects

Unwrapping a 3D model to 2D may separate polygon edges that are actually connected on the 3D model. Measurement spots drawn into the texture near such edges therefore do not cover all relevant polygons, which may produce sharp edges in the rendering of the 3D model.

Figure 7 visualizes this problem. It shows a cube with its UV-unwrapped faces A to F. As described above, a measurement spot is drawn in a circular area around the actual sensor position using a Gaussian intensity distribution. Here, a measurement spot is drawn into face D close to its edge with face A. In the UV unwrapping scheme, A and D do not share an edge, so the overlapping part of the measurement spot is not drawn into A. For faces that share an edge in the UV layout, such as A and C, the overlapping part of the spot is automatically drawn correctly into A.

This effect can be minimized or prevented in different ways:

The UV unwrapping may be modified if edge effects occur in the evaluated surface area. The drawback of this procedure is that it will likely require multiple UV mappings for a single 3D model.

In the second method, the polygon edges adjacent to a measurement spot are tested for contact with the spot when UT data is drawn into the texture. Using additional information stored from the UV mapping, touched edges can be identified as disconnected edges, and the UV mapping then yields the relevant polygons on the other side. This procedure is used by 3D texturing software.
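The second method can be sketched in UV space: find the seam edges a spot touches, then redraw the spot in the twin island using the similarity transform that maps one copy of the edge onto the other. The seam table (`seam_partner`), the edge orientation convention and all names are hypothetical; real texturing tools store this adjacency in their own data structures.

```python
"""Detect UV seam edges touched by a spot and transfer the spot across them."""

import math
from typing import Dict, List, Tuple

UV = Tuple[float, float]
Edge = Tuple[UV, UV]


def point_segment_distance(p: UV, a: UV, b: UV) -> float:
    """Euclidean distance from point p to the segment a-b in UV space."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    len_sq = dx * dx + dy * dy
    if len_sq == 0.0:
        return math.hypot(p[0] - a[0], p[1] - a[1])
    t = ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / len_sq
    t = max(0.0, min(1.0, t))  # clamp the projection onto the segment
    return math.hypot(p[0] - (a[0] + t * dx), p[1] - (a[1] + t * dy))


def seam_edges_touched(center: UV, radius: float, edges: List[Edge],
                       seam_partner: Dict[Edge, Edge]) -> List[Tuple[Edge, Edge]]:
    """Seam edges within the spot radius, each paired with its twin edge."""
    return [(e, seam_partner[e]) for e in edges
            if e in seam_partner and point_segment_distance(center, *e) <= radius]


def transfer_across_seam(p: UV, edge: Edge, twin: Edge) -> Tuple[UV, float]:
    """Map a UV point near `edge` into the frame of its twin edge.

    Assumes twin[0] and edge[0] are the same 3D vertex and both UV islands
    share the same winding (no mirrored UVs). Returns the mapped point and
    the UV scale ratio, which should also be applied to the spot radius.
    """
    (ax, ay), (bx, by) = edge
    (a2x, a2y), (b2x, b2y) = twin
    dx, dy = bx - ax, by - ay
    d2x, d2y = b2x - a2x, b2y - a2y
    length, length2 = math.hypot(dx, dy), math.hypot(d2x, d2y)
    rx, ry = p[0] - ax, p[1] - ay
    t = (rx * dx + ry * dy) / (length * length)  # position along the edge
    s = (dx * ry - dy * rx) / length             # signed offset, > 0 means left
    scale = length2 / length
    nx, ny = -d2y / length2, d2x / length2       # unit left normal of the twin
    mapped = (a2x + t * d2x + s * scale * nx, a2y + t * d2y + s * scale * ny)
    return mapped, scale
```

The spot is then drawn a second time at the mapped position with its radius multiplied by the returned scale, so that the part overlapping the seam also appears in the neighboring island (e.g., face A in the cube example).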

5.2.3 Size Scaling of the Measurement Spots Needs to be Done Manually

The size of a drawn measurement spot should match the actual resolution of the UT sensor. It depends on various factors, e.g., sensor geometry and sensor frequency. When drawing the measurement spot into the texture, the ratio between the real object size and the UV scale needs to be taken into account.
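The conversion from a physical spot radius to texels can be sketched from the area ratio of a triangle in UV and 3D space. This assumes an approximately isotropic (stretch-free) UV mapping and a square texture of `texture_px` pixels; choosing the physical radius from sensor geometry and frequency is left to the user, as in the demonstrator. All names are hypothetical.

```python
"""Scale a physical spot radius to texels for a given mesh triangle."""

import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
Vec2 = Tuple[float, float]


def triangle_area_3d(a: Vec3, b: Vec3, c: Vec3) -> float:
    ux, uy, uz = (b[i] - a[i] for i in range(3))
    vx, vy, vz = (c[i] - a[i] for i in range(3))
    cx, cy, cz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    return 0.5 * math.sqrt(cx * cx + cy * cy + cz * cz)


def triangle_area_uv(a: Vec2, b: Vec2, c: Vec2) -> float:
    return 0.5 * abs((b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1]))


def spot_radius_texels(radius_m: float, tri_xyz: Sequence[Vec3],
                       tri_uv: Sequence[Vec2], texture_px: int) -> float:
    """Physical radius (m) -> radius in texels on this triangle's UV island."""
    # UV units per metre, assuming the mapping stretches equally in all directions.
    uv_per_m = math.sqrt(triangle_area_uv(*tri_uv) / triangle_area_3d(*tri_xyz))
    return radius_m * uv_per_m * texture_px
```

For example, a 3 mm radius on a triangle whose island is mapped at 2.5 UV units per metre in a 2048-pixel texture gives about 15.4 texels. Because UV density usually varies across the model, the conversion has to be done per triangle rather than once for the whole texture.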

6 Outlook and Future Work

With the demonstrator, we have shown that the technical integration of XR technologies into NDT processes is feasible. Drawing into the texture of a 3D model allows the results to be displayed directly on the examined object in real time. This makes handling very intuitive, eases the interpretation of the results and consequently reduces the cognitive load.

Although we have shown general feasibility, further investigation of accuracy in the context of NDT is required. This includes the accuracy of both the tracking system and the data processing. A digital representation always deviates to some extent from the real object, in our case in its geometry, which may also affect the accuracy of the texture mapping.

For further development, we aim to focus on three main areas: usability, system improvements and system extensions.

6.1 Usability

At the moment, the usability of the prototype is not optimized for the actual NDT process. All NDT settings must be configured using standard input methods directly at the workstation running the software. Customized user input interfaces for AR/VR applications are not yet integrated, although they are necessary for a smooth NDT workflow. Future research will therefore investigate various human–machine interfaces (HMI) for user input.

Additionally, the 3D model currently has to be aligned manually with the real-world object at the workstation PC. The first step to simplify this process will be the integration of a three-point calibration: the user marks three unique positions on the real object and their corresponding positions on the 3D model, and the computer then uses regression algorithms to calculate the spatial transformation of the virtual model for an optimized alignment. A more complex approach would be to use the optical and depth information generated by the stereo cameras already integrated in many XR HMDs. Using deep learning technologies, these data could enable automatic alignment [28].
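The planned three-point calibration can be sketched in closed form by building an orthonormal frame from the three points on each side and composing the two frames (a TRIAD-style construction). This is a swapped-in technique for illustration: it is exact only for noise-free point pairs, whereas the regression mentioned above would typically be a least-squares fit (e.g., Kabsch/Umeyama) over three or more noisy picks. Names are hypothetical; the result uses the convention p_world = R p_model + t.

```python
"""Closed-form rigid alignment from three corresponding, non-collinear points."""

import math
from typing import List, Sequence, Tuple

Vec = Tuple[float, float, float]


def _sub(a: Sequence[float], b: Sequence[float]) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _unit(a: Vec) -> Vec:
    n = math.sqrt(a[0] ** 2 + a[1] ** 2 + a[2] ** 2)  # zero if points collinear
    return (a[0] / n, a[1] / n, a[2] / n)


def frame(p1: Vec, p2: Vec, p3: Vec) -> List[Vec]:
    """Orthonormal axes (as rows) spanned by three non-collinear points."""
    x = _unit(_sub(p2, p1))
    z = _unit(_cross(x, _sub(p3, p1)))
    y = _cross(z, x)
    return [x, y, z]


def three_point_alignment(model_pts: Sequence[Vec], world_pts: Sequence[Vec]):
    """Rotation R (row-major) and translation t with world = R @ model + t."""
    fm, fw = frame(*model_pts), frame(*world_pts)
    # R = Fw^T Fm: express in the model frame, then rebuild in the world frame.
    R = [[sum(fw[k][i] * fm[k][j] for k in range(3)) for j in range(3)]
         for i in range(3)]
    m0 = model_pts[0]
    rm0 = [sum(R[i][j] * m0[j] for j in range(3)) for i in range(3)]
    t = [world_pts[0][i] - rm0[i] for i in range(3)]
    return R, t
```

With noisy picks, the first point fully determines the translation and the first two the main axis, so errors are not averaged out; this is the main reason a least-squares fit is preferable in practice.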

6.2 System Improvements

As mentioned before, the used MR headset has some disadvantages regarding the comfort during the inspection. To address this fact, we plan to integrate pure AR into the inspection process and add a pure VR mode for the evaluation of the NDT data.

In the previous section we have shown that the existing edge effects may negatively influence the display of the NDT results. To enhance the precision, we plan to integrate further calculations to reduce the described impact.

In the current version of the demonstrator, data is only recorded if it passes the coupling detection test, the proximity test and the collision detection test. In addition to its original function of limiting the data points per sensor position, we want to use the proximity test as a form of examination grid [29]. For this purpose, the threshold needs to be adjusted to the sensor resolution, which should reduce the risk of missing small defects.

The measured delays from UT signal recording to drawing a spot into the 3D model texture are statistically distributed over a range of about 30 ms. To narrow this range and thus make the system more deterministic, either the UT measurement should be synchronized with the frame calculation or the collision detection step must be performed independently of it.

Additionally, we aim to work with smaller and movable samples. For those objects, we want to achieve more flexibility in handling by tracking these as well. This ensures that the link to their virtual representation remains, even if the samples are repositioned.

6.3 System Extensions

Using our approach, all ultrasonic inspection data is available in digital form. The consolidation of all referenced data can be described as a 3D digital representation of an inspection. On the one hand, this includes the UT settings and UT signals; on the other hand, it includes the position data (coordinates and orientation) of the sensor and the inspector as well as the 3D model of the sample. A possible future application is to store all this information in a cloud service and distribute it over a computer network in real time. Accordingly, we plan to implement a web service to store all acquired data. With this technique, we want to make it possible to supervise a UT inspection from a remote location and to provide a new kind of remote assistance. In contrast to established remote assistance systems based on video streams, we want to realize an ergonomic and intuitive experience in a mixed reality environment [30, 31]. Storing the NDT data in a cloud-like environment also gives us the opportunity to display past NDT results and compare them with the current inspection data.

Other possible extensions are the integration of a phased array sensor and the (semi-)automation of the inspection process. Further NDT modalities such as ET are planned to be integrated for research on data fusion technologies.

Data Availability

The datasets generated and/or analyzed during the current study are available from the corresponding author on reasonable request.

Code Availability

The source code generated and/or analyzed during the current study is available from the corresponding author on reasonable request.

References

[1] Ewert, U., Redmer, B., Kreutbruck, M., et al.: Quantitative tomographic non-destructive testing for flaw size measurement and life time prediction. In: European Conference on Fracture (ECF) (2010)

[2] Vrana, J., Schörner, K., Mooshofer, H., et al.: Ultrasonic computed tomography: pushing the boundaries of the ultrasonic inspection of forgings. Steel Res. Int. 89, 1700448 (2018). https://doi.org/10.1002/srin.201700448

[3] Valeske, B., Osman, A., Römer, F., et al.: Next generation NDE sensor systems as IIoT elements of Industry 4.0. Res. Nondestr. Eval. 31, 340–369 (2020). https://doi.org/10.1080/09349847.2020.1841862

[4] Vrana, J., Singh, R.: NDE 4.0 – a design thinking perspective. J. Nondestruct. Eval. 40, 8 (2021). https://doi.org/10.1007/s10921-020-00735-9

[5] Vrana, J.: The core of the fourth revolutions: industrial internet of things, digital twin, and cyber-physical loops. J. Nondestruct. Eval. (2021). https://doi.org/10.1007/s10921-021-00777-7

[6] Kuhn, T.: Digitaler Zwilling. Informatik Spektrum 40, 440–444 (2017). https://doi.org/10.1007/s00287-017-1061-2

[7] Ramm, S., Wache, H., Dinter, B., et al.: Der kollaborative digitale Zwilling. Zeitschrift für wirtschaftlichen Fabrikbetrieb 115, 94–96 (2020). https://doi.org/10.3139/104.112319

[8] Dini, G., Mura, M.D.: Application of augmented reality techniques in through-life engineering services. Procedia CIRP 38, 14–23 (2015). https://doi.org/10.1016/j.procir.2015.07.044

[9] de Pace, F., Manuri, F., Sanna, A.: Augmented reality in Industry 4.0. Am. J. Comput. Sci. Inform. Technol. 6, 17 (2018)

[10] Casari, F., Navab, N., Hruby, L., et al.: Augmented reality in orthopedic surgery is emerging from proof of concept towards clinical studies: a literature review explaining the technology and current state of the art. Curr. Rev. Musculoskelet. Med. 14, 192–203 (2021). https://doi.org/10.1007/s12178-021-09699-3

[11] García-Vázquez, V., von Haxthausen, F., Jäckle, S., et al.: Navigation and visualisation with HoloLens in endovascular aortic repair. Innov. Surg. Sci. 3, 167–177 (2018). https://doi.org/10.1515/iss-2018-2001

[12] Schickert, M., Koch, C., Bonitz, F.: Prospects for integrating augmented reality visualization of nondestructive testing results into model-based infrastructure inspection. In: NDE/NDT for Highways & Bridges: SMT (2018)

[13] Meyer, J., Rehbein, J., de Freese, J., et al.: Visualisation of ultrasonic testing data using augmented reality. In: 7th International Symposium on NDT in Aerospace (2015)

[14] Deppe, R., Nemitz, O., Herder, J.: Augmented reality for supporting manual non-destructive ultrasonic testing of metal pipes and plates. In: Virtuelle und Erweiterte Realität – 15. Workshop der GI-Fachgruppe VR/AR, pp. 45–52 (2018). https://doi.org/10.2370/9783844062151

[15] Fraunhofer IZFP: 3D-SmartInspect: Intelligente Prüfüberwachung und -dokumentation durch optisches Trackingsystem für Ultraschall- und Wirbelstromprüfung (2017). https://www.izfp.fraunhofer.de/content/dam/izfp/de/documents/aktuelles/2017/3D-SmartInspect-de.pdf. Accessed 22 Sep 2021

[16] Prado, G., Silva, P., Simomura, F., et al.: SHM with augmented reality for aircraft maintenance. In: 31st Congress of the International Council of the Aeronautical Sciences (2018)

[17] Beck, T.: Blender 2.7: Das umfassende Handbuch, 2., aktualisierte und erweiterte Auflage. Rheinwerk Design. Rheinwerk Verlag, Bonn (2018)

[18] Unity Technologies: Unity Pro (2021). https://store.unity.com/de/products/unity-pro. Accessed 16 Sep 2021

[19] HTC Corporation: VIVE Pro (2021). https://www.vive.com/de/product/vive-pro/. Accessed 16 Sep 2021

[20] Valve Corporation: SteamVR (2021). https://store.steampowered.com/app/250820/SteamVR/. Accessed 16 Sep 2021

[21] Advanced Realtime Tracking GmbH & Co. KG: ARTTRACK5 (2021). https://ar-tracking.com/en/product-program/arttrack5. Accessed 16 Sep 2021

[22] Varjo HQ (2021). https://varjo.com. Accessed 16 Sep 2021

[23] Fraunhofer IKTS: PCUS pro Single (2016). Accessed 13 Sep 2021

[24] Unity Technologies: Unity - Scripting API: Physics.Raycast (2022). https://docs.unity3d.com/ScriptReference/Physics.Raycast.html. Accessed 09 Feb 2022

[25] Shimodaira, H.: Gaussians, University of Edinburgh (2020)

[26] Advanced Realtime Tracking GmbH & Co. KG: Accuracy of an ART Tracking System (2020)

[27] Holtmannspötter, J., von Czarnecki, J., Feucht, F., et al.: On the fabrication and automation of reliable bonded composite repairs. J. Adhes. 91, 39–70 (2015). https://doi.org/10.1080/00218464.2014.896211

[28] Advanced Realtime Tracking GmbH & Co. KG: Products in connection with the software CAPTA (2021). https://www.ar-tracking.com/en/product-program/products-connection-software-capta. Accessed 22 Sep 2021

[29] Vrana, J.: Determination of an optimal examination grid for the automated ultrasonic inspection of heavy rotor forgings. In: 11th European Conference on Non-Destructive Testing (ECNDT) (2014)

[30] Meyendorf, N., Schallert, R., Pal, S., et al.: Using remote NDE, including external experts in the inspection process, to enhance reliability and address todays NDE challenges. In: 7th European–American Workshop on Reliability of NDE (2017)

[31] Westerkamp, C., Behrens, R., Kolesnikow, A., et al.: Online maintenance assistance (OMA) – remote control of service and lab experiments. In: International Conference on Remote Engineering and Virtual Instrumentation (REV), pp. 353–356 (2014)

Funding

Open Access funding enabled and organized by Projekt DEAL. The authors have no relevant financial interests to disclose.

Ethics declarations

Conflict of interest

The authors have no relevant non-financial interests to disclose.

Ethics Approval

All ethical COPE guidelines have been considered.

Informed Consent

All authors consent to participate in this work.

Consent for Publication

All authors consent to publish this work.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Rehbein, J., Lorenz, SJ., Holtmannspötter, J. et al. 3D-Visualization of Ultrasonic NDT Data Using Mixed Reality. J Nondestruct Eval 41, 26 (2022). https://doi.org/10.1007/s10921-022-00860-7

DOI: https://doi.org/10.1007/s10921-022-00860-7