Abstract

Interviewers in face-to-face surveys can introduce bias in both the recruitment and the measurement phase. One reason is that the measurement of subjective well-being (SWB) has been found to be associated with social desirability bias: respondents tend to tailor their responses in the presence of others, for instance by presenting a more positive image of themselves instead of reporting their true attitude. In this study, we investigated the role of interviewers in the measurement of happiness. We were particularly interested in whether the interviewer’s happiness correlates with the respondent’s happiness. Our data come from a face-to-face survey conducted in Hungary, which recorded the attitudes of both respondents and interviewers. The results of the multilevel regression models showed that interviewers account for a significant amount of the variance in respondents’ answers, even after controlling for a range of respondent, interviewer, and settlement characteristics. We also found that respondents were more likely to report a happy personality when interviewed by an interviewer with a happy personality. We argue that as long as interviewers are involved in the collection of SWB measures, further interviewer training that raises awareness of personality traits, self-expression, neutrality, and unjustified positive confirmations is essential.


Face-to-face surveys are still one of the main methods of measuring happiness. In contrast to self-administered modes, interviewers (Note 1) may introduce various errors, such as coverage error, nonresponse error, or measurement error (West & Blom, 2016). For that reason, survey methodologists have been examining interviewer effects since the 1950s (Hyman, 1954; Kahn & Cannell, 1957). Most studies have focused on the correlation between the responses of cases assigned to the same interviewer, commonly referred to as interviewer variance. Interviewer variance may be related to interviewer characteristics (e.g., gender, age, education, behavior, experience, physical appearance). High within-interviewer correlations may strongly influence survey outcomes, so understanding what increases interviewer variance is essential. In addition, attitudinal questions have been found to be more susceptible to interviewer effects (Schaeffer et al., 2010). Nevertheless, research on how much bias interviewers introduce into the measurement of happiness is lacking. To fill this gap, our study aims to assess interviewer variance in the measurement of happiness and, more specifically, to examine whether interviewers’ own happiness contributes to variance in response distributions.

1 Theoretical Background

Biased measures in face-to-face surveys may originate from errors introduced by interviewers during the recruitment stage, the measurement stage, or both. This section provides theoretical considerations and previous empirical results on these two error sources and their potential relation to the measurement of happiness.

1.1 Interviewer Effects During Recruitment

Interviewers play a key role in building sample frames and selecting sample units. Earlier studies have shown that there is variability among interviewers listing addresses (Eckman, 2013; Kwiat, 2009), generating household rosters (Tourangeau et al., 1997) and selecting household members (SAMHSA, 2008), resulting in varying coverage rates and coverage error. If coverage error is related to the variables of interest (e.g. happiness), results may be biased.

Interviewers are also responsible for gaining cooperation from sampling units. Several interviewer characteristics have been found to predict recruitment success. For instance, interviewers’ behavior, personality, or attitudes can affect contact, cooperation, or response rates. Examining data from the European Social Survey (ESS), Blom et al. (2011) found that interviewers with high self-confidence and the ability to project a professional and positive image when reacting to refusals achieved higher contact and cooperation rates. In the study of Jäckle et al. (2013), extroversion was positively, while openness and assertiveness were negatively, associated with cooperation rates. Yu et al. (2011) also detected a positive relationship with extroversion and a negative one with agreeableness, but no association with conscientiousness, openness, or neuroticism. Vassallo et al. (2017) reported higher response rates among interviewers with lower neuroticism, who are less satisfied with their ability to express themselves but more aware of how they portray themselves. Turner et al. (2014), on the other hand, found no link between the Big Five personality traits and response variance. Self-monitoring, the ability to tailor how one is perceived by others, has also been found to be unrelated to response rates (Campanelli et al., 1997). As argued by Groves and Couper (2012), interviewer success may depend more on learned skills, and thus on experience, than on fixed personality traits.

Interviewer characteristics may affect not only response rates but also sample composition. Some studies found that socio-demographic similarities between interviewers and respondents increased recruitment success (Brehm, 1993; Durrant et al., 2010; Lord et al., 2005; Moorman et al., 1999; Webster, 1996). For instance, Durrant et al. (2010) reported that a “match” between the interviewer’s and the respondent’s gender and education produced higher cooperation rates. This phenomenon may be explained by the liking theory of Groves et al. (1992), which suggests that people are favorably inclined toward those with whom they share characteristics or attitudes. Although we are not aware of such evidence, it is plausible to assume that interviewers who hold positive attitudes or present themselves as a “happy person” may not only increase cooperation in general, but particularly increase the cooperation of respondents with similar characteristics.

1.2 Interviewer Effects During Measurement

The effect of the interviewer on measurement has been widely studied in the literature. Such effects vary strongly with interviewer characteristics and question type (West & Blom, 2016). The race of the interviewer is considered a strong moderator, whereas evidence on other characteristics, such as age, gender, or experience, is mixed. Several studies have matched respondents and interviewers, for instance by race, to compensate for this. Matching can help because respondents may be less likely to edit their responses when the perceived normative expectations of the interviewer do not differ strongly from the respondent’s attitudes. We discuss why normative expectations may matter in more detail below. Another relatively consistent finding in the literature is that “attitudinal, sensitive, ambiguous, complex, and open-ended questions are more likely to produce variable interviewer effects” (West & Blom, 2016, p. 11).

Few studies have addressed whether interviewers’ substantive responses are correlated with respondents’ substantive responses. Andersen and Olsen (2002) reported no link between interviewers’ personal habits or attitudes toward smoking and alcohol consumption during pregnancy and the responses they obtained. Similarly, Healy and Malhotra (2014) found no effect of the interviewer’s partisan identification on the respondents’ political affiliation. Other studies, however, found that interviewers’ political preferences do predict respondents’ answers. In the study of Lipps and Lutz (2010), respondents were more likely to hold opinions similar to the interviewer’s on various issues. Németh and Luksander (2018) found a strong link between the interviewers’ and the respondents’ party choices. In the study of Himelein (2016), responses were also affected by the interviewer’s self-reported attitudes toward social issues, especially among female respondents. Finally, Mneimneh et al. (2020) found that the interviewers’ religious attitudes influenced reports about religious attitudes. To our knowledge, no study so far has examined whether the happiness of the interviewer is associated with the happiness of the respondent.

Why would interviewers play a role in measuring happiness? The measurement of happiness has been found to be related to social desirability bias (SDB; Bajo et al., 2020; Brajša-Žganec et al., 2011; Fastame & Penna, 2012; Steel & Ones, 2002; but see the negative results of Veenhoven, 1991, and Caputo, 2017). SDB occurs when respondents intend to present a more positive image of themselves instead of reporting their true attitude (e.g., their level of happiness; Tourangeau & Smith, 1996). Several authors have argued that high SDB may undermine the validity of happiness measures and result in overreporting of happiness (Holder, 2012). A similar mechanism, impression management (the conscious or subconscious effort to influence how others perceive oneself), has also been linked to the reporting of SWB measures (Diener et al., 1999).

SDB has been found to be more common in interviewer-administered modes than in self-administered modes (De Leeuw, 2005). Interviewers, especially in face-to-face interviews, tend to give respondents cues, for instance through nonverbal communication, which help respondents identify the most desirable answer (Lipps & Lutz, 2010). Conti and Pudney (2011) reported higher job satisfaction in face-to-face interviews than in self-administered interviews. The fact that satisfaction was lower in the presence of a spouse and higher in the presence of a child highlights that people tailor their responses to the social context of the interview (see also Chadi, 2013a; Kavetsos et al., 2014). Similarly, Ng et al. (2019) found higher reports of subjective well-being (SWB) and more socially desirable responses in face-to-face interviews than in responses collected online. Nevertheless, we are aware of one study in which the telephone mode yielded significantly higher reports of SWB than the face-to-face mode (Dolan & Kavetsos, 2016), and Sarracino et al. (2017) found comparable SWB results in web and telephone surveys.

Nevertheless, it seems plausible to assume that respondents may unconsciously tailor their responses in light of the interviewer’s personality or attitudes. If interviewers present themselves as having a positive, happy personality, this may affect how respondents see themselves. Happiness has been found to be strongly correlated with compassion (Mongrain et al., 2011), empathy and altruism (Lu et al., 2020), trait emotional intelligence, and social support (Blasco-Belled et al., 2020; Ye et al., 2019). Such interviewer characteristics may become apparent during the interview in verbal and nonverbal forms and may positively affect the respondent’s self-perception. Furthermore, happy interviewers may be more likely to evoke or reinforce socially desirable responding, as SDB can be stronger when respondents have positive feelings toward their conversation partner.

Of the few studies that investigated interviewer effects in SWB measurement, Chadi (2013b) hypothesized that in a panel survey the relationship between interviewee and interviewer may affect reports of SWB (trust-in-interviewer hypothesis). He found that changes in the person conducting the interview partially explained drops in life satisfaction over time. Another study reported significant effects of interviewer characteristics on the measurement of well-being (Kassenboehmer & Haisken-DeNew, 2012): male interviewers and more experienced interviewers elicited significantly lower life satisfaction responses. The study of Biermann et al. (2019) corroborated these findings, reporting a strong impact of the interviewer on SWB measures, with lower levels of SWB when respondents were interviewed by a male or more experienced interviewer.

2 The Current Study

This study aimed to uncover the role of interviewers in the measurement of happiness. Although interviewer bias may arise during both recruitment and measurement (West & Olson, 2010), we focused on measurement error. To obtain more nuanced results, we assessed interviewer effects on three happiness measures: present happiness, childhood happiness, and happy personality. We analyzed the latter two components separately from the standard measure of happiness because they appear in different strands of the psychological literature (e.g., the impact of childhood happiness in developmental psychology, or the role of satisfaction, as part of a happy character, in labor market success), and because we assumed that the size of interviewer effects would vary with the time frame or the personality component. We posed three main research questions. First, what proportion of the variance in the three happiness measures is attributable to interviewers (RQ1a), and which measures are more sensitive to interviewer effects (RQ1b)? Second, which interviewer characteristics are associated with the reporting of happiness, with a particular focus on the interviewers’ assessment of their own happiness (RQ2)? Third, does a “match” in demographic features between the respondent and the interviewer have a direct effect on the responses, or does it interact with the effects examined in RQ2 (RQ3)?

3 Data and Methods

3.1 Data collection and Sample

We draw on data collected in Hungary in 2020. The fieldwork was carried out in 7 major Hungarian cities. The sample can be considered representative only of the population of these 7 cities, not of the total population of Hungarian cities (384 cities) or of Hungary. However, these cities currently account for 26 percent of the Hungarian population (Hungarian Central Statistical Office, 2019), so the sample covers a significant part of the total population. Additionally, there is no theoretical or empirical reason to suspect that the effect of interviewers on the measurement of happiness would be related to place of residence or settlement size. Data were collected through face-to-face interviews. Interviewers used the random-walk method to select households. Each interviewer was assigned a random starting location and given instructions on the random-walk rules, such as in which direction to start and on which side of the street to walk. After the random walk, interviewers proceeded to the starting address. Respondents within each household were selected using the Kish selection method (Kish, 1949). After the interview attempt at the first address, the random walk continued by skipping two addresses and contacting the third. Interviews were conducted this way until interviewers reached their daily target. In total, 1,000 interviews were conducted by 39 interviewers. Weights were not applied because official statistics on this population were lacking. The socio-demographic composition of the sample is shown in Appendix Table 3.
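The address-selection rule described above (contact the first address, then skip two and contact the third, until the daily target is reached) can be sketched as follows. This is a minimal illustration under stated assumptions, not the fieldwork protocol itself: the function name `random_walk_contacts` and the callable `interview_succeeds` are hypothetical, the route is assumed to be the ordered list of addresses produced by the walking rules, and the within-household Kish selection is not modelled.

```python
from typing import Callable, List


def random_walk_contacts(route: List[str], daily_target: int,
                         interview_succeeds: Callable[[str], bool]) -> List[str]:
    """Return the addresses on `route` where an interview was completed.

    Assumption: `route` is the ordered list of addresses an interviewer passes
    after applying the random-walk rules (start point, direction, street side).
    The first address is contacted; after that, two addresses are skipped and
    the third is contacted, until `daily_target` interviews are completed or
    the route runs out.
    """
    completed: List[str] = []
    # Slicing with step 3 visits positions 0, 3, 6, ...: skip two, contact the third.
    for address in route[::3]:
        if len(completed) >= daily_target:
            break
        # Hypothetical stand-in for contact, Kish selection, and cooperation.
        if interview_succeeds(address):
            completed.append(address)
    return completed


route = [f"address_{i}" for i in range(20)]
refusals = {"address_3"}  # hypothetical refusal
done = random_walk_contacts(route, daily_target=3,
                            interview_succeeds=lambda a: a not in refusals)
print(done)  # ['address_0', 'address_6', 'address_9']
```

A refusal does not change the stepping rule; the walk simply moves on to the next third address, which is why `address_3` is skipped in the output.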

In face-to-face surveys, interviewers are responsible for a variety of tasks besides asking survey questions: they sample units, contact and recruit sample members, and collect and process data. In this study, each interviewer completed interviews in only one city for practical reasons. The median number of completed interviews per interviewer was 24 (minimum 7, maximum 47). Interviewers were aged 26 to 82 years, with a median age of 61. A third of the interviewers had a college degree, and all had completed at least high school. Interviewers had at least 3 years of experience (median: 15 years). All interviewers completed a self-administered questionnaire, so interviewer data could be matched to all 1,000 respondents.

3.2 Measures

The survey was preceded by cognitive interviews (N = 40) to increase the internal validity of the questionnaire. Based on the cognitive phase, we incorporated several flexible interview elements (such as addressing the respondent formally or informally, or asking the happiness block at the beginning or at the end of the interview) and used these features as controls for the interview situation.

3.2.1 Dependent Variables

In this analysis, we focus on three single-item measures of happiness. Our first measure, similar to the Cantril ladder (Cantril, 1965), assessed respondents’ present level of happiness by asking “How happy are you right now?”. Respondents answered using a showcard with a scale ranging from 0 (‘Very unhappy’) to 10 (‘Very happy’). This is the standard technique to measure the level of happiness in the World Happiness Reports (Helliwell et al., 2021). To make sure that respondents understood the scale and to reduce frustration at being forced to use a scale (expressed by almost one in five participants in the cognitive interviews), we added a sad-face emoji to the lowest value and a smiley to the highest. Our second dependent variable assessed childhood happiness by asking respondents “How happy were you during your childhood?”. Again, respondents used a showcard, and the scale ranged from 0 (‘Very unhappy’) to 10 (‘Very happy’). Finally, the third measure captured the extent to which respondents considered themselves a happy person. The question wording was: “Altogether do you feel yourself a happy person?”. Showcards were used, and respondents placed themselves on a scale ranging from 0 (‘Not at all’) to 10 (‘Very much’).

3.2.2 Respondent-Level Predictors

We included several variables to control for respondent characteristics. Gender was coded as a binary variable with 1 for females. Age was included as a continuous predictor. Educational level was adapted to the Hungarian educational system, ranging from 1 (primary school or less) to 4 (college degree or higher). We further controlled for the financial situation of the household by asking “How would you rate your household's financial situation?”. Respondents chose from a scale ranging from 1 (Living in deprivation) to 6 (Living without livelihood problems and can save regularly). Continuous predictors were scaled from 0 to 1 and centred around their grand mean. We preferred grand-mean centring over standardization because it only affects the intercepts (Hox et al., 2017).
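The rescaling and centring step can be sketched as below. This is a minimal illustration assuming a plain min-max rescaling; the text does not say whether the observed or the theoretical scale bounds define the 0-1 range, so the hypothetical helper `scale_and_center` defaults to the observed minimum and maximum and accepts explicit bounds as an alternative.

```python
from typing import List, Optional, Sequence


def scale_and_center(values: Sequence[float],
                     lower: Optional[float] = None,
                     upper: Optional[float] = None) -> List[float]:
    """Rescale to 0-1, then subtract the grand mean of the rescaled values.

    By default the observed minimum and maximum define the 0-1 range; pass
    `lower`/`upper` to use theoretical scale bounds instead (e.g. 1 and 6
    for the financial-situation item).
    """
    lo = min(values) if lower is None else lower
    hi = max(values) if upper is None else upper
    if hi == lo:
        raise ValueError("cannot rescale a constant variable")
    scaled = [(v - lo) / (hi - lo) for v in values]
    # Grand mean: the mean over all respondents, ignoring interviewer clusters.
    grand_mean = sum(scaled) / len(scaled)
    return [s - grand_mean for s in scaled]


print(scale_and_center([20, 40, 60]))  # [-0.5, 0.0, 0.5]
print(scale_and_center([2, 6], lower=1, upper=6))
```

Because centring only shifts each predictor by a constant, the slopes are unchanged and only the intercept is reinterpreted (as the expected outcome at the grand mean), which is the property the text relies on.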

3.2.3 Interviewer-Level Predictors

Interviewers were asked the same three questions on present happiness, childhood happiness, and happy personality, using the same showcards and scales. Besides these key predictors, we included the interviewer’s age as a continuous predictor. Interviewer education was coded as a binary variable: 1 for college or higher, and 0 for lower levels of education. Finally, interviewer experience was included as a continuous predictor. Continuous predictors were scaled from 0 to 1 and centred around their grand mean.

3.2.4 Statistical Analysis

Because our dependent variables were negatively skewed but not overdispersed, we estimated multilevel Poisson regressions with random intercepts for each dependent variable, using the glmmTMB package in R (Brooks et al., 2017), to account for the nested structure of our data. We reverse-scored the dependent variables to better fit a Poisson distribution. When interviewers are not randomly assigned to respondents’ locations, interviewer effects may be confounded with area effects (O’Muircheartaigh & Campanelli, 1998; Rohm et al., 2020; Schnell & Kreuter, 2005; Vassallo et al., 2017). To disentangle interviewer and area effects, each model originally had three levels: respondents at level one, interviewers at level two, and settlements at level three. The results of these models, however, showed that the settlement variance was close to zero, and including a random term for settlement did not improve model fit either. We therefore excluded the settlement level and built two-level models.
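Reverse scoring and a rough overdispersion check can be illustrated as follows. This sketch assumes that reverse scoring means subtracting each 0-10 answer from 10, and it uses the variance-to-mean ratio of the raw reversed scores as a quick screen; that ratio is a marginal check only and is not the model-based dispersion diagnostic the authors may have used. The data are hypothetical.

```python
from statistics import mean, variance
from typing import List, Sequence


def reverse_score(responses: Sequence[int], scale_max: int = 10) -> List[int]:
    """Map 0 (very unhappy) .. 10 (very happy) onto 10 (very unhappy) .. 0 (very happy)."""
    return [scale_max - r for r in responses]


def dispersion_index(counts: Sequence[int]) -> float:
    """Sample variance divided by the mean; roughly 1 for Poisson-distributed counts.

    Values clearly above 1 suggest overdispersion. This marginal check ignores
    the predictors and the interviewer random intercepts.
    """
    return variance(counts) / mean(counts)


happiness = [8, 8, 7, 9, 8]              # hypothetical, negatively skewed answers
reversed_scores = reverse_score(happiness)
print(reversed_scores)                    # [2, 2, 3, 1, 2]
print(dispersion_index(reversed_scores))  # 0.25
```

Reversing turns a pile-up near the top of the scale into small counts near zero, which is the shape a Poisson distribution describes; note that the reversed scores remain bounded at 10, which a Poisson model does not enforce.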

We started with empty models to estimate the variance attributable to interviewers without any predictors. In Model 1, individual-level predictors such as socio-demographic characteristics were added as fixed effects, as these factors have been found to be correlated with SWB (see, e.g., Dolan et al., 2008). Individual-level predictors may also capture area effects, as settlements are expected to differ in their socio-demographic context. In Model 2, we added interviewer-level predictors as fixed effects. In the final model (Model 3), we included several settlement characteristics to control for area effects: population size (Lawless & Lucas, 2011; Želinský et al., 2021), unemployment rate (Lindberg & Wolsko, 2019; Welsch et al., 2021), birth rate (Shrotryia & Mazumdar, 2017), income (Dolan et al., 2008), and consumption (Carver & Grimes, 2019) have all been found to influence SWB (Note 2). Finally, to answer RQ3, we ran additional models to investigate the effect of matching between interviewers’ and respondents’ characteristics, including these variables both as fixed effects and in interaction terms. Confidence intervals (95%) for the intraclass correlation coefficients (ICCs) were estimated using the rpt function of the rptR package in R (Stoffel et al., 2017), with the number of parametric bootstrap iterations set to 1,000.
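For a log-link Poisson model, the ICC is usually expressed on the latent (link) scale, which requires a distribution-specific variance for the Poisson level. The sketch below uses one commonly used lognormal approximation, ln(1 + 1/λ), where λ is the expected count. It only shows the point-estimate formula; rptR's own calculation (how λ is defined, whether an observation-level overdispersion term is included, and the parametric bootstrap for the intervals) may differ in detail. The function name and the example values are hypothetical.

```python
import math


def poisson_latent_icc(interviewer_var: float, expected_count: float,
                       overdispersion_var: float = 0.0) -> float:
    """Approximate latent-scale ICC for a log-link Poisson random-intercept model.

    Uses the lognormal approximation ln(1 + 1/lambda) for the Poisson
    distribution-specific variance, where lambda (`expected_count`) is the
    expected count on the response scale. `overdispersion_var` is an optional
    observation-level variance (0 when no such term is fitted).
    """
    if interviewer_var < 0 or expected_count <= 0:
        raise ValueError("variance must be >= 0 and expected count > 0")
    distribution_var = math.log1p(1.0 / expected_count)  # ln(1 + 1/lambda)
    return interviewer_var / (interviewer_var + overdispersion_var + distribution_var)


# Hypothetical values: interviewer variance 0.2, expected reversed score 2.0.
print(round(poisson_latent_icc(0.2, 2.0), 3))  # 0.33
```

With this approximation, the same interviewer variance yields a larger ICC when the expected count is higher, so ICCs for outcomes with different means should be compared with that in mind.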

First, we compare a range of goodness-of-fit statistics across the models; second, we discuss the individual effects of the explanatory variables; lastly, we present the results of the “matching” models.

4 Results

4.1 Comparing ICCs and Model Fit

To answer RQ1a and RQ1b, we first compare ICCs. Table 1 shows summaries of the four models for each dependent variable. Starting with the null models, including random intercepts for interviewers resulted in a significantly better model fit than a one-level model, indicating that interviewers account for a significant amount of the variance in each happiness measure. Interviewer variance and ICCs were highest for childhood happiness and lowest for present happiness, with happy personality in between.
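The text does not state which test was used to compare the one-level and two-level null models. One common choice is a likelihood-ratio test in which, because the null value of the interviewer variance (zero) lies on the boundary of the parameter space, the p-value is taken from a 50:50 mixture of χ²(0) and χ²(1), i.e. half the usual χ²(1) tail probability. The sketch below assumes that approach; the function names and log-likelihood values are hypothetical.

```python
import math


def chi2_sf_1df(x: float) -> float:
    """P(X > x) for a chi-square variable with 1 degree of freedom."""
    # X = Z**2 with Z standard normal, so P(X > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2)).
    return math.erfc(math.sqrt(x / 2.0))


def lrt_random_intercept(loglik_one_level: float, loglik_two_level: float):
    """Likelihood-ratio test for adding an interviewer random intercept.

    The null value of the variance (0) is on the boundary of the parameter
    space, so the p-value is half the chi-square(1) tail probability.
    Returns (LR statistic, p-value).
    """
    lr = 2.0 * (loglik_two_level - loglik_one_level)
    if lr <= 0:
        return 0.0, 1.0
    return lr, 0.5 * chi2_sf_1df(lr)


lr, p = lrt_random_intercept(-2000.0, -1990.0)  # hypothetical log-likelihoods
print(lr, p)
```

For the nested fixed-effects comparisons (Model 1 vs. the null model, and so on), no boundary correction is needed: the same LR statistic is compared against a χ² distribution with degrees of freedom equal to the number of added parameters.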

Including respondent-level predictors in Model 1 significantly improved model fit. Interviewer variances and ICCs decreased after respondent-level predictors were added, indicating that the effect of interviewers can partially be explained by respondents’ characteristics. Including interviewer-level predictors in Model 2 resulted in a significantly better model fit for the happy personality measure, but not for the other two dependent variables. Interviewer variances also decreased; however, a large portion remained unexplained. Finally, including settlement-level variables in Model 3 further decreased interviewer variance but did not significantly improve model fit for any of the three measures.

4.2 Estimates of Predictors

Turning to RQ2 and the estimates of the explanatory variables in the final models, we found few predictors significantly affecting happiness (Table 2). Among respondent characteristics, age had a positive coefficient for all happiness measures (present happiness: β = 0.532; childhood happiness: β = 0.433; happy person: β = 0.416). Note that the dependent variables were reverse coded, so this association means that older respondents were more likely to report lower happiness. Subjective financial situation had significant negative coefficients (present happiness: β = -0.808; childhood happiness: β = -0.500; happy person: β = -1.067), meaning that respondents who rated their financial situation as better reported higher happiness. Respondents with higher levels of education were also more likely to report high levels of happiness, especially present happiness.
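Because the outcomes were reverse coded and modelled with a log link, the reported coefficients are easiest to read as rate ratios on the reversed score (10 minus happiness). The sketch below assumes that the β values are on the log scale and that the predictors were rescaled to 0-1, so exp(β) compares the lowest and highest values of a predictor, holding the other variables and the interviewer random intercept fixed. The coefficient values are those reported in the text; the helper name is hypothetical.

```python
import math

# Coefficients reported in the text (assumed log link, reverse-coded outcomes).
COEFFICIENTS = {
    "respondent age (present happiness)": 0.532,
    "financial situation (present happiness)": -0.808,
    "interviewer happy personality (happy person)": -0.928,
}


def rate_ratio(beta: float) -> float:
    """Multiplicative change in the expected reversed score (10 - happiness)."""
    return math.exp(beta)


for name, beta in COEFFICIENTS.items():
    rr = rate_ratio(beta)
    # A ratio above 1 means a larger reversed score, i.e. lower reported happiness.
    direction = "less happy" if rr > 1 else "happier"
    print(f"{name}: exp({beta}) = {rr:.2f} -> {direction}")
```

For example, exp(0.532) ≈ 1.70: under these assumptions, the oldest respondents' expected reversed score is about 1.7 times that of the youngest, which corresponds to lower reported present happiness.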

Only one interviewer-level predictor significantly influenced responses: respondents were more likely to report a happy personality when interviewed by an interviewer with a happy personality (β = -0.928; negative because of the reverse coding). The interviewers’ gender, age, educational level, and experience were unrelated to respondents’ reports.

Finally, none of the settlement-level predictors affected reports of happiness, indicating weak area effects.

4.3 Matching

To answer RQ3 and examine the effect of matching, we first added fixed effects for a match between the interviewer’s and the respondent’s gender, age, and education to Model 3. We recoded age into five categories and defined an age match as the interviewer and the respondent falling into the same category. An education match was defined as both the interviewer and the respondent either having or not having a college degree. After including the fixed effects, we added interaction terms between each matching variable and our key interviewer-level predictors (the interviewers’ happiness measures) to determine whether matching moderates the effect of the interviewer’s happiness.
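The matching indicators can be constructed as in the sketch below. The text reports five age categories but not their bounds, so the cut points in `AGE_CUTS` are hypothetical. A respondent is assumed to have a college degree when the four-level education variable equals 4, and the dictionary keys are illustrative names, not the survey's variable names.

```python
from bisect import bisect_right
from typing import Dict

# Hypothetical cut points (not reported in the text): <30, 30-39, 40-49, 50-59, 60+.
AGE_CUTS = (30, 40, 50, 60)


def age_category(age: float) -> int:
    """Index 0-4 of the age band that `age` falls into."""
    return bisect_right(AGE_CUTS, age)


def match_indicators(respondent: Dict, interviewer: Dict) -> Dict[str, int]:
    """Binary gender, age, and education match indicators (1 = match)."""
    respondent_college = respondent["education"] == 4  # level 4 = college degree or higher
    return {
        "gender_match": int(respondent["female"] == interviewer["female"]),
        "age_match": int(age_category(respondent["age"]) == age_category(interviewer["age"])),
        "education_match": int(respondent_college == bool(interviewer["college"])),
    }


respondent = {"female": 1, "age": 62, "education": 4}
interviewer = {"female": 0, "age": 65, "college": 1}
m = match_indicators(respondent, interviewer)
print(m)  # {'gender_match': 0, 'age_match': 1, 'education_match': 1}
```

The interaction terms described above would then be products such as `gender_match` multiplied by the interviewer's (rescaled, centred) happy-personality score, entered alongside both main effects.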

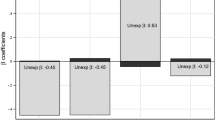

Neither the fixed nor the interaction effects were significant when predicting present or childhood happiness. Matching did not have a direct effect on the happy personality measure either, but we found a positive interaction between gender match and the interviewer’s happy personality (see Fig. 1 and Appendix Table 4). This indicates that the negative coefficient of the interviewer’s happy personality on the respondent’s (reverse-coded) happy personality reports was attenuated when respondents were interviewed by an interviewer of the same gender.

5 Discussion

Our study provided evidence on the influence of interviewers in the measurement of happiness in a face-to-face survey in Hungary. Specifically, we aimed to assess interviewer variance in the measurement of happiness (RQ1a-b), identify interviewer characteristics that influence responses (RQ2), and examine the effect of matching (RQ3). To our knowledge, this is the first study to link interviewers’ substantive responses to respondents’ answers in the field of SWB.

The results corroborated previous studies (Biermann et al., 2019; Kassenboehmer & Haisken-DeNew, 2012) by showing that interviewers account for a significant amount of the variance in respondents’ answers. ICCs ranged between 0.141 and 0.300 even after controlling for a range of respondent, interviewer, and settlement characteristics. Moreover, interviewers accounted for a much larger portion of the variance than settlements. This seems logical, as the place of residence is a passive element of SWB, whereas interviewers are actively involved in its reporting. Altogether, happy personality measures were found to be the most susceptible to interviewer effects. This is especially interesting because personality traits and self-identification are considered rather stable, so a larger bias would have been expected for reports of present happiness. In addition, we found a weak but significant positive association between the interviewers’ and the respondents’ happy personality measures. Nevertheless, a large portion of interviewer variance remained unexplained even after controlling for a number of predictors, indicating that important unobserved factors shape the reporting of SWB.

The link we found between respondents’ and interviewers’ happiness provides further support for the influence of the interviewer’s personality on the survey process (West & Blom, 2016). We suspect that either happy interviewers are more successful in recruiting happy respondents, or happy interviewers affect the response process by eliciting more positive responses. The former may be explained by liking theory: respondents who hold positive attitudes may be more likely to cooperate with an interviewer whom they perceive as a similarly positive person, and this effect may be weaker among less happy respondents. In terms of measurement, we suspect that perceived normative expectations may be stronger in the presence of a happier interviewer, leading respondents to overreport their level of happiness. Clearly, further research is needed to understand the underlying reasons for this association.

Contrary to earlier findings (Biermann et al., 2019; Himelein, 2016; Kassenboehmer & Haisken-DeNew, 2012), the gender and experience of the interviewer did not affect responses. One possible explanation is that previous studies examined data from panel surveys. The role of the interviewer and of rapport building has been found to be even more important in panel surveys (Chadi, 2013b), so panel respondents may not be fully comparable to respondents in cross-sectional surveys.

Interestingly, our results suggest that gender matching between the respondent and the interviewer may moderate the effect of the interviewer’s happiness. Considering that matching situations can increase cooperation rates as well (Durrant et al., 2010), future studies measuring SWB should experiment with matching interviewers and respondents by gender. Again, it is unclear whether this moderating effect arises during recruitment or measurement.

One limitation of our study is that each interviewer conducted interviews in only one city, which raises concerns about confounding between interviewer and area effects. We did, however, make efforts to compensate for the lack of an interpenetrated design and to disentangle interviewer and area effects in our analysis. Furthermore, we were unable to determine whether the observed correlation between the responses of interviewers and respondents was due to selection or measurement bias. An important direction for future research would be to disentangle whether interviewers introduce bias during the recruitment or the measurement phase. Further research is also needed to understand how conscious or unconscious interactions between interviewers and respondents shift responses, for instance by inviting socially desirable answers. Lastly, this study used only single-item SWB measures. Future studies may experiment with multi-item measures and assess how interviewer effects relate to validity.

6 Conclusions

The monitoring of SWB measures is becoming increasingly important for policy-makers (Dolan & Metcalfe, 2012). The efficiency of these policies, however, largely depends on the validity and precision of SWB measures. Studies have shown that SWB measures strongly depend on question order (Nikolova & Sanfey, 2016), question wording (Deaton, 2012), scale labeling (Conti & Pudney, 2011), the mode of administration (Dolan & Kavetsos, 2016; Sarracino et al., 2017), and the day of the interview (Akay & Martinsson, 2009; Kavetsos et al., 2014; Taylor, 2006). Our study provides further evidence of the fragility of these measures due to interviewer effects. We argue that as long as interviewers are involved in the collection of SWB measures, awareness of these possible biases should be raised. First, survey organizations should focus interviewer training on raising awareness of personality traits, self-expression, neutrality, and unjustified positive confirmations. Second, analyses of SWB reports should control for interviewer characteristics whenever possible. Third, comparisons of data obtained from interviewer-administered and self-administered modes, or from mixed-mode surveys, should be interpreted with caution.

Data Availability

Not applicable.

Code Availability (Software Application or Custom Code)

Not applicable.

Notes

The term ‘interviewer’ covers a variety of tasks besides conducting the interview itself. Interviewers in face-to-face surveys are responsible for sampling units, contacting and recruiting sample members, and collecting and processing data.

The data were obtained from the Hungarian Central Statistical Office, the National Tax and Customs Administration of Hungary, and GfK Hungary.

References

Akay, A., & Martinsson, P. (2009). Sundays are blue: Aren’t they? The day-of-the-week effect on subjective well-being and socio-economic status. Working Papers in Economics, No. 397, University of Göteborg, Sweden.

Andersen, A.-M. N., & Olsen, J. (2002). Do interviewers’ health beliefs and habits modify responses to sensitive questions? A study using data collected from pregnant women by means of computer-assisted telephone interviews. American Journal of Epidemiology, 155(1), 95–100. https://doi.org/10.1093/aje/155.1.95

Bajo, M., Stavraki, M., Blanco, A., & Díaz, D. (2020). Direct versus indirect well-being measures: Using partially structured stimuli to evaluate well-being. Journal of Happiness Studies. https://doi.org/10.1007/s10902-020-00332-7

Biermann, P., Bitzer, J., & Gören, E. (2019). The relationship between age and subjective well-being: Estimating within and between effects simultaneously. Oldenburg Discussion Papers in Economics.

Blasco-Belled, A., Rogoza, R., Torrelles-Nadal, C., & Alsinet, C. (2020). Emotional intelligence structure and its relationship with life satisfaction and happiness: New findings from the bifactor model. Journal of Happiness Studies, 21(6), 2031–2049. https://doi.org/10.1007/s10902-019-00167-x

Blom, A. G., de Leeuw, E. D., & Hox, J. J. (2011). Interviewer effects on nonresponse in the European Social Survey. Journal of Official Statistics, 27(2), 359–377.

Brajša-Žganec, A., Ivanović, D., & Lipovčan, L. K. (2011). Personality traits and social desirability as predictors of subjective well-being. Psihologijske Teme, 20(2), 261–276.

Brehm, J. (1993). The phantom respondents: Opinion surveys and political representation. University of Michigan Press. https://doi.org/10.3998/mpub.9690285

Brooks, M. E., Kristensen, K., van Benthem, K. J., Magnusson, A., Berg, C. W., Nielsen, A., Skaug, H. J., Mächler, M., & Bolker, B. M. (2017). glmmTMB balances speed and flexibility among packages for zero-inflated generalized linear mixed modeling. The R Journal, 9(2), 378. https://doi.org/10.32614/RJ-2017-066

Campanelli, P., Sturgis, P., & Purdon, S. (1997). Can you hear me knocking? An investigation into the impact of interviewers on survey response rates. London: Social and Community Planning Research.

Cantril, H. (1965). The pattern of human concerns. Rutgers University Press.

Caputo, A. (2017). Social desirability bias in self-reported well-being measures: Evidence from an online survey. Universitas Psychologica, 16(2). https://doi.org/10.11144/Javeriana.upsy16-2.sdsw

Carver, T., & Grimes, A. (2019). Income or consumption: Which better predicts subjective well-being? Review of Income and Wealth, 65(S1), S256–S280. https://doi.org/10.1111/roiw.12414

Chadi, A. (2013a). Third person effects in interview responses on life satisfaction. IAAEU Discussion Paper Series in Economics.

Chadi, A. (2013b). The role of interviewer encounters in panel responses on life satisfaction. Economics Letters, 121(3), 550–554. https://doi.org/10.1016/j.econlet.2013.10.024

Conti, G., & Pudney, S. (2011). Survey design and the analysis of satisfaction. Review of Economics and Statistics, 93(3), 1087–1093.

De Leeuw, E. D. (2005). To mix or not to mix data collection modes in surveys. Journal of Official Statistics, 21(5), 233–255.

Deaton, A. (2012). The financial crisis and the well-being of Americans: 2011 OEP Hicks Lecture. Oxford Economic Papers, 64(1), 1–26. https://doi.org/10.1093/oep/gpr051

Diener, E., Suh, E. M., Lucas, R. E., & Smith, H. L. (1999). Subjective well-being: Three decades of progress. Psychological Bulletin, 125(2), 276–302. https://doi.org/10.1037/0033-2909.125.2.276

Dolan, P., & Kavetsos, G. (2016). Happy talk: Mode of administration effects on subjective well-being. Journal of Happiness Studies, 17(3), 1273–1291. https://doi.org/10.1007/s10902-015-9642-8

Dolan, P., & Metcalfe, R. (2012). Measuring subjective wellbeing: Recommendations on measures for use by national governments. Journal of Social Policy, 41(2), 409–427.

Dolan, P., Peasgood, T., & White, M. (2008). Do we really know what makes us happy? A review of the economic literature on the factors associated with subjective well-being. Journal of Economic Psychology, 29(1), 94–122. https://doi.org/10.1016/j.joep.2007.09.001

Durrant, G. B., Groves, R. M., Staetsky, L., & Steele, F. (2010). Effects of interviewer attitudes and behaviors on refusal in household surveys. Public Opinion Quarterly, 74(1), 1–36. https://doi.org/10.1093/poq/nfp098

Eckman, S. (2013). Do different listers make the same housing unit frame? Variability in housing unit listing. Journal of Official Statistics, 29(2), 249–259. https://doi.org/10.2478/jos-2013-0021

Fastame, M. C., & Penna, M. P. (2012). Does social desirability confound the assessment of self-reported measures of well-being and metacognitive efficiency in young and older adults? Clinical Gerontologist, 35(3), 239–256. https://doi.org/10.1080/07317115.2012.660411

Groves, R. M., & Couper, M. P. (2012). Nonresponse in household interview surveys. Wiley.

Groves, R. M., Cialdini, R. B., & Couper, M. P. (1992). Understanding the decision to participate in a survey. Public Opinion Quarterly, 56(4), 475–495.

Healy, A., & Malhotra, N. (2014). Partisan bias among interviewers. Public Opinion Quarterly, 78(2), 485–499. https://doi.org/10.1093/poq/nft078

Helliwell, J. F., Layard, R., Sachs, J., & De Neve, J. E. (Eds.). (2021). World happiness report 2021. Sustainable Development Solutions Network.

Himelein, K. (2016). Interviewer effects in subjective survey questions: Evidence from Timor-Leste. International Journal of Public Opinion Research, 28(4), 511–533. https://doi.org/10.1093/ijpor/edv031

Holder, M. D. (2012). Happiness in children: Measurement, correlates and enhancement of positive subjective well-being. Springer Science & Business Media.

Hox, J. J., Moerbeek, M., & Van de Schoot, R. (2017). Multilevel analysis: Techniques and applications. Routledge.

Hungarian Central Statistical Office. (2019). Data of urban area centrum (Urban audit). http://statinfo.ksh.hu/Statinfo/haViewer.jsp (accessed 5 April 2022).

Hyman, H. H. (1954). Interviewing in social research. The University of Chicago Press.

Jäckle, A., Lynn, P., Sinibaldi, J., & Tipping, S. (2013). The effect of interviewer experience, attitudes, personality and skills on respondent co-operation with face-to-face surveys. Survey Research Methods, 7(1), 1–15. https://doi.org/10.18148/srm/2013.v7i1.4736

Kahn, R. L., & Cannell, C. F. (1957). The dynamics of interviewing; theory, technique, and cases. New York: John Wiley.

Kassenboehmer, S. C., & Haisken-DeNew, J. P. (2012). Heresy or enlightenment? The well-being age U-shape effect is flat. Economics Letters, 117(1), 235–238. https://doi.org/10.1016/j.econlet.2012.05.013

Kavetsos, G., Dimitriadou, M., & Dolan, P. (2014). Measuring happiness: Context matters. Applied Economics Letters, 21(5), 308–311. https://doi.org/10.1080/13504851.2013.856994

Kish, L. (1949). A procedure for objective respondent selection within the household. Journal of the American Statistical Association, 44(247), 380–387.

Kwiat, A. (2009). Examining blocks with lister error in area listing. Proceedings of the Section on Survey Research Methods, American Statistical Association, 2546–2557.

Lawless, N. M., & Lucas, R. E. (2011). Predictors of regional well-being: A county level analysis. Social Indicators Research, 101(3), 341–357. https://doi.org/10.1007/s11205-010-9667-7

Lindberg, K., & Wolsko, C. (2019). Assessing well-being factors in a growing community: A multi-attribute vignette approach. Social Indicators Research, 144(1), 315–335. https://doi.org/10.1007/s11205-018-2040-y

Lipps, O., & Lutz, G. (2010). How answers on political attitudes are shaped by interviewers: Evidence from a panel survey. Swiss Journal of Sociology, 2, 345–358.

Lord, V. B., Friday, P. C., & Brennan, P. K. (2005). The effects of interviewer characteristics on arrestees’ responses to drug-related questions. Applied Psychology in Criminal Justice, 1, 36–54.

Lu, C., Jiang, Y., Zhao, X., & Fang, P. (2020). Will helping others also benefit you? Chinese adolescents’ altruistic personality traits and life satisfaction. Journal of Happiness Studies, 21(4), 1407–1425. https://doi.org/10.1007/s10902-019-00134-6

Mneimneh, Z. N., de Jong, J., Cibelli Hibben, K., & Moaddel, M. (2020). Do I look and sound religious? Interviewer religious appearance and attitude effects on respondents’ answers. Journal of Survey Statistics and Methodology, 8(2), 285–303. https://doi.org/10.1093/jssam/smy020

Mongrain, M., Chin, J. M., & Shapira, L. B. (2011). Practicing compassion increases happiness and self-esteem. Journal of Happiness Studies, 12(6), 963–981. https://doi.org/10.1007/s10902-010-9239-1

Moorman, P., Newman, B., Millikan, R., Tse, C.-K., & Sandler, D. (1999). Participation rates in a case-control study: The impact of age, race, and race of interviewer. Annals of Epidemiology, 9(3), 188–195.

Németh, R., & Luksander, A. (2018). Strong impact of interviewers on respondents’ political choice: Evidence from Hungary. Field Methods, 30(2), 155–170.

Ng, W., Russell Kua, W. S., & Kang, S.-H. (2019). The relative importance of personality, financial satisfaction, and autonomy for different subjective well-being facets. The Journal of Psychology, 153(7), 680–700. https://doi.org/10.1080/00223980.2019.1598928

Nikolova, E., & Sanfey, P. (2016). How much should we trust life satisfaction data? Evidence from the Life in Transition Survey. Journal of Comparative Economics, 44(3), 720–731. https://doi.org/10.1016/j.jce.2015.11.003

O’Muircheartaigh, C., & Campanelli, P. (1998). The relative impact of interviewer effects and sample design effects on survey precision. Journal of the Royal Statistical Society: Series A (Statistics in Society), 161(1), 63–77. https://doi.org/10.1111/1467-985X.00090

Rohm, T., Carstensen, C. H., Fischer, L., & Gnambs, T. (2020). Disentangling interviewer and area effects in large-scale educational assessments using cross-classified multilevel item response models. Journal of Survey Statistics and Methodology, smaa015. https://doi.org/10.1093/jssam/smaa015

SAMHSA. (2008). Chapter 11: Effect of Interviewers on Data Quality. Computer assisted interviewing for SAMHSA’s National Household Survey on Drug Abuse. U.S. Department of Health and Human Services.

Sarracino, F., Riillo, C. F. A., & Mikucka, M. (2017). Comparability of web and telephone survey modes for the measurement of subjective well-being. Survey Research Methods, 11(2), 141–169. https://doi.org/10.18148/srm/2017.v11i2.6740

Schaeffer, N. C., Dykema, J., & Maynard, D. W. (2010). Interviewers and interviewing. Handbook of Survey Research, 2, 437–471.

Schnell, R., & Kreuter, F. (2005). Separating interviewer and sampling-point effects. Journal of Official Statistics, 21, 389–410.

Shrotryia, V. K., & Mazumdar, K. (2017). The history of well-being in South Asia. In R. J. Estes & M. J. Sirgy (Eds.), The Pursuit of Human Well-Being: The Untold Global History (pp. 349–380). Springer International Publishing. https://doi.org/10.1007/978-3-319-39101-4_11

Steel, P., & Ones, D. S. (2002). Personality and happiness: A national-level analysis. Journal of Personality and Social Psychology, 83(3), 767–781. https://doi.org/10.1037/0022-3514.83.3.767

Stoffel, M. A., Nakagawa, S., & Schielzeth, H. (2017). rptR: Repeatability estimation and variance decomposition by generalized linear mixed-effects models. Methods in Ecology and Evolution, 8(11), 1639–1644. https://doi.org/10.1111/2041-210X.12797

Taylor, M. P. (2006). Tell me why I don’t like Mondays: Investigating day of the week effects on job satisfaction and psychological well-being. Journal of the Royal Statistical Society: Series A (Statistics in Society), 169(1), 127–142.

Tourangeau, R., & Smith, T. W. (1996). Asking sensitive questions: The impact of data collection mode, question format, and question context. The Public Opinion Quarterly, 60(2), 275–304.

Tourangeau, R., Shapiro, G., Kearney, A., & Ernst, L. (1997). Who lives here? Survey undercoverage and household roster questions. Journal of Official Statistics, 13, 1–18.

Turner, M., Sturgis, P., Martin, D., & Skinner, C. (2014). Can interviewer personality, attitudes and experience explain the design effect in face-to-face surveys? In U. Engel, B. Jann, P. Lynn, A. Scherpenzeel, & P. Sturgis (Eds.), Improving survey methods: Lessons from recent research (pp. 72–85). Abingdon, UK: Routledge.

Vassallo, R., Durrant, G., & Smith, P. (2017). Separating interviewer and area effects by using a cross-classified multilevel logistic model: Simulation findings and implications for survey designs. Journal of the Royal Statistical Society: Series A (Statistics in Society), 180(2), 531–550. https://doi.org/10.1111/rssa.12206

Veenhoven, R. (1991). Questions on happiness: Classical topics, modern answers, blind spots. In F. Strack, M. Argyle, & N. Schwarz (Eds.), Subjective wellbeing, an interdisciplinary perspective (pp. 7–26). London: Pergamon Press.

Webster, C. (1996). Hispanic and Anglo interviewer and respondent ethnicity and gender: The impact on survey response quality. Journal of Marketing Research, 33(1), 62. https://doi.org/10.2307/3152013

Welsch, H., Biermann, P., & Kühling, J. (2021). Immigration attitudes and subjective well-being: A matter of identity? Journal of Happiness Studies, 22(4), 1563–1581. https://doi.org/10.1007/s10902-020-00284-y

West, B. T., & Blom, A. G. (2016). Explaining interviewer effects: A research synthesis. Journal of Survey Statistics and Methodology, smw024. https://doi.org/10.1093/jssam/smw024

West, B. T., & Olson, K. (2010). How much of interviewer variance is really nonresponse error variance? Public Opinion Quarterly, 74(5), 1004–1026. https://doi.org/10.1093/poq/nfq061

Ye, J., Yeung, D. Y., Liu, E. S. C., & Rochelle, T. L. (2019). Sequential mediating effects of provided and received social support on trait emotional intelligence and subjective happiness: A longitudinal examination in Hong Kong Chinese university students. International Journal of Psychology, 54(4), 478–486. https://doi.org/10.1002/ijop.12484

Yu, R., Liu, Y., & Yang, M. (2011). Does interviewer personality matter for survey outcomes? Proceedings of the 64th Annual Conference on World Association for Public Opinion Research, 89–118.

Želinský, T., Hudec, O., Mojsejová, A., & Hricová, S. (2021). The effects of population density on subjective well-being: A case-study of Slovakia. Socio-Economic Planning Sciences, 78, 101061. https://doi.org/10.1016/j.seps.2021.101061

Funding

Open access funding provided by Eötvös Loránd University. This work was supported by the Hungarian Research Fund (NKFIH / OTKA, grant number: K 124940).

Author information

Contributions

The second author designed the survey and was responsible for the data collection. The first author wrote the manuscript in consultation with the second author. The final version of the manuscript was approved by the authors.

Ethics declarations

Conflicts of Interest

The authors declare that no conflict of interest exists.

Consent to Participate

Not applicable.

Consent for Publication

Not applicable.

Ethics Approval

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Stefkovics, Á., Sik, E. What Drives Happiness? The Interviewer’s Happiness. J Happiness Stud 23, 2745–2762 (2022). https://doi.org/10.1007/s10902-022-00527-0

DOI: https://doi.org/10.1007/s10902-022-00527-0