Abstract

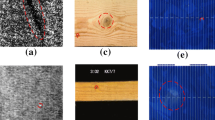

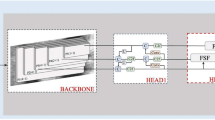

Vision-based defect recognition is an important and challenging task in the metal industry. Many mainstream methods rely on supervised learning and need a large amount of annotated defect data, but acquiring such data in industrial scenarios is difficult and time-consuming. To alleviate this problem, we propose a few-shot defect recognition (FSDR) method for metal surfaces based on attention embedding and self-supervised learning. The proposed method has two stages: pre-training and meta-learning. In the pre-training stage, an attention embedding network (AEN) is designed to better learn the local correlations of defects across multiple receptive fields and to reduce background interference. To train a robust AEN without any annotations, a multi-resolution cropping self-supervised method (MCS) is developed, which helps the network generalize to the few-shot recognition task. In the meta-learning stage, the defect images are encoded by the pre-trained AEN to generate embedding features, and a query-guided weight (QGW) is designed to address the bias of the embedding feature vectors. Classification is obtained by computing the distance to the embedding feature vector of each category. We evaluate the proposed FSDR on the NEU dataset, and the experiments show competitive results on 1-shot and 5-shot defect recognition tasks compared with mainstream methods.
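
The distance-based classification step in the meta-learning stage can be illustrated with a minimal sketch. It assumes the embeddings have already been produced by some encoder, uses Euclidean distance, and weights each support embedding by a softmax over its negative distance to the query. That weighting is only a hypothetical stand-in for a query-guided weight, not the QGW defined in the paper. The names `euclidean`, `query_weighted_prototype`, `classify`, and `temperature`, as well as the toy 2-D embeddings, are illustrative assumptions.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def query_weighted_prototype(support, query, temperature=1.0):
    """Weighted mean of one class's support embeddings.

    ASSUMPTION: the weights are a softmax over negative distance to the
    query, so support samples closer to the query count more. This is a
    hypothetical weighting, not the paper's QGW.
    """
    scores = [-euclidean(s, query) / temperature for s in support]
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    return [
        sum(w * s[d] for w, s in zip(weights, support))
        for d in range(len(query))
    ]


def classify(support_by_class, query, temperature=1.0):
    """Return the label of the nearest class prototype and all distances."""
    distances = {
        label: euclidean(
            query_weighted_prototype(embeddings, query, temperature), query
        )
        for label, embeddings in support_by_class.items()
    }
    return min(distances, key=distances.get), distances


# Toy 2-way 2-shot episode with made-up 2-D embeddings.
support = {
    "scratch": [[0.0, 0.0], [0.2, 0.1]],
    "inclusion": [[1.0, 1.0], [0.9, 1.2]],
}
query = [0.1, 0.0]
label, distances = classify(support, query)
print(label, distances)
```

In the 1-shot case each class has a single support embedding, so the weighted prototype reduces to that embedding and the sketch becomes plain nearest-neighbor matching in the embedding space.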

Acknowledgements

This work was supported in part by the Key Research and Development Program of Zhejiang Province under Grant 2021C01008, in part by the National Natural Science Foundation of China under Grants 51935009 and 52075480, and in part by the High-level Talent Special Support Plan of Zhejiang Province under Grant 2020R52004.

Ethics declarations

Conflict of interest

The authors declare that they have no conflicts of interest related to this work.

About this article

Cite this article

Liu, Z., Song, Y., Tang, R. et al. Few-shot defect recognition of metal surfaces via attention-embedding and self-supervised learning. J Intell Manuf 34, 3507–3521 (2023). https://doi.org/10.1007/s10845-022-02022-y

DOI: https://doi.org/10.1007/s10845-022-02022-y