Abstract

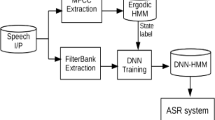

In this paper, the effect of emotional speech on the performance of ASR systems trained on neutral speech is studied. Prosody-modification-based data augmentation is explored to compensate for the ASR performance degradation caused by emotional speech. The primary motive is to develop a Telugu ASR system that is minimally affected by these emotion-induced, intrinsic speaker-related acoustic variations. This research focuses on two factors that contribute to intrinsic speaker-related variability: the fundamental frequency (\(F_0\), or pitch) and the speaking rate. To simulate the ASR task, we trained our ASR system on neutral speech and tested it on both emotional and neutral speech. Compared with the performance on neutral test speech, the performance on emotional speech is severely degraded. This degradation is attributed to differences in the prosody and speaking-rate parameters of neutral and emotional speech. To overcome it, the prosody and speaking-rate parameters of the training data are varied to create new augmented versions of the training data. The original and augmented versions are pooled together and used to retrain the system so that it captures more emotion-specific variation. For the Telugu ASR experiments, we used the Microsoft Speech Corpus for Indian Languages (MSC-IL) to train on neutral speech and the Indian Institute of Technology Kharagpur Simulated Emotion Speech Corpus (IITKGP-SESC) to evaluate emotional speech. The basic emotions of anger, happiness and sadness are considered for evaluation, along with neutral speech.
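
The abstract describes the augmentation only at a high level: vary \(F_0\) and speaking rate, then pool the modified copies with the original training data. As a rough illustration, the Python sketch below uses deliberately simple stand-in techniques, not the authors' method: plain windowed overlap-add (OLA) to change the speaking rate, and linear-interpolation resampling followed by OLA to change pitch while approximately preserving duration. The function names, the frame and hop sizes (400 and 100 samples, i.e. 25 ms and 6.25 ms at an assumed 16 kHz sampling rate), and the modification factors 0.8 and 1.2 are all hypothetical choices.

```python
import numpy as np


def ola_time_stretch(x, rate, frame_len=400, hop_out=100):
    """Change speaking rate with plain windowed overlap-add (OLA).

    rate > 1 speeds speech up (shorter output); rate < 1 slows it down.
    Output duration is roughly len(x) / rate. Plain OLA ignores phase
    continuity, so it is cruder than WSOLA or epoch-based methods.
    """
    x = np.asarray(x, dtype=float)
    if len(x) < frame_len:  # guarantee at least one full frame
        x = np.pad(x, (0, frame_len - len(x)))
    window = np.hanning(frame_len)
    hop_in = max(1, int(round(hop_out * rate)))  # analysis hop in the input
    n_frames = (len(x) - frame_len) // hop_in + 1
    out_len = (n_frames - 1) * hop_out + frame_len
    y = np.zeros(out_len)
    norm = np.zeros(out_len)
    for i in range(n_frames):
        a, b = i * hop_in, i * hop_out
        y[b:b + frame_len] += x[a:a + frame_len] * window
        norm[b:b + frame_len] += window
    norm[norm < 1e-8] = 1.0  # avoid dividing by zero at the outer window edges
    return y / norm


def resample_linear(x, factor):
    """Read the signal `factor` times faster: F0 scales by factor, length by 1/factor."""
    x = np.asarray(x, dtype=float)
    n_out = int(round(len(x) / factor))
    t_out = np.arange(n_out) * factor
    return np.interp(t_out, np.arange(len(x)), x)


def pitch_shift(x, factor, **ola_kwargs):
    """Scale F0 by `factor` while keeping duration roughly unchanged."""
    y = resample_linear(x, factor)  # pitch * factor, duration / factor
    return ola_time_stretch(y, 1.0 / factor, **ola_kwargs)  # restore duration


def augment_and_pool(utterances, pitch_factors=(0.8, 1.2), rate_factors=(0.8, 1.2)):
    """Return the original (id, signal) pairs plus one modified copy per factor.

    The factor values are hypothetical placeholders, not the paper's settings.
    """
    pooled = list(utterances)  # originals stay in the training pool
    for utt_id, x in utterances:
        for p in pitch_factors:
            pooled.append((f"{utt_id}_pitch{p}", pitch_shift(x, p)))
        for r in rate_factors:
            pooled.append((f"{utt_id}_rate{r}", ola_time_stretch(x, r)))
    return pooled


if __name__ == "__main__":
    sr = 16000
    t = np.arange(sr) / sr
    tone = 0.1 * np.sin(2 * np.pi * 150 * t)  # 1 s synthetic stand-in for speech
    for utt_id, sig in augment_and_pool([("utt001", tone)]):
        print(utt_id, len(sig))
```

In an ASR setup, each modified copy would reuse the transcript of its source utterance, since changing pitch or tempo does not change the words. Because resampling scales the whole spectrum, this simple pitch shift also moves the formants; changing \(F_0\) alone would require a more careful prosody-modification method.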

Cite this article

Kammili, P.R., Ramakrishnam Raju, B.H.V.S. & Krishna, A.S. Handling emotional speech: a prosody based data augmentation technique for improving neutral speech trained ASR systems. Int J Speech Technol 25, 197–204 (2022). https://doi.org/10.1007/s10772-021-09897-x

DOI: https://doi.org/10.1007/s10772-021-09897-x