Abstract

In order to prevent the disclosure of privacy-sensitive data, such as names and relations between users, social network graphs have to be anonymised before publication. Naive anonymisation of social network graphs often consists of deleting all identifying information about the users, while maintaining the original graph structure. Various types of attacks on naively anonymised graphs have been developed. Active attacks form a special type of such privacy attacks, in which the adversary enrols a number of fake users, often called sybils, in the social network, allowing the adversary to create unique structural patterns later used to re-identify the sybil nodes and other users after anonymisation. Several studies have shown that adding a small amount of noise to the published graph already suffices to mitigate such active attacks. Consequently, active attacks have been dubbed a negligible threat to privacy-preserving social graph publication. In this paper, we argue that these studies unveil shortcomings of specific attacks, rather than inherent problems of active attacks as a general strategy. In order to support this claim, we develop the notion of a robust active attack, which is an active attack that is resilient to small perturbations of the social network graph. We formulate the design of robust active attacks as an optimisation problem and we give definitions of robustness for different stages of the active attack strategy. Moreover, we introduce various heuristics to achieve these notions of robustness and experimentally show that the new robust attacks are considerably more resilient than the original ones, while remaining at the same level of feasibility.


1 Introduction

Data is useful. Science heavily relies on data to (in)validate hypotheses, discover new trends, tune up mathematical and computational models, etc. In other words, data collection and analysis is helping to cure diseases, build more efficient and environmentally-friendly buildings, take socially-responsible decisions, understand our needs and those of the planet where we live. Despite these indisputable benefits, it is also a fact that data contains personal and potentially sensitive information, and this is where privacy and usefulness should be considered as a whole.

A massive source of personal information is currently being handled by online social networks. People’s lives are often transparently reflected on popular social network platforms, such as Facebook, Twitter and YouTube. Therefore, releasing social network data for further study comes with a commitment to ensure that users remain anonymous. Anonymity, however, is remarkably hard to achieve. Even a simple social graph, where an account consists only of a user’s pseudonym and its relations to other accounts, allows users to be re-identified by just considering the number of relations they have (Liu and Terzi 2008).
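The degree-based re-identification mentioned above can be illustrated in a few lines of Python. This is a minimal sketch, not Liu and Terzi's model: the graph is an undirected edge list, isolated vertices are ignored, and the toy graph and helper names (`degrees`, `reidentifiable_by_degree`) are hypothetical. Any vertex whose degree is shared by no other vertex can be singled out by an adversary who only knows how many relations the target has.

```python
from collections import Counter

def degrees(edges):
    """Degree of every vertex appearing in an undirected edge list."""
    deg = Counter()
    for u, v in edges:
        deg[u] += 1
        deg[v] += 1
    return deg

def reidentifiable_by_degree(edges):
    """Vertices whose degree is unique in the (pseudonymised) graph."""
    deg = degrees(edges)
    counts = Counter(deg.values())
    return {v for v, d in deg.items() if counts[d] == 1}

# Hypothetical pseudonymised graph: hub p0 with three neighbours, plus p1-p2.
toy = [("p0", "p1"), ("p0", "p2"), ("p0", "p3"), ("p1", "p2")]
print(sorted(reidentifiable_by_degree(toy)))  # ['p0', 'p3']
```

Here `p0` (degree 3) and `p3` (degree 1) are unique, while `p1` and `p2` (both of degree 2) hide behind each other.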

The use of pseudonyms is insufficient to guarantee anonymity. An attacker can cross-reference information from other sources, such as the number of connections, to find out the real user behind a pseudonym. Taking into account the type of information an attacker may have, called background or prior knowledge, is thus a common practice in anonymisation models. In a social graph, the adversary’s background knowledge is regarded as any subgraph that is isomorphic to a subgraph in the original social graph. Various works bound the adversary’s background knowledge to a specific family of graphs. For example, the adversary model introduced by Liu and Terzi relies on knowing the degrees of the victim vertices; in this case, the background knowledge is fully defined by star graphs. Others assume that an adversary may know the subgraphs induced by the neighbours of their victims (Zhou and Pei 2008), an extended vicinity (Zou et al. 2009), and so on.

A rather different notion of background knowledge was introduced by Backstrom et al. (2007). They describe an adversary able to register several (fake) accounts, called sybil accounts, in the network. The sybil accounts establish links among themselves and also with the victims. Therefore, in Backstrom et al.’s attack on a social graph \(G=(V,E)\), the adversary’s background knowledge is the induced subgraph formed by the sybil accounts in G, together with their connections to the victims.

The adversary introduced by Backstrom et al. is said to be active, because he influences the structure of the social network. Previous authors have claimed that active attacks are either infeasible or detectable. Such a claim is based on two observations. First, inserting many sybil nodes is hard, and they may be detected and removed by sybil detection techniques (Narayanan and Shmatikov 2009). Second, active attacks have been reported to suffer from low resilience, in the sense that the attacker’s ability to successfully recover the sybil subgraph and re-identify the victims is easily lost after a relatively small number of (even arbitrary) changes are introduced in the network (Ji et al. 2015; Mauw et al. 2016, 2018a, b). As a consequence, active attacks have been largely overlooked in the literature. Backstrom et al. argue for the feasibility of active attacks, showing that proportionally few sybil nodes (in the order of \(\log _2 n\) nodes for networks of order n) are sufficient for compromising any legitimate node. This feature is relevant because sybil defence mechanisms do not attempt to remove every sybil node, but to limit their number to no more than \(\log _2 n\) (Yu et al. 2006, 2008), which entails that sufficiently capable sybil subgraphs are likely to go unnoticed by sybil defences. The second observation, namely the lack of resilience to noisy releases, is the main focus of this work.
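One way to see why on the order of \(\log _2 n\) sybil nodes can suffice is a simple counting argument: with k sybil nodes there are \(2^k - 1\) non-empty subsets that could serve as fingerprints. The sketch below computes the smallest such k for a few network sizes. It is only a counting bound, under the simplifying assumption that any non-empty subset of sybils can be used as a fingerprint; it ignores the further requirements that the sybil subgraph be retrievable and the fingerprints be realisable, and the helper name `sybils_needed` is hypothetical.

```python
import math

def sybils_needed(n):
    """Smallest k such that 2**k - 1 >= n, i.e. enough non-empty subsets of
    k sybil nodes to give each of n users a distinct candidate fingerprint."""
    k = 0
    while 2 ** k - 1 < n:
        k += 1
    return k

for n in (1_000, 1_000_000, 1_000_000_000):
    print(n, sybils_needed(n), round(math.log2(n), 2))
```

For one million users, 20 sybil nodes already yield \(2^{20} - 1 = 1{,}048{,}575\) distinct non-empty subsets.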

Contributions In this paper we show that active attacks do constitute a serious threat for privacy-preserving publication of social graphs. We do so by proposing the first active attack strategy that features two key properties. Firstly, it can effectively re-identify users with a small number of sybil accounts. Secondly, it is resilient, in the sense that it resists not only the introduction of reasonable amounts of noise in the network, but also the application of anonymisation algorithms specifically designed to counteract active attacks. The new attack strategy is based on new notions of robustness for the sybil subgraph and the set of fingerprints, as well as noise-tolerant algorithms for sybil subgraph retrieval and re-identification. The comparison of the robust active attack strategy to the original active attack is facilitated by the introduction of a novel framework of study, which views an active attack as an attacker–defender game.

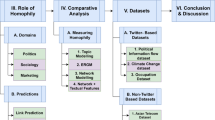

The remainder of this paper is structured as follows. Section 2 examines the literature on social network privacy, with a focus on active attacks. As part of the problem formulation, we present our adversarial model in the form of an attacker–defender game in Sect. 3. Then, the new notions of robustness are introduced in Sect. 4, and their implementation is discussed in Sect. 5. Finally, we experimentally evaluate our proposal in Sect. 6 and give our conclusions in Sect. 7.

2 Related work

Privacy attacks on social networks exploit structural knowledge about the victims for re-identifying them in a released version of the social graph. These attacks can be divided into two categories, according to the manner in which the adversary obtains the knowledge used to re-identify the victims. On the one hand, passive attacks rely on existing knowledge, which can be collected from publicly available sources, such as the public view of another social network where the victims are known to have accounts. The use of this type of information was demonstrated by Narayanan and Shmatikov (2009), who used information from Flickr to re-identify users in a pseudonymised Twitter graph.

On the other hand, active attacks rely on the ability to alter the structure of the social graph in such a way that the unique structural properties that allow the victims to be re-identified after publication are guaranteed to hold and are known to the adversary. As discussed previously, the active attack methodology was introduced by Backstrom et al. (2007). They proposed to use sybil nodes to create re-identifiable patterns for the victims, in the form of fingerprints defined by sybil-to-victim edges. Under this strategy, they proposed two attacks, the walk-based attack and the cut-based attack. The difference between the two attacks lies in the structure given to the sybil subgraph to facilitate its retrieval after publication. In the walk-based attack, a long path linking all the sybil nodes in a predefined order is created, with the remaining inter-sybil edges randomly generated. In the cut-based attack, a subset of the sybil nodes are guaranteed to be the only cut vertices linking the sybil subgraph and the rest of the graph. Interestingly, Backstrom et al. also study a passive version of these attacks, where fingerprints are used as identifying information, but no sybil nodes are inserted. Instead, they model the situation where legitimate users turn rogue and collude to share their neighbourhood information in order to retrieve their own weakly induced subgraph and re-identify some of their remaining neighbours. However, the final conclusion of this study is that the active attack is more capable, because sybil nodes give the adversary stronger guarantees of creating a uniquely retrievable subgraph and unique fingerprints.
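The walk-based construction described above can be sketched as follows. This is a simplified illustration under our own assumptions, not Backstrom et al.'s exact procedure: sybils are numbered \(0, \ldots, k-1\), the path follows that order, each remaining inter-sybil pair is joined independently with probability `p`, and every victim receives a distinct non-empty subset of sybils as its fingerprint. The function names are hypothetical, and any further constraints of the original attack are omitted.

```python
import itertools
import random

def walk_based_sybil_edges(k, p=0.5, seed=None):
    """Inter-sybil edges: a path 0-1-...-(k-1) in a fixed order, plus every
    other pair of sybils joined independently with probability p."""
    rng = random.Random(seed)
    path = {(i, i + 1) for i in range(k - 1)}
    extra = {(i, j) for i, j in itertools.combinations(range(k), 2)
             if j > i + 1 and rng.random() < p}
    return path | extra

def assign_fingerprints(victims, k, seed=None):
    """Give each victim a distinct non-empty subset of the k sybils."""
    rng = random.Random(seed)
    subsets = [frozenset(c) for r in range(1, k + 1)
               for c in itertools.combinations(range(k), r)]
    if len(victims) > len(subsets):
        raise ValueError("not enough sybils for distinct fingerprints")
    return dict(zip(victims, rng.sample(subsets, len(victims))))

edges = walk_based_sybil_edges(5, seed=1)
fps = assign_fingerprints(["y1", "y2", "y3"], k=5, seed=2)
```

The fixed path is what the attacker later searches for, while the random extra edges make the sybil subgraph unlikely to coincide with other subgraphs.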

A hybrid attack strategy was proposed by Peng et al. (2012, 2014). This attack is composed of two stages. First, a small-scale active attack is used to re-identify an initial set of victims, and then a passive attack is used to iteratively enlarge the set of re-identified victims with neighbours of previously re-identified victims. Because of the order in which the active and the passive phases are executed, the success of the initial active attack is critical to the entire attack. Beyond that interplay between active and passive attacks, Peng et al. do not introduce improvements over the original active attack strategy.

There exist a large number of anonymisation methods for the publication of social graphs that can resist privacy attacks such as those described previously. They can be divided into three categories: those that produce a perturbed version of the original graph (Liu and Terzi 2008; Zhou and Pei 2008; Zou et al. 2009; Cheng et al. 2010; Lu et al. 2012; Casas-Roma et al. 2013, 2017; Chester et al. 2013; Wang et al. 2014; Ma et al. 2015; Salas and Torra 2015; Rousseau et al. 2017), those that generate a new synthetic graph sharing some statistical properties with the original graph (Hay et al. 2008; Mittal et al. 2013; Liu and Mittal 2016; Jorgensen et al. 2016), and those that output some aggregate statistic of the graph without releasing the graph itself, e.g. differentially private degree correlation statistics (Sala et al. 2011), degree distributions (Karwa and Slavković 2012), subgraph counts (Zhang et al. 2015), etc. Active attacks, both the original formulation and the robust version presented in this paper, are relevant to the first type of release. In this context, a number of methods have been proposed that transform the graph into a new one satisfying some anonymity property based on the notion of k-anonymity (Samarati 2001; Sweeney 2002). Examples of this type of anonymity property for passive attacks are k-degree anonymity (Liu and Terzi 2008), k-neighbourhood anonymity (Zhou and Pei 2008) and k-automorphism (Zou et al. 2009). For the case of active attacks, the notion of \((k,\ell )\)-anonymity was introduced by Trujillo-Rasua and Yero (2016). A \((k,\ell )\)-anonymous graph guarantees that an active attacker with the ability to insert up to \(\ell \) sybil nodes in the network will still be unable to distinguish any user from at least \(k-1\) other users, in terms of their distances to the sybil nodes. Several relaxations of the notion of \((k,\ell )\)-anonymity were introduced by Mauw et al. (2018b). The notion of \((k,\ell )\)-adjacency anonymity accounts for the unlikelihood of the adversary knowing all distances in the original graph, whereas \((k,\varGamma _{G,\ell })\)-anonymity models the protection of the victims only from vertex subsets with a sufficiently high re-identification probability, and \((k,\varGamma _{G,\ell })\)-adjacency anonymity combines both criteria.
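The distinguishability criterion behind \((k,\ell )\)-anonymity, as described above, can be checked by brute force on very small graphs. The sketch below assumes, for illustration only, that candidate attacker sets are vertex subsets of the graph itself. It computes each remaining vertex's vector of hop distances to an ordered candidate set and reports the smallest group of vertices sharing the same vector, minimised over all candidate sets of size 1 to \(\ell \). It is neither the formal definition of Trujillo-Rasua and Yero (2016) nor an anonymisation method; the function names are hypothetical and the enumeration is exponential in \(\ell \).

```python
import itertools
import math
from collections import Counter, deque

def bfs_distances(adj, source):
    """Hop distances from source; unreachable vertices get math.inf."""
    dist = {v: math.inf for v in adj}
    dist[source] = 0
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for w in adj[u]:
            if dist[w] == math.inf:
                dist[w] = dist[u] + 1
                queue.append(w)
    return dist

def smallest_class(adj, sybils):
    """Size of the smallest group of non-sybil vertices sharing the same
    vector of distances to the (ordered) candidate sybil set."""
    dists = [bfs_distances(adj, s) for s in sybils]
    reps = Counter(tuple(d[v] for d in dists) for v in adj if v not in sybils)
    return min(reps.values())

def min_anonymity(adj, ell):
    """Smallest class size over every candidate sybil set of size 1..ell."""
    return min(smallest_class(adj, s)
               for r in range(1, ell + 1)
               for s in itertools.combinations(adj, r))

K4 = {v: {w for w in range(4) if w != v} for v in range(4)}
C4 = {0: {1, 3}, 1: {0, 2}, 2: {1, 3}, 3: {0, 2}}
print(min_anonymity(K4, 1), min_anonymity(C4, 1))  # 3 1
```

On the complete graph \(K_4\), a single candidate sybil leaves the other three vertices indistinguishable, whereas on the 4-cycle one sybil already isolates the opposite vertex.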

Anonymisation methods based on the notions of \((k,\ell )\)-anonymity, \((k,\varGamma _{G,\ell })\)-anonymity and \((k,\varGamma _{G,\ell })\)-adjacency anonymity were introduced by Mauw et al. (2016, 2018a, b). As we discussed above, despite the fact that these methods only give a theoretical privacy guarantee against adversaries with the capability of introducing a small number of sybil nodes, empirical results show that they are in fact capable of thwarting attacks leveraging larger numbers of sybil nodes. These results are in line with the observation that random perturbations also thwart active attacks in their original formulation (Narayanan and Shmatikov 2009; Ji et al. 2015). In contrast, our robust active attack strategy performs significantly better in the presence of random perturbation, as we demonstrate in Sect. 6.

In the context of obfuscation methods, which aim to publish a new version of the social graph with randomly added perturbations, Xue et al. (2012) assess the possibility of the attacker leveraging knowledge about the noise generation to launch what they call a probabilistic attack. In their work, Xue et al. provide accurate estimators for several graph parameters in the noisy graphs, to support the claim that useful computations can be conducted on the graphs after adding noise. Among these estimators, they include one for the degree sequence of the graph. Then, noting that an active attacker can indeed profit from this estimator to strengthen the walk-based attack, they show that increasing the perturbation by only a small amount makes this attack fail as well. Although the probabilistic attack presented by Xue et al. features some limited level of noise resilience, it is not usable as a general strategy, because it requires the noise to follow a specific distribution and the parameters of this distribution to be known by the adversary. Our definition of robust attack makes no assumptions about the type of perturbation applied to the graph. It is also worth noting, in the context of noise addition methods, that anonymisation algorithms based on privacy properties for passive attacks, such as k-degree anonymity or k-neighbourhood anonymity, can in some cases thwart an active attack. This may happen if such a method introduces a sufficiently large number of changes in the graph. However, these anonymity notions do not offer formal privacy guarantees against active attacks, because they target adversary models based on different forms of background knowledge. In other words, if some of these algorithms happen to thwart an active attack, this is a side effect of the noise they introduce rather than a consequence of the privacy property imposed on the graph.

Finally, we point out that the active attack strategy shares some similarities with graph watermarking methods (Collberg et al. 2003; Zhao et al. 2015; Eppstein et al. 2016). The purpose of graph watermarking is to release a graph containing embedded instances of a small subgraph, the watermark, that can be easily retrieved by the graph publisher, while remaining imperceptible to others and being hard to remove or distort. Note that the goals of the graph owner and the adversary are to some extent inverted in graph watermarking, with respect to active attacks. Moreover, since the graph owner knows the entire graph, he can profit from this knowledge for building the watermark. However, during the sybil subgraph creation phase of an active attack, only a partial view of the social graph is available to the attacker. The next section will make it easier to understand the exact limitations and capabilities of the active adversary, as well as those of the defender.

3 Adversarial model

We design a game between an attacker \({\mathcal {A}}\) and a defender \({\mathcal {D}}\). The goal of the attacker is to identify the victim nodes after pseudonymisation and transformation of the graph by the defender. We first introduce the necessary graph theoretical notation, and then formulate the three stages of the attacker–defender game.

3.1 Notation and terminology

We use the following standard notation and terminology. Additional notation that may be needed in other sections of the paper will be introduced as needed.

-

A graph G is represented as a pair (V, E), where V is a set of vertices (also called nodes) and \(E\subseteq V\times V\) is a set of edges. The vertices of G are denoted by \(V_G\) and its edges by \(E_G\). As we will only consider undirected graphs, we will consider an edge (v, w) as an unordered pair. We will use the notation \({\mathcal {G}}\) for the set of all graphs.

-

An isomorphism between two graphs \(G=(V,E)\) and \(G'=(V',E')\) is a bijective function \(\varphi {:}\,V \rightarrow V'\), such that \(\forall v_1,v_2\in V {:}\,(v_1,v_2)\in E \iff (\varphi (v_1),\varphi (v_2))\in E'\). Two graphs are isomorphic, denoted by \(G\simeq _{\varphi } G'\), or briefly \(G\simeq _{} G'\), if there exists an isomorphism \(\varphi \) between them. Given a subset of vertices \(S \subseteq V\), we will often use \(\varphi S\) to denote the set \(\{\varphi (v) | v \in S\}\).

-

The set of neighbours of a set of nodes \(W\subseteq V\) is defined by \(N_{G}(W) = \{v\in V{\setminus } W \mid \exists {w\in W} {:}\,(v,w)\in E \vee (w,v)\in E\}\). If \(W=\{w\}\) is a singleton set, we will write \(N_{G}(w)\) for \(N_{G}(\{w\})\). The degree of a vertex \(v\in V\), denoted as \(\delta _G(v)\), is defined as \(\delta _G(v)=|N_{G}(v)|\).

-

Let \(G=(V,E)\) be a graph and let \(S\subseteq V\). The weakly-induced subgraph of S in G, denoted by \(\langle S \rangle ^{^{\!w}}_{G}\), is the subgraph of G with vertices \(S\cup N_{G}(S)\) and edges \(\{(v,v')\in E \mid v\in S \vee v'\in S\}\).
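The notation above maps directly onto plain Python sets. In the following sketch, an illustration rather than anything prescribed by the formalism, a graph is an undirected edge list, and `neighbours`, `degree` and `weakly_induced` are hypothetical helper names for \(N_{G}(W)\), \(\delta _G(v)\) and \(\langle S \rangle ^{^{\!w}}_{G}\). Note that the weakly-induced subgraph keeps every edge with at least one endpoint in S, but not edges between two neighbours of S.

```python
def neighbours(edges, W):
    """N_G(W): vertices outside W adjacent to at least one vertex of W."""
    W = set(W)
    result = set()
    for u, v in edges:
        if u in W and v not in W:
            result.add(v)
        elif v in W and u not in W:
            result.add(u)
    return result

def degree(edges, v):
    """delta_G(v) = |N_G(v)|."""
    return len(neighbours(edges, {v}))

def weakly_induced(edges, S):
    """<S>^w_G: vertex set S ∪ N_G(S) and every edge with an endpoint in S."""
    S = set(S)
    sub_edges = {frozenset(e) for e in edges if e[0] in S or e[1] in S}
    return S | neighbours(edges, S), sub_edges

edges = [("a", "b"), ("b", "c"), ("c", "d"), ("b", "d")]
vertices, sub_edges = weakly_induced(edges, {"a", "b"})
print(sorted(vertices), len(sub_edges))  # ['a', 'b', 'c', 'd'] 3
```

With \(S=\{a,b\}\), the weakly-induced subgraph contains all four vertices but omits the edge (c, d).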

3.2 The attacker–defender game

The attacker–defender game starts with a graph \(G=(V,E)\) representing a snapshot of a social network. The attacker knows a subset of the users, but not the connections between them. This is a common scenario in online social networks such as Facebook, where every user has the choice of not showing her friend list, even to her friends or potential adversaries who, in principle, do know that the victim is enrolled in the network. In other types of social networks, e.g. in e-mail networks such as Gmail or messaging networks such as WhatsApp, the existence of relations (determined in this case by the action of exchanging messages) is by default not known, even if the adversary knows the victim’s e-mail address or phone number. Figure 1a exemplifies the initial state of a small network, where capital letters represent the real identities of the users and dotted lines represent the relations existing between them, which are not known to the adversary.

Before a pseudonymised graph is released, the attacker manages to enrol sybil accounts in the network and establish links with the victims, as depicted in Fig. 1b, where sybil accounts are represented by dark-coloured nodes and the edges known to the adversary (because they were created by her) are represented by solid lines. The goal of the attacker is to later re-identify the victims in order to learn information about them. Coming back to the real-life scenarios discussed before, creating accounts in Facebook or Gmail is trivial, and social engineering may be used to get the victims to accept a friend request or answer an e-mail.

When the defender decides to publish the graph, she anonymises it by removing the real user identities, or replacing them with pseudonyms, and possibly perturbing the graph. In Fig. 1c we illustrate the pseudonymisation process of the graph in Fig. 1b. The pseudonymised graph contains information that the attacker wishes to know, such as the existence of relations between users, but the adversary cannot directly learn this information, as the identities of all the vertices are hidden, including those of the sybil nodes themselves. Thus, after the pseudonymised graph is published, the attacker analyses the graph to first re-identify her own sybil accounts, and then the victims (see Fig. 1d). This allows her to acquire new information, which was supposed to remain private, such as the fact that E and F are friends on Facebook, or e-mail each other via Gmail. Note that by “publishing an anonymised graph”, we do not necessarily mean publishing the entire graph underlying large networks such as Facebook or Gmail. This is unlikely to occur in practice. However, sanitised samples from graphs of this type, covering users in a particular group, such as students of a particular school, have indeed been published in the past for research purposes (Guimera et al. 2003; Panzarasa et al. 2009).

In what follows, we formalise the three stages of the attacker–defender game, assuming an initial graph \(G=(V,E)\).

-

1.

Attacker subgraph creation The attacker constructs a set of sybil nodes \(S=\{x_1,x_2,\ldots ,x_{|S|}\}\), such that \(S\cap V = \emptyset \) and a set of edges \(F\subseteq (S\times S) \cup (S\times V) \cup (V\times S)\). It clearly follows that \(E\cap F = \emptyset \). We call \(G^{+}=(V\cup S, E\cup F)\) the sybil-extended graph of G. The attacker does not know the complete graph \(G^{+}\), but he knows \(\langle S \rangle ^{^{\!w}}_{G^{+}}\), the weakly-induced subgraph of S in \(G^{+}\). We say that \(\langle S \rangle ^{^{\!w}}_{G^{+}}\) is the attacker subgraph. The attacker subgraph creation has two substages:

-

(a)

Creation of inter-sybil connections A unique (with high probability) and efficiently retrievable connection pattern is created between sybil nodes to facilitate the attacker’s task of retrieving the sybil subgraph at the final stage.

-

(b)

Fingerprint creation For a given victim vertex \(y \in N_{G^{+}}(S) {\setminus } S\), we call the victim’s neighbours in S, i.e. \(N_{G^{+}}(y)\cap S\), its fingerprint. Considering the set of victim vertices \(Y = \{y_1, \ldots , y_m\}\), the attacker ensures that \(N_{G^{+}}(y_i)\cap S \ne N_{G^{+}}(y_j)\cap S\) for every \(y_i,y_j\in Y\), \(i\ne j\).

-

2.

Anonymisation The defender obtains \(G^{+}\) and constructs an isomorphism \(\varphi \) from \(G^{+}\) to \(\varphi G^{+}\). We call \(\varphi G^{+}\) the pseudonymised graph. The purpose of pseudonymisation is to remove all personally identifiable information from the vertices of \(G^{+}\). Next, given a non-deterministic procedure \(t\) that maps graphs to graphs, known by both \({\mathcal {A}}\) and \({\mathcal {D}}\), the defender applies transformation \(t\) to \(\varphi G^{+}\), resulting in the transformed graph \(t(\varphi G^{+})\). The procedure t modifies \(\varphi G^{+}\) by adding and/or removing vertices and/or edges.

-

3.

Re-identification After obtaining \(t(\varphi G^{+})\), the attacker executes the re-identification attack in two stages.

-

(a)

Attacker subgraph retrieval Determine the isomorphism \(\varphi \) restricted to the domain of sybil nodes S.

-

(b)

Fingerprint matching Determine the isomorphism \(\varphi \) restricted to the domain of victim nodes \(\{y_1,y_2,\ldots ,y_m\}\).

As established by the last step of the attacker–defender game, we consider the adversary to succeed if she effectively determines the isomorphism \(\varphi \) restricted to the domain of victim nodes \(\{y_1,y_2,\ldots ,y_m\}\), that is, if she re-identifies all victims in the anonymised graph.
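The stages of the game can be mirrored in a small simulation. The sketch below is illustrative only: edges are stored as two-element frozensets, vertices are recovered from edges (so isolated vertices are ignored), pseudonyms are shuffled integers, and the transformation \(t\) is a hypothetical choice that toggles r random vertex pairs, whereas the game allows any non-deterministic \(t\). The toy graph and all function names are our own assumptions; sybil nodes s1, s2, s3 give the victims E and F the fingerprints \(\{s2, s3\}\) and \(\{s1\}\), mirroring the running example.

```python
import itertools
import random

def sybil_extend(E, S, F):
    """Stage 1: build G+ = (V ∪ S, E ∪ F) with undirected edges as frozensets."""
    V = {v for e in E for v in e}
    if V & set(S):
        raise ValueError("sybil nodes must be fresh vertices")
    return {frozenset(e) for e in E} | {frozenset(e) for e in F}

def fingerprints(edges, S):
    """Fingerprint N(y) ∩ S of every non-sybil vertex y adjacent to S."""
    S = set(S)
    fp = {}
    for e in edges:
        u, v = tuple(e)
        if u in S and v not in S:
            fp.setdefault(v, set()).add(u)
        elif v in S and u not in S:
            fp.setdefault(u, set()).add(v)
    return fp

def pseudonymise(edges, rng):
    """Stage 2, first step: relabel all vertices with shuffled integers (phi)."""
    vertices = sorted({v for e in edges for v in e})
    labels = list(range(len(vertices)))
    rng.shuffle(labels)
    phi = dict(zip(vertices, labels))
    return {frozenset(phi[v] for v in e) for e in edges}, phi

def flip_random_pairs(edges, r, rng):
    """Stage 2, second step: a hypothetical t that toggles r random vertex pairs."""
    vertices = sorted({v for e in edges for v in e})
    pairs = [frozenset(p) for p in itertools.combinations(vertices, 2)]
    return set(edges) ^ set(rng.sample(pairs, r))

def attacker_succeeds(guess, phi, victims):
    """Stage 3 success criterion: the guess agrees with phi on every victim."""
    return all(guess.get(y) == phi[y] for y in victims)

# Toy run (hypothetical graph): a path A-...-F plus sybils s1, s2, s3.
rng = random.Random(0)
E = [("A", "B"), ("B", "C"), ("C", "D"), ("D", "E"), ("E", "F")]
S = ["s1", "s2", "s3"]
F = [("s1", "s2"), ("s2", "s3"), ("s2", "E"), ("s3", "E"), ("s1", "F")]
g_plus = sybil_extend(E, S, F)
released, phi = pseudonymise(g_plus, rng)
noisy = flip_random_pairs(released, 2, rng)
```

The attacker only ever sees `noisy`; `phi` stays with the defender and is used here solely to score the attacker's guess.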

4 Robust active attacks

This section formalises robust active attacks. We provide mathematical formulations, in the form of optimisation problems, of the attacker’s goals in the first and third stages. In particular, we address three of the subtasks that need to be accomplished in these stages: fingerprint creation, attacker subgraph retrieval and fingerprint matching.

4.1 Robust fingerprint creation

Active attacks, in their original formulation (Backstrom et al. 2007), aimed at re-identifying victims in pseudonymised graphs. Consequently, the uniqueness of every fingerprint was sufficient to guarantee success with high probability, provided that the attacker subgraph is correctly retrieved. Moreover, several types of randomly generated attacker subgraphs can indeed be correctly and efficiently retrieved, with high probability, after pseudonymisation. The low resilience reported for this approach when the pseudonymised graph is perturbed by applying an anonymisation method (Mauw et al. 2016, 2018a, b) or by introducing arbitrary changes (Ji et al. 2015) comes from the fact that it relies on finding exact matches between the fingerprints created by the attacker at the first stage of the attack and their images in \(t(\varphi G^{+})\). The attacker’s ability to find such exact matches is lost even after a relatively small number of perturbations is introduced by t.

Our observation is that requiring the attacker to obtain exactly the same fingerprints in the perturbed graph is not only too strong but, more importantly, unnecessary. Instead, we argue that it is sufficient for the attacker to obtain a set of fingerprints that is close enough to the original set of fingerprints, for some notion of closeness. Given that a fingerprint is a set of vertices, we propose to use the cardinality of the symmetric difference of two sets to measure the distance between fingerprints. The symmetric difference between two sets X and Y, denoted by \(X \triangledown Y\), is the set of elements in \(X \cup Y\) that are not in \(X \cap Y\). We use d(X, Y) to denote \(|X \triangledown Y|\).
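Since a fingerprint is a set, the distance d corresponds directly to Python's set symmetric-difference operator `^`. The sketch below (helper names hypothetical) also checks the triangle inequality exhaustively over all subsets of a three-element universe. This is only a sanity check on a small case; the general fact follows from \(X \triangledown Z = (X \triangledown Y) \triangledown (Y \triangledown Z) \subseteq (X \triangledown Y) \cup (Y \triangledown Z)\), and it is the property used in the proof of Theorem 1.

```python
import itertools

def d(X, Y):
    """d(X, Y) = |X ▽ Y|, the size of the symmetric difference."""
    return len(set(X) ^ set(Y))

def all_subsets(universe):
    """Every subset of `universe`, as frozensets."""
    items = sorted(universe)
    return [frozenset(c) for r in range(len(items) + 1)
            for c in itertools.combinations(items, r)]

def triangle_inequality_holds(universe):
    """Exhaustively check d(X, Z) <= d(X, Y) + d(Y, Z) over all subsets."""
    subsets = all_subsets(universe)
    return all(d(X, Z) <= d(X, Y) + d(Y, Z)
               for X in subsets for Y in subsets for Z in subsets)

print(d({2, 3}, {1}), d({2, 3}, {2}))       # 3 1
print(triangle_inequality_holds({1, 2, 3}))  # True
```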

Our goal at this stage of the attack is to create a set of fingerprints satisfying the following property.

Definition 1

(Robust set of fingerprints) Given a set of victims \(\{y_1, \ldots , y_m \}\) and a set of sybil nodes S in a graph \(G^{+}\), the set of fingerprints \(\{F_1, \ldots , F_m\}\) with \(F_i = N_{G^{+}}(y_i)\cap S\) is said to be robust if it maximises

\[ \min _{1 \le i < j \le m} d(F_i, F_j). \tag{1} \]

The property above ensures that the lower bound on the distance between any pair of fingerprints is maximal. In what follows, we will refer to the lower bound defined by Eq. (1) as the minimum separation of a set of fingerprints. For example, in Fig. 1b, the fingerprint of the vertex E with respect to the set of attacker vertices \(\{1, 2, 3\}\) is \(\{2, 3\}\), and the fingerprint of the vertex F is \(\{1\}\). This gives a minimum separation between the two victims’ fingerprints equal to \(| \{2, 3\} \triangledown \{1\} | = | \{1, 2, 3\} | = 3\), which is the largest value attainable with three sybil nodes. Therefore, given attacker vertices \(\{1,2,3\}\), the set of fingerprints \(\{\{2,3\},\{1\}\}\) is robust for the set of victim nodes \(\{E,F\}\).
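Eq. (1) and Definition 1 can be made concrete with an exhaustive search, which is feasible only for a handful of sybil nodes. In this sketch (names hypothetical, and not the efficient algorithm announced for Sect. 5.1), `min_separation` evaluates the minimum pairwise distance of a set of at least two fingerprints, and `robust_fingerprints` picks m distinct non-empty subsets of the sybil set that maximise it. For the running example, with sybils \(\{1,2,3\}\) and two victims, the optimum is 3, as attained by \(\{\{2,3\},\{1\}\}\); with three victims it drops to 2, because three distinct subsets cannot be pairwise complementary.

```python
import itertools

def d(X, Y):
    """Fingerprint distance |X ▽ Y|."""
    return len(set(X) ^ set(Y))

def min_separation(fingerprints):
    """Eq. (1): smallest pairwise distance in a set of >= 2 fingerprints."""
    return min(d(A, B) for A, B in itertools.combinations(fingerprints, 2))

def robust_fingerprints(sybils, m):
    """Exhaustively pick m distinct non-empty subsets of `sybils` that
    maximise the minimum separation (tiny inputs only)."""
    candidates = [frozenset(c) for r in range(1, len(sybils) + 1)
                  for c in itertools.combinations(sorted(sybils), r)]
    return max(itertools.combinations(candidates, m), key=min_separation)

print(min_separation([{2, 3}, {1}]))                      # 3
print(min_separation(robust_fingerprints({1, 2, 3}, 2)))  # 3
print(min_separation(robust_fingerprints({1, 2, 3}, 3)))  # 2
```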

Next we prove that, if the distance between each pseudonymised original fingerprint \(\varphi F_i\) and the fingerprint \(F_i'\) of the same victim in the perturbed graph is less than half the minimum separation, then the distance between \(\varphi F_i\) and any other perturbed fingerprint \(F_j'\), with \(j \ne i\), is strictly larger than half the minimum separation.

Theorem 1

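Let S be the set of sybil nodes, let \(\{y_1, \ldots , y_m \}\) be the set of victims and let \(\{F_1, \ldots , F_m\}\) be their fingerprints with minimum separation \(\delta \). Let \(F_i' = N_{t(\varphi G^{+})}(\varphi y_i)\cap \varphi S\) be the fingerprint of \(\varphi y_i\) in the anonymised graph \(t(\varphi G^{+})\), for \(i \in \{1, \ldots , m\}\). Then,

\[ \Big (\forall i \in \{1, \ldots , m\}{:}\; d(\varphi F_i, F_i') < \delta /2\Big ) \implies \Big (\forall i, j \in \{1, \ldots , m\},\ i \ne j{:}\; d(\varphi F_i, F_j') > \delta /2\Big ). \]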

Proof

Towards a contradiction, assume that \(d(\varphi F_i, F_j') \le \delta /2\) for some \(i, j \in \{1, \ldots , m\}\) with \(i \ne j\). Since \(d(\varphi F_j, F_j') < \delta /2\) by hypothesis, we have \(d(\varphi F_i, F_j') + d(\varphi F_j, F_j') < \delta \). By the triangle inequality, \(d(\varphi F_i, \varphi F_j ) \le d(\varphi F_i, F_j') + d(\varphi F_j, F_j') < \delta \). Hence \(d(\varphi F_i, \varphi F_j)\) is smaller than the minimum separation of \(\{F_1, \ldots , F_m\}\), which yields a contradiction given that \(d(\varphi F_i, \varphi F_j) = d(F_i, F_j) \ge \delta \), as \(\varphi \) is a bijection. \(\square \)
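Theorem 1 can also be exercised numerically. The randomised check below is an illustration, not a substitute for the proof: it takes \(\varphi \) to be the identity, perturbs each fingerprint by toggling a random subset of sybil nodes, and, whenever every shift stays below \(\delta /2\), verifies that each original fingerprint is more than \(\delta /2\) away from every other victim's perturbed fingerprint. The function name and parameters are hypothetical; it returns whether no counterexample was found, together with the number of trials that met the theorem's hypothesis.

```python
import random

def d(X, Y):
    """Fingerprint distance |X ▽ Y|."""
    return len(set(X) ^ set(Y))

def check_theorem1(fingerprints, sybils, trials=1000, seed=0):
    """Randomised sanity check of Theorem 1, taking phi to be the identity.

    Returns (holds, exercised): `holds` is False as soon as a trial satisfies
    the hypothesis (every shift below delta/2) but violates the conclusion;
    `exercised` counts the trials that satisfied the hypothesis."""
    rng = random.Random(seed)
    pool = sorted(sybils)
    m = len(fingerprints)
    delta = min(d(fingerprints[i], fingerprints[j])
                for i in range(m) for j in range(i + 1, m))
    exercised = 0
    for _ in range(trials):
        # Toggle a random subset of sybils (size drawn uniformly) per fingerprint.
        perturbed = [set(F) ^ set(rng.sample(pool, rng.randint(0, len(pool))))
                     for F in fingerprints]
        if all(d(F, P) < delta / 2 for F, P in zip(fingerprints, perturbed)):
            exercised += 1
            if any(d(fingerprints[i], perturbed[j]) <= delta / 2
                   for i in range(m) for j in range(m) if i != j):
                return False, exercised
    return True, exercised

print(check_theorem1([{2, 3}, {1}], {1, 2, 3}))
```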

We exploit Theorem 1 later in the fingerprint matching step through the following corollary. If every fingerprint is shifted by less than \(\delta /2\), that is, \(d(\varphi F_i, F_i') < \delta /2\) for every \(i \in \{1, \ldots , m\}\), then for every \(F\in \{F_1', \ldots , F_m'\}\) it holds that \(d(F, \varphi F_i) < \delta /2 \iff F= F_i'\). In other words, given a set of victims for which a set of fingerprints needs to be defined, the larger the minimum separation of these fingerprints, the larger the number of perturbations that can be tolerated in \(t(\varphi G^{+})\), while still being able to match the perturbed fingerprints to their correct counterparts in \(G^{+}\).

As illustrated earlier in our running example, the fingerprints of E and F are \(\{2, 3\}\) and \(\{1\}\), respectively, which gives a minimum separation of \(\delta = 3\). Suppose that, after anonymisation of the graph, the fingerprints of E and F become \(\{2\}\) and \(\{1, 2\}\), respectively (for readability, we identify each sybil node with its pseudonym). Since \(|\{2, 3\} \triangledown \{2\}| = 1 < 3/2\) and \(|\{1\} \triangledown \{1, 2\}| = 1 < 3/2\), Theorem 1 guarantees that \(|\{2, 3\} \triangledown \{1, 2\}| > 3/2\) and \(|\{1\} \triangledown \{2\}| > 3/2\); indeed, both distances equal 2. This makes it easy to match the original fingerprint, say \(\{2, 3\}\), with the correct perturbed fingerprint \(\{2\}\) by calculating their distance and verifying that it remains below the threshold \(\delta /2\). In Sect. 5.1, we will describe an efficient algorithm for finding robust sets of fingerprints, i.e. for addressing the optimisation problem of Definition 1.
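The matching rule suggested by the example can be sketched as a threshold test. In the code below, the names and the pseudonyms `u` and `v` are hypothetical, and sybils are identified with their pseudonyms as in the example. Each victim is mapped to the unique perturbed fingerprint lying strictly within \(\delta /2\) of its original fingerprint, and to `None` if there is no such fingerprint or more than one.

```python
def d(X, Y):
    """Fingerprint distance |X ▽ Y|."""
    return len(set(X) ^ set(Y))

def match_by_threshold(original, perturbed, delta):
    """Map each victim to the unique perturbed fingerprint strictly within
    delta/2 of its original fingerprint, or to None if there is none or several."""
    matches = {}
    for victim, F in original.items():
        close = [name for name, P in perturbed.items() if d(F, P) < delta / 2]
        matches[victim] = close[0] if len(close) == 1 else None
    return matches

original = {"E": {2, 3}, "F": {1}}
perturbed = {"u": {2}, "v": {1, 2}}  # hypothetical pseudonyms
print(match_by_threshold(original, perturbed, delta=3))  # {'E': 'u', 'F': 'v'}
```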

4.2 Robust attacker subgraph retrieval

Let \({\mathcal {C}}=\{\langle X \rangle ^{^{\!w}}_{t(\varphi G^{+})} \mid X\subseteq V_{t(\varphi G^{+})}, |X|=|S|, \langle X \rangle ^{^{\!w}}_{t(\varphi G^{+})}\simeq \langle S \rangle ^{^{\!w}}_{G^{+}}\}\) be the set of all subgraphs of \(t(\varphi G^{+})\) that are isomorphic to the attacker subgraph \(\langle S \rangle ^{^{\!w}}_{G^{+}}\) and weakly induced by a vertex subset of cardinality |S|. The original active attack formulation assumes that \(|{\mathcal {C}}|=1\) and that the subgraph in \({\mathcal {C}}\) is the image of the attacker subgraph after pseudonymisation. This assumption holds, for example, on the pseudonymised graph in Fig. 1c, but it rarely holds on perturbed graphs. In fact, \({\mathcal {C}}\) typically becomes empty after simply adding an edge between two non-adjacent sybil nodes, which makes the attack fail quickly as the amount of perturbation increases.

To account for the occurrence of perturbations in releasing \(t(\varphi G^{+})\), we introduce the notion of robust attacker subgraph retrieval. Rather than limiting the retrieval process to finding an exact match of the original attacker subgraph, we consider that it is enough to find a sufficiently similar subgraph, thus adding some level of noise-tolerance. By “sufficiently similar”, we mean a graph that minimises some graph dissimilarity measure \(\varDelta {:}\,{\mathcal {G}}\times {\mathcal {G}}\rightarrow {\mathbb {R}}^+\) with respect to \(\langle S \rangle ^{^{\!w}}_{G^{+}}\). The problem is formulated as follows.

Definition 2

(Robust attacker subgraph retrieval problem) Given a graph dissimilarity measure \(\varDelta {:}\,{\mathcal {G}}\times {\mathcal {G}}\rightarrow {\mathbb {R}}^+\), and a set S of sybil nodes in the graph \(G^{+}\), find a set \(S' \subseteq V_{t(\varphi G^{+})}\) that minimises

\[ \varDelta \big (\langle S \rangle ^{^{\!w}}_{G^{+}},\, \langle S' \rangle ^{^{\!w}}_{t(\varphi G^{+})}\big ). \]

A number of graph (dis)similarity measures have been proposed in the literature (Sanfeliu and Fu 1983; Bunke 2000; Backstrom et al. 2007; Fober et al. 2013; Mallek et al. 2015). Commonly, the choice of a particular measure is ad hoc, and depends on the characteristics of the graphs being compared. In Sect. 5.2, we will describe a measure that is efficiently computable and exploits the known structure of \(\langle S \rangle ^{^{\!w}}_{G^{+}}\), by separately accounting for inter-sybil and sybil-to-non-sybil edges. Along with this dissimilarity measure, we provide an algorithm for constructively finding a solution to the problem enunciated in Definition 2.
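To make Definition 2 concrete, the sketch below solves the retrieval problem by exhaustive search over ordered candidate tuples, which is only feasible on toy graphs. The dissimilarity it uses is a hypothetical stand-in, not the measure of Sect. 5.2: it counts sybil pairs whose adjacency differs between the attacker subgraph and the candidate, plus the total absolute difference in vertex degrees. Both ingredients are known to the attacker, because the weakly-induced attacker subgraph contains every edge incident to a sybil node. All names and the toy instance are our own assumptions.

```python
import itertools

def adjacency(edges):
    """Vertex -> set of neighbours for an undirected edge list."""
    adj = {}
    for u, v in edges:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)
    return adj

def dissimilarity(adj_a, seq_a, adj_b, seq_b):
    """Hypothetical Delta between two ordered vertex sequences: mismatched
    pairwise adjacencies plus total absolute degree difference."""
    mismatch = sum((seq_a[j] in adj_a[seq_a[i]]) != (seq_b[j] in adj_b[seq_b[i]])
                   for i, j in itertools.combinations(range(len(seq_a)), 2))
    degree_gap = sum(abs(len(adj_a[x]) - len(adj_b[y]))
                     for x, y in zip(seq_a, seq_b))
    return mismatch + degree_gap

def retrieve_sybils(attacker_edges, sybils, released_edges):
    """Exhaustive search for the ordered tuple S' minimising the dissimilarity."""
    adj_s, adj_r = adjacency(attacker_edges), adjacency(released_edges)
    return min(itertools.permutations(adj_r, len(sybils)),
               key=lambda cand: dissimilarity(adj_s, sybils, adj_r, cand))

# Toy instance (hypothetical): sybil triangle, six victims, three base edges.
attacker = [("s1", "s2"), ("s2", "s3"), ("s1", "s3"),
            ("a", "s1"), ("b", "s2"), ("c", "s3"), ("d", "s1"), ("d", "s2"),
            ("e", "s2"), ("e", "s3"), ("f", "s1"), ("f", "s3")]
base = [("a", "b"), ("c", "d"), ("e", "f")]
released = [e for e in attacker + base if e != ("a", "s1")]  # one edge removed
best = retrieve_sybils(attacker, ("s1", "s2", "s3"), released)
```

In this instance every sybil has degree 5 and every other vertex at most 3, so after removing one sybil-to-victim edge the sybils are still the unique minimisers as a set, at dissimilarity 1.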

4.3 Robust fingerprint matching

As established by the attacker–defender game discussed in Sect. 3, fingerprint matching is the last stage of the active attack. Because it clearly relies on the success of the previous steps, we make the following two assumptions upfront.

1. We assume that the robust sybil subgraph retrieval procedure succeeds, i.e. that \(\varphi S = S'\) where \(S'\) is the set of sybil nodes obtained in the previous step.

2. Given the original set of victims Y, we assume that the set of vertices in the neighbourhood of \(S'\) contains those in \(\varphi Y\), i.e. \(\varphi Y \subseteq N_{t(\varphi G^{+})}(S'){\setminus } S'\); otherwise, \(S'\) does not provide sufficient information to re-identify all victim vertices.

Given the correct set of sybil nodes \(S'\) and a set of potential victims \(Y'=\{y_1', \ldots , y_n' \}=N_{t(\varphi G^{+})}(S'){\setminus } S'\), the re-identification process consists in determining the isomorphism \(\varphi \) restricted to the vertices in \(Y'\). Next we define re-identification as an optimisation problem, and after that we provide sufficient conditions under which a solution leads to correct identification.

Definition 3

(Robust re-identification problem) Let S and \(S'\) be the sets of sybil nodes in the original and anonymised graph, respectively. Let \(\{y_1, \ldots , y_m \}\) be the victims in \(G^{+}\) with fingerprints \(F_1 = N_{G^{+}}(y_1)\cap S, \ldots , F_m = N_{G^{+}}(y_m)\cap S\). The robust re-identification problem consists in finding an isomorphism \(\phi {:}\,S \rightarrow S'\) and a subset \(\{z_1, \ldots , z_m\} \subseteq N_{t(\varphi G^{+})}(S'){\setminus } S'\) that minimise

$$\begin{aligned} \left\| \left( d\left( \phi F_1, N_{t(\varphi G^{+})}(z_1)\cap S'\right) , \ldots , d\left( \phi F_m, N_{t(\varphi G^{+})}(z_m)\cap S'\right) \right) \right\| _{\infty } \end{aligned}$$(3)

where \(||\cdot ||_{\infty }\) stands for the infinity norm.

Minimising the infinity norm yields the lowest upper bound on the distance between an original fingerprint and the fingerprint of its matched vertex in the perturbed graph. This suits the goal of correctly re-identifying all victims. However, should the adversary aim at re-identifying at least one victim with high probability, other plausible objective functions, such as the Euclidean norm, can be used.

As stated earlier, our intention is to exploit the result of Theorem 1, provided that the distance between original and perturbed fingerprints is lower than \(\delta /2\), where \(\delta \) is the minimum separation of the original set of fingerprints. This is one out of three conditions that we prove sufficient to infer a correct mapping \(\varphi \) from a solution to the robust re-identification problem, as stated in the following result.

Theorem 2

Let \(\phi {:}\,S \rightarrow S'\) and \(\{z_1, \ldots , z_m\}\) be a solution to the robust re-identification problem defined by the set of sybil nodes S in the original graph \(G^{+}\), the set of sybil nodes \(S'\) in the anonymised graph \(t(\varphi G^{+})\), and the set of victims \(\{y_1, \ldots , y_m \}\) in \(G^{+}\). Let \(\{F_1, \ldots , F_m\}\) be the set of fingerprints of \(\{y_1, \ldots , y_m\}\) and \(\delta \) its minimum separation. If the following three conditions hold:

1. \(\forall {x \in S} {:}\,\phi (x) = \varphi (x)\)

2. \(\{\varphi (y_1), \ldots , \varphi (y_m)\} = N_{t(\varphi G^{+})}(S'){\setminus } S'\)

3. For every \(y_i \in \{y_1, \ldots , y_m \}\), \(d(\varphi F_i, F_i') < \delta /2\) where \(F_i' = N_{t(\varphi G^{+})}(\varphi (y_i))\cap S'\),

then \(\varphi (y_i) = z_i\) for every \(i \in \{1, \ldots , m\}\).

Proof

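From the third condition we obtain that the correct mapping, i.e. the candidate solution formed by \(\varphi \) restricted to S together with \(z_i = \varphi (y_i)\) for every \(i \in \{1, \ldots , m\}\), satisfies

$$\begin{aligned} \max \left\{ d(\varphi F_1, F'_1), \ldots , d(\varphi F_m, F'_m)\right\} < \delta /2. \end{aligned}$$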

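Now, the second condition gives that \(\{\varphi (y_1), \ldots , \varphi (y_m)\} = \{z_1, \ldots , z_m\}\). This means that, for every \(i \in \{1, \ldots , m\}\), \(F_i' = N_{t(\varphi G^{+})}(z_j)\cap S'\) for some \(j \in \{1, \ldots , m\}\). Let f be an automorphism of \(\{1, \ldots , m\}\) such that \(F_i' = N_{t(\varphi G^{+})}(z_{f(i)})\cap S'\) for every \(i \in \{1, \ldots , m\}\). We use \(f^{-1}\) to denote the inverse of f. Then, considering that \(\phi F_i = \varphi F_i\) for every \(i \in \{1, \ldots , m\}\) (first condition), we obtain the following equalities.

$$\begin{aligned} \left\| \left( d\left( \phi F_1, N_{t(\varphi G^{+})}(z_1)\cap S'\right) , \ldots , d\left( \phi F_m, N_{t(\varphi G^{+})}(z_m)\cap S'\right) \right) \right\| _{\infty } &= \left\| \left( d\left( \varphi F_1, F'_{f^{-1}(1)}\right) , \ldots , d\left( \varphi F_m, F'_{f^{-1}(m)}\right) \right) \right\| _{\infty } \\ &= \max \left\{ d\left( \varphi F_1, F'_{f^{-1}(1)}\right) , \ldots , d\left( \varphi F_m, F'_{f^{-1}(m)}\right) \right\} \end{aligned}$$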

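Considering Theorem 1, we obtain that for every \(i, j \in \{1, \ldots , m\}\) with \(i \ne j\) it holds that \(d(\varphi F_i, F_j') > \delta /2\). Therefore, if f is not the trivial automorphism, i.e. if \(f(i) \ne i\) for some \(i \in \{1, \ldots , m\}\), then \(\max \{d(\varphi F_1, F'_{f^{-1}(1)}), \ldots , d(\varphi F_m, F'_{f^{-1}(m)})\} > \delta /2\). This implies that

$$\begin{aligned} \left\| \left( d\left( \phi F_1, N_{t(\varphi G^{+})}(z_1)\cap S'\right) , \ldots , d\left( \phi F_m, N_{t(\varphi G^{+})}(z_m)\cap S'\right) \right) \right\| _{\infty } > \delta /2 > \max \left\{ d(\varphi F_1, F'_1), \ldots , d(\varphi F_m, F'_m)\right\} , \end{aligned}$$

that is, the correct mapping attains a strictly lower objective value.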

However, this contradicts the optimality of the solution \(\phi \) and \(\{z_1, \ldots , z_m\}\). Therefore, f must be the trivial automorphism, which concludes the proof. \(\square \)

In Theorem 2, the first condition states that the adversary succeeded in correctly identifying each of her own sybil nodes in the perturbed graph. That is to say, the adversary retrieved the mapping \(\varphi \) restricted to the set of sybil nodes. This is clearly an important milestone in the attack, as the victims' fingerprints are based on this mapping. The second condition says that the non-sybil neighbours of the sybil vertices after perturbation are exactly the images of the victims. As a result, the adversary knows that \(\{z_1, \ldots , z_m\}\) is the victim set in the perturbed graph, but she does not yet know the isomorphism \(\varphi \) restricted to the set of victims \(\{y_1, \ldots , y_m\}\). Lastly, the third condition states that \(\delta /2\) is a strict upper bound on the distance between a victim's fingerprint in the pseudonymised graph \(\varphi G^{+}\) and its fingerprint in the perturbed graph \(t(\varphi G^{+})\), where \(\delta \) is the minimum separation of the victims' fingerprints. In other words, the transformation method did not perturb any victim's fingerprint “too much”. If those three conditions hold, Theorem 2 shows that the isomorphism \(\varphi \) restricted to the set of victims \(\{y_1, \ldots , y_m\}\) maps every \(y_i\) to \(z_i\).
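The role of the \(\delta /2\) bound in the third condition can be checked on small cases. The following Python sketch is illustrative only: fingerprints are modelled as frozensets of sybil labels expressed in a common labelling (so that \(\varphi F_i\) and \(F_i'\) are directly comparable), d is assumed to be the symmetric-difference distance used in the fingerprint-graph examples of Sect. 5.1, and all function names are hypothetical. When every perturbed fingerprint lies strictly within \(\delta /2\) of its original, the triangle inequality makes that original its unique nearest neighbour, which is the fact the proof relies on.

```python
from itertools import combinations
from typing import FrozenSet, List

Fingerprint = FrozenSet[int]

def distance(a: Fingerprint, b: Fingerprint) -> int:
    """Symmetric-difference distance between fingerprints (assumed choice of d)."""
    return len(a ^ b)

def min_separation(fps: List[Fingerprint]) -> int:
    """Minimum pairwise distance delta of a set of at least two fingerprints."""
    return min(distance(a, b) for a, b in combinations(fps, 2))

def condition_three_holds(original: List[Fingerprint],
                          perturbed: List[Fingerprint]) -> bool:
    """True if d(F_i, F_i') < delta/2 for every i (checked as 2*d < delta)."""
    delta = min_separation(original)
    return all(2 * distance(f, g) < delta for f, g in zip(original, perturbed))

def nearest_original(original: List[Fingerprint], observed: Fingerprint) -> int:
    """Index of the closest original fingerprint (lowest index on ties)."""
    return min(range(len(original)), key=lambda i: distance(original[i], observed))

if __name__ == "__main__":
    original = [frozenset({1}), frozenset({2, 3})]        # delta = 3
    perturbed = [frozenset({1, 2}), frozenset({2, 3})]    # one flip on the first
    print(condition_three_holds(original, perturbed))     # True
    print([nearest_original(original, g) for g in perturbed])  # [0, 1]
```

By contrast, with the four fingerprints \(\{1\},\{2\},\{3\},\{1,2,3\}\) (\(\delta =2\)), a single flip turning \(\{1\}\) into \(\{1,2\}\) already violates the condition, and \(\{1,2\}\) is then equidistant from \(\{1\}\) and \(\{2\}\).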

Summing up. In this section we have stated the three problem formulations for robust active attacks, namely:

- Creating a robust set of fingerprints.

- Robustly retrieving the attacker subgraph in the perturbed graph.

- Robustly matching the original fingerprints to perturbed fingerprints.

Additionally, we have defined a set of conditions under which finding a solution to these problems guarantees that a robust active attack is successful. Each of the three problems has been stated as an optimisation task. Since obtaining exact solutions to these problems is computationally expensive, in the next section we introduce heuristics for finding approximate solutions.

5 Heuristics for an approximate instance of the robust active attack strategy

In this section we present the techniques for creating an instance of the robust active attack strategy described in the previous section. Since finding exact solutions to the optimisation problems in Eqs. (1)–(3) is computationally expensive, we provide efficient approximate heuristics.

5.1 Attacker subgraph creation

For creating the internal links of the sybil subgraph, we will use the same strategy as the so-called walk-based attack (Backstrom et al. 2007), which is the most widely-studied instance of the original active attack strategy. By doing so, we make our new attack as (un)likely as the original to have the set of sybil nodes removed by sybil defences. Thus, for the set of sybil nodes S, the attack will set an arbitrary (but fixed) order among the elements of S. Let \(x_1,x_2,\ldots ,x_{|S|}\) represent the vertices of S in that order. The attack will firstly create the path \(x_1x_2\ldots x_{|S|}\), whereas the remaining inter-sybil edges are independently created with probability 0.5.
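A minimal Python sketch of this construction follows, assuming sybil nodes are plain labels listed in the fixed order \(x_1,\ldots ,x_{|S|}\) and edges are stored as frozensets; the function name is hypothetical, and the sketch covers only the inter-sybil edges, not the links from sybils to victims.

```python
import random
from itertools import combinations
from typing import FrozenSet, List, Set

def create_sybil_edges(sybils: List[str], rng: random.Random) -> Set[FrozenSet[str]]:
    """Inter-sybil edges: the path x1 x2 ... x|S| plus each other pair with probability 0.5."""
    edges: Set[FrozenSet[str]] = set()
    # Path x1-x2-...-x|S| in the fixed order given by `sybils`.
    for a, b in zip(sybils, sybils[1:]):
        edges.add(frozenset((a, b)))
    # Every remaining pair is added independently with probability 0.5.
    for a, b in combinations(sybils, 2):
        pair = frozenset((a, b))
        if pair not in edges and rng.random() < 0.5:
            edges.add(pair)
    return edges

if __name__ == "__main__":
    s = ["x1", "x2", "x3", "x4", "x5"]
    print(sorted(tuple(sorted(e)) for e in create_sybil_edges(s, random.Random(7))))
```

Passing an explicit random.Random instance keeps runs reproducible, which is convenient when the same attacker subgraph must later be compared against its perturbed copy.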

For creating the set of fingerprints, we will apply a greedy algorithm for maximising the minimum separation defined in Eq. (1). The idea behind the algorithm is to arrange all possible fingerprints in a grid-like auxiliary graph, in such a way that nodes representing similar fingerprints are linked by an edge, and nodes representing well-separated fingerprints are not. Looking for a set of maximally separated fingerprints in this graph reduces to a well-known problem in graph theory, namely that of finding an independent set. An independent set I of a graph G is a subset of vertices from G such that \(E_{\langle I \rangle _{G}}=\emptyset \), that is, no two vertices in I are linked by an edge. If the graph is constructed in such a way that every pair of fingerprints whose distance is less than or equal to some value i is linked by an edge, then an independent set represents a set of fingerprints with a guaranteed minimum separation of at least \(i+1\). For example, the fingerprint graph shown in Fig. 2a represents the set of fingerprints \(\{\{1\},\{2\},\{3\},\{1,2\},\{1,3\},\{2,3\},\{1,2,3\}\}\), with edges linking all pairs X, Y of fingerprints such that \(d(X,Y)\le 1\), whereas Fig. 2b represents an analogous graph where edges link all pairs X, Y of fingerprints such that \(d(X,Y)\le 2\). Note that the vertex set of both graphs is the power set of \(\{1,2,3\}\), except for the empty set, which does not represent a valid fingerprint, as every victim must be linked to at least one sybil node. A set of fingerprints built from an independent set of the first graph may have minimum separation 2 (e.g. \(\{\{1\},\{2\},\{1,2,3\}\}\)) or 3 (e.g. \(\{\{1,3\},\{2\}\}\)), whereas a set of fingerprints built from an independent set of the second graph will have minimum separation 3 (the maximum independent sets of this graph are \(\{\{1\},\{2,3\}\}\), \(\{\{1,2\},\{3\}\}\) and \(\{\{1,3\},\{2\}\}\)).

Our fingerprint generation method iteratively creates increasingly dense fingerprint graphs. The vertex set of every graph is the set of possible fingerprints, i.e. all subsets of S except the empty set. In the ith graph, every pair of nodes X, Y such that \(d(X,Y)\le i\) will be linked by an edge. Thus, an independent set of this graph will be composed of nodes representing fingerprints whose minimum separation is at least \(i+1\). A maximal (Footnote 2) independent set of the fingerprint graph is computed in every iteration, to obtain an approximation of a maximum-cardinality set of uniformly distributed fingerprints with minimum separation at least \(i+1\). For example, in the graph of Fig. 2a, the method will find \(\{\{1\},\{2\},\{3\},\{1,2,3\}\}\) as a maximum-cardinality set of uniformly distributed fingerprints with minimum separation 2; whereas for the graph of Fig. 2b, the method will find, for instance, \(\{\{1\},\{2,3\}\}\) as a maximum-cardinality set of uniformly distributed fingerprints with minimum separation 3. The method receives as a parameter a lower bound b on the number of fingerprints to generate. It iterates until the maximal independent set \(I_i\) obtained at the ith step satisfies \(|I_i|<b\), and gives \(I_{i-1}\) as output. Clearly, b must satisfy \(b\ge m\), as every victim should be assigned a different fingerprint. Note that the algorithm does not guarantee to obtain exactly as many fingerprints as victims, so the output \(I_{i-1}\) is used as a pool, from which the attack randomly draws m fingerprints. Algorithm 1 lists the pseudo-code of this method.
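The iteration described above can be sketched in Python as follows. This is not the authors' Algorithm 1 but a reading of its description under stated assumptions: d is taken to be the symmetric-difference distance, the greedy maximal independent set scans fingerprints in a fixed order (here, by increasing cardinality), and the loop starts at \(i=0\) (a graph without edges, so \(I_0\) contains every fingerprint) so that \(I_{i-1}\) is always defined. Function names are hypothetical.

```python
import random
from itertools import combinations
from typing import Callable, FrozenSet, List, Optional, Sequence

Fingerprint = FrozenSet[int]

def all_fingerprints(sybils: Sequence[int]) -> List[Fingerprint]:
    """Vertex set of every fingerprint graph: all non-empty subsets of S."""
    return [frozenset(c) for r in range(1, len(sybils) + 1)
            for c in combinations(sybils, r)]

def greedy_maximal_independent_set(
        vertices: List[Fingerprint],
        adjacent: Callable[[Fingerprint, Fingerprint], bool]) -> List[Fingerprint]:
    """Scan vertices in order, keeping each one not adjacent to any kept vertex."""
    chosen: List[Fingerprint] = []
    for v in vertices:
        if not any(adjacent(v, u) for u in chosen):
            chosen.append(v)
    return chosen

def fingerprint_pool(sybils: Sequence[int], b: int) -> Optional[List[Fingerprint]]:
    """Return I_{i-1}, the last maximal independent set with at least b elements."""
    candidates = all_fingerprints(sybils)
    previous: Optional[List[Fingerprint]] = None
    for i in range(len(sybils) + 1):
        # i-th graph: fingerprints at distance <= i are adjacent, so any
        # independent set has minimum separation at least i + 1.
        current = greedy_maximal_independent_set(
            candidates, lambda x, y, i=i: len(x ^ y) <= i)
        if len(current) < b:
            return previous
        previous = current
    return previous

def draw_fingerprints(pool: List[Fingerprint], m: int,
                      rng: random.Random) -> List[Fingerprint]:
    """Randomly draw m distinct fingerprints from the pool, one per victim."""
    return rng.sample(pool, m)

if __name__ == "__main__":
    print(fingerprint_pool([1, 2, 3], b=2))  # separation 3: {1} and {2, 3}
    print(fingerprint_pool([1, 2, 3], b=3))  # separation 2: {1}, {2}, {3}, {1, 2, 3}
```

On \(S=\{1,2,3\}\) this reproduces the two pools discussed for Fig. 2. If b exceeds \(2^{|S|}-1\), no pool exists and the sketch returns None.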

In Algorithm 1, the order of every graph \(G_F^{(i)}\) is \(2^{|S|}-1\). Thus, the time complexity of every graph construction is \({\mathcal {O}}\left( \left( 2^{|S|}\right) ^2\right) ={\mathcal {O}}\left( 2^{2|S|}\right) \). Moreover, the greedy algorithm for finding a maximal independent set runs in quadratic time with respect to the order of the graph, so in this case its time complexity is also \({\mathcal {O}}\left( 2^{2|S|}\right) \). Finally, since the maximum possible distance between a pair of fingerprints is |S|, the worst-case time complexity of Algorithm 1 is \({\mathcal {O}}\left( |S|\cdot 2^{2|S|}\right) \). This worst case is most likely to occur when the number of victims is very small, since the number of times that steps 4–9 of the algorithm are repeated then tends to approach |S|. While this time complexity may appear excessive at first glance, we must consider that, for a social graph of order n, the algorithm will be run for sets of sybil nodes of cardinality at most \(\log _2 n\). Thus, in terms of the order of the social graph, the worst-case running time will be \({\mathcal {O}}(n^2 \log _2 n)\).
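Substituting the bound \(|S|\le \log _2 n\) into the worst-case expression, which is increasing in |S|, makes the last step explicit:

$$\begin{aligned} |S|\cdot 2^{2|S|} \le \log _2 n\cdot 2^{2\log _2 n} = \log _2 n\cdot \left( 2^{\log _2 n}\right) ^2 = n^2\log _2 n. \end{aligned}$$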

5.2 Attacker subgraph retrieval

As discussed in Sect. 4.2, in the original formulation of active attacks, the sybil retrieval phase is based on the assumption that the attacker subgraph can be uniquely and exactly matched to a subgraph of the released graph. This assumption is relaxed by the formulation of robust attacker subgraph retrieval given in Definition 2, which accounts for the possibility that the attacker subgraph has been perturbed. The problem formulation given in Definition 2 requires a dissimilarity measure \(\varDelta \) to compare candidate subgraphs to the original attacker subgraph. We will introduce such a measure in this section. Moreover, the problem formulation requires searching the entire power set of \(V_{t(\varphi G^{+})}\), which is infeasible in practice. In order to reduce the size of the search space, we will establish a perturbation threshold \(\vartheta \), and the search procedure will discard any candidate subgraph X such that \(\varDelta (\langle X \rangle ^{^{\!w}}_{t(\varphi G^{+})},\langle S \rangle ^{^{\!w}}_{G^{+}})>\vartheta \).

We now define the dissimilarity measure \(\varDelta \) that will be used. To that end, some additional notation will be necessary. For a graph H, a vertex set \(V\subseteq V_H\), and a complete order \(\prec \subseteq V\times V\), we will define the vector \({\mathbf {v}}_{\prec }=(v_{i_1}, v_{i_2}, \ldots , v_{i_{|V|}})\), as the one satisfying \(v_{i_1} \prec v_{i_2} \prec \cdots \prec v_{i_{|V|}}\). When the order \(\prec \) is fixed or clear from the context, we will simply refer to \({\mathbf {v}}_{\prec }\) as \({\mathbf {v}}\). Moreover, for the sake of simplicity in presentation, we will in some cases abuse notation and use \({\mathbf {v}}\) for V, \(\langle {\mathbf {v}} \rangle ^{^{\!w}}_{H}\) for \(\langle V \rangle ^{^{\!w}}_{H}\), and so on. The search procedure assumes the existence of a fixed order \(\prec _S\) on the original set of sybil nodes S, which is established at the attacker subgraph creation stage, as discussed in Sect. 5.1. In what follows, we will use the notation \({\mathbf {s}}=(x_1,x_2,\ldots ,x_{|S|})\) for the vector \({\mathbf {s}}_{\prec _S}\).

Given the original attacker subgraph \(\langle S \rangle ^{^{\!w}}_{G^{+}}\) and a subgraph of \(t(\varphi G^{+})\) weakly induced by a candidate vector \({\mathbf {v}}=(v_1,v_2,\ldots ,v_{|S|})\), the dissimilarity measure \(\varDelta \) will compare \(\langle {\mathbf {v}} \rangle ^{^{\!w}}_{t(\varphi G^{+})}\) to \(\langle S \rangle ^{^{\!w}}_{G^{+}}\) according to the following criteria:

- The set of inter-sybil edges of \(\langle S \rangle ^{^{\!w}}_{G^{+}}\) will be compared to that of \(\langle {\mathbf {v}} \rangle ^{^{\!w}}_{t(\varphi G^{+})}\). This is equivalent to comparing \(E(\langle S \rangle _{G^{+}})\) and \(E(\langle {\mathbf {v}} \rangle _{t(\varphi G^{+})})\). To that end, we will apply to \(\langle S \rangle _{G^{+}}\) the bijection \(\varphi '{:}\,S\rightarrow {\mathbf {v}}\) defined by \(\varphi '(x_i)=v_i\) for every \(i\in \{1,\ldots ,|S|\}\). The contribution of inter-sybil edges to \(\varDelta \) will thus be defined as

$$\begin{aligned} \varDelta _{syb}\left( \langle S \rangle ^{^{\!w}}_{G^{+}},\langle {\mathbf {v}} \rangle ^{^{\!w}}_{t(\varphi G^{+})}\right) = \left| E(\varphi '\langle S \rangle _{G^{+}}) \triangledown E(\langle {\mathbf {v}} \rangle _{t(\varphi G^{+})}) \right| , \end{aligned}$$(4)

that is, the cardinality of the symmetric difference between the edge sets of \(\varphi '\langle S \rangle _{G^{+}}\) and \(\langle {\mathbf {v}} \rangle _{t(\varphi G^{+})}\).

- The set of sybil-to-non-sybil edges of \(\langle S \rangle ^{^{\!w}}_{G^{+}}\) will be compared to that of \(\langle {\mathbf {v}} \rangle ^{^{\!w}}_{t(\varphi G^{+})}\). Unlike the previous case, where the orders \(\prec _{S}\) and \(\prec _{{\mathbf {v}}}\) allow us to define a trivial correspondence between the induced subgraphs, in this case creating the appropriate matching would be equivalent to solving the re-identification problem for every candidate subgraph, which would be highly inefficient. Consequently, we introduce a relaxed criterion based on the number of non-sybil neighbours of every sybil node, which we refer to as its marginal degree. The marginal degree of a sybil node \(x\in S\) is thus defined as \(\delta _{\langle S \rangle ^{^{\!w}}_{G^{+}}}'(x)=\left| N_{\langle S \rangle ^{^{\!w}}_{G^{+}}}(x){\setminus } S\right| \). By analogy, for a vertex \(v\in {\mathbf {v}}\), we define \(\delta _{\langle {\mathbf {v}} \rangle ^{^{\!w}}_{t(\varphi G^{+})}}'(v)=\left| N_{\langle {\mathbf {v}} \rangle ^{^{\!w}}_{t(\varphi G^{+})}}(v){\setminus } {\mathbf {v}}\right| \). Finally, the contribution of sybil-to-non-sybil edges to \(\varDelta \) will be defined as

$$\begin{aligned} \varDelta _{neigh}\left( \langle S \rangle ^{^{\!w}}_{G^{+}},\langle {\mathbf {v}} \rangle ^{^{\!w}}_{t(\varphi G^{+})}\right) = \displaystyle \sum _{i=1}^{|S|}\left| \delta _{\langle {\mathbf {v}} \rangle ^{^{\!w}}_{t(\varphi G^{+})}}'(v_i) - \delta _{\langle S \rangle ^{^{\!w}}_{G^{+}}}'(x_i)\right| \end{aligned}$$(5)

The dissimilarity measure combines the previous criteria as follows:

$$\begin{aligned} \varDelta \left( \langle S \rangle ^{^{\!w}}_{G^{+}},\langle {\mathbf {v}} \rangle ^{^{\!w}}_{t(\varphi G^{+})}\right) = \varDelta _{syb}\left( \langle S \rangle ^{^{\!w}}_{G^{+}},\langle {\mathbf {v}} \rangle ^{^{\!w}}_{t(\varphi G^{+})}\right) + \varDelta _{neigh}\left( \langle S \rangle ^{^{\!w}}_{G^{+}},\langle {\mathbf {v}} \rangle ^{^{\!w}}_{t(\varphi G^{+})}\right) \end{aligned}$$(6)
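The three formulas translate almost directly into code. The Python sketch below assumes graphs are given as dictionaries mapping every vertex to its set of neighbours, and that the candidate vector \({\mathbf {v}}\) and the ordered sybil vector \({\mathbf {s}}\) are lists of equal length; the function names are illustrative and not taken from the authors' implementation.

```python
from itertools import combinations
from typing import Dict, Hashable, Iterable, Sequence, Set, Tuple

Graph = Dict[Hashable, Set[Hashable]]

def adjacency(edges: Iterable[Tuple[Hashable, Hashable]]) -> Graph:
    """Build undirected adjacency sets from an edge list."""
    g: Graph = {}
    for u, v in edges:
        g.setdefault(u, set()).add(v)
        g.setdefault(v, set()).add(u)
    return g

def delta_syb(g: Graph, s: Sequence, h: Graph, v: Sequence) -> int:
    """Eq. (4): number of pairs {i, j} whose adjacency differs under x_i -> v_i."""
    return sum(1 for i, j in combinations(range(len(s)), 2)
               if (s[j] in g[s[i]]) != (v[j] in h[v[i]]))

def delta_neigh(g: Graph, s: Sequence, h: Graph, v: Sequence) -> int:
    """Eq. (5): sum of absolute differences between marginal degrees."""
    s_set, v_set = set(s), set(v)
    return sum(abs(len(h[vi] - v_set) - len(g[xi] - s_set))
               for xi, vi in zip(s, v))

def dissimilarity(g: Graph, s: Sequence, h: Graph, v: Sequence) -> int:
    """Eq. (6): Delta = Delta_syb + Delta_neigh."""
    return delta_syb(g, s, h, v) + delta_neigh(g, s, h, v)

if __name__ == "__main__":
    g = adjacency([("x1", "x2"), ("x2", "x3"), ("x1", "a"), ("x3", "b")])
    # Perturbed copy: extra inter-sybil edge v1-v3 and extra neighbour r of v2.
    h = adjacency([("v1", "v2"), ("v2", "v3"), ("v1", "v3"),
                   ("v1", "p"), ("v3", "q"), ("v2", "r")])
    s, v = ["x1", "x2", "x3"], ["v1", "v2", "v3"]
    print(delta_syb(g, s, h, v), delta_neigh(g, s, h, v))  # 1 1
```

In the toy example, the added edge \(v_1v_3\) contributes 1 to \(\varDelta _{syb}\) and the new neighbour of \(v_2\) contributes 1 to \(\varDelta _{neigh}\), so \(\varDelta =2\).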

Figure 3 shows an example of the computation of this dissimilarity measure, with \({\mathbf {s}}=(x_1,x_2,x_3, x_4,x_5)\) and \({\mathbf {v}}=(v_1,v_2,v_3,v_4,v_5)\). In the figure, we can observe that \((x_1,x_3)\in E_{\langle S \rangle ^{^{\!w}}_{G^{+}}}\) and \((v_1,v_3)\notin E_{\langle {\mathbf {v}} \rangle ^{^{\!w}}_{t(\varphi G^{+})}}\), and, similarly, \((x_3,x_4)\in E_{\langle S \rangle ^{^{\!w}}_{G^{+}}}\) and \((v_3,v_4)\notin E_{\langle {\mathbf {v}} \rangle ^{^{\!w}}_{t(\varphi G^{+})}}\). Consequently, \(\varDelta _{syb}\left( \langle S \rangle ^{^{\!w}}_{G^{+}},\langle {\mathbf {v}} \rangle ^{^{\!w}}_{t(\varphi G^{+})}\right) =2\). Moreover, we can also observe that \(\delta _{\langle S \rangle ^{^{\!w}}_{G^{+}}}'(x_2)= |\emptyset |=0\), whereas \(\delta _{\langle {\mathbf {v}} \rangle ^{^{\!w}}_{t(\varphi G^{+})}}'(v_2)=| \{y'_1,y'_5\}|=2\). Since \(\delta _{\langle S \rangle ^{^{\!w}}_{G^{+}}}'(x_i)= \delta _{\langle {\mathbf {v}} \rangle ^{^{\!w}}_{t(\varphi G^{+})}}'(v_i)\) for \(i\in \{1,3,4,5\}\), we have \(\varDelta _{neigh}\left( \langle S \rangle ^{^{\!w}}_{G^{+}},\langle {\mathbf {v}} \rangle ^{^{\!w}}_{t(\varphi G^{+})}\right) =2\), so \(\varDelta \left( \langle S \rangle ^{^{\!w}}_{G^{+}},\langle {\mathbf {v}} \rangle ^{^{\!w}}_{t(\varphi G^{+})}\right) =4\). Note that the value of the dissimilarity function depends on the order imposed by the vector \({\mathbf {v}}\). For example, consider the vector \({\mathbf {v}}'=(v_5,v_2,v_3,v_4,v_1)\). We can verify (Footnote 3) that now \(\varDelta _{syb}\left( \langle S \rangle ^{^{\!w}}_{G^{+}},\langle {\mathbf {v}}' \rangle ^{^{\!w}}_{t(\varphi G^{+})}\right) =4\), whereas \(\varDelta _{neigh}\left( \langle S \rangle ^{^{\!w}}_{G^{+}},\langle {\mathbf {v}}' \rangle ^{^{\!w}}_{t(\varphi G^{+})}\right) =4\), so the dissimilarity value is now \(\varDelta \left( \langle S \rangle ^{^{\!w}}_{G^{+}},\langle {\mathbf {v}}' \rangle ^{^{\!w}}_{t(\varphi G^{+})}\right) =8\).

The search procedure assumes that the transformation t did not remove the image of any sybil node from \(\varphi G^{+}\), so it searches the set of |S|-permutations of \(V_{t(\varphi G^{+})}\) (ordered sequences of |S| distinct vertices), while respecting the tolerance threshold. The method is a breadth-first search, which analyses at the ith level the possible matches to the vector \((x_1,x_2,\ldots ,x_i)\) composed of the first i components of \({\mathbf {s}}\). The tolerance threshold \(\vartheta \) is used to prune the search tree. A detailed description of the procedure is shown in Algorithm 2. Ideally, the algorithm outputs a singleton set \({\tilde{\mathcal {C}}}^*=\{(v_{j_1},v_{j_2},\ldots ,v_{j_{|S|}})\}\), in which case the vector \({\mathbf {v}}=(v_{j_1},v_{j_2},\ldots ,v_{j_{|S|}})\) is used as the input to the fingerprint matching phase, described in the following subsection. If this is not the case, and the algorithm yields \({\tilde{\mathcal {C}}}^*=\{{\mathbf {v}}_1,{\mathbf {v}}_2,\ldots ,{\mathbf {v}}_t\}\), the attack randomly picks an element \({\mathbf {v}}_i\in {\tilde{\mathcal {C}}}^*\) and proceeds to the fingerprint matching phase. Finally, if \({\tilde{\mathcal {C}}}^*=\emptyset \), the attack is considered to fail, as no re-identification is possible.

To conclude the discussion of Algorithm 2, we point out that if it is executed with \(\vartheta =0\), then \({\tilde{\mathcal {C}}}^*\) coincides with the candidate set that would be recovered by the attacker subgraph retrieval phase of the original walk-based attack. More generally, in choosing a value for the parameter \(\vartheta \), the practitioner must assess the trade-off between the retrieval capability of the attack and its computational cost. On the one hand, a low tolerance threshold leads to a fast execution of the retrieval method, at the cost of a higher risk of failing to retrieve a heavily perturbed sybil subgraph. On the other hand, by making the tolerance threshold arbitrarily large, one can guarantee that the sybil subgraph will not be discarded during the search process (Footnote 4). However, in this case the retrieval method may end up performing a near-exhaustive search, which is prohibitively expensive in terms of memory and execution time.
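A simplified Python sketch of this level-by-level search is given below; it is not Algorithm 2 itself. It assumes the same dictionary-of-neighbour-sets representation as before, prunes a partial vector as soon as its inter-sybil mismatches exceed \(\vartheta \) (these mismatches never decrease as the vector grows, so they are a lower bound on \(\varDelta \)), and assumes that \({\tilde{\mathcal {C}}}^*\) collects the complete candidates of minimum \(\varDelta \) among those within the threshold. All names are hypothetical.

```python
from itertools import combinations
from typing import Dict, Hashable, List, Sequence, Set, Tuple

Graph = Dict[Hashable, Set[Hashable]]

def adjacency(edges) -> Graph:
    """Build undirected adjacency sets from an edge list."""
    g: Graph = {}
    for u, v in edges:
        g.setdefault(u, set()).add(v)
        g.setdefault(v, set()).add(u)
    return g

def dissimilarity(g: Graph, s: Sequence, h: Graph, v: Sequence) -> int:
    """Delta of Eq. (6): inter-sybil mismatches plus marginal-degree differences."""
    syb = sum(1 for i, j in combinations(range(len(s)), 2)
              if (s[j] in g[s[i]]) != (v[j] in h[v[i]]))
    s_set, v_set = set(s), set(v)
    neigh = sum(abs(len(h[b] - v_set) - len(g[a] - s_set)) for a, b in zip(s, v))
    return syb + neigh

def retrieve_candidates(g: Graph, s: Sequence, h: Graph, theta: int) -> List[Tuple]:
    """Level-by-level search over ordered |S|-tuples of distinct vertices of h.

    Level i extends every surviving prefix by one vertex matched to s[i]. The
    inter-sybil mismatches of a prefix never decrease when it is extended, so
    they are a lower bound on Delta and can be used to prune against theta.
    """
    level: List[Tuple[Tuple, int]] = [((), 0)]
    for i in range(len(s)):
        next_level = []
        for prefix, cost in level:
            for u in h:
                if u in prefix:
                    continue
                # Mismatches between the new position i and positions 0..i-1.
                extra = sum(1 for j in range(i)
                            if (s[j] in g[s[i]]) != (prefix[j] in h[u]))
                if cost + extra <= theta:
                    next_level.append((prefix + (u,), cost + extra))
        level = next_level
    scored = [(dissimilarity(g, s, h, v), v) for v, _ in level]
    scored = [(d, v) for d, v in scored if d <= theta]
    if not scored:
        return []  # empty candidate set: the attack fails at this stage
    best = min(d for d, _ in scored)
    return [v for d, v in scored if d == best]

if __name__ == "__main__":
    g = adjacency([("x1", "x2"), ("x2", "x3"), ("x1", "a"), ("x3", "b")])
    h = adjacency([("v1", "v2"), ("v2", "v3"), ("v1", "p"), ("v3", "q")])
    print(retrieve_candidates(g, ["x1", "x2", "x3"], h, theta=0))
```

On the unperturbed toy graph the sketch returns both \((v_1,v_2,v_3)\) and its reversal, since a path attacker subgraph is symmetric; this is exactly the kind of tie that the attack resolves by random choice. Raising theta enlarges the frontier kept at every level, which illustrates the cost side of the trade-off discussed above.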

5.3 Fingerprint matching

Now, we describe the noise-tolerant fingerprint matching process. Let \(Y=\{y_1,\ldots ,y_m\}\subseteq V_{G^{+}}\) represent the set of victims. Let S be the original set of sybil nodes and \({\tilde{S}}'\subseteq V_{t(\varphi G^{+})}\) a candidate obtained by the sybil retrieval procedure described above. As in the previous subsection, let \({\mathbf {s}}=(x_1,x_2,\ldots ,x_{|S|})\) be the vector containing the elements of S in the order imposed at the sybil subgraph creation stage. Moreover, let \(\langle {\mathbf {v}} \rangle ^{^{\!w}}_{t(\varphi G^{+})}\), with \({\mathbf {v}}=(v_1,v_2,\ldots ,v_{|S|})\in {\tilde{\mathcal {C}}}^*\), be a candidate sybil subgraph, retrieved using Algorithm 2. Finally, for every \(i\in \{1,\ldots ,m\}\), let \(F_i\subseteq S\) be the original fingerprint of the victim \(y_i\) and \(\phi F_i\subseteq {\mathbf {v}}\) its image by the isomorphism mapping \({\mathbf {s}}\) to \({\mathbf {v}}\).

We now describe the process for finding \(Y'=\{y'_1,\ldots ,y'_m\}\subseteq V_{t(\varphi G^{+})}\), where \(y'_i=\varphi (y_i)\), using \(\phi F_1, \phi F_2, \ldots , \phi F_m\), \({\mathbf {s}}\) and \({\mathbf {v}}\). If the transformation t had caused no damage to the fingerprints, checking for exact matches would be sufficient. Since, as previously discussed, this is usually not the case, we will introduce a noise-tolerant fingerprint matching strategy that maps every original fingerprint to its most similar candidate fingerprint, within some tolerance threshold \(\beta \).

Algorithm 3 describes the process for finding the set of optimal re-identifications. For a candidate victim \(z\in N_{t(\varphi G^{+})}({\mathbf {v}}){\setminus }{\mathbf {v}}\), the algorithm denotes as \({\tilde{F}}_{z,{\mathbf {v}}}=N_{t(\varphi G^{+})}(z)\cap {\mathbf {v}}\) its fingerprint with respect to \({\mathbf {v}}\). The algorithm is a depth-first search procedure. First, the algorithm finds, for every \(\phi F_i\), \(i\in \{1,\ldots ,m\}\), the set of most similar candidate fingerprints, and keeps the set of matches that reach the minimum distance. From these best matches, one or several partial re-identifications are obtained. Several partial re-identifications may arise because more than one candidate fingerprint may be equally similar to some \(\phi F_i\). For every partial re-identification, the method recursively finds the set of best completions and combines them to construct the final set of equally likely re-identifications. The search space is reduced by discarding insufficiently similar matches: for any candidate victim z and any original victim \(y_i\) such that \(d({\tilde{F}}_{z,{\mathbf {v}}},\phi F_i)>\beta \), the algorithm discards all matchings where \(\varphi (y_i)=z\).

To illustrate how the method works, recall the graphs \(\langle S \rangle ^{^{\!w}}_{G^{+}}\) and \(\langle {\mathbf {v}} \rangle ^{^{\!w}}_{t(\varphi G^{+})}\) depicted in Fig. 3. The original set of victims is \(Y=\{y_1,y_2,y_3,y_4\}\) and their fingerprints are \(F_1=\{x_1\}\), \(F_2=\{x_1,x_3\}\), \(F_3=\{x_3,x_5\}\), and \(F_4=\{x_3\}\), respectively. Consequently, we have \(\phi F_1=\{v_1\}\), \(\phi F_2=\{v_1,v_3\}\), \(\phi F_3=\{v_3,v_5\}\), and \(\phi F_4=\{v_3\}\). The set of candidate victims is \(N_{t(\varphi G^{+})}({\mathbf {v}}){\setminus }{\mathbf {v}}=\{z_1,z_2,z_3,z_4,z_5\}\). The method will first find all exact matchings, that is \(\varphi (y_2)=z_2\), \(\varphi (y_3)=z_3\), and \(\varphi (y_4)=z_4\), because the distances between the corresponding fingerprints are zero in all three cases. Since none of these matchings is ambiguous, the method next determines the match \(\varphi (y_1)=z_1\), because \(d({\tilde{F}}_{z_1},\phi F_1)=d(\{v_1,v_2\},\{v_1\})=1 <2=d(\{v_2\},\{v_1\})=d({\tilde{F}}_{z_5},\phi F_1)\). At this point, the method stops and yields the unique re-identification \(\{(y_1,z_1),(y_2,z_2),(y_3,z_3),(y_4,z_4)\}\). Now, suppose that the vertex \(z_5\) is linked in \(t(\varphi G^{+})\) to \(v_3\), instead of \(v_2\), as depicted in Fig. 4. In this case, the method will unambiguously determine the matchings \(\varphi (y_2)=z_2\) and \(\varphi (y_3)=z_3\), and then will try the two choices \(\varphi (y_4)=z_4\) and \(\varphi (y_4)=z_5\). In the first case, the method will make \(\varphi (y_1)=z_1\) and discard \(z_5\). Analogously, in the second case the method will also make \(\varphi (y_1)=z_1\), and will discard \(z_4\). Thus, the final result will consist in two equally likely re-identifications, namely \(\{(y_1,z_1),(y_2,z_2),(y_3,z_3),(y_4,z_4)\}\) and \(\{(y_1,z_1),(y_2,z_2),(y_3,z_3),(y_4,z_5)\}\).
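The matching procedure and the worked example can be reproduced with a short recursive search. The Python sketch below is one plausible reading of the description, not Algorithm 3 itself: at each step it computes the minimum distance over all admissible (victim, candidate) pairs, fixes one victim that attains it, and branches over all of that victim's equally close candidates; pairs at distance greater than \(\beta \) are never considered, and branches in which some victim cannot be matched are dropped. Fingerprints are frozensets of labels of the retrieved sybil vector, d is assumed to be the symmetric-difference distance, and all names are hypothetical.

```python
from typing import Dict, FrozenSet, List, Set, Tuple

Fingerprint = FrozenSet[str]
Matching = FrozenSet[Tuple[str, str]]

def fp_distance(a: Fingerprint, b: Fingerprint) -> int:
    """Symmetric-difference distance between two fingerprints."""
    return len(a ^ b)

def match_fingerprints(originals: Dict[str, Fingerprint],
                       candidates: Dict[str, Fingerprint],
                       beta: int) -> Set[Matching]:
    """Return every equally good complete re-identification found by the search.

    originals:  victim y_i -> phi F_i (original fingerprint mapped onto v)
    candidates: candidate vertex z -> its fingerprint with respect to v
    beta:       pairs at distance greater than beta are never considered
    """
    results: Set[Matching] = set()

    def extend(assigned: List[Tuple[str, str]], victims: FrozenSet[str],
               free: FrozenSet[str]) -> None:
        if not victims:
            results.add(frozenset(assigned))
            return
        pairs = [(fp_distance(originals[y], candidates[z]), y, z)
                 for y in victims for z in free
                 if fp_distance(originals[y], candidates[z]) <= beta]
        if not pairs:
            return  # some victim has no admissible candidate: dead branch
        best = min(d for d, _, _ in pairs)
        # Fix one victim attaining the minimum and branch over its best candidates.
        y_star = min(y for d, y, _ in pairs if d == best)
        for d, y, z in sorted(pairs):
            if y == y_star and d == best:
                extend(assigned + [(y, z)], victims - {y}, free - {z})

    extend([], frozenset(originals), frozenset(candidates))
    return results

if __name__ == "__main__":
    originals = {"y1": frozenset({"v1"}), "y2": frozenset({"v1", "v3"}),
                 "y3": frozenset({"v3", "v5"}), "y4": frozenset({"v3"})}
    candidates = {"z1": frozenset({"v1", "v2"}), "z2": frozenset({"v1", "v3"}),
                  "z3": frozenset({"v3", "v5"}), "z4": frozenset({"v3"}),
                  "z5": frozenset({"v2"})}
    print(match_fingerprints(originals, candidates, beta=1))
```

With \(\beta =1\), the Fig. 3 data yield the single re-identification from the text; changing the fingerprint of \(z_5\) to \(\{v_3\}\) (the Fig. 4 case) yields the two equally likely re-identifications; and with \(\beta =0\) the Fig. 3 data yield no complete re-identification, since \(y_1\) has no exact match.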

Ideally, Algorithms 2 and 3 both yield unique solutions, in which case the sole element in the output of Algorithm 3 is given as the final re-identification. If this is not the case, the attack picks a random candidate sybil subgraph from \({\tilde{\mathcal {C}}}^*\), uses it as the input of Algorithm 3, and picks a random re-identification from its output. If either algorithm yields an empty solution, the attack fails. Finally, it is important to note that, if Algorithm 2 is run with \(\vartheta =0\) and Algorithm 3 is run with \(\beta =0\), then the final result is exactly the same set of equally likely matchings that would be obtained by the original walk-based attack. As for the case of sybil subgraph retrieval, in choosing the value of the parameter \(\beta \) for robust fingerprint matching, the practitioner must consider the trade-off between efficiency and noise tolerance, in a manner analogous to the one discussed for the selection of the parameter \(\vartheta \) in Algorithm 2.

6 Experiments

The purpose of our experiments (Footnote 5) is threefold. Firstly, we show the considerable gain in resilience of robust active attacks, in comparison to the original walk-based attack. Secondly, we assess the contributions of different components of the robust active attack strategy to the success of the attacks. Finally, we analyse the weaknesses shared by the robust and the original active attacks, and discuss how they may serve as the basis for the development of new privacy-preserving graph publication methods. Each run of our experiments is determined by the selection of a graph type, a perturbation method and an active attack strategy. In what follows, we first describe the choices for each of these three components, and conclude the section by discussing the empirical results obtained.

6.1 Three models of synthetic graphs and two real-life networks

In order to make the results reported in this section comparable to those reported for the walk-based attack on anonymised graphs (Mauw et al. 2018b), we first study the behaviour of the attacks under evaluation on Erdős–Rényi (ER) random graphs (Erdős and Rényi 1959) of order 200. We generated 200,000 ER graphs, 10,000 for each density value in the set \(\{0.05, 0.1, \ldots , 1.0\}\).

We also study the behaviour of the attacks on Watts–Strogatz (WS) small world graphs (Watts and Strogatz 1998) and Barabási–Albert (BA) scale-free graphs (Barabási and Albert 1999). The WS model has two parameters, the number K of neighbours originally assigned to every vertex, and the probability \(\varrho \) that an edge of the initial K-regular ring lattice is randomly rewired. In our experiments, we generated 10,000 graphs of order 200 for every pair \((K,\varrho )\), where \(K\in \{10, 20, \ldots , 100\}\) and \(\varrho \in \{0.25, 0.5, 0.75\}\). In the case of BA graphs, we used seed graphs of order 50 and every graph was grown by adding 150 vertices, and performing the corresponding edge additions. The BA model has a parameter m defining the number of new edges added for every new vertex. Here, we generated 10,000 graphs for every value of m in the set \(\{5, 10, \ldots , 50\}\). In generating every graph, the seed graph was chosen to be, with probability 1/3 each, a complete graph, an m-regular ring lattice, or an ER random graph of density 0.5.

Finally, in order to complement the results obtained on randomly generated graphs, and to showcase the behaviour of the attacks in larger, real-life social networks, we additionally study the attacks in the context of two benchmark social graphs. The first one, which is commonly referred to as the Panzarasa graph, after one of its creators (Panzarasa et al. 2009), was collected from an online community of students at the University of California, Irvine. In the Panzarasa graph, a directed edge (A, B) represents that student A sent at least one message to student B. In our experiments, we use a processed version of this graph, where edge orientation, loops and isolated vertices were removed. This graph has 1893 vertices and 20,296 edges. The second real-life social graph that we use was constructed from a collection of e-mail messages exchanged between students, professors and staff at Universitat Rovira i Virgili (URV), Spain (Guimera et al. 2003). For the construction of the graph, the data collectors added an edge between every pair of users that messaged each other. In doing so, they ignored group messages with more than 50 recipients. Moreover, they removed isolated vertices and connected components of order 2. The URV graph has 1133 vertices and 5451 edges.

6.2 Graph perturbation

In our experiments we considered both existing anonymisation methods designed to counter active attacks and random perturbation. Although the latter does not provide formal privacy guarantees, it is a useful benchmark for evaluating resilience against noisy releases. The graph perturbation methods used in our experiments are the following:

(a) An algorithm enforcing \((k,\ell )\)-anonymity for some \(k>1\) or some \(\ell >1\) (Mauw et al. 2016).

(b) An algorithm enforcing \((2,\varGamma _{G,1})\)-anonymity (Mauw et al. 2018b).

(c) An algorithm enforcing \((k,\varGamma _{G,1})\)-adjacency anonymity for a given value of k (Mauw et al. 2018b). Here, we run the method with \(k=|S|\) (i.e. the number of sybil nodes), since it has been empirically shown that the original walk-based attack is very likely to be thwarted in this case (Mauw et al. 2018b).

(d) Randomly flipping \(1\%\) of the vertex pairs of \(G^{+}\), i.e. \(1\%\) of the \(\frac{n(n-1)}{2}\) possible edges of a graph of order n. Each flip consists of randomly selecting a pair of distinct vertices \(u,v\in V_{G^{+}}\), removing the edge (u, v) if it belongs to \(E_{G^{+}}\), and adding it otherwise. For synthetic graphs, since every instance of \(G^{+}\) has order \(n=208\), this perturbation performs \(\left\lfloor 0.01\cdot \frac{n(n-1)}{2}\right\rfloor =215\) flips. For the Panzarasa graph, the order of \(G^{+}\) is 1904, so this perturbation performs 18,116 flips. Finally, for the URV graph, the order of \(G^{+}\) is 1144, so this perturbation performs 6537 flips.

(e) Randomly flipping \(5\%\) of the vertex pairs of \(G^{+}\) (that is, 1076 flips on synthetic graphs, 90,582 on the Panzarasa graph and 32,689 on the URV graph), analogously to (d).

(f) Randomly flipping \(10\%\) of the vertex pairs of \(G^{+}\) (that is, 2153 flips on synthetic graphs, 181,165 on the Panzarasa graph and 65,379 on the URV graph), analogously to (d).
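The random perturbations in (d) and (e) can be sketched as follows. This is an illustrative sketch, not the authors' code: `num_flips` and `random_flips` are hypothetical names, graphs are assumed to be stored as adjacency sets, and each flip is assumed to draw a pair of distinct vertices uniformly at random, independently of earlier flips (so the same pair may be toggled more than once). Integer arithmetic is used for the floor so that the counts do not depend on floating-point rounding; the printed values reproduce the \(1\%\) and \(5\%\) counts listed above.

```python
import random

def num_flips(n, percent):
    """floor(percent / 100 * n(n-1)/2), computed with exact integer arithmetic."""
    return percent * n * (n - 1) // 200

def random_flips(adj, flips, rng):
    """Return a perturbed copy of `adj` after `flips` random pair flips.

    Each flip picks two distinct vertices uniformly at random and toggles the
    edge between them: removed if present, added otherwise."""
    out = {u: set(nbrs) for u, nbrs in adj.items()}
    vertices = list(out)
    for _ in range(flips):
        u, v = rng.sample(vertices, 2)
        if v in out[u]:
            out[u].remove(v)
            out[v].remove(u)
        else:
            out[u].add(v)
            out[v].add(u)
    return out

# Orders of G+ after inserting the sybil nodes (8 for synthetic graphs, 11 for real ones).
for name, n in [("synthetic", 208), ("Panzarasa", 1904), ("URV", 1144)]:
    print(name, num_flips(n, 1), num_flips(n, 5))
```

Copying the adjacency sets keeps the unperturbed \(G^{+}\) available, which is convenient when the same graph is perturbed in several ways, as in the experiments.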

6.3 Attack variants

We compare the behaviour of the original walk-based attack with that of four instantiations of the robust attack described in Sect. 5. All four instantiations use noise-tolerant sybil subgraph retrieval and fingerprint matching, since noise tolerance is the basis of the notion of robustness of the new attacks. The instantiations differ in two design choices: (1) high versus low noise-tolerance thresholds, and (2) maximally separated versus randomly generated fingerprints. For both choices, the alternative is between higher robustness at the cost of additional computation (higher noise tolerance, maximally separated fingerprints) and higher efficiency at the cost of some robustness (lower noise tolerance, randomly generated fingerprints). Table 1 summarises the attack variants to be compared. Note that the attacks labelled Robust-High-Max and Robust-Low-Max in Table 1 are both instances of the robust active attack featuring all its components, and differ only in the tolerance levels used in sybil subgraph retrieval and fingerprint matching. Also note that the attack variants using maximally separated fingerprints involve randomness as well: a set of maximally separated fingerprints may be larger than the number of victims, in which case the fingerprints used in every run of the attack are randomly selected from this pool.

As discussed by Backstrom et al. (2007), for a graph of order n, it suffices to insert \(\log _2 n\) sybil nodes to be able to compromise any possible victim, whereas even the so-called near-optimal sybil defences (Yu et al. 2006, 2008) do not aim to remove every sybil node, but to limit their number to around \(\log _2 n\). In light of these two considerations, when evaluating every attack variant on the collections of synthetic graphs, we use 8 sybil nodes, as \(\left\lceil \log _2 200\right\rceil =8\). For the same reason, we use 11 sybil nodes on the Panzarasa and URV graphs, as \(\left\lceil \log _2 1893\right\rceil =\left\lceil \log _2 1133\right\rceil =11\). In all cases, we use the same number of victims as sybil nodes, to make the results comparable to those reported for the walk-based attack on anonymised graphs (Mauw et al. 2018b).
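The sybil counts above follow from \(\left\lceil \log _2 n\right\rceil \) applied to the order of the original graph. A small sketch (the function name `sybil_count` is hypothetical) computes this value exactly with integer operations, since for \(n\ge 1\) the bit length of \(n-1\) is the smallest b with \(2^b\ge n\):

```python
def sybil_count(n: int) -> int:
    """Return ceil(log2(n)) for n >= 1 without floating-point arithmetic.

    (n - 1).bit_length() is the smallest b such that 2**b >= n."""
    return (n - 1).bit_length()

for label, n in [("synthetic", 200), ("Panzarasa", 1893), ("URV", 1133)]:
    print(label, sybil_count(n))
```

Avoiding `math.log2` sidesteps rounding issues at exact powers of two, where a floating-point result slightly above the true value would make the ceiling off by one.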

Finally, we set the tolerance thresholds to be \(\vartheta =\beta =8\) for the attacks Robust-High-Rand and Robust-High-Max, and \(\vartheta =\beta =4\) for Robust-Low-Rand and Robust-Low-Max, when executed on synthetic graphs. In the case of the Panzarasa and URV graphs, due to their considerably larger size, and in order to keep the execution time and memory consumption of Algorithms 2 and 3 within reasonable limits, we set the tolerance thresholds to be \(\vartheta =\beta =4\) for the attacks Robust-High-Rand and Robust-High-Max, and \(\vartheta =\beta =2\) for Robust-Low-Rand and Robust-Low-Max.
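The four robust variants and their threshold settings can be summarised as a small configuration table. This is an illustrative sketch only: the `Variant` class and the `thresholds` function are hypothetical names rather than part of the authors' implementation, and the original walk-based attack is not listed because it does not use these tolerance thresholds.

```python
from dataclasses import dataclass
from typing import Tuple

@dataclass(frozen=True)
class Variant:
    name: str
    high_tolerance: bool  # high (True) vs low (False) noise-tolerance thresholds
    max_separated: bool   # maximally separated (True) vs randomly generated (False) fingerprints

# The four robust instantiations form a 2x2 grid over the two design choices.
VARIANTS = [
    Variant(f"Robust-{'High' if high else 'Low'}-{'Max' if sep else 'Rand'}", high, sep)
    for high in (True, False)
    for sep in (True, False)
]

def thresholds(variant: Variant, real_network: bool) -> Tuple[int, int]:
    """Return (theta, beta): 8 / 4 on synthetic graphs, 4 / 2 on the Panzarasa and URV graphs."""
    high, low = (4, 2) if real_network else (8, 4)
    level = high if variant.high_tolerance else low
    return level, level  # the experiments set theta equal to beta

for v in VARIANTS:
    print(v.name, thresholds(v, real_network=False), thresholds(v, real_network=True))
```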

6.4 Probability of success of the attacks

Following the attacker–defender game in Sect. 3, for every graph and every attack variant in Table 1, we first run the attacker subgraph creation stage. Then, for every resulting graph, we obtain six perturbed versions, which differ from each other in the perturbation method applied [items (a)–(f) listed in Sect. 6.2]. Finally, for each perturbed graph, we simulate the execution of the re-identification stage and compute its probability of success as follows:

\[ P=\frac{1}{|{\mathcal {X}}|}\sum _{X\in {\mathcal {X}}} p_X, \]

where \({\mathcal {X}}\) is the set of equally-likely possible sybil subgraphs retrieved in \(t(\varphi G^{+})\) by the third phase of the attack, and

\[ p_X=\begin{cases} \frac{1}{|{\mathcal {Y}}_X|} & \text{if the correct matching of fingerprints to victims belongs to } {\mathcal {Y}}_X,\\ 0 & \text{otherwise,} \end{cases} \]

with \({\mathcal {Y}}_{X}\) containing all equally-likely fingerprint matchings according to X. Note that, for the original walk-based attack, \({\mathcal {X}}\) is the set of subgraphs of \(t(\varphi G^{+})\) isomorphic to \(\langle S \rangle ^{^{\!w}}_{G^{+}}\), whereas for the robust attack we have \({\mathcal {X}}=\left\{ \langle {\mathbf {v}} \rangle ^{^{\!w}}_{t(\varphi G^{+})}\;|\; {\mathbf {v}}\in {\tilde{\mathcal {C}}}^*\right\} \), where \({\tilde{\mathcal {C}}}^*\) is the output of Algorithm 2. Moreover, for the original walk-based attack, \({\mathcal {Y}}_X=\{\{y_1,y_2,\ldots ,y_m\}\subseteq V_{t(\varphi G^{+})}\;|\; \forall {i\in \{1,\ldots ,m\}} {:}\,F_{y_i,X}=F_i\}\), whereas for the robust attack \({\mathcal {Y}}_X\) is the output of Algorithm 3.
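The success probability is an average over equally-likely sybil subgraphs of an average over equally-likely fingerprint matchings. The sketch below computes it with exact fractions under stated assumptions: `success_probability` is a hypothetical name, each matching is represented as a tuple \((y_1,\ldots ,y_m)\) whose i-th entry is the vertex matched to fingerprint \(F_i\) (the text writes matchings as sets indexed by fingerprint), the entries of each \({\mathcal {Y}}_X\) are assumed distinct, and an attack that retrieves no sybil subgraph is assumed to score 0.

```python
from fractions import Fraction

def success_probability(matchings_per_subgraph, correct):
    """Probability of success of the re-identification stage.

    matchings_per_subgraph: one entry per equally-likely retrieved sybil subgraph X,
        holding the list Y_X of equally-likely fingerprint matchings for X.
        A matching is a tuple (y_1, ..., y_m): y_i is the vertex matched to F_i.
    correct: the tuple of pseudonymised victim vertices in fingerprint order.
    """
    if not matchings_per_subgraph:  # assumption: no retrieved subgraph means failure
        return Fraction(0)
    total = Fraction(0)
    for y_x in matchings_per_subgraph:
        if correct in y_x:  # p_X = 1/|Y_X| when the correct matching is in Y_X
            total += Fraction(1, len(y_x))
    return total / len(matchings_per_subgraph)  # average over the candidates X

# Two candidate subgraphs: the first admits two matchings (one correct), the second none.
p = success_probability([[("a", "b"), ("b", "a")], [("c", "d")]], ("a", "b"))
print(p)  # 1/4
```

Averaging these per-graph values over a collection of graphs or runs then gives the scores used for comparison.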

In the case of synthetic graphs, in order to obtain the scores used for comparing the different approaches, we computed, for every combination of an attack variant and a perturbation strategy, the average of the success probabilities over every group of 10,000 graphs sharing the same set of parameter choices (as described in Sect. 6.1). In the case of the two real networks, we executed, for every combination of an attack variant and a perturbation strategy, 10 runs on the Panzarasa graph and 10 runs on the URV graph. In each of these runs, a different set of victims was randomly chosen. The final scores used for comparisons were the averaged success probabilities over every group of runs.

6.5 Analysis of results

Figure 5 shows the averaged success probabilities of the five attack variants on the set of Erdős–Rényi random graphs, after applying the perturbation strategies (a)–(f). Every chart represents the behaviour of the attacks on graphs perturbed with a specific method. The x axis displays density values and the y axis displays success probabilities. Analogous results are shown in Figs. 6, 7 and 8 for Watts–Strogatz random graphs with \(\varrho =0.25\), \(\varrho =0.5\) and \(\varrho =0.75\), respectively. In this case, in every chart the x axis displays the values of K. Likewise, Fig. 9 shows analogous results for Barabási–Albert random graphs. Here, in every chart the x axis displays the values of m. Finally, Table 2 shows the averaged success probabilities of the five attack variants on the two real-life networks. In the table, every row represents the combination of a network and a perturbation method, and every column represents an attack variant. Success probabilities are rounded to four significant figures.

From the analysis of all results, an important and consistently occurring first observation is that the robust attack, in all its variants, achieves a higher success probability than the original walk-based attack. For many parameter settings in ER and WS synthetic graphs, the difference is extreme: the best-performing robust attack (Robust-High-Max) displays an average success probability close to 1, whereas the original attack displays an average success probability close to 0. Another important observation that holds in all collections of synthetic graphs [see Figs. 5, 6, 7, 8, 9, items (d) and (e)] is that even \(1\%\) of random noise completely thwarts the original attack, whereas different variants of the robust attack still achieve average success probabilities of around 0.4–0.6. In fact, the attack variants Robust-High-Max and Robust-High-Rand, that is, the ones featuring high tolerance thresholds, still perform acceptably well on all synthetic graphs with \(5\%\) random perturbation. An extreme case of resilience in the presence of noise is observed on the real-life networks. Because of the large sizes of these graphs, the percentages of noise injected in these experiments translate into an enormous number of modifications, and even in this case the robust attack variants manage to succeed in a small, but non-zero, fraction of cases on the URV graph, whereas the original attack is again completely thwarted. Also note that, in the case of the anonymisation methods (a)–(c), which also perform a considerably large number of perturbations on the real-life graphs, all variants of the robust attack display success probabilities ranging from medium to high, and continue to largely outperform the original walk-based attack.

Summing up the findings discussed in the previous paragraph, on the one hand we corroborate the previously reported fact that the original walk-based attack shows low resilience against the addition of even small amounts of random noise. On the other hand, and more importantly, the results obtained here support our claim that low resilience is not an inherent property of the active attack strategy itself, and that it is possible to design active attacks which are considerably more robust and thus represent a more serious threat in the context of noise addition approaches to privacy-preserving graph publication.

We now focus on the suitability of robust active attacks, in comparison with the original attack, as a benchmark for evaluating anonymisation methods based on formal privacy guarantees. By analysing the results in items (a), (b) and (c) of Figs. 5, 6, 7 and 8 and items (b) and (c) of Fig. 9, we can see that the anonymisation methods were almost fully ineffective against the best-performing robust attack (Robust-High-Max in most cases and Robust-High-Rand in the remaining cases). These results are consistent with the formal privacy guarantees provided by the anonymisation algorithms, since in all cases they only guarantee full protection from an attacker leveraging one sybil node, whereas all attacks displayed in these figures were conducted with 8 sybil nodes. On the other hand, the original walk-based attack is easily thwarted by most instances of all anonymisation algorithms. This behaviour of the original attack had already been reported (Mauw et al. 2016, 2018b), and its causes discussed. As the authors of these studies explain, this better-than-expected performance of the anonymisation methods was a side effect of the disruptions they caused in the graph, rather than a consequence of the formal privacy guarantees themselves. In other words, the low resilience of the attack played a more important role in the apparent effectiveness of the anonymisation methods than their formal privacy guarantees. Another undesirable effect of this problem is that it may lead to misleading conclusions when comparing formally equivalent algorithms. For example, Mauw et al.’s algorithms for obtaining a \((k,\ell )\)-anonymous graph with \(k>1\) or \(\ell >1\) (Mauw et al. 2016) and a \((2,\varGamma _{G,1})\)-anonymous graph (Mauw et al. 2018b) provide equivalent formal privacy guarantees. However, as seen in items (a) and (b) of Fig. 7 and, to a lesser extent, in items (a) and (b) of Fig. 5, there are some graph families where one of the two algorithms performs better than the other in terms of resistance to the original walk-based attack. These differences are not a consequence of the privacy guarantees provided by the algorithms; instead, they are a consequence of the number of modifications that each method performs. As can be observed in the figures, the performance of both algorithms in terms of resistance to the robust attack Robust-High-Max is the same, which is consistent with the fact that both methods provide equivalent formal privacy guarantees. In scenarios like the one described here, researchers studying both methods would benefit from using the attack Robust-High-Max as a comparison benchmark, since doing so would largely attenuate potentially misleading side effects.

Next, we discuss the effectiveness of the robust active attack variants on different types of graphs. From the analysis of Figs. 5, 6, 7 and 8, items (a), (b) and (c), we can see that, on anonymised Erdős–Rényi and Watts–Strogatz graphs, the tolerance threshold plays only a modest role in differentiating the attacks leveraging maximally separated fingerprints. In contrast, a high tolerance threshold does improve the effectiveness of the attack leveraging randomly generated fingerprints. Furthermore, in the case of randomly perturbed synthetic graphs of all types [see Figs. 5, 6, 7, 8, 9, items (d) and (e)], the choice of a high tolerance threshold leads to higher success probabilities in all cases. These observations highlight the central role of noise tolerance as a resilience-improving factor in robust attacks, especially when dealing with perturbation techniques based on noise addition.