Abstract

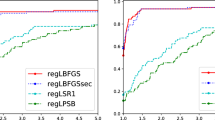

The limited memory BFGS (L-BFGS) method is one of the popular methods for solving large-scale unconstrained optimization. Since the standard L-BFGS method uses a line search to guarantee its global convergence, it sometimes requires a large number of function evaluations. To overcome the difficulty, we propose a new L-BFGS with a certain regularization technique. We show its global convergence under the usual assumptions. In order to make the method more robust and efficient, we also extend it with several techniques such as the nonmonotone technique and simultaneous use of the Wolfe line search. Finally, we present some numerical results for test problems in CUTEst, which show that the proposed method is robust in terms of solving more problems.

Similar content being viewed by others

Data Availability Statement

All 297 test problems analyzed in this study are available in the supplementary information. The data of all test problems were obtained from https://github.com/ralna/CUTEst.

Notes

Quite recently, Steck and Kanzow [19] proposed an efficient calculation technique for \((B_k + \mu I)^{-1}\)

References

Barazilai, J., Borwein, J.M.: Two-point step size gradient methods. IMA J. Numer. Anal. 8, 141–148 (1988)

Burdakov, O., Gong, L., Zikrin, S., Yuan, Y.X.: On efficiently combining limited-memory and trust-region techniques. Math. Program. Comput. 9, 101–134 (2017)

Burke, J. V., Wiegmann, A.: Notes on limited memory BFGS updating in a trust-region framework, Technical report, Department of Mathematics, University of Washington, (1996)

Burke, J. V., Wiegmann, A., Xu, L.: Limited memory BFGS updating in a trust-region framework, Technical report, Department of Mathematics, University of Washington, (2008)

Byrd, R.H., Nocedal, J., Schnabel, R.B.: Representations of quasi-Newton matrices and their use in limited memory methods. Math. Program. 63, 129–156 (1994)

Dennis, J.E., Jr., Moré, J.J.: Quasi-Newton methods, motivation and theory. SIAM Rev. 19, 46–89 (1977)

Dolan, E.D., Moré, J.J.: Benchmarking optimization software with performance profiles. Math. Program. 91, 201–213 (2002)

Gould, N.I.M., Orban, D., Toint, P.L.: CUTEr and SifDec, a constrained and unconstrained testing environment, revisited. ACM Trans. Math. Softw. 29, 373–394 (2003)

Grippo, L., Lampariello, F., Lucidi, S.: A nonmonotone line search technique for Newton’s method. SIAM J. Numer. Anal. 23, 707–716 (1986)

Liu, D.C., Nocedal, J.: On the limited memory BFGS method for large scale optimization. Math. Program. 45, 503–528 (1989)

Moré, J.J., Sorenson, D.C.: Computing a trust region step. SIAM J. Sci. Stat. Comput. 4, 553–572 (1983)

Nocedal, J.: Updating quasi-Newton matrices with limited storage. Math. Comput. 35, 773–782 (1980)

Nocedal, J., Wright, S.J.: Numerical Optimization. Springer, New York (1999)

Nocedal, J.: Software for Large-scale Unconstrained Optimization: L-BFGS distribution, Available at: http://users.iems.northwestern.edu/~nocedal/lbfgs.html

Pearson, J.D.: Variable metric methods of minimisation. Comput. J. 12, 171–178 (1969)

Powell, M. J. D.: Some global convergence properties of a variable metric algorithm for minimization without exact line search, in: Cottle, R. W., Lemke, C. E. eds., Nonlinear Programming, SIAM-AMS Proceedings IX, SIAM Publications, (1976)

Raydan, M.: The Barzilai and Borwein gradient method for large scale unconstrained minimization problem. SIAM J. Optim. 7, 26–33 (1997)

Shanno, D.F., Kang-Hoh, P.A.: Matrix conditioning and nonlinear optimization. Math. Program. 14, 149–160 (1978)

Steck, D., Kanzow, C.: Regularization of limited memory quasi-newton methods for large-scale nonconvex minimization. arXiv preprint arXiv:1911.04584 (2019)

Sugimoto, S., Yamashita, N.: A regularized limited-memory BFGS method for unconstrained minimization problems, Technical report, Department of Applied Mathematics and Physics, Graduate School of Informatics, Kyoto University, Japan, (2014). Available at: http://www.optimization-online.org/DB_HTML/2014/08/4479.html

Sun, W.: Nonmonotone trust region method for solving optimization problems. Appl. Math. Comput. 156, 159–174 (2004)

Tarzangh, D.A., Peyghami, M.R.: A new regularized limited memory BFGS-type method based on modified secant conditions for unconstrained optimization problems. J. Global Optim. 63, 709–728 (2015)

Ueda, K., Yamashita, N.: Convergence properties of the regularized newton method for the unconstrained nonconvex optimization. Appl. Math. Optim. 62, 27–46 (2010)

Ueda, K., Yamashita, N.: A regularized Newton method without line search for unconstrained optimization, Technical Report, Department of Applied Mathematics and Physics, Kyoto University, (2009)

Ueda, K.: Studies on Regularized Newton-type methods for unconstrained minimization problems and their global complexity bounds, Doctoral thesis, Department of Applied Mathematics and Physics, Kyoto University, (2012)

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

About this article

Cite this article

Tankaria, H., Sugimoto, S. & Yamashita, N. A regularized limited memory BFGS method for large-scale unconstrained optimization and its efficient implementations. Comput Optim Appl 82, 61–88 (2022). https://doi.org/10.1007/s10589-022-00351-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10589-022-00351-5