Abstract

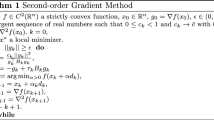

This paper develops an accelerated version of the steepest descent method based on a two-step iteration. The new algorithm uses delayed information to define its iterates. Specifically, the first step computes a prediction of the new test point by applying the gradient method with the exact minimal gradient steplength; the second step computes a correction as a weighted sum of the prediction and the predecessor of the current iterate. A convergence result is provided. Numerical experiments compare the efficiency and effectiveness of the proposal with similar methods from the literature. The numerical comparison with the classical conjugate gradient method shows that the new algorithm is a good alternative for solving large-scale problems.
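The two-step iteration described above can be illustrated with a minimal sketch for the quadratic problem of minimizing 0.5 x^T A x - b^T x with A symmetric positive definite. Several parts are assumptions rather than statements from the abstract: the function name `delayed_weighted_gradient`, the helper names, the stopping rule, and in particular the choice of the weight `beta`, which is taken here to minimize the gradient norm along the line through the predecessor iterate and the prediction. The abstract does not state the weight formula, so this should be read as one plausible instance of the scheme, not as the paper's exact algorithm.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(A: Matrix, x: Vector) -> Vector:
    """Dense matrix-vector product."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


def dot(u: Vector, v: Vector) -> float:
    """Euclidean inner product."""
    return sum(a * b for a, b in zip(u, v))


def delayed_weighted_gradient(A: Matrix, b: Vector, x0: Vector,
                              tol: float = 1e-10,
                              max_iter: int = 1000) -> Vector:
    """Sketch of a delayed two-step gradient iteration (hypothetical name).

    Minimizes 0.5 * x^T A x - b^T x, assuming A is symmetric positive definite.
    """
    x_prev = list(x0)  # predecessor iterate (equals x0 at the first step)
    x = list(x0)
    g_prev = [ax - bi for ax, bi in zip(matvec(A, x), b)]  # gradient A x - b
    g = list(g_prev)
    for _ in range(max_iter):
        if dot(g, g) ** 0.5 <= tol:
            break
        w = matvec(A, g)
        # Prediction: gradient step with the exact minimal gradient steplength,
        # i.e. the alpha that minimizes the norm of the gradient at y.
        alpha = dot(g, w) / dot(w, w)
        y = [xi - alpha * gi for xi, gi in zip(x, g)]
        r = [gi - alpha * wi for gi, wi in zip(g, w)]  # gradient at y
        # Correction: weighted sum of the prediction y and the predecessor
        # iterate. ASSUMPTION: beta minimizes the gradient norm of the result.
        d = [gp - ri for gp, ri in zip(g_prev, r)]
        dd = dot(d, d)
        beta = dot(g_prev, d) / dd if dd > 0.0 else 1.0
        x_new = [xp + beta * (yi - xp) for xp, yi in zip(x_prev, y)]
        # The gradient is affine in x, so it obeys the same weighted sum.
        g_new = [gp + beta * (ri - gp) for gp, ri in zip(g_prev, r)]
        x_prev, g_prev = x, g
        x, g = x_new, g_new
    return x
```

As a small check, calling `delayed_weighted_gradient([[4.0, 1.0], [1.0, 3.0]], [1.0, 2.0], [0.0, 0.0])` should return a point close to the exact solution (1/11, 7/11) of the linear system A x = b. Each iteration costs one matrix-vector product, the same as a steepest descent step; the delay only requires storing the previous iterate and its gradient.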

Acknowledgements

The author would like to thank Dr. Marcos Raydan for his helpful comments and suggestions on this work, and for sending pertinent information. The author also thanks Dr. Hugo Lara and two anonymous referees for their useful suggestions and comments.

About this article

Cite this article

Oviedo Leon, H.F. A delayed weighted gradient method for strictly convex quadratic minimization. Comput Optim Appl 74, 729–746 (2019). https://doi.org/10.1007/s10589-019-00125-6

DOI: https://doi.org/10.1007/s10589-019-00125-6